Elasticsearch multi-instance architecture: sensible resource allocation and hot/cold data separation

Author: "the wolf of hair". Reprints and contributions are welcome.

Contents

Purpose

Architecture

▪ 192.168.1.51: deploying two Elasticsearch data instances

▪ 192.168.1.52: deploying two Elasticsearch data instances

▪ 192.168.1.53: deploying two Elasticsearch data instances

Testing

Purpose

Previously:

▷ The first EFK tutorial, Quick Start Guide, covered installing and deploying EFK. Its ES cluster had three nodes, and each node ran the master, ingest, and data roles at the same time.

▷ The second EFK tutorial, ElasticSearch high performance and high availability architecture, explained the purpose of the data, ingest, and master roles. It then deployed each role on its own set of three nodes to maximize performance and ensure high availability.

In the first two articles, each server ran only one ES instance. In this article, we run multiple ES instances on a single server within the same cluster to allocate resources sensibly. For example, a data server may have both SSD and SAS disks. Hot data can then be stored on the SSD and cold data on the SAS disk, which separates hot data from cold data.

Here we create two instances on each data server, one backed by the SSD and one by the SAS disk. The September nginx indices go on the SAS disk, and all other indices go on the SSD.

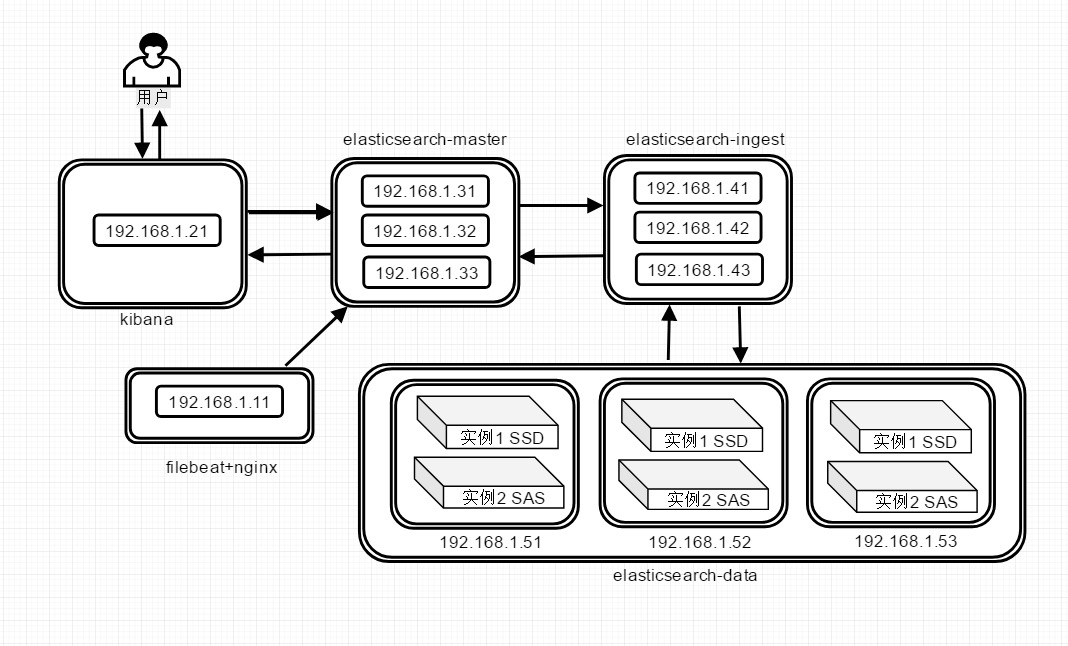

Architecture

Architecture diagram

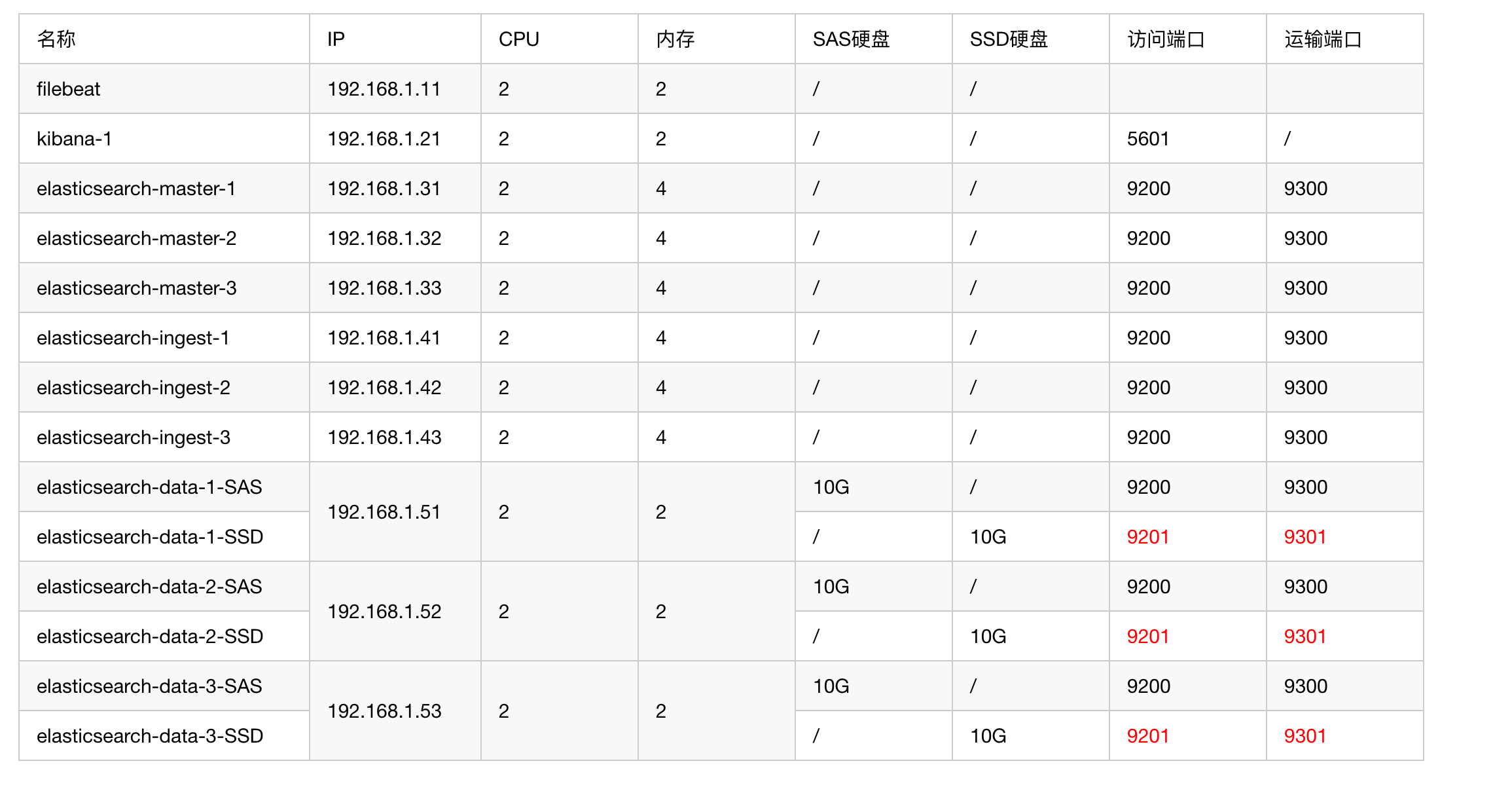

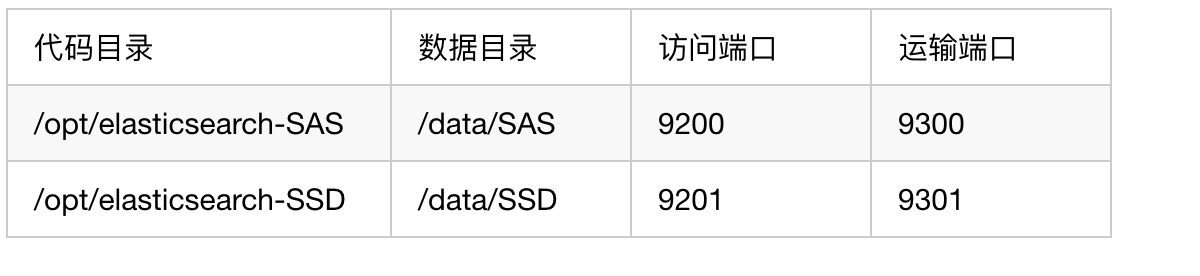

Server configuration

192.168.1.51: deploying two Elasticsearch data instances

Index migration (do not skip this step): move the indices on 192.168.1.51 to the other two data nodes.

curl -X PUT "192.168.1.31:9200/*/_settings?pretty" -H 'Content-Type: application/json' -d' { "index.routing.allocation.include._ip": "192.168.1.52,192.168.1.53" }'

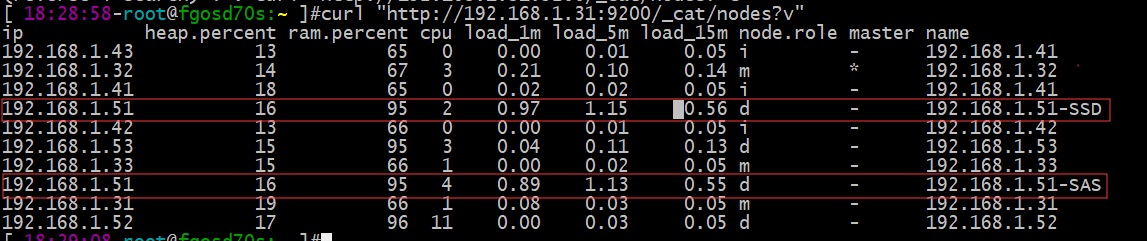
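The same settings call is repeated for each data server, each time with a different IP list. A minimal sketch, assuming the three data servers used in this article, can derive the body by leaving out the server being drained. `include_ip_body` and `DATA_NODES` are hypothetical names. They are not part of Elasticsearch or the original tutorial.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the index.routing.allocation.include._ip body
# that keeps shards on every data node except the one being drained.
# DATA_NODES is an assumption matching the three data servers in this article.
DATA_NODES="192.168.1.51 192.168.1.52 192.168.1.53"

include_ip_body() {
  local drain_ip="$1" keep="" ip
  for ip in $DATA_NODES; do
    # Skip the server that is about to be taken offline.
    [ "$ip" = "$drain_ip" ] && continue
    # Append with a comma separator, except before the first entry.
    keep="${keep:+$keep,}$ip"
  done
  printf '{ "index.routing.allocation.include._ip": "%s" }\n' "$keep"
}
```

For example, `curl -X PUT "192.168.1.31:9200/*/_settings?pretty" -H 'Content-Type: application/json' -d "$(include_ip_body 192.168.1.51)"` sends the same body as the command above.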

Confirm where the shards are now stored: none should remain on the 192.168.1.51 node.

curl "http://192.168.1.31:9200/_cat/shards?h=n"
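Instead of reading the `_cat/shards?h=n` listing by eye, you could count the shards still assigned to the node being drained. The hypothetical `shards_on_node` function below is a sketch. It assumes each output line holds one node name, as requested by `h=n`. Unassigned shards appear as empty lines and are not counted.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count how many shards the given node still holds.
# Reads the output of `_cat/shards?h=n` (one node name per line) on stdin.
shards_on_node() {
  local node="$1"
  # Compare the first field exactly, so cat padding spaces are ignored and
  # "192.168.1.51" does not also match "192.168.1.51-SSD".
  awk -v n="$node" '$1 == n { c++ } END { print c + 0 }'
}
```

`curl -s "http://192.168.1.31:9200/_cat/shards?h=n" | shards_on_node 192.168.1.51` should print 0 before you stop the process. The argument must be the node.name of the existing instance. `192.168.1.51` is only an assumption here.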

Stop the Elasticsearch process on 192.168.1.51, then change the directory structure and configuration. Mount the data disks so that the SSD is at /data/SSD and the SAS disk is at /data/SAS.

    # For downloading and deploying the installation package, see the first EFK tutorial, Quick Start Guide
    cd /opt/software/
    tar -zxvf elasticsearch-7.3.2-linux-x86_64.tar.gz
    # The existing installation becomes the SAS instance; the fresh copy becomes the SSD instance
    mv /opt/elasticsearch /opt/elasticsearch-SAS
    mv elasticsearch-7.3.2 /opt/
    mv /opt/elasticsearch-7.3.2 /opt/elasticsearch-SSD
    chown elasticsearch:elasticsearch /opt/elasticsearch-* -R
    # Empty the SAS data directory; the indices were already moved off this server
    rm -rf /data/SAS/*
    chown elasticsearch:elasticsearch /data/* -R
    mkdir -p /opt/logs/elasticsearch-SAS
    mkdir -p /opt/logs/elasticsearch-SSD
    chown elasticsearch:elasticsearch /opt/logs/* -R
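Before writing the configuration files, you may want to confirm that the layout created above is complete. The hypothetical `check_layout` function below checks only two things: that the two start scripts are executable, and that the data and log directories exist. It does not verify that /data/SSD and /data/SAS are mounted on the intended disks, or who owns them. The optional argument is a path prefix, so you can try the function against a scratch directory first.

```shell
#!/usr/bin/env bash
# Hypothetical sanity check for the directory layout of the two instances.
# With no argument the real paths are checked; pass a prefix for a dry run.
check_layout() {
  local root="${1:-}" missing=0 p
  for p in /opt/elasticsearch-SAS/bin/elasticsearch \
           /opt/elasticsearch-SSD/bin/elasticsearch; do
    # Each instance needs its own executable start script.
    [ -x "$root$p" ] || { echo "missing executable: $root$p"; missing=1; }
  done
  for p in /data/SAS /data/SSD \
           /opt/logs/elasticsearch-SAS /opt/logs/elasticsearch-SSD; do
    [ -d "$root$p" ] || { echo "missing directory: $root$p"; missing=1; }
  done
  return "$missing"
}
```

Run `check_layout` with no argument on the server. It prints each missing path and returns a non-zero status if anything is absent.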

SAS instance configuration: /opt/elasticsearch-SAS/config/elasticsearch.yml

    cluster.name: my-application
    node.name: 192.168.1.51-SAS
    path.data: /data/SAS
    path.logs: /opt/logs/elasticsearch-SAS
    network.host: 192.168.1.51
    http.port: 9200
    transport.port: 9300
    # discovery.seed_hosts and cluster.initial_master_nodes must include port numbers; otherwise this instance's own transport.port would be assumed
    discovery.seed_hosts: ["192.168.1.31:9300","192.168.1.32:9300","192.168.1.33:9300"]
    cluster.initial_master_nodes: ["192.168.1.31:9300","192.168.1.32:9300","192.168.1.33:9300"]
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    node.master: false
    node.ingest: false
    node.data: true
    # Allow at most 2 instances on this machine
    node.max_local_storage_nodes: 2

SSD instance configuration: /opt/elasticsearch-SSD/config/elasticsearch.yml

    cluster.name: my-application
    node.name: 192.168.1.51-SSD
    path.data: /data/SSD
    path.logs: /opt/logs/elasticsearch-SSD
    network.host: 192.168.1.51
    http.port: 9201
    transport.port: 9301
    # discovery.seed_hosts and cluster.initial_master_nodes must include port numbers; otherwise this instance's own transport.port would be assumed
    discovery.seed_hosts: ["192.168.1.31:9300","192.168.1.32:9300","192.168.1.33:9300"]
    cluster.initial_master_nodes: ["192.168.1.31:9300","192.168.1.32:9300","192.168.1.33:9300"]
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    node.master: false
    node.ingest: false
    node.data: true
    # Allow at most 2 instances on this machine
    node.max_local_storage_nodes: 2
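The six configuration files in this article differ only in the host IP and the tier. A small hypothetical generator can render them all from one template, so the three servers cannot drift apart. This sketch assumes the port convention used here: SAS on 9200/9300, SSD on 9201/9301. `render_es_config` is an illustrative name, not an Elasticsearch tool.

```shell
#!/usr/bin/env bash
# Hypothetical generator for the per-instance elasticsearch.yml in this article.
# Usage: render_es_config <host-ip> <SAS|SSD>
# Assumes SAS uses ports 9200/9300 and SSD uses 9201/9301.
render_es_config() {
  local host="$1" tier="$2" http transport
  case "$tier" in
    SAS) http=9200; transport=9300 ;;
    SSD) http=9201; transport=9301 ;;
    *) echo "unknown tier: $tier" >&2; return 1 ;;
  esac
  # Unquoted heredoc so ${host}, ${tier} and the ports are expanded.
  cat <<EOF
cluster.name: my-application
node.name: ${host}-${tier}
path.data: /data/${tier}
path.logs: /opt/logs/elasticsearch-${tier}
network.host: ${host}
http.port: ${http}
transport.port: ${transport}
discovery.seed_hosts: ["192.168.1.31:9300","192.168.1.32:9300","192.168.1.33:9300"]
cluster.initial_master_nodes: ["192.168.1.31:9300","192.168.1.32:9300","192.168.1.33:9300"]
http.cors.enabled: true
http.cors.allow-origin: "*"
node.master: false
node.ingest: false
node.data: true
node.max_local_storage_nodes: 2
EOF
}
```

For example, run `render_es_config 192.168.1.52 SAS > /opt/elasticsearch-SAS/config/elasticsearch.yml` on 192.168.1.52. The generated files leave out the explanatory comments of the hand-written versions.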

Start the SAS and SSD instances

    # Run each command in its own terminal, or add -d to start the instance in the background
    sudo -u elasticsearch /opt/elasticsearch-SAS/bin/elasticsearch
    sudo -u elasticsearch /opt/elasticsearch-SSD/bin/elasticsearch

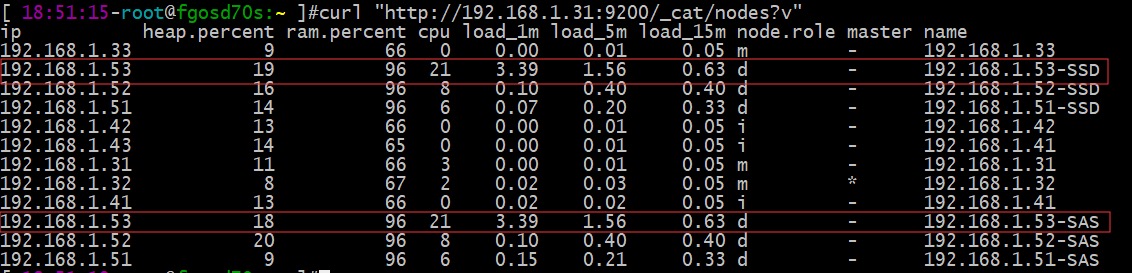

Confirm that both the SAS and SSD instances are running and have joined the cluster

curl "http://192.168.1.31:9200/_cat/nodes?v"
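To check the `_cat/nodes?v` output for one server without scanning the table, you can use the hypothetical function below. It reads that output and succeeds only when both `<ip>-SAS` and `<ip>-SSD` appear in the `name` column, which is the last column in the default layout. It assumes the `node.name` convention used in this article.

```shell
#!/usr/bin/env bash
# Hypothetical check: read `_cat/nodes?v` output on stdin and succeed only if
# both <host>-SAS and <host>-SSD appear in the last (name) column.
has_both_instances() {
  local host="$1"
  awk -v sas="${host}-SAS" -v ssd="${host}-SSD" '
    NR > 1 && $NF == sas { a = 1 }   # NR > 1 skips the header row printed by ?v
    NR > 1 && $NF == ssd { b = 1 }
    END { exit !(a && b) }'
}
```

For example: `curl -s "http://192.168.1.31:9200/_cat/nodes?v" | has_both_instances 192.168.1.51 && echo ok`.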

192.168.1.52: deploying two Elasticsearch data instances

Index migration (do not skip this step): move the indices on 192.168.1.52 to the other two data nodes.

curl -X PUT "192.168.1.31:9200/*/_settings?pretty" -H 'Content-Type: application/json' -d' { "index.routing.allocation.include._ip": "192.168.1.51,192.168.1.53" }'

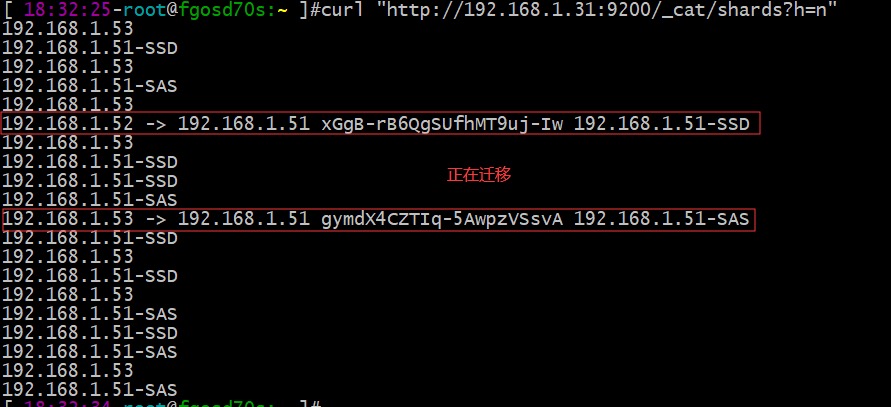

Confirm where the shards are now stored: none should remain on the 192.168.1.52 node.

curl "http://192.168.1.31:9200/_cat/shards?h=n"

Stop the Elasticsearch process on 192.168.1.52, then change the directory structure and configuration. Mount the data disks so that the SSD is at /data/SSD and the SAS disk is at /data/SAS.

    # For downloading and deploying the installation package, see the first EFK tutorial, Quick Start Guide
    cd /opt/software/
    tar -zxvf elasticsearch-7.3.2-linux-x86_64.tar.gz
    # The existing installation becomes the SAS instance; the fresh copy becomes the SSD instance
    mv /opt/elasticsearch /opt/elasticsearch-SAS
    mv elasticsearch-7.3.2 /opt/
    mv /opt/elasticsearch-7.3.2 /opt/elasticsearch-SSD
    chown elasticsearch:elasticsearch /opt/elasticsearch-* -R
    # Empty the SAS data directory; the indices were already moved off this server
    rm -rf /data/SAS/*
    chown elasticsearch:elasticsearch /data/* -R
    mkdir -p /opt/logs/elasticsearch-SAS
    mkdir -p /opt/logs/elasticsearch-SSD
    chown elasticsearch:elasticsearch /opt/logs/* -R

SAS instance configuration: /opt/elasticsearch-SAS/config/elasticsearch.yml

    cluster.name: my-application
    node.name: 192.168.1.52-SAS
    path.data: /data/SAS
    path.logs: /opt/logs/elasticsearch-SAS
    network.host: 192.168.1.52
    http.port: 9200
    transport.port: 9300
    # discovery.seed_hosts and cluster.initial_master_nodes must include port numbers; otherwise this instance's own transport.port would be assumed
    discovery.seed_hosts: ["192.168.1.31:9300","192.168.1.32:9300","192.168.1.33:9300"]
    cluster.initial_master_nodes: ["192.168.1.31:9300","192.168.1.32:9300","192.168.1.33:9300"]
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    node.master: false
    node.ingest: false
    node.data: true
    # Allow at most 2 instances on this machine
    node.max_local_storage_nodes: 2

SSD instance configuration: /opt/elasticsearch-SSD/config/elasticsearch.yml

    cluster.name: my-application
    node.name: 192.168.1.52-SSD
    path.data: /data/SSD
    path.logs: /opt/logs/elasticsearch-SSD
    network.host: 192.168.1.52
    http.port: 9201
    transport.port: 9301
    # discovery.seed_hosts and cluster.initial_master_nodes must include port numbers; otherwise this instance's own transport.port would be assumed
    discovery.seed_hosts: ["192.168.1.31:9300","192.168.1.32:9300","192.168.1.33:9300"]
    cluster.initial_master_nodes: ["192.168.1.31:9300","192.168.1.32:9300","192.168.1.33:9300"]
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    node.master: false
    node.ingest: false
    node.data: true
    # Allow at most 2 instances on this machine
    node.max_local_storage_nodes: 2

Start the SAS and SSD instances

    # Run each command in its own terminal, or add -d to start the instance in the background
    sudo -u elasticsearch /opt/elasticsearch-SAS/bin/elasticsearch
    sudo -u elasticsearch /opt/elasticsearch-SSD/bin/elasticsearch

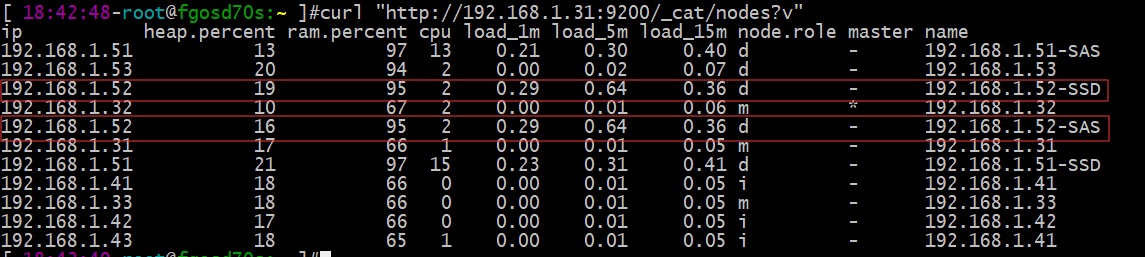

Confirm that both the SAS and SSD instances are running and have joined the cluster

curl "http://192.168.1.31:9200/_cat/nodes?v"

192.168.1.53: deploying two Elasticsearch data instances

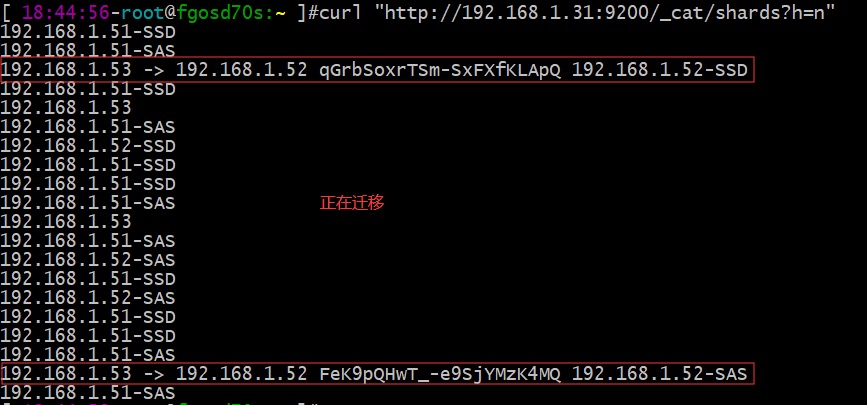

Index migration (do not skip this step): move the indices on 192.168.1.53 to the other two data nodes.

curl -X PUT "192.168.1.31:9200/*/_settings?pretty" -H 'Content-Type: application/json' -d' { "index.routing.allocation.include._ip": "192.168.1.51,192.168.1.52" }'

Confirm where the shards are now stored: none should remain on the 192.168.1.53 node.

curl "http://192.168.1.31:9200/_cat/shards?h=n"

Stop the Elasticsearch process on 192.168.1.53, then change the directory structure and configuration. Mount the data disks so that the SSD is at /data/SSD and the SAS disk is at /data/SAS.

    # For downloading and deploying the installation package, see the first EFK tutorial, Quick Start Guide
    cd /opt/software/
    tar -zxvf elasticsearch-7.3.2-linux-x86_64.tar.gz
    # The existing installation becomes the SAS instance; the fresh copy becomes the SSD instance
    mv /opt/elasticsearch /opt/elasticsearch-SAS
    mv elasticsearch-7.3.2 /opt/
    mv /opt/elasticsearch-7.3.2 /opt/elasticsearch-SSD
    chown elasticsearch:elasticsearch /opt/elasticsearch-* -R
    # Empty the SAS data directory; the indices were already moved off this server
    rm -rf /data/SAS/*
    chown elasticsearch:elasticsearch /data/* -R
    mkdir -p /opt/logs/elasticsearch-SAS
    mkdir -p /opt/logs/elasticsearch-SSD
    chown elasticsearch:elasticsearch /opt/logs/* -R

SAS instance configuration: /opt/elasticsearch-SAS/config/elasticsearch.yml

    cluster.name: my-application
    node.name: 192.168.1.53-SAS
    path.data: /data/SAS
    path.logs: /opt/logs/elasticsearch-SAS
    network.host: 192.168.1.53
    http.port: 9200
    transport.port: 9300
    # discovery.seed_hosts and cluster.initial_master_nodes must include port numbers; otherwise this instance's own transport.port would be assumed
    discovery.seed_hosts: ["192.168.1.31:9300","192.168.1.32:9300","192.168.1.33:9300"]
    cluster.initial_master_nodes: ["192.168.1.31:9300","192.168.1.32:9300","192.168.1.33:9300"]
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    node.master: false
    node.ingest: false
    node.data: true
    # Allow at most 2 instances on this machine
    node.max_local_storage_nodes: 2

SSD instance configuration: /opt/elasticsearch-SSD/config/elasticsearch.yml

    cluster.name: my-application
    node.name: 192.168.1.53-SSD
    path.data: /data/SSD
    path.logs: /opt/logs/elasticsearch-SSD
    network.host: 192.168.1.53
    http.port: 9201
    transport.port: 9301
    # discovery.seed_hosts and cluster.initial_master_nodes must include port numbers; otherwise this instance's own transport.port would be assumed
    discovery.seed_hosts: ["192.168.1.31:9300","192.168.1.32:9300","192.168.1.33:9300"]
    cluster.initial_master_nodes: ["192.168.1.31:9300","192.168.1.32:9300","192.168.1.33:9300"]
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    node.master: false
    node.ingest: false
    node.data: true
    # Allow at most 2 instances on this machine
    node.max_local_storage_nodes: 2

Start the SAS and SSD instances

    # Run each command in its own terminal, or add -d to start the instance in the background
    sudo -u elasticsearch /opt/elasticsearch-SAS/bin/elasticsearch
    sudo -u elasticsearch /opt/elasticsearch-SSD/bin/elasticsearch

Confirm that both the SAS and SSD instances are running and have joined the cluster

curl "http://192.168.1.31:9200/_cat/nodes?v"

Testing

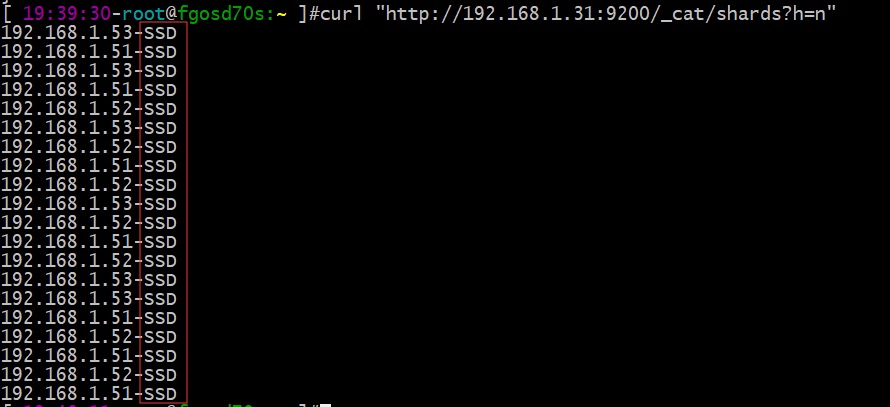

Move all indices to the SSD instances

    # These parameters are explained in a later article; for now, just copy them
    curl -X PUT "192.168.1.31:9200/*/_settings?pretty" -H 'Content-Type: application/json' -d' { "index.routing.allocation.include._host_ip": "", "index.routing.allocation.include._host": "", "index.routing.allocation.include._name": "", "index.routing.allocation.include._ip": "", "index.routing.allocation.require._name": "*-SSD" }'

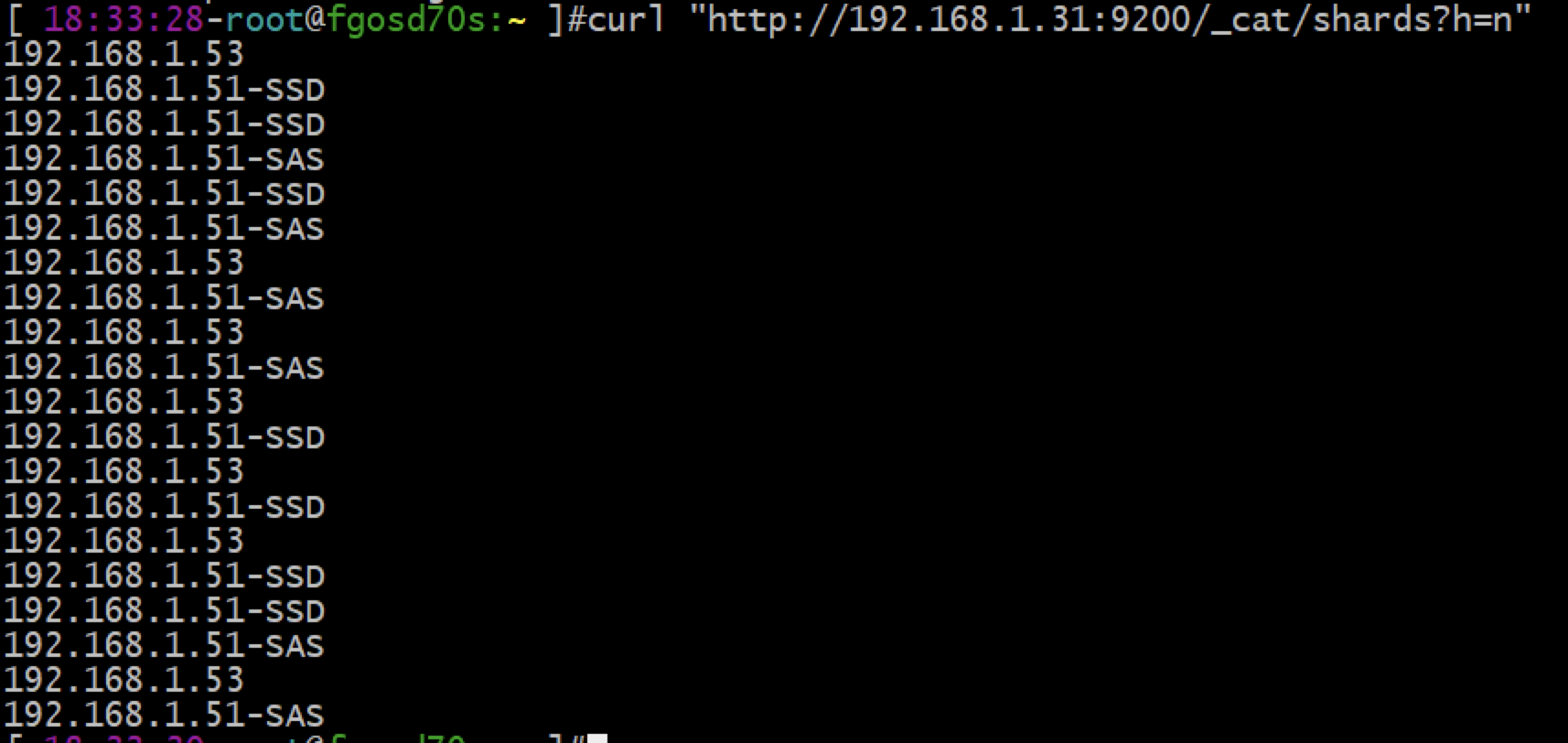

Confirm that all indices are now on the SSD instances

curl "http://192.168.1.31:9200/_cat/shards?h=n"

Migrate the September nginx log indices to the SAS instances

curl -X PUT "192.168.1.31:9200/nginx_*_2019.09/_settings?pretty" -H 'Content-Type: application/json' -d' { "index.routing.allocation.require._name": "*-SAS" }'

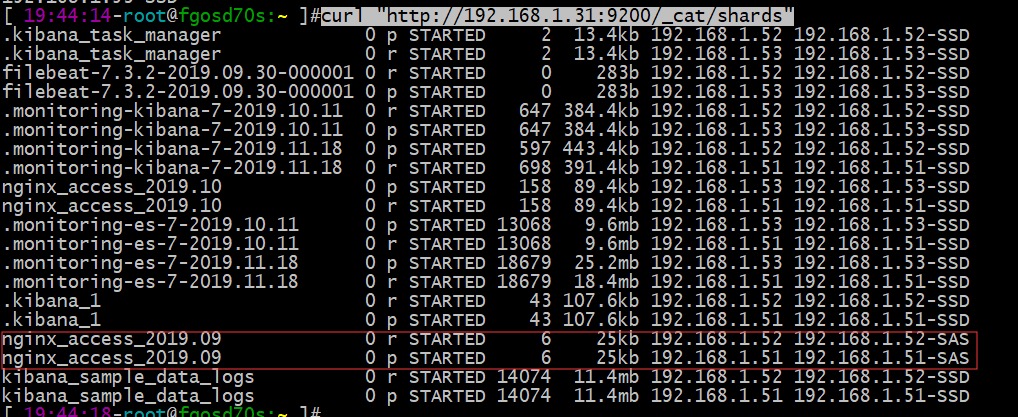

Confirm that the September nginx log indices have moved to the SAS instances

curl "http://192.168.1.31:9200/_cat/shards"
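Once relocation finishes, you can check the final layout in one pass. The hypothetical `check_hot_cold` function below is a sketch that reads the default `_cat/shards` columns (index, shard, prirep, state, docs, store, ip, node). It expects indices matching `nginx_*_2019.09` on a `-SAS` node and every other index on a `-SSD` node. It skips shards that are not STARTED, for example those still relocating, so run it again after relocation completes.

```shell
#!/usr/bin/env bash
# Hypothetical verification of the final hot/cold layout.
# Reads plain `_cat/shards` output (index shard prirep state docs store ip node)
# on stdin. Indices matching nginx_*_2019.09 are expected on a "-SAS" node and
# every other index on a "-SSD" node; only STARTED shards are checked.
check_hot_cold() {
  awk '
    $4 != "STARTED" { next }   # skip UNASSIGNED, RELOCATING and INITIALIZING rows
    {
      want = ($1 ~ /^nginx_.*_2019\.09$/) ? "-SAS" : "-SSD"
      node = $NF
      # The node name must end with the expected tier suffix.
      if (substr(node, length(node) - length(want) + 1) != want) {
        print "misplaced: " $1 " shard " $2 " on " node
        bad = 1
      }
    }
    END { exit (bad ? 1 : 0) }'
}
```

`curl -s "http://192.168.1.31:9200/_cat/shards" | check_hot_cold` prints any misplaced shard and returns a non-zero status if it finds one.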