1. First, set up a Hadoop environment on Windows 10 and make sure Hadoop can run.

2. Extract the Hadoop 2.7.7 installation package and source package. Then create an empty directory and copy into it all the jar files under share/hadoop in the extracted installation package, except those in the kms directory. This comes to about 120 jars.
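The copy in this step can be scripted. The sketch below is a minimal, hypothetical helper (`CollectHadoopJars` is not part of Hadoop). It assumes you pass in the top-level directory of the extracted installation package (for example `hadoop-2.7.7`), and it reads "except the kms directory" as skipping everything under `share/hadoop/kms`. Because the target directory is flat, a jar that appears in several `lib` folders ends up in it only once.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper: copies every *.jar under <hadoopHome>/share/hadoop,
// skipping share/hadoop/kms, into a single flat target directory.
class CollectHadoopJars {

    /** Returns the number of distinct jar file names copied. */
    static int collect(Path hadoopHome, Path targetDir) throws IOException {
        Path shareHadoop = hadoopHome.resolve("share").resolve("hadoop");
        Path kmsDir = shareHadoop.resolve("kms");
        Files.createDirectories(targetDir);

        List<Path> jars;
        try (Stream<Path> walk = Files.walk(shareHadoop)) {
            jars = walk.filter(Files::isRegularFile)
                       .filter(p -> p.getFileName().toString().endsWith(".jar"))
                       .filter(p -> !p.startsWith(kmsDir)) // leave out the kms directory
                       .collect(Collectors.toList());
        }

        Set<Path> names = new HashSet<>();
        for (Path jar : jars) {
            Path name = jar.getFileName();
            // Same file name from another lib folder overwrites the earlier copy.
            Files.copy(jar, targetDir.resolve(name), StandardCopyOption.REPLACE_EXISTING);
            names.add(name);
        }
        return names.size();
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 2) {
            System.out.println("usage: CollectHadoopJars <hadoop-2.7.7 dir> <empty target dir>");
            return;
        }
        int n = collect(Paths.get(args[0]), Paths.get(args[1]));
        System.out.println("Copied " + n + " distinct jar files");
    }
}
```

The printed count can be compared with the rough figure of 120 given above.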

3. Copy hadoop.dll from the bin directory of the Windows Hadoop installation (hadoop/bin) into C:\Windows\System32, then restart the computer.

4. Check that the previously installed local Hadoop has its environment variables configured (for example HADOOP_HOME), and set the HADOOP_USER_NAME environment variable to root.

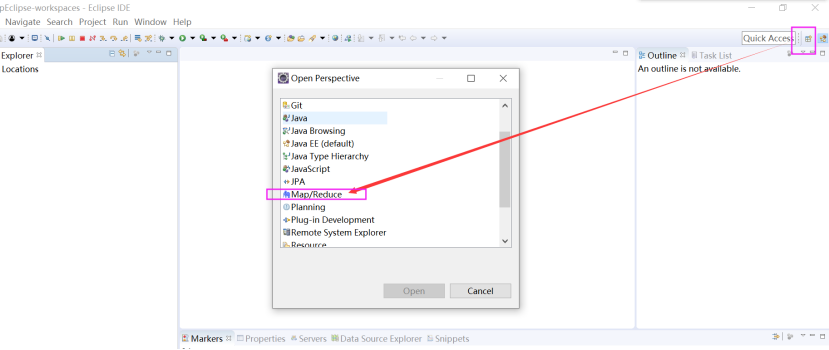
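The hadoop.dll and environment-variable checks above can be run in one go. The sketch below is a hypothetical helper (`WindowsHadoopCheck` is an illustrative name). It assumes hadoop.dll sits in `%HADOOP_HOME%\bin`, where the copy into System32 takes it from, and that the Hadoop user name should be `root` via `HADOOP_USER_NAME`. It only reads the environment and the file system and changes nothing.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical pre-flight check for the Windows side: HADOOP_HOME set,
// hadoop.dll present in %HADOOP_HOME%\bin and in System32, HADOOP_USER_NAME=root.
class WindowsHadoopCheck {

    static List<String> problems(Map<String, String> env, Path system32) {
        List<String> out = new ArrayList<>();
        String home = env.get("HADOOP_HOME");
        if (home == null || home.isEmpty()) {
            out.add("HADOOP_HOME is not set");
        } else if (!Files.isRegularFile(Paths.get(home, "bin", "hadoop.dll"))) {
            out.add("hadoop.dll not found in " + Paths.get(home, "bin"));
        }
        if (!"root".equals(env.get("HADOOP_USER_NAME"))) {
            out.add("HADOOP_USER_NAME is not set to root");
        }
        if (!Files.isRegularFile(system32.resolve("hadoop.dll"))) {
            out.add("hadoop.dll not found in " + system32);
        }
        return out;
    }

    public static void main(String[] args) {
        // New environment variables are only visible to newly started processes.
        List<String> found = problems(System.getenv(), Paths.get("C:\\Windows\\System32"));
        if (found.isEmpty()) {
            System.out.println("Environment looks OK");
        } else {
            for (String p : found) {
                System.out.println("Problem: " + p);
            }
        }
    }
}
```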

5. Copy hadoop-eclipse-plugin-2.6.0.jar (or whichever plug-in version you download yourself) into both the eclipse/plugins and eclipse/dropins directories of your Eclipse installation, then start Eclipse.
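Copying the plug-in into both directories can also be done from code. This is a hypothetical helper (`InstallEclipsePlugin` is an illustrative name); the jar and the Eclipse directory are whatever you pass in, for example hadoop-eclipse-plugin-2.6.0.jar and your Eclipse installation folder.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;

// Hypothetical helper: puts the plug-in jar into both <eclipse>/plugins and
// <eclipse>/dropins. Start (or restart) Eclipse afterwards so it loads the plug-in.
class InstallEclipsePlugin {

    static void install(Path pluginJar, Path eclipseHome) throws IOException {
        for (String dir : new String[] {"plugins", "dropins"}) {
            Path target = eclipseHome.resolve(dir);
            Files.createDirectories(target);
            Files.copy(pluginJar, target.resolve(pluginJar.getFileName()),
                    StandardCopyOption.REPLACE_EXISTING);
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 2) {
            System.out.println("usage: InstallEclipsePlugin <hadoop-eclipse-plugin jar> <eclipse dir>");
            return;
        }
        install(Paths.get(args[0]), Paths.get(args[1]));
        System.out.println("Plug-in copied; start Eclipse now");
    }
}
```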

If the installation succeeded, Eclipse shows the following:

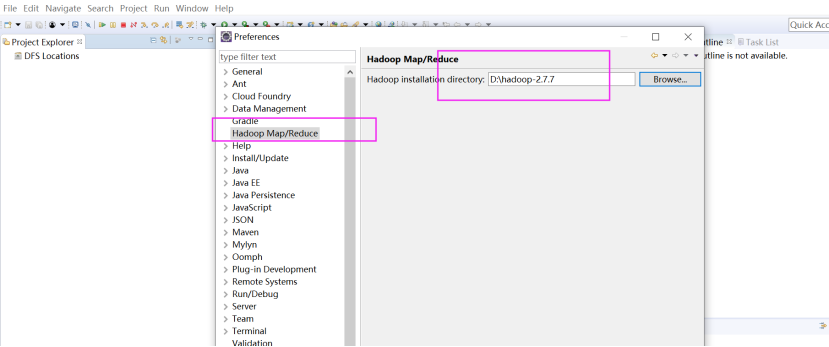

6. In Eclipse, open Window > Preferences, select Hadoop Map/Reduce, and specify the local Hadoop installation path.

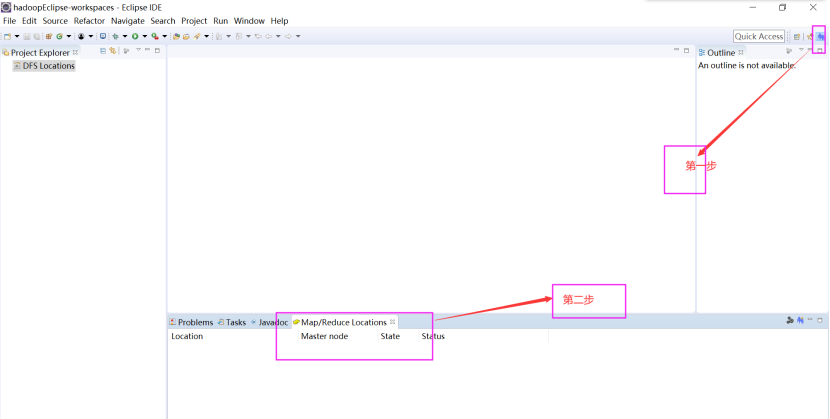

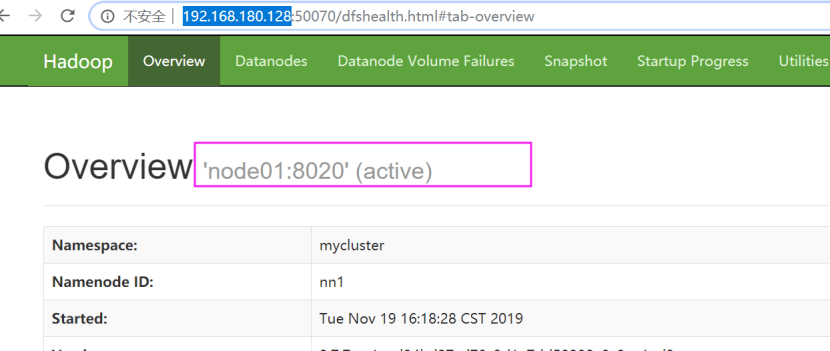

7. First confirm that the Hadoop cluster has been started, and then proceed as follows:

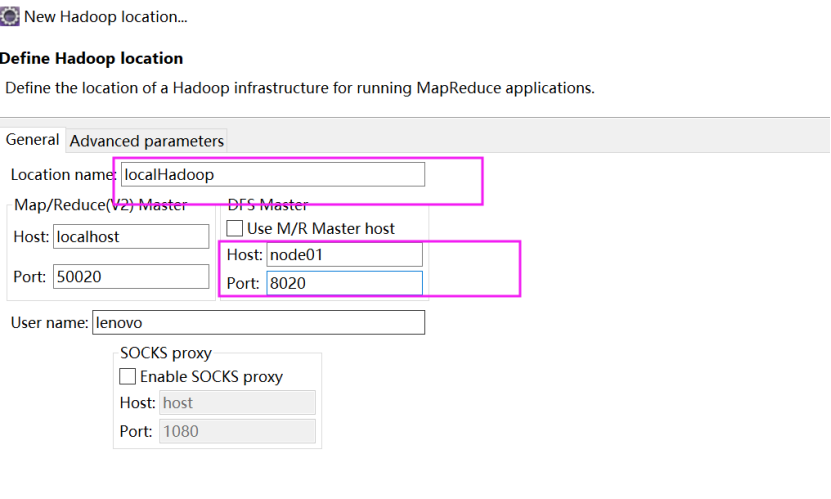
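To check from the Windows machine that the cluster is up and reachable, a plain TCP connection to the NameNode RPC port is a quick test. The sketch below assumes you know the NameNode host and RPC port (often 8020 or 9000, but take the value from your own configuration); `NameNodePortCheck` is a hypothetical name. A successful connection only shows that the port is open, not that HDFS is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical reachability check: tries to open a TCP connection to the
// NameNode RPC address within the given timeout.
class NameNodePortCheck {

    static boolean reachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host name could not be resolved
            return false;
        }
    }

    public static void main(String[] args) {
        if (args.length != 2) {
            System.out.println("usage: NameNodePortCheck <namenode host> <rpc port>");
            return;
        }
        String host = args[0];
        int port = Integer.parseInt(args[1]);
        System.out.println(host + ":" + port
                + (reachable(host, port, 3000) ? " is reachable" : " is NOT reachable"));
    }
}
```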

Then, as the second step, create a new location in the Map/Reduce Locations view:

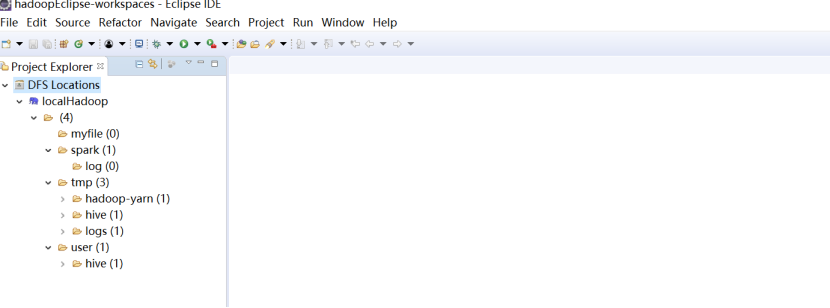

Then click Finish; Eclipse should now be connected to Hadoop.

If the connection does not show up, select local Hadoop, right-click it, and choose Reconnect.

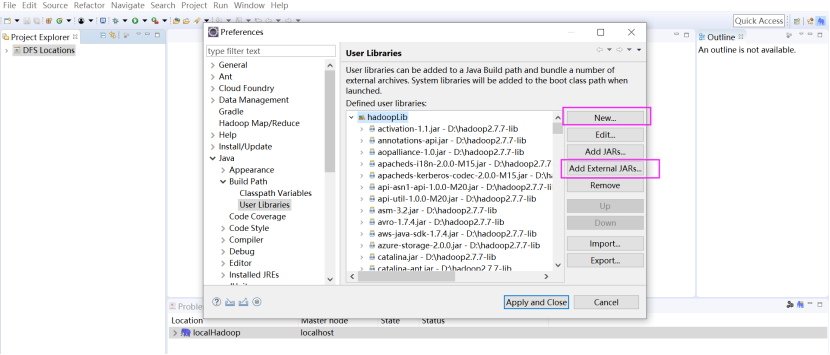

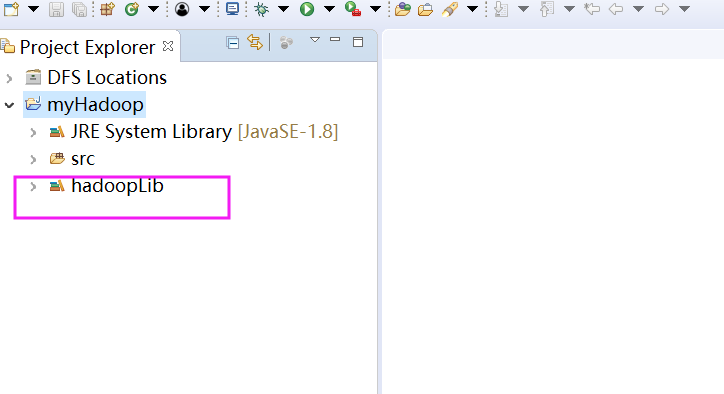
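In an HA setup, `fs.defaultFS` in core-site.xml usually names a logical nameservice rather than a host and port, so it may not be obvious what to enter as the host and port of the new location. Assuming the location should point at a NameNode's RPC address (normally the active NameNode), the sketch below reads hdfs-site.xml with the JDK's DOM parser and lists the addresses from the standard HA properties `dfs.nameservices`, `dfs.ha.namenodes.<nameservice>` and `dfs.namenode.rpc-address.<nameservice>.<namenode>`. The class and method names are hypothetical, and non-HA configurations are not handled.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper: reads a Hadoop *-site.xml into a name -> value map and
// lists the NameNode RPC addresses (host:port) of each HA nameservice.
class NameNodeAddresses {

    static Map<String, String> readSiteXml(File file) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(file);
        Map<String, String> props = new HashMap<>();
        NodeList list = doc.getElementsByTagName("property");
        for (int i = 0; i < list.getLength(); i++) {
            Element p = (Element) list.item(i);
            NodeList names = p.getElementsByTagName("name");
            NodeList values = p.getElementsByTagName("value");
            if (names.getLength() > 0 && values.getLength() > 0) {
                props.put(names.item(0).getTextContent().trim(),
                          values.item(0).getTextContent().trim());
            }
        }
        return props;
    }

    // dfs.nameservices -> dfs.ha.namenodes.<ns> -> dfs.namenode.rpc-address.<ns>.<nn>
    static List<String> rpcAddresses(Map<String, String> hdfsSite) {
        List<String> out = new ArrayList<>();
        for (String ns : hdfsSite.getOrDefault("dfs.nameservices", "").split(",")) {
            ns = ns.trim();
            if (ns.isEmpty()) {
                continue;
            }
            for (String nn : hdfsSite.getOrDefault("dfs.ha.namenodes." + ns, "").split(",")) {
                String addr = hdfsSite.get("dfs.namenode.rpc-address." + ns + "." + nn.trim());
                if (addr != null) {
                    out.add(addr);
                }
            }
        }
        return out;
    }

    public static void main(String[] args) throws Exception {
        if (args.length != 1) {
            System.out.println("usage: NameNodeAddresses <path to hdfs-site.xml>");
            return;
        }
        for (String addr : rpcAddresses(readSiteXml(new File(args[0])))) {
            System.out.println("NameNode RPC address: " + addr);
        }
    }
}
```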

8. Import the jar packages

In Eclipse, open Window > Preferences > Java > Build Path > User Libraries and create a user library containing the Hadoop jars collected earlier.

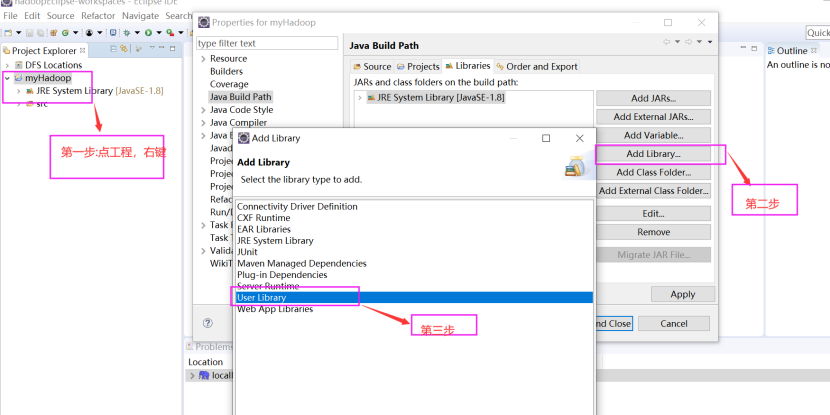

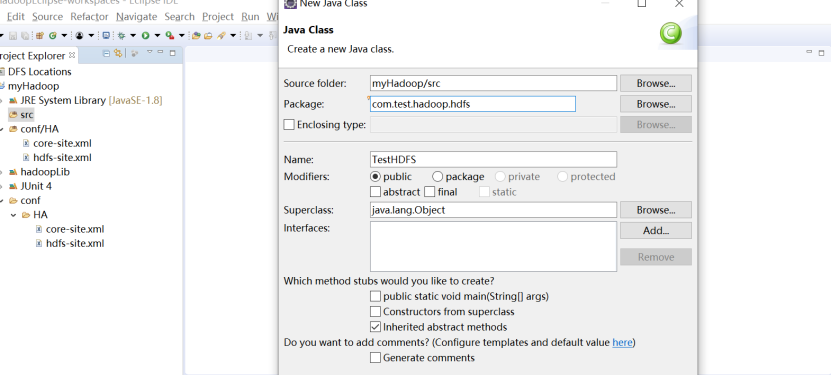

Then create a project in Eclipse: File > New > Project > Java > Java Project.

The Hadoop lib jars do not need to be packaged into the project's own jar; the program only references them through the user library.

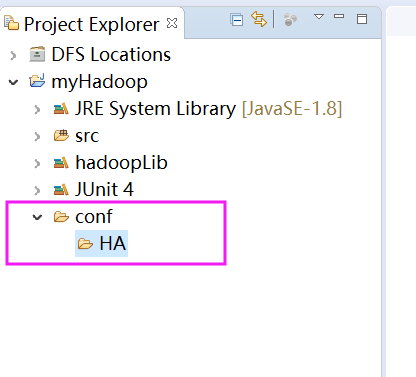

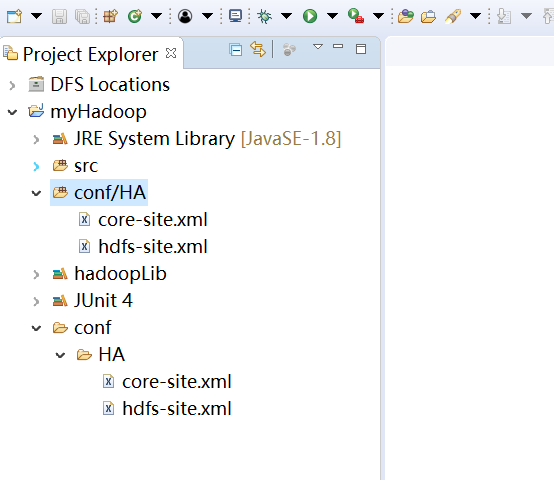

Add the JUnit 4 library to the project. Then create a conf folder in the project, and an HA directory inside conf.

Copy core-site.xml and hdfs-site.xml from the Hadoop cluster into the HA directory.

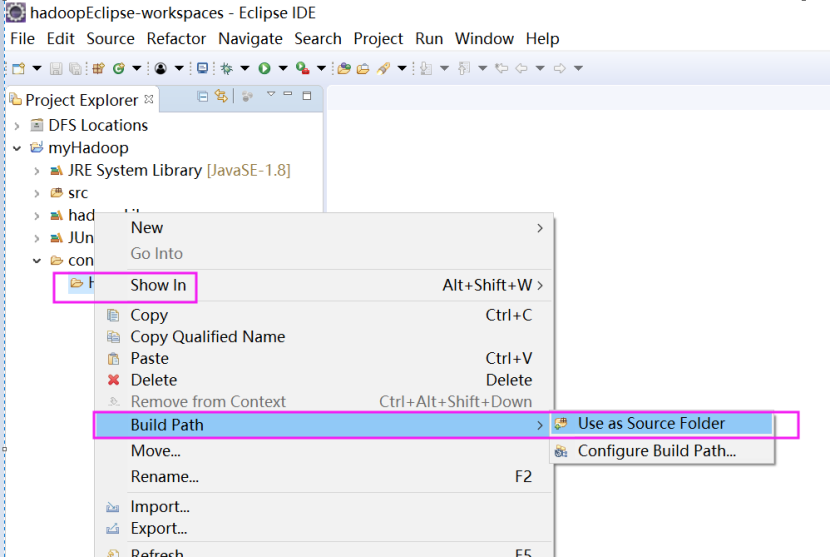

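Then select the HA folder and add it to the build path (for example, right-click > Build Path > Use as Source Folder) so that the two XML files are on the classpath.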
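`new Configuration(true)` in the test code loads core-site.xml from the classpath, and the HDFS client classes likewise add hdfs-site.xml from the classpath, so the two files copied into conf/HA are only used if that folder is on the project's build path. The hypothetical `SiteXmlOnClasspath` class below reports which of the two files a class loader cannot see; running its `main` from inside the project is one way to confirm the setup.

```java
import java.net.URL;
import java.util.ArrayList;
import java.util.List;

// Hypothetical check: reports which Hadoop site files are not visible
// on the classpath of the given class loader.
class SiteXmlOnClasspath {

    static List<String> missing(ClassLoader loader) {
        List<String> out = new ArrayList<>();
        for (String name : new String[] {"core-site.xml", "hdfs-site.xml"}) {
            URL url = loader.getResource(name);
            if (url == null) {
                out.add(name);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> m = missing(Thread.currentThread().getContextClassLoader());
        System.out.println(m.isEmpty()
                ? "core-site.xml and hdfs-site.xml are on the classpath"
                : "Missing from classpath: " + m);
    }
}
```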

Test code:

```java
package com.test.hadoop.hdfs;

import java.io.BufferedInputStream;
import java.io.BufferedOutputStream;
import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.BlockLocation;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

/**
 * HDFS client tests. JUnit 4 does not guarantee method order, so run the
 * tests one at a time in this order: mkDir, upload, downLocal, blockInfo, deleteFile.
 */
public class TestHDFS {

    Configuration conf;
    FileSystem fs;

    @Before
    public void conn() throws IOException {
        // Loads core-default.xml and core-site.xml from the classpath (conf/HA);
        // the HDFS client adds hdfs-site.xml from the classpath as well.
        conf = new Configuration(true);
        fs = FileSystem.get(conf);
    }

    @After
    public void close() throws IOException {
        fs.close();
    }

    // Create a directory (deleting it first if it already exists)
    @Test
    public void mkDir() throws IOException {
        Path ifile = new Path("/ecpliseMkdir");
        if (fs.exists(ifile)) {
            fs.delete(ifile, true);
        }
        fs.mkdirs(ifile);
    }

    // Upload a local file to HDFS
    @Test
    public void upload() throws IOException {
        Path ifile = new Path("/ecpliseMkdir/hello.txt");
        // Open the local file first so a missing file does not leave an empty HDFS file behind
        InputStream input = new BufferedInputStream(
                new FileInputStream(new File("d:\\ywcj_chnl_risk_map_estimate_model.sql")));
        FSDataOutputStream output = fs.create(ifile);
        // The last argument (true) closes both streams when the copy finishes
        IOUtils.copyBytes(input, output, conf, true);
    }

    // Download an HDFS file to the local disk
    @Test
    public void downLocal() throws IOException {
        Path ifile = new Path("/ecpliseMkdir/hello.txt");
        FSDataInputStream open = fs.open(ifile);
        // FileOutputStream creates d:\test.txt if it does not exist yet
        BufferedOutputStream output = new BufferedOutputStream(
                new FileOutputStream(new File("d:\\test.txt")));
        IOUtils.copyBytes(open, output, conf, true);
    }

    // Print the block locations of the file
    @Test
    public void blockInfo() throws IOException {
        Path ifile = new Path("/ecpliseMkdir/hello.txt");
        FileStatus fsu = fs.getFileStatus(ifile);
        BlockLocation[] fileBlockLocations = fs.getFileBlockLocations(ifile, 0, fsu.getLen());
        for (BlockLocation b : fileBlockLocations) {
            System.out.println(b);
        }
    }

    // Delete the file (recursive = true would also allow deleting a directory)
    @Test
    public void deleteFile() throws IOException {
        Path ifile = new Path("/ecpliseMkdir/hello.txt");
        boolean delete = fs.delete(ifile, true);
        if (delete) {
            System.out.println("Delete successful---------");
        }
    }
}
```
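After running `upload()` and then `downLocal()`, one way to confirm the round trip worked is to compare the original local file with the downloaded copy. The sketch below does this with a SHA-256 digest using only the JDK; `RoundTripCheck` is a hypothetical name, and its default paths are the two local files used in the test code.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Arrays;

// Hypothetical check: after upload() and downLocal(), the downloaded copy
// should have the same size and SHA-256 digest as the original local file.
class RoundTripCheck {

    static byte[] sha256(Path file) throws IOException {
        MessageDigest md;
        try {
            md = MessageDigest.getInstance("SHA-256");
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 is not available", e);
        }
        try (InputStream in = Files.newInputStream(file)) {
            byte[] buf = new byte[8192];
            int n;
            while ((n = in.read(buf)) != -1) {
                md.update(buf, 0, n);
            }
        }
        return md.digest();
    }

    static boolean sameContent(Path a, Path b) throws IOException {
        return Files.size(a) == Files.size(b) && Arrays.equals(sha256(a), sha256(b));
    }

    public static void main(String[] args) throws IOException {
        // Defaults are the two local paths used in TestHDFS.upload() and downLocal().
        Path original = Paths.get(args.length > 0 ? args[0] : "d:\\ywcj_chnl_risk_map_estimate_model.sql");
        Path downloaded = Paths.get(args.length > 1 ? args[1] : "d:\\test.txt");
        if (!Files.exists(original) || !Files.exists(downloaded)) {
            System.out.println("Run upload() and downLocal() first");
            return;
        }
        System.out.println(sameContent(original, downloaded) ? "Round trip OK" : "Files differ");
    }
}
```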