The previous post explained how to use Node.js to crawl blog posts from cnblogs (Blog Garden). This time, we are going to download pictures from the web.

The third-party modules needed are:

superagent

superagent-charset

cheerio

express

async (concurrency control)

The complete code can be downloaded from my GitHub. The main logic is in netbian.js.

We will use the Scenery Wallpaper column ( http://www.netbian.com/fengjing/index.htm ) of the Netbian desktop wallpaper site ( http://www.netbian.com/ ) as the example.

1. Analyzing the URLs

It is not difficult to find that:

Home page: column/index.htm

Paging: column/index_<page number>.htm

Knowing this rule, you can download wallpaper in batches.
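The rule above can be sketched as a small helper. The name `buildPageUrl` and its parameters are illustrative and do not come from the original code. The sketch assumes that page 1 is the `index.htm` home page and that later pages follow the `index_<page number>.htm` pattern.

```javascript
// Hypothetical helper: build the list-page URL for a column and a page number.
const DOMAIN = 'http://www.netbian.com';

function buildPageUrl(column, page) {
  // Assumption: page 1 is the column home page, later pages use index_<n>.htm
  const file = page <= 1 ? 'index.htm' : 'index_' + page + '.htm';
  return DOMAIN + '/' + column + '/' + file;
}

console.log(buildPageUrl('fengjing', 1));
console.log(buildPageUrl('fengjing', 3));
```

Looping over a range of page numbers with such a helper gives the list of pages to crawl for one column.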

2. Analyze the wallpaper thumbnails and find the page of the corresponding full-size wallpaper.

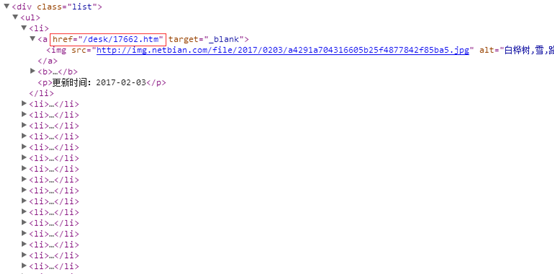

Using Chrome's developer tools, you can see that the thumbnail list sits in the div with class="list", and the href attribute of each a tag points to the page that holds the wallpaper.

Part of the code:

    request
      .get(url)
      .end(function (err, sres) {
        if (err) return console.log(err); // stop if the list page could not be fetched

        var $ = cheerio.load(sres.text);
        var pic_url = []; // links to the medium-size picture pages
        $('.list ul', 0).find('li').each(function (index, ele) {
          var href = $(ele).find('a').eq(0).attr('href'); // link to the medium-size picture page
          if (href != undefined) {
            pic_url.push(url_model.resolve(domain, href));
          }
        });
      });
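The href values on the list page are relative, which is why the code resolves them against the domain. As a minimal sketch of that step, the example below uses Node's built-in WHATWG `URL` class instead of `url_model.resolve` (presumably Node's `url` module). The helper name `toAbsolute` is made up for illustration.

```javascript
// Resolve hrefs taken from the list page against the site root.
const domain = 'http://www.netbian.com';

// Hypothetical helper: turn a relative href into an absolute URL.
function toAbsolute(href) {
  return new URL(href, domain).href;
}

console.log(toAbsolute('/desk/17662.htm'));
```

An href that is already absolute is returned unchanged, so the same helper can be applied to every link.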

3. Continue the analysis with "http://www.netbian.com/desk/17662.htm"

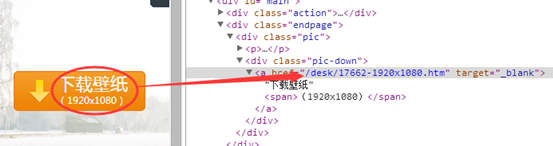

Opening this page shows that the wallpaper displayed here is still not the highest-resolution version.

Click on the link in the Download Wallpaper button to open a new page.

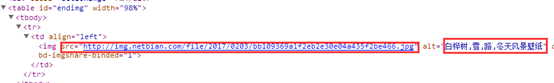

4. Continue the analysis with "http://www.netbian.com/desk/17662-1920x1080.htm"

On this page, the wallpaper we ultimately want to download sits inside a table. As shown below, http://img.netbian.com/file/2017/0203/bb109369a1f2eb2e30e04a435f2be466.jpg is the URL of the image we are finally going to download (the boss behind the scenes appears at last).

Code for downloading pictures:

    request
      .get(wallpaper_down_url)
      .end(function (err, img_res) {
        if (err) return console.log(err); // the request itself failed
        if (img_res.status == 200) {
          // Save the image content
          var file = dir + '/' + wallpaper_down_title + path.extname(path.basename(wallpaper_down_url));
          fs.writeFile(file, img_res.body, 'binary', function (err) {
            if (err) console.log(err);
          });
        }
      });
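The file name in the snippet above is built from the storage directory, the wallpaper title, and the extension of the image URL. A minimal sketch of that step on its own follows. The helper `buildFilePath` and the sample title are hypothetical, and replacing characters that are invalid in file names is an added precaution that the original code does not take.

```javascript
const path = require('path');

// Hypothetical helper: directory + title + extension taken from the image URL.
function buildFilePath(dir, title, imageUrl) {
  const ext = path.extname(new URL(imageUrl).pathname); // e.g. ".jpg"
  // Added precaution (not in the original code): replace characters
  // that are not allowed in file names on common systems.
  const safeTitle = title.replace(/[\\\/:*?"<>|]/g, '_');
  return path.join(dir, safeTitle + ext);
}

console.log(buildFilePath(
  'fengjing1',
  'Sample title',
  'http://img.netbian.com/file/2017/0203/bb109369a1f2eb2e30e04a435f2be466.jpg'
));
```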

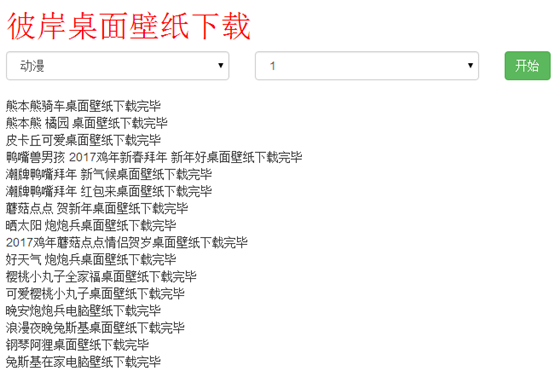

Open the browser and visit http://localhost:1314/fengjing

Select columns and pages and click the Start button:

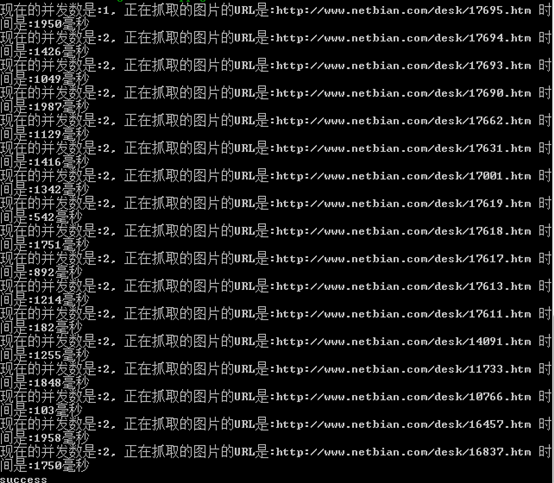

The server is requested concurrently and the pictures are downloaded.

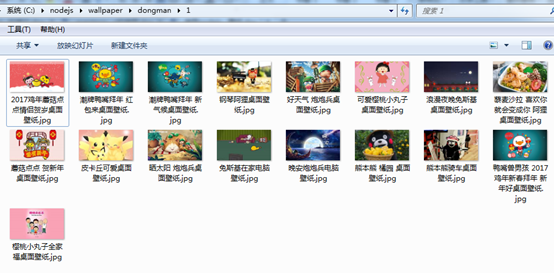

Done ~

The pictures are saved in a directory named after the column plus the page number.
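How the directory is created is not shown in this post, so the following is only a sketch under assumptions. The helper name `ensurePageDir` is made up, the column and page number are assumed to be joined directly (for example `fengjing1`), and the system temp directory is used as the base purely for the demonstration.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: create (if needed) and return the directory
// that stores the pictures of one column page.
function ensurePageDir(baseDir, column, page) {
  const dir = path.join(baseDir, column + page); // assumed format, e.g. "fengjing1"
  fs.mkdirSync(dir, { recursive: true });        // does not fail if the directory exists
  return dir;
}

console.log(ensurePageDir(os.tmpdir(), 'fengjing', 1));
```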

The complete picture download code is attached below:

    var fs = require('fs');
    var path = require('path');
    var url_model = require('url');
    var cheerio = require('cheerio');
    var async = require('async');
    var request = require('superagent');
    // Assumed setup: patches superagent so that requests gain .charset();
    // check the superagent-charset README for the version you install.
    require('superagent-charset')(request);

    /**
     * Download pictures
     * @param {string} url  URL of the thumbnail list page
     * @param {string} dir  Storage directory
     * @param {object} res  Response object used to report the result
     */
    var down_pic = function (url, dir, res) {

      var domain = 'http://www.netbian.com'; // domain name

      request
        .get(url)
        .end(function (err, sres) {
          if (err) return res.send('Failed to fetch the list page: ' + err.message);

          var $ = cheerio.load(sres.text);
          var pic_url = []; // links to the medium-size picture pages
          $('.list ul', 0).find('li').each(function (index, ele) {
            var href = $(ele).find('a').eq(0).attr('href'); // link to the medium-size picture page
            if (href != undefined) {
              pic_url.push(url_model.resolve(domain, href));
            }
          });

          var count = 0; // number of wallpapers currently being processed
          var fetchPic = function (_pic_url, callback) {

            count++; // one more in progress

            // Report the outcome of one wallpaper without aborting the whole batch
            var finish = function (err, title) {
              count--; // one less in progress
              if (err) console.log(err);
              callback(null, (title || _pic_url) + (err ? ' download failed' : ' download completed') + '<br />');
            };

            var delay = parseInt((Math.random() * 10000000) % 2000, 10);
            console.log('Current concurrency: ' + count + ', fetching URL: ' + _pic_url + ', delay: ' + delay + ' ms');
            setTimeout(function () {
              // Get the link to the large-picture page
              request
                .get(_pic_url)
                .end(function (err, ares) {
                  if (err) return finish(err);

                  var $$ = cheerio.load(ares.text);
                  var down_href = $$('.pic-down').find('a').attr('href');
                  if (!down_href) return finish(new Error('No download link on ' + _pic_url));
                  var pic_down = url_model.resolve(domain, down_href); // large-picture page link

                  // Request the large-picture page
                  request
                    .get(pic_down)
                    .charset('gbk') // the page is GBK-encoded, so decode it as GBK
                    .end(function (err, pic_res) {
                      if (err) return finish(err);

                      var $$$ = cheerio.load(pic_res.text);
                      var wallpaper_down_url = $$$('#endimg').find('img').attr('src');   // image URL
                      var wallpaper_down_title = $$$('#endimg').find('img').attr('alt'); // title

                      // Download the large picture
                      request
                        .get(wallpaper_down_url)
                        .end(function (err, img_res) {
                          if (err) return finish(err, wallpaper_down_title);
                          if (img_res.status != 200) {
                            return finish(new Error('Unexpected status ' + img_res.status), wallpaper_down_title);
                          }
                          // Save the image content, then report the result
                          var file = dir + '/' + wallpaper_down_title + path.extname(path.basename(wallpaper_down_url));
                          fs.writeFile(file, img_res.body, 'binary', function (err) {
                            finish(err, wallpaper_down_title);
                          });
                        });
                    });
                });
            }, delay);
          };

          // Download the wallpapers, at most 2 at a time
          async.mapLimit(pic_url, 2, function (_pic_url, callback) {
            fetchPic(_pic_url, callback);
          }, function (err, result) {
            console.log('success');
            res.send(result.join('')); // one status line per wallpaper
          });
        });
    };

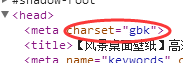

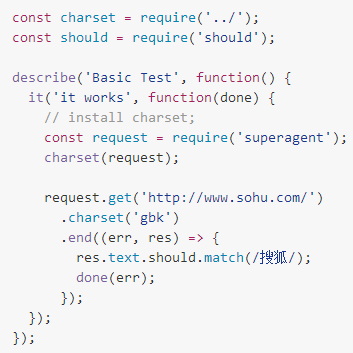

Two points need special attention:

1. The netbian.com pages are encoded in GBK, which Node.js cannot decode natively. The "superagent-charset" module is introduced here to handle the GBK encoding.

See the example on GitHub:

https://github.com/magicdawn/superagent-charset
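To see why the charset matters, the sketch below decodes the same GBK bytes in two ways. It uses Node's built-in `TextDecoder` rather than superagent-charset, and it assumes a Node build with full ICU data (the default in recent official releases), since otherwise the `gbk` label is not available.

```javascript
// Bytes of the text "中文" encoded as GBK.
const gbkBytes = Buffer.from([0xd6, 0xd0, 0xce, 0xc4]);

// Decoding them as UTF-8 produces replacement characters instead of the text.
const wrong = gbkBytes.toString('utf8');

// Decoding with the matching charset recovers the text (assumes full ICU).
const right = new TextDecoder('gbk').decode(gbkBytes);

console.log(wrong.includes('\ufffd'));
console.log(right);
```

The same thing happens to a GBK page read as UTF-8, and that is the problem `.charset('gbk')` addresses in the crawler.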

2. Node.js is asynchronous, so it can send a large number of requests at the same time, and the server may reject them as malicious. The "async" module is therefore used here to limit concurrency, through its mapLimit method.

mapLimit(arr, limit, iterator, callback)

This method has four parameters:

The first parameter is the array.

The second parameter is the number of concurrent requests.

The third parameter is the iterator, a function that receives one element and a callback.

The fourth parameter is the callback invoked after all iterations have finished.

This method passes each element of arr to iterator, running at most limit iterations at the same time, and hands the collected results to the final callback.
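To make the semantics concrete, here is a simplified stand-in written in plain JavaScript. It is not the async library's implementation, and the name `mapLimitSketch` is made up. It only illustrates the idea: start up to `limit` iterations, start another one whenever one finishes, and collect the results in input order.

```javascript
// Simplified stand-in for async.mapLimit, for illustration only
// (hypothetical name; not the library's actual implementation).
function mapLimitSketch(arr, limit, iterator, done) {
  const results = new Array(arr.length);
  let next = 0;       // index of the next element to start
  let running = 0;    // iterations currently in flight
  let finished = 0;   // iterations that have completed
  let failed = false; // set once an iteration reports an error

  if (arr.length === 0) return done(null, results);

  function launch() {
    // Start new iterations until the limit is reached or nothing is left.
    while (!failed && running < limit && next < arr.length) {
      const index = next++;
      running++;
      iterator(arr[index], function (err, value) {
        running--;
        if (failed) return;
        if (err) {
          failed = true;
          return done(err);
        }
        results[index] = value; // keep results in input order
        finished++;
        if (finished === arr.length) return done(null, results);
        launch(); // a slot is free, start the next element
      });
    }
  }

  launch();
}

// Usage: "fetch" five fake URLs, at most two at a time.
const urls = ['a', 'b', 'c', 'd', 'e'];
mapLimitSketch(urls, 2, function (url, callback) {
  setTimeout(function () {
    callback(null, url.toUpperCase());
  }, 100);
}, function (err, results) {
  console.log(err, results);
});
```

In the crawler the iterator is `fetchPic` and the limit is 2, so at most two wallpapers are processed at any moment.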

Afterword

So far, the download of the picture has been completed.

The complete code is already on GitHub. Stars are welcome.

My writing and knowledge are limited. If anything here is incorrect, corrections from fellow bloggers are welcome.