On October 9, I published an article entitled **"Do you know the reason why the server generates a lot of TIME_WAIT?"**

The day after the article was published, a reader pointed out some mistakes in it.

I would like to thank this reader in particular. I see every mistake as an opportunity to improve and grow.

So, in an inquisitive spirit, I consulted relevant material online, asked senior colleagues at my internship, and am now correcting some of the mistakes in "Do you know the reason why the server generates a lot of TIME_WAIT?".

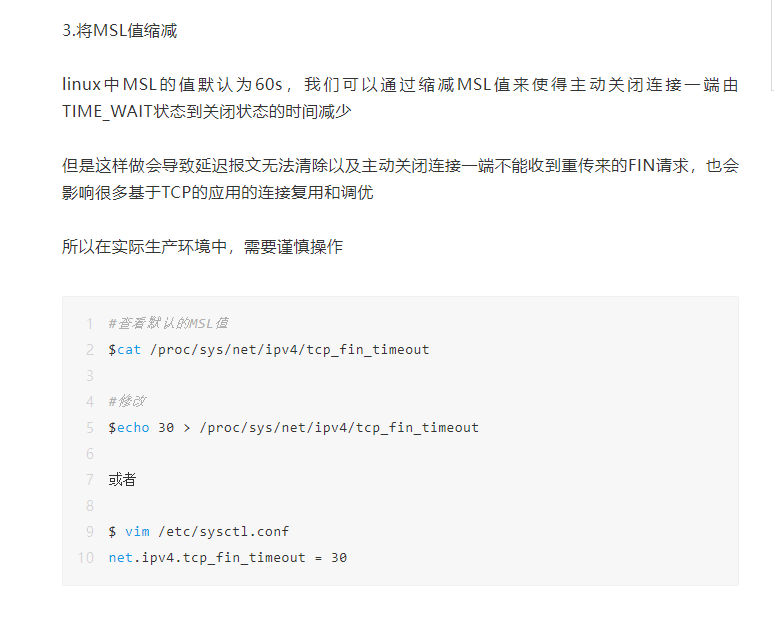

About net.ipv4.tcp_fin_timeout

In "Do you know the reason why the server generates a lot of TIME_WAIT?", I claimed that you can use the kernel parameter:

/proc/sys/net/ipv4/tcp_fin_timeout

to modify the timeout of the TIME_WAIT state.

Later, after an expert reader pointed out the mistake and I checked the relevant documentation, I found that this is not the case.

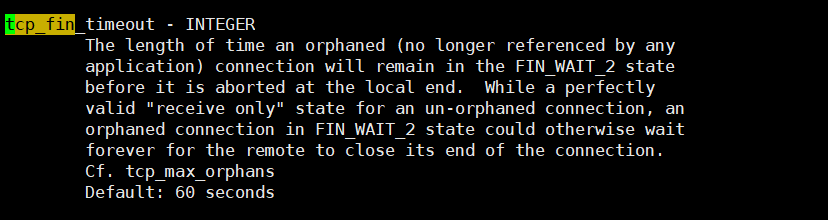

Let's take a look at how this kernel parameter is described.

The Linux kernel source code ships with explanatory documents about kernel parameters and functions, to help people understand them better.

On CentOS and Red Hat systems, we can download the kernel source package from the official website, https://www.kernel.org/, which includes these documents.

Alternatively, we can install the kernel-doc package and read them from there.

Install the package first:

[root@promote]# yum install -y kernel-doc

Then check the installed version:

[root@promote]# rpm -qa kernel-doc

kernel-doc-3.10.0-1160.42.2.el7.noarch

After installing it, we change to the following directory (the path differs between package versions):

[root@promote]# cd /usr/share/doc/kernel-doc-3.10.0/Documentation/

This directory contains documentation about kernel parameters in txt format. We can use the grep command to find the kernel parameter we want to know about.
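As a rough illustration of what such a lookup does, here is a minimal Python sketch that pulls one parameter's entry out of text laid out like ip-sysctl.txt (an unindented `name - TYPE` header followed by indented body lines). The function name `extract_sysctl_doc` and the abbreviated sample text are assumptions made for this example, and the second entry in the sample is a hypothetical placeholder.

```python
def extract_sysctl_doc(text, name):
    """Return the documentation entry for `name`, or None if it is absent."""
    header = f"{name} - "
    entry = []
    capturing = False
    for line in text.splitlines():
        if line.startswith(header):
            capturing = True
            entry.append(line)
        elif capturing:
            # Body lines are indented; an unindented, non-blank line
            # marks the start of the next parameter's entry.
            if line.strip() and not line[0].isspace():
                break
            entry.append(line)
    # Drop trailing blank lines picked up before the next entry.
    while entry and not entry[-1].strip():
        entry.pop()
    return "\n".join(entry) if entry else None


# Abbreviated sample in the same layout as ip-sysctl.txt (not the full text).
SAMPLE = """\
tcp_fin_timeout - INTEGER
\tThe length of time an orphaned connection will remain in the
\tFIN_WAIT_2 state before it is aborted at the local end.
\tDefault: 60 seconds

next_parameter - INTEGER
\t(hypothetical placeholder for the following entry)
"""

print(extract_sysctl_doc(SAMPLE, "tcp_fin_timeout"))
```

To run it against the real file, read the text from the ip-sysctl.txt path of your installed kernel-doc version and pass it in place of `SAMPLE`.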

Here we look up the documentation for the tcp_fin_timeout parameter.

Relevant path:

/usr/share/doc/kernel-doc-3.10.0/Documentation/networking/ip-sysctl.txt

tcp_fin_timeout - INTEGER

The length of time an orphaned (no longer referenced by any application) connection will remain in the FIN_WAIT_2 state before it is aborted at the local end. While a perfectly valid "receive only" state for an un-orphaned connection, an orphaned connection in FIN_WAIT_2 state could otherwise wait forever for the remote to close its end of the connection.

Cf. tcp_max_orphans

Default: 60 seconds

tcp_fin_timeout - INTEGER: For a socket connection closed by the local end, the time TCP stays in the FIN_WAIT_2 state. The peer may close its end, may never close the connection, or its process may die unexpectedly. The default value is 60 seconds; in 2.2 kernels it was 180 seconds. You can change this value, but be careful: if your machine is a heavily loaded web server, you risk filling memory with a large number of dead sockets. FIN_WAIT_2 sockets are less dangerous than FIN_WAIT_1 sockets because each consumes at most 1.5 KB of memory, but they live longer. See also tcp_max_orphans.

From the documentation of tcp_fin_timeout, we can conclude that when the local end actively closes the connection, this value is the time TCP remains in the FIN_WAIT_2 state.

So which parameter really controls the timeout of the TIME_WAIT state?

The following excerpt answers the question:

What actually takes effect in the kernel is a macro definition. In $KERNEL/include/net/tcp.h there is the following line: #define TCP_TIMEWAIT_LEN (60*HZ). This macro is what really controls the timeout of the TCP TIME_WAIT state. If we want to reduce the number of TIME_WAIT sockets (and thereby save a little kernel processing time), we can set this value lower. According to our tests, 10 seconds is an appropriate setting.

Note that this is a compile-time constant in the mainline kernel, so changing it means rebuilding the kernel; it cannot be adjusted through sysctl.
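Before tuning anything, it helps to see how many sockets are really in TIME_WAIT as opposed to FIN_WAIT_2, since the two are easy to confuse. The sketch below counts sockets per state from text in the format of /proc/net/tcp, where the `st` column holds a hexadecimal state code (05 is FIN_WAIT2, 06 is TIME_WAIT). The function name `count_tcp_states` and the sample rows are made up for illustration.

```python
from collections import Counter

# State codes used in the "st" column of /proc/net/tcp.
TCP_STATES = {
    "01": "ESTABLISHED", "02": "SYN_SENT", "03": "SYN_RECV",
    "04": "FIN_WAIT1", "05": "FIN_WAIT2", "06": "TIME_WAIT",
    "07": "CLOSE", "08": "CLOSE_WAIT", "09": "LAST_ACK",
    "0A": "LISTEN", "0B": "CLOSING",
}


def count_tcp_states(proc_net_tcp_text):
    """Count sockets per TCP state from /proc/net/tcp-style text."""
    counts = Counter()
    for line in proc_net_tcp_text.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 4:
            continue
        counts[TCP_STATES.get(fields[3].upper(), "UNKNOWN")] += 1
    return counts


# Made-up sample rows in the /proc/net/tcp layout (illustration only).
SAMPLE = """\
  sl  local_address rem_address   st tx_queue rx_queue tr tm->when retrnsmt   uid  timeout inode
   0: 0100007F:1F90 00000000:0000 0A 00000000:00000000 00:00000000 00000000     0        0 1001
   1: 0100007F:1F90 0100007F:C350 06 00000000:00000000 03:00000BB8 00000000     0        0 0
   2: 0100007F:1F90 0100007F:C351 06 00000000:00000000 03:00000BB8 00000000     0        0 0
   3: 0100007F:1F90 0100007F:C352 05 00000000:00000000 00:00000000 00000000     0        0 0
"""

for state, number in sorted(count_tcp_states(SAMPLE).items()):
    print(f"{state}: {number}")
```

On a Linux machine, passing the contents of /proc/net/tcp instead of `SAMPLE` gives the live IPv4 counts.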

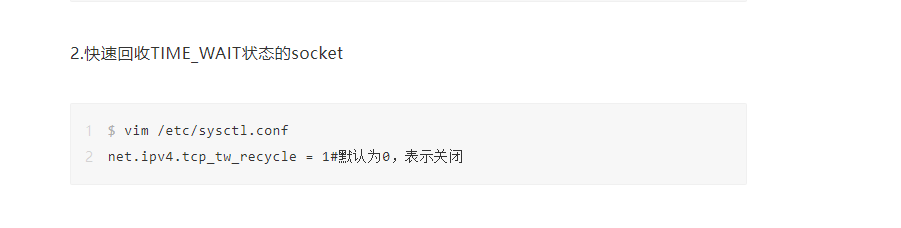

About net.ipv4.tcp_tw_recycle

In "Do you know the reason why the server generates a lot of TIME_WAIT?", I claimed that enabling the parameter:

net.ipv4.tcp_tw_recycle

achieves fast recycling of TIME_WAIT sockets.

The Linux kernel documentation describes net.ipv4.tcp_tw_recycle as follows:

tcp_tw_recycle - BOOLEAN

Enable fast recycling TIME-WAIT sockets. Default value is 0. It should not be changed without advice/request of technical experts.

In other words:

This switch enables fast recycling of TIME_WAIT sockets. It is off (0) by default and should not be changed without the advice or request of technical experts.

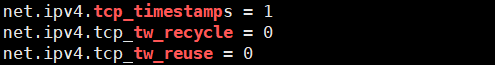

In fact, to get fast recycling of TIME_WAIT sockets, enabling net.ipv4.tcp_tw_recycle alone is not enough. net.ipv4.tcp_timestamps (on by default) must also be enabled for the switch to take effect.

We can check these switches with the following command:

[root@promote]# sysctl -a|grep -E "tcp_timestamp|tw_reuse|tw_recycle"
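The dependency between the two switches can be written down as a small check. This is only a sketch: it parses text in the `key = value` form that sysctl prints, and the helper name `fast_recycle_effective` and the sample output are assumptions for illustration.

```python
def fast_recycle_effective(sysctl_output):
    """Return True only if tcp_tw_recycle and tcp_timestamps are both on."""
    values = {}
    for line in sysctl_output.splitlines():
        key, sep, value = line.partition("=")
        if sep:  # ignore lines that are not "key = value"
            values[key.strip()] = value.strip()

    def enabled(key):
        # A missing key is treated as disabled.
        return values.get(key, "0") != "0"

    return enabled("net.ipv4.tcp_tw_recycle") and enabled("net.ipv4.tcp_timestamps")


# Illustrative output, as the grep filter above might return it.
SAMPLE = """\
net.ipv4.tcp_timestamps = 1
net.ipv4.tcp_tw_recycle = 0
net.ipv4.tcp_tw_reuse = 0
"""

print(fast_recycle_effective(SAMPLE))
```

With the sample values, tcp_tw_recycle is off, so the check reports that fast recycling is not in effect even though timestamps are enabled.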

In addition, I looked into the source code behind net.ipv4.tcp_tw_recycle and found the following:

When this option is turned on, Linux discards any packet from a remote end whose timestamp is smaller than the last timestamp recorded for that same remote end. That is, to use this option, you must ensure that packet timestamps are monotonically increasing.

In general, once tcp_tw_recycle is enabled (it only takes effect when tcp_timestamps is enabled as well), the system records and checks the timestamp for each remote host. If a later connection carries a smaller timestamp than the one recorded before, the system treats it as an invalid connection and rejects and drops it.

This mechanism causes serious problems in some environments, such as NAT. Take LVS load balancing as an example: an LVS typically sits in front of multiple back-end servers.

When client requests arrive at the LVS, the LVS forwards them to a back-end server without modifying their timestamps.

From the back-end server's point of view, all requests appear to come from the LVS, so it treats them as coming from the same host. But the clocks of different clients are not synchronized, so the timestamps arrive out of order. If tcp_tw_recycle is enabled at this point, many requests are discarded: some clients can connect to the server and others cannot.
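The failure mode can be reproduced with a deliberately simplified model. The sketch below keeps one "last seen timestamp" per source IP and drops anything older, which is the essence of the behavior described above; the real kernel check is more involved (for example, it has to handle timestamp wraparound). The class name, the shared address, and the timestamp values are all hypothetical.

```python
class PerHostTimestampCheck:
    """Simplified model: remember the last timestamp seen per source IP."""

    def __init__(self):
        self.last_seen = {}

    def accept(self, source_ip, tsval):
        last = self.last_seen.get(source_ip)
        if last is not None and tsval < last:
            return False  # older than the recorded timestamp: drop it
        self.last_seen[source_ip] = tsval
        return True


# Hypothetical address that both clients share after NAT / LVS forwarding.
SHARED_IP = "203.0.113.10"

server = PerHostTimestampCheck()

# Clients A and B have unrelated clocks, so their timestamps differ widely.
attempts = [("A", 500_000), ("B", 120_000), ("A", 500_100), ("B", 120_050)]

results = []
for client, tsval in attempts:
    accepted = server.accept(SHARED_IP, tsval)
    results.append(accepted)
    print(f"client {client} tsval={tsval}: {'accepted' if accepted else 'dropped'}")
```

In this run client A always gets through, while client B is dropped every time, because its timestamps are smaller than the ones A left behind for the shared address.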

Summary

- tcp_fin_timeout is the time TCP remains in the FIN_WAIT_2 state, not the timeout of the TIME_WAIT state.

- The tcp_tw_recycle option is unsuitable for NAT or load-balanced environments on kernels before 4.10 (and enabling it is not recommended in other cases either), and it was removed in kernel 4.12.

- Connections in the TIME_WAIT state are normal. When there are too many of them, we can try the following optimizations (there is no one-size-fits-all answer; choose according to the actual production environment):

  - Switch from short-lived connections to long-lived (persistent) connections.

  - Widen the server's available port range, or add more service ports, so that the server can accommodate enough TIME_WAIT connections.

  - Set net.ipv4.tcp_max_tw_buckets to a smaller value (the default is often quoted as 18000, but it depends on the kernel version and available memory, so check the current value with sysctl first). Once the number of TIME_WAIT sockets exceeds this value, further TIME_WAIT sockets are destroyed immediately and a warning is printed. This is a blunt approach: the affected connections do not wait the full 2MSL, which can cause abnormal communication.

Finally, I sincerely thank the expert reader who pointed out the shortcomings of my article, which gave me a deeper understanding of these parameters. It also taught me that solving a problem is not just a matter of finding a fix: you have to understand each parameter before you change it, otherwise the result may be a disaster.