Preface

Before starting the deep learning journey, we first have to decide where to set up our "alchemy furnace", that is, the training environment. As far as I know, the common platform combinations are:

- win + anaconda

- win + wsl

- ubuntu + anaconda

- ubuntu + docker

These are the most commonly used combinations. Whether to use GPU or CPU, and TensorFlow or PyTorch, depends on your needs.

1, My platform

My laptop has a 9300H CPU and a GTX 1650 GPU. The GPU is rather low-end, but it is better than nothing and just about good enough. After all, it was bought with my own money, and anything more powerful is beyond my budget.

After a day of tinkering, I decided on win + wsl + docker. My main reasons are as follows:

- I only have one laptop. Setting up dual boot or switching entirely to Linux would more or less interfere with my other work. I also don't want the hassle, and I worry about bricking the machine if I'm not careful;

- Working in WSL directly is not stable enough, and it throws inexplicable errors. For example, pip3 stopped responding; after a week of troubleshooting I still couldn't find the cause, so I simply gave up;

- Anaconda is too slow at creating environments and at starting up;

- Docker is used more and more widely, especially for deployment, so it is worth learning. It also lets you build multiple images, which is much more practical than working in WSL directly.

In summary, I chose the combination of win + wsl + docker.

2, Configuration

1. wsl

There are many online tutorials on installing and uninstalling WSL, so here I only record the commonly used commands.

WSL is managed from PowerShell (cmd also works). To open one, press Win + R, type powershell (or cmd), and press Enter.

List the installed distributions:

    wsl --list

The output is:

    PS C:\Users\ASUS> wsl --list
    Windows Subsystem for Linux Distributions:
    Ubuntu-20.04 (Default)
    Ubuntu-18.04

Unregister (delete) a distribution. Note that this permanently removes the distribution and all of its files:

    wsl --unregister Ubuntu-18.04

2. GPU

This is the crucial step: whether your computer can use the GPU depends on it. Installing CUDA for WSL takes two steps:

Step 1

On Windows (the host), download NVIDIA's WSL-specific GPU driver from the official NVIDIA website and install it.
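Before moving on, it can help to confirm from inside WSL that the Windows driver is actually visible to Linux. This is a minimal sketch that assumes WSL 2, where the driver exposes libcuda.so under /usr/lib/wsl/lib (adjust the directory if yours differs). wsl_driver_visible is a hypothetical helper name.

```shell
# Sketch: check from inside WSL 2 whether the Windows-side NVIDIA driver is visible.
# Assumption: the WSL driver exposes libcuda.so under /usr/lib/wsl/lib;
# pass a different directory as the first argument to override it.
wsl_driver_visible() {
  libdir="${1:-/usr/lib/wsl/lib}"
  for f in "$libdir"/libcuda.so*; do
    # If nothing matches, the glob stays literal and the -e test fails
    [ -e "$f" ] && return 0
  done
  return 1
}

if wsl_driver_visible; then
  echo "NVIDIA driver libraries found; continue with Step 2"
else
  echo "libcuda.so not found; check the Windows NVIDIA driver from Step 1" >&2
fi
```

If the message says the library is missing, reinstall or update the Windows driver before installing the CUDA toolkit.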

Step 2

Install the CUDA toolkit inside WSL.

The main commands are:

    sudo apt-key adv --fetch-keys http://mirrors.aliyun.com/nvidia-cuda/ubuntu2004/x86_64/7fa2af80.pub
    sudo sh -c 'echo "deb http://mirrors.aliyun.com/nvidia-cuda/ubuntu2004/x86_64 /" > /etc/apt/sources.list.d/cuda.list'
    sudo apt-get update
    sudo apt-get install -y cuda-toolkit-11-3

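After installation, configure the environment variables. Open ~/.bashrc and add the following lines at the end:

    export CUDA_HOME=/usr/local/cuda
    export PATH=$PATH:$CUDA_HOME/bin
    export LD_LIBRARY_PATH=/usr/local/cuda-11.3/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

The ${LD_LIBRARY_PATH:+...} part keeps any existing entries instead of overwriting them. Then run source ~/.bashrc (or open a new terminal) so the changes take effect.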
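Editing ~/.bashrc by hand works, but repeating the step appends duplicate lines. This is a minimal sketch of an idempotent version. It assumes bash and uses a hypothetical marker comment to detect a previous run. add_cuda_env is a hypothetical helper name.

```shell
# Sketch: append the CUDA environment variables to a shell rc file only once.
# The target defaults to ~/.bashrc; the marker comment is a hypothetical convention.
add_cuda_env() {
  rc="${1:-$HOME/.bashrc}"
  marker="# >>> cuda-11.3 env >>>"
  # Skip if the block was already added on a previous run
  if grep -qxF "$marker" "$rc" 2>/dev/null; then
    return 0
  fi
  # Quoted 'EOF' keeps $PATH etc. literal so they expand when .bashrc runs
  cat >> "$rc" <<'EOF'
# >>> cuda-11.3 env >>>
export CUDA_HOME=/usr/local/cuda
export PATH=$PATH:$CUDA_HOME/bin
export LD_LIBRARY_PATH=/usr/local/cuda-11.3/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}
EOF
}
```

Usage: define the function in your shell, then run add_cuda_env && source ~/.bashrc. Running it a second time leaves the file unchanged.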

To check the installation, run nvcc -V. If CUDA is installed correctly, you should see output like this:

    z@LAPTO:~$ nvcc -V
    nvcc: NVIDIA (R) Cuda compiler driver
    Copyright (c) 2005-2021 NVIDIA Corporation
    Built on Mon_May__3_19:15:13_PDT_2021
    Cuda compilation tools, release 11.3, V11.3.109
    Build cuda_11.3.r11.3/compiler.29920130_0
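Checking the version by eye is fine once, but a script can pull the release number out of the nvcc -V output. This sketch assumes the "Cuda compilation tools, release X.Y, ..." line format shown above. cuda_release and check_cuda are hypothetical helper names.

```shell
# Sketch: extract the CUDA release (e.g. "11.3") from nvcc -V output on stdin.
# Assumes the "Cuda compilation tools, release X.Y, ..." line format.
cuda_release() {
  sed -n 's/.*release \([0-9][0-9.]*\),.*/\1/p'
}

# Compare the installed release with the expected one; returns 1 on mismatch.
check_cuda() {
  expected="$1"
  actual=$(nvcc -V 2>/dev/null | cuda_release)
  if [ "$actual" = "$expected" ]; then
    echo "CUDA $actual OK"
  else
    echo "expected CUDA $expected, found '${actual:-none}'" >&2
    return 1
  fi
}
```

Usage: check_cuda 11.3 prints a confirmation when the installed toolkit matches.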

Alternatively, compile and run one of the CUDA samples:

    cd /usr/local/cuda/samples/4_Finance/BlackScholes
    sudo make
    ./BlackScholes

3. Docker

First, set up the NVIDIA package repositories:

    distribution=$(. /etc/os-release;echo $ID$VERSION_ID)
    curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -
    curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list
    curl -s -L https://nvidia.github.io/libnvidia-container/experimental/$distribution/libnvidia-container-experimental.list | sudo tee /etc/apt/sources.list.d/libnvidia-container-experimental.list
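The distribution variable in the first line decides which repository list is downloaded. On Ubuntu 20.04, for example, it becomes ubuntu20.04. This sketch does the same computation as reusable functions, assuming a standard os-release file. os_distribution and nvidia_docker_list_url are hypothetical helpers, and the URL pattern is copied from the commands above.

```shell
# Sketch: same result as distribution=$(. /etc/os-release;echo $ID$VERSION_ID).
# Sourcing happens in a subshell so ID, VERSION_ID, etc. don't leak out.
os_distribution() {
  file="${1:-/etc/os-release}"
  ( . "$file" && echo "${ID}${VERSION_ID}" )
}

# Build the nvidia-docker list URL for a given distribution string.
nvidia_docker_list_url() {
  echo "https://nvidia.github.io/nvidia-docker/$1/nvidia-docker.list"
}
```

Usage: nvidia_docker_list_url "$(os_distribution)" prints the URL that the second curl command fetches, which is handy for checking it before piping anything into sudo tee.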

Install nvidia-docker2:

    sudo apt-get update
    sudo apt-get install -y nvidia-docker2

After installation, remember to restart the Docker service:

    sudo service docker stop
    sudo service docker start
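The Docker daemon may need a moment after a restart, so a docker run issued immediately afterwards can fail. A small retry helper is one way to handle this. wait_until_ready is a hypothetical name, and the attempt count is arbitrary.

```shell
# Sketch: run a command up to N times, one second apart, until it succeeds.
# Returns 0 on the first success, 1 if every attempt fails.
wait_until_ready() {
  tries="$1"
  shift
  i=1
  while [ "$i" -le "$tries" ]; do
    "$@" > /dev/null 2>&1 && return 0
    sleep 1
    i=$((i + 1))
  done
  return 1
}
```

Usage: sudo service docker restart && wait_until_ready 10 sudo docker info. This waits up to about ten seconds for the daemon to answer before you run the test container.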

To test the setup, run NVIDIA's nbody sample in a container:

    sudo docker run --gpus all nvcr.io/nvidia/k8s/cuda-sample:nbody nbody -gpu -benchmark

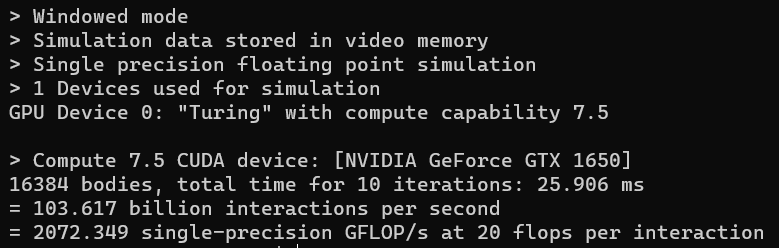

If the installation is successful, the following message appears: