VI. CephFS usage

- https://docs.ceph.com/en/pacific/cephfs/

6.1 deploying MDS services

6.1.1 installing ceph-mds

root@ceph-mgr-01:~# apt -y install ceph-mds

6.1.2 creating the MDS service

ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy mds create ceph-mgr-01

[ceph_deploy.conf][DEBUG ] found configuration file at: /var/lib/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mds create ceph-mgr-01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f82357dae60>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mds at 0x7f82357b9350>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] mds : [('ceph-mgr-01', 'ceph-mgr-01')]

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mds][DEBUG ] Deploying mds, cluster ceph hosts ceph-mgr-01:ceph-mgr-01

ceph@ceph-mgr-01's password:

[ceph-mgr-01][DEBUG ] connection detected need for sudo

ceph@ceph-mgr-01's password:

sudo: unable to resolve host ceph-mgr-01

[ceph-mgr-01][DEBUG ] connected to host: ceph-mgr-01

[ceph-mgr-01][DEBUG ] detect platform information from remote host

[ceph-mgr-01][DEBUG ] detect machine type

[ceph_deploy.mds][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph_deploy.mds][DEBUG ] remote host will use systemd

[ceph_deploy.mds][DEBUG ] deploying mds bootstrap to ceph-mgr-01

[ceph-mgr-01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-mgr-01][WARNIN] mds keyring does not exist yet, creating one

[ceph-mgr-01][DEBUG ] create a keyring file

[ceph-mgr-01][DEBUG ] create path if it doesn't exist

[ceph-mgr-01][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.ceph-mgr-01 osd allow rwx mds allow mon allow profile mds -o /var/lib/ceph/mds/ceph-ceph-mgr-01/keyring

[ceph-mgr-01][INFO ] Running command: sudo systemctl enable ceph-mds@ceph-mgr-01

[ceph-mgr-01][WARNIN] Created symlink /etc/systemd/system/ceph-mds.target.wants/ceph-mds@ceph-mgr-01.service → /lib/systemd/system/ceph-mds@.service.

[ceph-mgr-01][INFO ] Running command: sudo systemctl start ceph-mds@ceph-mgr-01

[ceph-mgr-01][INFO ] Running command: sudo systemctl enable ceph.target

6.2 creating the CephFS metadata and data pools

6.2.1 creating the metadata pool

ceph@ceph-deploy:~/ceph-cluster$ ceph osd pool create cephfs-metadata 32 32
pool 'cephfs-metadata' created

6.2.2 creating the data pool

ceph@ceph-deploy:~/ceph-cluster$ ceph osd pool create cephfs-data 64 64
pool 'cephfs-data' created

6.2.3 viewing Ceph cluster status

ceph@ceph-deploy:~/ceph-cluster$ ceph -s

cluster:

id: 6e521054-1532-4bc8-9971-7f8ae93e8430

health: HEALTH_WARN

3 daemons have recently crashed

services:

mon: 3 daemons, quorum ceph-mon-01,ceph-mon-02,ceph-mon-03 (age 7m)

mgr: ceph-mgr-01(active, since 16m), standbys: ceph-mgr-02

mds: 1/1 daemons up

osd: 9 osds: 9 up (since 44h), 9 in (since 44h)

data:

volumes: 1/1 healthy

pools: 4 pools, 161 pgs

objects: 43 objects, 24 MiB

usage: 1.4 GiB used, 179 GiB / 180 GiB avail

pgs: 161 active+clean

6.3 creating the CephFS file system

6.3.1 command format for creating a CephFS file system

ceph@ceph-deploy:~/ceph-cluster$ ceph fs new -h

General usage:

usage: ceph [-h] [-c CEPHCONF] [-i INPUT_FILE] [-o OUTPUT_FILE]

[--setuser SETUSER] [--setgroup SETGROUP] [--id CLIENT_ID]

[--name CLIENT_NAME] [--cluster CLUSTER]

[--admin-daemon ADMIN_SOCKET] [-s] [-w] [--watch-debug]

[--watch-info] [--watch-sec] [--watch-warn] [--watch-error]

[-W WATCH_CHANNEL] [--version] [--verbose] [--concise]

[-f {json,json-pretty,xml,xml-pretty,plain,yaml}]

[--connect-timeout CLUSTER_TIMEOUT] [--block] [--period PERIOD]

Ceph administration tool

optional arguments:

-h, --help request mon help

-c CEPHCONF, --conf CEPHCONF

ceph configuration file

-i INPUT_FILE, --in-file INPUT_FILE

input file, or "-" for stdin

-o OUTPUT_FILE, --out-file OUTPUT_FILE

output file, or "-" for stdout

--setuser SETUSER set user file permission

--setgroup SETGROUP set group file permission

--id CLIENT_ID, --user CLIENT_ID

client id for authentication

--name CLIENT_NAME, -n CLIENT_NAME

client name for authentication

--cluster CLUSTER cluster name

--admin-daemon ADMIN_SOCKET

submit admin-socket commands ("help" for help)

-s, --status show cluster status

-w, --watch watch live cluster changes

--watch-debug watch debug events

--watch-info watch info events

--watch-sec watch security events

--watch-warn watch warn events

--watch-error watch error events

-W WATCH_CHANNEL, --watch-channel WATCH_CHANNEL

watch live cluster changes on a specific channel

(e.g., cluster, audit, cephadm, or '*' for all)

--version, -v display version

--verbose make verbose

--concise make less verbose

-f {json,json-pretty,xml,xml-pretty,plain,yaml}, --format {json,json-pretty,xml,xml-pretty,plain,yaml}

--connect-timeout CLUSTER_TIMEOUT

set a timeout for connecting to the cluster

--block block until completion (scrub and deep-scrub only)

--period PERIOD, -p PERIOD

polling period, default 1.0 second (for polling

commands only)

Local commands:

ping <mon.id> Send simple presence/life test to a mon

<mon.id> may be 'mon.*' for all mons

daemon {type.id|path} <cmd>

Same as --admin-daemon, but auto-find admin socket

daemonperf {type.id | path} [stat-pats] [priority] [<interval>] [<count>]

daemonperf {type.id | path} list|ls [stat-pats] [priority]

Get selected perf stats from daemon/admin socket

Optional shell-glob comma-delim match string stat-pats

Optional selection priority (can abbreviate name):

critical, interesting, useful, noninteresting, debug

List shows a table of all available stats

Run <count> times (default forever),

once per <interval> seconds (default 1)

Monitor commands:

fs new <fs_name> <metadata> <data> [--force] [--allow-dangerous-metadata-overlay] make new filesystem using named pools <metadata> and <data>

6.3.2 creating the CephFS file system

- A data pool can back only one CephFS file system.

ceph@ceph-deploy:~/ceph-cluster$ ceph fs new wgscephfs cephfs-metadata cephfs-data
new fs with metadata pool 7 and data pool 8

6.3.3 viewing the created CephFS file system

ceph@ceph-deploy:~/ceph-cluster$ ceph fs ls
name: wgscephfs, metadata pool: cephfs-metadata, data pools: [cephfs-data ]

6.3.4 viewing the specified CephFS file system status

ceph@ceph-deploy:~/ceph-cluster$ ceph fs status wgscephfs

wgscephfs - 0 clients

=========

RANK STATE MDS ACTIVITY DNS INOS DIRS CAPS

0 active ceph-mgr-01 Reqs: 0 /s 10 13 12 0

POOL TYPE USED AVAIL

cephfs-metadata metadata 96.0k 56.2G

cephfs-data data 0 56.2G

MDS version: ceph version 16.2.6 (ee28fb57e47e9f88813e24bbf4c14496ca299d31) pacific (stable)

6.3.5 enabling multiple file systems

- Each additional CephFS file system needs its own metadata and data pools.

ceph@ceph-deploy:~/ceph-cluster$ ceph fs flag set enable_multiple true

6.4 verifying CephFS service status

ceph@ceph-deploy:~/ceph-cluster$ ceph mds stat

wgscephfs:1 {0=ceph-mgr-01=up:active}

6.5 creating client accounts

6.5.1 creating accounts

ceph@ceph-deploy:~/ceph-cluster$ ceph auth add client.wgs mon 'allow rw' mds 'allow rw' osd 'allow rwx pool=cephfs-data'
added key for client.wgs

6.5.2 verifying account information

ceph@ceph-deploy:~/ceph-cluster$ ceph auth get client.wgs

[client.wgs]

key = AQCrhk5htve9AxAAED3UAwf2P/5YFjBPVoNayw==

caps mds = "allow rw"

caps mon = "allow rw"

caps osd = "allow rwx pool=cephfs-data"

exported keyring for client.wgs

6.5.3 creating the user keyring file

ceph@ceph-deploy:~/ceph-cluster$ ceph auth get client.wgs -o ceph.client.wgs.keyring
exported keyring for client.wgs

6.5.4 creating the key file

ceph@ceph-deploy:~/ceph-cluster$ ceph auth print-key client.wgs > wgs.key
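The key file holds only the base64 secret that also appears on the `key = ...` line of the keyring. If only the keyring is at hand, the secret can be pulled out of it with a small shell function. This is a minimal sketch, assuming the keyring layout printed by `ceph auth get` in this post; `keyring_to_key` is a hypothetical name, not a Ceph tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the base64 secret stored on the "key = ..." line
# of a Ceph keyring file (layout as shown by `ceph auth get` above).
keyring_to_key() {
  local keyring=$1
  # Strip everything up to and including "key =", keep the rest of the line
  # (including the trailing "==" base64 padding), and stop after the first key.
  sed -n 's/^[[:space:]]*key[[:space:]]*=[[:space:]]*//p' "$keyring" | head -n 1
}

# Example: keyring_to_key ceph.client.wgs.keyring > wgs.key
```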

6.5.5 verifying the user keyring file

ceph@ceph-deploy:~/ceph-cluster$ cat ceph.client.wgs.keyring

[client.wgs]

key = AQCrhk5htve9AxAAED3UAwf2P/5YFjBPVoNayw==

caps mds = "allow rw"

caps mon = "allow rw"

caps osd = "allow rwx pool=cephfs-data"

6.6 installing the Ceph client

6.6.1 client ceph-client-centos7-01

6.6.1.1 configuring the repository

[root@ceph-client-centos7-01 ~]# yum -y install epel-release
[root@ceph-client-centos7-01 ~]# yum -y install https://mirrors.aliyun.com/ceph/rpm-octopus/el7/noarch/ceph-release-1-1.el7.noarch.rpm

6.6.1.2 installing ceph-common

[root@ceph-client-centos7-01 ~]# yum -y install ceph-common

6.6.2 client ceph-client-ubuntu20.04-01

6.6.2.1 configuring the repository

root@ceph-client-ubuntu20.04-01:~# wget -q -O- 'https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc' | sudo apt-key add -
OK
root@ceph-client-ubuntu20.04-01:~# echo "deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific $(lsb_release -cs) main" >> /etc/apt/sources.list
root@ceph-client-ubuntu20.04-01:~# apt -y update && apt -y upgrade
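The `echo ... >> /etc/apt/sources.list` step appends unconditionally, so running it a second time (this post repeats it in 6.10.1.2) leaves a duplicate `deb` line, which apt typically reports as a target configured multiple times. A minimal sketch of an idempotent append, assuming bash and grep; `append_once` is a hypothetical helper, and the `sources.list.d/ceph.list` path in the example comment is an assumption rather than what the original step uses.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append LINE to FILE only if FILE does not already
# contain that exact line.
append_once() {
  local line=$1 file=$2
  touch "$file"
  # -q: quiet, -x: whole-line match, -F: fixed string (no regex)
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Illustrative call; the target file name is an assumption:
# append_once "deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific $(lsb_release -cs) main" \
#   /etc/apt/sources.list.d/ceph.list
```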

6.6.2.2 installing ceph-common

root@ceph-client-ubuntu20.04-01:~# apt -y install ceph-common

6.7 copying the authentication files to the clients

ceph@ceph-deploy:~/ceph-cluster$ scp ceph.conf ceph.client.wgs.keyring wgs.key root@ceph-client-ubuntu20.04-01:/etc/ceph
ceph@ceph-deploy:~/ceph-cluster$ scp ceph.conf ceph.client.wgs.keyring wgs.key root@ceph-client-centos7-01:/etc/ceph

6.8 verifying client authentication

6.8.1 client ceph-client-centos7-01

[root@ceph-client-centos7-01 ~]# ceph --id wgs -s

cluster:

id: 6e521054-1532-4bc8-9971-7f8ae93e8430

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-mon-01,ceph-mon-02,ceph-mon-03 (age 6h)

mgr: ceph-mgr-01(active, since 17h), standbys: ceph-mgr-02

mds: 1/1 daemons up

osd: 9 osds: 9 up (since 2d), 9 in (since 2d)

data:

volumes: 1/1 healthy

pools: 4 pools, 161 pgs

objects: 43 objects, 24 MiB

usage: 1.4 GiB used, 179 GiB / 180 GiB avail

pgs: 161 active+clean

6.8.2 client ceph-client-ubuntu20.04-01

root@ceph-client-ubuntu20.04-01:~# ceph --id wgs -s

cluster:

id: 6e521054-1532-4bc8-9971-7f8ae93e8430

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-mon-01,ceph-mon-02,ceph-mon-03 (age 6h)

mgr: ceph-mgr-01(active, since 17h), standbys: ceph-mgr-02

mds: 1/1 daemons up

osd: 9 osds: 9 up (since 2d), 9 in (since 2d)

data:

volumes: 1/1 healthy

pools: 4 pools, 161 pgs

objects: 43 objects, 24 MiB

usage: 1.4 GiB used, 179 GiB / 180 GiB avail

pgs: 161 active+clean

6.9 mounting CephFS with the kernel client (recommended)

6.9.1 checking whether the clients can mount CephFS

6.9.1.1 checking client ceph-client-centos7-01

[root@ceph-client-centos7-01 ~]# stat /sbin/mount.ceph
File: '/sbin/mount.ceph'
Size: 195512 Blocks: 384 IO Block: 4096 regular file
Device: fd01h/64769d Inode: 51110858 Links: 1
Access: (0755/-rwxr-xr-x) Uid: ( 0/ root) Gid: ( 0/ root)
Access: 2021-09-25 11:52:07.544069156 +0800
Modify: 2021-08-06 01:48:44.000000000 +0800
Change: 2021-09-23 13:57:21.674953501 +0800
Birth: -

6.9.1.2 checking client ceph-client-ubuntu20.04-01

root@ceph-client-ubuntu20.04-01:~# stat /sbin/mount.ceph
File: /sbin/mount.ceph
Size: 260520 Blocks: 512 IO Block: 4096 regular file
Device: fc02h/64514d Inode: 402320 Links: 1
Access: (0755/-rwxr-xr-x) Uid: ( 0/ root) Gid: ( 0/ root)
Access: 2021-09-25 11:54:38.642951083 +0800
Modify: 2021-09-16 22:38:17.000000000 +0800
Change: 2021-09-22 18:01:23.708934550 +0800
Birth: -
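Both clients have `mount.ceph` in place; it ships in the `ceph-common` package installed earlier. When many clients need checking, a scripted test is handier than reading `stat` output. A minimal sketch, assuming bash; `has_mount_helper` is a hypothetical name, and it only checks that the file exists and is executable, not that the kernel can actually mount CephFS.

```shell
#!/usr/bin/env bash
# Hypothetical helper: return 0 if the mount helper exists and is executable,
# 1 otherwise. Defaults to /sbin/mount.ceph, the path checked with stat above.
has_mount_helper() {
  local helper=${1:-/sbin/mount.ceph}
  if [ -x "$helper" ]; then
    echo "found: $helper"
    return 0
  fi
  echo "missing: $helper (install ceph-common)" >&2
  return 1
}
```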

6.9.2 mounting CephFS with a key file

6.9.2.1 command format for mounting with a key file (recommended)

mount -t ceph {mon01:socket},{mon02:socket},{mon03:socket}:/ {mount-point} -o name={name},secretfile={key_path}

6.9.2.2 client ceph-client-centos7-01 mounts CephFS

[root@ceph-client-centos7-01 ~]# mkdir /data/cephfs-data

[root@ceph-client-centos7-01 ~]# mount -t ceph 172.16.10.148:6789,172.16.10.110:6789,172.16.10.182:6789:/ /data/cephfs-data -o name=wgs,secretfile=/etc/ceph/wgs.key

[root@ceph-client-centos7-01 ~]# df -TH

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs tmpfs 2.0G 194M 1.9G 10% /run

tmpfs tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/vda1 xfs 22G 5.9G 16G 28% /

/dev/vdb xfs 215G 9.1G 206G 5% /data

tmpfs tmpfs 399M 0 399M 0% /run/user/1000

tmpfs tmpfs 399M 0 399M 0% /run/user/1003

172.16.10.148:6789,172.16.10.110:6789,172.16.10.182:6789:/ ceph 61G 0 61G 0% /data/cephfs-data

[root@ceph-client-centos7-01 ~]# stat -f /data/cephfs-data/

File: "/data/cephfs-data/"

ID: de6f23f7f8cf0cfc Namelen: 255 Type: ceph

Block size: 4194304 Fundamental block size: 4194304

Blocks: Total: 14397 Free: 14397 Available: 14397

Inodes: Total: 1 Free: -1

6.9.2.3 client ceph-client-ubuntu20.04-01 mounts CephFS

root@ceph-client-ubuntu20.04-01:~# mkdir /data/cephfs-data

root@ceph-client-ubuntu20.04-01:~# mount -t ceph 172.16.10.148:6789,172.16.10.110:6789,172.16.10.182:6789:/ /data/cephfs-data -o name=wgs,secretfile=/etc/ceph/wgs.key

root@ceph-client-ubuntu20.04-01:~# df -TH

Filesystem Type Size Used Avail Use% Mounted on

udev devtmpfs 4.1G 0 4.1G 0% /dev

tmpfs tmpfs 815M 1.1M 814M 1% /run

/dev/vda2 ext4 22G 13G 7.9G 61% /

tmpfs tmpfs 4.1G 0 4.1G 0% /dev/shm

tmpfs tmpfs 5.3M 0 5.3M 0% /run/lock

tmpfs tmpfs 4.1G 0 4.1G 0% /sys/fs/cgroup

/dev/vdb ext4 528G 19G 483G 4% /data

172.16.10.148:6789,172.16.10.110:6789,172.16.10.182:6789:/ ceph 61G 0 61G 0% /data/cephfs-data

root@ceph-client-ubuntu20.04-01:~# stat -f /data/cephfs-data/

File: "/data/cephfs-data/"

ID: de6f23f7f8cf0cfc Namelen: 255 Type: ceph

Block size: 4194304 Fundamental block size: 4194304

Blocks: Total: 14397 Free: 14397 Available: 14397

Inodes: Total: 1 Free: -1

6.9.3 mounting CephFS with the key

6.9.3.1 command format for mounting with the key

Mount the CephFS root directory:

mount -t ceph {mon01:socket},{mon02:socket},{mon03:socket}:/ {mount-point} -o name={name},secret={value}

Mount a CephFS subdirectory:

mount -t ceph {mon01:socket},{mon02:socket},{mon03:socket}:/{subvolume/dir1/dir2} {mount-point} -o name={name},secret={value}
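The pieces of the format (monitor list, CephFS path, mount point, user name, credential) can be assembled by a small function, which helps avoid typos in the long monitor list. A sketch, assuming bash; `ceph_mount_cmd` is hypothetical and only prints the command rather than running it. It emits `secretfile=` rather than `secret=`, since passing the key inline can leave it visible in shell history.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a kernel-client mount command built from the
# format above. Usage: ceph_mount_cmd MONS NAME SECRETFILE MOUNTPOINT [SUBDIR]
ceph_mount_cmd() {
  local mons=$1 name=$2 secretfile=$3 mountpoint=$4 subdir=${5:-/}
  # Normalize the CephFS path so it always starts with exactly one "/".
  subdir="/${subdir#/}"
  printf 'mount -t ceph %s:%s %s -o name=%s,secretfile=%s\n' \
    "$mons" "$subdir" "$mountpoint" "$name" "$secretfile"
}
```

For example, `ceph_mount_cmd 172.16.10.148:6789,172.16.10.110:6789,172.16.10.182:6789 wgs /etc/ceph/wgs.key /data/cephfs-data` prints the same command used on the clients in 6.9.2.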

6.9.3.2 viewing the key

ceph@ceph-deploy:~/ceph-cluster$ cat wgs.key
AQCrhk5htve9AxAAED3UAwf2P/5YFjBPVoNayw==

6.9.3.3 client ceph-client-centos7-01 mounts CephFS

[root@ceph-client-centos7-01 ~]# mkdir /data/cephfs-data

[root@ceph-client-centos7-01 ~]# mount -t ceph 172.16.10.148:6789,172.16.10.110:6789,172.16.10.182:6789:/ /data/cephfs-data -o name=wgs,secret=AQCrhk5htve9AxAAED3UAwf2P/5YFjBPVoNayw==

[root@ceph-client-centos7-01 ~]# df -TH

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs tmpfs 2.0G 194M 1.9G 10% /run

tmpfs tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/vda1 xfs 22G 5.9G 16G 28% /

/dev/vdb xfs 215G 9.1G 206G 5% /data

tmpfs tmpfs 399M 0 399M 0% /run/user/1000

tmpfs tmpfs 399M 0 399M 0% /run/user/1003

172.16.10.148:6789,172.16.10.110:6789,172.16.10.182:6789:/ ceph 61G 0 61G 0% /data/cephfs-data

[root@ceph-client-centos7-01 ~]# stat -f /data/cephfs-data/

File: "/data/cephfs-data/"

ID: de6f23f7f8cf0cfc Namelen: 255 Type: ceph

Block size: 4194304 Fundamental block size: 4194304

Blocks: Total: 14397 Free: 14397 Available: 14397

Inodes: Total: 1 Free: -1

6.9.3.4 client ceph-client-ubuntu20.04-01 mounts CephFS

root@ceph-client-ubuntu20.04-01:~# mkdir /data/cephfs-data

root@ceph-client-ubuntu20.04-01:~# mount -t ceph 172.16.10.148:6789,172.16.10.110:6789,172.16.10.182:6789:/ /data/cephfs-data -o name=wgs,secret=AQCrhk5htve9AxAAED3UAwf2P/5YFjBPVoNayw==

root@ceph-client-ubuntu20.04-01:~# df -TH

Filesystem Type Size Used Avail Use% Mounted on

udev devtmpfs 4.1G 0 4.1G 0% /dev

tmpfs tmpfs 815M 1.1M 814M 1% /run

/dev/vda2 ext4 22G 13G 7.9G 61% /

tmpfs tmpfs 4.1G 0 4.1G 0% /dev/shm

tmpfs tmpfs 5.3M 0 5.3M 0% /run/lock

tmpfs tmpfs 4.1G 0 4.1G 0% /sys/fs/cgroup

/dev/vdb ext4 528G 19G 483G 4% /data

172.16.10.148:6789,172.16.10.110:6789,172.16.10.182:6789:/ ceph 61G 0 61G 0% /data/cephfs-data

root@ceph-client-ubuntu20.04-01:~# stat -f /data/cephfs-data/

File: "/data/cephfs-data/"

ID: de6f23f7f8cf0cfc Namelen: 255 Type: ceph

Block size: 4194304 Fundamental block size: 4194304

Blocks: Total: 14397 Free: 14397 Available: 14397

Inodes: Total: 1 Free: -1

6.9.4 writing data on a client and verifying it

6.9.4.1 client ceph-client-centos7-01 writes data

[root@ceph-client-centos7-01 ~]# cd /data/cephfs-data/
[root@ceph-client-centos7-01 cephfs-data]# echo "ceph-client-centos7-01" > ceph-client-centos7-01
[root@ceph-client-centos7-01 cephfs-data]# ls -l
total 1
-rw-r--r-- 1 root root 23 Sep 25 12:28 ceph-client-centos7-01

6.9.4.2 client ceph-client-ubuntu20.04-01 verifies data sharing

root@ceph-client-ubuntu20.04-01:~# cd /data/cephfs-data/
root@ceph-client-ubuntu20.04-01:/data/cephfs-data# ls -l
total 1
-rw-r--r-- 1 root root 23 Sep 25 12:28 ceph-client-centos7-01
root@ceph-client-ubuntu20.04-01:/data/cephfs-data# cat ceph-client-centos7-01
ceph-client-centos7-01
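The manual check above (write a file on one client, read it back on the other) can be scripted. A minimal sketch, assuming bash and that both clients mount the file system at the same path; `write_marker` and `read_marker` are hypothetical helpers. Run `write_marker /data/cephfs-data` on the first client, then `read_marker /data/cephfs-data ceph-client-centos7-01` on the second; the marker name defaults to the writer's `hostname`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create DIR/NAME containing NAME (default: hostname).
write_marker() {
  local dir=$1 name=${2:-$(hostname)}
  printf '%s\n' "$name" > "$dir/$name"
}

# Hypothetical helper: succeed only if DIR/NAME exists and contains NAME.
read_marker() {
  local dir=$1 name=$2
  [ "$(cat "$dir/$name" 2>/dev/null)" = "$name" ]
}
```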

6.9.5 unmounting CephFS on the clients

6.9.5.1 client ceph-client-centos7-01 unmounts CephFS

[root@ceph-client-centos7-01 ~]# umount /data/cephfs-data/

6.9.5.2 client ceph-client-ubuntu20.04-01 unmounts CephFS

root@ceph-client-ubuntu20.04-01:~# umount /data/cephfs-data

6.9.6 mounting CephFS at boot

6.9.6.1 client ceph-client-centos7-01 mounts CephFS at boot

[root@ceph-client-centos7-01 ~]# cat /etc/fstab
172.16.10.148:6789,172.16.10.110:6789,172.16.10.182:6789:/ /data/cephfs-data ceph defaults,name=wgs,secretfile=/etc/ceph/wgs.key,noatime,_netdev 0 2

6.9.6.2 client ceph-client-ubuntu20.04-01 mounts CephFS at boot

root@ceph-client-ubuntu20.04-01:~# cat /etc/fstab
172.16.10.148:6789,172.16.10.110:6789,172.16.10.182:6789:/ /data/cephfs-data ceph defaults,name=wgs,secretfile=/etc/ceph/wgs.key,noatime,_netdev 0 2
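Hand-editing fstab options is error-prone, and a misspelled option only shows up when the mount fails. Generating the entry from its parts keeps it consistent across clients. A sketch, assuming bash; `ceph_fstab_line` is a hypothetical helper that mirrors the entry above (including `0 2`) and only prints the line. Running `mount -a` after editing `/etc/fstab` mounts all listed entries, so problems surface before the next reboot.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an /etc/fstab entry for a kernel CephFS mount.
# noatime skips access-time updates; _netdev marks it as a network file
# system so it is mounted only after networking is available.
ceph_fstab_line() {
  local mons=$1 mountpoint=$2 name=$3 secretfile=$4
  printf '%s:/ %s ceph defaults,name=%s,secretfile=%s,noatime,_netdev 0 2\n' \
    "$mons" "$mountpoint" "$name" "$secretfile"
}
```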

6.10 mounting CephFS in user space (ceph-fuse)

- Since Ceph 10.x (Jewel), the kernel client should be used with at least a 4.x kernel. On older kernels, use the FUSE client instead of the kernel client.

6.10.1 configuring the client repositories

6.10.1.1 configuring the yum repository on client ceph-client-centos7-01

[root@ceph-client-centos7-01 ~]# yum -y install epel-release
[root@ceph-client-centos7-01 ~]# yum -y install https://mirrors.aliyun.com/ceph/rpm-octopus/el7/noarch/ceph-release-1-1.el7.noarch.rpm

6.10.1.2 adding the apt repository on client ceph-client-ubuntu20.04-01

root@ceph-client-ubuntu20.04-01:~# wget -q -O- 'https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc' | sudo apt-key add -
OK
root@ceph-client-ubuntu20.04-01:~# echo "deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific $(lsb_release -cs) main" >> /etc/apt/sources.list
root@ceph-client-ubuntu20.04-01:~# apt -y update && apt -y upgrade

6.10.2 installing ceph-fuse on the clients

6.10.2.1 installing ceph-fuse on client ceph-client-centos7-01

[root@ceph-client-centos7-01 ~]# yum -y install ceph-common ceph-fuse

6.10.2.2 installing ceph-fuse on client ceph-client-ubuntu20.04-01

root@ceph-client-ubuntu20.04-01:~# apt -y install ceph-common ceph-fuse

6.10.3 copying the authentication files to the clients

ceph@ceph-deploy:~/ceph-cluster$ scp ceph.conf ceph.client.wgs.keyring root@ceph-client-ubuntu20.04-01:/etc/ceph
ceph@ceph-deploy:~/ceph-cluster$ scp ceph.conf ceph.client.wgs.keyring root@ceph-client-centos7-01:/etc/ceph

6.10.4 mounting CephFS with ceph-fuse

6.10.4.1 ceph-fuse usage

root@ceph-client-centos7-01:~# ceph-fuse -h

usage: ceph-fuse [-n client.username] [-m mon-ip-addr:mon-port] <mount point> [OPTIONS]

--client_mountpoint/-r <sub_directory>

use sub_directory as the mounted root, rather than the full Ceph tree.

usage: ceph-fuse mountpoint [options]

general options:

-o opt,[opt...] mount options

-h --help print help

-V --version print version

FUSE options:

-d -o debug enable debug output (implies -f)

-f foreground operation

-s disable multi-threaded operation

--conf/-c FILE read configuration from the given configuration file

--id ID set ID portion of my name

--name/-n TYPE.ID set name

--cluster NAME set cluster name (default: ceph)

--setuser USER set uid to user or uid (and gid to user's gid)

--setgroup GROUP set gid to group or gid

--version show version and quit

-o opt,[opt...] Mount options.

-d Run in the foreground, send all log output to stderr, and enable FUSE debugging (-o debug).

-c ceph.conf, --conf=ceph.conf Use the given ceph.conf at startup, instead of the default /etc/ceph/ceph.conf, to determine the monitor addresses.

-m monaddress[:port] Connect to the specified mon node (instead of looking it up in ceph.conf).

-n client.{cephx-username} Name of the CephX user whose key is used for mounting.

-k <path-to-keyring> Path to the keyring; useful when it is not in the standard location.

--client_mountpoint/-r root_directory Use root_directory as the root of the mount instead of the full Ceph tree.

-f Run in the foreground. Do not generate a pid file.

-s Disable multithreading.

Usage examples

ceph-fuse --id {name} -m {mon01:socket},{mon02:socket},{mon03:socket} {mountpoint}

Mount a specific CephFS directory

ceph-fuse --id wgs -r /path/to/dir /data/cephfs-data

Specify the path to the user keyring file

ceph-fuse --id wgs -k /path/to/keyring /data/cephfs-data

Mount a specific file system when several CephFS file systems exist

ceph-fuse --id wgs --client_fs mycephfs2 /data/cephfs-data

6.10.4.2 client ceph-client-centos7-01 mounts CephFS with ceph-fuse

[root@ceph-client-centos7-01 ~]# ceph-fuse --id wgs -m 172.16.10.148:6789,172.16.10.110:6789,172.16.10.182:6789 /data/cephfs-data
ceph-fuse[8979]: starting ceph client
2021-09-25T14:24:32.258+0800 7f2934e9df40 -1 init, newargv = 0x556e4ebb1300 newargc=9
ceph-fuse[8979]: starting fuse
[root@ceph-client-centos7-01 ~]# df -TH
Filesystem Type Size Used Avail Use% Mounted on
devtmpfs devtmpfs 2.0G 0 2.0G 0% /dev
tmpfs tmpfs 2.0G 0 2.0G 0% /dev/shm
tmpfs tmpfs 2.0G 194M 1.9G 10% /run
tmpfs tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup
/dev/vda1 xfs 22G 6.0G 16G 28% /
/dev/vdb xfs 215G 9.2G 206G 5% /data
tmpfs tmpfs 399M 0 399M 0% /run/user/1000
tmpfs tmpfs 399M 0 399M 0% /run/user/1003
ceph-fuse fuse.ceph-fuse 61G 0 61G 0% /data/cephfs-data

6.10.4.3 client ceph-client-ubuntu20.04-01 mounts CephFS with ceph-fuse

root@ceph-client-ubuntu20.4-01:~# ceph-fuse --id wgs -m 172.16.10.148:6789,172.16.10.110:6789,172.16.10.182:6789 /data/cephfs-data
2021-09-25T14:26:17.664+0800 7f2473c04080 -1 init, newargv = 0x560939e8b8c0 newargc=15
ceph-fuse[8696]: starting ceph client
ceph-fuse[8696]: starting fuse
root@ceph-client-ubuntu20.4-01:~# df -TH
Filesystem Type Size Used Avail Use% Mounted on
udev devtmpfs 4.1G 0 4.1G 0% /dev
tmpfs tmpfs 815M 1.1M 814M 1% /run
/dev/vda2 ext4 22G 13G 7.9G 61% /
tmpfs tmpfs 4.1G 0 4.1G 0% /dev/shm
tmpfs tmpfs 5.3M 0 5.3M 0% /run/lock
tmpfs tmpfs 4.1G 0 4.1G 0% /sys/fs/cgroup
/dev/vdb ext4 528G 21G 480G 5% /data
tmpfs tmpfs 815M 0 815M 0% /run/user/1001
ceph-fuse fuse.ceph-fuse 61G 0 61G 0% /data/cephfs-data

6.10.5 writing data on a client and verifying it

6.10.5.1 client ceph-client-centos7-01 writes data

[root@ceph-client-centos7-01 ~]# cd /data/cephfs-data/
[root@ceph-client-centos7-01 cephfs-data]# mkdir -pv test/test1
mkdir: created directory 'test'
mkdir: created directory 'test/test1'

6.10.5.2 client ceph-client-ubuntu20.04-01 verifies the data

root@ceph-client-ubuntu20.4-01:~# cd /data/cephfs-data/

root@ceph-client-ubuntu20.4-01:/data/cephfs-data# tree .

.

└── test

    └── test1

2 directories, 0 files

6.10.6 unmounting CephFS on the clients

6.10.6.1 client ceph-client-centos7-01 unmounts CephFS

[root@ceph-client-centos7-01 ~]# umount /data/cephfs-data/

6.10.6.2 client ceph-client-ubuntu20.04-01 unmounts CephFS

root@ceph-client-ubuntu20.04-01:~# umount /data/cephfs-data

6.10.7 mounting CephFS at boot

6.10.7.1 client ceph-client-centos7-01 mounts CephFS at boot

[root@ceph-client-centos7-01 ~]# cat /etc/fstab
none /data/cephfs-data fuse.ceph ceph.id=wgs,ceph.conf=/etc/ceph/ceph.conf,_netdev,defaults 0 0

6.10.7.2 client ceph-client-ubuntu20.04-01 mounts CephFS at boot

root@ceph-client-ubuntu20.04-01:~# cat /etc/fstab
none /data/cephfs-data fuse.ceph ceph.id=wgs,ceph.conf=/etc/ceph/ceph.conf,_netdev,defaults 0 0

6.11 deleting a CephFS file system (multiple file systems)

6.11.1 viewing CephFS file system information

ceph@ceph-deploy:~/ceph-cluster$ ceph fs ls
name: wgscephfs, metadata pool: cephfs-metadata, data pools: [cephfs-data ]
name: wgscephfs01, metadata pool: cephfs-metadata01, data pools: [cephfs-data02 ]
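Before deleting, a script may need to confirm which file systems exist. A minimal sketch that parses the plain-text `ceph fs ls` output shown above, assuming bash and this exact `name: X, metadata pool: ...` layout; `fs_names` and `fs_exists` are hypothetical helpers. For anything beyond a quick check, `ceph fs ls -f json` gives structured output that is safer to parse.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ceph fs ls` output on stdin and print one
# file system name per line.
fs_names() {
  sed -n 's/^name: \([^,]*\),.*/\1/p'
}

# Hypothetical helper: succeed if a file system with exactly this name
# appears in the `ceph fs ls` output on stdin.
fs_exists() {
  fs_names | grep -qxF -- "$1"
}

# Illustrative: ceph fs ls | fs_exists wgscephfs01 && echo "still present"
```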

6.11.2 checking whether the CephFS file system is mounted

ceph@ceph-deploy:~/ceph-cluster$ ceph fs status wgscephfs

wgscephfs - 1 clients

=========

RANK STATE MDS ACTIVITY DNS INOS DIRS CAPS

0 active ceph-mgr-01 Reqs: 0 /s 13 15 14 2

POOL TYPE USED AVAIL

cephfs-metadata metadata 216k 56.2G

cephfs-data data 0 56.2G

MDS version: ceph version 16.2.6 (ee28fb57e47e9f88813e24bbf4c14496ca299d31) pacific (stable)

6.11.3 finding the clients that have CephFS mounted

ceph@ceph-deploy:~/ceph-cluster$ ceph tell mds.0 client ls

2021-09-25T18:30:09.856+0800 7f0fbdffb700 0 client.1094242 ms_handle_reset on v2:172.16.10.225:6802/1724959904

2021-09-25T18:30:09.884+0800 7f0fbdffb700 0 client.1084719 ms_handle_reset on v2:172.16.10.225:6802/1724959904

[

{

"id": 1094171,

"entity": {

"name": {

"type": "client",

"num": 1094171

},

"addr": {

"type": "v1",

"addr": "172.16.0.126:0",

"nonce": 1257114724

}

},

"state": "open",

"num_leases": 0,

"num_caps": 2,

"request_load_avg": 0,

"uptime": 274.89986021499999,

"requests_in_flight": 0,

"num_completed_requests": 0,

"num_completed_flushes": 0,

"reconnecting": false,

"recall_caps": {

"value": 0,

"halflife": 60

},

"release_caps": {

"value": 0,

"halflife": 60

},

"recall_caps_throttle": {

"value": 0,

"halflife": 1.5

},

"recall_caps_throttle2o": {

"value": 0,

"halflife": 0.5

},

"session_cache_liveness": {

"value": 1.6026981127772033,

"halflife": 300

},

"cap_acquisition": {

"value": 0,

"halflife": 10

},

"delegated_inos": [],

"inst": "client.1094171 v1:172.16.0.126:0/1257114724",

"completed_requests": [],

"prealloc_inos": [],

"client_metadata": {

"client_features": {

"feature_bits": "0x00000000000001ff"

},

"metric_spec": {

"metric_flags": {

"feature_bits": "0x"

}

},

"entity_id": "wgs",

"hostname": "bj2d-prod-eth-star-boot-03",

"kernel_version": "4.19.0-1.el7.ucloud.x86_64",

"root": "/"

}

}

]

6.11.4 unmounting CephFS on the client

6.11.4.1 the client unmounts CephFS itself

[root@ceph-client-ubuntu20.04-01 ~]# umount /data/cephfs-data/

6.11.4.2 evicting the client manually

6.11.4.2.1 evicting by the client's unique ID

ceph@ceph-deploy:~/ceph-cluster$ ceph tell mds.0 client evict id=1094171
2021-09-25T18:31:02.895+0800 7fc5eeffd700 0 client.1094254 ms_handle_reset on v2:172.16.10.225:6802/1724959904
2021-09-25T18:31:03.671+0800 7fc5eeffd700 0 client.1084740 ms_handle_reset on v2:172.16.10.225:6802/1724959904
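Copying the id by hand works for one client. To collect the session ids from `ceph tell mds.0 client ls` instead, here is a grep-based sketch, assuming bash and the JSON layout shown above (one top-level `"id"` per session); `client_ids` is a hypothetical helper, and a real JSON parser such as `jq` would be more robust. The loop in the comment evicts every session it finds, so treat it as illustrative only.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the session id of every client in
# `ceph tell mds.0 client ls` output read on stdin. Only the top-level
# "id" key matches; "num", "entity_id" and ms_handle_reset lines do not.
client_ids() {
  grep -o '"id": *[0-9][0-9]*' | grep -o '[0-9][0-9]*$'
}

# Illustrative only: this evicts EVERY listed session, which is disruptive.
# for id in $(ceph tell mds.0 client ls 2>/dev/null | client_ids); do
#   ceph tell mds.0 client evict id="$id"
# done
```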

6.11.4.2.2 checking the mount point after the client is evicted

root@ceph-client-ubuntu20.04-01:~# ls -l /data/
ls: cannot access '/data/cephfs-data': Permission denied
total 32
d????????? ? ? ? ? ? cephfs-data

6.11.4.2.3 fixing the mount on the client

root@ceph-client-ubuntu20.04-01:~# umount /data/cephfs-data

6.11.4.2.4 verifying the mount point status on the client

root@ceph-client-ubuntu20.04-01:~# ls -l
total 4
drwxr-xr-x 2 root root 6 Sep 25 11:46 cephfs-data

6.11.5 deleting the CephFS file system

ceph@ceph-deploy:~/ceph-cluster$ ceph fs rm wgscephfs01 --yes-i-really-mean-it

6.11.6 verifying the deletion

ceph@ceph-deploy:~/ceph-cluster$ ceph fs ls
name: wgscephfs, metadata pool: cephfs-metadata, data pools: [cephfs-data ]

6.12 deleting the CephFS file system (single file system)

6.12.1 viewing CephFS file system information

ceph@ceph-deploy:~/ceph-cluster$ ceph fs ls
name: wgscephfs, metadata pool: cephfs-metadata, data pools: [cephfs-data ]

6.12.2 checking whether the CephFS file system is mounted

ceph@ceph-deploy:~/ceph-cluster$ ceph fs status wgscephfs

wgscephfs - 1 clients

=========

RANK STATE MDS ACTIVITY DNS INOS DIRS CAPS

0 active ceph-mgr-01 Reqs: 0 /s 13 15 14 2

POOL TYPE USED AVAIL

cephfs-metadata metadata 216k 56.2G

cephfs-data data 0 56.2G

MDS version: ceph version 16.2.6 (ee28fb57e47e9f88813e24bbf4c14496ca299d31) pacific (stable)

6.12.3 finding the clients that have CephFS mounted

ceph@ceph-deploy:~/ceph-cluster$ ceph tell mds.0 client ls

2021-09-25T18:30:09.856+0800 7f0fbdffb700 0 client.1094242 ms_handle_reset on v2:172.16.10.225:6802/1724959904

2021-09-25T18:30:09.884+0800 7f0fbdffb700 0 client.1084719 ms_handle_reset on v2:172.16.10.225:6802/1724959904

[

{

"id": 1094171,

"entity": {

"name": {

"type": "client",

"num": 1094171

},

"addr": {

"type": "v1",

"addr": "172.16.0.126:0",

"nonce": 1257114724

}

},

"state": "open",

"num_leases": 0,

"num_caps": 2,

"request_load_avg": 0,

"uptime": 274.89986021499999,

"requests_in_flight": 0,

"num_completed_requests": 0,

"num_completed_flushes": 0,

"reconnecting": false,

"recall_caps": {

"value": 0,

"halflife": 60

},

"release_caps": {

"value": 0,

"halflife": 60

},

"recall_caps_throttle": {

"value": 0,

"halflife": 1.5

},

"recall_caps_throttle2o": {

"value": 0,

"halflife": 0.5

},

"session_cache_liveness": {

"value": 1.6026981127772033,

"halflife": 300

},

"cap_acquisition": {

"value": 0,

"halflife": 10

},

"delegated_inos": [],

"inst": "client.1094171 v1:172.16.0.126:0/1257114724",

"completed_requests": [],

"prealloc_inos": [],

"client_metadata": {

"client_features": {

"feature_bits": "0x00000000000001ff"

},

"metric_spec": {

"metric_flags": {

"feature_bits": "0x"

}

},

"entity_id": "wgs",

"hostname": "bj2d-prod-eth-star-boot-03",

"kernel_version": "4.19.0-1.el7.ucloud.x86_64",

"root": "/"

}

}

]6.12.4 client uninstall cephfs

6.12.4.1 client actively uninstalls cephfs

Click to view the code[root@ceph-client-ubuntu20.04-01 ~]# umount /data/cephfs-data/

6.12.4.2 client is expelled manually

6.12.4.2.1 use the unique ID of the client

Click to view the codeceph@ceph-deploy:~/ceph-cluster$ ceph tell mds.0 client evict id=1094171 2021-09-25T18:31:02.895+0800 7fc5eeffd700 0 client.1094254 ms_handle_reset on v2:172.16.10.225:6802/1724959904 2021-09-25T18:31:03.671+0800 7fc5eeffd700 0 client.1084740 ms_handle_reset on v2:172.16.10.225:6802/1724959904

6.12.4.2.2 view the status of the mount point after the client is evicted

root@ceph-client-ubuntu20.04-01:~# ls -l /data/

ls: cannot access '/data/cephfs-data': Permission denied

total 32

d????????? ? ? ? ? ? cephfs-data

6.12.4.2.3 client-side fix: unmount the stale mount point

root@ceph-client-ubuntu20.04-01:~# umount /data/cephfs-data

6.12.4.2.4 verify the mount point status on the client

root@ceph-client-ubuntu20.04-01:~# ls -l

total 4

drwxr-xr-x 2 root root 6 Sep 25 11:46 cephfs-data
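The recovery above boils down to "if the mount point can no longer be stat'ed, unmount it". A minimal sketch of that check follows; it assumes a failing `stat` is a good enough signal of an evicted mount (note it also fires for a path that simply does not exist), and by default it only prints the `umount` command. `mount_is_unusable` and `recover_mount` are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: unmount a CephFS mount point that can no longer be stat'ed, the
# symptom shown above after eviction ("Permission denied", "d?????????").
# Assumptions: a failing stat is treated as stale; this also matches a path
# that does not exist. DRY_RUN=1 (the default) only prints the command.
DRY_RUN="${DRY_RUN:-1}"

mount_is_unusable() {
  ! stat "$1" >/dev/null 2>&1
}

recover_mount() {
  if mount_is_unusable "$1"; then
    if [ "$DRY_RUN" = "1" ]; then
      echo "umount $1"
    else
      umount "$1"
    fi
  fi
}

# Usage on the client:
#   recover_mount /data/cephfs-data
```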

6.12.5 deleting the CephFS file system

6.12.5.1 viewing the CephFS service status

ceph@ceph-deploy:~/ceph-cluster$ ceph mds stat

wgscephfs:1 {0=ceph-mgr-01=up:active}

6.12.5.2 taking the CephFS file system offline

ceph@ceph-deploy:~/ceph-cluster$ ceph fs fail wgscephfs

wgscephfs marked not joinable; MDS cannot join the cluster. All MDS ranks marked failed.

6.12.5.3 viewing ceph cluster status

ceph@ceph-deploy:~/ceph-cluster$ ceph -s

cluster:

id: 6e521054-1532-4bc8-9971-7f8ae93e8430

health: HEALTH_ERR

1 filesystem is degraded

1 filesystem is offline

services:

mon: 3 daemons, quorum ceph-mon-01,ceph-mon-02,ceph-mon-03 (age 97m)

mgr: ceph-mgr-01(active, since 25h), standbys: ceph-mgr-02

mds: 0/1 daemons up (1 failed), 1 standby

osd: 9 osds: 9 up (since 2d), 9 in (since 2d)

data:

volumes: 0/1 healthy, 1 failed

pools: 6 pools, 257 pgs

objects: 44 objects, 24 MiB

usage: 1.4 GiB used, 179 GiB / 180 GiB avail

pgs: 257 active+clean

6.12.5.4 viewing the CephFS service status

ceph@ceph-deploy:~/ceph-cluster$ ceph mds stat

wgscephfs:0/1 1 up:standby, 1 failed

6.12.5.5 deleting the CephFS file system

ceph@ceph-deploy:~/ceph-cluster$ ceph fs rm wgscephfs --yes-i-really-mean-it

6.12.5.6 viewing the CephFS file systems

ceph@ceph-deploy:~/ceph-cluster$ ceph fs ls

No filesystems enabled
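The deletion steps above always run in the same order: mark the file system failed, remove it, then list what is left. A minimal sketch that wraps that order in one function is shown below; it only uses the commands already shown in this section, and with the default `DRY_RUN=1` it prints them instead of executing them. `delete_cephfs` and `run` are hypothetical helper names.

```shell
#!/bin/sh
# Sketch of the deletion order used above: ceph fs fail, then
# ceph fs rm ... --yes-i-really-mean-it, then ceph fs ls.
# DRY_RUN=1 (the default) only prints the commands.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

delete_cephfs() {
  fs="$1"
  [ -n "$fs" ] || { echo "usage: delete_cephfs <fs_name>" >&2; return 1; }
  run ceph fs fail "$fs" || return 1                       # stop all MDS ranks first
  run ceph fs rm "$fs" --yes-i-really-mean-it || return 1  # then drop the fs entry
  run ceph fs ls                                           # confirm it is gone
}

# Usage on the deploy node (set DRY_RUN=0 to execute):
#   delete_cephfs wgscephfs
```

`ceph fs rm` removes only the file system entry, not its pools: the cluster status in the next step still reports 6 pools, so the metadata and data pools have to be deleted separately if they are no longer needed.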

6.12.5.7 viewing ceph cluster status

ceph@ceph-deploy:~/ceph-cluster$ ceph -s

cluster:

id: 6e521054-1532-4bc8-9971-7f8ae93e8430

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-mon-01,ceph-mon-02,ceph-mon-03 (age 9m)

mgr: ceph-mgr-01(active, since 25h), standbys: ceph-mgr-02

osd: 9 osds: 9 up (since 2d), 9 in (since 2d)

data:

pools: 6 pools, 257 pgs

objects: 43 objects, 24 MiB

usage: 1.4 GiB used, 179 GiB / 180 GiB avail

pgs: 257 active+clean

6.12.5.8 viewing the CephFS service status

ceph@ceph-deploy:~/ceph-cluster$ ceph mds stat

1 up:standby
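The `ceph mds stat` lines in this section encode the active ranks as `<rank>=<daemon>=up:active` inside braces (for example `wgscephfs:1 {0=ceph-mgr-01=up:active}`), while a file-system-less cluster prints only standby counts. A minimal sketch that pulls the active daemon names out of such a line is below; it assumes multiple active ranks are comma-separated inside the braces, which is not shown in this section. `active_mds` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: print the active MDS daemon names from one line of `ceph mds stat`.
# Assumption: ranks appear as <rank>=<daemon>=up:active inside {...},
# comma-separated when there are several; other lines print nothing.
active_mds() {
  grep -o '[0-9][0-9]*=[^=,}]*=up:active' | cut -d= -f2
}

# Usage on the deploy node:
#   ceph mds stat | active_mds
```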

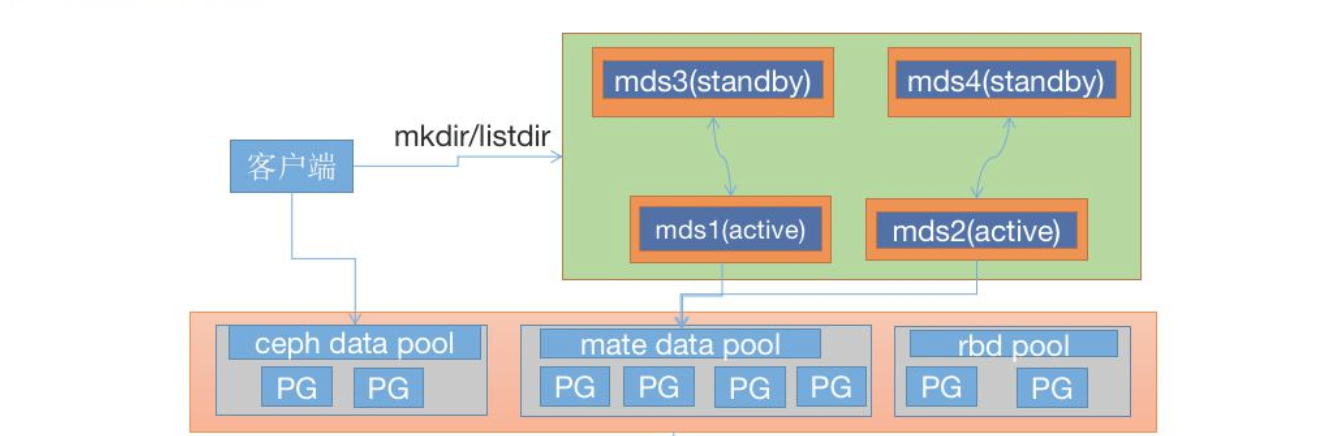

6.13 Ceph MDS high availability

6.13.1 introduction to Ceph MDS high availability

- The Ceph MDS is the entry point for all CephFS metadata access, so it must provide both high performance and redundancy (standby daemons that can take over when an active one fails).

6.13.2 Ceph MDS high availability architecture diagram

- Two active MDS daemons and two standby MDS daemons.

6.13.3 common options for Ceph MDS configuration

- mds_standby_replay: when true, the standby MDS runs in standby-replay mode and continuously replays the active MDS's journal, so it can take over quickly if the active MDS goes down. When false, the standby only replays the journal after the active MDS has failed, which causes a longer interruption.

- mds_standby_for_name: makes the current MDS daemon a standby only for the MDS with the specified name.

- mds_standby_for_rank: makes the current MDS daemon a standby only for the specified rank (a rank number). It can be combined with mds_standby_for_fscid to target a rank of a different CephFS file system.

- mds_standby_for_fscid: specifies the CephFS file system ID and works together with mds_standby_for_rank: if mds_standby_for_rank is set, the daemon stands by for that rank of the specified file system; if it is not set, it stands by for any rank of that file system.

- Note: since Nautilus (14.x), mds_standby_replay and the mds_standby_for_* options are obsolete, so on the Pacific (16.2.6) cluster used here they no longer take effect; standby-replay is instead enabled per file system with ceph fs set <fs_name> allow_standby_replay true.

6.13.4 current mds service status

ceph@ceph-deploy:~/ceph-cluster$ ceph mds stat

wgscephfs:1 {0=ceph-mgr-01=up:active}

6.13.5 add MDS servers

6.13.5.1 installation of Ceph MDS

root@ceph-mgr-02:~# apt -y install ceph-mds

root@ceph-mon-01:~# apt -y install ceph-mds

root@ceph-mon-02:~# apt -y install ceph-mds

root@ceph-mon-03:~# apt -y install ceph-mds

6.13.5.2 create the MDS services

ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy mds create ceph-mgr-02 ceph-mon-01 ceph-mon-02 ceph-mon-03
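The two steps above repeat the same package install on every new MDS host and then create all daemons in a single `ceph-deploy mds create` call. A minimal sketch that loops over the hosts is shown below; it assumes root SSH access from the deploy node to each host (not set up anywhere in this document) and, with the default `DRY_RUN=1`, only prints the commands. `add_mds_hosts` and `run` are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: install ceph-mds on several hosts, then create all MDS daemons with
# one ceph-deploy call, as in 6.13.5.1 and 6.13.5.2.
# Assumptions: run as the ceph user in ~/ceph-cluster on the deploy node, with
# root SSH access to every host. DRY_RUN=1 (the default) only prints.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

add_mds_hosts() {
  [ "$#" -gt 0 ] || { echo "usage: add_mds_hosts <host>..." >&2; return 1; }
  for h in "$@"; do
    run ssh "root@$h" apt -y install ceph-mds   # package on each host
  done
  run ceph-deploy mds create "$@"               # one daemon per host
}

# Usage:
#   add_mds_hosts ceph-mgr-02 ceph-mon-01 ceph-mon-02 ceph-mon-03
```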

6.13.6 view the current MDS service status

ceph@ceph-deploy:~/ceph-cluster$ ceph fs status

wgscephfs - 0 clients

=========

RANK STATE MDS ACTIVITY DNS INOS DIRS CAPS

0 active ceph-mgr-01 Reqs: 0 /s 10 13 12 0

POOL TYPE USED AVAIL

cephfs-metadata metadata 96.0k 56.2G

cephfs-data data 0 56.2G

STANDBY MDS

ceph-mgr-02

ceph-mon-02

ceph-mon-03

ceph-mon-01

MDS version: ceph version 16.2.6 (ee28fb57e47e9f88813e24bbf4c14496ca299d31) pacific (stable)

6.13.7 viewing the current Ceph cluster status

ceph@ceph-deploy:~/ceph-cluster$ ceph -s

cluster:

id: 6e521054-1532-4bc8-9971-7f8ae93e8430

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-mon-01,ceph-mon-02,ceph-mon-03 (age 65m)

mgr: ceph-mgr-01(active, since 26h), standbys: ceph-mgr-02

mds: 1/1 daemons up, 4 standby

osd: 9 osds: 9 up (since 2d), 9 in (since 2d)

data:

volumes: 1/1 healthy

pools: 4 pools, 161 pgs

objects: 43 objects, 24 MiB

usage: 1.4 GiB used, 179 GiB / 180 GiB avail

pgs: 161 active+clean

6.13.8 view the current cephfs file system status

ceph@ceph-deploy:~/ceph-cluster$ ceph fs get wgscephfs

Filesystem 'wgscephfs' (8)

fs_name wgscephfs

epoch 39

flags 13

created 2021-09-25T20:01:13.237645+0800

modified 2021-09-25T20:05:16.835799+0800

tableserver 0

root 0

session_timeout 60

session_autoclose 300

max_file_size 1099511627776

required_client_features {}

last_failure 0

last_failure_osd_epoch 0

compat compat={},rocompat={},incompat={1=base v0.20,2=client writeable ranges,3=default file layouts on dirs,4=dir inode in separate object,5=mds uses versioned encoding,6=dirfrag is stored in omap,7=mds uses inline data,8=no anchor table,9=file layout v2,10=snaprealm v2}

max_mds 1

in 0

up {0=1104195}

failed

damaged

stopped

data_pools [14]

metadata_pool 13

inline_data disabled

balancer

standby_count_wanted 1

[mds.ceph-mgr-01{0:1104195} state up:active seq 113 addr [v2:172.16.10.225:6802/3134604779,v1:172.16.10.225:6803/3134604779]]

6.13.9 delete an MDS service

- When an MDS daemon is stopped, it automatically notifies the Ceph monitors that it is going down, so the monitors can fail over immediately to an available standby, if one exists. No administrative command, such as ceph mds fail mds.<id>, is needed to trigger this failover.

6.13.9.1 stop the MDS service

root@ceph-mon-03:~# systemctl stop ceph-mds@ceph-mon-03

6.13.9.2 view the current MDS status

ceph@ceph-deploy:~/ceph-cluster$ ceph fs status

wgscephfs - 0 clients

=========

RANK STATE MDS ACTIVITY DNS INOS DIRS CAPS

0 active ceph-mgr-02 Reqs: 0 /s 10 13 12 0

1 active ceph-mgr-01 Reqs: 0 /s 10 13 11 0

POOL TYPE USED AVAIL

cephfs-metadata metadata 168k 56.2G

cephfs-data data 0 56.2G

STANDBY MDS

ceph-mon-02

ceph-mon-01

MDS version: ceph version 16.2.6 (ee28fb57e47e9f88813e24bbf4c14496ca299d31) pacific (stable)

6.13.9.3 delete /var/lib/ceph/mds/ceph-${id}

root@ceph-mon-03:~# rm -rf /var/lib/ceph/mds/ceph-ceph-mon-03

6.13.10 set the number of active MDS daemons

# Set the maximum number of simultaneously active MDS ranks to 2

ceph@ceph-deploy:~/ceph-cluster$ ceph fs set wgscephfs max_mds 2
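Setting max_mds splits the running MDS daemons into active ranks and standbys: before ceph-mon-03 was removed the cluster had 5 daemons (1 up, 4 standby), so 4 remain, and with max_mds 2 two of them become active and two stay standby, matching the status below. The sketch that follows only illustrates that arithmetic and compares the result with standby_count_wanted (1 in the `ceph fs get` output above); it is not Ceph's own health-check logic, and `standby_left`/`check_standby` are hypothetical names.

```shell
#!/bin/sh
# Sketch of the arithmetic behind max_mds: of N running MDS daemons, up to
# max_mds hold active ranks and the rest stay standby. Illustration only.
standby_left() {
  daemons="$1"; max_mds="$2"
  echo $(( daemons > max_mds ? daemons - max_mds : 0 ))
}

check_standby() {
  daemons="$1"; max_mds="$2"; wanted="$3"
  left=$(standby_left "$daemons" "$max_mds")
  if [ "$left" -ge "$wanted" ]; then
    echo "ok: $left standby (wanted $wanted)"
  else
    echo "short: $left standby (wanted $wanted)"
  fi
}

# This cluster: 4 daemons after removing ceph-mon-03, max_mds 2, wanted 1.
check_standby 4 2 1
```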

6.13.11 view the current MDS status

ceph@ceph-deploy:~/ceph-cluster$ ceph fs status

wgscephfs - 0 clients

=========

RANK STATE MDS ACTIVITY DNS INOS DIRS CAPS

0 active ceph-mgr-02 Reqs: 0 /s 10 13 12 0

1 active ceph-mgr-01 Reqs: 0 /s 10 13 11 0

POOL TYPE USED AVAIL

cephfs-metadata metadata 168k 56.2G

cephfs-data data 0 56.2G

STANDBY MDS

ceph-mon-02

ceph-mon-01

MDS version: ceph version 16.2.6 (ee28fb57e47e9f88813e24bbf4c14496ca299d31) pacific (stable)

6.13.12 MDS high availability optimization

- Currently, ceph-mgr-01 and ceph-mgr-02 are active.

- Make ceph-mon-01 the standby of ceph-mgr-01.

- Make ceph-mon-02 the standby of ceph-mgr-02.

ceph@ceph-deploy:~/ceph-cluster$ cat ceph.conf

[global]

fsid = 6e521054-1532-4bc8-9971-7f8ae93e8430

public_network = 172.16.10.0/24

cluster_network = 172.16.10.0/24

mon_initial_members = ceph-mon-01

mon_host = 172.16.10.148

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

mon_allow_pool_delete = true

mon clock drift allowed = 2

mon clock drift warn backoff = 30

[mds.ceph-mon-01]

mds_standby_for_name = ceph-mgr-01

mds_standby_replay = true

[mds.ceph-mon-02]

mds_standby_for_name = ceph-mgr-02

mds_standby_replay = true
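The sections above use the older per-daemon standby options. On Nautilus and later releases, such as the Pacific cluster here, the upstream documentation describes standby-replay as a per-file-system flag instead. A minimal sketch of setting it is below; with the default `DRY_RUN=1` it only prints the commands, and afterwards `ceph fs status` should list a daemon in a standby-replay state. `enable_standby_replay` and `run` are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: per-file-system standby-replay (Nautilus and later) instead of the
# mds_standby_replay option in ceph.conf. DRY_RUN=1 (default) only prints.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

enable_standby_replay() {
  [ -n "$1" ] || { echo "usage: enable_standby_replay <fs_name>" >&2; return 1; }
  run ceph fs set "$1" allow_standby_replay true
  run ceph fs status
}

# Usage on the deploy node (set DRY_RUN=0 to execute):
#   enable_standby_replay wgscephfs
```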

6.13.13 distribution of configuration files

ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy --overwrite-conf config push ceph-mgr-01

ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy --overwrite-conf config push ceph-mgr-02

ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy --overwrite-conf config push ceph-mon-01

ceph@ceph-deploy:~/ceph-cluster$ ceph-deploy --overwrite-conf config push ceph-mon-02

6.13.14 restart the MDS services

root@ceph-mon-02:~# systemctl restart ceph-mds@ceph-mon-02

root@ceph-mon-01:~# systemctl restart ceph-mds@ceph-mon-01

root@ceph-mgr-02:~# systemctl restart ceph-mds@ceph-mgr-02

root@ceph-mgr-01:~# systemctl restart ceph-mds@ceph-mgr-01
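Steps 6.13.13 and 6.13.14 push the same ceph.conf to four hosts and then restart each host's `ceph-mds@<host>` unit, standbys first and the active daemons last. A minimal sketch combining both steps is below; it assumes `config push` accepts several hosts in one call, root SSH access to each host, and that the MDS id equals the host name (true for the units in this document). With the default `DRY_RUN=1` it only prints. `push_and_restart` and `run` are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: push ceph.conf to the given hosts, then restart their MDS units in
# the order given (list standbys first so active daemons restart last).
# Assumptions: run as the ceph user in ~/ceph-cluster, root SSH to each host,
# MDS id == host name. DRY_RUN=1 (the default) only prints the commands.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

push_and_restart() {
  [ "$#" -gt 0 ] || { echo "usage: push_and_restart <host>..." >&2; return 1; }
  run ceph-deploy --overwrite-conf config push "$@" || return 1
  for h in "$@"; do
    run ssh "root@$h" systemctl restart "ceph-mds@$h"
  done
}

# Usage:
#   push_and_restart ceph-mon-02 ceph-mon-01 ceph-mgr-02 ceph-mgr-01
```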

6.13.15 high availability status of the Ceph cluster MDS

ceph@ceph-deploy:~/ceph-cluster$ ceph fs status

wgscephfs - 0 clients

=========

RANK STATE MDS ACTIVITY DNS INOS DIRS CAPS

0 active ceph-mgr-02 Reqs: 0 /s 10 13 12 0

1 active ceph-mon-01 Reqs: 0 /s 10 13 11 0

POOL TYPE USED AVAIL

cephfs-metadata metadata 168k 56.2G

cephfs-data data 0 56.2G

STANDBY MDS

ceph-mon-02

ceph-mgr-01

MDS version: ceph version 16.2.6 (ee28fb57e47e9f88813e24bbf4c14496ca299d31) pacific (stable)

6.13.16 verify the one-to-one active/standby correspondence

- ceph-mgr-02 still holds rank 0, and its configured standby ceph-mon-02 is in the standby list.

- ceph-mon-01 now holds rank 1 and ceph-mgr-01 is in the standby list, so ceph-mon-01 has taken over rank 1 from ceph-mgr-01.

6.14 exporting cephfs to NFS via ganesha

- https://docs.ceph.com/en/pacific/cephfs/nfs/

6.14.1 configuration requirements

- The Ceph file system is Luminous or later.

- On the NFS server host, libcephfs2 (Luminous or later) and the nfs-ganesha and nfs-ganesha-ceph packages (Ganesha v2.5 or later) are installed.

- The NFS Ganesha server host is connected to the Ceph public network.

- NFS Ganesha 3.5 or a later stable release, together with Ceph Pacific (16.2.x) or a later stable release, is recommended.

- Install nfs-ganesha and nfs-ganesha-ceph on a node where the Ceph MDS is deployed.

6.14.2 install ganesha service on the node with CEPH MDS deployed

6.14.2.1 viewing ganesha version information

root@ceph-mgr-01:~# apt-cache madison nfs-ganesha-ceph

nfs-ganesha-ceph | 2.6.0-2 | http://mirrors.ucloud.cn/ubuntu bionic/universe amd64 Packages

nfs-ganesha | 2.6.0-2 | http://mirrors.ucloud.cn/ubuntu bionic/universe Sources
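The requirements in 6.14.1 give a minimum Ganesha version (2.5) and a recommended one (3.5). A minimal sketch that reads the candidate version from the first `apt-cache madison` line and compares it with both is below; it assumes GNU `sort -V` ordering is close enough to Debian version ordering for simple strings like these (`dpkg --compare-versions` is the exact tool on Debian/Ubuntu). `madison_version` and `version_ge` are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: compare the nfs-ganesha-ceph version from `apt-cache madison` with
# the minimum (2.5) and recommended (3.5) versions listed in 6.14.1.
# Assumption: `sort -V` ordering approximates Debian version ordering here.

# Second |-separated field of the first line, blanks removed.
madison_version() {
  awk -F'|' 'NR == 1 { gsub(/[ \t]/, "", $2); print $2 }'
}

# Succeeds if version $1 >= version $2.
version_ge() {
  [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Usage on the MDS node:
#   v=$(apt-cache madison nfs-ganesha-ceph | madison_version)
#   version_ge "$v" 2.5 && echo "meets minimum"
#   version_ge "$v" 3.5 || echo "older than recommended"
```

With the bionic repository shown above, 2.6.0-2 meets the 2.5 minimum but is older than the recommended 3.5.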

6.14.2.2 installation of the ganesha service

root@ceph-mgr-01:~# apt -y install nfs-ganesha-ceph nfs-ganesha

6.14.2.3 ganesha configuration

- https://github.com/nfs-ganesha/nfs-ganesha/blob/next/src/config_samples/ceph.conf

root@ceph-mgr-01:~# mv /etc/ganesha/ganesha.conf /etc/ganesha/ganesha.conf.back

root@ceph-mgr-01:~# vi /etc/ganesha/ganesha.conf

NFS_CORE_PARAM

{

# Ganesha can lift the NFS grace period early if NLM is disabled.

Enable_NLM = false;

# rquotad doesn't add any value here. CephFS doesn't support per-uid

# quotas anyway.

Enable_RQUOTA = false;

# In this configuration, we're just exporting NFSv4. In practice, it's

# best to use NFSv4.1+ to get the benefit of sessions.

Protocols = 4;

}

EXPORT

{

# Export Id (mandatory, each EXPORT must have a unique Export_Id)

Export_Id = 77;

# Exported path (mandatory)

Path = /;

# Pseudo Path (required for NFS v4)

Pseudo = /cephfs-test;

# Time out attribute cache entries immediately

Attr_Expiration_Time = 0;

# We're only interested in NFSv4 in this configuration

Protocols = 4;

# NFSv4 does not allow UDP transport

Transports = TCP;

# setting for root Squash

Squash="No_root_squash";

# Required for access (default is None)

# Could use CLIENT blocks instead

Access_Type = RW;

# Exporting FSAL

FSAL {

Name = CEPH;

hostname="172.16.10.225"; #Current node ip address

}

}

LOG {

# default log level

Default_Log_Level = WARN;

}
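A parameter written twice inside the same block, such as a repeated Attr_Expiration_Time in EXPORT, is easy to miss in a file like this. A minimal sketch of a checker is below: it reports `Key = value` parameters that repeat within one `{ ... }` block, while keys that legitimately appear in different blocks (Protocols in NFS_CORE_PARAM and EXPORT) are not reported. It assumes at most one brace per line, that `#` always starts a comment, and that keys are compared exactly as written; `dup_keys` is a hypothetical helper name, not a ganesha tool.

```shell
#!/bin/sh
# Sketch: report parameters repeated inside the same { ... } block of a
# ganesha.conf-style file. Assumptions: at most one brace per line, # always
# starts a comment, keys compared exactly as written.
# Reads the files given as arguments, or stdin when there are none.
dup_keys() {
  awk '
  {
    line = $0
    sub(/#.*/, "", line)                      # drop comments
    if (index(line, "{")) {                   # entering a new block
      nblk++
      stack[++sp] = nblk
    }
    if (index(line, "=")) {
      key = line
      sub(/=.*/, "", key)                     # keep the text left of the first =
      gsub(/[ \t]/, "", key)                  # trim blanks
      id = stack[sp] SUBSEP key               # scope the key to the current block
      if (id in seen)
        printf "line %d: duplicate %s\n", NR, key
      seen[id] = 1
    }
    if (index(line, "}"))                     # leaving the current block
      sp--
  }' "$@"
}

# Usage:
#   dup_keys /etc/ganesha/ganesha.conf
```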

6.14.2.4 ganesha service management

root@ceph-mgr-01:~# systemctl restart nfs-ganesha

root@ceph-mgr-01:~# systemctl status nfs-ganesha

6.14.3 NFS client settings

6.14.3.1 Ubuntu system

root@ceph-client-ubuntu18.04-01:~# apt -y install nfs-common

6.14.3.2 CentOS system

[root@ceph-client-centos7-01 ~]# yum install -y nfs-utils

6.14.4 client mount

6.14.4.1 the client mounts with the CephFS kernel client (ceph mode)

root@ceph-client-ubuntu20.04-01:~# mount -t ceph 172.16.10.148:6789,172.16.10.110:6789,172.16.10.182:6789:/ /data/cephfs-data -o name=wgs,secretfile=/etc/ceph/wgs.key

root@ceph-client-ubuntu20.04-01:~# df -TH

Filesystem Type Size Used Avail Use% Mounted on

udev devtmpfs 4.1G 0 4.1G 0% /dev

tmpfs tmpfs 815M 1.1M 814M 1% /run

/dev/vda2 ext4 22G 18G 2.1G 90% /

tmpfs tmpfs 4.1G 0 4.1G 0% /dev/shm

tmpfs tmpfs 5.3M 0 5.3M 0% /run/lock

tmpfs tmpfs 4.1G 0 4.1G 0% /sys/fs/cgroup

/dev/vdb ext4 528G 32G 470G 7% /data

tmpfs tmpfs 815M 0 815M 0% /run/user/1001

172.16.10.148:6789,172.16.10.110:6789,172.16.10.182:6789:/ ceph 61G 0 61G 0% /data/cephfs-data
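The kernel-client mount source above is the comma-separated list of monitor addresses followed by `:/`, the CephFS path to mount. A minimal sketch that builds that string from a list of monitor IPs and prints the resulting mount command is below; it assumes every monitor listens on the v1 port 6789 (as in this cluster) and, with the default `DRY_RUN=1`, does not mount anything. `mon_source`, `mount_cephfs`, and `run` are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: build the kernel-client mount source from monitor IPs.
# Assumptions: all monitors use port 6789 and the CephFS root / is mounted.
# DRY_RUN=1 (the default) only prints the mount command.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# mon_source IP... -> ip1:6789,ip2:6789,...:/
mon_source() {
  src=""
  for ip in "$@"; do
    src="${src:+$src,}$ip:6789"
  done
  echo "$src:/"
}

# mount_cephfs DIR USER SECRETFILE MON_IP...
mount_cephfs() {
  [ "$#" -ge 4 ] || { echo "usage: mount_cephfs <dir> <user> <secretfile> <mon_ip>..." >&2; return 1; }
  dir="$1"; user="$2"; secretfile="$3"; shift 3
  run mount -t ceph "$(mon_source "$@")" "$dir" -o "name=$user,secretfile=$secretfile"
}

# Usage on the client:
#   mount_cephfs /data/cephfs-data wgs /etc/ceph/wgs.key 172.16.10.148 172.16.10.110 172.16.10.182
```

Listing all three monitors lets the client still reach the cluster if one monitor is down at mount time.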

6.14.4.2 write test data

root@ceph-client-ubuntu20.04-01:~# cd /data/cephfs-data/

root@ceph-client-ubuntu20.04-01:/data/cephfs-data# echo "mount nfs" > nfs.txt

root@ceph-client-ubuntu20.04-01:/data/cephfs-data# ls -l

total 1

-rw-r--r-- 1 root root 10 Sep 26 22:08 nfs.txt

6.14.4.3 the client mounts via NFS

[root@ceph-client-centos7-01 ~]# mount -t nfs -o nfsvers=4.1,proto=tcp 172.16.10.225:/cephfs-test /data/cephfs-data/

[root@ceph-client-centos7-01 ~]# df -TH

Filesystem Type Size Used Avail Use% Mounted on

udev devtmpfs 2.1G 0 2.1G 0% /dev

tmpfs tmpfs 412M 51M 361M 13% /run

/dev/vda1 ext4 106G 4.1G 97G 5% /

tmpfs tmpfs 2.1G 0 2.1G 0% /dev/shm

tmpfs tmpfs 5.3M 0 5.3M 0% /run/lock

tmpfs tmpfs 2.1G 0 2.1G 0% /sys/fs/cgroup

/dev/vdb ext4 106G 16G 85G 16% /data

tmpfs tmpfs 412M 0 412M 0% /run/user/1003

172.16.10.225:/cephfs-test nfs4 61G 0 61G 0% /data/cephfs-data

6.14.4.4 verifying the data on the NFS mount

[root@ceph-client-centos7-01 ~]# ls -l /data/cephfs-data/

total 1

-rw-r--r-- 1 root root 10 Sep 26 22:08 nfs.txt

[root@ceph-client-centos7-01 ~]# cat /data/cephfs-data/nfs.txt

mount nfs

6.14.5 client unmount

6.14.5.1 Ubuntu system

root@ceph-client-ubuntu20.04-01:~# umount /data/cephfs-data

6.14.5.2 CentOS system

[root@ceph-client-centos7-01 ~]# umount /data/cephfs-data