Disk management: RAID

Overview

- RAID stands for Redundant Array of Independent Disks.

- RAID was originally developed to combine several small, cheap disks in place of one large, expensive disk, while providing a degree of data protection so that data remains accessible when a disk fails.

- A RAID array is built from multiple disks but appears to the operating system as a single large storage device.

- Depending on the level, RAID combines multiple disks to increase speed and capacity and to provide fault tolerance, so the array can keep working after a disk failure. RAID0 is the exception: it has no redundancy.

Common RAID levels

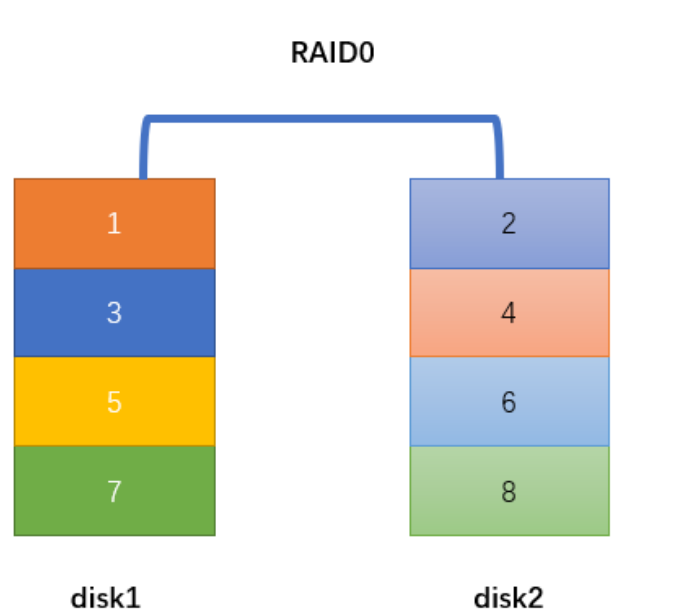

RAID0

- At least two disks are required

- Data is striped across the disks (if the data to be stored is 12345678, 1357 may be stored on disk1 and 2468 on disk2)

- High read and write performance; storage utilization is 100%

- No data redundancy: if any disk fails, the data cannot be recovered

- Use cases: workloads with high performance requirements but low data security and reliability requirements, such as audio and video storage
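
The `12345678` example above can be reproduced with a toy script. This is only a sketch of the idea, not how the md driver is implemented: the function name `stripe` is made up for illustration, and one character stands in for one stripe unit (chunk).

```shell
#!/usr/bin/env bash
# Toy RAID0 striping: deal the stripe units out to the disks round-robin.
# Assumption: one character represents one chunk of data.
stripe() {
    local data=$1 disks=$2 i idx
    local -a out=()
    for ((i = 0; i < ${#data}; i++)); do
        idx=$((i % disks))          # disk that receives this chunk
        out[idx]+=${data:i:1}
    done
    for ((i = 0; i < disks; i++)); do
        echo "disk$((i + 1)): ${out[i]}"
    done
}

stripe 12345678 2
# disk1: 1357
# disk2: 2468
```

Because every disk holds only part of each file, losing either disk leaves the data incomplete, which is why RAID0 offers no recovery.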

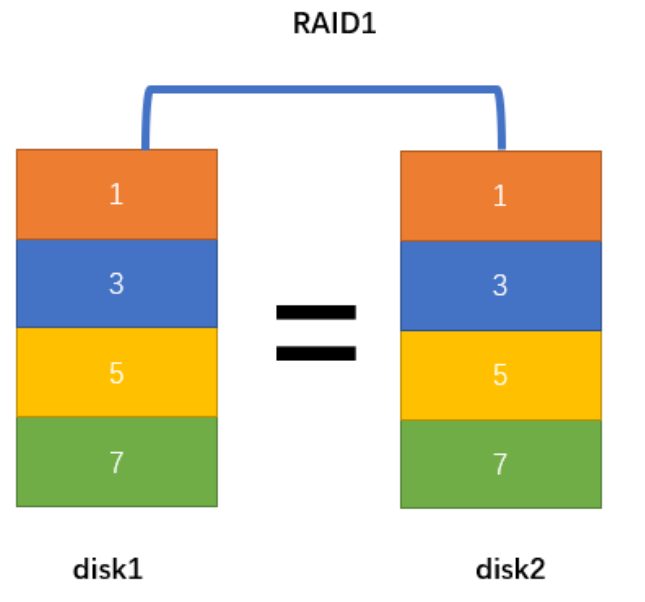

RAID1

- At least two disks are required

- Every write is mirrored to both disks (a working disk and a mirror disk)

- High reliability; disk utilization is 50%

- A single disk failure does not affect data reading and writing

- Read performance is acceptable, but write performance is lower because every write goes to both disks

- Use cases: scenarios with high requirements for data security and reliability, such as e-mail systems and trading systems

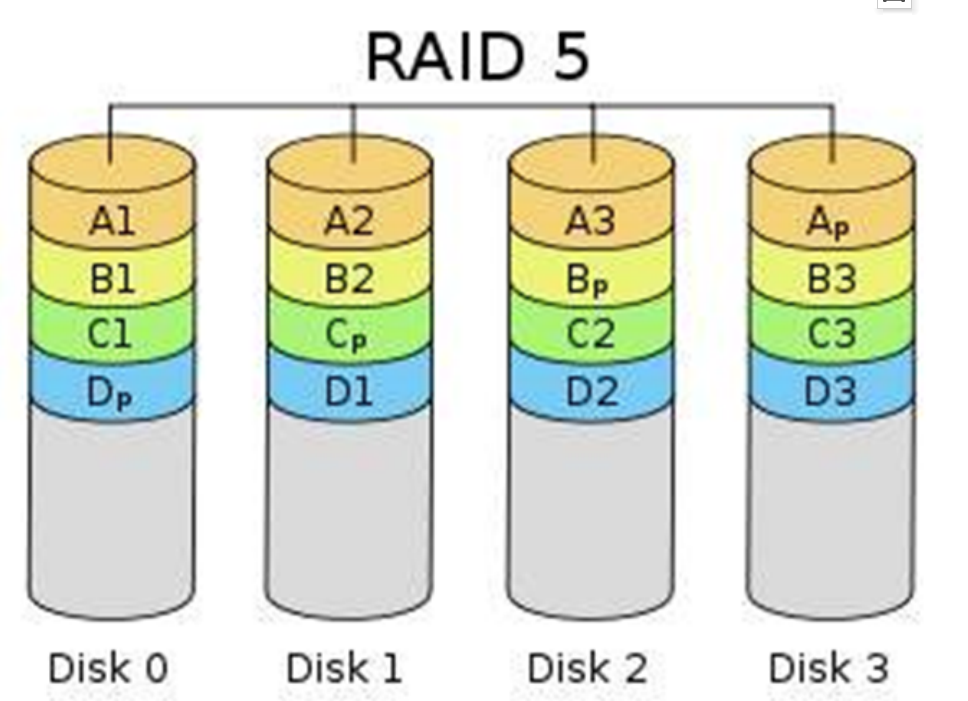

RAID5

- At least 3 disks are required

- Data is striped across the disks, with good read and write performance and disk utilization of (n-1)/n

- Redundancy comes from parity, which is distributed across all disks

- If one disk fails, the lost data can be reconstructed from the remaining data blocks and the corresponding parity, at a performance cost while the array is degraded

- It is a well-balanced data protection scheme that trades off storage performance, data security, and storage cost

- It is applicable to most application scenarios
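
To see why a single lost block can be rebuilt, a small sketch of XOR parity (the scheme RAID5 relies on) may help. `xor_blocks` is a hypothetical helper written for this illustration, and single bytes stand in for whole data blocks.

```shell
#!/usr/bin/env bash
# XOR all arguments together. The same operation produces the parity block
# and, applied to the survivors plus the parity, rebuilds a missing block.
xor_blocks() {
    local acc=0 b
    for b in "$@"; do
        acc=$((acc ^ b))
    done
    echo "$acc"
}

d1=0xA5
d2=0x3C
parity=$(xor_blocks "$d1" "$d2")

# Pretend the disk holding d1 failed: rebuild it from d2 and the parity.
rebuilt=$(xor_blocks "$parity" "$d2")
printf 'parity=0x%02X rebuilt=0x%02X\n' "$parity" "$rebuilt"
# parity=0x99 rebuilt=0xA5
```

The rebuild needs every surviving block in the stripe, so a second failure before the rebuild finishes loses data.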

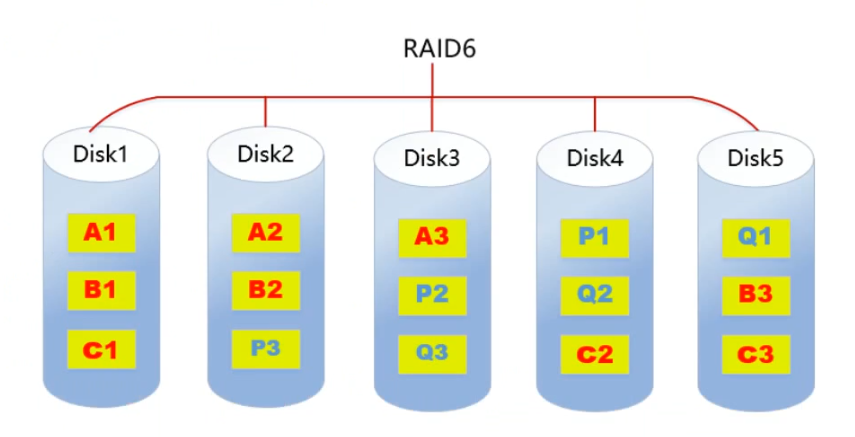

RAID6

- At least 4 disks are required

- Data is striped across the disks, with good read performance and strong fault tolerance

- Two independent parity blocks per stripe protect the data

- If two disks fail at the same time, the data on both can be reconstructed from the two parity blocks

- It costs more and is more complex than the other levels

- It is generally used where data security requirements are very high

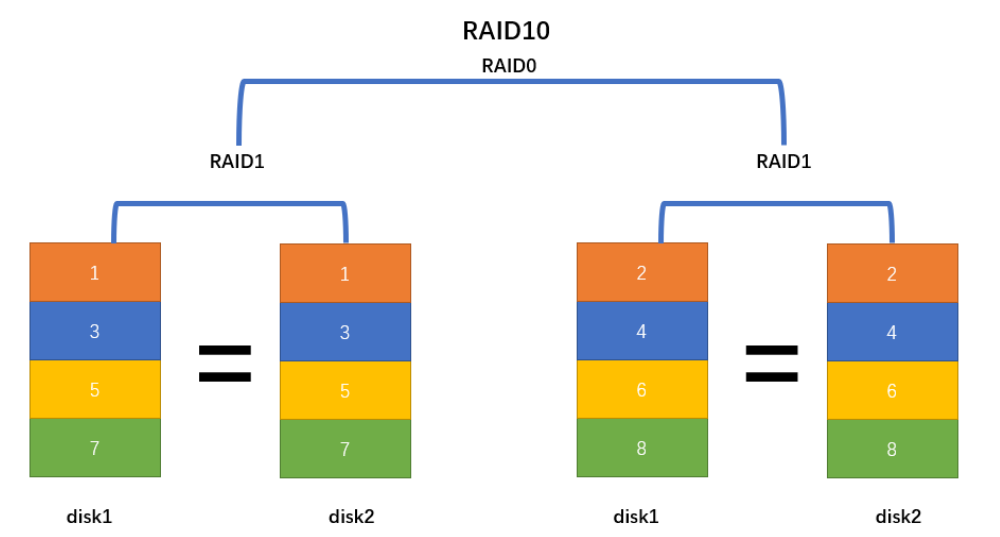

RAID10

- RAID10 combines RAID1 and RAID0: disks are mirrored in pairs first, and the mirrors are then striped

- At least 4 disks are required

- Provides both data redundancy (RAID1 mirroring) and read/write performance (RAID0 striping)

- Disk utilization is 50% and the cost is high

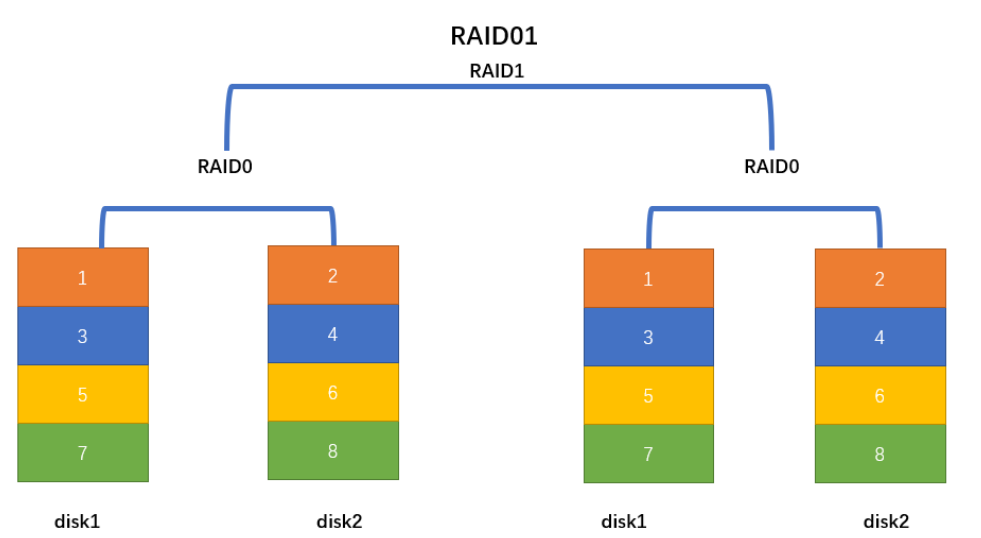

RAID01

- RAID01 combines RAID0 and RAID1: disks are striped first, and the stripe sets are then mirrored

- At least 4 disks are required

- Provides both data redundancy (RAID1 mirroring) and read/write performance (RAID0 striping)

- Disk utilization is 50% and the cost is high

Summary

| Type | Read performance | Write performance | Reliability | Disk utilization | Cost |
|---|---|---|---|---|---|
| RAID0 | Best | Best | Lowest | 100% | Low |
| RAID1 | Normal | Lower (data is written twice) | High | 50% | High |
| RAID5 | Close to RAID0 | Lower (parity is computed on write) | Between RAID0 and RAID1 | (n-1)/n | Between RAID0 and RAID1 |
| RAID6 | Close to RAID0 | Lower (two parity blocks per write) | Higher than RAID5 | (n-2)/n | Higher than RAID1 |
| RAID10 | Same as RAID0 | Same as RAID1 | High | 50% | Highest |
| RAID01 | Same as RAID0 | Same as RAID1 | High | 50% | Highest |
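
The disk utilization column can be turned into a quick capacity estimate. The sketch below assumes all members are the same size and ignores metadata overhead; `usable_gib` is a hypothetical helper, not part of any RAID tool.

```shell
#!/usr/bin/env bash
# usable_gib LEVEL N SIZE_GIB
# Prints the usable capacity in GiB for N equally sized members.
usable_gib() {
    local level=$1 n=$2 size=$3
    case $level in
        0)     echo $((n * size)) ;;          # 100% utilization
        1)     echo "$size" ;;                # every member holds a full copy
        5)     echo $(((n - 1) * size)) ;;    # one member's worth of parity
        6)     echo $(((n - 2) * size)) ;;    # two members' worth of parity
        10|01) echo $((n * size / 2)) ;;      # half the disks are mirrors
        *)     echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

usable_gib 5 3 2    # three 2G members at RAID5
# 4
```

The RAID5 result is in line with the 3.99 GiB array size that mdadm reports for the three 2G members of /dev/md5 later in this walkthrough; the small difference is metadata overhead.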

Hard and soft RAID

Soft RAID

Soft RAID runs in the lower layers of the operating system. It combines the physical disks presented by the SCSI or IDE controller into a virtual disk and then hands that virtual disk to the volume management layer.

Soft RAID has the following features:

- It uses memory

- It uses CPU resources

- It depends on the operating system: if the RAID software or the operating system fails, the array cannot run

- Enterprises now tend to use it less often than hard RAID.

Hard RAID

Hard RAID implements the RAID function in hardware. Both standalone RAID cards and RAID chips integrated on the motherboard count as hard RAID.

A RAID card is an expansion board that implements RAID. It usually consists of components such as an I/O processor, a disk controller, disk connectors, and a cache.

Different RAID cards support different RAID levels, for example RAID0, RAID1, RAID5, and RAID10.

Building a RAID array

Environment preparation

- Add one disk (or add several disks and use them whole, without partitioning)

- Partition the large disk (20G, /dev/sdc here) into ten small partitions that stand in for separate disks

[root@server1 ~]# fdisk /dev/sdc

[root@server1 ~]# partprobe /dev/sdc

[root@server1 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 19G 0 part

  ├─centos-root 253:0 0 17G 0 lvm /

  └─centos-swap 253:1 0 2G 0 lvm [SWAP]

sdb 8:16 0 5G 0 disk

└─sdb1 8:17 0 2G 0 part

sdc 8:32 0 20G 0 disk

├─sdc1 8:33 0 2G 0 part

├─sdc2 8:34 0 2G 0 part

├─sdc3 8:35 0 2G 0 part

├─sdc4 8:36 0 1K 0 part

├─sdc5 8:37 0 2G 0 part

├─sdc6 8:38 0 2G 0 part

├─sdc7 8:39 0 2G 0 part

├─sdc8 8:40 0 2G 0 part

├─sdc9 8:41 0 2G 0 part

├─sdc10 8:42 0 2G 0 part

└─sdc11 8:43 0 2G 0 part

sr0 11:0 1 973M 0 rom

- Install the mdadm tool

[root@server1 ~]# yum install -y mdadm

| Option | Purpose |
|---|---|
| -a | Add a device to an array (manage mode); with -C, `-a yes` creates the device file automatically |
| -n | Specify the number of active devices |
| -l | Specify the RAID level |
| -C | Create an array |
| -v | Show verbose output |
| -f | Mark a device as faulty (simulate a failure) |
| -r | Remove a device |
| -Q | View summary information |
| -D | View detailed information |
| -S | Stop a RAID array |
| -x | Specify the number of hot spares |
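
As a way to see how the options in the table fit together, the following sketch assembles a create command and prints it instead of running it. `mdadm_create_cmd` is a hypothetical helper; it uses only the `-C`, `-l`, `-n`, and `-x` options listed above, and it checks that the number of devices equals active members plus spares, which is what mdadm expects on the command line.

```shell
#!/usr/bin/env bash
# mdadm_create_cmd ARRAY LEVEL ACTIVE SPARES DEVICE...
# Prints the mdadm command line; nothing is executed.
mdadm_create_cmd() {
    local array=$1 level=$2 active=$3 spares=$4
    shift 4
    if [ "$#" -ne $((active + spares)) ]; then
        echo "expected $((active + spares)) devices, got $#" >&2
        return 1
    fi
    local cmd="mdadm -C $array -l $level -n $active"
    if [ "$spares" -gt 0 ]; then
        cmd+=" -x $spares"      # hot spares are optional
    fi
    echo "$cmd $*"
}

mdadm_create_cmd /dev/md5 5 3 1 /dev/sdc7 /dev/sdc8 /dev/sdc9 /dev/sdc10
# mdadm -C /dev/md5 -l 5 -n 3 -x 1 /dev/sdc7 /dev/sdc8 /dev/sdc9 /dev/sdc10
```

Printing the command first makes it easy to review the device list before anything is written to disk.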

Build RAID0

Create RAID0

[root@server1 ~]# mdadm -C /dev/md0 -l 0 -n 2 /dev/sdc1 /dev/sdc2

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md0 started.

[root@server1 ~]# mdadm -D /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Thu Sep 2 23:25:16 2021

Raid Level : raid0

Array Size : 4188160 (3.99 GiB 4.29 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Thu Sep 2 23:25:16 2021

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Chunk Size : 512K

Consistency Policy : none

Name : server1:0 (local to host server1)

UUID : 2e5a48fa:87654810:3460dfd6:1d4951d5

Events : 0

Number Major Minor RaidDevice State

0 8 33 0 active sync /dev/sdc1

1 8 34 1 active sync /dev/sdc2

format

[root@server1 ~]# mkfs.xfs /dev/md0

meta-data=/dev/md0 isize=512 agcount=8, agsize=130944 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=1047040, imaxpct=25

= sunit=128 swidth=256 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

mount

[root@server1 ~]# mkdir /mnt/mnt_md0

[root@server1 ~]# mount /dev/md0 /mnt/mnt_md0/

test

#Open a second terminal for monitoring

[root@server1 ~]# yum install -y sysstat

[root@server1 ~]# iostat -m -d /dev/sdc[12] 3

#In the first terminal, write a 1 GiB test file; the iostat output follows

[root@server1 ~]# dd if=/dev/zero of=/mnt/mnt_md0/bigfile bs=1M count=1024

Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn

sdc1 156.00 0.00 77.84 0 233

sdc2 155.67 0.00 77.83 0 233

Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn

sdc1 140.33 0.00 70.17 0 210

sdc2 140.33 0.00 70.17 0 210

Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn

sdc1 45.33 0.00 22.67 0 68

sdc2 45.33 0.00 22.67 0 68
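
The samples show writes split almost evenly between the two members, so the write rate of the array is roughly the sum of the member rates. A small awk sketch can total one sample; `total_write_rate` is a hypothetical helper, and it assumes the column layout shown above, with MB_wrtn/s in the fourth field.

```shell
#!/usr/bin/env bash
# Sum the MB_wrtn/s column (field 4) over the sd* rows of one iostat sample.
total_write_rate() {
    awk '$1 ~ /^sd/ { sum += $4 } END { printf "%.2f\n", sum }'
}

total_write_rate <<'EOF'
Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn
sdc1 156.00 0.00 77.84 0 233
sdc2 155.67 0.00 77.83 0 233
EOF
# 155.67
```

In this lab sdc1 and sdc2 are partitions of the same physical disk, so the numbers illustrate how the data is distributed rather than a real speed-up.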

Mount RAID0 persistently

[root@server1 ~]# echo "/dev/md0 /mnt/mnt_md0 xfs defaults 0 0" >> /etc/fstab
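
Running the `echo ... >> /etc/fstab` command twice leaves a duplicate entry. One possible guard is to append only when the exact line is missing. `add_fstab_entry` is a hypothetical helper, and the demo below writes to a scratch file rather than the real /etc/fstab.

```shell
#!/usr/bin/env bash
# add_fstab_entry ENTRY [FILE]
# Append ENTRY to FILE (default /etc/fstab) unless an identical line exists.
add_fstab_entry() {
    local entry=$1 file=${2:-/etc/fstab}
    # -x: match the whole line, -F: treat the entry as a fixed string
    grep -qxF -- "$entry" "$file" 2>/dev/null || echo "$entry" >> "$file"
}

demo=$(mktemp)
add_fstab_entry "/dev/md0 /mnt/mnt_md0 xfs defaults 0 0" "$demo"
add_fstab_entry "/dev/md0 /mnt/mnt_md0 xfs defaults 0 0" "$demo"
cat "$demo"     # the entry appears only once
rm -f "$demo"
```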

Set up RAID1

Create RAID1

[root@server1 ~]# mdadm -C /dev/md1 -l 1 -n 2 /dev/sdc3 /dev/sdc5

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

Continue creating array? y #Enter y to confirm

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

View status information

[root@server1 ~]# cat /proc/mdstat

Personalities : [raid0] [raid1]

md1 : active raid1 sdc5[1] sdc3[0]

2094080 blocks super 1.2 [2/2] [UU]

md0 : active raid0 sdc2[1] sdc1[0]

4188160 blocks super 1.2 512k chunks

unused devices: <none>

[root@server1 ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Thu Sep 2 23:43:43 2021

Raid Level : raid1

Array Size : 2094080 (2045.00 MiB 2144.34 MB)

Used Dev Size : 2094080 (2045.00 MiB 2144.34 MB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Thu Sep 2 23:43:54 2021

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : server1:1 (local to host server1)

UUID : bc67728d:e1c60a3c:8d445c5d:67a55444

Events : 17

Number Major Minor RaidDevice State

0 8 35 0 active sync /dev/sdc3

1 8 37 1 active sync /dev/sdc5

format

[root@server1 ~]# mkfs.xfs /dev/md1

meta-data=/dev/md1 isize=512 agcount=4, agsize=130880 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=523520, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

mount

[root@server1 ~]# mkdir /mnt/mnt_md1

[root@server1 ~]# mount /dev/md1 /mnt/mnt_md1

[root@server1 ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 899M 0 899M 0% /dev

tmpfs tmpfs 910M 0 910M 0% /dev/shm

tmpfs tmpfs 910M 9.6M 901M 2% /run

tmpfs tmpfs 910M 0 910M 0% /sys/fs/cgroup

/dev/mapper/centos-root xfs 17G 1.9G 16G 11% /

/dev/sda1 xfs 1014M 194M 821M 20% /boot

tmpfs tmpfs 182M 0 182M 0% /run/user/0

/dev/md0 xfs 4.0G 33M 4.0G 1% /mnt/mnt_md0

/dev/md1 xfs 2.0G 33M 2.0G 2% /mnt/mnt_md1

Test (hot plug)

#Create test data

[root@server1 ~]# touch /mnt/mnt_md1/file{1..5}

#Simulate a fault

[root@server1 ~]# mdadm /dev/md1 -f /dev/sdc3

mdadm: set /dev/sdc3 faulty in /dev/md1

[root@server1 ~]# cat /proc/mdstat

Personalities : [raid0] [raid1]

md1 : active raid1 sdc5[1] sdc3[0](F) #(F) marks the failed device

2094080 blocks super 1.2 [2/1] [_U] #_ marks the position of the missing member

md0 : active raid0 sdc2[1] sdc1[0]

4188160 blocks super 1.2 512k chunks

unused devices: <none>

[root@server1 ~]# mdadm -D /dev/md1

/dev/md1:

Version : 1.2

Creation Time : Thu Sep 2 23:43:43 2021

Raid Level : raid1

Array Size : 2094080 (2045.00 MiB 2144.34 MB)

Used Dev Size : 2094080 (2045.00 MiB 2144.34 MB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Thu Sep 2 23:57:53 2021

State : clean, degraded

Active Devices : 1

Working Devices : 1

Failed Devices : 1

Spare Devices : 0

Consistency Policy : resync

Name : server1:1 (local to host server1)

UUID : bc67728d:e1c60a3c:8d445c5d:67a55444

Events : 19

Number Major Minor RaidDevice State

- 0 0 0 removed

1 8 37 1 active sync /dev/sdc5

0 8 35 - faulty /dev/sdc3

#Remove failed disk

[root@server1 ~]# mdadm /dev/md1 -r /dev/sdc3

mdadm: hot removed /dev/sdc3 from /dev/md1

[root@server1 ~]# cat /proc/mdstat

Personalities : [raid0] [raid1]

md1 : active raid1 sdc5[1]

2094080 blocks super 1.2 [2/1] [_U]

md0 : active raid0 sdc2[1] sdc1[0]

4188160 blocks super 1.2 512k chunks

unused devices: <none>

#Reading and writing still work on the degraded array

[root@server1 ~]# touch /mnt/mnt_md1/test1

[root@server1 ~]# ls /mnt/mnt_md1

file1 file2 file3 file4 file5 test1

#Add a new disk (hot add)

[root@server1 ~]# mdadm /dev/md1 -a /dev/sdc6

mdadm: added /dev/sdc6

[root@server1 ~]# cat /proc/mdstat

Personalities : [raid0] [raid1]

md1 : active raid1 sdc6[2] sdc5[1]

2094080 blocks super 1.2 [2/2] [UU]

md0 : active raid0 sdc2[1] sdc1[0]

4188160 blocks super 1.2 512k chunks

unused devices: <none>
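
The `_` marker in /proc/mdstat lends itself to a simple health check. The following is a sketch under the assumption that the status line follows the array line, as in the output above; `degraded_arrays` is a hypothetical helper, and the sample input is the degraded state captured earlier in this test.

```shell
#!/usr/bin/env bash
# Read mdstat-style text on stdin and print the names of arrays whose
# member map (for example [_U]) contains "_", meaning a member is missing.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1; next }          # remember the array name
        name != "" && match($0, /\[[U_]+\]/) {     # find the [UU]-style map
            if (index(substr($0, RSTART, RLENGTH), "_")) print name
            name = ""
        }
    '
}

degraded_arrays <<'EOF'
Personalities : [raid0] [raid1]
md1 : active raid1 sdc5[1] sdc3[0](F)
      2094080 blocks super 1.2 [2/1] [_U]

md0 : active raid0 sdc2[1] sdc1[0]
      4188160 blocks super 1.2 512k chunks

unused devices: <none>
EOF
# md1
```

On a live system the same function can read the real file with `degraded_arrays < /proc/mdstat`.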

Mount RAID1 persistently

[root@server1 ~]# echo "/dev/md1 /mnt/mnt_md1 xfs defaults 0 0" >> /etc/fstab

Set up RAID5

Create RAID5 (with one hot spare)

[root@server1 ~]# mdadm -C /dev/md5 -l 5 -n 3 -x 1 /dev/sdc{7,8,9,10}

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md5 started.

[root@server1 ~]# cat /proc/mdstat

Personalities : [raid0] [raid1] [raid6] [raid5] [raid4]

md5 : active raid5 sdc9[4] sdc10[3](S) sdc8[1] sdc7[0]

4188160 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

md1 : active raid1 sdc6[2] sdc5[1]

2094080 blocks super 1.2 [2/2] [UU]

md0 : active raid0 sdc2[1] sdc1[0]

4188160 blocks super 1.2 512k chunks

unused devices: <none>

[root@server1 ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Fri Sep 3 00:11:41 2021

Raid Level : raid5

Array Size : 4188160 (3.99 GiB 4.29 GB)

Used Dev Size : 2094080 (2045.00 MiB 2144.34 MB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Fri Sep 3 00:11:54 2021

State : clean

Active Devices : 3

Working Devices : 4

Failed Devices : 0

Spare Devices : 1 #hot spare

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : server1:5 (local to host server1)

UUID : 0536fd77:e916c80b:f7e945cf:a4d4d0f2

Events : 18

Number Major Minor RaidDevice State

0 8 39 0 active sync /dev/sdc7

1 8 40 1 active sync /dev/sdc8

4 8 41 2 active sync /dev/sdc9

3 8 42 - spare /dev/sdc10

format

[root@server1 ~]# mkfs.ext4 /dev/md5

mount

[root@server1 ~]# mkdir /mnt/mnt_md5

[root@server1 ~]# mount /dev/md5 /mnt/mnt_md5

[root@server1 ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 899M 0 899M 0% /dev

tmpfs tmpfs 910M 0 910M 0% /dev/shm

tmpfs tmpfs 910M 9.6M 901M 2% /run

tmpfs tmpfs 910M 0 910M 0% /sys/fs/cgroup

/dev/mapper/centos-root xfs 17G 1.9G 16G 11% /

/dev/sda1 xfs 1014M 194M 821M 20% /boot

tmpfs tmpfs 182M 0 182M 0% /run/user/0

/dev/md0 xfs 4.0G 33M 4.0G 1% /mnt/mnt_md0

/dev/md1 xfs 2.0G 33M 2.0G 2% /mnt/mnt_md1

/dev/md5 ext4 3.9G 16M 3.7G 1% /mnt/mnt_md5

test

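#Simulate a fault; the hot spare /dev/sdc10 takes over automatically (the output below was captured after the rebuild finished)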

[root@server1 ~]# mdadm /dev/md5 -f /dev/sdc7

mdadm: set /dev/sdc7 faulty in /dev/md5

[root@server1 ~]# cat /proc/mdstat

Personalities : [raid0] [raid1] [raid6] [raid5] [raid4]

md5 : active raid5 sdc9[4] sdc10[3] sdc8[1] sdc7[0](F)

4188160 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

[root@server1 ~]# mdadm -D /dev/md5

/dev/md5:

Version : 1.2

Creation Time : Fri Sep 3 00:11:41 2021

Raid Level : raid5

Array Size : 4188160 (3.99 GiB 4.29 GB)

Used Dev Size : 2094080 (2045.00 MiB 2144.34 MB)

Raid Devices : 3

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Fri Sep 3 00:19:14 2021

State : clean

Active Devices : 3

Working Devices : 3

Failed Devices : 1

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : resync

Name : server1:5 (local to host server1)

UUID : 0536fd77:e916c80b:f7e945cf:a4d4d0f2

Events : 37

Number Major Minor RaidDevice State

3 8 42 0 active sync /dev/sdc10

1 8 40 1 active sync /dev/sdc8

4 8 41 2 active sync /dev/sdc9

0 8 39 - faulty /dev/sdc7

#Remove failed disk

[root@server1 ~]# mdadm /dev/md5 -r /dev/sdc7

mdadm: hot removed /dev/sdc7 from /dev/md5

[root@server1 ~]# cat /proc/mdstat

Personalities : [raid0] [raid1] [raid6] [raid5] [raid4]

md5 : active raid5 sdc9[4] sdc10[3] sdc8[1]

4188160 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

#Add a hot spare

[root@server1 ~]# mdadm /dev/md5 -a /dev/sdc3

mdadm: added /dev/sdc3

[root@server1 ~]# cat /proc/mdstat

Personalities : [raid0] [raid1] [raid6] [raid5] [raid4]

md5 : active raid5 sdc3[5](S) sdc9[4] sdc10[3] sdc8[1]

4188160 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

Mount RAID5 persistently

[root@server1 ~]# echo "/dev/md5 /mnt/mnt_md5 ext4 defaults 0 0" >> /etc/fstab

RAID stop and start

Stop (using RAID0 as an example)

[root@server1 ~]# umount /mnt/mnt_md0

[root@server1 ~]# mdadm --stop /dev/md0

mdadm: stopped /dev/md0

Start

[root@server1 ~]# mdadm -A /dev/md0 /dev/sdc[12]

mdadm: /dev/md0 has been started with 2 drives.

RAID delete

#Take RAID1 as an example

Unmount

[root@server1 ~]# umount /dev/md1

Stop the array

[root@server1 ~]# mdadm --stop /dev/md1

mdadm: stopped /dev/md1

Delete or comment out the relevant entry in /etc/fstab

[root@server1 ~]# vim /etc/fstab

#/dev/md1 /mnt/mnt_md1 xfs defaults 0 0

Remove the metadata

[root@server1 ~]# mdadm --zero-superblock /dev/sdc[56]
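
The fstab step can also be done without an editor. The sketch below comments out every line whose first field is the given device; `comment_out_fstab` is a hypothetical helper, and the demo works on a scratch copy rather than the real /etc/fstab.

```shell
#!/usr/bin/env bash
# comment_out_fstab DEVICE FILE
# Prefix "#" to each line of FILE whose first field equals DEVICE.
comment_out_fstab() {
    local dev=$1 file=$2 tmp rc
    tmp=$(mktemp) || return 1
    awk -v dev="$dev" '$1 == dev { print "#" $0; next } { print }' "$file" > "$tmp" &&
        cat "$tmp" > "$file"    # rewrite in place, keeping the file's permissions
    rc=$?
    rm -f "$tmp"
    return "$rc"
}

demo=$(mktemp)
printf '%s\n' \
    "/dev/md0 /mnt/mnt_md0 xfs defaults 0 0" \
    "/dev/md1 /mnt/mnt_md1 xfs defaults 0 0" > "$demo"
comment_out_fstab /dev/md1 "$demo"
cat "$demo"
# /dev/md0 /mnt/mnt_md0 xfs defaults 0 0
# #/dev/md1 /mnt/mnt_md1 xfs defaults 0 0
rm -f "$demo"
```

Commenting the line out instead of deleting it keeps a record of the old mount and avoids a failed mount at the next boot once the array no longer exists.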