The Framework is the second extension mechanism for the Kubernetes scheduler. Whereas the Scheduler Extender extends scheduling through independent remote services, the Framework implements an in-process, standardized flow management mechanism built around extension points.

1. Extension Goals

The design of the Framework is described clearly in the official documentation, and it has not yet reached Stable. This article is based on version 1.18 and covers some implementation details beyond the official description.

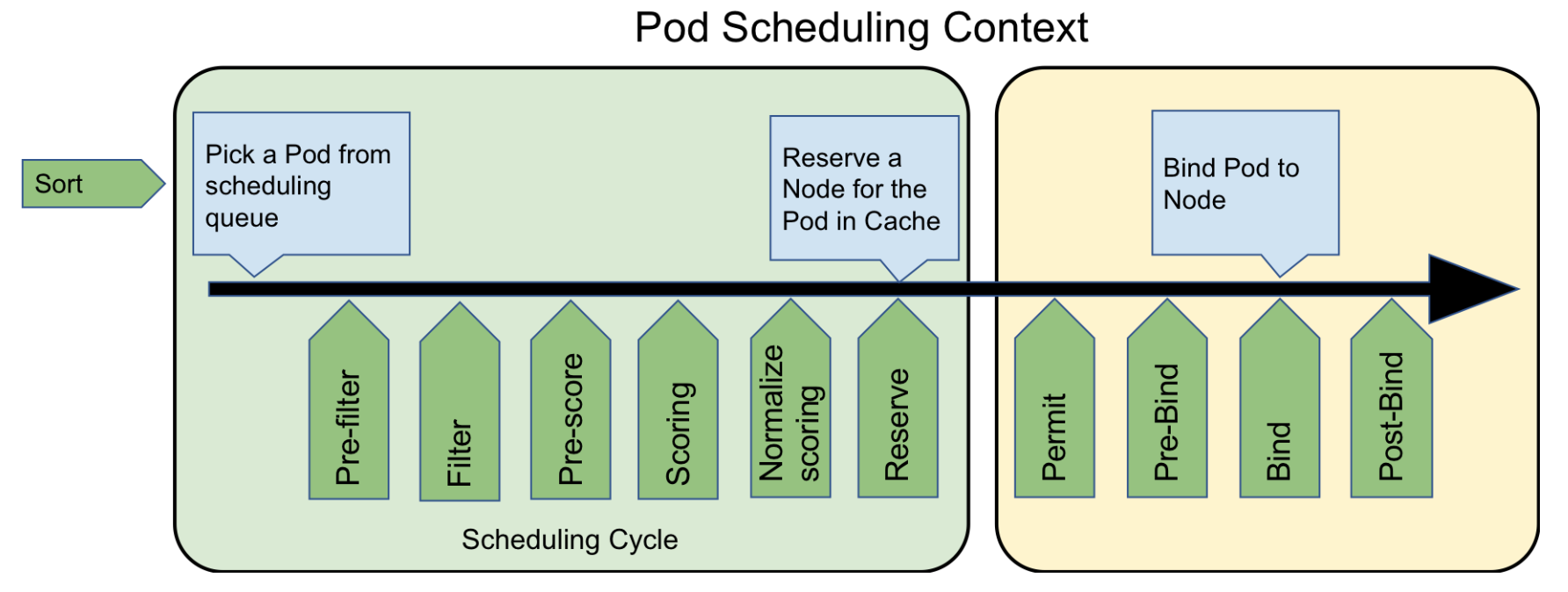

1.1 Extension Points

The current official extension work centers on the filtering (predicate) and scoring (priority) phases and provides additional extension points. Each extension point corresponds to one type of plug-in, so we can write an extension for whichever phase we need in order to enhance scheduling.

In the current version the priority plug-ins have already been extracted into the framework, and more plug-ins should be extracted in the future; this will probably take some time to stabilize.

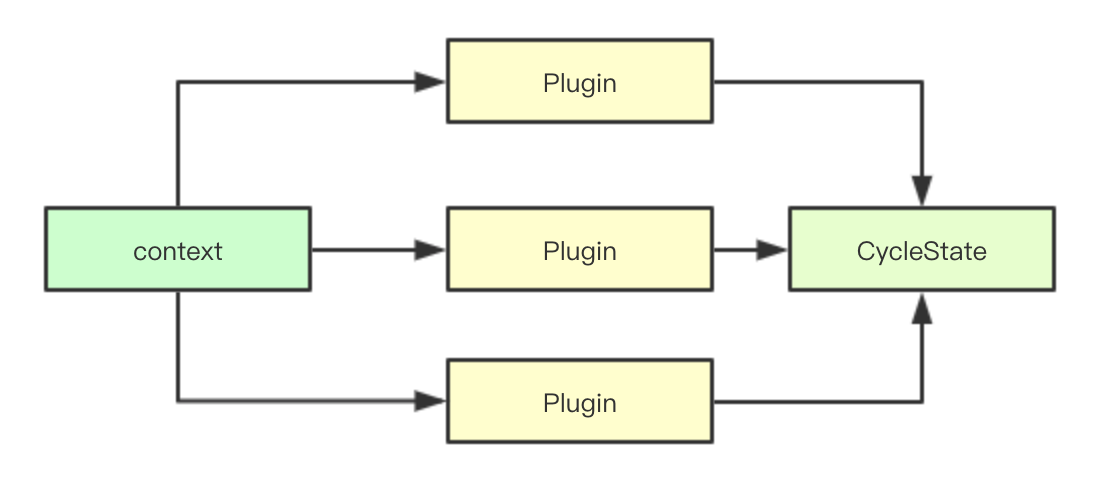

1.2 Context and CycleState

In the Framework implementation, every call into a plug-in extension point receives both a context and a CycleState object. The context is used as in most Go programs, mainly to cancel everything together when several stages run in parallel. CycleState stores all data for the current scheduling cycle; it is a concurrency-safe structure that contains a read-write lock internally.
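The role of the context can be illustrated with a minimal sketch. This is not scheduler code: checkNodes, allPass, and alreadyCancelled are hypothetical names, and the sketch only shows how one shared context lets parallel work stop together.

```go
package main

import (
	"context"
	"fmt"
	"sync"
)

// checkNodes runs check for every node in its own goroutine. The first failed
// check cancels the shared context, so goroutines that have not started their
// check yet exit early. It returns how many nodes passed.
func checkNodes(ctx context.Context, nodes []string, check func(string) bool) int {
	ctx, cancel := context.WithCancel(ctx)
	defer cancel()

	var (
		mu     sync.Mutex
		passed int
		wg     sync.WaitGroup
	)
	for _, n := range nodes {
		wg.Add(1)
		go func(node string) {
			defer wg.Done()
			select {
			case <-ctx.Done(): // already cancelled: skip the work
				return
			default:
			}
			if !check(node) {
				cancel() // tell every other goroutine to stop
				return
			}
			mu.Lock()
			passed++
			mu.Unlock()
		}(n)
	}
	wg.Wait()
	return passed
}

// allPass checks three hypothetical nodes that all succeed.
func allPass() int {
	return checkNodes(context.Background(), []string{"n1", "n2", "n3"},
		func(string) bool { return true })
}

// alreadyCancelled starts from a context that was cancelled up front,
// so no check is ever counted.
func alreadyCancelled() int {
	ctx, cancel := context.WithCancel(context.Background())
	cancel()
	return checkNodes(ctx, []string{"n1", "n2", "n3"},
		func(string) bool { return true })
}

func main() {
	fmt.Println(allPass(), alreadyCancelled())
}
```

The counter is guarded by a plain mutex here; CycleState plays the analogous role of shared per-cycle state in the framework.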

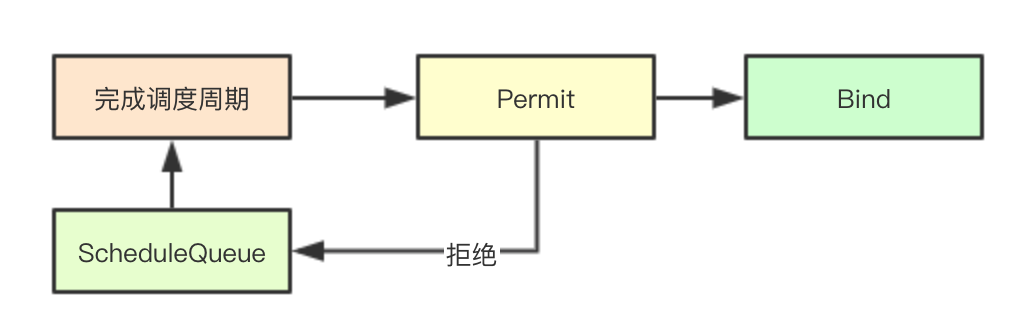

1.3 Permit

Permit is the operation that precedes Bind. Its main design goal is to make the final decision before binding, that is, whether the current pod is allowed to proceed to Bind. Permit plug-ins hold a veto: if any of them rejects the pod, the pod is rescheduled.

2. Core Source Implementation

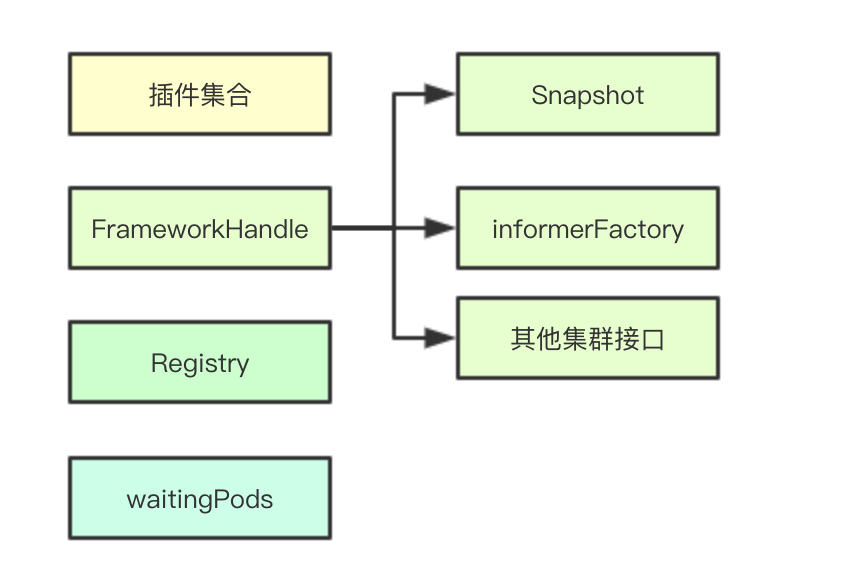

2.1 Framework Core Data Structure

The core data structure of the Framework can be divided into three main parts: the plug-in collections (each extension point has its own collection), the metadata access interfaces (for cluster data and snapshot data), and the waiting pod collection.

2.1.1 Plug-in Collection

Plug-ins are grouped and stored by plug-in type. There is also a map from plug-in name to weight, which is currently used only in the scoring phase and may later be extended to the filtering phase.

    pluginNameToWeightMap map[string]int
    queueSortPlugins      []QueueSortPlugin
    preFilterPlugins      []PreFilterPlugin
    filterPlugins         []FilterPlugin
    postFilterPlugins     []PostFilterPlugin
    scorePlugins          []ScorePlugin
    reservePlugins        []ReservePlugin
    preBindPlugins        []PreBindPlugin
    bindPlugins           []BindPlugin
    postBindPlugins       []PostBindPlugin
    unreservePlugins      []UnreservePlugin
    permitPlugins         []PermitPlugin

2.1.2 Cluster Data Acquisition

This part holds the interfaces for obtaining cluster data. It backs the implementation of FrameworkHandle, which gives plug-ins interfaces for reading data and operating on the cluster.

    clientSet            clientset.Interface
    informerFactory      informers.SharedInformerFactory
    volumeBinder         *volumebinder.VolumeBinder
    snapshotSharedLister schedulerlisters.SharedLister

2.1.3 Waiting Pod Collection

The waiting pod collection stores the pods that are waiting in the Permit phase. A pod is rejected if it is deleted while it is waiting.

waitingPods *waitingPodsMap

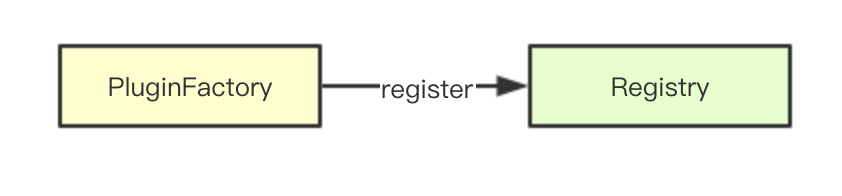

2.1.4 Plugin Factory Registry

The registry stores all registered plug-in factories, and concrete plug-ins are then built through those factories.

registry Registry

2.2 Plugin Factory Registry

2.2.1 Plugin Factory Function

The factory function takes the plug-in configuration and a FrameworkHandle and builds a Plugin. The FrameworkHandle is mainly used to obtain snapshots and other cluster data.

type PluginFactory = func(configuration *runtime.Unknown, f FrameworkHandle) (Plugin, error)

2.2.2 Plug-in Factory Implementation

Most plug-in factories in Go are implemented with a map, and the same is true here. The registry exposes the Register and Unregister methods.

    type Registry map[string]PluginFactory

    // Register adds a new plugin to the registry. If a plugin with the same name
    // exists, it returns an error.
    func (r Registry) Register(name string, factory PluginFactory) error {
        if _, ok := r[name]; ok {
            return fmt.Errorf("a plugin named %v already exists", name)
        }
        r[name] = factory
        return nil
    }

    // Unregister removes an existing plugin from the registry. If no plugin with
    // the provided name exists, it returns an error.
    func (r Registry) Unregister(name string) error {
        if _, ok := r[name]; !ok {
            return fmt.Errorf("no plugin named %v exists", name)
        }
        delete(r, name)
        return nil
    }

    // Merge merges the provided registry to the current one.
    func (r Registry) Merge(in Registry) error {
        for name, factory := range in {
            if err := r.Register(name, factory); err != nil {
                return err
            }
        }
        return nil
    }
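To see how such a registry behaves, here is a small self-contained sketch. The Plugin and PluginFactory types are simplified stand-ins (the real factory also takes a configuration and a FrameworkHandle), and noopPlugin and newNoop are hypothetical helpers added only for illustration.

```go
package main

import "fmt"

// Plugin is a simplified stand-in for the framework's Plugin interface.
type Plugin interface{ Name() string }

// PluginFactory is simplified on purpose: no configuration, no handle.
type PluginFactory func() (Plugin, error)

// Registry maps plug-in names to their factories.
type Registry map[string]PluginFactory

// Register adds a factory and fails if the name is already taken.
func (r Registry) Register(name string, factory PluginFactory) error {
	if _, ok := r[name]; ok {
		return fmt.Errorf("a plugin named %v already exists", name)
	}
	r[name] = factory
	return nil
}

// Merge registers every factory from in, stopping at the first conflict.
func (r Registry) Merge(in Registry) error {
	for name, factory := range in {
		if err := r.Register(name, factory); err != nil {
			return err
		}
	}
	return nil
}

// noopPlugin is a hypothetical plug-in that only knows its own name.
type noopPlugin struct{ name string }

func (p noopPlugin) Name() string { return p.name }

// newNoop returns a factory that builds a noopPlugin with the given name.
func newNoop(name string) PluginFactory {
	return func() (Plugin, error) { return noopPlugin{name: name}, nil }
}

func main() {
	base := Registry{"NodeName": newNoop("NodeName")}
	extra := Registry{"Example": newNoop("Example")}

	fmt.Println("merge error:", base.Merge(extra))
	fmt.Println("duplicate error:", base.Register("Example", newNoop("Example")))

	p, _ := base["Example"]()
	fmt.Println("built plugin:", p.Name())
}
```

Because Registry is a map type, the value-receiver methods still mutate the underlying map, which is why Register works without a pointer receiver.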

2.3 Plugin Registration Implementation

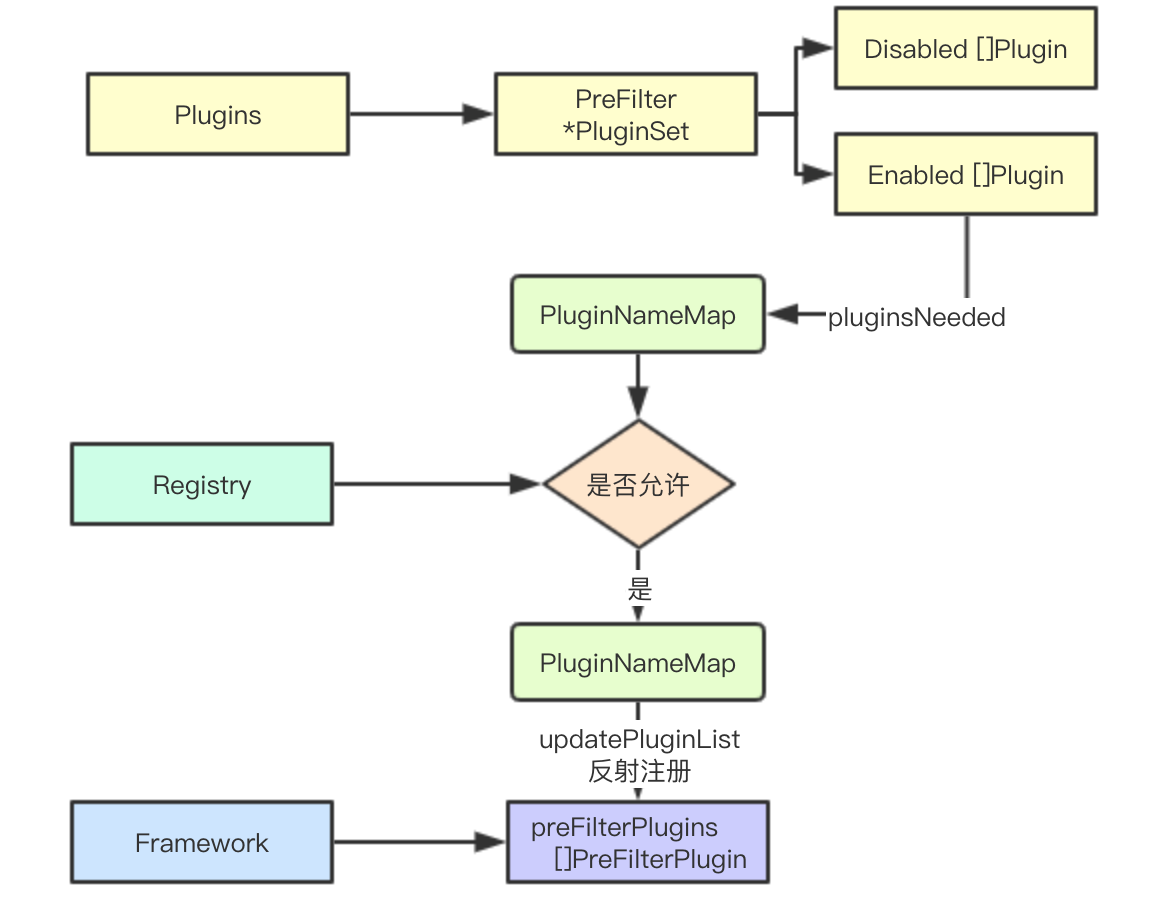

Here preFilterPlugins is used as an example to walk through the whole registration process.

2.3.1 Plugins

Plugins is constructed during the configuration phase. It records all plug-ins registered in the current framework, and for each extension point it stores the enabled and disabled plug-ins in a PluginSet.

    type Plugins struct {
        // QueueSort is a list of plugins that should be invoked when sorting pods in the scheduling queue.
        QueueSort *PluginSet
        // PreFilter is a list of plugins that should be invoked at "PreFilter" extension point of the scheduling framework.
        PreFilter *PluginSet
        // Filter is a list of plugins that should be invoked when filtering out nodes that cannot run the Pod.
        Filter *PluginSet
        // PostFilter is a list of plugins that are invoked after filtering out infeasible nodes.
        PostFilter *PluginSet
        // Score is a list of plugins that should be invoked when ranking nodes that have passed the filtering phase.
        Score *PluginSet
        // Reserve is a list of plugins invoked when reserving a node to run the pod.
        Reserve *PluginSet
        // Permit is a list of plugins that control binding of a Pod. These plugins can prevent or delay binding of a Pod.
        Permit *PluginSet
        // PreBind is a list of plugins that should be invoked before a pod is bound.
        PreBind *PluginSet
        // Bind is a list of plugins that should be invoked at "Bind" extension point of the scheduling framework.
        // The scheduler call these plugins in order. Scheduler skips the rest of these plugins as soon as one returns success.
        Bind *PluginSet
        // PostBind is a list of plugins that should be invoked after a pod is successfully bound.
        PostBind *PluginSet
        // Unreserve is a list of plugins invoked when a pod that was previously reserved is rejected in a later phase.
        Unreserve *PluginSet
    }

2.3.2 Plugin Collection Mapping

The main purpose of this method is to map each plug-in set in the configuration to the slice in the framework that stores plug-ins of that type, for example PreFilter to the preFilterPlugins slice. Each entry pairs a PluginSet with a pointer to the corresponding slice (that is, to its slice header), such as &f.preFilterPlugins.

    func (f *framework) getExtensionPoints(plugins *config.Plugins) []extensionPoint {
        return []extensionPoint{
            {plugins.PreFilter, &f.preFilterPlugins},
            {plugins.Filter, &f.filterPlugins},
            {plugins.Reserve, &f.reservePlugins},
            {plugins.PostFilter, &f.postFilterPlugins},
            {plugins.Score, &f.scorePlugins},
            {plugins.PreBind, &f.preBindPlugins},
            {plugins.Bind, &f.bindPlugins},
            {plugins.PostBind, &f.postBindPlugins},
            {plugins.Unreserve, &f.unreservePlugins},
            {plugins.Permit, &f.permitPlugins},
            {plugins.QueueSort, &f.queueSortPlugins},
        }
    }

2.3.3 Collecting All Enabled Plugins

This step traverses all of the mappings above, but instead of registering each plug-in into the slice for its type, it collects every enabled plug-in into a single pgMap.

    func (f *framework) pluginsNeeded(plugins *config.Plugins) map[string]config.Plugin {
        pgMap := make(map[string]config.Plugin)

        if plugins == nil {
            return pgMap
        }

        // Build an anonymous function that uses a closure to fill pgMap
        // with every enabled plug-in.
        find := func(pgs *config.PluginSet) {
            if pgs == nil {
                return
            }
            for _, pg := range pgs.Enabled { // Traverse all enabled plug-ins
                pgMap[pg.Name] = pg // Save to map
            }
        }
        // Traverse all the mappings above
        for _, e := range f.getExtensionPoints(plugins) {
            find(e.plugins)
        }
        return pgMap
    }

2.3.4 Plug-in Factory Construction Plug-in Mapping

The plug-in factory registry is then traversed: each needed plug-in's factory is called to build a Plugin instance, and the instance is saved in pluginsMap.

    pluginsMap := make(map[string]Plugin)
    for name, factory := range r {
        // pg is the pgMap generated above; only the plug-ins that are needed are built
        if _, ok := pg[name]; !ok {
            continue
        }

        p, err := factory(pluginConfig[name], f)
        if err != nil {
            return nil, fmt.Errorf("error initializing plugin %q: %v", name, err)
        }
        pluginsMap[name] = p

        // Save weights
        f.pluginNameToWeightMap[name] = int(pg[name].Weight)
        if f.pluginNameToWeightMap[name] == 0 {
            f.pluginNameToWeightMap[name] = 1
        }
        // Checks totalPriority against MaxTotalScore to avoid overflow
        if int64(f.pluginNameToWeightMap[name])*MaxNodeScore > MaxTotalScore-totalPriority {
            return nil, fmt.Errorf("total score of Score plugins could overflow")
        }
        totalPriority += int64(f.pluginNameToWeightMap[name]) * MaxNodeScore
    }
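The weight handling in the loop above can be isolated into a short worked example. This is a sketch, not the scheduler's code: checkWeights is a hypothetical helper, and the constants assume that MaxNodeScore is 100 and MaxTotalScore is the largest int64, which is how I understand version 1.18 to define them.

```go
package main

import (
	"fmt"
	"math"
)

const (
	// Assumed values; treat them as illustrative.
	maxNodeScore  int64 = 100
	maxTotalScore int64 = math.MaxInt64
)

// checkWeights applies the same two rules as the registration loop:
// a weight of 0 defaults to 1, and the running total of weight*maxNodeScore
// must never exceed maxTotalScore. It returns the largest possible total score.
func checkWeights(weights []int) (int64, error) {
	var total int64
	for _, w := range weights {
		if w == 0 {
			w = 1
		}
		// Compare against the remaining headroom instead of adding first,
		// so the check itself cannot overflow.
		if int64(w)*maxNodeScore > maxTotalScore-total {
			return 0, fmt.Errorf("total score of Score plugins could overflow")
		}
		total += int64(w) * maxNodeScore
	}
	return total, nil
}

func main() {
	total, err := checkWeights([]int{0, 2, 3})
	fmt.Println(total, err)
}
```

With weights 0, 2, and 3 the effective weights are 1, 2, and 3, so the maximum total is (1+2+3) * 100 = 600.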

2.3.5 Register Plugins by Type

This step registers plug-ins by type. It combines the previously built pluginsMap with reflection and appends each plug-in to the matching slice through e.slicePtr.

    for _, e := range f.getExtensionPoints(plugins) {
        if err := updatePluginList(e.slicePtr, e.plugins, pluginsMap); err != nil {
            return nil, err
        }
    }

updatePluginList works mainly through reflection. It takes the address of the corresponding framework slice obtained from getExtensionPoints above, then uses reflection to validate each plug-in and append it to that slice.

    func updatePluginList(pluginList interface{}, pluginSet *config.PluginSet, pluginsMap map[string]Plugin) error {
        if pluginSet == nil {
            return nil
        }

        // Dereference the pointer with Elem to get the slice value
        plugins := reflect.ValueOf(pluginList).Elem()
        // Get the element type of the slice from the slice type
        pluginType := plugins.Type().Elem()
        set := sets.NewString()
        for _, ep := range pluginSet.Enabled {
            pg, ok := pluginsMap[ep.Name]
            if !ok {
                return fmt.Errorf("%s %q does not exist", pluginType.Name(), ep.Name)
            }

            // Validity check: return an error if the plug-in does not implement this interface
            if !reflect.TypeOf(pg).Implements(pluginType) {
                return fmt.Errorf("plugin %q does not extend %s plugin", ep.Name, pluginType.Name())
            }

            if set.Has(ep.Name) {
                return fmt.Errorf("plugin %q already registered as %q", ep.Name, pluginType.Name())
            }

            set.Insert(ep.Name)

            // Append the plug-in to the slice and store the result back through the pointer
            newPlugins := reflect.Append(plugins, reflect.ValueOf(pg))
            plugins.Set(newPlugins)
        }
        return nil
    }
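The reflection trick is easier to follow on a reduced example. The sketch below keeps only the core of the technique: dereference a pointer to a typed slice, check that the plug-in implements the slice's element interface, and append. The interfaces and the nameOnly, evenLen, and appendPlugin names are hypothetical.

```go
package main

import (
	"fmt"
	"reflect"
)

// Plugin and FilterPlugin are simplified stand-ins for the framework interfaces.
type Plugin interface{ Name() string }

type FilterPlugin interface {
	Plugin
	Filter(node string) bool
}

// nameOnly implements Plugin but not FilterPlugin.
type nameOnly struct{}

func (nameOnly) Name() string { return "name-only" }

// evenLen implements FilterPlugin: it accepts node names of even length.
type evenLen struct{}

func (evenLen) Name() string            { return "even-len" }
func (evenLen) Filter(node string) bool { return len(node)%2 == 0 }

// appendPlugin appends p to the slice that slicePtr points to, but only if p
// implements the slice's element type. slicePtr must be a pointer to a slice
// of some interface type, e.g. *[]FilterPlugin.
func appendPlugin(slicePtr interface{}, p Plugin) error {
	slice := reflect.ValueOf(slicePtr).Elem() // the slice value behind the pointer
	elemType := slice.Type().Elem()           // the interface type of its elements
	if !reflect.TypeOf(p).Implements(elemType) {
		return fmt.Errorf("plugin %q does not extend %s plugin", p.Name(), elemType.Name())
	}
	slice.Set(reflect.Append(slice, reflect.ValueOf(p)))
	return nil
}

func main() {
	var filters []FilterPlugin
	fmt.Println(appendPlugin(&filters, evenLen{}))
	fmt.Println(appendPlugin(&filters, nameOnly{}))
	fmt.Println(len(filters))
}
```

Passing the slice by pointer matters: reflect.Value.Set only works on addressable values, and Elem on the pointer provides one.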

2.4 CycleState

CycleState is mainly responsible for the storage and cloning of data in the scheduling process. It exposes the read-write lock interface, and each extension point plug-in can lock independently according to its needs.

2.4.1 Data Structure

CycleState stores and copies StateData, with copying supported through the Clone interface. Data inside CycleState can be added and modified by every plug-in of the current framework. Thread safety is provided by the read-write lock, but plug-ins are not restricted in any way: all plug-ins are trusted and can add or delete data at will.

    type CycleState struct {
        mx      sync.RWMutex
        storage map[StateKey]StateData
        // if recordPluginMetrics is true, PluginExecutionDuration will be recorded for this cycle.
        recordPluginMetrics bool
    }

    // StateData is a generic type for arbitrary data stored in CycleState.
    type StateData interface {
        // Clone is an interface to make a copy of StateData. For performance reasons,
        // clone should make shallow copies for members (e.g., slices or maps) that are not
        // impacted by PreFilter's optional AddPod/RemovePod methods.
        Clone() StateData
    }

2.4.2 External Interface Implementation

The exported methods do not lock by themselves. A plug-in must explicitly take the read lock or the write lock first, and then read or modify the data.

    func (c *CycleState) Read(key StateKey) (StateData, error) {
        if v, ok := c.storage[key]; ok {
            return v, nil
        }
        return nil, errors.New(NotFound)
    }

    // Write stores the given "val" in CycleState with the given "key".
    // This function is not thread safe. In multi-threaded code, lock should be
    // acquired first.
    func (c *CycleState) Write(key StateKey, val StateData) {
        c.storage[key] = val
    }

    // Delete deletes data with the given key from CycleState.
    // This function is not thread safe. In multi-threaded code, lock should be
    // acquired first.
    func (c *CycleState) Delete(key StateKey) {
        delete(c.storage, key)
    }

    // Lock acquires CycleState lock.
    func (c *CycleState) Lock() {
        c.mx.Lock()
    }

    // Unlock releases CycleState lock.
    func (c *CycleState) Unlock() {
        c.mx.Unlock()
    }

    // RLock acquires CycleState read lock.
    func (c *CycleState) RLock() {
        c.mx.RLock()
    }

    // RUnlock releases CycleState read lock.
    func (c *CycleState) RUnlock() {
        c.mx.RUnlock()
    }
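A plug-in would typically wrap these calls in its own helpers. The following sketch re-declares a trimmed-down CycleState so that it runs on its own; podCount, countKey, writeCount, and readCount are hypothetical names, and the pattern (write under Lock in one phase, read under RLock in a later one) is my reading of how the API is meant to be used.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

type StateKey string

type StateData interface{ Clone() StateData }

// CycleState is a trimmed-down copy of the structure shown above.
type CycleState struct {
	mx      sync.RWMutex
	storage map[StateKey]StateData
}

func NewCycleState() *CycleState {
	return &CycleState{storage: make(map[StateKey]StateData)}
}

func (c *CycleState) Read(key StateKey) (StateData, error) {
	if v, ok := c.storage[key]; ok {
		return v, nil
	}
	return nil, errors.New("not found")
}

func (c *CycleState) Write(key StateKey, val StateData) { c.storage[key] = val }
func (c *CycleState) Lock()                             { c.mx.Lock() }
func (c *CycleState) Unlock()                           { c.mx.Unlock() }
func (c *CycleState) RLock()                            { c.mx.RLock() }
func (c *CycleState) RUnlock()                          { c.mx.RUnlock() }

// podCount is a hypothetical piece of per-cycle plug-in state.
type podCount struct{ n int }

func (p *podCount) Clone() StateData { c := *p; return &c }

const countKey StateKey = "example/pod-count"

// writeCount stores the count under the write lock (e.g. during PreFilter).
func writeCount(cs *CycleState, n int) {
	cs.Lock()
	defer cs.Unlock()
	cs.Write(countKey, &podCount{n: n})
}

// readCount loads the count under the read lock (e.g. during Filter).
func readCount(cs *CycleState) (int, error) {
	cs.RLock()
	defer cs.RUnlock()
	v, err := cs.Read(countKey)
	if err != nil {
		return 0, err
	}
	pc, ok := v.(*podCount)
	if !ok {
		return 0, errors.New("unexpected state type")
	}
	return pc.n, nil
}

func main() {
	cs := NewCycleState()
	writeCount(cs, 3)
	fmt.Println(readCount(cs))
}
```

The type assertion on read is needed because CycleState stores everything as StateData, and nothing stops another plug-in from writing a different type under the same key.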

2.5 waitingPodsMap and waitingPod

waitingPodsMap stores the pods that Permit plug-ins have asked to wait. Even after passing the earlier filtering and scoring phases, such a pod may still be rejected by some plug-in.

2.5.1 Data Structure

Internally, waitingPodsMap keeps the waiting pods in a map keyed by pod UID and protects the data with a read-write lock.

    type waitingPodsMap struct {
        pods map[types.UID]WaitingPod
        mu   sync.RWMutex
    }

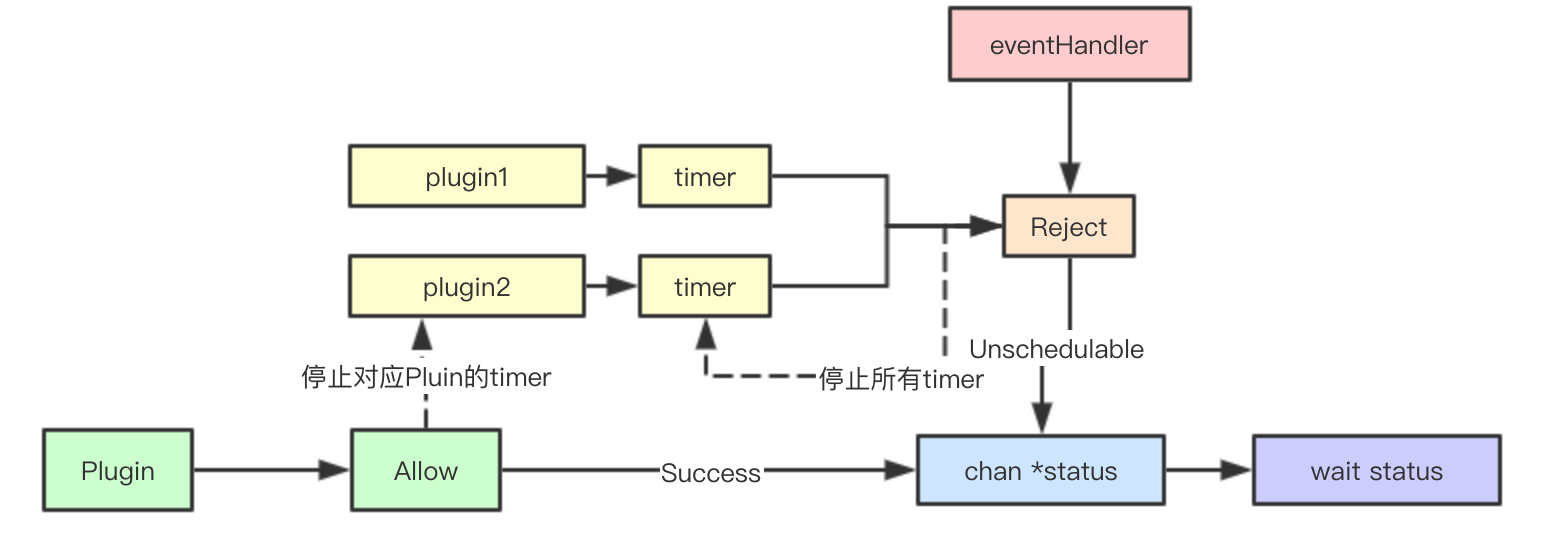

waitingPod is the waiting instance for one specific pod. Its pendingPlugins map holds one timer per plug-in, set to the wait time that plug-in requested; the chan *Status field receives the resulting status of the pod; and access is serialized by a read-write lock.

    type waitingPod struct {
        pod            *v1.Pod
        pendingPlugins map[string]*time.Timer
        s              chan *Status
        mu             sync.RWMutex
    }

2.5.2 Building waitingPod and Timers

One timer is built per plug-in, based on that plug-in's wait time. If any timer expires, the pod is rejected.

    func newWaitingPod(pod *v1.Pod, pluginsMaxWaitTime map[string]time.Duration) *waitingPod {
        wp := &waitingPod{
            pod: pod,
            s:   make(chan *Status),
        }

        wp.pendingPlugins = make(map[string]*time.Timer, len(pluginsMaxWaitTime))
        // The time.AfterFunc calls wp.Reject which iterates through pendingPlugins map. Acquire the
        // lock here so that time.AfterFunc can only execute after newWaitingPod finishes.
        wp.mu.Lock()
        defer wp.mu.Unlock()
        // Build a timer from each plug-in's wait time; if any timer expires before
        // every plug-in has called Allow, the pod is rejected.
        for k, v := range pluginsMaxWaitTime {
            plugin, waitTime := k, v
            wp.pendingPlugins[plugin] = time.AfterFunc(waitTime, func() {
                msg := fmt.Sprintf("rejected due to timeout after waiting %v at plugin %v",
                    waitTime, plugin)
                wp.Reject(msg)
            })
        }

        return wp
    }

2.5.3 Stop Timer Send Rejection Event

When any plug-in's timer expires, or a plug-in actively calls Reject, all timers are stopped and the rejection status is sent on the channel.

    func (w *waitingPod) Reject(msg string) bool {
        w.mu.RLock()
        defer w.mu.RUnlock()
        // Stop all timers
        for _, timer := range w.pendingPlugins {
            timer.Stop()
        }

        // Send the rejection event through the channel
        select {
        case w.s <- NewStatus(Unschedulable, msg):
            return true
        default:
            return false
        }
    }

2.5.4 Send Allow Scheduling Operations

Allow sends the success event only after every pending plug-in has allowed the pod.

    func (w *waitingPod) Allow(pluginName string) bool {
        w.mu.Lock()
        defer w.mu.Unlock()
        if timer, exist := w.pendingPlugins[pluginName]; exist {
            // Stop the timer for the current plugin
            timer.Stop()
            delete(w.pendingPlugins, pluginName)
        }

        // Only signal success status after all plugins have allowed
        if len(w.pendingPlugins) != 0 {
            return true
        }
        // The success event is sent only once every plugin has allowed
        select {
        case w.s <- NewStatus(Success, ""): // Send the event
            return true
        default:
            return false
        }
    }
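The interplay of timers, Allow, and Reject can be tried out with a stripped-down model. The waiter type and the demoAllow and demoTimeout helpers below are hypothetical. One deliberate difference from the code above: the result channel is buffered with capacity 1, so a decision is kept even if nobody is receiving yet, whereas the unbuffered channel in the original relies on a receiver already waiting.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// waiter is a simplified model of waitingPod: one timer per pending plug-in
// and a channel that carries the final decision as a string.
type waiter struct {
	mu      sync.Mutex
	pending map[string]*time.Timer
	result  chan string
}

func newWaiter(timeouts map[string]time.Duration) *waiter {
	w := &waiter{
		pending: make(map[string]*time.Timer, len(timeouts)),
		result:  make(chan string, 1), // buffered: an assumption of this sketch
	}
	// Hold the lock so a timer that fires immediately cannot run reject
	// before all timers are registered.
	w.mu.Lock()
	defer w.mu.Unlock()
	for name, d := range timeouts {
		name := name
		w.pending[name] = time.AfterFunc(d, func() { w.reject("timeout at " + name) })
	}
	return w
}

// reject stops every timer and publishes a rejection (first decision wins).
func (w *waiter) reject(msg string) {
	w.mu.Lock()
	defer w.mu.Unlock()
	for _, t := range w.pending {
		t.Stop()
	}
	select {
	case w.result <- "rejected: " + msg:
	default:
	}
}

// allow clears one plug-in; success is published only when none are pending.
func (w *waiter) allow(name string) {
	w.mu.Lock()
	defer w.mu.Unlock()
	if t, ok := w.pending[name]; ok {
		t.Stop()
		delete(w.pending, name)
	}
	if len(w.pending) != 0 {
		return
	}
	select {
	case w.result <- "allowed":
	default:
	}
}

// demoAllow shows that one allow is not enough when two plug-ins are pending.
func demoAllow() string {
	w := newWaiter(map[string]time.Duration{"a": time.Hour, "b": time.Hour})
	w.allow("a")
	select {
	case s := <-w.result:
		return "too early: " + s
	default:
	}
	w.allow("b")
	return <-w.result
}

// demoTimeout shows that a single expired timer rejects the pod.
func demoTimeout() string {
	w := newWaiter(map[string]time.Duration{"a": 10 * time.Millisecond, "b": time.Hour})
	return <-w.result
}

func main() {
	fmt.Println(demoAllow())
	fmt.Println(demoTimeout())
}
```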

2.5.5 Permit phase Wait implementation

All Permit plug-ins are run first. If any of them returns the Wait status, the framework then waits according to the wait times the plug-ins requested.

    func (f *framework) RunPermitPlugins(ctx context.Context, state *CycleState, pod *v1.Pod, nodeName string) (status *Status) {
        startTime := time.Now()
        defer func() {
            metrics.FrameworkExtensionPointDuration.WithLabelValues(permit, status.Code().String()).Observe(metrics.SinceInSeconds(startTime))
        }()
        pluginsWaitTime := make(map[string]time.Duration)
        statusCode := Success
        for _, pl := range f.permitPlugins {
            status, timeout := f.runPermitPlugin(ctx, pl, state, pod, nodeName)
            if !status.IsSuccess() {
                if status.IsUnschedulable() {
                    msg := fmt.Sprintf("rejected by %q at permit: %v", pl.Name(), status.Message())
                    klog.V(4).Infof(msg)
                    return NewStatus(status.Code(), msg)
                }
                if status.Code() == Wait {
                    // Not allowed to be greater than maxTimeout.
                    if timeout > maxTimeout {
                        timeout = maxTimeout
                    }
                    // Record the wait time for the current plugin
                    pluginsWaitTime[pl.Name()] = timeout
                    statusCode = Wait
                } else {
                    msg := fmt.Sprintf("error while running %q permit plugin for pod %q: %v", pl.Name(), pod.Name, status.Message())
                    klog.Error(msg)
                    return NewStatus(Error, msg)
                }
            }
        }

        // We now wait for the minimum duration if at least one plugin asked to
        // wait (and no plugin rejected the pod)
        if statusCode == Wait {
            startTime := time.Now()
            // Build the waitingPod from the plugins' wait times
            w := newWaitingPod(pod, pluginsWaitTime)
            // Add it to the waiting pod collection
            f.waitingPods.add(w)
            // Remove it again when this function returns
            defer f.waitingPods.remove(pod.UID)
            klog.V(4).Infof("waiting for pod %q at permit", pod.Name)
            // Wait for the status message
            s := <-w.s
            metrics.PermitWaitDuration.WithLabelValues(s.Code().String()).Observe(metrics.SinceInSeconds(startTime))
            if !s.IsSuccess() {
                if s.IsUnschedulable() {
                    msg := fmt.Sprintf("pod %q rejected while waiting at permit: %v", pod.Name, s.Message())
                    klog.V(4).Infof(msg)
                    return NewStatus(s.Code(), msg)
                }
                msg := fmt.Sprintf("error received while waiting at permit for pod %q: %v", pod.Name, s.Message())
                klog.Error(msg)
                return NewStatus(Error, msg)
            }
        }

        return nil
    }

2.6 Overview of Plugin Call Method Implementation

The sections above covered plug-in registration and the mechanisms for storing data and waiting during scheduling. What remains is how each type of plug-in is invoked. Apart from the scoring phase, the remaining stages contain almost no extra logic, and the scoring phase is similar to the design covered in the earlier articles of this series, so it is not repeated here.

2.6.1 RunPreFilterPlugins

The flow is straightforward. Note that if any plug-in here rejects the pod, scheduling fails immediately.

    func (f *framework) RunPreFilterPlugins(ctx context.Context, state *CycleState, pod *v1.Pod) (status *Status) {
        startTime := time.Now()
        defer func() {
            metrics.FrameworkExtensionPointDuration.WithLabelValues(preFilter, status.Code().String()).Observe(metrics.SinceInSeconds(startTime))
        }()
        for _, pl := range f.preFilterPlugins {
            status = f.runPreFilterPlugin(ctx, pl, state, pod)
            if !status.IsSuccess() {
                if status.IsUnschedulable() {
                    msg := fmt.Sprintf("rejected by %q at prefilter: %v", pl.Name(), status.Message())
                    klog.V(4).Infof(msg)
                    return NewStatus(status.Code(), msg)
                }
                msg := fmt.Sprintf("error while running %q prefilter plugin for pod %q: %v", pl.Name(), pod.Name, status.Message())
                klog.Error(msg)
                return NewStatus(Error, msg)
            }
        }

        return nil
    }

2.6.2 RunFilterPlugins

Similar to the previous one, except that the runAllFilters parameter determines whether all plug-ins are run. By default they are not: the function returns as soon as one plug-in fails.

    func (f *framework) RunFilterPlugins(
        ctx context.Context,
        state *CycleState,
        pod *v1.Pod,
        nodeInfo *schedulernodeinfo.NodeInfo,
    ) PluginToStatus {
        var firstFailedStatus *Status
        startTime := time.Now()
        defer func() {
            metrics.FrameworkExtensionPointDuration.WithLabelValues(filter, firstFailedStatus.Code().String()).Observe(metrics.SinceInSeconds(startTime))
        }()
        statuses := make(PluginToStatus)
        for _, pl := range f.filterPlugins {
            pluginStatus := f.runFilterPlugin(ctx, pl, state, pod, nodeInfo)
            if len(statuses) == 0 {
                firstFailedStatus = pluginStatus
            }
            if !pluginStatus.IsSuccess() {
                if !pluginStatus.IsUnschedulable() {
                    // Filter plugins are not supposed to return any status other than
                    // Success or Unschedulable.
                    firstFailedStatus = NewStatus(Error, fmt.Sprintf("running %q filter plugin for pod %q: %v", pl.Name(), pod.Name, pluginStatus.Message()))
                    return map[string]*Status{pl.Name(): firstFailedStatus}
                }
                statuses[pl.Name()] = pluginStatus
                if !f.runAllFilters {
                    // No need to run the remaining plug-ins; return early
                    return statuses
                }
            }
        }

        return statuses
    }
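The effect of runAllFilters is easy to demonstrate with a reduced model. In this sketch, namedFilter and runFilters are hypothetical, statuses are reduced to plain strings, and only the early-return behavior is reproduced.

```go
package main

import "fmt"

// namedFilter is a hypothetical, simplified filter plug-in.
type namedFilter struct {
	name string
	ok   func(node string) bool
}

// runFilters runs the filters in order and records each failure by plug-in
// name. With runAll set to false it returns at the first failure; with runAll
// set to true it keeps going and collects every failure.
func runFilters(filters []namedFilter, node string, runAll bool) map[string]string {
	failed := make(map[string]string)
	for _, f := range filters {
		if f.ok(node) {
			continue
		}
		failed[f.name] = "unschedulable"
		if !runAll {
			return failed
		}
	}
	return failed
}

func main() {
	filters := []namedFilter{
		{name: "always-ok", ok: func(string) bool { return true }},
		{name: "no-short-names", ok: func(n string) bool { return len(n) > 3 }},
		{name: "no-n-prefix", ok: func(n string) bool { return n[0] != 'n' }},
	}
	fmt.Println(len(runFilters(filters, "n1", false)))
	fmt.Println(len(runFilters(filters, "n1", true)))
}
```

Stopping early saves work on the common path, while running everything yields a complete list of reasons why a node was filtered out.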

That's all for today. The scheduler is still changing heavily, but it is foreseeable that more scheduling plug-ins will be concentrated in the framework. For a Kubernetes novice, studying the scheduler series is still a bit tedious, although the scheduler design is relatively loosely coupled with other modules.

Reference

Official design of kubernetes scheduler framework

> WeChat: baxiaoshi2020 (feedback, discussion, and sharing are welcome)
> Personal blog: www.sreguide.com
