1. Background introduction

When mobile live streaming apps took off around 2015, the dominant live streaming protocol was RTMP. The available media servers included Adobe's AMS, Wowza, Red5, crtmpserver and the nginx rtmp module, and the RTMP server SRS was also gaining popularity. On Android, players initially used ExoPlayer to play HLS, but HLS inherently has high latency, so most of us moved to the open source ijkplayer. ijkplayer pulls and decodes streams through FFmpeg, supports a wide range of audio and video codecs, is cross-platform, and keeps its API consistent with the system player. It later appeared in the live streaming SDKs of most major vendors.

At that time I was working on a game SDK. The SDK provided game video and audio capture, audio and video encoding and decoding, live stream publishing and live stream playback, giving games a built-in live broadcasting feature, with the ready-made ijkplayer used for playback. However, we ran into problems promoting the SDK: game vendors disliked its size (in fact, it was only about 3 MB in total). We needed a small, high-performance player, but development cost meant we never had time to build one. Later, while changing jobs, I spent a month developing this player and open sourced it. oarplayer is a player based on MediaCodec and SRS librtmp, completely independent of FFmpeg and implemented in pure C. This article describes the ideas behind its implementation.

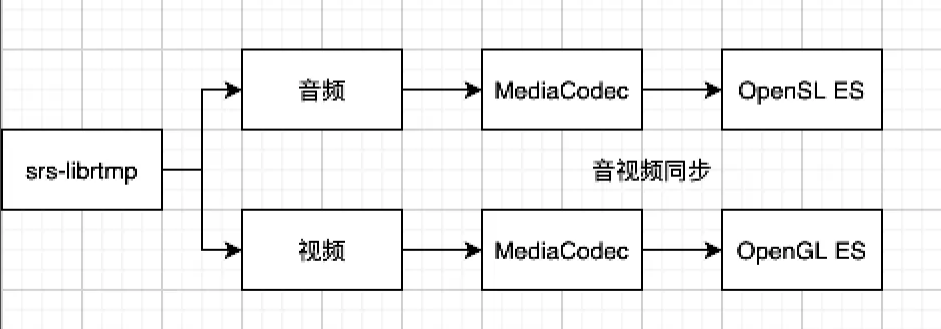

2. Overall architecture design

The overall playing process of the player is as follows:

The player pulls the live stream through SRS librtmp, separates the audio and video streams by packet type, and caches the packet data in packet queues. The decoding threads continuously read packets from the packet queues and submit them to the decoders, which store the decoded frames in frame queues. The OpenSL ES playback thread and the OpenGL ES rendering thread read frames from the frame queues for playback and rendering; audio and video synchronization also happens at this stage.

The player mainly involves the following threads:

- rtmp streaming thread;

- Audio decoding thread;

- Video decoding thread;

- Audio playback thread;

- Video rendering thread;

- JNI callback thread.

3. API interface design

RTMP playback takes the following steps:

- Instantiate OARPlayer: OARPlayer player = new OARPlayer();

- Set video source: player.setDataSource(rtmp_url);

- Set surface: player.setSurface(surfaceView.getHolder());

- Start playing: player.start();

- Stop playing: player.stop();

- Release resources: player.release();

The Java layer methods wrap the JNI layer methods, which in turn call the corresponding native functions.

4. rtmp streaming thread

oarplayer uses SRS librtmp, a client library exported from the SRS server. The SRS author gave these reasons for providing it:

- I find the code of rtmpdump/librtmp too hard to read, while the SRS code is very readable;

- The load-testing tool srs-bench is a client and needs a client library;

- I thought that if the server could be done well, the client would be no problem.

The author of SRS librtmp has since stopped maintaining it, for reasons stated as follows:

What determines the worth of an open source project is not how good the technology is, but how long it can keep going. Excellent technology, strong performance and a good code style are all good things, but none of them can make up for the cardinal sin of "not being maintained". If code is released and then left unmaintained, how can it keep up with the industry's continuous technical development? What determines how long a project can keep going is first technical enthusiasm, and then the maintainers' background. The SRS maintainers all have a server background: everyone works on servers and has too little client experience to maintain a client library in the long run. Therefore SRS decided to give up SRS librtmp, stop maintaining the client library, and focus on fast iteration of the server. This is not because the client is unimportant, but because it should be maintained by open source projects and people who specialize in clients. FFmpeg, for example, has its own RTMP implementation.

oarplayer originally chose SRS librtmp for the readability of its code. oarplayer is highly modular, so the RTMP library can easily be swapped for another implementation. The SRS librtmp interface is as follows:

- Create srs_rtmp_t object: srs_rtmp_create(url);

- Set read / write timeout: srs_rtmp_set_timeout;

- Start Handshake: srs_rtmp_handshake;

- Start connection: srs_rtmp_connect_app;

- Set playback mode: srs_rtmp_play_stream;

- Read audio and video packets in a loop: srs_rtmp_read_packet(rtmp, &type, &timestamp, &data, &size);

- Parse audio packet:

  - Get encoding type: srs_utils_flv_audio_sound_format;

  - Get audio sampling rate: srs_utils_flv_audio_sound_rate;

  - Get sampling bit depth: srs_utils_flv_audio_sound_size;

  - Get number of channels: srs_utils_flv_audio_sound_type;

  - Get audio packet type: srs_utils_flv_audio_aac_packet_type;

- Parse video packet:

  - Get encoding type: srs_utils_flv_video_codec_id;

  - Check whether it is a key frame: srs_utils_flv_video_frame_type;

  - Get video packet type: srs_utils_flv_video_avc_packet_type;

- Resolve metadata type;

- Destroy srs_rtmp_t object: srs_rtmp_destroy;
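The srs_utils_flv_* helpers listed above read fields from the first bytes of each FLV audio or video tag payload. As a rough illustration of what those fields are, the following sketch decodes the same bits by hand according to the FLV tag layout. It is not the SRS implementation; the struct and function names (flv_audio_info, flv_video_info, parse_flv_audio, parse_flv_video) are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical containers for the decoded header fields. */
typedef struct {
    int sound_format;    /* 10 = AAC, 2 = MP3, ... */
    int sound_rate;      /* 0 = 5.5 kHz, 1 = 11 kHz, 2 = 22 kHz, 3 = 44 kHz */
    int sound_size;      /* 0 = 8-bit samples, 1 = 16-bit samples */
    int sound_type;      /* 0 = mono, 1 = stereo */
    int aac_packet_type; /* 0 = sequence header, 1 = raw AAC; -1 if not AAC */
} flv_audio_info;

typedef struct {
    int frame_type;      /* 1 = key frame, 2 = inter frame */
    int codec_id;        /* 7 = AVC (H.264) */
    int avc_packet_type; /* 0 = sequence header, 1 = NALU; -1 if not AVC */
} flv_video_info;

/* Decode the audio tag header. Returns 0 on success, -1 if the data is too short. */
int parse_flv_audio(const uint8_t *data, size_t size, flv_audio_info *out) {
    assert(out != NULL);
    if (data == NULL || size < 1) return -1;
    out->sound_format = (data[0] >> 4) & 0x0F; /* upper 4 bits */
    out->sound_rate   = (data[0] >> 2) & 0x03; /* next 2 bits */
    out->sound_size   = (data[0] >> 1) & 0x01; /* next bit */
    out->sound_type   =  data[0]       & 0x01; /* lowest bit */
    out->aac_packet_type = -1;
    if (out->sound_format == 10) {             /* AAC carries one extra byte */
        if (size < 2) return -1;
        out->aac_packet_type = data[1];
    }
    return 0;
}

/* Decode the video tag header. Returns 0 on success, -1 if the data is too short. */
int parse_flv_video(const uint8_t *data, size_t size, flv_video_info *out) {
    assert(out != NULL);
    if (data == NULL || size < 1) return -1;
    out->frame_type = (data[0] >> 4) & 0x0F;   /* upper 4 bits */
    out->codec_id   =  data[0]       & 0x0F;   /* lower 4 bits */
    out->avc_packet_type = -1;
    if (out->codec_id == 7) {                  /* AVC carries one extra byte */
        if (size < 2) return -1;
        out->avc_packet_type = data[1];
    }
    return 0;
}
```

For example, an audio payload starting with 0xAF 0x00 is an AAC sequence header (44 kHz, 16-bit, stereo), and a video payload starting with 0x17 0x01 is an H.264 key frame carrying NAL units.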

There is a trick here. The pull thread calls srs_rtmp_read_packet in a loop, and its timeout can be set through srs_rtmp_set_timeout. If the timeout is too short, the thread wakes up frequently; if it is too long, stopping the player has to wait for the timeout to expire before the thread can actually exit. To avoid this we can use poll: add the RTMP TCP socket to the poll set together with the read end of a pipe. When we need to stop, we write a command to the pipe, which wakes up poll and lets the RTMP pull thread exit immediately.
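The wake-up trick can be sketched as follows. This is a minimal illustration using POSIX poll and pipe, not the oarplayer source: the names wait_for_data_or_stop and request_stop are hypothetical, and the sketch assumes the socket descriptor behind the RTMP connection is accessible, which may require a small change to the RTMP library.

```c
#include <assert.h>
#include <errno.h>
#include <poll.h>
#include <unistd.h>

typedef enum {
    WAIT_ERROR    = -1,
    WAIT_READABLE = 0,  /* the socket has data: go on and read a packet */
    WAIT_STOPPED  = 1,  /* a stop command arrived on the pipe */
    WAIT_TIMEOUT  = 2   /* nothing happened within timeout_ms */
} wait_result;

/* Block until sock_fd is readable, something is written to the pipe whose
 * read end is wake_fd, or timeout_ms elapses (-1 waits forever). */
wait_result wait_for_data_or_stop(int sock_fd, int wake_fd, int timeout_ms) {
    assert(sock_fd >= 0 && wake_fd >= 0);
    struct pollfd fds[2];
    fds[0].fd = sock_fd;
    fds[0].events = POLLIN;
    fds[0].revents = 0;
    fds[1].fd = wake_fd;
    fds[1].events = POLLIN;
    fds[1].revents = 0;

    int n;
    do {
        n = poll(fds, 2, timeout_ms);
    } while (n < 0 && errno == EINTR);   /* retry if interrupted by a signal */

    if (n < 0) return WAIT_ERROR;
    if (n == 0) return WAIT_TIMEOUT;
    /* A stop request takes priority over pending socket data. */
    if (fds[1].revents & (POLLIN | POLLHUP | POLLERR)) return WAIT_STOPPED;
    if (fds[0].revents & (POLLIN | POLLHUP | POLLERR)) return WAIT_READABLE;
    return WAIT_ERROR;
}

/* Called from another thread: write one byte to the pipe to wake the poller. */
int request_stop(int wake_write_fd) {
    const char cmd = 'q';
    return write(wake_write_fd, &cmd, 1) == 1 ? 0 : -1;
}
```

With this arrangement the poll timeout can be long, because stopping no longer depends on it: the pull thread calls wait_for_data_or_stop before each read and leaves its loop as soon as WAIT_STOPPED is returned.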

5. Main data structure

5.1 Packet structure

typedef struct OARPacket {

int size;//Packet size

PktType_e type;//Packet type

int64_t dts;//Decoding timestamp

int64_t pts;//Presentation timestamp

int isKeyframe;//Whether the packet is a key frame

struct OARPacket *next;//Next packet in the queue

uint8_t data[0];//Packet payload

}OARPacket;
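Because the payload is declared as a trailing array, a packet header and its data can live in one allocation. The sketch below shows one way such a packet might be allocated and filled; the function name oar_packet_alloc_sketch and the PktType_e values are assumptions made for illustration, and the struct is repeated (with the C99 spelling data[]) only to keep the example self-contained.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

typedef enum { PKT_TYPE_AUDIO, PKT_TYPE_VIDEO } PktType_e; /* assumed values */

typedef struct OARPacket {
    int size;
    PktType_e type;
    int64_t dts;
    int64_t pts;
    int isKeyframe;
    struct OARPacket *next;
    uint8_t data[];          /* C99 flexible array member, same role as data[0] */
} OARPacket;

/* Allocate header and payload in a single block and copy the payload in.
 * Returns NULL on invalid arguments or allocation failure.
 * The caller releases the packet with a single free(). */
OARPacket *oar_packet_alloc_sketch(PktType_e type, const uint8_t *payload, int size,
                                   int64_t dts, int64_t pts, int is_keyframe) {
    assert(size >= 0);
    if (size > 0 && payload == NULL) return NULL;
    OARPacket *pkt = malloc(sizeof(OARPacket) + (size_t) size);
    if (pkt == NULL) return NULL;
    pkt->size = size;
    pkt->type = type;
    pkt->dts = dts;
    pkt->pts = pts;
    pkt->isKeyframe = is_keyframe;
    pkt->next = NULL;
    if (size > 0) memcpy(pkt->data, payload, (size_t) size);
    return pkt;
}
```

A single allocation per packet keeps the queue node and its data together in memory and means each packet is released with exactly one free call.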

5.2 Packet queue

typedef struct oar_packet_queue {

PktType_e media_type;//Media type

pthread_mutex_t *mutex;//Mutex

pthread_cond_t *cond;//Condition variable

OARPacket *cachedPackets;//Head of the queue

OARPacket *lastPacket;//Last packet in the queue

int count;//Number of packets

int total_bytes;//Total bytes

uint64_t max_duration;//Maximum duration

void (*full_cb)(void *);//Queue-full callback

void (*empty_cb)(void *);//Queue-empty callback

void *cb_data;

} oar_packet_queue;
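A queue of this shape is typically used as a blocking producer/consumer queue: the pull thread appends packets and signals the condition variable, and a decoding thread waits on it until a packet is available. The sketch below illustrates that pattern with a deliberately simplified packet and queue. The demo_* names and the aborted flag are hypothetical, and the mutex and condition variable are stored by value rather than by pointer, so this is not the oarplayer implementation.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

typedef struct demo_packet {
    int size;                     /* payload size in bytes */
    struct demo_packet *next;
} demo_packet;

typedef struct demo_queue {
    pthread_mutex_t mutex;
    pthread_cond_t cond;
    demo_packet *head;            /* oldest packet */
    demo_packet *tail;            /* newest packet */
    int count;
    int total_bytes;
    int aborted;                  /* set when the player is stopping */
} demo_queue;

void demo_queue_init(demo_queue *q) {
    assert(q != NULL);
    pthread_mutex_init(&q->mutex, NULL);
    pthread_cond_init(&q->cond, NULL);
    q->head = q->tail = NULL;
    q->count = 0;
    q->total_bytes = 0;
    q->aborted = 0;
}

/* Producer side: append a packet and wake one waiting consumer. */
void demo_queue_put(demo_queue *q, demo_packet *pkt) {
    assert(q != NULL && pkt != NULL);
    pkt->next = NULL;
    pthread_mutex_lock(&q->mutex);
    if (q->tail != NULL) q->tail->next = pkt; else q->head = pkt;
    q->tail = pkt;
    q->count++;
    q->total_bytes += pkt->size;
    pthread_cond_signal(&q->cond);
    pthread_mutex_unlock(&q->mutex);
}

/* Consumer side: block until a packet is available or the queue is aborted.
 * Returns NULL only when the queue is empty and aborted. */
demo_packet *demo_queue_get(demo_queue *q) {
    assert(q != NULL);
    pthread_mutex_lock(&q->mutex);
    while (q->head == NULL && !q->aborted) {
        pthread_cond_wait(&q->cond, &q->mutex);
    }
    demo_packet *pkt = q->head;
    if (pkt != NULL) {
        q->head = pkt->next;
        if (q->head == NULL) q->tail = NULL;
        q->count--;
        q->total_bytes -= pkt->size;
        pkt->next = NULL;
    }
    pthread_mutex_unlock(&q->mutex);
    return pkt;
}

/* Wake every waiting consumer so the threads can exit. */
void demo_queue_abort(demo_queue *q) {
    assert(q != NULL);
    pthread_mutex_lock(&q->mutex);
    q->aborted = 1;
    pthread_cond_broadcast(&q->cond);
    pthread_mutex_unlock(&q->mutex);
}
```

The full_cb and empty_cb callbacks in the real structure would be natural places to pause the pull thread when the queue is full and to report buffering when it runs empty; they are left out here to keep the sketch short.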

5.3 Frame type

typedef struct OARFrame {

int size;//Frame size

PktType_e type;//Frame type

int64_t dts;//Decoding timestamp

int64_t pts;//Presentation timestamp

int format;//Format (for video)

int width;//Width (for video)

int height;//Height (for video)

int64_t pkt_pos;

int sample_rate;//Sample rate (for audio)

struct OARFrame *next;

uint8_t data[0];

}OARFrame;

5.4 Frame queue

typedef struct oar_frame_queue {

pthread_mutex_t *mutex;

pthread_cond_t *cond;

OARFrame *cachedFrames;

OARFrame *lastFrame;

int count;//Number of frames

unsigned int size;

} oar_frame_queue;

6. Decoding thread

Stream pulling, decoding, rendering and audio output are all implemented in the C layer. From Android API level 21 on, the NDK provides the AMediaCodec interface in the C layer: we locate the mediandk library with find_library in CMake and include the <media/NdkMediaCodec.h> header. For versions before API level 21, the Java layer MediaCodec can be called from the C layer. Both implementations are described below:

6.1 Java layer proxy decoding

Steps for Java layer MediaCodec decoding:

- Create decoder: codec = MediaCodec.createDecoderByType(codecName);

- Configure decoder format: codec.configure(format, null, null, 0);

- Start decoder: codec.start()

- Get input buffer index: dequeueInputBuffer

- Get input buffer: getInputBuffer

- Get output buffer index: dequeueOutputBuffer

- Release output buffer: releaseOutputBuffer

- Stop decoder: codec.stop();

The JNI layer wraps the corresponding calls.

6.2 Using the C layer decoder

The C layer interface is as follows:

- Create Format: AMediaFormat_new;

- Create decoder: AMediaCodec_createDecoderByType;

- Configure decoding parameters: AMediaCodec_configure;

- Start decoder: AMediaCodec_start;

- Input audio and video packets:

  - Get input buffer index: AMediaCodec_dequeueInputBuffer

  - Get input buffer: AMediaCodec_getInputBuffer

  - Copy data: memcpy

  - Queue input buffer into decoder: AMediaCodec_queueInputBuffer

- Get decoded frame:

  - Get output buffer index: AMediaCodec_dequeueOutputBuffer

  - Get output buffer: AMediaCodec_getOutputBuffer

As we can see, the Java layer and C layer interfaces follow the same pattern; in the end both call into the system's decoding framework.

Here we can choose between the Java layer interface and the C layer interface according to the system version. Since the main code of oarplayer is implemented in the C layer, we prefer the C layer interface.

7. Audio output thread

We use OpenSL ES for audio output. The Android audio architecture was introduced in the previous article. AAudio or Oboe could also be used. Here is a brief introduction to using OpenSL ES:

- Create engine: slCreateEngine(&engineObject, 0, NULL, 0, NULL, NULL);

- Realize engine: (*engineObject)->Realize(engineObject, SL_BOOLEAN_FALSE);

- Get engine interface: (*engineObject)->GetInterface(engineObject, SL_IID_ENGINE, &engineEngine);

- Create output mix: (*engineEngine)->CreateOutputMix(engineEngine, &outputMixObject, 0, NULL, NULL);

- Realize output mix: (*outputMixObject)->Realize(outputMixObject, SL_BOOLEAN_FALSE);

- Configure audio source: SLDataLocator_AndroidSimpleBufferQueue loc_bufq = {SL_DATALOCATOR_ANDROIDSIMPLEBUFFERQUEUE, 2};

- Configure format: SLDataFormat_PCM format_pcm = {SL_DATAFORMAT_PCM, channel, SL_SAMPLINGRATE_44_1, SL_PCMSAMPLEFORMAT_FIXED_16, SL_PCMSAMPLEFORMAT_FIXED_16, SL_SPEAKER_FRONT_LEFT | SL_SPEAKER_FRONT_RIGHT, SL_BYTEORDER_LITTLEENDIAN};

- Create player: (*engineEngine)->CreateAudioPlayer(engineEngine, &bqPlayerObject, &audioSrc, &audioSnk, 2, ids, req);

- Realize player: (*bqPlayerObject)->Realize(bqPlayerObject, SL_BOOLEAN_FALSE);

- Get play interface: (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_PLAY, &bqPlayerPlay);

- Get buffer queue interface: (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_ANDROIDSIMPLEBUFFERQUEUE, &bqPlayerBufferQueue);

- Register buffer queue callback: (*bqPlayerBufferQueue)->RegisterCallback(bqPlayerBufferQueue, bqPlayerCallback, oar);

- Get volume interface: (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_VOLUME, &bqPlayerVolume);

- In the buffer queue callback, keep reading data from the audio frame queue and enqueue it for playback: (*bqPlayerBufferQueue)->Enqueue(bqPlayerBufferQueue, ctx->buffer, (SLuint32) ctx->frame_size);

That covers the OpenSL ES interfaces used for audio playback.

8. Rendering thread

Compared with audio playback, video rendering is more complex. In addition to creating the OpenGL engine and the OpenGL thread, rendering must also handle audio-video synchronization, because oarplayer synchronizes video to the audio clock.

8.1 OpenGL engine creation

- Generate buffer: glGenBuffers

- Bind buffer: glBindBuffer(GL_ARRAY_BUFFER, model->vbos[0])

- Set clear color: glClearColor

- Creating texture objects: texture2D

- Create shader object: glCreateShader

- Set shader source code: glShaderSource

- Compile shader source code: glCompileShader

- Attach shader: glAttachShader

- Link program: glLinkProgram

OpenGL ES also needs an EGL environment to interact with the hardware. The EGL initialization code is shown below:

static void init_egl(oarplayer * oar){

oar_video_render_context *ctx = oar->video_render_ctx;

const EGLint attribs[] = {EGL_SURFACE_TYPE, EGL_WINDOW_BIT, EGL_RENDERABLE_TYPE,

EGL_OPENGL_ES2_BIT, EGL_BLUE_SIZE, 8, EGL_GREEN_SIZE, 8, EGL_RED_SIZE,

8, EGL_ALPHA_SIZE, 8, EGL_DEPTH_SIZE, 0, EGL_STENCIL_SIZE, 0,

EGL_NONE};

EGLint numConfigs;

ctx->display = eglGetDisplay(EGL_DEFAULT_DISPLAY);

EGLint majorVersion, minorVersion;

eglInitialize(ctx->display, &majorVersion, &minorVersion);

eglChooseConfig(ctx->display, attribs, &ctx->config, 1, &numConfigs);

ctx->surface = eglCreateWindowSurface(ctx->display, ctx->config, ctx->window, NULL);

EGLint attrs[] = {EGL_CONTEXT_CLIENT_VERSION, 2, EGL_NONE};

ctx->context = eglCreateContext(ctx->display, ctx->config, NULL, attrs);

EGLint err = eglGetError();

if (err != EGL_SUCCESS) {

LOGE("egl error");

}

if (eglMakeCurrent(ctx->display, ctx->surface, ctx->surface, ctx->context) == EGL_FALSE) {

LOGE("------EGL-FALSE");

}

eglQuerySurface(ctx->display, ctx->surface, EGL_WIDTH, &ctx->width);

eglQuerySurface(ctx->display, ctx->surface, EGL_HEIGHT, &ctx->height);

initTexture(oar);

oar_java_class * jc = oar->jc;

JNIEnv * jniEnv = oar->video_render_ctx->jniEnv;

jobject surface_texture = (*jniEnv)->CallStaticObjectMethod(jniEnv, jc->SurfaceTextureBridge, jc->texture_getSurface, ctx->texture[3]);

ctx->texture_window = ANativeWindow_fromSurface(jniEnv, surface_texture);

}

8.2 Audio and video synchronization

There are three common audio and video synchronization methods:

- Synchronize to the video clock;

- Synchronize to the audio clock;

- Synchronize to an external (third-party) clock.

Here we synchronize to the audio clock. Before rendering a frame, we compute diff, the video frame's timestamp minus the current audio timestamp, and compare it with the video frame period (about 33 ms). If diff is greater than or equal to the period, the frame is too early and we wait. If diff is greater than 0 but less than the period, we sleep for diff and then draw. If diff is less than or equal to 0, we draw immediately.

The rendering code is shown below:

/**

* Draw the next video frame, synchronized to the audio clock.

* @param oar player instance

* @return 0 draw

* -1 sleep 33ms continue

* -2 break

*/

static inline int draw_video_frame(oarplayer *oar) {

// There may have been no painting last time, so there is no need to take a new one

if (oar->video_frame == NULL) {

oar->video_frame = oar_frame_queue_get(oar->video_frame_queue);

}

// buffer empty ==> sleep 10ms , return 0

// eos ==> return -2

if (oar->video_frame == NULL) {

_LOGD("video_frame is null...");

usleep(BUFFER_EMPTY_SLEEP_US);

return 0;

}

int64_t time_stamp = oar->video_frame->pts;

int64_t diff = 0;

if(oar->metadata->has_audio){

diff = time_stamp - (oar->audio_clock->pts + oar->audio_player_ctx->get_delta_time(oar->audio_player_ctx));

}else{

diff = time_stamp - oar_clock_get(oar->video_clock);

}

_LOGD("time_stamp:%lld, clock:%lld, diff:%lld",time_stamp , oar_clock_get(oar->video_clock), diff);

oar_model *model = oar->video_render_ctx->model;

// diff >= 33ms if draw_mode == wait_frame return -1

// if draw_mode == fixed_frequency draw previous frame ,return 0

// diff > 0 && diff < 33ms sleep(diff) draw return 0

// diff <= 0 draw return 0

if (diff >= WAIT_FRAME_SLEEP_US) {

if (oar->video_render_ctx->draw_mode == wait_frame) {

return -1;

} else {

draw_now(oar->video_render_ctx);

return 0;

}

} else {

// if diff > WAIT_FRAME_SLEEP_US then use previous frame

// else use current frame and release frame

// LOGI("start draw...");

pthread_mutex_lock(oar->video_render_ctx->lock);

model->update_frame(model, oar->video_frame);

pthread_mutex_unlock(oar->video_render_ctx->lock);

oar_player_release_video_frame(oar, oar->video_frame);

JNIEnv * jniEnv = oar->video_render_ctx->jniEnv;

(*jniEnv)->CallStaticVoidMethod(jniEnv, oar->jc->SurfaceTextureBridge, oar->jc->texture_updateTexImage);

jfloatArray texture_matrix_array = (*jniEnv)->CallStaticObjectMethod(jniEnv, oar->jc->SurfaceTextureBridge, oar->jc->texture_getTransformMatrix);

(*jniEnv)->GetFloatArrayRegion(jniEnv, texture_matrix_array, 0, 16, model->texture_matrix);

(*jniEnv)->DeleteLocalRef(jniEnv, texture_matrix_array);

if (diff > 0) usleep((useconds_t) diff);

draw_now(oar->video_render_ctx);

oar_clock_set(oar->video_clock, time_stamp);

return 0;

}

}

9. Summary

Following the implementation of an RTMP player on Android, this article has introduced the RTMP push/pull library, the Android MediaCodec Java layer and C layer interfaces, the OpenSL ES, OpenGL ES and EGL interfaces, and related audio and video knowledge.