Window operations on data

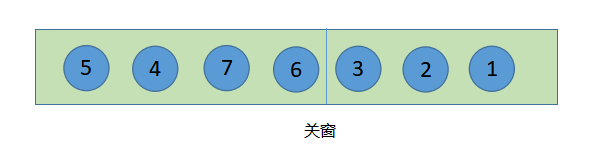

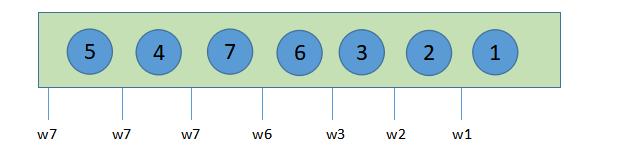

In windowed operations on streaming data, a window is closed once event time passes the end of the window. In a real production environment, however, network delays and other causes can make some data arrive late, so events reach the system out of order. In the figure, the number in each circle is the event's time. If you apply a 5-second tumbling window to this data, then when the event at second 6 arrives, the system considers the 0-5 second window closed, and the event at second 4, which arrives after it, is lost.
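
The closing rule can be sketched in plain Scala. This is a simplified model, not Flink code: it assumes millisecond timestamps, a watermark equal to the largest timestamp seen so far (no out-of-orderness allowed), a watermark that advances right after each element, and a window `[start, start + 5000)` that fires once the watermark reaches its last millisecond, `start + 4999` (Flink's `TimeWindow.maxTimestamp()` is likewise the window end minus 1). `TumblingWindowDrop` and its methods are hypothetical names.

```scala
object TumblingWindowDrop {
  val WindowSize = 5000L // 5-second tumbling window, in milliseconds

  // Start of the tumbling window that contains ts (zero offset, ts >= 0).
  def windowStart(ts: Long): Long = ts - ts % WindowSize

  // Replays events in arrival order. The watermark is the largest timestamp
  // seen so far. Returns (fired windows: start -> sorted timestamps,
  // timestamps dropped because their window had already fired).
  def replay(events: Seq[Long]): (Map[Long, List[Long]], List[Long]) = {
    var watermark = Long.MinValue
    var open = Map.empty[Long, List[Long]]  // window start -> buffered timestamps
    var fired = Map.empty[Long, List[Long]]
    var dropped = List.empty[Long]
    for (ts <- events) {
      val start = windowStart(ts)
      if (start + WindowSize - 1 <= watermark) {
        dropped = dropped :+ ts // its window is already closed: the element is lost
      } else {
        open = open.updated(start, ts :: open.getOrElse(start, Nil))
      }
      watermark = math.max(watermark, ts)
      // Fire and close every window whose last millisecond the watermark has reached.
      val ready = open.filter { case (s, _) => s + WindowSize - 1 <= watermark }
      fired = fired ++ ready.map { case (s, buffered) => s -> buffered.sorted }
      open = open -- ready.keys
    }
    (fired, dropped)
  }
}
```

Replaying a hypothetical arrival order of 1, 2, 3, 6, 4 seconds, `TumblingWindowDrop.replay(Seq(1000L, 2000L, 3000L, 6000L, 4000L))` fires the window starting at 0 with the first three events and reports `4000` as dropped.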

In this case, besides allowed lateness (`allowedLateness`) and side outputs for late data, you can also use watermarks. Watermarks handle out-of-order data by defining the point in time at which a window closes.
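
How these options differ can be modelled as classifying one element against its window. The sketch below is our own simplified model with hypothetical names, assuming the rule as we understand it from Flink's window operator: the window fires when the watermark reaches `end - 1`, and an element is discarded only once `end - 1 + allowedLateness <= watermark`; if `sideOutputLateData` is configured, such an element is emitted to the side output instead of being discarded.

```scala
object LatenessSketch {
  sealed trait Outcome
  case object OnTime extends Outcome         // the window has not fired yet
  case object LateButAllowed extends Outcome // window already fired; element is added and the window fires again
  case object TooLate extends Outcome        // discarded, or sent to the late-data side output if configured

  /** windowEnd is exclusive; all values are in milliseconds. */
  def classify(windowEnd: Long, watermark: Long, allowedLateness: Long): Outcome = {
    val maxTs = windowEnd - 1 // last timestamp that belongs to the window
    if (watermark < maxTs) OnTime
    else if (maxTs + allowedLateness > watermark) LateButAllowed
    else TooLate
  }
}
```

A watermark delay acts one step earlier: it lowers the `watermark` value itself, so the window simply has not fired yet when the out-of-order element arrives.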

What is a watermark

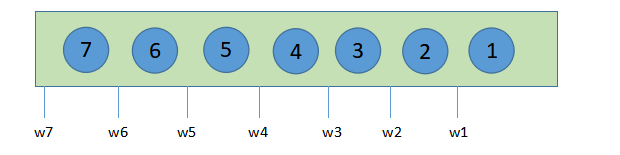

Literally, a watermark is a water-level line, like the level marks used to measure water in real life, and in Flink's semantics a watermark can likewise be understood as a time scale. Take a simple example: a flight route starts at 10 a.m. every day, with a flight every half hour. Each departure time can be understood as a watermark, and the passengers as events. If every passenger arrives before take-off, they all fly to their destination normally; this is the water level of ordered events. As shown in the figure below, events 1 through 7 all reach their own water level in time. If a passenger is late, the plane does not wait for him and leaves without him; this is more like the water level of out-of-order events, shown by events 4 and 5 in the second figure below. If events 4 and 5 are not handled, their data will be lost.

Ordered event timeline

Out-of-order events and the water level

Watermark test

```scala
package com.stanley.flink

/**
  * Order sample class.
  * @param timestamp event time, in seconds
  * @param category  commodity category
  * @param price     price
  */
case class Order(timestamp: Long, category: String, price: Double)
```

```scala
package com.stanley.flink

import org.apache.flink.api.java.tuple.{Tuple, Tuple1}
import org.apache.flink.streaming.api.TimeCharacteristic
import org.apache.flink.streaming.api.functions.timestamps.BoundedOutOfOrdernessTimestampExtractor
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.api.scala.function.WindowFunction
import org.apache.flink.streaming.api.windowing.time.Time
import org.apache.flink.streaming.api.windowing.windows.TimeWindow
import org.apache.flink.util.Collector

object WatermarkTest {

  def main(args: Array[String]): Unit = {
    val env = StreamExecutionEnvironment.getExecutionEnvironment
    // Use event time instead of processing time
    env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)
    // Parallelism 1: a single watermark generator sees every event
    env.setParallelism(1)

    // Read comma-separated lines "timestamp,category,price" from a socket
    val inputStream: DataStream[String] = env.socketTextStream("node1", 9999)

    // Parse each line into an Order
    val dataStream: DataStream[Order] = inputStream.map { str =>
      val fields = str.split(",")
      Order(fields(0).trim.toLong, fields(1).trim, fields(2).trim.toDouble)
    }

    val outputStream: DataStream[Order] = dataStream
      // Assign timestamps and watermarks with a maximum out-of-orderness of 0 s,
      // so the watermark equals the largest event time seen so far
      .assignTimestampsAndWatermarks(
        new BoundedOutOfOrdernessTimestampExtractor[Order](Time.seconds(0)) {
          // Event time is given in seconds; Flink expects milliseconds
          override def extractTimestamp(t: Order): Long = t.timestamp * 1000L
        })
      .keyBy("category")
      .timeWindow(Time.seconds(5))
      .apply(new MyWindowFunction)

    dataStream.print("Data")
    outputStream.print("Result")
    env.execute("WatermarkTest")
  }
}

// Sums the prices of all orders of one category in a window and emits one Order
// stamped with the window's max timestamp (window end - 1, in milliseconds)
class MyWindowFunction extends WindowFunction[Order, Order, Tuple, TimeWindow] {

  override def apply(key: Tuple, window: TimeWindow, input: Iterable[Order], out: Collector[Order]): Unit = {
    val timestamp = window.maxTimestamp()
    val sum = input.map(_.price).sum
    // keyBy("category") yields a Java Tuple1 key; extract the category string
    val category: String = key.asInstanceOf[Tuple1[String]].f0
    out.collect(Order(timestamp, category, sum))
  }
}
```
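
To check what `MyWindowFunction` should print for a window without running a job, its per-window aggregation can be mirrored in plain Scala. This is a hypothetical helper, not part of the job: it assumes the same `timestamp,category,price` line format the `map` above parses, timestamps in seconds, and 5-second tumbling windows. It ignores watermarks entirely, so it only shows the expected result when no element is late; the sample lines in the usage note are made up.

```scala
object WindowSumSketch {
  // Same shape as the Order case class above.
  case class Order(timestamp: Long, category: String, price: Double)

  def parse(line: String): Order = {
    val f = line.split(",").map(_.trim)
    Order(f(0).toLong, f(1), f(2).toDouble)
  }

  // Groups orders into tumbling windows (in seconds) and sums price per category.
  // Each result carries the window's max timestamp in milliseconds, as
  // MyWindowFunction does via window.maxTimestamp().
  def aggregate(orders: Seq[Order], windowSizeSec: Long = 5L): List[Order] =
    orders
      .groupBy(o => (o.timestamp - o.timestamp % windowSizeSec, o.category))
      .toList
      .map { case ((startSec, category), group) =>
        val maxTimestampMs = (startSec + windowSizeSec) * 1000L - 1
        Order(maxTimestampMs, category, group.map(_.price).sum)
      }
      .sortBy(o => (o.timestamp, o.category))
}
```

For example, the lines `385,food,10.0`, `386,food,5.5`, `387,book,20.0` and `390,food,1.0` give `book = 20.0` and `food = 15.5` for the window ending at 389999 ms, and `food = 1.0` for the next window.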

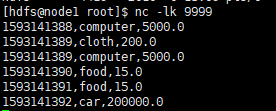

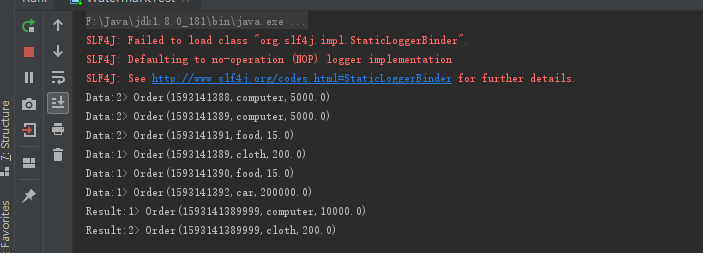

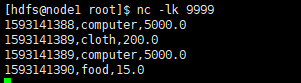

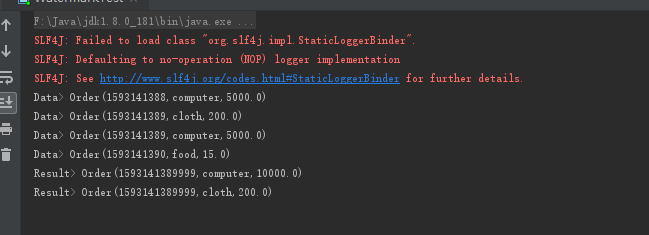

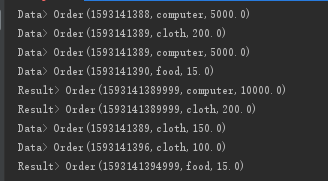

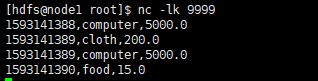

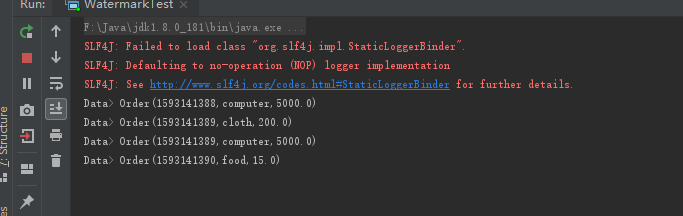

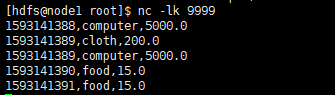

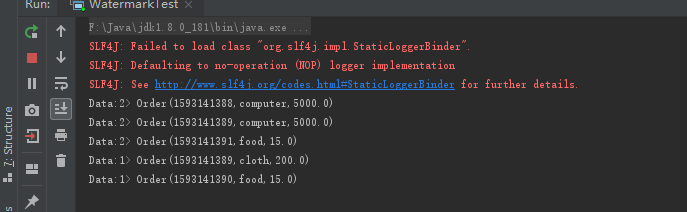

Enter the following four records. When an event with time 390 arrives, the window closes: the events with timestamps 385-389 are grouped by category and aggregated, while the 390 event belongs to the next window, as the console shows. At this point the watermark is 390. If events earlier than 390 are entered again, they are lost unless late data is handled.

Only the food record at 390 is aggregated; the 389 record is lost.

How to handle late data with watermarks

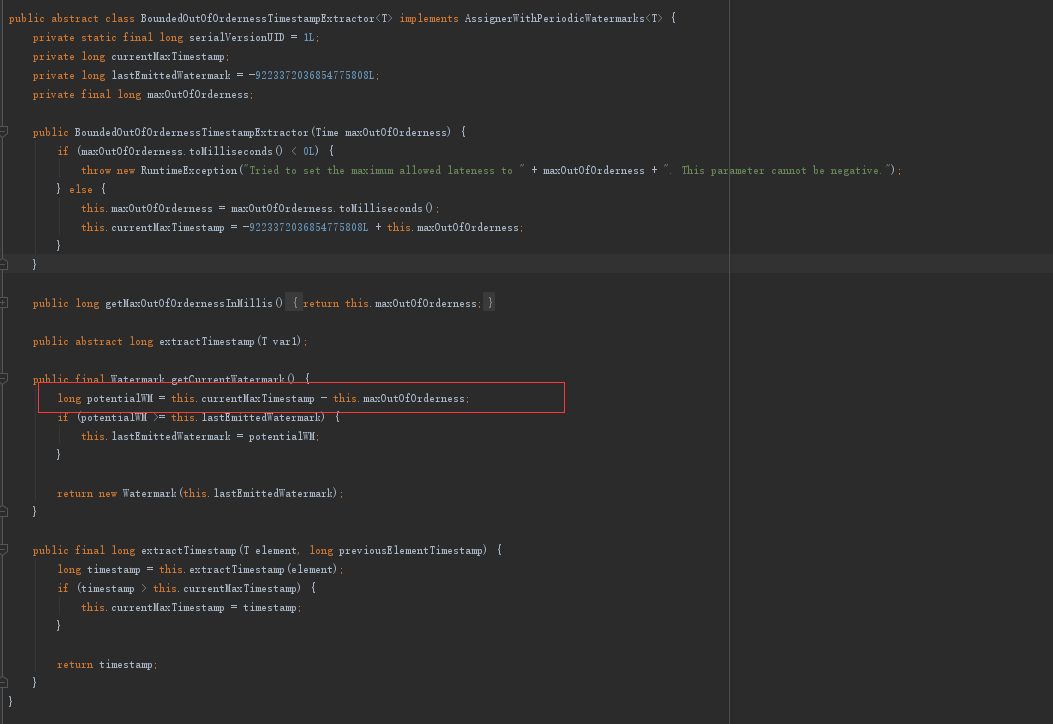

The watermark extractor takes a time value, the maximum out-of-orderness, that delays the arrival of the watermark. The source code shows that the watermark is the largest event time seen so far minus the maximum out-of-orderness.

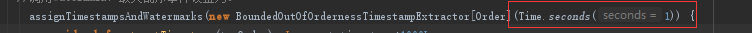
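
The rule in that source code can be restated as a small class. This is a hypothetical plain-Scala re-statement, not Flink's class, of what `BoundedOutOfOrdernessTimestampExtractor` does as we read the Flink 1.x source: track the largest timestamp seen, emit `currentMax - maxOutOfOrderness`, and never let the emitted watermark move backwards. Check it against the version you use.

```scala
// Hypothetical re-statement of the bounded-out-of-orderness rule; not Flink's class.
final class BoundedWatermarkSketch(maxOutOfOrdernessMs: Long) {
  // Start low enough that "currentMax - maxOutOfOrderness" cannot underflow.
  private var currentMax: Long = Long.MinValue + maxOutOfOrdernessMs
  private var lastEmitted: Long = Long.MinValue

  /** Records an element's event time (ms) and returns it unchanged. */
  def onEvent(timestampMs: Long): Long = {
    if (timestampMs > currentMax) currentMax = timestampMs
    timestampMs
  }

  /** Largest timestamp seen minus the allowed out-of-orderness, never decreasing. */
  def currentWatermark(): Long = {
    val candidate = currentMax - maxOutOfOrdernessMs
    if (candidate >= lastEmitted) lastEmitted = candidate
    lastEmitted
  }
}
```

With a 1000 ms bound, an event at 390000 ms yields a watermark of 389000; a later out-of-order event at 385000 ms leaves it at 389000, and an event at 391000 ms moves it to 390000.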

Increase the maximum out-of-orderness to delay the watermark:

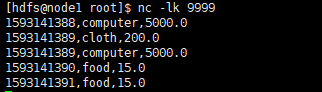

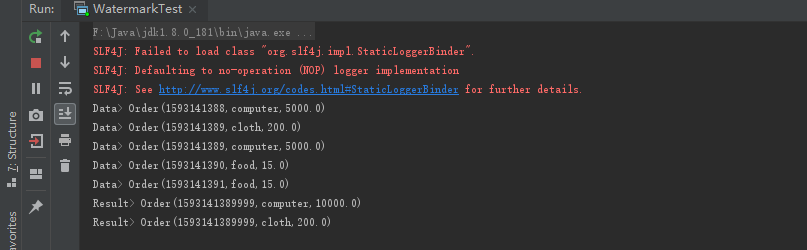

Input the same data again. This time the console prints no result, because the maximum out-of-orderness is set to 1 second, so the watermark is only 389.

When an event with time 391 arrives, the watermark reaches 390 and the 385-389 time window closes.
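
The two runs differ only in the delay subtracted from the largest timestamp. The hypothetical helper below (not Flink API) reports which arriving event makes the window `[385 s, 390 s)` fire. It simplifies by updating the watermark right after every element, whereas Flink emits watermarks periodically by default, and the input events are made up.

```scala
object DelayedFiringSketch {
  /**
   * Replays event times (seconds, in arrival order) through a
   * bounded-out-of-orderness watermark and returns the first event whose
   * arrival lets the watermark reach the last millisecond of the window
   * [startSec, startSec + sizeSec), or None if that never happens.
   */
  def firingEvent(eventsSec: Seq[Long], startSec: Long, sizeSec: Long, maxOutOfOrdernessMs: Long): Option[Long] = {
    val windowMaxTs = (startSec + sizeSec) * 1000L - 1 // like TimeWindow.maxTimestamp()
    // Largest timestamp (ms) seen after each event.
    val maxSeen = eventsSec.scanLeft(Long.MinValue)((m, sec) => math.max(m, sec * 1000L)).tail
    eventsSec.zip(maxSeen).collectFirst {
      case (sec, m) if m - maxOutOfOrdernessMs >= windowMaxTs => sec
    }
  }
}
```

With the events `Seq(386L, 388L, 389L, 390L, 391L)`, the window fires at event 390 when the delay is 0 and at event 391 when the delay is 1000 ms, matching the two runs above.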

How the downstream watermark is determined when tasks run in parallel

When several upstream tasks run in parallel, the downstream operator uses the lowest of the watermarks it receives from them.
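
The combining rule can be sketched as a small class. This is a simplified, hypothetical model (not Flink's implementation): it keeps the latest watermark per input channel, treats a channel that has not reported yet as `Long.MinValue`, and ignores idle-channel handling, which Flink also takes into account.

```scala
// Hypothetical model of an operator with several input channels.
final class InputWatermarksSketch(numChannels: Int) {
  private val perChannel = Array.fill(numChannels)(Long.MinValue)
  private var combined = Long.MinValue

  /** Records a watermark from one channel and returns the combined watermark. */
  def update(channel: Int, watermark: Long): Long = {
    // A channel's watermark never moves backwards.
    perChannel(channel) = math.max(perChannel(channel), watermark)
    // The operator can only advance as far as its slowest input.
    combined = math.max(combined, perChannel.min)
    combined
  }
}
```

A channel that has sent nothing holds the combined watermark at `Long.MinValue`, which is why one slow parallel instance can keep every downstream window open.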

Set the parallelism of the code to 2

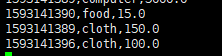

Input the same data again. The watermark of slot2 has reached 390, while the watermark of slot1 is still 389, so the downstream watermark stays at 389 and no data is output.

Then input an event with time 392. It is distributed round-robin to slot1, whose watermark becomes 391; the watermark of slot2 is 390, so the downstream watermark becomes 390, the window closes, and the result data is output.
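
The whole parallel run can be replayed in plain Scala. This hypothetical sketch assumes a 1-second out-of-orderness bound, made-up input events, and strict round-robin distribution starting at subtask 0 (Flink's rebalance chooses its own starting channel), with each subtask's watermark updated right after each element.

```scala
object ParallelWatermarkSketch {
  /**
   * Replays event times (seconds) through `parallelism` watermark-assigning
   * subtasks fed in strict round-robin order. Each subtask's watermark is its
   * largest timestamp (ms) minus maxOutOfOrdernessMs; the window operator uses
   * the minimum. Returns, after each event, (per-subtask watermarks, downstream watermark).
   */
  def replay(eventsSec: Seq[Long], parallelism: Int, maxOutOfOrdernessMs: Long): Seq[(Vector[Long], Long)] = {
    val maxSeen = Array.fill(parallelism)(Long.MinValue)
    eventsSec.zipWithIndex.map { case (sec, i) =>
      val subtask = i % parallelism // round-robin assignment (assumption)
      maxSeen(subtask) = math.max(maxSeen(subtask), sec * 1000L)
      // A subtask that has seen nothing yet has no watermark (Long.MinValue).
      val perSubtask = maxSeen.toVector.map(m => if (m == Long.MinValue) m else m - maxOutOfOrdernessMs)
      (perSubtask, perSubtask.min)
    }
  }
}
```

With the events 388, 389, 390, 391 and 392 and two subtasks, after 391 the subtask watermarks are 389000 and 390000, so the downstream watermark is 389000 and the window `[385 s, 390 s)`, whose last millisecond is 389999, stays open. After 392 the first subtask moves to 391000, the downstream watermark becomes 390000, and the window fires.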