1. PG introduction

This time, I'd like to share the detailed explanation of various states of PG in Ceph. PG is one of the most complex and difficult concepts. The complexity of PG is as follows:

- At the architecture level, PG is in the middle of the RADOS layer.

a. Up is responsible for receiving and processing requests from clients.

b. The next step is to translate these data requests into transactions that can be understood by the local object store. - It is the basic unit of the storage pool. Many features in the storage pool are directly implemented by PG.

- The backup strategy for disaster recovery domain makes the general PG need to perform distributed write across nodes, so the synchronization of data between different nodes and the recovery of data also depend on PG.

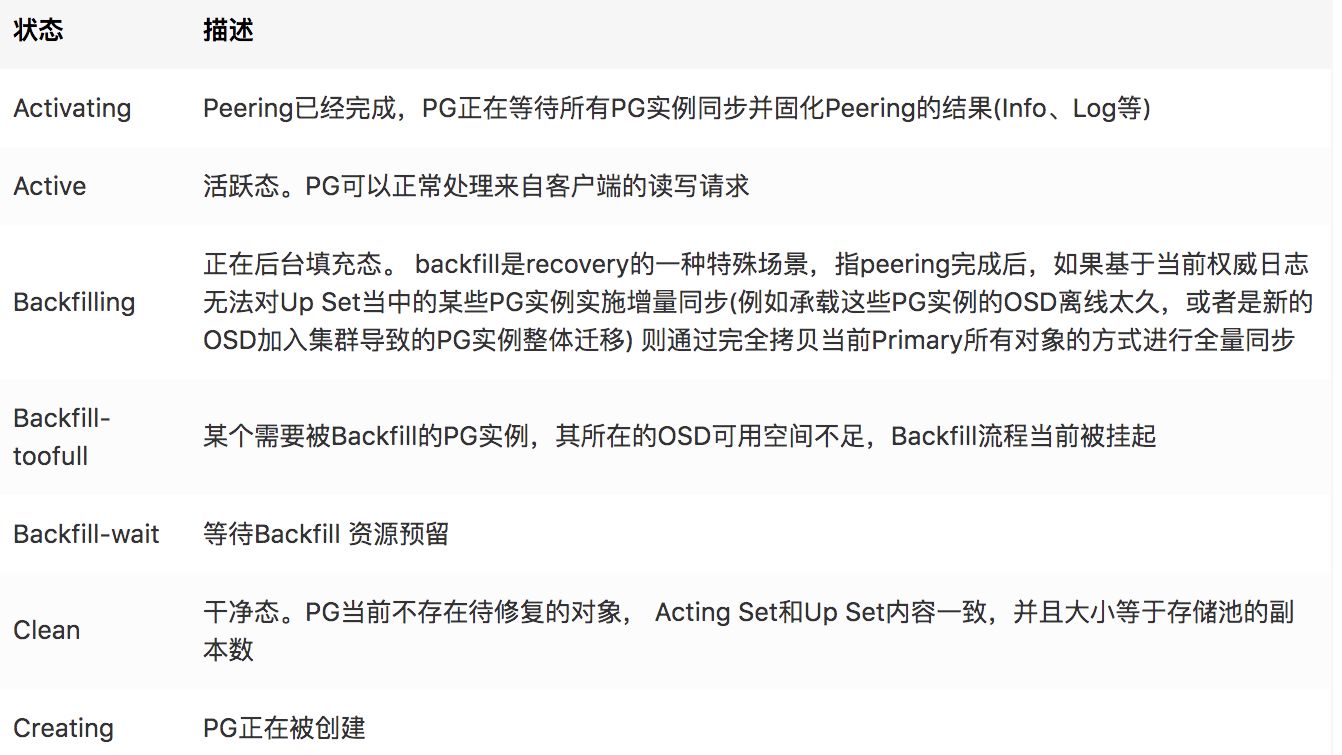

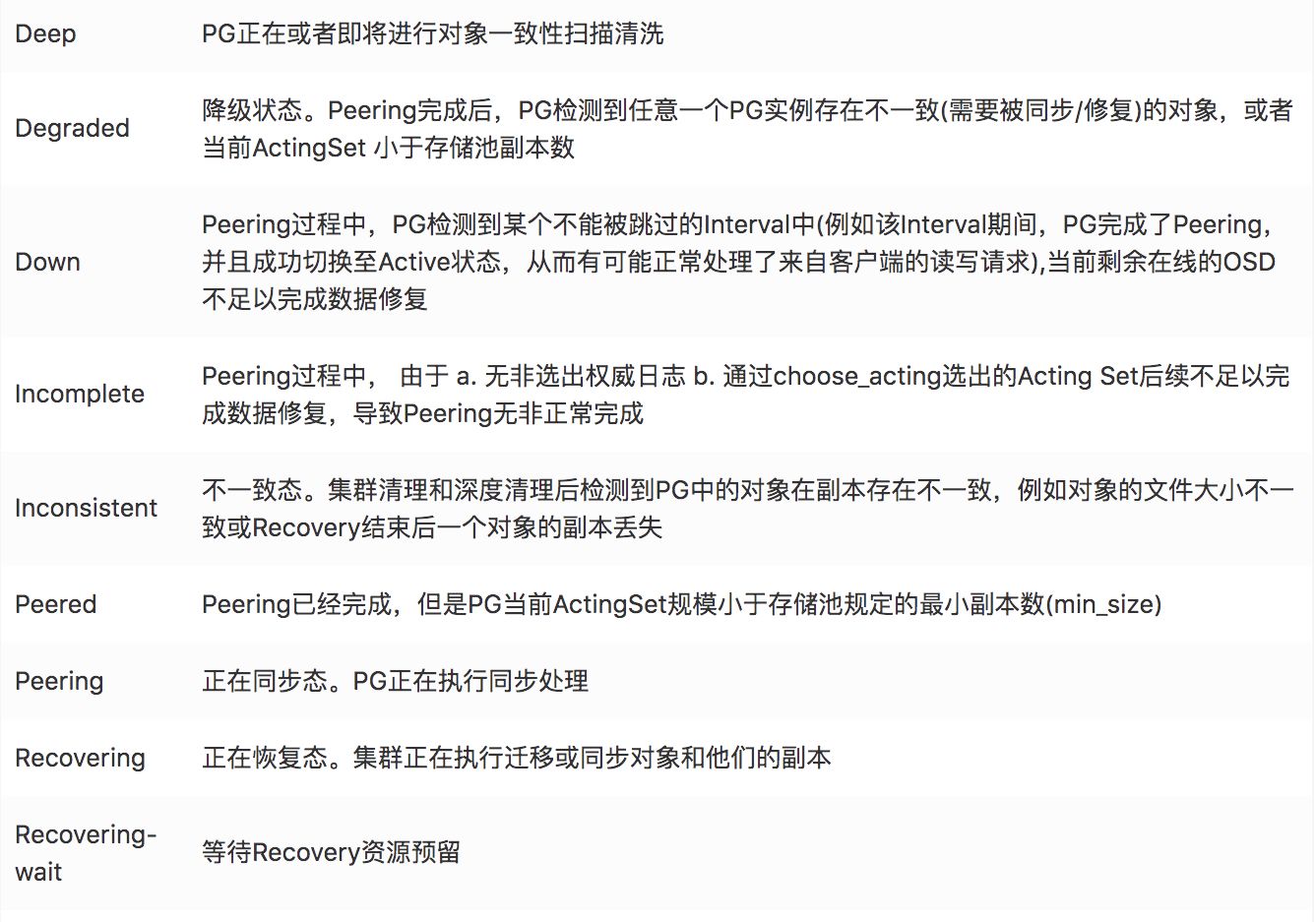

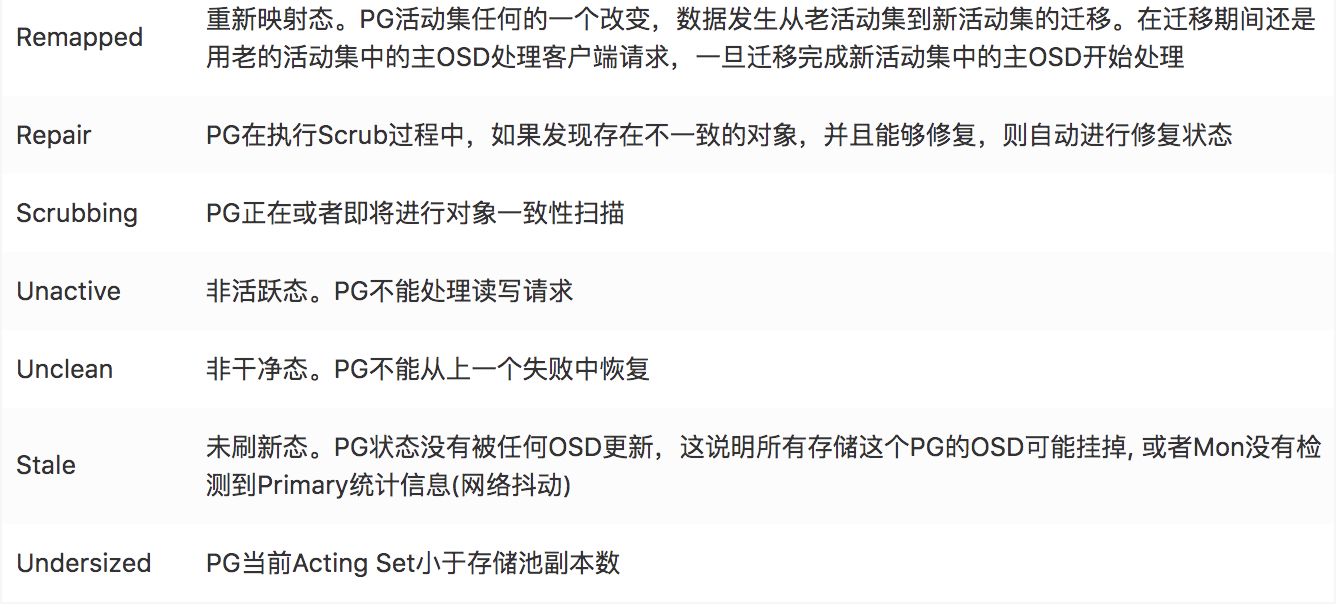

2. PG status table

The normal PG status is 100% active + clean, which means all PGs are accessible and all replicas are available to all PGs.

If Ceph also reports other warning or error status for PG. PG status table:

3.1 Degraded

3.1.1 description

- Degradation: as can be seen from the above, each PG has three copies, which are saved in different OSDs. In the non fault situation, this PG is in the active+clean state. Then, if the copy osd.4 of PG is hung up, this PG is in the degraded state.

3.1.2 fault simulation

a. Stop osd.1

$ systemctl stop ceph-osd@1

b. View PG status

$ bin/ceph pg stat 20 pgs: 20 active+undersized+degraded; 14512 kB data, 302 GB used, 6388 GB / 6691 GB avail; 12/36 objects degraded (33.333%)

c. View cluster monitoring status

$ bin/ceph health detail HEALTH_WARN 1 osds down; Degraded data redundancy: 12/36 objects degraded (33.333%), 20 pgs unclean, 20 pgs degraded; application not enabled on 1 pool(s) OSD_DOWN 1 osds down osd.1 (root=default,host=ceph-xx-cc00) is down PG_DEGRADED Degraded data redundancy: 12/36 objects degraded (33.333%), 20 pgs unclean, 20 pgs degraded pg 1.0 is active+undersized+degraded, acting [0,2] pg 1.1 is active+undersized+degraded, acting [2,0]

d. Client IO operation

#Write object $ bin/rados -p test_pool put myobject ceph.conf #Read object to file $ bin/rados -p test_pool get myobject.old #see file $ ll ceph.conf* -rw-r--r-- 1 root root 6211 Jun 25 14:01 ceph.conf -rw-r--r-- 1 root root 6211 Jul 3 19:57 ceph.conf.old

Fault summary:

In order to simulate a fault, (size = 3, min_size = 2) we manually stop osd.1, and then check the PG status. It can be seen that its current status is active + interpreted + degraded. When the OSD where a PG is hangs up, the PG will enter the interpreted + degraded status. The meaning of the [0,2] on the back side is that there are two copies surviving on osd.0 and osd.2, At this time, the client can read and write IO normally.

3.1.3 summary

- Degradation refers to that Ceph marks all PG on the OSD as Degraded after some failures such as OSD hang up.

- The degraded cluster can read and write data normally. The degraded PG is just a minor problem, not a serious problem.

- Undersized means that the current number of surviving PG copies is 2, which is less than 3. Make this mark to indicate that the number of inventory copies is insufficient, which is not a serious problem.

3.2 Peered

3.2.1 description

- Peering has completed, but PG's current Acting Set size is smaller than the minimum number of replicas specified by the storage pool.

3.2.2 fault simulation

a. Stop two copies of OSD. 1 and OSD. 0

$ systemctl stop ceph-osd@1 $ systemctl stop ceph-osd@0

b. View cluster health status

$ bin/ceph health detail HEALTH_WARN 1 osds down; Reduced data availability: 4 pgs inactive; Degraded data redundancy: 26/39 objects degraded (66.667%), 20 pgs unclean, 20 pgs degraded; application not enabled on 1 pool(s) OSD_DOWN 1 osds down osd.0 (root=default,host=ceph-xx-cc00) is down PG_AVAILABILITY Reduced data availability: 4 pgs inactive pg 1.6 is stuck inactive for 516.741081, current state undersized+degraded+peered, last acting [2] pg 1.10 is stuck inactive for 516.737888, current state undersized+degraded+peered, last acting [2] pg 1.11 is stuck inactive for 516.737408, current state undersized+degraded+peered, last acting [2] pg 1.12 is stuck inactive for 516.736955, current state undersized+degraded+peered, last acting [2] PG_DEGRADED Degraded data redundancy: 26/39 objects degraded (66.667%), 20 pgs unclean, 20 pgs degraded pg 1.0 is undersized+degraded+peered, acting [2] pg 1.1 is undersized+degraded+peered, acting [2]

c. Client IO operation (tamper)

#Read object to file, RAM IO $ bin/rados -p test_pool get myobject ceph.conf.old

Fault summary:

- Now pg only survives on osd.2, and pg has another state: peered, which means to look carefully. Here we can understand it as negotiation and search.

- At this time, when reading the file, you will find that the instruction will stay stuck in that place. Why can't you read the content? Because we set min_size=2. If the number of survivals is less than 2, such as 1 here, then it won't respond to external IO requests.

d. Adjusting min_size=1 can solve the problem of IO tamping

#Set min_size = 1 $ bin/ceph osd pool set test_pool min_size 1 set pool 1 min_size to 1

e. View cluster monitoring status

$ bin/ceph health detail HEALTH_WARN 1 osds down; Degraded data redundancy: 26/39 objects degraded (66.667%), 20 pgs unclean, 20 pgs degraded, 20 pgs undersized; application not enabled on 1 pool(s) OSD_DOWN 1 osds down osd.0 (root=default,host=ceph-xx-cc00) is down. PG_DEGRADED Degraded data redundancy: 26/39 objects degraded (66.667%), 20 pgs unclean, 20 pgs degraded, 20 pgs undersized pg 1.0 is stuck undersized for 65.958983, current state active+undersized+degraded, last acting [2] pg 1.1 is stuck undersized for 65.960092, current state active+undersized+degraded, last acting [2] pg 1.2 is stuck undersized for 65.960974, current state active+undersized+degraded, last acting [2]

f. Client IO operation

#Read object to file $ ll -lh ceph.conf* -rw-r--r-- 1 root root 6.1K Jun 25 14:01 ceph.conf -rw-r--r-- 1 root root 6.1K Jul 3 20:11 ceph.conf.old -rw-r--r-- 1 root root 6.1K Jul 3 20:11 ceph.conf.old.1

Fault summary:

- As you can see, the PG status Peered is gone, and the client file IO can be read and written normally.

- When min_size=1, as long as one copy of the cluster is alive, it can respond to external IO requests.

3.2.3 summary

- Peered status we can understand it here as waiting for other copies to come online.

- When min_size = 2, the Peered state can be removed when two replicas must survive.

- PG in Peered state cannot respond to external requests and IO is suspended.

3.3 Remapped

3.3.1 description

- After Peering is completed, if the PG current Acting Set is inconsistent with the Up Set, the status of Remapped will appear.

3.3.2 fault simulation

a. Stop osd.x

$ systemctl stop ceph-osd@x

b. Start osd.x every 5 minutes

$ systemctl start ceph-osd@x

c. View PG status

$ ceph pg stat 1416 pgs: 6 active+clean+remapped, 1288 active+clean, 3 stale+active+clean, 119 active+undersized+degraded; 74940 MB data, 250 GB used, 185 TB / 185 TB avail; 1292/48152 objects degraded (2.683%) $ ceph pg dump | grep remapped dumped all 13.cd 0 0 0 0 0 0 2 2 active+clean+remapped 2018-07-03 20:26:14.478665 9453'2 20716:11343 [10,23] 10 [10,23,14] 10 9453'2 2018-07-03 20:26:14.478597 9453'2 2018-07-01 13:11:43.262605 3.1a 44 0 0 0 0 373293056 1500 1500 active+clean+remapped 2018-07-03 20:25:47.885366 20272'79063 20716:109173 [9,23] 9 [9,23,12] 9 20272'79063 2018-07-03 03:14:23.960537 20272'79063 2018-07-03 03:14:23.960537 5.f 0 0 0 0 0 0 0 0 active+clean+remapped 2018-07-03 20:25:47.888430 0'0 20716:15530 [23,8] 23 [23,8,22] 23 0'0 2018-07-03 06:44:05.232179 0'0 2018-06-30 22:27:16.778466 3.4a 45 0 0 0 0 390070272 1500 1500 active+clean+remapped 2018-07-03 20:25:47.886669 20272'78385 20716:108086 [7,23] 7 [7,23,17] 7 20272'78385 2018-07-03 13:49:08.190133 7998'78363 2018-06-28 10:30:38.201993 13.102 0 0 0 0 0 0 5 5 active+clean+remapped 2018-07-03 20:25:47.884983 9453'5 20716:11334 [1,23] 1 [1,23,14] 1 9453'5 2018-07-02 21:10:42.028288 9453'5 2018-07-02 21:10:42.028288 13.11d 1 0 0 0 0 4194304 1539 1539 active+clean+remapped 2018-07-03 20:25:47.886535 20343'22439 20716:86294 [4,23] 4 [4,23,15] 4 20343'22439 2018-07-03 17:21:18.567771 20343'22439 2018-07-03 17:21:18.567771#Query $ceph pg stat in 2 minutes 1416 pgs: 2 active+undersized+degraded+remapped+backfilling, 10 active+undersized+degraded+remapped+backfill_wait, 1401 active+clean, 3 stale+active+clean; 74940 MB data, 247 GB used, 179 TB / 179 TB avail; 260/48152 objects degraded (0.540%); 49665 kB/s, 9 objects/s recovering$ ceph pg dump | grep remapped dumped all 13.1e8 2 0 2 0 0 8388608 1527 1527 active+undersized+degraded+remapped+backfill_wait 2018-07-03 20:30:13.999637 9493'38727 20754:165663 [18,33,10] 18 [18,10] 18 9493'38727 2018-07-03 19:53:43.462188 0'0 2018-06-28 20:09:36.303126

d. Client IO operation

#Reading and writing of rados are normal rados -p test_pool put myobject /tmp/test.log

3.3.3 summary

- When the OSD is hung up or expanded, the OSD on the PG will reassign the OSD number of the PG according to the Crush algorithm. And it will map PG Remap to other OSDs.

- In the Remapped state, the PG current Acting Set is inconsistent with the Up Set.

- Client IO can read and write normally.

3.4 Recovery

3.4.1 description

- It refers to the process that PG synchronizes and repairs objects with inconsistent data through PGLog log.

3.4.2 fault simulation

a. Stop osd.x

$ systemctl stop ceph-osd@x

b. Start osd.x every 1 minute

osd$ systemctl start ceph-osd@x

c. View cluster monitoring status

$ ceph health detail HEALTH_WARN Degraded data redundancy: 183/57960 objects degraded (0.316%), 17 pgs unclean, 17 pgs degraded PG_DEGRADED Degraded data redundancy: 183/57960 objects degraded (0.316%), 17 pgs unclean, 17 pgs degraded pg 1.19 is active+recovery_wait+degraded, acting [29,9,17]

3.4.3 summary

- Recovery is to recover data through the recorded PGLog.

- The recorded PG log is within OSD Max PG log entries = 10000. At this time, the data can be recovered incrementally through PGLog.

3.5 Backfill

3.5.1 description

- When PGLog is the only way to recover data from a PG replica, full synchronization is required. Full synchronization is performed by copying all objects of the current Primary.

3.5.2 fault simulation

a. Stop osd.x

$ systemctl stop ceph-osd@x.

b. Start osd.x every 10 minutes

$ osd systemctl start ceph-osd@x.

c. View cluster health status

$ ceph health detail. HEALTH_WARN Degraded data redundancy: 6/57927 objects degraded (0.010%), 1 pg unclean, 1 pg degraded. PG_DEGRADED Degraded data redundancy: 6/57927 objects degraded (0.010%), 1 pg unclean, 1 pg degraded pg 3.7f is active+undersized+degraded+remapped+backfilling, acting [21,29]

3.5.3 summary

- When the data cannot be recovered according to the recorded PGLog, it is necessary to perform the Backfill process to recover the data in full.

- If the number of entries exceeds OSD Max PG log entries = 10000, full data recovery is required at this time.

3.6 Stale

3.6.1 description

- mon detects that the osd of the Primary node of the current PG is down.

- Primary timeout does not report pg related information (such as network congestion) to mon.

- Three copies of PG are hung.

3.6.2 fault simulation

a. Stop three copies of OSD in PG respectively, first stop osd.23

$ systemctl stop ceph-osd@23.

b. Then stop osd.24

$ systemctl stop ceph-osd@24.

c. View the status of stopping two copies of PG 1.45 (interpreted + degraded + peered)

$ ceph health detail. HEALTH_WARN 2 osds down; Reduced data availability: 9 pgs inactive; Degraded data redundancy: 3041/47574 objects degraded (6.392%), 149 pgs unclean, 149 pgs degraded, 149 pgs undersized. OSD_DOWN 2. osds down osd.23 (root=default,host=ceph-xx-osd02) is down osd.24 (root=default,host=ceph-xx-osd03) is down PG_AVAILABILITY Reduced data availability: 9 pgs inactive pg 1.45 is stuck inactive for 281.355588, current state undersized+degraded+peered, last acting [10]

d. Third copy osd.10 in stop PG 1.45

$ systemctl stop ceph-osd@10

e. View the status of stopping three copies PG 1.45 (stale + interpreted + degraded + peered)

$ ceph health detail HEALTH_WARN 3 osds down; Reduced data availability: 26 pgs inactive, 2 pgs stale; Degraded data redundancy: 4770/47574 objects degraded (10.026%), 222 pgs unclean, 222 pgs degraded, 222 pgs undersized OSD_DOWN 3 osds down osd.10 (root=default,host=ceph-xx-osd01) is down osd.23 (root=default,host=ceph-xx-osd02) is down osd.24 (root=default,host=ceph-xx-osd03) is down PG_AVAILABILITY Reduced data availability: 26 pgs inactive, 2 pgs stale pg 1.9 is stuck inactive for 171.200290, current state undersized+degraded+peered, last acting [13] pg 1.45 is stuck stale for 171.206909, current state stale+undersized+degraded+peered, last acting [10] pg 1.89 is stuck inactive for 435.573694, current state undersized+degraded+peered, last acting [32] pg 1.119 is stuck inactive for 435.574626, current state undersized+degraded+peered, last acting [28]

f. Client IO operation

#Read write mount disk IO tamper ll /mnt/

Fault summary:

Stop two replicas in the same PG first, and the status is interpreted + degraded + peered.

Then stop three replicas in the same PG, and the status is stale + interpreted + degraded + peered.

3.6.3 summary

- When three copies of a PG are hung, a stale state will appear.

- At this time, the PG can't provide client reading and writing, IO hangs and tamps.

- When the Primary node times out, it fails to report pg related information (such as network blocking) to mon, and a stale state will also appear.

3.7 Inconsistent

3.7.1 description

- PG detects that some or some objects are inconsistent between PG instances through Scrub

3.7.2 fault simulation

a. Delete the copy osd.34 header file in PG 3.0

$ rm -rf /var/lib/ceph/osd/ceph-34/current/3.0_head/DIR_0/1000000697c.0000122c__head_19785300__3

b. Manual execution of PG 3.0 for data cleaning

$ ceph pg scrub 3.0 instructing pg 3.0 on osd.34 to scrub

c. Check cluster monitoring status

$ ceph health detail HEALTH_ERR 1 scrub errors; Possible data damage: 1 pg inconsistent OSD_SCRUB_ERRORS 1 scrub errors PG_DAMAGED Possible data damage: 1 pg inconsistent pg 3.0 is active+clean+inconsistent, acting [34,23,1]

d. Repair PG 3.0

$ ceph pg repair 3.0 instructing pg 3.0 on osd.34 to repair #View cluster monitoring status $ ceph health detail HEALTH_ERR 1 scrub errors; Possible data damage: 1 pg inconsistent, 1 pg repair OSD_SCRUB_ERRORS 1 scrub errors PG_DAMAGED Possible data damage: 1 pg inconsistent, 1 pg repair pg 3.0 is active+clean+scrubbing+deep+inconsistent+repair, acting [34,23,1] #Cluster monitoring status has returned to normal $ ceph health detail HEALTH_OK

Fault summary:

When there are data inconsistencies in the three copies of PG, to repair the inconsistent data files, just execute the ceph pg repair command, and ceph will copy the lost files from other copies to repair the data.

3.7.3 fault simulation

- When the OSD hangs up for a short time, because there are two replicas in the cluster, it can be written normally, but the data in osd.34 has not been updated. After a while, osd.34 will go online. At this time, the data in osd.34 is old, and it will be sent to osd.34 through other OSDs Recover the data to make it up-to-date. During the recovery process, the PG status will go from inconsistent - > recover - > clean and finally recover to normal.

- This is a scenario of cluster fault self-healing.

3.8 Down

3.8.1 description

- During the Peering process, PG detects an Interval that cannot be skipped (for example, during the Interval, PG completes Peering and successfully switches to the Active state, thus it is possible to normally process the read and write requests from the client). Currently, the remaining online OSD is not enough to complete the data repair

3.8.2 fault simulation

a. View the number of copies in PG 3.7f

$ ceph pg dump | grep ^3.7f dumped all 3.7f 43 0 0 0 0 494927872 1569 1569 active+clean 2018-07-05 02:52:51.512598 21315'80115 21356:111666 [5,21,29] 5 [5,21,29] 5 21315'80115 2018-07-05 02:52:51.512568 6206'80083 2018-06-29 22:51:05.831219

b. Stop PG 3.7f replica osd.21

$ systemctl stop ceph-osd@21

c. View PG 3.7f status

$ ceph pg dump | grep ^3.7f dumped all 3.7f 66 0 89 0 0 591396864 1615 1615 active+undersized+degraded 2018-07-05 15:29:15.741318 21361'80161 21365:128307 [5,29] 5 [5,29] 5 21315'80115 2018-07-05 02:52:51.512568 6206'80083 2018-06-29 22:51:05.831219

d. When the client writes data, make sure that the data is written to the copy of PG 3.7f [5,29]

$ fio -filename=/mnt/xxxsssss -direct=1 -iodepth 1 -thread -rw=read -ioengine=libaio -bs=4M -size=2G -numjobs=30 -runtime=200 -group_reporting -name=read-libaio read-libaio: (g=0): rw=read, bs=4M-4M/4M-4M/4M-4M, ioengine=libaio, iodepth=1 ... fio-2.2.8 Starting 30 threads read-libaio: Laying out IO file(s) (1 file(s) / 2048MB) Jobs: 5 (f=5): [_(5),R(1),_(5),R(1),_(3),R(1),_(2),R(1),_(1),R(1),_(9)] [96.5% done] [1052MB/0KB/0KB /s] [263/0/0 iops] [eta 00m:02s] s] read-libaio: (groupid=0, jobs=30): err= 0: pid=32966: Thu Jul 5 15:35:16 2018 read : io=61440MB, bw=1112.2MB/s, iops=278, runt= 55203msec slat (msec): min=18, max=418, avg=103.77, stdev=46.19 clat (usec): min=0, max=33, avg= 2.51, stdev= 1.45 lat (msec): min=18, max=418, avg=103.77, stdev=46.19 clat percentiles (usec): | 1.00th=[ 1], 5.00th=[ 1], 10.00th=[ 1], 20.00th=[ 2], | 30.00th=[ 2], 40.00th=[ 2], 50.00th=[ 2], 60.00th=[ 2], | 70.00th=[ 3], 80.00th=[ 3], 90.00th=[ 4], 95.00th=[ 5], | 99.00th=[ 7], 99.50th=[ 8], 99.90th=[ 10], 99.95th=[ 14], | 99.99th=[ 32] bw (KB /s): min=15058, max=185448, per=3.48%, avg=39647.57, stdev=12643.04 lat (usec) : 2=19.59%, 4=64.52%, 10=15.78%, 20=0.08%, 50=0.03% cpu : usr=0.01%, sys=0.37%, ctx=491792, majf=0, minf=15492 IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0% submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0% complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0% issued : total=r=15360/w=0/d=0, short=r=0/w=0/d=0, drop=r=0/w=0/d=0 latency : target=0, window=0, percentile=100.00%, depth=1 Run status group 0 (all jobs): READ: io=61440MB, aggrb=1112.2MB/s, minb=1112.2MB/s, maxb=1112.2MB/s, mint=55203msec, maxt=55203msec

e. Stop copy osd.29 in PG 3.7f and check PG 3.7f status (interpreted + degraded + peered)

#Stop the PG copy osd.29 systemctl stop ceph-osd@29 #Check that the PG 3.7f status is interpreted + degraded + peered ceph pg dump | grep ^3.7f dumped all 3.7f 70 0 140 0 0 608174080 1623 1623 undersized+degraded+peered 2018-07-05 15:35:51.629636 21365'80169 21367:132165 [5] 5 [5] 5 21315'80115 2018-07-05 02:52:51.512568 6206'80083 2018-06-29 22:51:05.831219

f. Stop the copy osd.5 in PG 3.7f, and check the PG 3.7f status (interpreted + degraded + peered)

#Stop the PG copy osd.5 $ systemctl stop ceph-osd@5 #View the PG status interpreted + degraded + peered $ ceph pg dump | grep ^3.7f dumped all 3.7f 70 0 140 0 0 608174080 1623 1623 stale+undersized+degraded+peered 2018-07-05 15:35:51.629636 21365'80169 21367:132165 [5] 5 [5] 5 21315'80115 2018-07-05 02:52:51.512568 6206'80083 2018-06-29 22:51:05.831219

g. Pull up the copy osd.21 in PG 3.7f (at this time, the osd.21 data is relatively old), and check the PG status (down)

#Pull up the osd.21 of the PG $ systemctl start ceph-osd@21 #View the status of the PG down $ ceph pg dump | grep ^3.7f dumped all 3.7f 66 0 0 0 0 591396864 1548 1548 down 2018-07-05 15:36:38.365500 21361'80161 21370:111729 [21] 21 [21] 21 21315'80115 2018-07-05 02:52:51.512568 6206'80083 2018-06-29 22:51:05.831219

h. Client IO operation

#At this time, the client IO will be blocked ll /mnt/

Fault summary:

First, a PG 3.7f has three copies [5, 21, 29]. When an osd.21 is stopped, write data to osd.5, osd.29. At this time, stop osd.29 and osd.5, and finally pull osd.21. At this time, the data of osd.21 is relatively old, and the PG is down. At this time, the IO of the client will be rammed, and only the suspended OSD can be pulled up to fix the problem.

3.8.3 OSD with PG as Down is lost or cannot be pulled up

- Repair method (production environment verified)

a. Delete cannot be pulled OSD b. Create corresponding numbered OSD c. PG Of Down The state will disappear d. about unfound Of PG ,Optional delete perhaps revert ceph pg {pg-id} mark_unfound_lost revert|delete

3.8.4 conclusion

- Typical scenario: a (main), B, C

a. First, kill B b. New write data to A, C c. kill A and C d. Pull up B

- In the case of PG Down, the osd node data is too old, and other online OSDs are not enough to complete data repair.

- At this time, the PG cannot provide client IO reading and writing. IO will hang and ram.

3.9 Incomplete

In the process of Peering, because a. all authoritative logs are selected and b. the Acting Set selected through choose & acting is not enough to complete data repair in the future, Peering is not completed abnormally.

It is common for ceph cluster to restart the server back and forth or power down in peering state.

3.9.1 summary

- Repair method wanted: command to clear 'incomplete' PGs

For example, pg 1.1 is incomplete. First, compare the number of objects in pg between the primary and secondary copies of pg 1.1, and export the pg if the number of objects is more

Then import to pg with a small number of objects, and then mark complete. You must first export pg for backup.

In a simple way, data may be lost again

a. stop the osd that is primary for the incomplete PG; b. run: ceph-objectstore-tool --data-path ... --journal-path ... --pgid $PGID --op mark-complete c. start the osd.

Ensure data integrity

#1. View the number of objects in the primary copy of pg 1.1. If the number of primary objects is large, execute at the osd node where the primary copy is located $ ceph-objectstore-tool --data-path /var/lib/ceph/osd/ceph-0/ --journal-path /var/lib/ceph/osd/ceph-0/journal --pgid 1.1 --op export --file /home/pg1.1 #2. Then add / home/pg1.1 scp to the node where the replica is located (there are multiple replicas, which should be done for each replica), and execute it at the node where the replica is located $ ceph-objectstore-tool --data-path /var/lib/ceph/osd/ceph-1/ --journal-path /var/lib/ceph/osd/ceph-1/journal --pgid 1.1 --op import --file /home/pg1.1 #3. Then makr complete $CEPH objectstore tool -- data path / var / lib / CEPH / OSD / ceph-1 / -- Journal path / var / lib / CEPH / OSD / ceph-1 / Journal -- PGID 1.1 -- OP mark complete #4. Finally start OSD $start OSD

Validation plan

#1. Mark pg with incomplete status as complete. It is recommended to verify in the test environment and be familiar with the use of CEPH objectstore tool before operation. PS: before using CEPH objectstore tool, you need to stop the osd of the current operation, otherwise an error will be reported. #2. Query the details of pg 7.123 and use it online ceph pg 7.123 query > /export/pg-7.123-query.txt #3. Query each osd replica node ceph-objectstore-tool --data-path /var/lib/ceph/osd/ceph-641/ --type bluestore --pgid 7.123 --op info > /export/pg-7.123-info-osd641.txt as pg 7.123 OSD 1 has 1,2,3,4,5 object pg 7.123 OSD 2 has 1,2,3,6 object pg 7.123 OSD 2 has 1,2,3,7 object #4. Query comparison data #4.1 export the object list of pg ceph-objectstore-tool --data-path /var/lib/ceph/osd/ceph-641/ --type bluestore --pgid 7.123 --op list > /export/pg-7.123-object-list-osd-641.txt #4.2 querying the object quantity of pg ceph-objectstore-tool --data-path /var/lib/ceph/osd/ceph-641/ --type bluestore --pgid 7.123 --op list|wc -l #4.3 compare the object s of all copies. diff -u /export/pg-7.123-object-list-osd-1.txt /export/pg-7.123-object-list-osd-2.txt For example, pg 7.123 is incomplete. Compare the number of object s in pg between all copies of 7.123. -As mentioned above, after diff comparison, whether the object list of each replica (all the master and slave replicas) is consistent. Avoid data inconsistency. After diff comparison, the most used backup includes all objects. -As mentioned above, after diff comparison, the quantity is inconsistent, and the most objects do not include all objects. Therefore, it is necessary to consider not to overwrite the import and then export. Finally, all complete objects are used for import. Note: import is to import after removing PG in advance, which is equal to overwrite import. -For example, after diff comparison, if the data is consistent, use the backup with the largest number of objects, then import it to pg with a small number of objects, and then mark complete in all replicas. You must first export pg backup in osd node of all replicas to avoid exceptions before pg can be recovered. #5. Export backup Check the number of objects in all the copies of pg 7.123. Referring to the above situation, assuming that osd-641 has a large number of objects and the data diff is the same, the OSD node with the largest number of objects and the same object list will execute (it's better to export and back up each copy. If it's 0, it also needs to export and back up) ceph-objectstore-tool --data-path /var/lib/ceph/osd/ceph-641/ --type bluestore --pgid 7.123 --op export --file /export/pg1.414-osd-1.obj #6. Import backup Then / export/pg1.414-osd-1.obj scp is sent to the node where the replica is located, and the import is performed in the OSD node with fewer objects. (it's better to have an export backup for each copy, and it's also necessary to export backup for 0) Import the specified pg metadata to the current pg, and delete the current pg before importing (please export and back up the pg data before removing). You need to remove the current pg, otherwise it cannot be imported, indicating that it already exists. CEPH objectstore tool -- data path / var / lib / CEPH / OSD / ceph-57 / -- type bluestore -- PGID 7.123 -- OP remove requires - force to delete. ceph-objectstore-tool --data-path /var/lib/ceph/osd/ceph-57/ --type bluestore --pgid 7.123 --op import --file /export/pg1.414-osd-1.obj #7. Mark pg status, makr complete (master and slave copies execute) ceph-objectstore-tool --data-path /var/lib/ceph/osd/ceph-57/ --type bluestore --pgid 7.123

Author: Li Hang [didi travel expert engineer]

In order to improve the efficiency of R & D, Didi cloud technology salon, which is all technology dry goods, is registering!

Pay attention to the official account of the drop cloud.

Reply to "class" for free registration

Reply to "server" to get a free one month experience of getting started with ECS