http://blog.csdn.net/JansonZhe/article/details/47334671

Following my last article, The Compilation of USB Camera Android(JNI) Based on V4L2 Driver (I), this article analyzes the V4L2 video capture process in detail.

1. Requesting Buffer Frames from the Driver

Buffer frames, as the name implies, are "containers" that Linux drivers use to hold data temporarily; in a V4L2 driver they hold our video stream data. We have to request buffer frames from the driver because a V4L2 driver does not have a fixed number of them: each time we use the device, we request as many as we need. This reflects the flexibility and sound design of V4L2 and avoids wasting resources. Here is how to request buffer frames from the V4L2 driver.

The command is VIDIOC_REQBUFS:

```c
struct v4l2_requestbuffers req;

//Set the fields of v4l2_requestbuffers
CLEAR (req);
req.count = 4; //Number of buffers requested
req.type = V4L2_BUF_TYPE_VIDEO_CAPTURE; //Buffer type; must be V4L2_BUF_TYPE_VIDEO_CAPTURE for a video capture device
req.memory = V4L2_MEMORY_MMAP; //Use memory mapping

//Request the buffers with VIDIOC_REQBUFS. Although req.count is set to 4 above, the driver may
//grant a different number; on return, req.count holds the number actually allocated.
if (-1 == xioctl (fd, VIDIOC_REQBUFS, &req)) {
    if (EINVAL == errno) {
        LOGE("%s does not support memory mapping", dev_name);
        return ERROR_LOCAL;
    } else {
        return errnoexit ("VIDIOC_REQBUFS");
    }
}

//Fewer than 2 buffers means there is not enough memory (kernel space, physical memory) for streaming
if (req.count < 2) {
    LOGE("Insufficient buffer memory on %s", dev_name);
    return ERROR_LOCAL;
}

//User-space descriptor for one mapped buffer frame
struct buffer {
    void * start;
    size_t length;
};

//Pointer to the array of user-space descriptors
struct buffer * buffers = NULL;

//Allocate req.count descriptors in user space, one for each kernel buffer frame we will map
buffers = calloc (req.count, sizeof (*buffers));
if (!buffers) {
    LOGE("Out of memory");
    return ERROR_LOCAL;
}
```
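The snippets in this article call two helpers, `xioctl` and `CLEAR`, that are not defined here (presumably they come from the previous article's source). A minimal sketch, assuming they follow the pattern of the capture example in the kernel's V4L2 documentation: `CLEAR` zeroes a structure so that reserved fields are 0, and `xioctl` retries `ioctl()` when a signal interrupts it.

```c
#include <errno.h>
#include <string.h>
#include <sys/ioctl.h>

/* Zero a structure before filling it in; V4L2 expects reserved fields to be 0. */
#define CLEAR(x) memset(&(x), 0, sizeof(x))

/* Call ioctl() and retry while it is interrupted by a signal (errno == EINTR). */
static int xioctl(int fd, unsigned long request, void *arg)
{
    int r;

    do {
        r = ioctl(fd, request, arg);
    } while (r == -1 && errno == EINTR);

    return r;
}
```

Any other error (for example `EINVAL` from VIDIOC_REQBUFS) is returned to the caller unchanged, with `errno` still set, which is what the error handling above relies on.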

In the program above, the structure worth highlighting is v4l2_requestbuffers, which holds the parameters for requesting buffer frames. Its definition is as follows:

```c
struct v4l2_requestbuffers {
    __u32 count;       //Number of buffer frames requested (updated by the driver)
    __u32 type;        //Buffer (data stream) type, e.g. V4L2_BUF_TYPE_VIDEO_CAPTURE
    __u32 memory;      //Memory type, e.g. V4L2_MEMORY_MMAP for memory mapping
    __u32 reserved[2]; //Reserved, must be zeroed; newer kernel headers reuse part of this space (e.g. a capabilities field)
};
```

Why do we define an array of buffer descriptors in user space, with as many entries as there are buffer frames in kernel space? Because we are going to use Linux memory mapping. A video stream carries a lot of data. If we moved each frame with copy_from_user or copy_to_user, every frame would need one buffer in kernel space and another in user space, and copying between them would significantly reduce the performance of the driver. With memory mapping, we map each kernel buffer frame into the address space of our process, and we can then use the data in the kernel buffer frame directly from user space.
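To illustrate why a shared mapping avoids the extra copy, the sketch below uses a temporary file as a stand-in for the device (an assumption for illustration only; a real V4L2 buffer is mapped from the device fd at the offset returned by VIDIOC_QUERYBUF). Bytes written through the mapped pointer land in the file's pages directly, without any `write()` call. `shared_mapping_roundtrip` is a hypothetical helper name.

```c
#include <stdio.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/*
 * Map a temporary file with MAP_SHARED, write a message through the mapped
 * pointer only, then read the file back with pread().
 * Returns 1 if the file contents match the message, 0 if not, -1 on error.
 */
static int shared_mapping_roundtrip(const char *msg)
{
    char back[64];
    size_t len = strlen(msg) + 1;   /* include the terminating NUL */
    int result = -1;

    if (len > sizeof back)
        return -1;

    FILE *f = tmpfile();            /* opened read/write, needed for PROT_WRITE */
    if (!f)
        return -1;
    int fd = fileno(f);

    if (ftruncate(fd, (off_t)len) == 0) {
        char *p = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
        if (p != MAP_FAILED) {
            memcpy(p, msg, len);        /* the only "write" is through the pointer */
            msync(p, len, MS_SYNC);     /* flush the pages before reading via the fd */
            if (pread(fd, back, len, 0) == (ssize_t)len)
                result = (memcmp(back, msg, len) == 0);
            munmap(p, len);
        }
    }
    fclose(f);
    return result;
}
```

In the V4L2 case the roles are reversed: the driver fills the pages and the application reads them through `buffers[i].start`, but the principle is the same single copy of the data shared by both sides.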

2. Mapping Buffer Frames to User Space

In the previous step we requested buffer frames in kernel space and defined the same number of buffer descriptors in user space, so the next task is to map each kernel buffer frame into user space.

In this step we first use the VIDIOC_QUERYBUF command to obtain information about each buffer frame (its length and mmap offset), and then map the buffer frames one by one. The main code is as follows:

```c
for (n_buffers = 0; n_buffers < req.count; ++n_buffers) {
    struct v4l2_buffer buf;
    CLEAR (buf);

    //Initialize v4l2_buffer
    buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    buf.memory = V4L2_MEMORY_MMAP;
    //Index of the kernel buffer frame, matching the user-space descriptor buffers[n_buffers]
    buf.index = n_buffers;

    //Query the buffer's length and its mmap offset (buf.m.offset)
    if (-1 == xioctl (fd, VIDIOC_QUERYBUF, &buf))
        return errnoexit ("VIDIOC_QUERYBUF");

    buffers[n_buffers].length = buf.length;
    //mmap maps the device memory into the virtual address space of the user process,
    //so that the process can read and write it directly.
    buffers[n_buffers].start =
        mmap (NULL,                 //let the kernel choose the address
              buf.length,
              PROT_READ | PROT_WRITE,
              MAP_SHARED,
              fd, buf.m.offset);

    if (MAP_FAILED == buffers[n_buffers].start) //If the mapping fails
        return errnoexit ("mmap");
}
```

This step also uses another important structure, v4l2_buffer; for a description of it, see the official V4L2 documentation. At this point the initialization work is essentially done, and the next step is to start capturing video stream data.

3. Capturing the Video Stream

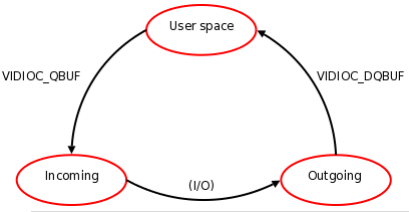

The first step is to put the buffer frames we requested in kernel space into the incoming queue, so that they can receive video stream data one after another; the command is VIDIOC_QBUF. The second step is to start capturing video data; the command is VIDIOC_STREAMON. In practice, the two steps are usually written together:

```c
unsigned int i;
enum v4l2_buf_type type;

//Before starting the stream, queue every buffer. As long as there are buffers in the incoming
//queue, the device fills them with data. VIDIOC_QBUF puts a buffer into the queue.
for (i = 0; i < n_buffers; ++i) {
    struct v4l2_buffer buf;
    CLEAR (buf);

    buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    buf.memory = V4L2_MEMORY_MMAP; //We use mmap, i.e. memory mapping
    buf.index = i; //Which buffer frame to queue; without this, every iteration would queue buffer 0

    //Put buffer frame i into the queue that receives video data
    if (-1 == xioctl (fd, VIDIOC_QBUF, &buf))
        return errnoexit ("VIDIOC_QBUF");
}

type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
//Start capturing video data
if (-1 == xioctl (fd, VIDIOC_STREAMON, &type))
    return errnoexit ("VIDIOC_STREAMON");

return SUCCESS_LOCAL;
```
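The interplay of VIDIOC_QBUF, the driver, and VIDIOC_DQBUF can be pictured as two FIFO queues: an incoming queue of empty buffers and an outgoing queue of filled ones. The following is a hypothetical, hardware-free model of that idea (none of these names are V4L2 API); it only tracks buffer indices, and in this model a frame that arrives while no buffer is queued is simply dropped.

```c
#include <stdbool.h>

#define MAX_BUFS 8

/* Hypothetical model of the driver's two queues; not part of the V4L2 API. */
struct queue_model {
    unsigned int incoming[MAX_BUFS];   /* empty buffers waiting to be filled */
    unsigned int in_head, in_count;
    unsigned int outgoing[MAX_BUFS];   /* filled buffers waiting for DQBUF */
    unsigned int out_head, out_count;
};

static bool ring_push(unsigned int *ring, unsigned int *head,
                      unsigned int *count, unsigned int value)
{
    if (*count == MAX_BUFS)
        return false;
    ring[(*head + *count) % MAX_BUFS] = value;
    ++*count;
    return true;
}

static bool ring_pop(unsigned int *ring, unsigned int *head,
                     unsigned int *count, unsigned int *value)
{
    if (*count == 0)
        return false;
    *value = ring[*head];
    *head = (*head + 1) % MAX_BUFS;
    --*count;
    return true;
}

/* Application side, like VIDIOC_QBUF: hand an empty buffer to the driver. */
static bool model_qbuf(struct queue_model *q, unsigned int index)
{
    return ring_push(q->incoming, &q->in_head, &q->in_count, index);
}

/* Driver side: a frame arrives and fills the oldest queued buffer.
 * Returns false (frame dropped) when no buffer is queued. */
static bool model_frame_arrives(struct queue_model *q)
{
    unsigned int index;

    if (!ring_pop(q->incoming, &q->in_head, &q->in_count, &index))
        return false;
    return ring_push(q->outgoing, &q->out_head, &q->out_count, index);
}

/* Application side, like VIDIOC_DQBUF: take the oldest filled buffer.
 * Returns false when nothing is ready (the EAGAIN case). */
static bool model_dqbuf(struct queue_model *q, unsigned int *index)
{
    return ring_pop(q->outgoing, &q->out_head, &q->out_count, index);
}
```

For example, after queuing buffers 0 to 3 and letting two frames arrive, `model_dqbuf` returns buffer 0 and then buffer 1. If only buffer 0 is requeued, the model can fill three more frames (buffers 2, 3, then 0) before it runs out, which is why the requeue step in the next section matters.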

4. Reading Buffered Frame Data

After the previous step, the device captures video frames one by one into the buffer frames in the incoming queue. Our main task now is to take one filled buffer frame out of the queue, read its data, and process it. After processing, the buffer frame must be put back into the incoming queue. Reading (dequeuing) uses the command VIDIOC_DQBUF; putting the buffer frame back uses the same command as in the previous step, VIDIOC_QBUF.

```c
struct v4l2_buffer buf;

//Dequeue a filled buffer
CLEAR (buf);
buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
buf.memory = V4L2_MEMORY_MMAP;

if (-1 == xioctl (fd, VIDIOC_DQBUF, &buf)) {
    switch (errno) {
    case EAGAIN: //Non-blocking mode: no frame is ready yet
        return 0;
    case EIO:    //Treated as an error here, like any other failure
    default:
        return errnoexit ("VIDIOC_DQBUF");
    }
}

//Sanity check: the driver must return a valid index. n_buffers equals the number of buffers
//granted by VIDIOC_REQBUFS; if the check fails, assert terminates the program.
assert (buf.index < n_buffers);

//Process the frame; the start address of the mapped buffer is passed to the processing function
processimage (buffers[buf.index].start);

//Requeue the buffer so the driver can fill it again; otherwise the driver eventually runs out of buffers
if (-1 == xioctl (fd, VIDIOC_QBUF, &buf))
    return errnoexit ("VIDIOC_QBUF");
```
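The `EAGAIN` case above occurs when the device was opened in non-blocking mode and no frame is ready yet. The capture example in the kernel's V4L2 documentation waits with `select()` before calling VIDIOC_DQBUF. A minimal sketch of such a wait, assuming the caller runs the dequeue code above whenever it returns 1 (`wait_for_frame` is a hypothetical name):

```c
#include <errno.h>
#include <sys/select.h>
#include <sys/time.h>
#include <unistd.h>

/*
 * Wait up to timeout_ms milliseconds for fd to become readable.
 * Returns 1 if readable (a frame can be dequeued), 0 on timeout,
 * -1 on error with errno set. Retries if interrupted by a signal.
 */
static int wait_for_frame(int fd, int timeout_ms)
{
    for (;;) {
        fd_set fds;
        struct timeval tv;

        FD_ZERO(&fds);
        FD_SET(fd, &fds);
        /* select() may modify tv, so it is reset on every attempt */
        tv.tv_sec = timeout_ms / 1000;
        tv.tv_usec = (timeout_ms % 1000) * 1000;

        int r = select(fd + 1, &fds, NULL, NULL, &tv);
        if (r == -1 && errno == EINTR)
            continue;
        if (r == -1)
            return -1;
        return r > 0 ? 1 : 0;
    }
}
```

The same function works on any file descriptor, so it can be tried without a camera by passing the read end of a `pipe()`.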

The whole process of receiving video stream data can be represented by the following graph.

The processimage function in the program processes the video stream data. In The Compilation of USB Camera Android(JNI) Based on V4L2 Driver (I), when setting the format, width, height and size of the captured video frames, we set the storage format of the captured stream to YUYV, so the processimage function has to handle YUYV data.
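The article does not show processimage itself. As one hedged example of what handling YUYV data can look like, the sketch below converts a packed YUYV frame to packed RGB24. In YUYV every 4 bytes (Y0, U, Y1, V) describe 2 pixels that share U and V, so a frame occupies width * height * 2 bytes. It assumes an even width and full-range BT.601 (JPEG-style) coefficients; many webcams actually deliver limited-range video, in which case the coefficients differ. `yuyv_to_rgb24` is a hypothetical name.

```c
#include <stddef.h>

/* Clamp an int to the 0..255 range of an 8-bit channel. */
static unsigned char clamp_u8(int v)
{
    if (v < 0)
        return 0;
    if (v > 255)
        return 255;
    return (unsigned char)v;
}

/*
 * Convert a packed YUYV frame to packed RGB24.
 * Input: width * height * 2 bytes; output: width * height * 3 bytes.
 * Assumes an even width; uses approximate full-range BT.601 coefficients
 * in integer arithmetic.
 */
static void yuyv_to_rgb24(const unsigned char *yuyv, unsigned char *rgb,
                          size_t width, size_t height)
{
    size_t groups = width * height / 2;   /* one 4-byte group per 2 pixels */

    for (size_t i = 0; i < groups; ++i) {
        const unsigned char *src = yuyv + 4 * i;
        int u = src[1] - 128;
        int v = src[3] - 128;

        for (int k = 0; k < 2; ++k) {
            int y = src[2 * k];               /* Y0 for k == 0, Y1 for k == 1 */
            unsigned char *dst = rgb + 6 * i + 3 * k;

            dst[0] = clamp_u8(y + (1402 * v) / 1000);            /* R */
            dst[1] = clamp_u8(y - (344 * u + 714 * v) / 1000);   /* G */
            dst[2] = clamp_u8(y + (1772 * u) / 1000);            /* B */
        }
    }
}
```

With U = V = 128 (no colour) each pixel becomes gray, R = G = B = Y, which is a quick sanity check for any YUYV converter.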