In the previous chapter, we used Minikube to build a single-node cluster and create a Deployment and a Service (both explained in Chapter 3). This chapter introduces deploying a multi-node cluster with kubeadm: installing and using the Kubernetes command-line tools, quickly creating a cluster, and deploying a hello world application.

kubeadm is the deployment method required for the CKAD certification, but its images can only be downloaded over networks outside China. If your server is in China, refer to Chapter 2.4 and use a domestic server as a proxy.

This chapter mainly introduces how to install kubeadm, deploy clusters and add nodes.

Docker needs to be installed on the server in advance.

This article is part of the author's Kubernetes e-book series. The e-books are open source and can be read at:

https://k8s.whuanle.cn [suitable for access from within China]

https://ek8s.whuanle.cn [gitbook]

Command line tools

Three tools are used day to day in Kubernetes, each named with the kube prefix:

- kubeadm: a command used to initialize a cluster. It can create a cluster and add new nodes, and it can be replaced by other deployment tools.

- kubelet: the node agent that runs on every node in the cluster and starts Pods and containers there. Every node must run it.

- kubectl: a command-line tool for interacting with the cluster. It talks to the Kubernetes API server and acts as the client for the cluster.

kubelet and kubectl were introduced in Chapter 1.5. kubelet handles communication between each node and the rest of the cluster, kubectl lets users enter commands to control the cluster, and kubeadm is a tool for creating clusters and adding or removing nodes.

Install command line tools

The command-line tools need to be installed on every node. kubectl and kubelet are required components, while kubeadm can be replaced. kubeadm is the deployment tool officially recommended by Kubernetes, but for various reasons such as network restrictions, the Chinese community has also developed alternative projects, such as KubeSphere (https://kubesphere.com.cn/), which can deploy Kubernetes in China without the network problems.

Install from the package repository

The following steps install the packages from Google's repository.

Update the apt package index and install the packages needed to use the Kubernetes apt repository:

sudo apt-get update
sudo apt-get install -y apt-transport-https ca-certificates curl

Download the Google Cloud public signing key:

sudo curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

Add the Kubernetes apt repository:

echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

Note: if your server is in China, skip the previous two steps and use the following commands instead:

apt-get update && apt-get install -y apt-transport-https
curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -
cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF

Update the apt package index, install kubelet, kubeadm and kubectl, and lock their versions:

sudo apt-get update
sudo apt-get install -y kubelet kubeadm kubectl
sudo apt-mark hold kubelet kubeadm kubectl

Run the following command to check that the installation works:

kubeadm --help
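Besides running kubeadm --help, a small check can confirm that all three tools are on the PATH. The following is only a sketch: missing_tools is a hypothetical helper name introduced here for illustration and is not part of Kubernetes. It prints the name of every tool the shell cannot find.

```shell
# Sketch: report which of the given command-line tools are missing from PATH.
# missing_tools is a hypothetical helper name, not something Kubernetes ships.
missing_tools() {
    for tool in "$@"; do
        # command -v succeeds only if the shell can resolve the name
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Usage (prints nothing when all three tools are installed):
#   missing_tools kubeadm kubelet kubectl
```

If the function prints any names, repeat the installation steps for those packages on that node.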

Different operating systems

This section only describes how the installation differs between Ubuntu and CentOS. If you have already installed the tools using the steps above, you can skip this section.

Systems such as Ubuntu and Debian can install from the package repository with the following commands:

sudo apt-get update && sudo apt-get install -y apt-transport-https gnupg2 curl
curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
echo "deb https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee -a /etc/apt/sources.list.d/kubernetes.list
sudo apt-get update
sudo apt-get install -y kubelet kubeadm kubectl

CentOS, RHEL and similar systems can install from the package repository with the following commands:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
EOF
yum install -y kubelet kubeadm kubectl

Cluster management

Create kubernetes cluster

kubeadm is a cluster creation and management tool. It mainly provides two commands, kubeadm init and kubeadm join, as best-practice "shortcuts" for creating Kubernetes clusters.

A Kubernetes cluster is composed of Master and Worker nodes. The Master node is responsible for controlling all nodes in the cluster.

Note that the nodes in this tutorial should be servers that can reach each other over an internal network. If your servers communicate with each other over the public network, please consult other tutorials.

1. Create Master

Run hostname -i to view the IP address of this node.

We initialize the API Server and bind it to the address 192.168.0.8 (substitute your own IP address). This step creates the Master node.

Note: you can also run kubeadm init on its own, in which case it automatically uses the IP address of the default network interface.

kubeadm init
# Or: kubeadm init --apiserver-advertise-address 192.168.0.8
# Or: kubeadm init --apiserver-advertise-address $(hostname -i)

If deployment fails, you can use the following two commands to find the reason for the failure.

systemctl status kubelet
journalctl -xeu kubelet

For common Docker related errors, please refer to: https://kubernetes.io/docs/setup/production-environment/container-runtimes/#docker

When initialization completes, kubeadm prints some information. Look for a command like this in the output:

kubeadm join 192.168.0.8:6443 --token q25z3f.v5uo5bphvgxkjnmz \
    --discovery-token-ca-cert-hash sha256:0496adc212112b5485d0ff12796f66b29237d066fbc1d4d2c5e45e6add501f64

Save this information for later use when adding nodes.
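One way to keep the join command is to save the full output of kubeadm init to a file and pull the command out of it later. The sketch below assumes the output was saved to a file of your choosing; extract_join_command is a hypothetical helper name, not a kubeadm feature. It finds the first kubeadm join command and folds the backslash continuation into a single line, which also makes it easier to paste.

```shell
# Sketch: print the first "kubeadm join ..." command found in a saved copy of
# the kubeadm init output, joined onto one line.
# extract_join_command is a hypothetical helper name.
extract_join_command() {
    # $1: file holding the saved output of kubeadm init
    awk '
        /^[ \t]*kubeadm join/ { collecting = 1 }
        collecting {
            line = $0
            sub(/^[ \t]+/, "", line)            # drop leading indentation
            more = sub(/[ \t]*\\$/, "", line)   # drop a trailing backslash, remember if there was one
            printf "%s%s", sep, line
            sep = " "
            if (!more) { print ""; exit }       # no continuation: the command is complete
        }
    ' "$1"
}

# Usage (init.log is an assumed file name):
#   kubeadm init | tee init.log
#   extract_join_command init.log
```

If the output was not saved, running kubeadm token create --print-join-command on the Master node prints a fresh join command.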

If the output includes the prompt "Alternatively, if you are the root user, you can run:", run the following:

export KUBECONFIG=/etc/kubernetes/admin.conf

[Info] Tip

admin.conf is the credentials file for connecting to Kubernetes, and kubectl needs it too. On Linux, the KUBECONFIG environment variable tells kubectl where this file is.

Environment variables are per user on Linux, so if you switch users you need to set KUBECONFIG again. To connect to the cluster from another node, copy this file to that node.

Subsequent operations require the admin.conf file; without it you get the error "The connection to the server localhost:8080 was refused - did you specify the right host or port?".

Because a variable set with export does not persist across sessions, open the ~/.bashrc file and add this command to the end of it.
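Editing ~/.bashrc by hand works; the same thing can be scripted so that the line is added only once. This is a minimal sketch: persist_kubeconfig is a hypothetical helper name, and the optional argument for choosing a different rc file is an assumption made for illustration.

```shell
# Sketch: append the KUBECONFIG export to a shell rc file, but only if the
# exact line is not already there. persist_kubeconfig is a hypothetical name.
persist_kubeconfig() {
    rc_file="${1:-$HOME/.bashrc}"
    line='export KUBECONFIG=/etc/kubernetes/admin.conf'
    touch "$rc_file"
    # -x matches whole lines, -F treats the pattern as a fixed string
    grep -qxF "$line" "$rc_file" || printf '%s\n' "$line" >> "$rc_file"
}

# Usage:
#   persist_kubeconfig             # updates ~/.bashrc
#   persist_kubeconfig ~/.profile  # or another rc file
```

Running it a second time leaves the file unchanged, so it is safe to include in a setup script.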

[Info] Tip

To avoid pointing directly at /etc/kubernetes/admin.conf, it is recommended that each user copy the file into their own home directory once. The commands are as follows:

mkdir -p $HOME/.kube
cp -f /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config

2. Initialize the network

This step is not strictly required, but a Kubernetes deployment generally configures a network; otherwise the nodes cannot reach each other. Readers can simply follow along for now; we will explore networking in later chapters.

To initialize the network from a remote configuration file, pull a yaml file from a third party:

kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')" --namespace=kube-system
# --namespace=kube-system means that the plug-in runs in the kube-system namespace

If successful, you will be prompted:

serviceaccount/weave-net created
clusterrole.rbac.authorization.k8s.io/weave-net created
clusterrolebinding.rbac.authorization.k8s.io/weave-net created
role.rbac.authorization.k8s.io/weave-net created
rolebinding.rbac.authorization.k8s.io/weave-net created
daemonset.apps/weave-net created

You can also do this manually: run kubectl version to check the version number and find GitVersion:v1.21.1, substitute it into the address of the yaml file (https://cloud.weave.works/k8s/net?k8s-version=v1.21.1), download that file as net.yaml, and then run kubectl apply -n kube-system -f net.yaml.

3. Join the cluster

The Master node has been created earlier. Next, add another server to the cluster as a Worker node. If the reader has only one server, you can skip this step.

When a node joins a cluster initialized by kubeadm, the two sides need to establish two-way trust. This happens in two parts: discovery (the Worker trusts the Master) and TLS bootstrap (the Master trusts the Worker that is joining). There are currently two ways to join: with a token, or with a kubeconfig file.

Format:

kubeadm join --discovery-token abcdef.xxx {IP}:6443 --discovery-token-ca-cert-hash sha256:xxx

kubeadm join --discovery-file file.conf
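The sha256 value passed to --discovery-token-ca-cert-hash is a hash of the public key of the cluster's CA certificate, which is how the Worker verifies that it is talking to the right Master. If the value was lost, it can be recomputed on the Master. The sketch below follows the openssl pipeline shown in the kubeadm documentation and assumes an RSA CA key and kubeadm's default certificate path /etc/kubernetes/pki/ca.crt; ca_cert_hash is a hypothetical helper name.

```shell
# Sketch: compute the hex SHA-256 of a CA certificate's public key, in the
# form used after "sha256:" in kubeadm join. Assumes an RSA key.
# ca_cert_hash is a hypothetical helper name.
ca_cert_hash() {
    ca_cert="${1:-/etc/kubernetes/pki/ca.crt}"
    openssl x509 -pubkey -noout -in "$ca_cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'    # keep only the hex digest, drop the "(stdin)= " label
}

# Usage on the Master node:
#   echo "sha256:$(ca_cert_hash)"
```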

On the second node, run the join command saved earlier to join the cluster. It looks like this:

kubeadm join 192.168.0.8:6443 --token q25z3f.v5uo5bphvgxkjnmz \
    --discovery-token-ca-cert-hash sha256:0496adc212112b5485d0ff12796f66b29237d066fbc1d4d2c5e45e6add501f64

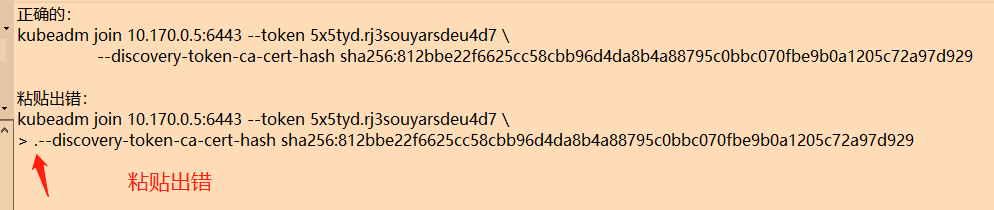

When copying and pasting, be aware that the line break after the \ may introduce an extra dot (.) when pasted, which causes an error.
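After a Worker has joined, one way to confirm that it is healthy is to list the nodes on the Master and look for any whose status is not Ready. The following is a sketch only: not_ready_nodes is a hypothetical helper name, and it assumes the default column layout of kubectl get nodes, where the second column is STATUS.

```shell
# Sketch: print the names of nodes whose STATUS does not start with "Ready".
# not_ready_nodes is a hypothetical helper name; it relies on the default
# column order of kubectl get nodes (NAME, STATUS, ROLES, AGE, VERSION).
not_ready_nodes() {
    kubectl get nodes --no-headers | awk '$2 !~ /^Ready/ { print $1 }'
}

# Usage on the Master node (prints nothing when every node is Ready):
#   not_ready_nodes
```

A node that has just joined can stay NotReady for a short while, and it generally stays that way until a network plug-in is installed.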

Possible problems

Check the Docker version with yum list installed | grep docker and docker version.

If deployment fails with failed to parse kernel config: unable to load kernel module, this also indicates that the Docker version is too new and needs to be downgraded.

If dnf is installed on the server, the commands to downgrade Docker are:

dnf remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-selinux \
    docker-engine-selinux \
    docker-engine

dnf -y install dnf-plugins-core

dnf install docker-ce-18.06.3.ce-3.el7 docker-ce-cli containerd.io

If that does not work, follow https://docs.docker.com/engine/install/centos/ to downgrade, or handle it another way.

Note that docker version shows both the client and the server version, and the two version numbers may differ.

Delete node

In a production environment, services are already running on the nodes, so deleting a node directly can cause serious failures. When a node needs to be removed, first evict all Pods from it; Kubernetes automatically reschedules them onto other nodes (described in Chapter 3).

Get all nodes in the cluster and find the name of the node to be evicted.

kubectl get nodes

Evict all pods on this node:

kubectl drain {node name}

Although all workloads on the node have been evicted, the node itself is still in the cluster; Kubernetes simply will not schedule Pods onto it. To restore the node so that Pods can be deployed to it again, use:

kubectl uncordon {node name}

Eviction is covered in more detail in later chapters.

Note: all Pods need to be evicted, but some Pods (for example, those managed by a DaemonSet) may not be cleared.

Finally delete this node:

kubectl delete node {Node name}
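The drain and delete steps can be combined so that a node is only deleted once its Pods have been evicted. This is a sketch under assumptions: remove_node is a hypothetical helper name, and the --ignore-daemonsets and --delete-emptydir-data options are additions not used in the text above (kubectl drain accepts them in recent versions; without them the drain may refuse to proceed when DaemonSet Pods or emptyDir volumes are present).

```shell
# Sketch: drain a node and delete it only if the drain succeeded.
# remove_node is a hypothetical helper name.
remove_node() {
    node="${1:-}"
    if [ -z "$node" ]; then
        echo "usage: remove_node <node-name>" >&2
        return 1
    fi
    # Evict workloads first. DaemonSet-managed Pods are skipped, not evicted,
    # and Pods using emptyDir volumes lose that data.
    kubectl drain "$node" --ignore-daemonsets --delete-emptydir-data || return 1
    # Remove the Node object only after the drain has succeeded.
    kubectl delete node "$node"
}

# Usage (worker-1 is a placeholder node name):
#   remove_node worker-1
```

Stopping when the drain fails avoids deleting a node that is still running workloads.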

After the cluster deletes this node, some data remains on the node, and you can continue to clean up the environment.

Clear environment

If a step went wrong and you want to start over, or you need to clean up a node after removing it from the cluster, you can run the kubeadm reset command.

Note: kubeadm reset does not take a phase name as a bare argument. Run on its own, it executes all of its phases in order; a single phase is run as kubeadm reset phase [phase].

There are four phases:

preflight               Run reset pre-flight checks
update-cluster-status   Remove this node from the ClusterStatus object.
remove-etcd-member      Remove a local etcd member.
cleanup-node            Run cleanup node.

To clean up the node, run:

kubeadm reset phase cleanup-node
kubeadm reset

This clears the remaining Kubernetes containers and other data on the current server.