[TOC]

1. Why Rook is used

Rook itself is not described in detail here; see the official website.

Here is why I deploy the Ceph cluster on Kubernetes with Rook.

As we all know, Kubernetes is currently the best cloud-native container platform, but when a pod is released on a Kubernetes node its container data is cleared as well; Kubernetes on its own cannot persist data. Ceph is one of the best open-source storage systems and also one of the best to pair with Kubernetes. Rook builds on the scheduling capabilities of Kubernetes, and its self-scaling and self-healing capabilities work closely with them.

2. rook-ceph deployment

2.1 Environment

| Item | Version | Remarks |
|---|---|---|
| system | CentOS 7.6 | One 200 GB data disk |
| kubernetes | v1.14.8-aliyun.1 | Nodes with a mounted data disk are scheduled as OSD nodes |
| rook | v1.1.4 | - |
| ceph | v14.2.4 | - |

Note:

There should be at least three OSD nodes, and OSDs work best on raw disks rather than on partitions or file systems.

2.2 Rook Operator Deployment

Helm is used here; its benefits are not covered in this article.

Reference documents:

https://rook.io/docs/rook/v1.1/helm-operator.html

    helm repo add rook-release https://charts.rook.io/release
    helm fetch --untar rook-release/rook-ceph
    cd rook-ceph
    vim values.yaml  # The default image is blocked by the firewall; recommended repository: ygqygq2/hyperkube
    helm install --name rook-ceph --namespace rook-ceph ./

Note:

enableFlexDriver: true can be set in values.yaml, depending on what the Kubernetes version supports.

Deployment results:

    [root@linuxba-node1 rook-ceph]# kubectl get pod -n rook-ceph
    NAME                                  READY   STATUS    RESTARTS   AGE
    rook-ceph-operator-5bd7d67784-k9bq9   1/1     Running   0          2d15h
    rook-discover-2f84s                   1/1     Running   0          2d14h
    rook-discover-j9xjk                   1/1     Running   0          2d14h
    rook-discover-nvnwn                   1/1     Running   0          2d14h
    rook-discover-nx4qf                   1/1     Running   0          2d14h
    rook-discover-wm6wp                   1/1     Running   0          2d14h

2.3 Ceph Cluster Creation

2.3.1 Label the OSD nodes

To manage and control the OSDs better, label the designated nodes so that OSD pods can only be scheduled on them.

kubectl label node node1 ceph-role=osd

2.3.2 Create the Ceph cluster with YAML

vim rook-ceph-cluster.yaml

    apiVersion: ceph.rook.io/v1
    kind: CephCluster
    metadata:
      name: rook-ceph
      namespace: rook-ceph
    spec:
      cephVersion:
        image: ceph/ceph:v14.2.4-20190917
      # Node ceph directory, containing configuration and log
      dataDirHostPath: /var/lib/rook
      mon:
        # Set the number of mons to be started. The number should be odd and between 1 and 9.
        # If not specified the default is set to 3 and allowMultiplePerNode is also set to true.
        count: 3
        # Enable (true) or disable (false) the placement of multiple mons on one node. Default is false.
        allowMultiplePerNode: false
      mgr:
        modules:
        - name: pg_autoscaler
          enabled: true
      network:
        # osd and mgr will use the host network, but mon still uses the k8s network and therefore cannot solve the k8s external connection problem
        # hostNetwork: true
      dashboard:
        enabled: true
      # cluster level storage configuration and selection
      storage:
        useAllNodes: false
        useAllDevices: false
        deviceFilter:
        location:
        config:
          metadataDevice:
          #databaseSizeMB: "1024" # this value can be removed for environments with normal sized disks (100 GB or larger)
          #journalSizeMB: "1024" # this value can be removed for environments with normal sized disks (20 GB or larger)
        # Node list, using the node name in k8s
        nodes:
        - name: k8s1138026node
          devices: # specific devices to use for storage can be specified for each node
          - name: "vdb"
          config: # configuration can be specified at the node level which overrides the cluster level config
            storeType: bluestore
        - name: k8s1138027node
          devices: # specific devices to use for storage can be specified for each node
          - name: "vdb"
          config: # configuration can be specified at the node level which overrides the cluster level config
            storeType: bluestore
        - name: k8s1138031node
          devices: # specific devices to use for storage can be specified for each node
          - name: "vdb"
          config: # configuration can be specified at the node level which overrides the cluster level config
            storeType: bluestore
        - name: k8s1138032node
          devices: # specific devices to use for storage can be specified for each node
          - name: "vdb"
          config: # configuration can be specified at the node level which overrides the cluster level config
            storeType: bluestore
      placement:
        all:
          nodeAffinity:
          tolerations:
        mgr:
          nodeAffinity:
          tolerations:
        mon:
          nodeAffinity:
          tolerations:
        # Recommend osd to set node affinity
        osd:
          nodeAffinity:
            requiredDuringSchedulingIgnoredDuringExecution:
              nodeSelectorTerms:
              - matchExpressions:
                - key: ceph-role
                  operator: In
                  values:
                  - osd
          tolerations:

    kubectl apply -f rook-ceph-cluster.yaml

View the results:

    [root@linuxba-node1 ceph]# kubectl get pod -n rook-ceph -owide
    NAME                                           READY   STATUS      RESTARTS   AGE   IP              NODE             NOMINATED NODE   READINESS GATES
    csi-cephfsplugin-5dthf                         3/3     Running     0          20h   172.16.138.33   k8s1138033node   <none>           <none>
    csi-cephfsplugin-f2hwm                         3/3     Running     3          20h   172.16.138.27   k8s1138027node   <none>           <none>
    csi-cephfsplugin-hggkk                         3/3     Running     0          20h   172.16.138.26   k8s1138026node   <none>           <none>
    csi-cephfsplugin-pjh66                         3/3     Running     0          20h   172.16.138.32   k8s1138032node   <none>           <none>
    csi-cephfsplugin-provisioner-78d9994b5d-9n4n7  4/4     Running     0          20h   10.244.2.80     k8s1138031node   <none>           <none>
    csi-cephfsplugin-provisioner-78d9994b5d-tc898  4/4     Running     0          20h   10.244.3.81     k8s1138032node   <none>           <none>
    csi-cephfsplugin-tgxsk                         3/3     Running     0          20h   172.16.138.31   k8s1138031node   <none>           <none>
    csi-rbdplugin-22bp9                            3/3     Running     0          20h   172.16.138.26   k8s1138026node   <none>           <none>
    csi-rbdplugin-hf44c                            3/3     Running     0          20h   172.16.138.32   k8s1138032node   <none>           <none>
    csi-rbdplugin-hpx7f                            3/3     Running     0          20h   172.16.138.33   k8s1138033node   <none>           <none>
    csi-rbdplugin-kvx7x                            3/3     Running     3          20h   172.16.138.27   k8s1138027node   <none>           <none>
    csi-rbdplugin-provisioner-74d6966958-srvqs     5/5     Running     5          20h   10.244.1.111    k8s1138027node   <none>           <none>
    csi-rbdplugin-provisioner-74d6966958-vwmms     5/5     Running     0          20h   10.244.3.80     k8s1138032node   <none>           <none>
    csi-rbdplugin-tqt7b                            3/3     Running     0          20h   172.16.138.31   k8s1138031node   <none>           <none>
    rook-ceph-mgr-a-855bf6985b-57vwp               1/1     Running     1          19h   10.244.1.108    k8s1138027node   <none>           <none>
    rook-ceph-mon-a-7894d78d65-2zqwq               1/1     Running     1          19h   10.244.1.110    k8s1138027node   <none>           <none>
    rook-ceph-mon-b-5bfc85976c-q5gdk               1/1     Running     0          19h   10.244.4.178    k8s1138033node   <none>           <none>
    rook-ceph-mon-c-7576dc5fbb-kj8rv               1/1     Running     0          19h   10.244.2.104    k8s1138031node   <none>           <none>
    rook-ceph-operator-5bd7d67784-5l5ss            1/1     Running     0          24h   10.244.2.13     k8s1138031node   <none>           <none>
    rook-ceph-osd-0-d9c5686c7-tfjh9                1/1     Running     0          19h   10.244.0.35     k8s1138026node   <none>           <none>
    rook-ceph-osd-1-9987ddd44-9hwvg                1/1     Running     0          19h   10.244.2.114    k8s1138031node   <none>           <none>
    rook-ceph-osd-2-f5df47f59-4zd8j                1/1     Running     1          19h   10.244.1.109    k8s1138027node   <none>           <none>
    rook-ceph-osd-3-5b7579d7dd-nfvgl               1/1     Running     0          19h   10.244.3.90     k8s1138032node   <none>           <none>
    rook-ceph-osd-prepare-k8s1138026node-cmk5j     0/1     Completed   0          19h   10.244.0.36     k8s1138026node   <none>           <none>
    rook-ceph-osd-prepare-k8s1138027node-nbm82     0/1     Completed   0          19h   10.244.1.103    k8s1138027node   <none>           <none>
    rook-ceph-osd-prepare-k8s1138031node-9gh87     0/1     Completed   0          19h   10.244.2.115    k8s1138031node   <none>           <none>
    rook-ceph-osd-prepare-k8s1138032node-nj7vm     0/1     Completed   0          19h   10.244.3.87     k8s1138032node   <none>           <none>
    rook-discover-4n25t                            1/1     Running     0          25h   10.244.2.5      k8s1138031node   <none>           <none>
    rook-discover-76h87                            1/1     Running     0          25h   10.244.0.25     k8s1138026node   <none>           <none>
    rook-discover-ghgnk                            1/1     Running     0          25h   10.244.4.5      k8s1138033node   <none>           <none>
    rook-discover-slvx8                            1/1     Running     0          25h   10.244.3.5      k8s1138032node   <none>           <none>
    rook-discover-tgb8v                            0/1     Error       0          25h   <none>          k8s1138027node   <none>           <none>
    [root@linuxba-node1 ceph]# kubectl get svc,ep -n rook-ceph
    NAME                               TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)             AGE
    service/csi-cephfsplugin-metrics   ClusterIP   10.96.36.5      <none>        8080/TCP,8081/TCP   20h
    service/csi-rbdplugin-metrics      ClusterIP   10.96.252.208   <none>        8080/TCP,8081/TCP   20h
    service/rook-ceph-mgr              ClusterIP   10.96.167.186   <none>        9283/TCP            19h
    service/rook-ceph-mgr-dashboard    ClusterIP   10.96.148.18    <none>        7000/TCP            19h
    service/rook-ceph-mon-a            ClusterIP   10.96.183.92    <none>        6789/TCP,3300/TCP   19h
    service/rook-ceph-mon-b            ClusterIP   10.96.201.107   <none>        6789/TCP,3300/TCP   19h
    service/rook-ceph-mon-c            ClusterIP   10.96.105.92    <none>        6789/TCP,3300/TCP   19h

    NAME                                 ENDPOINTS                                                            AGE
    endpoints/ceph.rook.io-block         <none>                                                               25h
    endpoints/csi-cephfsplugin-metrics   10.244.2.80:9081,10.244.3.81:9081,172.16.138.26:9081 + 11 more...    20h
    endpoints/csi-rbdplugin-metrics      10.244.1.111:9090,10.244.3.80:9090,172.16.138.26:9090 + 11 more...   20h
    endpoints/rook-ceph-mgr              10.244.1.108:9283                                                    19h
    endpoints/rook-ceph-mgr-dashboard    10.244.1.108:7000                                                    19h
    endpoints/rook-ceph-mon-a            10.244.1.110:3300,10.244.1.110:6789                                  19h
    endpoints/rook-ceph-mon-b            10.244.4.178:3300,10.244.4.178:6789                                  19h
    endpoints/rook-ceph-mon-c            10.244.2.104:3300,10.244.2.104:6789                                  19h
    endpoints/rook.io-block              <none>                                                               25h

2.4 Verify Ceph with the Rook toolbox

Deploy the Rook toolbox to Kubernetes with the following YAML:

vim rook-ceph-toolbox.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: rook-ceph-tools
      namespace: rook-ceph
      labels:
        app: rook-ceph-tools
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: rook-ceph-tools
      template:
        metadata:
          labels:
            app: rook-ceph-tools
        spec:
          dnsPolicy: ClusterFirstWithHostNet
          containers:
          - name: rook-ceph-tools
            image: rook/ceph:v1.1.0
            command: ["/tini"]
            args: ["-g", "--", "/usr/local/bin/toolbox.sh"]
            imagePullPolicy: IfNotPresent
            env:
              - name: ROOK_ADMIN_SECRET
                valueFrom:
                  secretKeyRef:
                    name: rook-ceph-mon
                    key: admin-secret
            securityContext:
              privileged: true
            volumeMounts:
              - mountPath: /dev
                name: dev
              - mountPath: /sys/bus
                name: sysbus
              - mountPath: /lib/modules
                name: libmodules
              - name: mon-endpoint-volume
                mountPath: /etc/rook
          # if hostNetwork: false, the "rbd map" command hangs, see https://github.com/rook/rook/issues/2021
          hostNetwork: true
          volumes:
            - name: dev
              hostPath:
                path: /dev
            - name: sysbus
              hostPath:
                path: /sys/bus
            - name: libmodules
              hostPath:
                path: /lib/modules
            - name: mon-endpoint-volume
              configMap:
                name: rook-ceph-mon-endpoints
                items:
                - key: data
                  path: mon-endpoints

    # Start the rook-ceph-tools pod
    kubectl create -f rook-ceph-toolbox.yaml
    # Wait for the toolbox pod to start
    kubectl -n rook-ceph get pod -l "app=rook-ceph-tools"
    # Once the toolbox is running, enter it
    kubectl -n rook-ceph exec -it $(kubectl -n rook-ceph get pod -l "app=rook-ceph-tools" -o jsonpath='{.items[0].metadata.name}') bash

Check the Ceph status after entering the toolbox:

    # View status using ceph command
    [root@linuxba-node5 /]# ceph -s
      cluster:
        id:     f3457013-139d-4dae-b380-fe86dc05dfaa
        health: HEALTH_OK

      services:
        mon: 3 daemons, quorum a,b,c (age 21h)
        mgr: a(active, since 21h)
        osd: 4 osds: 4 up (since 21h), 4 in (since 22h)

      data:
        pools:   0 pools, 0 pgs
        objects: 0 objects, 0 B
        usage:   4.0 GiB used, 792 GiB / 796 GiB avail
        pgs:

    [root@linuxba-node5 /]# ceph osd status
    +----+----------------+-------+-------+--------+---------+--------+---------+-----------+
    | id |      host      |  used | avail | wr ops | wr data | rd ops | rd data |   state   |
    +----+----------------+-------+-------+--------+---------+--------+---------+-----------+
    | 0  | k8s1138026node | 1026M |  197G |    0   |    0    |    0   |    0    | exists,up |
    | 1  | k8s1138031node | 1026M |  197G |    0   |    0    |    0   |    0    | exists,up |
    | 2  | k8s1138027node | 1026M |  197G |    0   |    0    |    0   |    0    | exists,up |
    | 3  | k8s1138032node | 1026M |  197G |    0   |    0    |    0   |    0    | exists,up |
    +----+----------------+-------+-------+--------+---------+--------+---------+-----------+
    [root@linuxba-node5 /]# ceph df
    RAW STORAGE:
        CLASS     SIZE        AVAIL       USED       RAW USED     %RAW USED
        hdd       796 GiB     792 GiB     10 MiB      4.0 GiB          0.50
        TOTAL     796 GiB     792 GiB     10 MiB      4.0 GiB          0.50

    POOLS:
        POOL     ID     STORED     OBJECTS     USED     %USED     MAX AVAIL
    [root@linuxba-node5 /]# rados df
    POOL_NAME USED OBJECTS CLONES COPIES MISSING_ON_PRIMARY UNFOUND DEGRADED RD_OPS RD WR_OPS WR USED COMPR UNDER COMPR

    total_objects    0
    total_used       4.0 GiB
    total_avail      792 GiB
    total_space      796 GiB
    [root@linuxba-node5 /]#

Note:

Custom settings placed in the rook-config-override ConfigMap are automatically mounted to /etc/ceph/ceph.conf in the Ceph pods, which makes custom configuration possible. (Managing settings with the Ceph CLI is recommended instead of this approach.)

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: rook-config-override
      namespace: rook-ceph
    data:
      config: |
        [global]
        osd crush update on start = false
        osd pool default size = 2

2.5 Exposing Ceph

Ceph is deployed inside Kubernetes; to reach it from outside, the relevant services, such as the dashboard and the Ceph monitors, have to be exposed.

2.5.1 Exposing the Ceph dashboard

Exposing the dashboard through an Ingress is recommended; for other methods, refer to the Kubernetes documentation.

vim rook-ceph-dashboard-ingress.yaml

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

annotations:

# cert-manager.io/cluster-issuer: letsencrypt-prod

# kubernetes.io/tls-acme: "true"

name: rook-ceph-mgr-dashboard

namespace: rook-ceph

spec:

rules:

- host: ceph-dashboard.linuxba.com

http:

paths:

- backend:

serviceName: rook-ceph-mgr-dashboard

servicePort: 7000

path: /

tls:

- hosts:

- ceph-dashboard.linuxba.com

secretName: tls-ceph-dashboard-linuxba-comGet dashboard password:

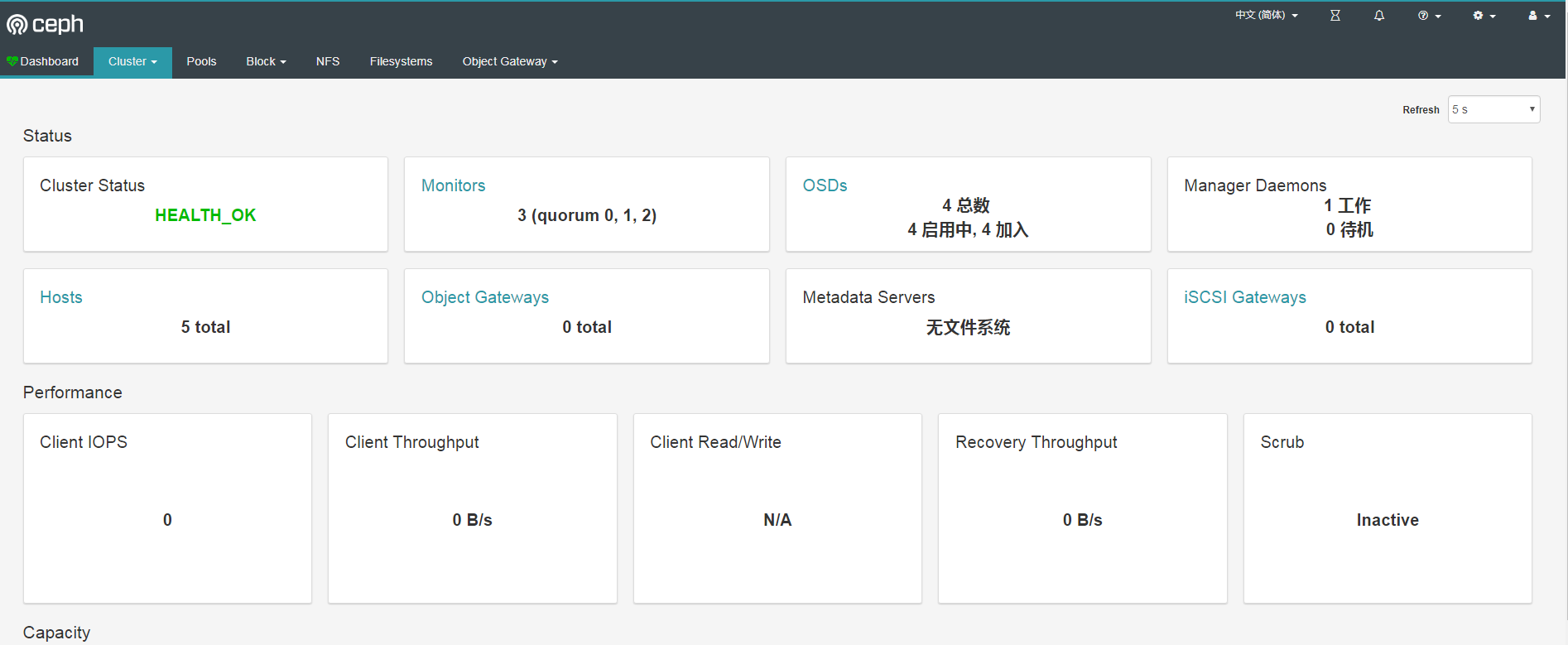

kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echoUser name admin, after login:
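Kubernetes stores Secret values base64-encoded, which is why the password command pipes the jsonpath output through base64 --decode. A minimal sketch of that round trip follows; the password is a made-up value, not one taken from a real cluster.

```shell
# Hypothetical value; a real cluster returns its own generated password.
sample_password='example-pass'

# What the API would hold in .data.password: the base64-encoded form.
encoded=$(printf '%s' "$sample_password" | base64)

# The same decode step used in the dashboard password command.
decoded=$(printf '%s' "$encoded" | base64 --decode)

echo "encoded: $encoded"
echo "decoded: $decoded"
```

The trailing `&& echo` in the real command only adds a newline, because the decoded password itself does not end with one.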

2.5.2 Exposing the Ceph monitor

This step only verifies whether the Ceph monitor can be reached from outside Kubernetes; the result shows that it cannot.

Create a new monitor service of type LoadBalancer so that it can be used from outside Kubernetes. Because I am using Alibaba Cloud Kubernetes and only want intranet load balancing, I add the following service:

vim rook-ceph-mon-svc.yaml

    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        service.beta.kubernetes.io/alibaba-cloud-loadbalancer-address-type: "intranet"
      labels:
        app: rook-ceph-mon
        mon_cluster: rook-ceph
        rook_cluster: rook-ceph
      name: rook-ceph-mon
      namespace: rook-ceph
    spec:
      ports:
      - name: msgr1
        port: 6789
        protocol: TCP
        targetPort: 6789
      - name: msgr2
        port: 3300
        protocol: TCP
        targetPort: 3300
      selector:
        app: rook-ceph-mon
        mon_cluster: rook-ceph
        rook_cluster: rook-ceph
      sessionAffinity: None
      type: LoadBalancer

Note:

- For self-built Kubernetes clusters, MetalLB is recommended for providing LoadBalancer-type load balancing.

- Rook currently does not support connecting to the Ceph monitors from outside Kubernetes.

3. Configure rook-ceph

Configure ceph so that kubernetes can use dynamic volume management.

vim rook-ceph-block-pool.yaml

    apiVersion: ceph.rook.io/v1
    kind: CephBlockPool
    metadata:
      name: replicapool
      namespace: rook-ceph
    spec:
      failureDomain: host
      replicated:
        size: 2
      # Sets up the CRUSH rule for the pool to distribute data only on the specified device class.
      # If left empty or unspecified, the pool will use the cluster's default CRUSH root, which usually distributes data over all OSDs, regardless of their class.
      # deviceClass: hdd

vim rook-ceph-filesystem.yaml

    apiVersion: ceph.rook.io/v1
    kind: CephFilesystem
    metadata:
      name: cephfs-k8s
      namespace: rook-ceph
    spec:
      metadataPool:
        replicated:
          size: 3
      dataPools:
        - replicated:
            size: 3
      metadataServer:
        activeCount: 1
        activeStandby: true

vim rook-ceph-storage-class.yaml

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: ceph-rbd
    provisioner: ceph.rook.io/block
    parameters:
      blockPool: replicapool
      # The value of "clusterNamespace" MUST be the same as the one in which your rook cluster exist
      clusterNamespace: rook-ceph
      # Specify the filesystem type of the volume. If not specified, it will use `ext4`.
      fstype: xfs
    # Optional, default reclaimPolicy is "Delete". Other options are: "Retain", "Recycle" as documented in https://kubernetes.io/docs/concepts/storage/storage-classes/
    reclaimPolicy: Retain
    # Optional, if you want to add dynamic resize for PVC. Works for Kubernetes 1.14+
    # For now only ext3, ext4, xfs resize support provided, like in Kubernetes itself.
    allowVolumeExpansion: true
    ---
    # apiVersion: storage.k8s.io/v1
    # kind: StorageClass
    # metadata:
    #   name: cephfs
    # # Change "rook-ceph" provisioner prefix to match the operator namespace if needed
    # provisioner: rook-ceph.cephfs.csi.ceph.com
    # parameters:
    #   # clusterID is the namespace where operator is deployed.
    #   clusterID: rook-ceph
    #
    #   # CephFS filesystem name into which the volume shall be created
    #   fsName: cephfs-k8s
    #
    #   # Ceph pool into which the volume shall be created
    #   # Required for provisionVolume: "true"
    #   pool: cephfs-k8s-data0
    #
    #   # Root path of an existing CephFS volume
    #   # Required for provisionVolume: "false"
    #   # rootPath: /absolute/path
    #
    #   # The secrets contain Ceph admin credentials. These are generated automatically by the operator
    #   # in the same namespace as the cluster.
    #   csi.storage.k8s.io/provisioner-secret-name: rook-csi-cephfs-provisioner
    #   csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
    #   csi.storage.k8s.io/node-stage-secret-name: rook-csi-cephfs-node
    #   csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph
    #
    # reclaimPolicy: Retain

Check the results in the toolbox:

    [root@linuxba-node5 /]# ceph osd pool ls
    replicapool
    cephfs-k8s-metadata
    cephfs-k8s-data0
    [root@linuxba-node5 /]# ceph fs ls
    name: cephfs-k8s, metadata pool: cephfs-k8s-metadata, data pools: [cephfs-k8s-data0 ]
    [root@linuxba-node5 /]#
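As a rough sanity check on the replica sizes chosen for these pools, usable capacity can be estimated by dividing the raw available space by the replica count. The sketch below uses the 792 GiB reported by ceph df earlier; the helper name usable_gib is made up for illustration, and the estimate ignores Ceph's full ratios and metadata overhead.

```shell
# Rough usable capacity of a replicated pool: raw space / replica count.
# Hypothetical helper; ignores full ratios and metadata overhead.
usable_gib() {
    raw_gib=$1
    replicas=$2
    echo $(( raw_gib / replicas ))
}

# 792 GiB available is taken from the ceph df output earlier in the post.
echo "replicapool (size 2): about $(usable_gib 792 2) GiB"
echo "cephfs pools (size 3): about $(usable_gib 792 3) GiB"
```

All pools share the same OSDs, so these figures are upper bounds for each pool on its own and cannot be added together.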

4. Verify Ceph with dynamic volumes in Kubernetes

Ceph RBD with the flex driver was validated successfully.

    [root@linuxba-node1 ceph]# kubectl get pod
    NAME                            READY   STATUS    RESTARTS   AGE
    curl-66bdcf564-9hhrt            1/1     Running   0          23h
    curl-66bdcf564-ghq5s            1/1     Running   0          23h
    curl-66bdcf564-sbv8b            1/1     Running   1          23h
    curl-66bdcf564-t9gnc            1/1     Running   0          23h
    curl-66bdcf564-v5kfx            1/1     Running   0          23h
    nginx-rbd-dy-67d8bbfcb6-vnctl   1/1     Running   0          21s
    [root@linuxba-node1 ceph]# kubectl exec -it nginx-rbd-dy-67d8bbfcb6-vnctl /bin/bash
    root@nginx-rbd-dy-67d8bbfcb6-vnctl:/# ps -ef
    bash: ps: command not found
    root@nginx-rbd-dy-67d8bbfcb6-vnctl:/# df -h
    Filesystem      Size  Used Avail Use% Mounted on
    overlay         197G  9.7G  179G   6% /
    tmpfs            64M     0   64M   0% /dev
    tmpfs            32G     0   32G   0% /sys/fs/cgroup
    /dev/vda1       197G  9.7G  179G   6% /etc/hosts
    shm              64M     0   64M   0% /dev/shm
    /dev/rbd0      1014M   33M  982M   4% /usr/share/nginx/html
    tmpfs            32G   12K   32G   1% /run/secrets/kubernetes.io/serviceaccount
    tmpfs            32G     0   32G   0% /proc/acpi
    tmpfs            32G     0   32G   0% /proc/scsi
    tmpfs            32G     0   32G   0% /sys/firmware
    root@nginx-rbd-dy-67d8bbfcb6-vnctl:/# cd /usr/share/nginx/html/
    root@nginx-rbd-dy-67d8bbfcb6-vnctl:/usr/share/nginx/html# ls
    root@nginx-rbd-dy-67d8bbfcb6-vnctl:/usr/share/nginx/html# ls -la
    total 4
    drwxr-xr-x 2 root root    6 Nov  5 08:47 .
    drwxr-xr-x 3 root root 4096 Oct 23 00:25 ..
    root@nginx-rbd-dy-67d8bbfcb6-vnctl:/usr/share/nginx/html# echo a > test.html
    root@nginx-rbd-dy-67d8bbfcb6-vnctl:/usr/share/nginx/html# ls -l
    total 4
    -rw-r--r-- 1 root root 2 Nov  5 08:47 test.html
    root@nginx-rbd-dy-67d8bbfcb6-vnctl:/usr/share/nginx/html#
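The PVC manifest behind this test is not shown in the post. A minimal sketch of what such a claim could look like is given below. The file name rook-ceph-rbd-pvc.yaml and the claim name nginx-rbd-pvc are assumptions, and the 1Gi size is only inferred from the 1014M /dev/rbd0 device in the df output; the storage class name ceph-rbd comes from the StorageClass defined earlier.

```shell
# Write a hypothetical PVC manifest; file and claim names are assumed.
cat > rook-ceph-rbd-pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-rbd-pvc
spec:
  storageClassName: ceph-rbd   # StorageClass created in section 3
  accessModes:
    - ReadWriteOnce            # RBD block volumes are mounted by one node
  resources:
    requests:
      storage: 1Gi             # inferred from the 1014M device in df -h
EOF

# Apply it once the file looks right (requires cluster access):
# kubectl apply -f rook-ceph-rbd-pvc.yaml
```

A deployment would then reference the claim by name in a persistentVolumeClaim volume and mount it at /usr/share/nginx/html, as the df output suggests.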

The CephFS validation failed: the pod stayed stuck waiting for its volume to be mounted, as detailed below.

5. Fix rook-ceph csi-cephfs failing to mount on Alibaba Cloud Kubernetes in flex mode

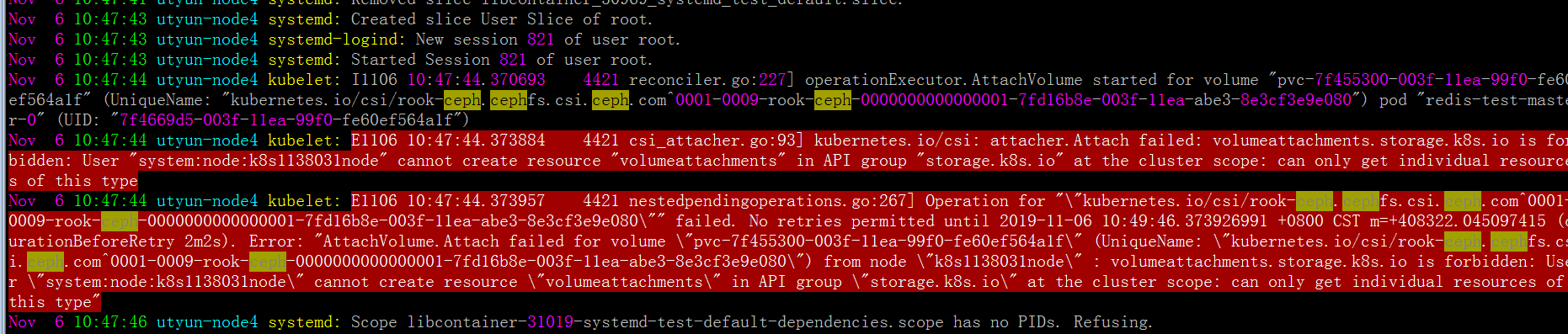

Check /var/log/messages on every node that runs a pod using the CephFS PVC.

Going by the log messages, I first assumed the permissions were insufficient:

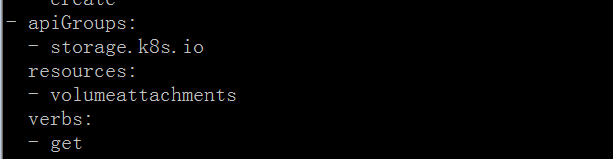

kubectl get clusterrole system:node -oyaml

After adding permissions to this clusterrole, the error remained the same.

Then I remembered that the cephfs storageclass was created with the CSI plug-in.

Alibaba Cloud Kubernetes supports only one of flex or CSI per cluster, and my cluster was created with the flex plug-in.

In flex plug-in mode, the kubelet parameter enable-controller-attach-detach on the cluster nodes is false.

To switch to CSI mode, you have to change this parameter to true yourself.

So I went to the node hosting the pod stuck in ContainerCreating.

Run vim /etc/systemd/system/kubelet.service.d/10-kubeadm.conf, change enable-controller-attach-detach to true, then restart kubelet with systemctl daemon-reload && systemctl restart kubelet; the pod then mounts normally.

It can be concluded that the kubelet parameter enable-controller-attach-detach being false on Alibaba Cloud Kubernetes is what prevents CSI from being used.
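The manual vim edit described above could also be scripted with sed. The sketch below works on a throwaway sample file so it is safe to run anywhere; the drop-in content, including the KUBELET_EXTRA_ARGS variable, is a hypothetical stand-in for whatever the real 10-kubeadm.conf on an Alibaba Cloud node contains.

```shell
# Work on a throwaway copy; the real file is
# /etc/systemd/system/kubelet.service.d/10-kubeadm.conf.
conf=$(mktemp)

# Hypothetical drop-in content; real files carry many more flags.
cat > "$conf" <<'EOF'
[Service]
Environment="KUBELET_EXTRA_ARGS=--enable-controller-attach-detach=false"
EOF

# Flip only this flag and keep a .bak copy of the original.
sed -i.bak \
    's/--enable-controller-attach-detach=false/--enable-controller-attach-detach=true/' \
    "$conf"

# Show the resulting flag line.
grep -- '--enable-controller-attach-detach' "$conf"

# On a real node, follow with:
# systemctl daemon-reload && systemctl restart kubelet
```

Matching the full flag=value string keeps the substitution from touching any other occurrence of "false" in the file.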

Modifying this parameter is obviously impractical. The flex plug-in was chosen when purchasing the Alibaba Cloud managed Kubernetes, which was supposed to need no kubelet maintenance; changing this parameter would mean maintaining the kubelet on every node. What else can be done without modifying the kubelet parameter?

Previously I used the provisioner provided by kubernetes-incubator/external-storage/ceph; see my earlier article:

https://blog.51cto.com/ygqygq2/2163656

5.1 Create cephfs-provisioner

First, write the string that follows key in /etc/ceph/keyring inside the toolbox to the file /tmp/ceph.client.admin.secret, create a secret from it, and start cephfs-provisioner.

    kubectl create secret generic ceph-admin-secret --from-file=/tmp/ceph.client.admin.secret --namespace=rook-ceph
    kubectl apply -f cephfs/rbac/
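The key extraction step mentioned above is done by hand in the post. A minimal sketch of automating it with awk follows, assuming the keyring has the usual "key = value" line; the sample keyring and its key are placeholders, and temporary files are used here instead of /tmp/ceph.client.admin.secret so nothing real is overwritten.

```shell
# Hypothetical keyring content; the key below is a placeholder.
keyring=$(mktemp)
cat > "$keyring" <<'EOF'
[client.admin]
key = AQBplaceholderKeyForIllustrationOnly==
EOF

# Print the value after "key =" with no trailing newline, so the
# secret created from the file holds exactly the key string.
secret_file=$(mktemp)
awk '$1 == "key" { printf "%s", $3 }' "$keyring" > "$secret_file"

cat "$secret_file"; echo
```

Writing the key without a trailing newline matters because kubectl create secret --from-file stores the file content byte for byte.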

Wait for it to start successfully:

    [root@linuxba-node1 ceph]# kubectl get pod -n rook-ceph|grep cephfs-provisioner
    cephfs-provisioner-5f64bb484b-24bqf   1/1   Running   0   2m

Then create the cephfs storageclass.

vim cephfs-storageclass.yaml

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: cephfs
    provisioner: ceph.com/cephfs
    reclaimPolicy: Retain
    parameters:
      # svc IP port of ceph monitor
      monitors: 10.96.201.107:6789,10.96.105.92:6789,10.96.183.92:6789
      adminId: admin
      adminSecretName: ceph-admin-secret
      adminSecretNamespace: "rook-ceph"
      claimRoot: /volumes/kubernetes
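The monitors value is the list of monitor service ClusterIPs with port 6789. A small sketch of deriving it from the kubectl get svc output shown earlier is given below; the sample lines are copied from that output, and on a live cluster the same awk filter could be fed from kubectl instead.

```shell
# Sample lines copied from the kubectl get svc output earlier in the post.
svc_output='service/rook-ceph-mon-a ClusterIP 10.96.183.92 <none> 6789/TCP,3300/TCP 19h
service/rook-ceph-mon-b ClusterIP 10.96.201.107 <none> 6789/TCP,3300/TCP 19h
service/rook-ceph-mon-c ClusterIP 10.96.105.92 <none> 6789/TCP,3300/TCP 19h'

# Take column 3 (the ClusterIP), append the msgr1 port, join with commas.
monitors=$(printf '%s\n' "$svc_output" \
    | awk '/rook-ceph-mon-/ { printf "%s%s:6789", sep, $3; sep = "," }')

echo "monitors: $monitors"
```

This yields the same three addresses as the storageclass, listed in service-name order rather than the order used in the YAML.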

The Kubernetes nodes also need ceph-common and ceph-fuse installed.

Use the Alibaba Cloud Ceph yum repository: cat /etc/yum.repos.d/ceph.repo

    [Ceph]
    name=Ceph packages for $basearch
    baseurl=http://mirrors.cloud.aliyuncs.com/ceph/rpm-nautilus/el7/$basearch
    enabled=1
    gpgcheck=1
    type=rpm-md
    gpgkey=http://mirrors.cloud.aliyuncs.com/ceph/keys/release.asc

    [Ceph-noarch]
    name=Ceph noarch packages
    baseurl=http://mirrors.cloud.aliyuncs.com/ceph/rpm-nautilus/el7/noarch
    enabled=1
    gpgcheck=1
    type=rpm-md
    gpgkey=http://mirrors.cloud.aliyuncs.com/ceph/keys/release.asc

    [ceph-source]
    name=Ceph source packages
    baseurl=http://mirrors.cloud.aliyuncs.com/ceph/rpm-nautilus/el7/SRPMS
    enabled=1
    gpgcheck=1
    type=rpm-md
    gpgkey=http://mirrors.cloud.aliyuncs.com/ceph/keys/release.asc

5.2 Verify cephfs

Repeat the earlier test; it now works properly.

    kubectl delete -f rook-ceph-cephfs-nginx.yaml -f rook-ceph-cephfs-pvc.yaml
    kubectl apply -f rook-ceph-cephfs-pvc.yaml
    kubectl apply -f rook-ceph-cephfs-nginx.yaml

    [root@linuxba-node1 ceph]# kubectl get pod|grep cephfs
    nginx-cephfs-dy-5f47b4cbcf-txtf9   1/1   Running   0   3m50s
    [root@linuxba-node1 ceph]# kubectl exec -it nginx-cephfs-dy-5f47b4cbcf-txtf9 /bin/bash
    root@nginx-cephfs-dy-5f47b4cbcf-txtf9:/# df -h
    Filesystem      Size  Used Avail Use% Mounted on
    overlay         197G  9.9G  179G   6% /
    tmpfs            64M     0   64M   0% /dev
    tmpfs            32G     0   32G   0% /sys/fs/cgroup
    /dev/vda1       197G  9.9G  179G   6% /etc/hosts
    shm              64M     0   64M   0% /dev/shm
    ceph-fuse       251G     0  251G   0% /usr/share/nginx/html
    tmpfs            32G   12K   32G   1% /run/secrets/kubernetes.io/serviceaccount
    tmpfs            32G     0   32G   0% /proc/acpi
    tmpfs            32G     0   32G   0% /proc/scsi
    tmpfs            32G     0   32G   0% /sys/firmware
    root@nginx-cephfs-dy-5f47b4cbcf-txtf9:/# echo test > /usr/share/nginx/html/test.html

6. Summary

- The Ceph monitor is not accessible from outside Kubernetes; given this limitation, deploying Ceph directly on the machines is much better.

- rook-ceph provides both flex-driven and CSI-driven RBD storageclasses, while CephFS currently supports only a CSI-driven storageclass. For using CephFS volumes with the flex driver, see the example kube-registry.yaml.

- Finally, the YAML files used above are available here:

https://github.com/ygqygq2/kubernetes/tree/master/kubernetes-yaml/rook-ceph

Reference material:

[1] https://rook.io/docs/rook/v1.1/ceph-quickstart.html

[2] https://rook.io/docs/rook/v1.1/helm-operator.html

[3] https://rook.io/docs/rook/v1.1/ceph-toolbox.html

[4] https://rook.io/docs/rook/v1.1/ceph-advanced-configuration.html#custom-cephconf-settings

[5] https://rook.io/docs/rook/v1.1/ceph-pool-crd.html

[6] https://rook.io/docs/rook/v1.1/ceph-block.html

[7] https://rook.io/docs/rook/v1.1/ceph-filesystem.html

[8] https://github.com/kubernetes-incubator/external-storage/tree/master/ceph