Background

Rancher HA can be deployed in several ways:

- Helm HA installation: Rancher is deployed into an existing Kubernetes cluster. Rancher stores its data in the cluster's etcd and relies on Kubernetes scheduling for high availability.

- RKE HA installation: the RKE tool installs a separate Kubernetes cluster dedicated to deploying and running Rancher HA. RKE HA installation is only supported for Rancher v2.0.8 and earlier; versions after v2.0.8 are installed with Helm.

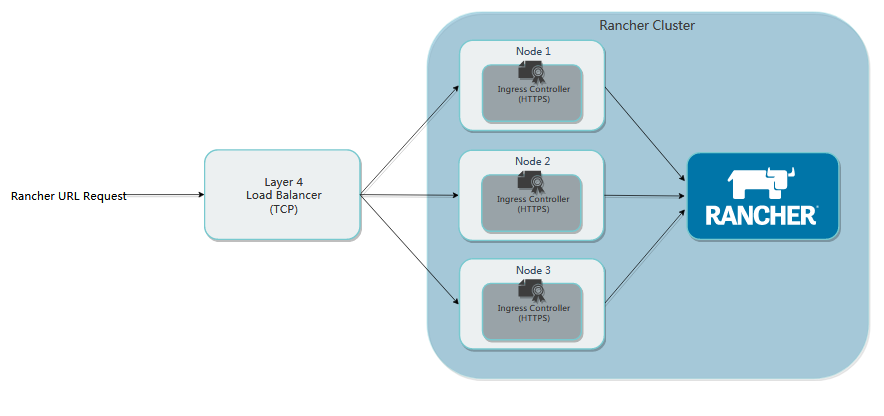

This article installs Rancher HA with Helm on an existing Kubernetes cluster and uses Layer 4 (TCP) load balancing.

Adding the Chart Repository

Add the Rancher chart repository with the helm repo add command.

Rancher tag and chart version selection reference: https://www.cnrancher.com/docs/rancher/v2.x/cn/installation/server-tags/

# Replace <CHART_REPO> with the Helm repository branch (latest or stable) that you want to use; this example uses stable

helm repo add rancher-stable https://releases.rancher.com/server-charts/stable
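The <CHART_REPO> substitution described above can be sketched as a small shell helper. The function name rancher_chart_repo_url is hypothetical and only illustrates the URL pattern used in the command above; the script prints the helm command rather than running it.

```shell
#!/bin/sh
# Hypothetical helper: build the Rancher chart repository URL for a branch.
# Only the two branches named in the text (latest, stable) are accepted.
rancher_chart_repo_url() {
  branch="$1"
  case "$branch" in
    latest|stable)
      printf 'https://releases.rancher.com/server-charts/%s\n' "$branch"
      ;;
    *)
      echo "unsupported branch: $branch (expected latest or stable)" >&2
      return 1
      ;;
  esac
}

# Default to the stable branch, as in this article.
CHART_REPO="${CHART_REPO:-stable}"
REPO_URL="$(rancher_chart_repo_url "$CHART_REPO")"

# Print the command to run; copy it into a shell where helm is installed.
echo "helm repo add rancher-${CHART_REPO} ${REPO_URL}"
```

After the repository has been added, helm repo update refreshes the local chart index and helm repo list shows the configured repositories.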

Installing Rancher Server with Self-Signed Certificates

By default, Rancher server requires SSL/TLS to be enabled for security. The SSL certificates are passed to Rancher server or the Ingress Controller as Kubernetes Secrets. First create the certificate Secrets so that Rancher and the Ingress Controller can use them.

1. Generating Self-Signed Certificates

Script: one-click self-signed certificate generation script (create_self-signed-cert.sh)

# Run the script to generate the certificates

sh create_self-signed-cert.sh --ssl-domain=rancher.sumapay.com --ssl-trusted-ip=172.16.1.21,172.16.1.22 --ssl-size=2048 --ssl-date=3650
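The contents of create_self-signed-cert.sh are not reproduced in this article. As a rough illustration of what such a script has to do, here is a minimal openssl sketch; it is an assumption about the general technique, not the script's actual implementation. The output directory, the CA common name, and the file names cakey.pem, tls.csr and san.ext are hypothetical, while tls.crt, tls.key and cacerts.pem match the files used in the next step.

```shell
#!/bin/sh
set -eu

# Values taken from the command above; OUT_DIR is a hypothetical location.
SSL_DOMAIN="rancher.sumapay.com"
SSL_TRUSTED_IP="172.16.1.21,172.16.1.22"
SSL_SIZE=2048
SSL_DATE=3650
OUT_DIR="${OUT_DIR:-./self_CA_example}"
mkdir -p "$OUT_DIR"

# Build the subjectAltName value: the domain plus every trusted IP.
SAN="DNS:${SSL_DOMAIN}"
OLD_IFS=$IFS
IFS=,
for ip in $SSL_TRUSTED_IP; do
  SAN="${SAN},IP:${ip}"
done
IFS=$OLD_IFS

# 1. Self-signed CA key and certificate (the CN is an arbitrary example).
openssl genrsa -out "$OUT_DIR/cakey.pem" "$SSL_SIZE"
openssl req -x509 -new -nodes -sha256 -key "$OUT_DIR/cakey.pem" \
  -days "$SSL_DATE" -subj "/CN=example-rancher-ca" -out "$OUT_DIR/cacerts.pem"

# 2. Server key and certificate signing request.
openssl genrsa -out "$OUT_DIR/tls.key" "$SSL_SIZE"
openssl req -new -key "$OUT_DIR/tls.key" -subj "/CN=${SSL_DOMAIN}" \
  -out "$OUT_DIR/tls.csr"

# 3. Sign the request with the CA, adding the SAN extension.
printf 'subjectAltName = %s\n' "$SAN" > "$OUT_DIR/san.ext"
openssl x509 -req -sha256 -in "$OUT_DIR/tls.csr" \
  -CA "$OUT_DIR/cacerts.pem" -CAkey "$OUT_DIR/cakey.pem" -CAcreateserial \
  -days "$SSL_DATE" -extfile "$OUT_DIR/san.ext" -out "$OUT_DIR/tls.crt"

# 4. Check that the server certificate chains to the CA.
openssl verify -CAfile "$OUT_DIR/cacerts.pem" "$OUT_DIR/tls.crt"
```

The subjectAltName extension matters here because clients validate the host name or IP address they connect to against it, which is why both the domain and the trusted IPs are listed.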

2. Using kubectl to create the TLS Secrets

# Create the namespace

[root@k8s-master03 ~]# kubectl create namespace cattle-system
namespace/cattle-system created

# Secret for the server certificate and private key

[root@k8s-master03 self_CA]# kubectl -n cattle-system create secret tls tls-rancher-ingress --cert=./tls.crt --key=./tls.key
secret/tls-rancher-ingress created

# Secret for the CA certificate

[root@k8s-master03 self_CA]# kubectl -n cattle-system create secret generic tls-ca --from-file=cacerts.pem
secret/tls-ca created
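The kubectl commands above read tls.crt, tls.key and cacerts.pem from the current directory, and --from-file=cacerts.pem uses the file name as the key inside the Secret. A small pre-flight check, sketched below, can catch a missing or misnamed file before the Secrets are created. The function name missing_cert_files and the CERT_DIR variable are hypothetical.

```shell
#!/bin/sh
# Hypothetical pre-flight helper: print the name of every certificate file
# that the kubectl commands expect but that is missing from a directory.
missing_cert_files() {
  dir="$1"
  for f in tls.crt tls.key cacerts.pem; do
    [ -f "$dir/$f" ] || echo "$f"
  done
}

# Directory holding the generated certificates; defaults to the current one.
CERT_DIR="${CERT_DIR:-.}"
missing="$(missing_cert_files "$CERT_DIR")"

if [ -n "$missing" ]; then
  echo "missing in $CERT_DIR:" $missing >&2
else
  echo "all certificate files present in $CERT_DIR"
fi
```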

3. Installing Rancher server

# Install Rancher HA with helm

[root@k8s-master03 ~]# helm install rancher-stable/rancher --name rancher2 --namespace cattle-system --set hostname=rancher.sumapay.com --set ingress.tls.source=secret --set privateCA=true
NAME:   rancher2
LAST DEPLOYED: Fri Apr 26 14:03:51 2019
NAMESPACE: cattle-system
STATUS: DEPLOYED

RESOURCES:
==> v1/ClusterRoleBinding
NAME      AGE
rancher2  0s

==> v1/Deployment
NAME      READY  UP-TO-DATE  AVAILABLE  AGE
rancher2  0/3    3           0          0s

==> v1/Pod(related)
NAME                      READY  STATUS             RESTARTS  AGE
rancher-55c884bbf7-2xqpl  0/1    ContainerCreating  0         0s
rancher-55c884bbf7-bqvjh  0/1    ContainerCreating  0         0s
rancher-55c884bbf7-hhlvh  0/1    ContainerCreating  0         0s

==> v1/Service
NAME      TYPE       CLUSTER-IP      EXTERNAL-IP  PORT(S)  AGE
rancher2  ClusterIP  10.110.148.105  <none>       80/TCP   0s

==> v1/ServiceAccount
NAME      SECRETS  AGE
rancher2  1        0s

==> v1beta1/Ingress
NAME      HOSTS                ADDRESS  PORTS    AGE
rancher2  rancher.sumapay.com           80, 443  0s

NOTES:
Rancher Server has been installed.

NOTE: Rancher may take several minutes to fully initialize. Please standby while Certificates are being issued and Ingress comes up.

Check out our docs at https://rancher.com/docs/rancher/v2.x/en/

Browse to https://rancher.sumapay.com

Happy Containering!
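The command above uses Helm 2 syntax. Helm 3 removed the --name flag and takes the release name as the first positional argument instead. The sketch below assembles an equivalent Helm 3 command from the same values, assuming Helm 3 is in use and the cattle-system namespace already exists. The function name helm3_install_cmd is hypothetical, and the script only prints the command.

```shell
#!/bin/sh
# Values taken from the Helm 2 command in this article.
RELEASE="rancher2"
NAMESPACE="cattle-system"
RANCHER_HOSTNAME="rancher.sumapay.com"

# Hypothetical helper: print the Helm 3 form of the install command.
# Arguments: release name, namespace, Rancher host name.
helm3_install_cmd() {
  printf 'helm install %s rancher-stable/rancher --namespace %s --set hostname=%s --set ingress.tls.source=secret --set privateCA=true\n' \
    "$1" "$2" "$3"
}

helm3_install_cmd "$RELEASE" "$NAMESPACE" "$RANCHER_HOSTNAME"
```

The --set values are unchanged: ingress.tls.source=secret tells the chart to use the tls-rancher-ingress Secret, and privateCA=true tells it to use the CA certificate stored in the tls-ca Secret.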

# Verify the created resources

[root@k8s-master03 ~]# kubectl get ns
NAME                 STATUS   AGE
cattle-global-data   Active   2d5h
cattle-system        Active   2d5h

[root@k8s-master03 ~]# kubectl get ingress -n cattle-system
NAME       HOSTS                 ADDRESS   PORTS     AGE
rancher2   rancher.sumapay.com             80, 443   57m

[root@k8s-master03 ~]# kubectl get service -n cattle-system
NAME       TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
rancher2   ClusterIP   10.111.16.80   <none>        80/TCP    54m

[root@k8s-master03 ~]# kubectl get serviceaccount -n cattle-system
NAME       TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
rancher2   ClusterIP   10.111.16.80   <none>        80/TCP    51m

[root@k8s-master03 ~]# kubectl get ClusterRoleBinding -n cattle-system -l app=rancher2 -o wide
NAME       AGE   ROLE                        USERS   GROUPS   SERVICEACCOUNTS
rancher2   58m   ClusterRole/cluster-admin                    cattle-system/rancher2

[root@k8s-master03 ~]# kubectl get pods -n cattle-system
NAME                                    READY   STATUS    RESTARTS   AGE
cattle-cluster-agent-594b8f79bb-pgmdt   1/1     Running   5          2d2h
cattle-node-agent-lg44f                 1/1     Running   0          2d2h
cattle-node-agent-zgdms                 1/1     Running   5          2d2h
rancher2-9774897c-622sc                 1/1     Running   0          50m
rancher2-9774897c-czxxx                 1/1     Running   0          50m
rancher2-9774897c-sm2n5                 1/1     Running   0          50m

[root@k8s-master03 ~]# kubectl get deployment -n cattle-system
NAME                   READY   UP-TO-DATE   AVAILABLE   AGE
cattle-cluster-agent   1/1     1            1           2d4h
rancher2               3/3     3            3           55m
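The deployment is ready when the READY column of kubectl get deployment shows the same number on both sides, for example 3/3. The check can be scripted as in the sketch below. The function name replicas_ready is hypothetical, and the kubectl call is skipped when kubectl is not installed. kubectl -n cattle-system rollout status deployment/rancher2 is a built-in alternative that waits for the rollout to finish.

```shell
#!/bin/sh
# Hypothetical helper: succeed when a READY value such as "3/3" shows that
# every desired replica is ready; fail for "0/3", "0/0", or empty input.
replicas_ready() {
  ready="${1%/*}"     # part before the slash
  desired="${1#*/}"   # part after the slash
  [ -n "$ready" ] && [ "$ready" = "$desired" ] && [ "$desired" != "0" ]
}

if command -v kubectl >/dev/null 2>&1; then
  # Second column of the deployment listing is READY.
  ready_col="$(kubectl -n cattle-system get deployment rancher2 --no-headers 2>/dev/null | awk '{print $2}')"
  if replicas_ready "$ready_col"; then
    echo "rancher2 is ready ($ready_col)"
  else
    echo "rancher2 is not ready yet (${ready_col:-no data})"
  fi
fi
```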

4. Adding host aliases (/etc/hosts) to the Agent Pods

If there is no internal DNS server and the Rancher server domain name is resolved only through /etc/hosts entries, then regardless of how the K8S cluster is created (custom, import, host driver, etc.), the cattle-cluster-agent Pod and the cattle-node-agent Pods cannot find the Rancher server through DNS once the cluster is running, and therefore cannot communicate with it.

Solution

Adding host aliases (/etc/hosts entries) to the cattle-cluster-agent Pod and the cattle-node-agent Pods lets them communicate normally, provided that the IP addresses are reachable from the Pods.

# cattle-cluster-agent pod

kubectl -n cattle-system \
  patch deployments cattle-cluster-agent --patch '{
    "spec": {
      "template": {
        "spec": {
          "hostAliases": [
            {
              "hostnames": [
                "rancher.sumapay.com"
              ],
              "ip": "Layer 4 load balancer address"
            }
          ]
        }
      }
    }
  }'

# cattle-node-agent pod

kubectl -n cattle-system \
  patch daemonsets cattle-node-agent --patch '{
    "spec": {
      "template": {
        "spec": {
          "hostAliases": [
            {
              "hostnames": [
                "rancher.sumapay.com"
              ],
              "ip": "Layer 4 load balancer address"
            }
          ]
        }
      }
    }
  }'

Rancher HA is now deployed. Because the Rancher service is not exposed as a NodePort, it cannot be accessed directly until an ingress controller is deployed.

For ingress controller deployment, see "Deployment and use of traefik".
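The two host alias patches in step 4 differ only in the target resource, so the patch body can be generated once and reused. The following is a minimal sketch: the function name host_alias_patch is hypothetical, and 192.0.2.10 is a documentation placeholder that must be replaced with the real Layer 4 load balancer address. The script prints the kubectl commands rather than running them.

```shell
#!/bin/sh
# Hypothetical helper: print a JSON patch that adds one hostAliases entry
# to a Pod template. Arguments: host name, IP address.
host_alias_patch() {
  alias_host="$1"
  alias_ip="$2"
  printf '{"spec":{"template":{"spec":{"hostAliases":[{"hostnames":["%s"],"ip":"%s"}]}}}}\n' \
    "$alias_host" "$alias_ip"
}

# Placeholder address (assumption); set LB_IP to the Layer 4 load balancer IP.
LB_IP="${LB_IP:-192.0.2.10}"
PATCH="$(host_alias_patch rancher.sumapay.com "$LB_IP")"

# Print the two patch commands from step 4 with the generated body.
echo "kubectl -n cattle-system patch deployments cattle-cluster-agent --patch '$PATCH'"
echo "kubectl -n cattle-system patch daemonsets cattle-node-agent --patch '$PATCH'"
```

Generating the body from one function keeps the host name and IP address identical in both patches, which avoids the agents resolving the Rancher server to different addresses.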

References:

https://www.cnrancher.com/docs/rancher/v2.x/cn/installation/ha-install/helm-rancher/tcp-l4/rancher-install/