Blog Outline:

1. Overview of Varnish

1. Introduction to Varnish

2. Differences between Varnish and Squid

3. How Varnish works

4. Varnish architecture

5. Varnish Configuration

6. Built-in preset variables in VCL

7. Subprograms of VCL

8. Specific Function Statements

9. return statement

10. Steps for processing Varnish requests

11. Grace mode of Varnish

2. Install Varnish

1. Client-side cache access test

2. Server-side cache purge test

3. Configure the HTTP server to log the client's IP address

1. Overview of Varnish

1. Introduction to Varnish

Varnish is a high-performance, open-source reverse proxy server and HTTP accelerator. It uses a modern software architecture designed to work closely with current hardware. Compared with the traditional Squid, Varnish offers higher performance, faster speed and easier management. Many large websites are now trying to replace Squid with Varnish, which is the main reason for Varnish's rapid growth.

Main features of Varnish:

- (1) Cache storage location: objects can be cached either in memory or on disk;

- (2) Log storage: logs are kept in shared memory;

- (3) Support for virtual memory;

- (4) Precise time management, i.e. control over the time attributes (such as the TTL) of cached objects;

- (5) State engine architecture: different cache and proxy tasks are processed by different engines (states);

- (6) Cache management: cached data is managed with a binary heap so that expired objects are cleaned up promptly;

2. Differences between Varnish and Squid

Similarities:

- Both are reverse proxy servers;

- Both are open source software;

Advantages of Varnish:

- (1) Stability: under the same load, Squid servers fail more often than Varnish servers and need frequent restarts;

- (2) Faster access: Varnish serves all cached data directly from memory, while Squid reads it from the hard disk;

- (3) More concurrent connections: Varnish establishes and releases TCP connections much faster than Squid;

Defects of Varnish:

- (1) When the Varnish process restarts, all cached data is released from memory and every request is sent to the back-end server, which puts heavy pressure on the back end under high concurrency;

- (2) When several Varnish servers sit behind a load balancer, requests for the same URL may land on a different Varnish server each time, so each of those servers forwards the request to the back end; the same content is then cached on multiple servers, which wastes Varnish's cache resources and degrades performance;

Solutions for Varnish defects:

- For defect (1): under heavy traffic, it is recommended to run Varnish with its in-memory cache and place multiple Nginx servers (acting as reverse proxies) behind it. Nginx then acts as a second caching layer, which keeps a flood of requests from reaching the back-end servers when Varnish restarts;

- For defect (2): configure URL hashing on the load balancer so that all requests for a given URL are pinned to the same Varnish server;
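If the load balancer in front of the Varnish servers is itself a Varnish 4.0 instance, URL hashing can be sketched with the hash director from the bundled `directors` vmod. This is a minimal sketch, not the author's setup: the two cache-node addresses are hypothetical, and an Nginx or HAProxy load balancer would use its own URI-hashing option instead.

```vcl
vcl 4.0;

import directors;

# Hypothetical Varnish cache nodes behind this front-end load balancer
backend cache1 {
    .host = "192.168.1.21";
    .port = "80";
}

backend cache2 {
    .host = "192.168.1.22";
    .port = "80";
}

sub vcl_init {
    # Hash director: the same key always maps to the same cache node
    new url_hash = directors.hash();
    url_hash.add_backend(cache1, 1.0);
    url_hash.add_backend(cache2, 1.0);
}

sub vcl_recv {
    # Use Host + URL as the key, so each URL is pinned to one cache node
    set req.backend_hint = url_hash.backend(req.http.host + req.url);
    # The front end only routes requests; the cache nodes do the caching
    return (pass);
}
```

Because the key is built from the URL, each object ends up cached on exactly one node. This avoids the duplicated cache entries described in defect (2).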

3. How Varnish works

When the Varnish server receives a request from a client, it first checks whether the requested data is in the cache. If it is, Varnish responds to the client directly. If not, it requests the resource from the back-end server, caches it locally, and then responds to the client.

Whether data should be cached is decided by rules based on the type of page requested. For example, the decision can depend on the Cache-Control request header and on whether the request carries cookies. All of these rules are written in the configuration file.
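As an illustration, the caching rules described above might look like the following in Varnish 4.0 VCL. This is a sketch under stated assumptions: the back end is the 192.168.1.7 web server used later in this article, and the choice to bypass the cache on `Cache-Control: no-cache` is an example policy, not a Varnish default.

```vcl
vcl 4.0;

backend default {
    .host = "192.168.1.7";   # back-end web server used in this article
    .port = "80";
}

sub vcl_recv {
    # Requests carrying cookies are usually personalized: do not cache them
    if (req.http.Cookie) {
        return (pass);
    }
    # Example policy: honor a client that asks not to be served from cache
    if (req.http.Cache-Control ~ "no-cache") {
        return (pass);
    }
    # No return here: the built-in vcl_recv performs the remaining checks
}

sub vcl_backend_response {
    # Do not store responses the back end marks as private or non-cacheable
    if (beresp.http.Cache-Control ~ "(private|no-cache|no-store)") {
        set beresp.uncacheable = true;
        set beresp.ttl = 120s;
        return (deliver);
    }
}
```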

4. Varnish architecture

Varnish runs as two kinds of processes, a management process and a child process:

- Management process: manages the child process; it also compiles the VCL configuration and applies it to the different state engines;

- Child process: creates thread pools that handle client requests, looks results up by hash, and returns them to the client;

5. Varnish Configuration

Main sections of a Varnish configuration:

- Backend configuration: add the back-end server nodes that Varnish proxies to; at least one is required;

- ACL configuration: add access control lists that specify which clients are allowed or denied access;

- Probe configuration: add rules that check whether the back-end servers are working, so that Varnish can switch away from or disable a failed back-end server;

- Director configuration: add a load-balancing mode to manage multiple back-end servers;

- Core subroutine configuration: add rules for back-end switching, request caching, access control and error handling;

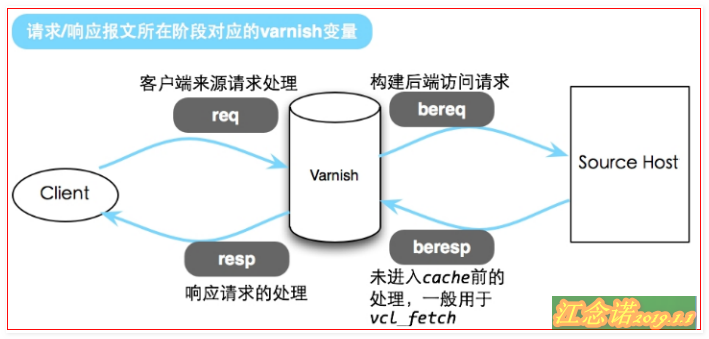

6. Built-in preset variables in VCL

Figure:

- req: variables available when a client sends a request to Varnish;

- bereq: variables available when Varnish sends a request to the back-end server;

- beresp: variables available when the back-end server responds to Varnish's request;

- resp: variables available when Varnish responds to the client;

- obj: the cached object, i.e. the back-end response content stored in the cache;

- now: returns the current time;
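A rough way to see where each variable group lives is to note which subroutine can use it. In the sketch below (Varnish 4.0 syntax), the `/health` URL and the `X-Fetched-At` and `X-Hits` headers are hypothetical and chosen only for illustration.

```vcl
vcl 4.0;

backend default {
    .host = "192.168.1.7";
    .port = "80";
}

sub vcl_recv {
    # req: the request as received from the client
    if (req.url == "/health") {
        return (synth(200, "OK"));
    }
}

sub vcl_backend_fetch {
    # bereq: the copy of the request that Varnish sends to the back end
    set bereq.http.X-Fetched-At = now;   # now: the current time
}

sub vcl_backend_response {
    # beresp: the back-end response, before it is stored as an object
    if (beresp.status == 200) {
        set beresp.ttl = 10m;
    }
}

sub vcl_deliver {
    # resp: the response sent to the client; obj.hits: hits on the cached object
    set resp.http.X-Hits = obj.hits;
}
```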

7. Subprograms of VCL

Client Basic Information:

- client.ip: the IP address of the client;

- client port: the port number used by the client (requires the std module);

- client.identity: the client identification string;

Server-side basic information:

- server.hostname: the server's host name;

- server.identity: the server identification string;

- server.ip: the server's IP address;

- server port: the server's port number (requires the std module);
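The client and server information can be exposed as debug response headers to check what Varnish sees. This is a sketch that assumes your `vmod_std` provides `std.port()`, which returns the port of an IP value (it is documented for Varnish 4.x). The `X-...` header names are hypothetical, and such headers should be removed in production.

```vcl
vcl 4.0;

import std;

backend default {
    .host = "192.168.1.7";
    .port = "80";
}

sub vcl_deliver {
    # Client-side information
    set resp.http.X-Client-IP = client.ip;
    set resp.http.X-Client-Port = std.port(client.ip);
    set resp.http.X-Client-Identity = client.identity;
    # Server-side information
    set resp.http.X-Server-Hostname = server.hostname;
    set resp.http.X-Server-Identity = server.identity;
    set resp.http.X-Server-IP = server.ip;
    set resp.http.X-Server-Port = std.port(server.ip);
}
```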

Client request (req):

- req: the data structure of the entire request;

- req.backend_hint: specifies the back-end node for the request. For example, to send requests for .gif images to an image server, set req.backend_hint to that image server when a .gif file is requested;

- req.can_gzip: whether the client accepts gzip transfer encoding;

- req.hash_always_miss: forces a cache miss, so the object is fetched from the back end even if it is already cached;

- req.hash_ignore_busy: ignores busy objects in the cache (objects that are still being fetched);

- req.http: the HTTP headers of the request;

- req.method: the request method (GET, POST, etc.);

- req.proto: the HTTP protocol version used by the client;

- req.restarts: the number of times the request has been restarted (the default maximum is 4); often used to check whether the request has already been tried on a server;

- req.url: the requested URL;

- req.xid: the unique ID of the request;
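The .gif example from the req.backend_hint entry might be written as follows. This is a minimal sketch in Varnish 4.0 syntax. The `picture` image server at 192.168.1.9, the `X-Refresh` header and the `X-Request-ID` header are all hypothetical.

```vcl
vcl 4.0;

# The first backend defined is the default for every request
backend web {
    .host = "192.168.1.7";
    .port = "80";
}

# Hypothetical image server
backend picture {
    .host = "192.168.1.9";
    .port = "80";
}

sub vcl_recv {
    # req.backend_hint: send .gif requests to the image server
    if (req.url ~ "\.gif($|\?)") {
        set req.backend_hint = picture;
    } else {
        set req.backend_hint = web;
    }

    # req.hash_always_miss: the hypothetical X-Refresh header forces a fresh
    # fetch from the back end; restrict it with an ACL in real deployments
    if (req.http.X-Refresh) {
        set req.hash_always_miss = true;
    }

    # req.restarts / req.xid: tag the request only on its first pass
    if (req.restarts == 0) {
        set req.http.X-Request-ID = req.xid;
    }
}
```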

Varnish request to the back-end server (bereq):

- bereq: the data structure of the entire back-end request;

- bereq.backend: the back-end node the request is sent to;

- bereq.between_bytes_timeout: the timeout between bytes received from the back end;

- bereq.http: the HTTP headers sent to the back end;

- bereq.method: the request method sent to the back end;

- bereq.proto: the HTTP protocol version of the request sent to the back end;

- bereq.retries: the number of times the same request has been retried;

- bereq.uncacheable: the response to this request will not be cached;

- bereq.url: the URL sent to the back end;

- bereq.xid: the unique ID of the request;

Back-end server response to Varnish (beresp):

- beresp: the back-end server's response data;

- beresp.backend.ip: the IP address of the back-end server that produced the response;

- beresp.backend.name: the name of the back-end node that produced the response;

- beresp.do_gunzip: defaults to false; if set to true, the object is uncompressed before it is cached;

- beresp.grace: sets an additional grace period after the cached object expires;

- beresp.http: the HTTP headers of the response;

- beresp.keep: how long the object is kept in the cache after its TTL and grace period have expired;

- beresp.proto: the HTTP version of the response;

- beresp.reason: the HTTP status message returned by the back-end server;

- beresp.status: the status code returned by the back-end server;

- beresp.storage_hint: specifies which storage (for example, memory) the object is saved in;

- beresp.ttl: the remaining time to live of the cached object; setting it gives the matching objects a uniform cache time;

- beresp.uncacheable: do not cache the object;
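A short sketch of how these beresp variables are typically set in `vcl_backend_response` (Varnish 4.0 syntax). The TTL, grace and keep values and the `X-Backend` header are hypothetical examples, not recommendations.

```vcl
vcl 4.0;

backend default {
    .host = "192.168.1.7";
    .port = "80";
}

sub vcl_backend_response {
    if (beresp.status == 200 && bereq.url ~ "\.(css|js)($|\?)") {
        set beresp.ttl = 1h;        # fresh for one hour
        set beresp.grace = 10m;     # may then be served stale for 10 more minutes
        set beresp.keep = 1d;       # kept one more day after TTL and grace expire
        unset beresp.http.Set-Cookie;
    } elsif (beresp.status >= 500) {
        set beresp.uncacheable = true;   # never store server errors
        set beresp.ttl = 10s;            # remember the hit-for-pass decision for 10 seconds
    }
    # Record which back-end node produced the response
    set beresp.http.X-Backend = beresp.backend.name;
}
```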

Cached object (obj):

- obj.grace: the object's extra grace time;

- obj.hits: the number of times the object has been served from the cache; can be used to tell whether a response came from the cache;

- obj.http: the HTTP headers of the object;

- obj.proto: the HTTP version;

- obj.reason: the HTTP status message returned by the server;

- obj.status: the status code returned by the server;

- obj.ttl: the object's remaining cache time (in seconds);

- obj.uncacheable: whether the object is uncacheable;

Response object returned to the client (resp):

- resp: the entire response data structure;

- resp.http: the HTTP headers of the response;

- resp.proto: the HTTP protocol version of the response;

- resp.reason: the HTTP status message to be returned;

- resp.status: the HTTP status code to be returned;

8. Specific Function Statements

- ban(expression): invalidates the cached objects that match the expression;

- call(subroutine): calls a subroutine;

- hash_data(input): adds the value of input to the hash key;

- new(): creates a new VCL object; allowed only in the vcl_init subroutine;

- return(): ends the current subroutine and specifies the next action;

- rollback(): restores the request's HTTP headers to their original state; it has been deprecated in favor of std.rollback();

- synthetic(STRING): sets the body of the synthetic page returned to the client (the status code comes from synth());

- regsub(str, regex, sub): replaces the first match of regex in str with sub;

- regsuball(str, regex, sub): replaces every match of regex in str with sub;
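The functions above can be combined as in this sketch (Varnish 4.0 syntax). The `banners` ACL, the `BAN` request method and the `www.`-stripping rule are hypothetical choices for illustration. Note that `ban()` treats the request URL as a regular expression here.

```vcl
vcl 4.0;

backend default {
    .host = "192.168.1.7";
    .port = "80";
}

# Hypothetical ACL of clients allowed to send BAN requests
acl banners {
    "127.0.0.1";
}

sub vcl_recv {
    if (req.http.host) {
        # regsub(): replace only the first match (a leading "www.")
        set req.http.host = regsub(req.http.host, "^www\.", "");
    }
    # regsuball(): replace every match (each run of repeated slashes)
    set req.url = regsuball(req.url, "/{2,}", "/");

    if (req.method == "BAN") {
        if (!client.ip ~ banners) {
            return (synth(405, "Not Allowed."));
        }
        # ban(): invalidate cached objects for this host whose URL matches
        ban("req.http.host == " + req.http.host + " && req.url ~ " + req.url);
        return (synth(200, "Ban added"));
    }
}

sub vcl_hash {
    # hash_data(): add values to the cache key
    hash_data(req.url);
    if (req.http.host) {
        hash_data(req.http.host);
    } else {
        hash_data(server.ip);
    }
    return (lookup);
}

sub vcl_synth {
    # synthetic(): set the body of the generated response
    set resp.http.Content-Type = "text/html; charset=utf-8";
    synthetic("<html><body><h1>" + resp.status + " " + resp.reason + "</h1></body></html>");
    return (deliver);
}
```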

9. return statement

The return statement ends the current subroutine and returns an action; which actions are allowed depends on the VCL subroutine.

Syntax: return (action);

Common options:

- abandon: abandons the back-end fetch and generates an error;

- deliver: delivers the object;

- fetch: fetches the response object from the back end;

- hash: goes to hash processing (vcl_hash);

- lookup: looks the object up in the cache;

- ok: continues execution (used in vcl_init and vcl_fini);

- pass: enters pass mode (not cached);

- pipe: enters pipe mode (not cached);

- purge: removes the cached object and builds a response;

- restart: restarts processing of the request from vcl_recv;

- retry: retries the back-end request (used in vcl_backend_response and vcl_backend_error);

- synth(status code, reason): returns a synthetic response with the given status to the client;
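Several of these actions are shown in context in the following sketch (Varnish 4.0 syntax). The `/admin` path and the retry and restart limits are hypothetical. Where no `return` is given, the built-in VCL decides the next step.

```vcl
vcl 4.0;

backend default {
    .host = "192.168.1.7";
    .port = "80";
}

sub vcl_recv {
    if (req.method == "CONNECT") {
        return (pipe);                        # pipe: tunnel the connection, no caching
    }
    if (req.url ~ "^/admin") {
        return (synth(403, "Forbidden"));     # synth: answer with a generated page
    }
    if (req.method != "GET" && req.method != "HEAD") {
        return (pass);                        # pass: fetch without caching
    }
    # No return: the built-in vcl_recv chooses between pass and hash
}

sub vcl_backend_response {
    if (beresp.status >= 500 && bereq.retries < 2) {
        return (retry);                       # retry: repeat the back-end request
    }
}

sub vcl_deliver {
    if (resp.status == 503 && req.restarts < 1) {
        return (restart);                     # restart: process the request again from vcl_recv
    }
}
```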

10. Steps for processing Varnish requests

Figure:

The VCL process is roughly divided into the following steps:

(1) Receive state: the entry state for request processing; VCL rules decide whether the request is passed, piped, or goes to Lookup (a local cache query);

(2) Lookup state: Varnish looks the data up in the hash table; if it is found, the request enters the Hit state, otherwise the Miss state;

(3) Pass state: the request goes straight to the back end, i.e. it enters the Fetch state;

(4) Fetch state: Varnish sends the request to the back end, obtains the data, and stores it locally;

(5) Deliver state: Varnish sends the data to the client and completes the request;
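The states above map onto VCL subroutines roughly as in this skeleton (Varnish 4.0 syntax). It is a simplified sketch, not the built-in VCL. It skips the built-in Cookie and Authorization checks and omits pipe, so it is not meant for production as is.

```vcl
vcl 4.0;

backend default {
    .host = "192.168.1.7";
    .port = "80";
}

# (1) Receive: choose between pass and lookup (pipe omitted for brevity)
sub vcl_recv {
    if (req.method != "GET" && req.method != "HEAD") {
        return (pass);
    }
    return (hash);   # (2) Lookup: the built-in vcl_hash builds the key, then the cache is searched
}

# Lookup found the object (Hit): deliver the cached copy
sub vcl_hit {
    return (deliver);
}

# Lookup found nothing (Miss): fetch the object from the back end
sub vcl_miss {
    return (fetch);
}

# (3) Pass: skip the cache and go straight to Fetch
sub vcl_pass {
    return (fetch);
}

# (4) Fetch: the back-end response arrives and is stored if cacheable
sub vcl_backend_response {
    return (deliver);
}

# (5) Deliver: send the response to the client
sub vcl_deliver {
    return (deliver);
}
```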

11. Grace mode of Varnish

When several clients request the same page, Varnish sends only one request to the back-end server and holds the other requests until the result comes back; once it arrives, the waiting requests are answered with a copy of that result. If thousands of requests arrive at the same time, however, the waiting queue becomes very long, which causes two problems. The first is the thundering herd problem: a large number of threads are released at the same moment to copy the back-end result, causing a sharp spike in load. The second is slow responses, since no user likes to wait.

To solve this, Varnish can be configured to keep cached objects for a period after their TTL expires and to serve this stale content to the waiting requests. A configuration example is as follows:

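# Varnish 4.0 syntax (requires "import std;"). The Varnish 3 names req.grace,
# req.backend.healthy and vcl_fetch no longer exist in 4.0.

sub vcl_hit {
    if (obj.ttl >= 0s) {                 # Object is still fresh: deliver it
        return (deliver);
    }
    if (std.healthy(req.backend_hint)) {
        if (obj.ttl + 15s > 0s) {        # Back end healthy: serve stale content for up to 15 seconds
            return (deliver);            # (a background fetch refreshes the object)
        }
    } else {
        if (obj.ttl + 5m > 0s) {         # Back end unhealthy: serve stale content for up to 5 minutes
            return (deliver);
        }
    }
    return (fetch);                      # Too stale: fetch a fresh copy from the back end
}

sub vcl_backend_response {
    set beresp.grace = 30m;              # Keep objects for an extra 30 minutes after they expire
}

2. Install Varnish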

Obtain the Varnish source package (varnish-4.0.3.tar.gz is used below):

[root@localhost ~]# yum -y install autoconf automake libedit-devel libtool ncurses-devel pcre-devel pkgconfig python-docutils python-sphinx

//Install the dependencies required to build Varnish

[root@localhost ~]# tar zxf varnish-4.0.3.tar.gz -C /usr/src

[root@localhost ~]# cd /usr/src/varnish-4.0.3/

[root@localhost varnish-4.0.3]# ./configure && make && make install //Compile and install Varnish

[root@localhost ~]# cp /usr/src/varnish-4.0.3/etc/example.vcl /usr/local/var/varnish/

//Copy the example VCL file to use as the main Varnish configuration file

[root@localhost ~]# vim /usr/local/var/varnish/example.vcl //Edit the main Varnish configuration file

#

# This is an example VCL file for Varnish.

#

# It does not do anything by default, delegating control to the

# builtin VCL. The builtin VCL is called when there is no explicit

# return statement.

#

# See the VCL chapters in the Users Guide at https://www.varnish-cache.org/docs/

# and http://varnish-cache.org/trac/wiki/VCLExamples for more examples.

# Marker to tell the VCL compiler that this VCL has been adapted to the

# new 4.0 format.

vcl 4.0;

import directors;

import std;

# Default backend definition. Set this to point to your content server.

probe backend_healthcheck {

.url="/"; #Probe the root path of the back-end server

.interval = 5s; #Probe every 5 seconds

.timeout = 1s; #A probe that takes longer than 1 second counts as failed

.window = 5; #Evaluate the results of the last 5 probes

.threshold = 3; #At least 3 of those 5 probes must succeed for the back end to be considered healthy

}

backend web1 { #Define a back-end server

.host = "192.168.1.7"; #IP address or domain name of the back-end host

.port = "80"; #Port number of the back-end server

.probe = backend_healthcheck; #Use the backend_healthcheck probe defined above for health checks

}

backend web2 {

.host = "192.168.1.8";

.port = "80";

.probe = backend_healthcheck;

}

acl purgers { #Define the ACL of clients allowed to send PURGE requests

"127.0.0.1";

"localhost";

"192.168.1.0/24";

!"192.168.1.8"; #Exclude 192.168.1.8 from the allowed range

}

sub vcl_init { #vcl_init runs when the VCL is loaded; create the back-end group (director) here

new web_cluster=directors.round_robin(); #Create a director object with the new keyword, using the round_robin algorithm

web_cluster.add_backend(web1); #Add a back-end server node

web_cluster.add_backend(web2);

}

sub vcl_recv {

set req.backend_hint = web_cluster.backend(); #Send the request to a back-end node chosen by the web_cluster director

if (req.method == "PURGE") { #Check whether the client's request method is PURGE

if (!client.ip ~ purgers) { #If so, check whether the client's IP address is in the purgers ACL

return (synth(405, "Not Allowed.")); #If not, return a 405 status code and the corresponding page to the client

}

return (purge); #If the client is in the ACL, handle the request with purge

}

if (req.method != "GET" &&

req.method != "HEAD" &&

req.method != "PUT" &&

req.method != "POST" &&

req.method != "TRACE" &&

req.method != "OPTIONS" &&

req.method != "PATCH" &&

req.method != "DELETE") { #Non-standard request methods are piped straight to the back end

return (pipe);

}

if (req.method != "GET" && req.method != "HEAD") {

return (pass); #Anything other than GET and HEAD is passed (not cached)

}

if (req.url ~ "\.(php|asp|aspx|jsp|do|ashx|shtml)($|\?)") {

return (pass); #Dynamic pages such as .php are passed (not cached)

}

if (req.http.Accept-Encoding) {

if (req.url ~ "\.(bmp|png|gif|jpg|jpeg|ico|gz|tgz|bz2|tbz|zip|rar|mp3|mp4|ogg|swf|flv)$") {

unset req.http.Accept-Encoding; #Already-compressed files: drop the client's Accept-Encoding header

} elseif (req.http.Accept-Encoding ~ "gzip") {

set req.http.Accept-Encoding = "gzip"; #If the client accepts gzip, normalize the header to gzip

} elseif (req.http.Accept-Encoding ~ "deflate") {

set req.http.Accept-Encoding = "deflate";

} else {

unset req.http.Accept-Encoding; #Any other encoding: drop the header as well

}

}

if (req.url ~ "\.(css|js|html|htm|bmp|png|gif|jpg|jpeg|ico|gz|tgz|bz2|tbz|zip|rar|mp3|mp4|ogg|swf|flv)($|\?)") {

unset req.http.cookie; #Remove the client's cookie so that static files can be cached

return (hash); #Go to vcl_hash, i.e. look the request up in the local cache

}

if (req.restarts == 0) { #Only on the first pass through vcl_recv (not after a restart)

if (req.http.X-Forwarded-For) { #Append the client's IP address to X-Forwarded-For, or create the header

set req.http.X-Forwarded-For = req.http.X-Forwarded-For + ", " + client.ip;

} else {

set req.http.X-Forwarded-For = client.ip;

}

}

return (hash);

}

sub vcl_hash {

hash_data(req.url); #Add the requested URL to the hash key

if (req.http.host) {

hash_data(req.http.host); #Add the client's Host header to the hash key

} else {

hash_data(server.ip); #No Host header: use the server's IP address instead

}

return (lookup);

}

sub vcl_hit {

if (req.method == "PURGE") { #A PURGE request that hits the cache returns status 200 and the corresponding page

return (synth(200, "Purged."));

}

return (deliver);

}

sub vcl_miss {

if (req.method == "PURGE") {

return (synth(404, "Purged.")); #On a miss there is nothing to purge, so return 404

}

return (fetch);

}

sub vcl_deliver {

if (obj.hits > 0) {

set resp.http.CXK = "HIT-from-varnish"; #Custom response header (CXK) marking a cache hit

set resp.http.X-Cache-Hits = obj.hits; #Number of times this object has been served from the cache

} else {

set resp.http.X-Cache = "MISS";

}

unset resp.http.X-Powered-By; #Hide the back end's X-Powered-By header (web version)

unset resp.http.Server; #Hide the Server header

unset resp.http.X-Drupal-Cache; #Hide the X-Drupal-Cache header (caching framework)

unset resp.http.Via; #Hide the Via header (proxy chain)

unset resp.http.Link; #Hide the Link header (HTML hyperlink addresses)

unset resp.http.X-Varnish; #Hide the X-Varnish header (Varnish request IDs)

set resp.http.xx_restarts_count = req.restarts; #Number of times the request was restarted

set resp.http.xx_Age = resp.http.Age; #Copy of the Age header (seconds the object has been cached)

#set resp.http.hit_count = obj.hits; #Show the number of cache hits

#unset resp.http.Age;

return (deliver);

}

sub vcl_pass {

return (fetch); #Fetch from the back end; in pass mode the response is not cached

}

sub vcl_backend_response {

set beresp.grace = 5m; #Cache Extra Grace Time

if (beresp.status == 499 || beresp.status == 404 || beresp.status == 502) {

set beresp.uncacheable = true; #Do not cache responses with status 499, 404 or 502

}

if (bereq.url ~ "\.(php|jsp)(\?|$)") {

set beresp.uncacheable = true; #Do not cache PHP or JSP pages

} else {

if (bereq.url ~ "\.(css|js|html|htm|bmp|png|gif|jpg|jpeg|ico)($|\?)") {

set beresp.ttl = 15m; #Cache these static files for 15 minutes

unset beresp.http.Set-Cookie;

} elseif (bereq.url ~ "\.(gz|tgz|bz2|tbz|zip|rar|mp3|mp4|ogg|swf|flv)($|\?)") {

set beresp.ttl = 30m; #Cache for 30 minutes

unset beresp.http.Set-Cookie;

} else {

set beresp.ttl = 10m; #Lifetime 10 minutes

unset beresp.http.Set-Cookie;

}

}

return (deliver);

}

sub vcl_purge {

return (synth(200,"success"));

}

sub vcl_backend_error {

if (beresp.status == 500 ||

beresp.status == 501 ||

beresp.status == 502 ||

beresp.status == 503 ||

beresp.status == 504) {

return (retry); #For any of these status codes, retry the back-end request (up to max_retries times)

}

}

sub vcl_fini {

return (ok);

}

[root@localhost ~]# varnishd -f /usr/local/var/varnish/example.vcl -s malloc,200M -a 0.0.0.0:80

//Start the varnish service

//-f: path of the VCL configuration file; -s: storage type and size (here 200 MB of memory via malloc); -a: address and port to listen on

[root@localhost ~]# netstat -anpt | grep 80

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 10508/varnishd

//Make sure port 80 is listening

Set up two HTTP servers yourself (preferably with different test pages, so you can tell which server answered)!

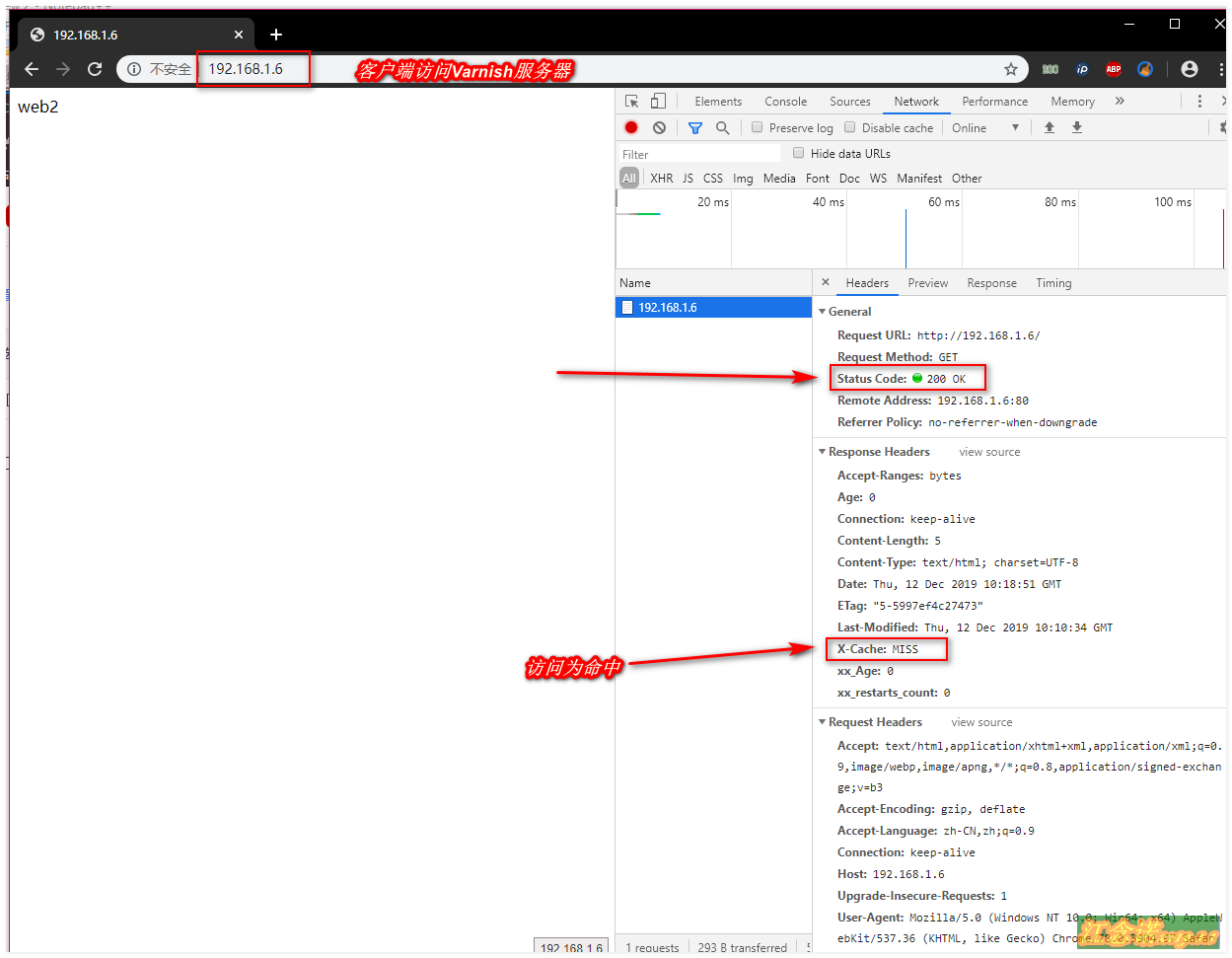

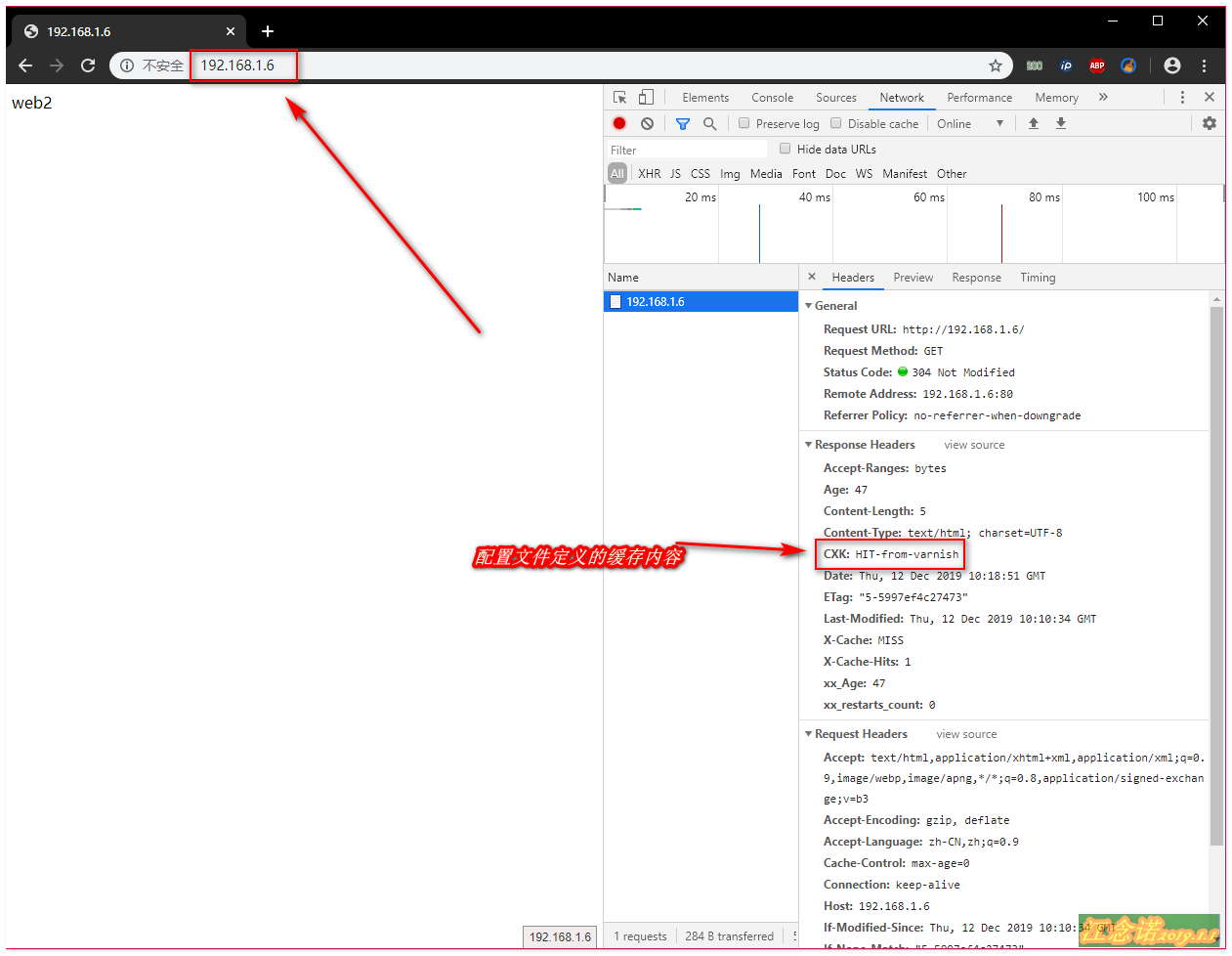

1. Client-side cache access test

First visit:

Result after pressing F5 to refresh:

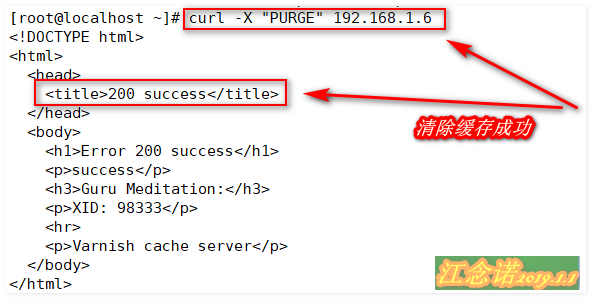

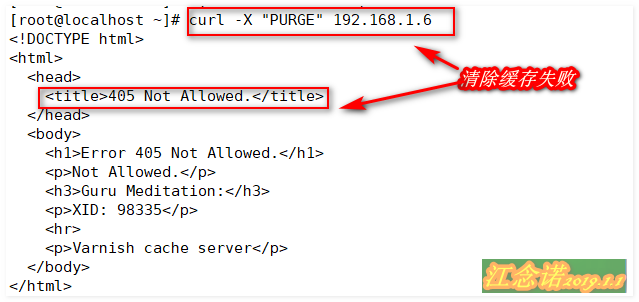

2. Server-side cache purge test

Note: Clear the cache during the test!

Web server (192.168.1.7):

[root@localhost ~]# curl -X "PURGE" 192.168.1.6 //Send a PURGE request to the Varnish cache server at 192.168.1.6 to clear the cache

The test result is as follows:

Web server (192.168.1.8):

[root@localhost ~]# curl -X "PURGE" 192.168.1.6 //Send a PURGE request to the Varnish cache server at 192.168.1.6 to clear the cache

Because 192.168.1.8 is excluded from the purgers ACL, this request should be rejected with 405 Not Allowed. The test result is as follows:

Test complete!

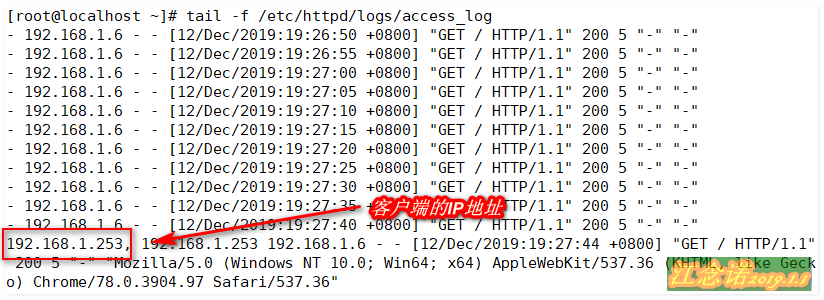

3. Configure the HTTP server to log the client's IP address

Without this configuration, the HTTP server logs only the IP address of the Varnish server. The Varnish side (the X-Forwarded-For header) is already configured in the VCL file above; the httpd configuration is as follows:

[root@localhost ~]# vim /etc/httpd/conf/httpd.conf //Edit the main httpd configuration file

LogFormat "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\"" combined

LogFormat "%h %l %u %t \"%r\" %>s %b" common

//Add the following line so that httpd records the client's real IP address

LogFormat "%{X-Forwarded-For}i %h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\"" combined

//The X-Forwarded-For header is set in the Varnish configuration file

[root@localhost ~]# systemctl restart httpd //Restart the httpd service

Both HTTP servers need this configuration, since Varnish distributes requests between them.

Note: Clear the cache during the test!

The client still accesses the Varnish server, and the http access log is as follows:

Test complete!!!

That is the end of this article. Thank you for reading!