1, Deploy a RabbitMQ cluster

A RabbitMQ cluster can run in one of two modes:

1. Normal mode: the default cluster mode. The message body of a queue exists on only one node;

2. Mirror mode: the required queues are mirrored, so their contents exist on multiple nodes.

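ha-mode (how a queue is mirrored in mirror mode):

all: mirror the queue to all nodes;

exactly: mirror the queue to a fixed number of nodes (the count is given in ha-params);

nodes: mirror the queue to the specified nodes (the node names are given in ha-params).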
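The ha-mode values above are applied through a policy. As a minimal sketch, the helper below builds the policy definition for each mode. The function name `ha_policy_definition` and the policy name `ha-two` are hypothetical, and the `rabbitmqctl set_policy` call is shown only as a comment because it has to run on a cluster node.

```shell
# Hypothetical helper: print the JSON policy definition for a given ha-mode.
# $1 = ha-mode (all | exactly | nodes)
# $2 = ha-params: a node count for "exactly", a JSON array of node names for "nodes"
ha_policy_definition() {
  case "$1" in
    all)     printf '{"ha-mode":"all"}\n' ;;
    exactly) printf '{"ha-mode":"exactly","ha-params":%s}\n' "$2" ;;
    nodes)   printf '{"ha-mode":"nodes","ha-params":%s}\n' "$2" ;;
    *)       echo "unknown ha-mode: $1" >&2; return 1 ;;
  esac
}

# Example usage on a cluster node (not run here): mirror every queue in the
# default vhost to exactly two nodes.
# rabbitmqctl set_policy ha-two "^" "$(ha_policy_definition exactly 2)"
```

The pattern `"^"` matches every queue name; a narrower regular expression limits mirroring to the queues that need it.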

Cluster node types:

1. Memory node: keeps its state in memory;

2. Disk node: keeps its state on disk;

In practice, memory nodes and disk nodes are used together to get both fast access and data persistence.

Because a memory node does not write to disk, it performs better than a disk node. A cluster needs only one disk node to save its state. If the cluster has only memory nodes and they go down, the cluster state (and with it the queues and their messages) is lost, so data persistence cannot be achieved.
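A node's type can also be changed after it has joined a cluster. The following is a minimal sketch, assuming it runs on the node being changed. The wrapper name `set_node_type` is hypothetical; `change_cluster_node_type` is the rabbitmqctl subcommand, and it requires the application to be stopped first.

```shell
# Hypothetical wrapper: switch the local node between disk and memory type.
# $1 = disc | ram
set_node_type() {
  case "$1" in
    disc|ram) ;;
    *) echo "usage: set_node_type disc|ram" >&2; return 1 ;;
  esac
  # The node must be stopped while its type is changed.
  rabbitmqctl stop_app &&
    rabbitmqctl change_cluster_node_type "$1" &&
    rabbitmqctl start_app
}

# Example usage on a memory node (not run here):
# set_node_type disc
```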

rpm package (extraction code: rv8g)

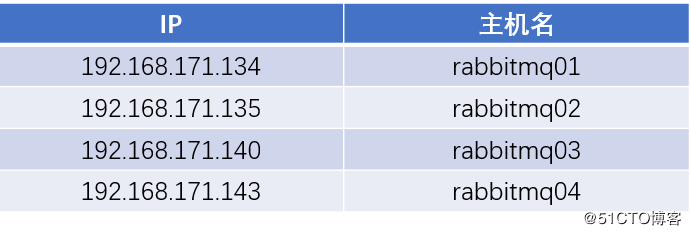

1. The environment is as follows

2. Install rabbitmq service

Perform the following operations on nodes 192.168.171.134, 192.168.171.135, and 192.168.171.140 to deploy the rabbitmq service:

[root@localhost ~]# mkdir rabbitmq

[root@localhost ~]# cd rabbitmq/

[root@localhost rabbitmq]# ls

erlang-18.1-1.el6.x86_64.rpm rabbitmq-server-3.6.6-1.el6.noarch.rpm socat-1.7.3.2-2.el7.x86_64.rpm

Install rabbitmq:

[root@localhost rabbitmq]# yum -y localinstall erlang-18.1-1.el6.x86_64.rpm rabbitmq-server-3.6.6-1.el6.noarch.rpm socat-1.7.3.2-2.el7.x86_64.rpm

[root@localhost rabbitmq]# chkconfig rabbitmq-server on

[root@localhost rabbitmq]# /etc/init.d/rabbitmq-server start

Starting rabbitmq-server (via systemctl): [ OK ]

3. Configure host 192.168.171.134

Configure local name resolution (the host names can be customized):

[root@localhost ~]# tail -4 /etc/hosts

192.168.171.134 rabbitmq01

192.168.171.135 rabbitmq02

192.168.171.140 rabbitmq03

192.168.171.143 rabbitmq04

Copy the hosts file to the other nodes:

[root@localhost ~]# scp /etc/hosts root@192.168.171.135:/etc/

[root@localhost ~]# scp /etc/hosts root@192.168.171.140:/etc/

[root@localhost ~]# scp /etc/hosts root@192.168.171.143:/etc/

Copy the cookie of rabbitmq01 to the other nodes that need to join the cluster. The cookie must be identical on every node of a cluster:

[root@localhost ~]# scp /var/lib/rabbitmq/.erlang.cookie root@192.168.171.135:/var/lib/rabbitmq/

[root@localhost ~]# scp /var/lib/rabbitmq/.erlang.cookie root@192.168.171.140:/var/lib/rabbitmq/

[root@localhost ~]# scp /var/lib/rabbitmq/.erlang.cookie root@192.168.171.143:/var/lib/rabbitmq/
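The six scp commands above follow the same pattern for every node, so they can be sketched as a loop. The function name `push_cluster_files` is hypothetical; the paths and IP addresses are the ones used in this step, and root SSH access to each node is assumed.

```shell
# Hypothetical helper: copy /etc/hosts and the Erlang cookie from this node
# to every IP address given as an argument. Stops at the first failed copy.
push_cluster_files() {
  for ip in "$@"; do
    scp /etc/hosts "root@$ip:/etc/" || return 1
    scp /var/lib/rabbitmq/.erlang.cookie "root@$ip:/var/lib/rabbitmq/" || return 1
  done
}

# Example usage on rabbitmq01 (not run here):
# push_cluster_files 192.168.171.135 192.168.171.140 192.168.171.143
```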

4. Restart all node servers to join the cluster

Run the following command on every server that will join the cluster (including rabbitmq01) to restart it:

[root@localhost ~]# init 6

If a server hangs on a screen after the restart, force it to restart.

[root@rabbitmq01 ~]# ps -ef | grep rabbitmq # Make sure that rabbitmq has started

After the restart, you can see that the hostname has changed to the one configured in the hosts file.

5. Configuring clusters on rabbitmq01

[root@rabbitmq01 ~]# rabbitmqctl stop_app # Stop node service

Stopping node rabbit@rabbitmq01 ...

[root@rabbitmq01 ~]# rabbitmqctl reset # Reset node

Resetting node rabbit@rabbitmq01 ...

[root@rabbitmq01 ~]# rabbitmqctl start_app # Start node service

Starting node rabbit@rabbitmq01 ...

Copy the node name returned in the output (rabbit@rabbitmq01); it is needed in the next step.

6. Configure rabbitmq02 and 03 to join rabbitmq01 cluster

[root@rabbitmq02 ~]# rabbitmqctl stop_app

[root@rabbitmq02 ~]# rabbitmqctl reset

[root@rabbitmq02 ~]# rabbitmqctl join_cluster --ram rabbit@rabbitmq01 # Join the cluster as a memory node; the argument is the node name copied from rabbitmq01

[root@rabbitmq02 ~]# rabbitmqctl start_app

[root@rabbitmq02 ~]# rabbitmq-plugins enable rabbitmq_management # Enable the web management plugin

Run the same commands on rabbitmq03.
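Because the join sequence is identical on every joining node, it can be wrapped in one function. This is a minimal sketch, not part of the original procedure; the function name `join_rabbit_cluster` and its argument convention are hypothetical, while the rabbitmqctl subcommands are the ones used above.

```shell
# Hypothetical helper: join the local node to an existing cluster.
# $1 = node name of a cluster member, e.g. rabbit@rabbitmq01
# $2 = "ram" to join as a memory node; anything else joins as a disk node
join_rabbit_cluster() {
  target="$1"
  rabbitmqctl stop_app || return 1
  rabbitmqctl reset || return 1
  if [ "${2:-}" = "ram" ]; then
    rabbitmqctl join_cluster --ram "$target" || return 1
  else
    rabbitmqctl join_cluster "$target" || return 1
  fi
  rabbitmqctl start_app
}

# Example usage on rabbitmq02 or rabbitmq03 (not run here):
# join_rabbit_cluster rabbit@rabbitmq01 ram
```

Stopping at the first failed command avoids starting a node that was reset but never joined.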

7. View node status on rabbitmq01

[root@rabbitmq01 ~]# rabbitmqctl cluster_status

Cluster status of node rabbit@rabbitmq01 ...

[{nodes,[{disc,[rabbit@rabbitmq01]},

{ram,[rabbit@rabbitmq03,rabbit@rabbitmq02]}]},

{running_nodes,[rabbit@rabbitmq03,rabbit@rabbitmq02,rabbit@rabbitmq01]},

{cluster_name,<<"rabbit@rabbitmq01">>},

{partitions,[]},

{alarms,[{rabbit@rabbitmq03,[]},

{rabbit@rabbitmq02,[]},

{rabbit@rabbitmq01,[]}]}]

In this output, rabbitmq01 works as a disk node, and rabbitmq02 and rabbitmq03 work as memory nodes.

running_nodes: the nodes that are currently running

cluster_name: the name of the cluster (here it is the node name of rabbitmq01)

alarms: alarms raised by rabbitmq01, rabbitmq02, and rabbitmq03 when a problem occurs

8. Create an administrative user in rabbitmq and add it to the administrator group

Since the node has been reset, the user also needs to be recreated:

[root@rabbitmq01 ~]# rabbitmqctl add_user admin 123.com

Creating user "admin" ...

[root@rabbitmq01 ~]# rabbitmqctl set_user_tags admin administrator

Setting tags for user "admin" to [administrator] ...
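A user created with `add_user` starts with no permissions on any vhost, so granting permissions is a common follow-up that the commands above do not show. The sketch below is an assumption about how that could look; the function name `create_admin_user` is hypothetical, and the three `".*"` patterns grant configure, write, and read access to every resource in the vhost.

```shell
# Hypothetical helper: create a user, tag it as administrator, and grant it
# full permissions on one vhost.
# $1 = user name, $2 = password, $3 = vhost (defaults to "/")
create_admin_user() {
  user="$1"
  password="$2"
  vhost="${3:-/}"
  rabbitmqctl add_user "$user" "$password" &&
    rabbitmqctl set_user_tags "$user" administrator &&
    rabbitmqctl set_permissions -p "$vhost" "$user" ".*" ".*" ".*"
}

# Example usage on rabbitmq01 (not run here):
# create_admin_user admin 123.com
```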

9. Log in to the web interface

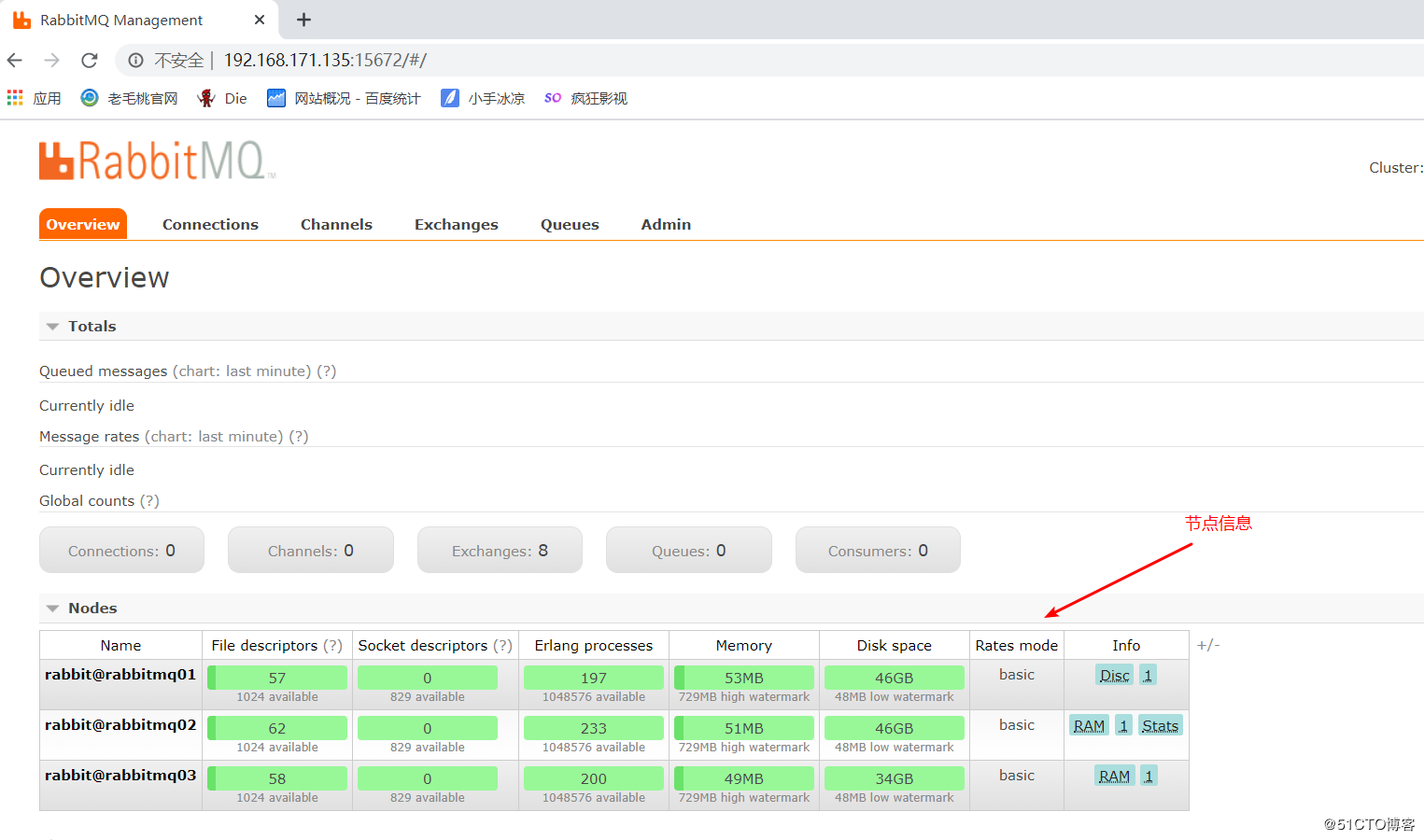

You can log in through port 15672 on the IP address of any node in the cluster:

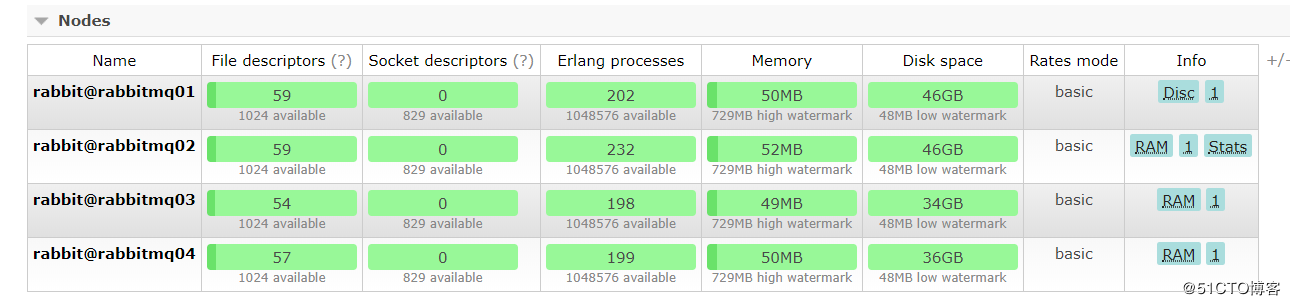

You can see the cluster node information on the following page:

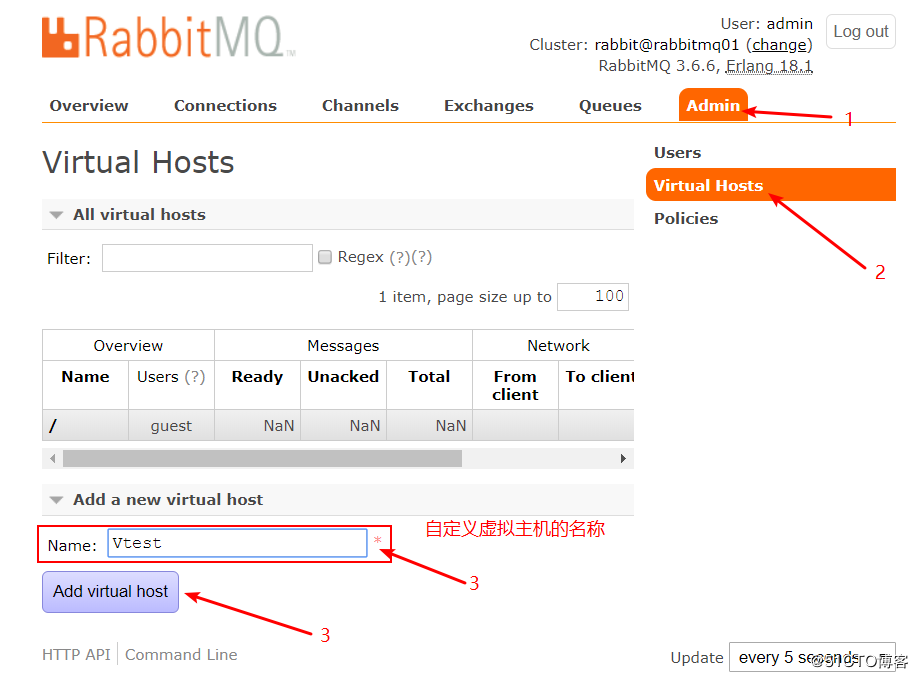

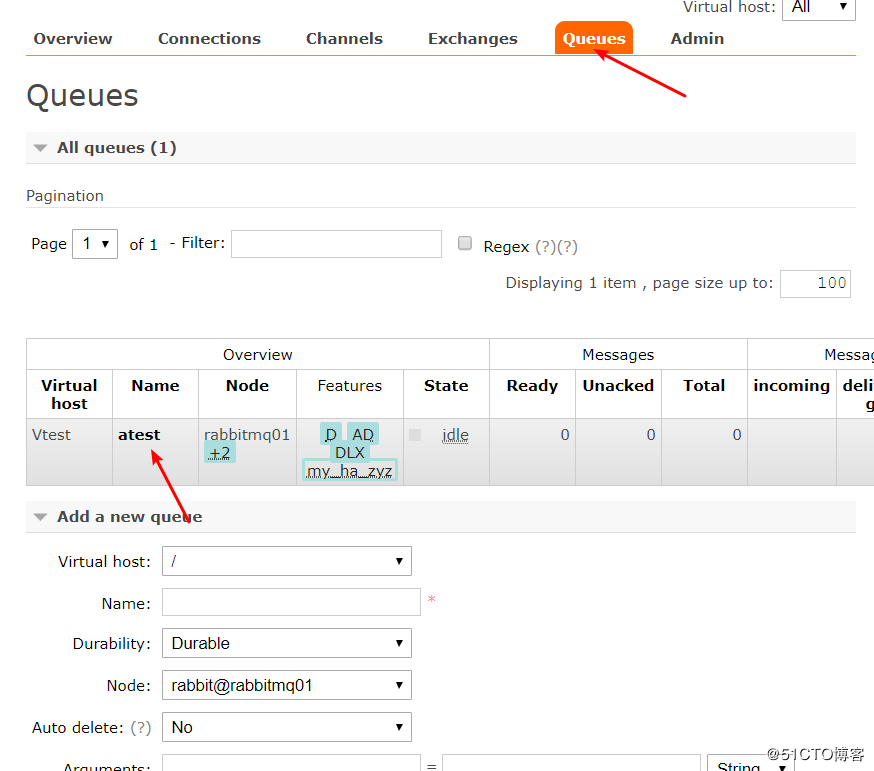

10. Add a vhost in the web interface

Enter the created virtual host:

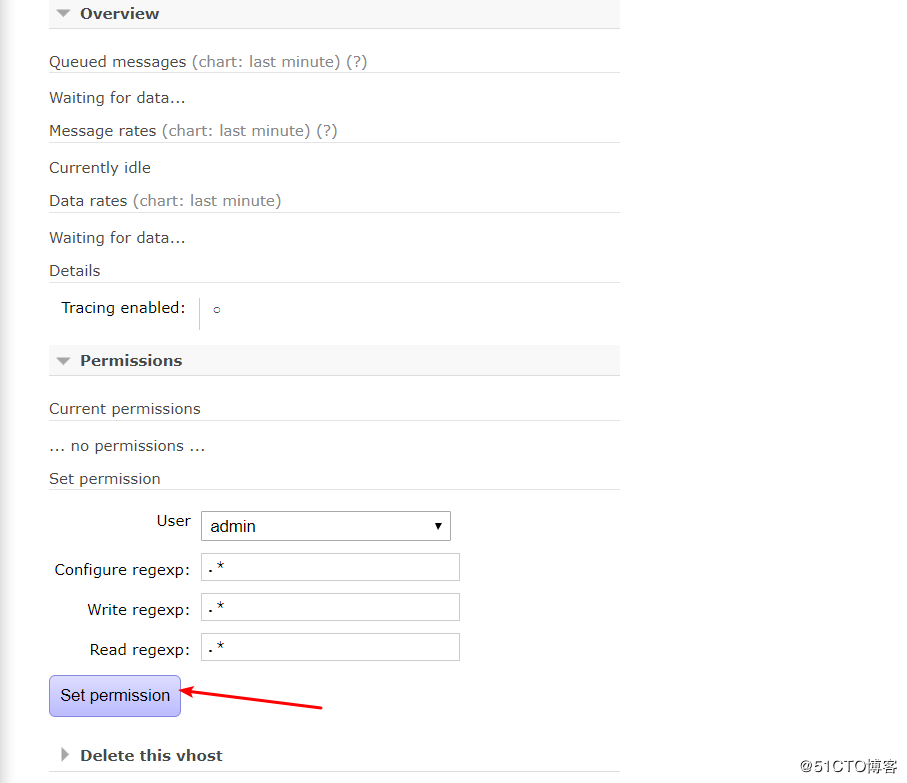

Then configure as follows:

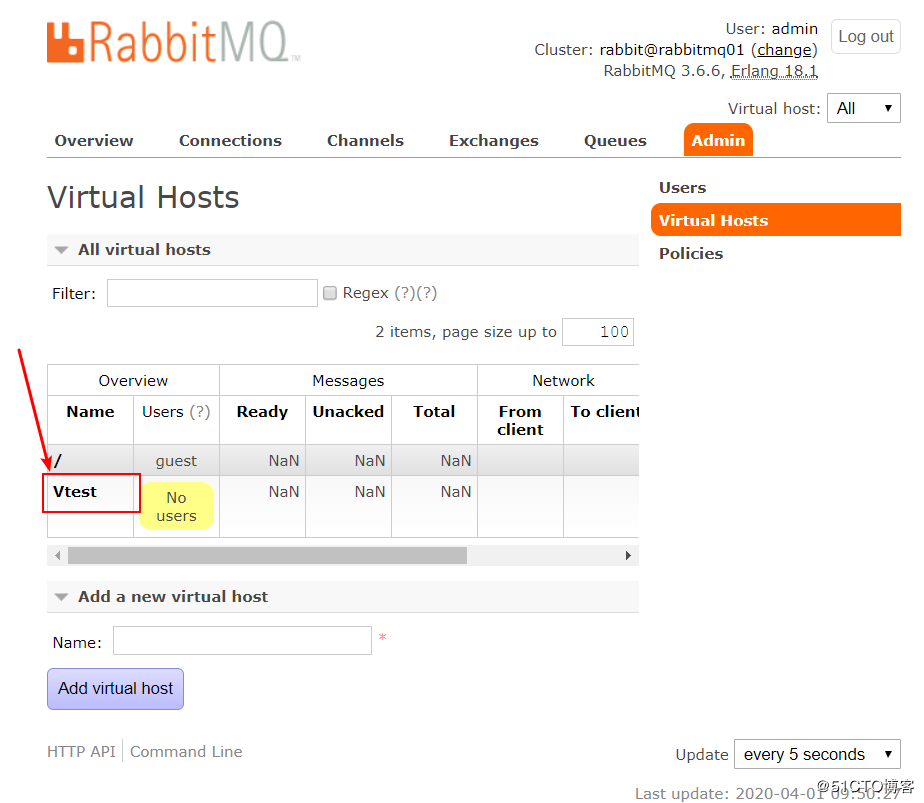

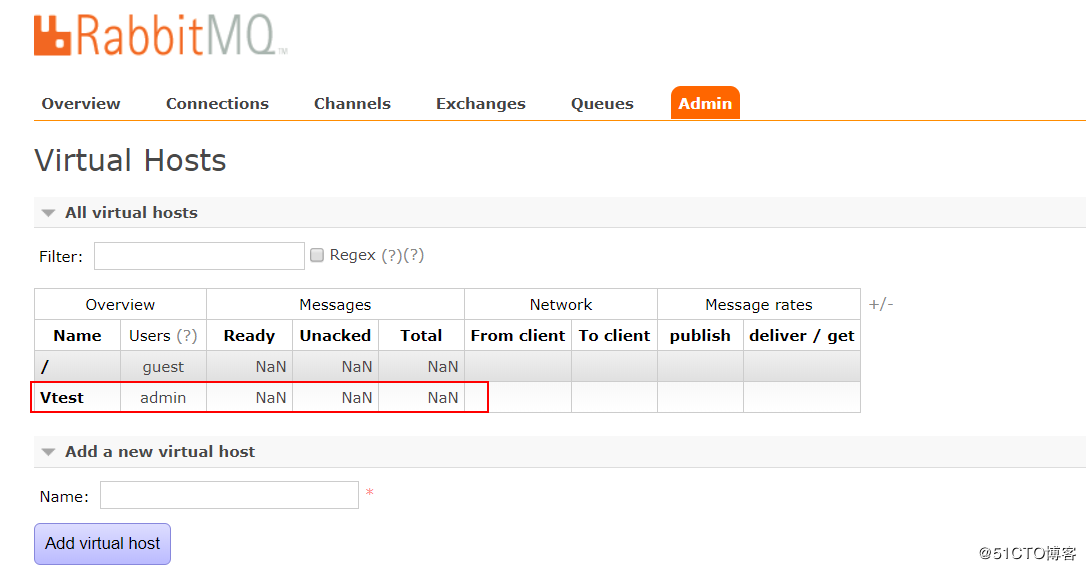

View the virtual host again after setup:

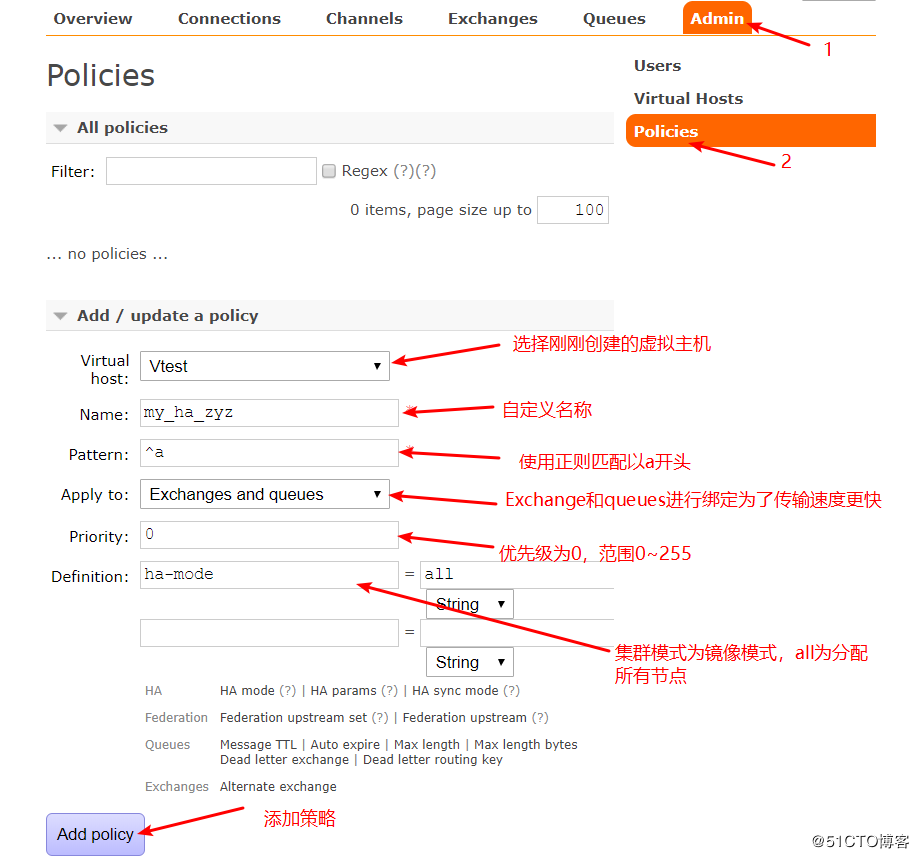

Set matching policy:

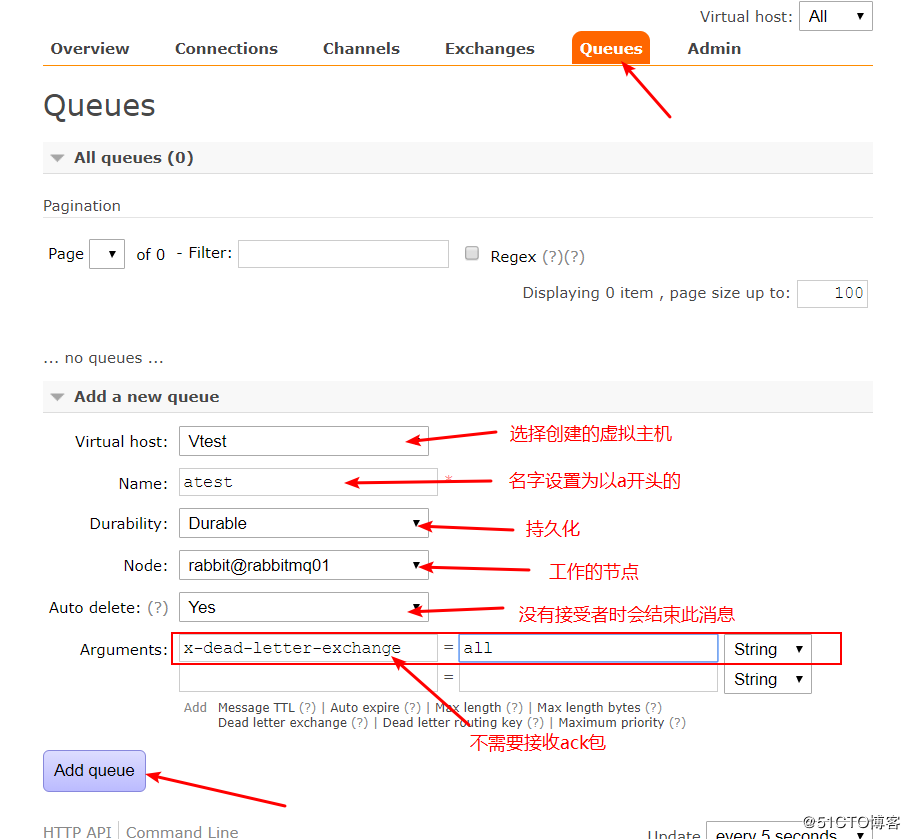

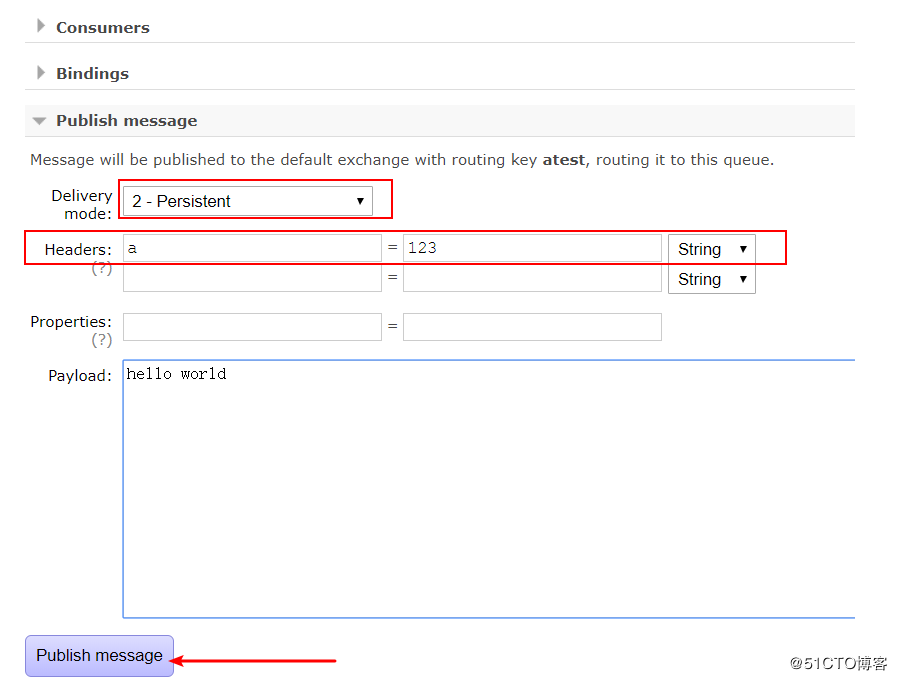

Publish message:

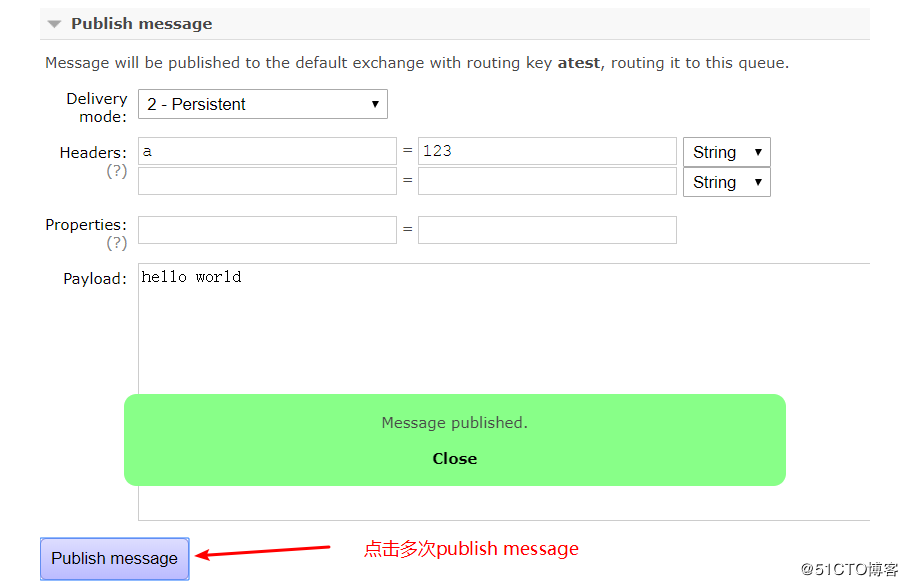

Set the content of the published message:

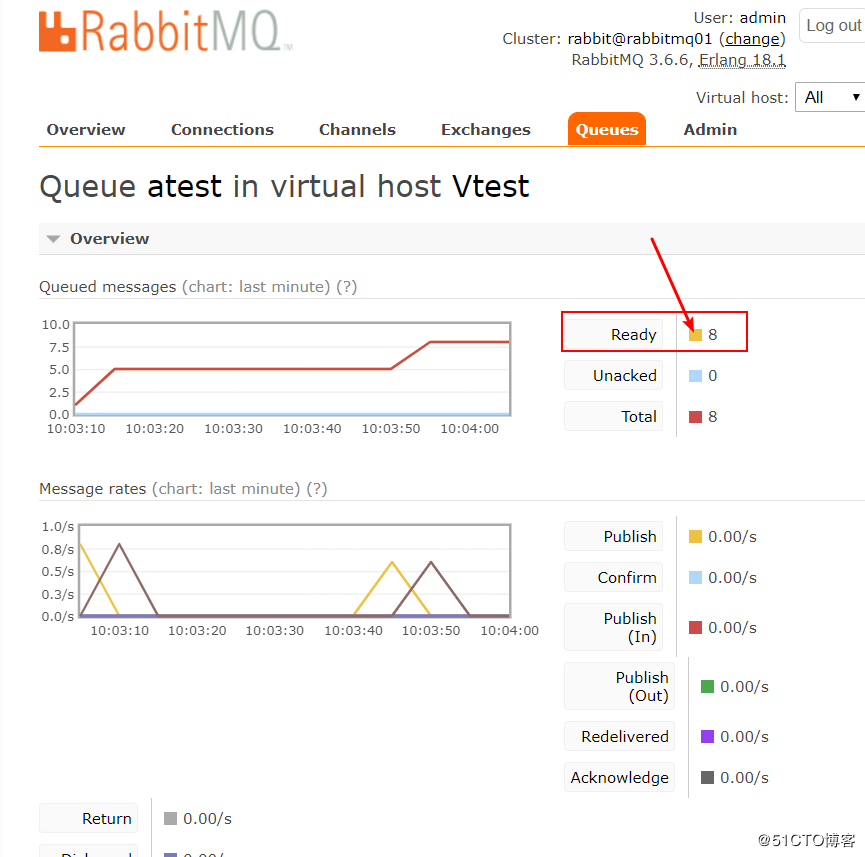

Then refresh the current page to see the total number of queues in the current virtual host:

4, Adding a single node to the cluster or removing it

1. A node joins the cluster

The hosts file configured when deploying the cluster above already resolves every node in the cluster, so the name resolution step is omitted here.

Install rabbitmq on the server of node 192.168.171.143 and configure it:

[root@localhost src]# yum -y localinstall erlang-18.1-1.el6.x86_64.rpm rabbitmq-server-3.6.6-1.el6.noarch.rpm socat-1.7.3.2-2.el7.x86_64.rpm

[root@localhost src]# chkconfig rabbitmq-server on

[root@localhost src]# /etc/init.d/rabbitmq-server start

Copy the cookie from the cluster to the local node:

[root@localhost src]# scp root@rabbitmq01:/var/lib/rabbitmq/.erlang.cookie /var/lib/rabbitmq/

[root@localhost src]# init 6 # Restart the machine

Join the cluster:

[root@rabbitmq04 ~]# rabbitmqctl stop_app

[root@rabbitmq04 ~]# rabbitmqctl reset

[root@rabbitmq04 ~]# rabbitmqctl join_cluster --ram rabbit@rabbitmq01 # Join the cluster as a memory node. To join as a disk node, omit the "--ram" option

[root@rabbitmq04 ~]# rabbitmqctl start_app

Enable the web management page:

[root@rabbitmq04 ~]# rabbitmq-plugins enable rabbitmq_management

Check the web interface and confirm that rabbitmq04 has joined the cluster.
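The same check can be done from the command line. The sketch below parses the Erlang-term output that `rabbitmqctl cluster_status` prints in the 3.6 series used here; the function names `running_nodes` and `node_is_running` are hypothetical, and the parsing assumes that output format.

```shell
# Hypothetical helper: read `rabbitmqctl cluster_status` output (3.6-style
# Erlang terms) on stdin and print one running node name per line.
running_nodes() {
  # Join the output into one line without spaces, extract the list inside
  # {running_nodes,[...]}, then split the list on commas.
  tr -d ' \n' |
    sed -n 's/.*{running_nodes,\[\([^]]*\)]}.*/\1/p' |
    tr ',' '\n'
}

# Hypothetical helper: succeed if node $1 is listed as running in the
# cluster_status output read from stdin.
node_is_running() {
  running_nodes | grep -qxF -- "$1"
}

# Example usage on any cluster node (not run here):
# rabbitmqctl cluster_status | node_is_running rabbit@rabbitmq04 && echo "rabbitmq04 has joined"
```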

2. Single node exit cluster

1) Stop the node on rabbitmq04 first

[root@rabbitmq04 ~]# rabbitmqctl stop_app

2) Go back to the primary node rabbitmq01 and delete the node

[root@rabbitmq04 ~]# rabbitmqctl -n rabbit@rabbitmq01 forget_cluster_node rabbit@rabbitmq04

-n: specifies the name of the node that the command is run against

forget_cluster_node: followed by the name of the node to be removed