The ContainerExecutor is pluggable in YARN. This article introduces the following two implementations:

- DefaultContainerExecutor

- LinuxContainerExecutor

Which one is used is controlled by the configuration parameter yarn.nodemanager.container-executor.class.

The executor is loaded when the NodeManager initializes, in:

org.apache.hadoop.yarn.server.nodemanager.NodeManager#serviceInit

// todo initializes the ContainerExecutor, which encapsulates the operations the NodeManager performs on a Container,

// todo including starting a container, querying whether the container with the specified id is alive, and so on.

// todo the yarn.nodemanager.container-executor.class setting determines which ContainerExecutor is instantiated; the default is DefaultContainerExecutor.

ContainerExecutor exec = createContainerExecutor(conf);

try {

exec.init(context);

} catch (IOException e) {

throw new YarnRuntimeException("Failed to initialize container executor", e);

}

DeletionService del = createDeletionService(exec);

addService(del);

@VisibleForTesting

protected ContainerExecutor createContainerExecutor(Configuration conf) {

return ReflectionUtils.newInstance(

conf.getClass(YarnConfiguration.NM_CONTAINER_EXECUTOR,

DefaultContainerExecutor.class, ContainerExecutor.class), conf);

}

That is, the default is DefaultContainerExecutor.
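
The lookup-with-default pattern in createContainerExecutor can be sketched in plain Java. This is only an illustration under stated assumptions: Executor, DefaultExecutor, LinuxExecutor and ExecutorFactory are hypothetical stand-ins, and a Map replaces Hadoop's Configuration; only the property key comes from the text above.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-ins for the real YARN types; the names are illustrative only.
interface Executor {
    String name();
}

class DefaultExecutor implements Executor {
    public String name() { return "default"; }
}

class LinuxExecutor implements Executor {
    public String name() { return "linux"; }
}

final class ExecutorFactory {
    static final String KEY = "yarn.nodemanager.container-executor.class";

    // Read the class name stored under KEY, fall back to the default when it is
    // unset, and instantiate the class through reflection.
    static Executor create(Map<String, String> conf) {
        String className = conf.getOrDefault(KEY, DefaultExecutor.class.getName());
        try {
            Class<? extends Executor> clazz =
                Class.forName(className).asSubclass(Executor.class);
            return clazz.getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot create executor " + className, e);
        }
    }

    public static void main(String[] args) {
        Map<String, String> conf = new HashMap<>();
        System.out.println(create(conf).name());
        conf.put(KEY, LinuxExecutor.class.getName());
        System.out.println(create(conf).name());
    }
}
```

With an empty configuration the factory falls back to the default class, which mirrors the second argument of conf.getClass in the real code.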

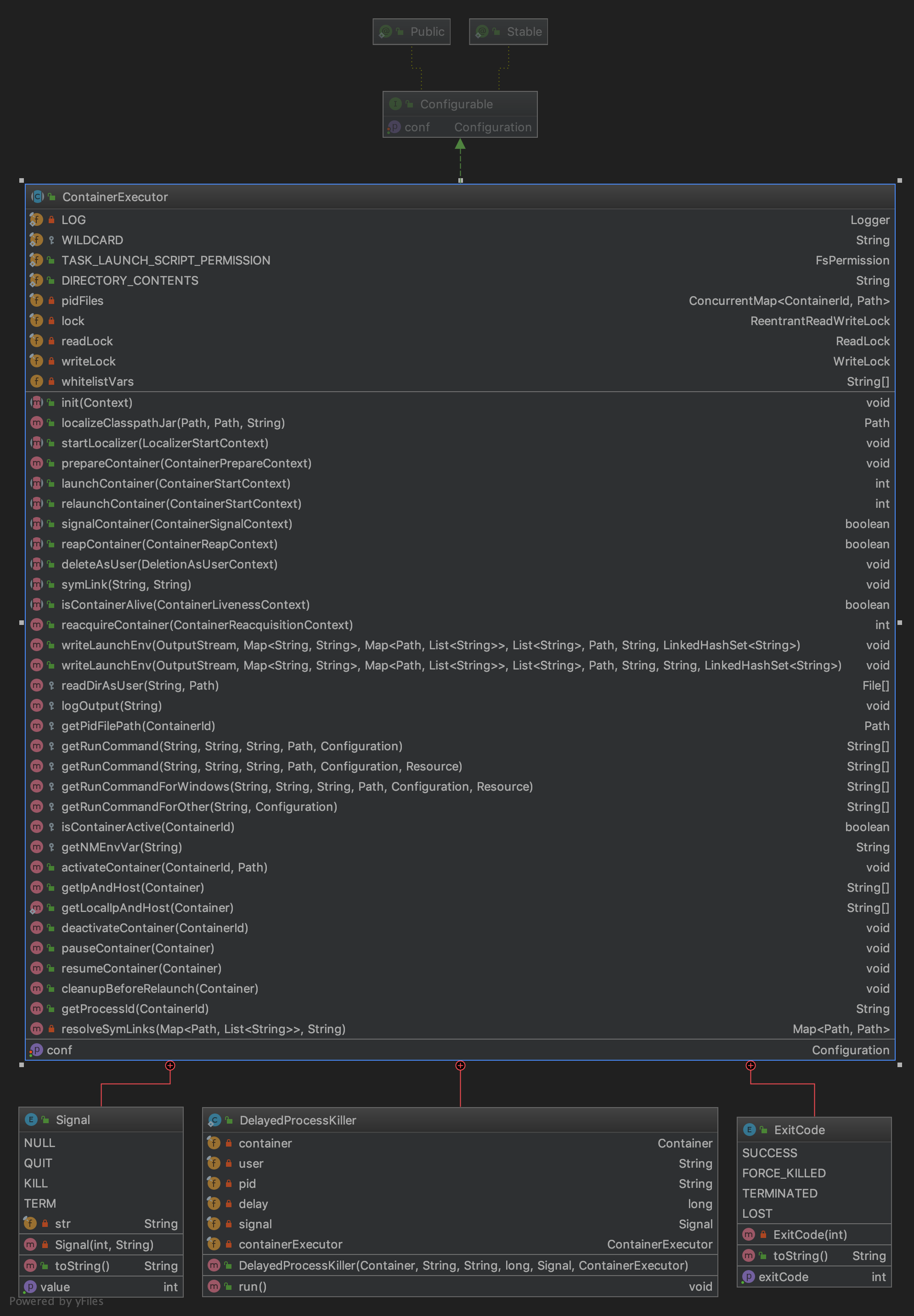

Before explaining them, take a look at their parent class:

org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor

Class diagram:

All ContainerExecutor implementations inherit from this class.

The more important methods in this class are as follows:

setConf: Set the configuration.

init: Initialization.

startLocalizer: Prepare the execution environment for the containers of this application.

prepareContainer: Prepare the container before the startup environment is written.

launchContainer: Start the container on the node. This is a blocking call that returns only when the container exits.

relaunchContainer: Restart the container on the node. This is a blocking call that returns only when the container exits.

signalContainer: Send the specified signal to the container.

isContainerAlive: Check whether the container is alive.

reacquireContainer: Recover an existing container. This is a blocking call that returns only when the container exits. Note that the container must be activated before this call.

writeLaunchEnv: Write the default container launch script for the startup environment.

readDirAsUser: Read a directory as the specified user.

getRunCommand: Get the run command.

isContainerActive: Check whether the container is marked as active.

activateContainer: Mark the container as active.

pauseContainer: Suspend the container. The default implementation kills the container; subclasses can override it.

getProcessId: Get the process ID for a container ID.

enum Signal: Signal Enumeration

NULL(0, "NULL"),

QUIT(3, "SIGQUIT"),

KILL(9, "SIGKILL"),

TERM(15, "SIGTERM");

DelayedProcessKiller: A class that kills a process by sending it the given signal. (The details of this class are not yet clear to me.)

Next, we will explain each executor in turn:

DefaultContainerExecutor

DefaultContainerExecutor, DCE for short. Each Container runs in a separate process, but every process is started by the user that runs the NM. For example, if the NM process is started by the yarn user, all Container processes are also started by the yarn user.

When a ContainerExecutor starts a Container, three scripts are involved:

- default_container_executor.sh

- default_container_executor_session.sh

- launch_container.sh

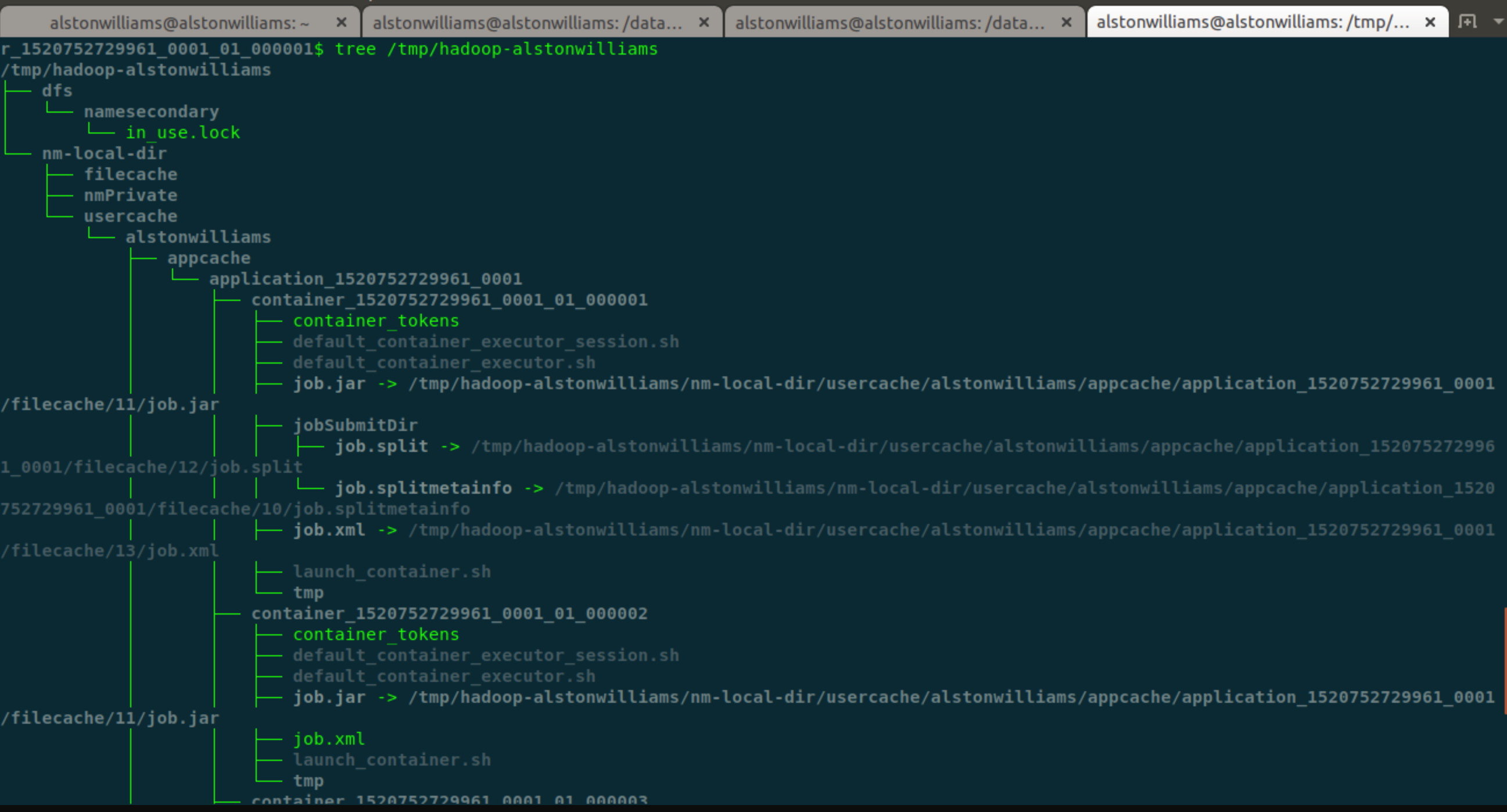

All three scripts belong to a specific Container, so they are placed in that Container's directory.

The NodeManager creates a directory for each Application and each Container. Each Container's directory holds the information needed to run that Container.

Generally these directories are located under /tmp and are deleted once the Application completes, to reduce disk space consumption.

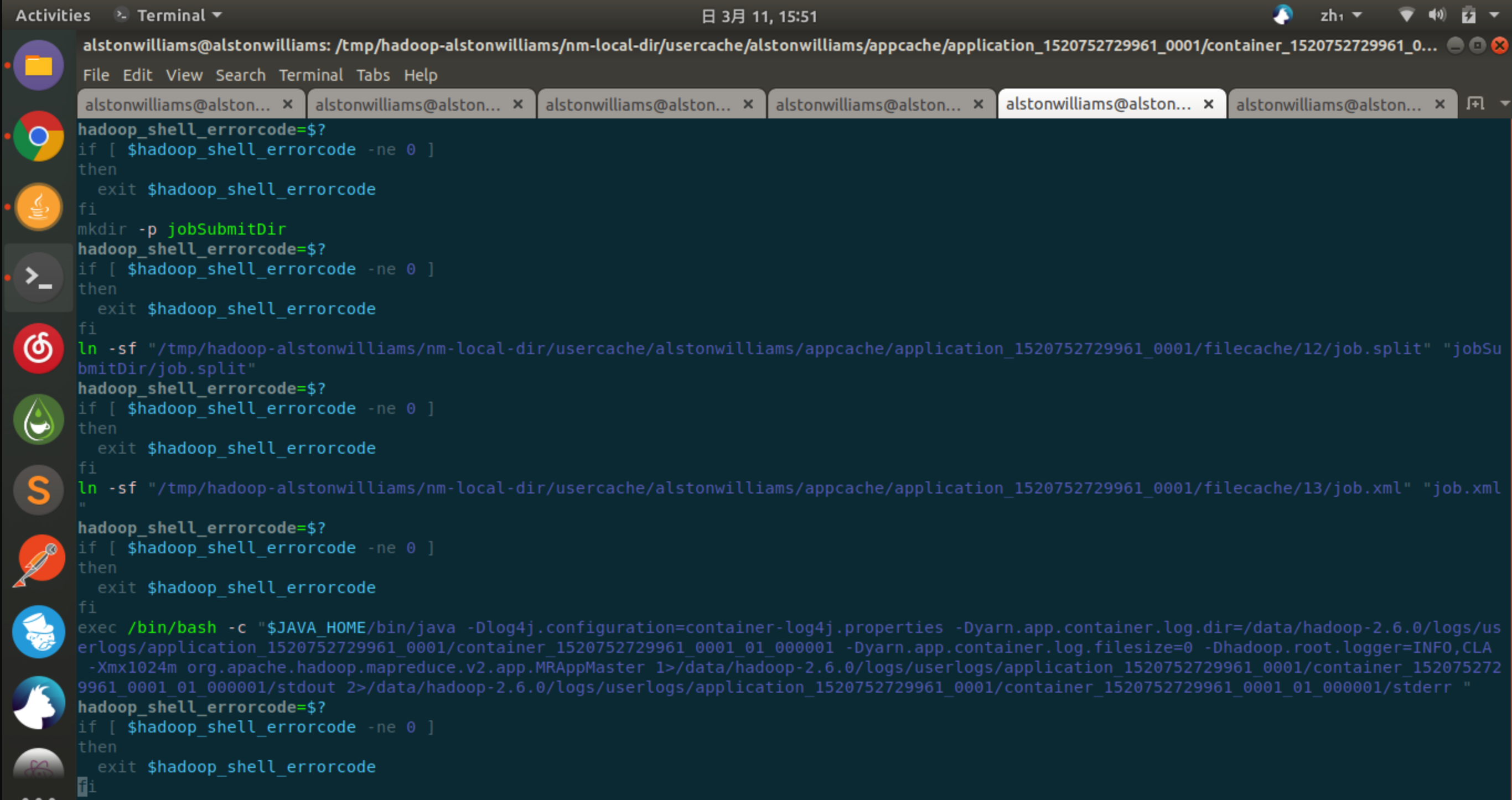
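
A minimal sketch of how the per-Container directory paths are composed. It follows the $local.dir/usercache/$user/appcache/$appId layout named in the comments of the startLocalizer code later in this article. The class name ContainerDirs and the sample values are made up for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class ContainerDirs {
    // Directory names as they appear in the layout described in this article.
    static final String USERCACHE = "usercache";
    static final String APPCACHE = "appcache";

    // Build $local.dir/usercache/$user/appcache/$appId/$containerId
    // once for every configured local directory.
    static List<String> containerDirs(List<String> localDirs, String user,
                                      String appId, String containerId) {
        List<String> result = new ArrayList<>();
        for (String localDir : localDirs) {
            result.add(String.join("/",
                localDir, USERCACHE, user, APPCACHE, appId, containerId));
        }
        return result;
    }

    public static void main(String[] args) {
        // Sample values are invented; real IDs are generated by YARN.
        List<String> dirs = containerDirs(
            Arrays.asList("/data1/nm-local", "/data2/nm-local"),
            "alice", "application_1_0001", "container_1_0001_01_000001");
        dirs.forEach(System.out::println);
    }
}
```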

Let's take a look at the contents of the three script files mentioned above.

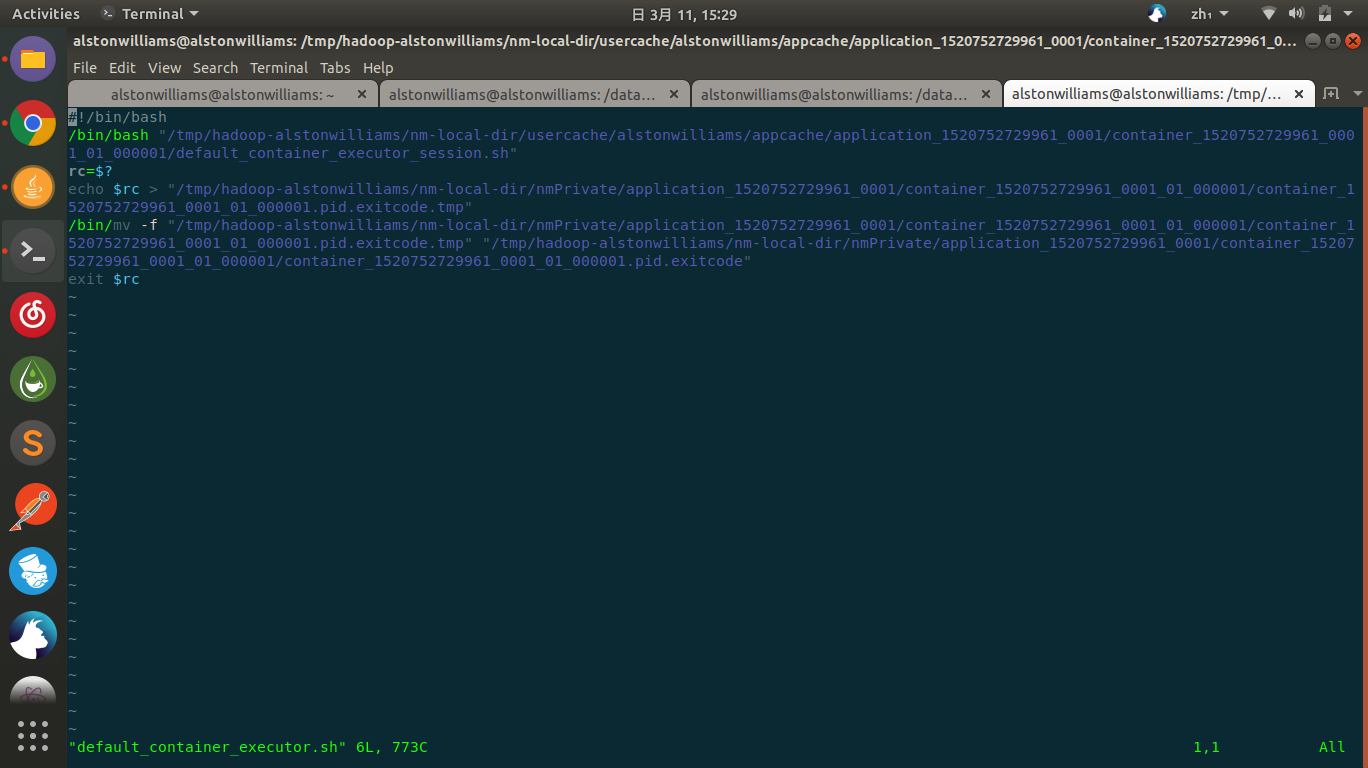

default_container_executor.sh:

We can see that this script starts default_container_executor_session.sh

and writes the exit code to a file for this Container, named after the Container ID with the suffix .pid.exitcode.

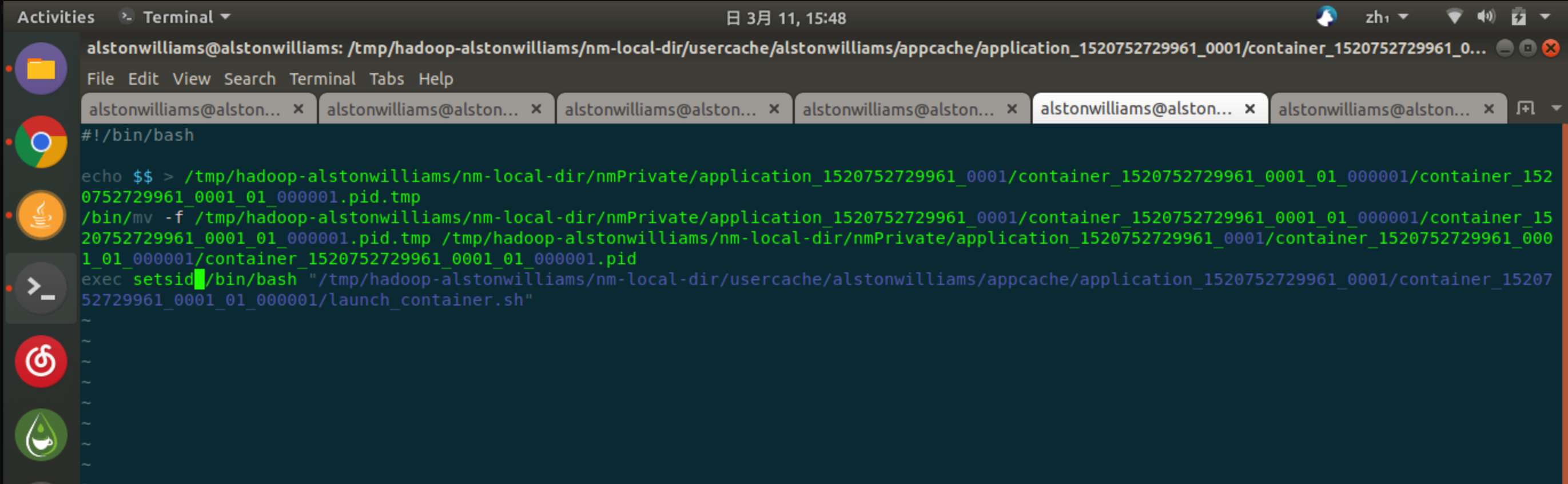

What about the default_container_executor_session.sh script?

As you can see, it mainly starts the launch_container.sh script.

launch_container.sh, in turn, is responsible for running the actual Container process, which may be an MRAppMaster, a Mapper or a Reducer.

launch_container.sh also sets many environment variables.

Because the Container examined here belongs to an ApplicationMaster, the script starts MRAppMaster.

So DefaultContainerExecutor should execute the default_container_executor.sh script first, right?

Yes, that's right.

Next comes the code:

startLocalizer: start the localizer that prepares the execution environment

/**

* todo Starts the localizer; the start context object must be passed in

* todo LocalizerStartContext: encapsulates the information needed to start the localizer.

*

* @param ctx LocalizerStartContext that encapsulates necessary information

* for starting a localizer.

* @throws IOException

* @throws InterruptedException

*/

@Override

public void startLocalizer(LocalizerStartContext ctx)

throws IOException, InterruptedException {

Path nmPrivateContainerTokensPath = ctx.getNmPrivateContainerTokens();

//todo Gets the NodeManager communication address

InetSocketAddress nmAddr = ctx.getNmAddr();

String user = ctx.getUser();

String appId = ctx.getAppId();

String locId = ctx.getLocId();

LocalDirsHandlerService dirsHandler = ctx.getDirsHandler();

//todo local file directory

List<String> localDirs = dirsHandler.getLocalDirs();

//todo local log file directory

List<String> logDirs = dirsHandler.getLogDirs();

//todo initializes the local directories of this user under localDirs

// create $local.dir/usercache/$user and its immediate parent

createUserLocalDirs(localDirs, user);

//todo creates the user cache directory.

// create $local.dir/usercache/$user/appcache

// create $local.dir/usercache/$user/filecache

createUserCacheDirs(localDirs, user);

//todo creates the app directory

// create $local.dir/usercache/$user/appcache/$appId

createAppDirs(localDirs, user, appId);

//todo creates the app log directory

// create $log.dir/$appid

createAppLogDirs(appId, logDirs, user);

// randomly choose the local directory

// todo chooses the working directory

// Returns an application directory chosen at random from the list of local storage directories. The probability of selecting a directory is proportional to its size.

Path appStorageDir = getWorkingDir(localDirs, user, appId);

String tokenFn =

String.format(ContainerLocalizer.TOKEN_FILE_NAME_FMT, locId);

Path tokenDst = new Path(appStorageDir, tokenFn);

//todo copies the token file into the working directory

copyFile(nmPrivateContainerTokensPath, tokenDst, user);

LOG.info("Copying from " + nmPrivateContainerTokensPath

+ " to " + tokenDst);

FileContext localizerFc =

FileContext.getFileContext(lfs.getDefaultFileSystem(), getConf());

localizerFc.setUMask(lfs.getUMask());

localizerFc.setWorkingDirectory(appStorageDir);

LOG.info("Localizer CWD set to " + appStorageDir + " = "

+ localizerFc.getWorkingDirectory());

ContainerLocalizer localizer =

createContainerLocalizer(user, appId, locId, localDirs, localizerFc);

// TODO: DO it over RPC for maintaining similarity?

localizer.runLocalization(nmAddr);

}
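
The comment on getWorkingDir says that a directory is chosen at random with probability proportional to its size. One way to do such a weighted pick is sketched below. This is not the Hadoop implementation: WeightedDirPicker and its method names are hypothetical, and using the available bytes per directory as the weight is an assumption.

```java
import java.util.concurrent.ThreadLocalRandom;

final class WeightedDirPicker {
    // Map a position in [0, total) onto the index of the directory whose
    // weight range contains it. Directories with weight 0 are never chosen.
    static int pickIndex(long[] availableBytes, long position) {
        for (int i = 0; i < availableBytes.length; i++) {
            if (position < availableBytes[i]) {
                return i;
            }
            position -= availableBytes[i];
        }
        throw new IllegalArgumentException("position is outside the total weight");
    }

    // Draw a uniform position over the summed weights and map it to an index.
    static int pickRandom(long[] availableBytes) {
        long total = 0;
        for (long bytes : availableBytes) {
            total += bytes;
        }
        if (total <= 0) {
            throw new IllegalStateException("no space available in any directory");
        }
        return pickIndex(availableBytes, ThreadLocalRandom.current().nextLong(total));
    }

    public static void main(String[] args) {
        long[] available = {10L, 30L, 60L};
        System.out.println("picked directory index " + pickRandom(available));
    }
}
```

Separating pickIndex from the random draw keeps the mapping deterministic and easy to check: with weights 10, 30 and 60, positions 0 to 9 select the first directory, 10 to 39 the second, and 40 to 99 the third.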

launchContainer: start the Container

The core of this method is to build a Shell.CommandExecutor, which runs the shell launch command that starts the wrapper script...

/**

* todo Starts the Container

*

* @param ctx Encapsulates information necessary for launching containers.

* @return

* @throws IOException

* @throws ConfigurationException

*/

@Override

public int launchContainer(ContainerStartContext ctx)

throws IOException, ConfigurationException {

Container container = ctx.getContainer();

Path nmPrivateContainerScriptPath = ctx.getNmPrivateContainerScriptPath();

Path nmPrivateTokensPath = ctx.getNmPrivateTokensPath();

String user = ctx.getUser();

Path containerWorkDir = ctx.getContainerWorkDir();

List<String> localDirs = ctx.getLocalDirs();

List<String> logDirs = ctx.getLogDirs();

FsPermission dirPerm = new FsPermission(APPDIR_PERM);

ContainerId containerId = container.getContainerId();

// todo creates container directories on all disks

String containerIdStr = containerId.toString();

String appIdStr =

containerId.getApplicationAttemptId().

getApplicationId().toString();

for (String sLocalDir : localDirs) {

Path usersdir = new Path(sLocalDir, ContainerLocalizer.USERCACHE);

Path userdir = new Path(usersdir, user);

Path appCacheDir = new Path(userdir, ContainerLocalizer.APPCACHE);

Path appDir = new Path(appCacheDir, appIdStr);

Path containerDir = new Path(appDir, containerIdStr);

createDir(containerDir, dirPerm, true, user);

}

// todo creates log directories on all hard disks

createContainerLogDirs(appIdStr, containerIdStr, logDirs, user);

//todo creates the temporary file directory: ./tmp

Path tmpDir = new Path(containerWorkDir,

YarnConfiguration.DEFAULT_CONTAINER_TEMP_DIR);

createDir(tmpDir, dirPerm, false, user);

// todo copy container tokens to work dir

Path tokenDst =

new Path(containerWorkDir, ContainerLaunch.FINAL_CONTAINER_TOKENS_FILE);

copyFile(nmPrivateTokensPath, tokenDst, user);

// todo copy launch script to work dir

Path launchDst =

new Path(containerWorkDir, ContainerLaunch.CONTAINER_SCRIPT);

copyFile(nmPrivateContainerScriptPath, launchDst, user);

// Create new local launch wrapper script

// todo creates a new local startup wrapper script

LocalWrapperScriptBuilder sb = getLocalWrapperScriptBuilder(

containerIdStr, containerWorkDir);

// Fail fast if attempting to launch the wrapper script would fail due to

// Windows path length limitation.

if (Shell.WINDOWS &&

sb.getWrapperScriptPath().toString().length() > WIN_MAX_PATH) {

throw new IOException(String.format(

"Cannot launch container using script at path %s, because it exceeds " +

"the maximum supported path length of %d characters. Consider " +

"configuring shorter directories in %s.", sb.getWrapperScriptPath(),

WIN_MAX_PATH, YarnConfiguration.NM_LOCAL_DIRS));

}

Path pidFile = getPidFilePath(containerId);

if (pidFile != null) {

//todo gets pidFile and writes the startup script

sb.writeLocalWrapperScript(launchDst, pidFile);

} else {

LOG.info("Container " + containerIdStr

+ " pid file not set. Returning terminated error");

return ExitCode.TERMINATED.getExitCode();

}

// create log dir under app

// fork script

Shell.CommandExecutor shExec = null;

try {

setScriptExecutable(launchDst, user);

setScriptExecutable(sb.getWrapperScriptPath(), user);

shExec = buildCommandExecutor(sb.getWrapperScriptPath().toString(),

containerIdStr, user, pidFile, container.getResource(),

new File(containerWorkDir.toUri().getPath()),

container.getLaunchContext().getEnvironment());

// todo run the launch command only if the container is still marked as active

if (isContainerActive(containerId)) {

// todo execute the wrapper script; this call blocks until the script exits

shExec.execute();

} else {

LOG.info("Container " + containerIdStr +

" was marked as inactive. Returning terminated error");

return ExitCode.TERMINATED.getExitCode();

}

} catch (IOException e) {

if (null == shExec) {

return -1;

}

int exitCode = shExec.getExitCode();

LOG.warn("Exit code from container " + containerId + " is : " + exitCode);

// 143 (SIGTERM) and 137 (SIGKILL) exit codes means the container was

// terminated/killed forcefully. In all other cases, log the

// container-executor's output

if (exitCode != ExitCode.FORCE_KILLED.getExitCode()

&& exitCode != ExitCode.TERMINATED.getExitCode()) {

LOG.warn("Exception from container-launch with container ID: "

+ containerId + " and exit code: " + exitCode , e);

StringBuilder builder = new StringBuilder();

builder.append("Exception from container-launch.\n");

builder.append("Container id: ").append(containerId).append("\n");

builder.append("Exit code: ").append(exitCode).append("\n");

if (!Optional.fromNullable(e.getMessage()).or("").isEmpty()) {

builder.append("Exception message: ");

builder.append(e.getMessage()).append("\n");

}

if (!shExec.getOutput().isEmpty()) {

builder.append("Shell output: ");

builder.append(shExec.getOutput()).append("\n");

}

String diagnostics = builder.toString();

logOutput(diagnostics);

container.handle(new ContainerDiagnosticsUpdateEvent(containerId,

diagnostics));

} else {

container.handle(new ContainerDiagnosticsUpdateEvent(containerId,

"Container killed on request. Exit code is " + exitCode));

}

return exitCode;

} finally {

if (shExec != null) shExec.close();

}

return 0;

}
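
The catch block above treats exit codes 143 and 137 specially. These values follow the usual shell convention that a process killed by signal N is reported with exit status 128 + N. The sketch below spells out that arithmetic. The class ExitCodes is hypothetical and only mirrors the check; the signal numbers are those from the Signal enum shown earlier.

```java
final class ExitCodes {
    // Signal numbers as listed in the Signal enum earlier in this article.
    static final int SIGKILL = 9;
    static final int SIGTERM = 15;

    // Shell convention: a process terminated by signal N exits with 128 + N.
    static int exitCodeForSignal(int signal) {
        return 128 + signal;
    }

    // True when the exit code means the container was killed or terminated
    // on request, i.e. the case where only a short diagnostic is recorded.
    static boolean killedOnRequest(int exitCode) {
        return exitCode == exitCodeForSignal(SIGKILL)
            || exitCode == exitCodeForSignal(SIGTERM);
    }

    public static void main(String[] args) {
        System.out.println(exitCodeForSignal(SIGTERM));
        System.out.println(exitCodeForSignal(SIGKILL));
        System.out.println(killedOnRequest(1));
    }
}
```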

We can also see that this implementation has a weakness: it provides poor resource isolation.

All Containers are started by the user running the NodeManager.

LinuxContainerExecutor

LinuxContainerExecutor, LCE for short. Each Container is started by a different user: for example, the Containers of a job submitted by user A are started as user A. In addition, it supports cgroups, a separate configuration file and a simple ACL.

LCE clearly provides better isolation, but it has some limitations:

- Native Linux program support is required. To be exact, this is the container-executor program, written in C. The code can be found in hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-nodemanager/src/main/native/container-executor. When compiling Hadoop, be sure to compile container-executor as well. The path of container-executor is specified by the property yarn.nodemanager.linux-container-executor.path.

- container-executor also needs a configuration file, container-executor.cfg, and the relative path between this file and the container-executor binary is fixed. By default container-executor looks for the configuration file under ../etc/hadoop and reports an error if it cannot find it. The location can be specified when compiling Hadoop: mvn package -Pdist,native -DskipTests -Dtar -Dcontainer-executor.conf.dir=../../conf. I don't know why it is designed like this.

- Since Containers are started by different users, the corresponding Linux users must exist; otherwise an exception is thrown. This causes some administrative overhead: for example, when adding a new user B, useradd B has to be executed on all NM nodes.

- The owner of container-executor and container-executor.cfg must be root, and every directory on their path all the way up to / must also be owned by root. So we usually put these two files under /etc/yarn.

- The permissions of the container-executor file must be 6050, i.e. ---Sr-s---, because it relies on setuid/setgid. Its group must be a group that the user who starts the NM belongs to. For example, if the NM is started by the yarn user and the yarn user belongs to the hadoop group, container-executor must also belong to the hadoop group.
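
To see why mode 6050 is displayed as ---Sr-s---, the sketch below converts an octal mode into the symbolic form that ls prints. FileMode is a hypothetical helper written for this article, not part of Hadoop.

```java
final class FileMode {
    // Convert a numeric Unix mode (permission and special bits only) into
    // the 10-character string printed by ls for a regular file.
    static String toSymbolic(int mode) {
        char[] out = "----------".toCharArray();
        String rwx = "rwx";
        // Bits 8..0 are the owner, group and other rwx triplets, in that order.
        for (int i = 0; i < 9; i++) {
            if ((mode & (1 << (8 - i))) != 0) {
                out[i + 1] = rwx.charAt(i % 3);
            }
        }
        // setuid, setgid and sticky replace the execute slot of their triplet:
        // lowercase when execute is also set, uppercase when it is not.
        if ((mode & 04000) != 0) out[3] = (out[3] == 'x') ? 's' : 'S';
        if ((mode & 02000) != 0) out[6] = (out[6] == 'x') ? 's' : 'S';
        if ((mode & 01000) != 0) out[9] = (out[9] == 'x') ? 't' : 'T';
        return new String(out);
    }

    public static void main(String[] args) {
        System.out.println(toSymbolic(06050)); // prints ---Sr-s---
    }
}
```

The capital S in the owner triplet shows that setuid is set while the owner has no execute permission; only members of the group can run the binary, and it then runs with the owner's (root's) privileges.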

Memory isolation

YARN does not really isolate memory. Instead it monitors the memory usage of Container processes and kills them as soon as they exceed the limit. The related logic is in the ContainersMonitorImpl class.

The process monitoring logic is in the ProcfsBasedProcessTree class. It works by reading the files under /proc/$pid to obtain the memory usage of the process.

The specific logic is not covered in detail here; it is somewhat complicated.
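
The monitor-and-kill idea can be illustrated with a much simplified sketch. It assumes that memory usage is taken from the VmRSS line of /proc/$pid/status and that the usage of all processes of a Container is simply summed. Both are assumptions for illustration; the real ProcfsBasedProcessTree and ContainersMonitorImpl classes are more involved. MemoryCheck and its methods are hypothetical names.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class MemoryCheck {
    // Matches a line such as "VmRSS:      2048 kB" in /proc/<pid>/status.
    private static final Pattern VM_RSS =
        Pattern.compile("(?m)^VmRSS:\\s+(\\d+)\\s+kB\\s*$");

    // Extract the resident set size in bytes from the status text,
    // or return -1 when no VmRSS line is present.
    static long rssBytes(String statusText) {
        Matcher m = VM_RSS.matcher(statusText);
        return m.find() ? Long.parseLong(m.group(1)) * 1024L : -1L;
    }

    // A container is over its limit when the summed usage of all of its
    // processes exceeds the configured limit.
    static boolean overLimit(long[] processRssBytes, long limitBytes) {
        long total = 0;
        for (long rss : processRssBytes) {
            total += rss;
        }
        return total > limitBytes;
    }

    public static void main(String[] args) {
        String status = "Name:\tjava\nVmRSS:\t    2048 kB\nThreads:\t4\n";
        long rss = rssBytes(status);
        System.out.println(rss + " bytes, over limit: "
            + overLimit(new long[] {rss}, 1024L * 1024L));
    }
}
```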

CPU isolation

By default, YARN does not enforce CPU isolation at all, even when LCE is used.

So if a task is CPU intensive, it may consume all of the CPU on the NM node.

(This depends on the specific application. An MR task uses at most one core.)

cgroup

YARN supports cgroup isolation of CPU resources: YARN-3.

cgroups require LCE and are not enabled by default. To enable them, set the property yarn.nodemanager.linux-container-executor.resources-handler.class to org.apache.hadoop.yarn.server.nodemanager.util.CgroupsLCEResourcesHandler.

Many other cgroup-related properties can be tuned; see the configuration in yarn-default.xml.
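
As a rough illustration of what CPU isolation through cgroups means, the sketch below assumes the relative-weight model of the cgroup cpu controller (cpu.shares in cgroup v1): when all groups compete for CPU, each group receives a fraction equal to its weight divided by the sum of the weights. How YARN derives the weight of each Container is not covered here, and the class CpuShares and the sample weights are hypothetical.

```java
final class CpuShares {
    // Fraction of CPU time a group receives under full contention:
    // its own weight divided by the sum of all competing weights.
    static double fraction(int[] shares, int index) {
        long total = 0;
        for (int s : shares) {
            total += s;
        }
        return (double) shares[index] / total;
    }

    public static void main(String[] args) {
        // Two competing containers with made-up weights 1024 and 3072.
        int[] shares = {1024, 3072};
        System.out.println(fraction(shares, 0));
        System.out.println(fraction(shares, 1));
    }
}
```

The weights only matter under contention: a group may use idle CPU beyond its fraction unless a hard limit is configured as well.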

Localization process

We came across this while researching ContainerExecutor, and studying it was quite painful...

It is similar to the earlier distributed cache, but YARN's version is more general.

It mainly distributes all the files needed to run the container, including libraries, tokens and so on.

This process is called localization and is the responsibility of the ResourceLocalizationService class.

There are several steps:

- Create the relevant directories: $local.dir/usercache/$user/filecache for the temporary user-visible distributed cache; $local.dir/usercache/$user/appcache/$appid/filecache for the temporary app-visible distributed cache; $log.dir/$appid/$containerid for temporary logs. Only the deepest level of each directory is listed here; if a parent directory does not exist, it is created. For DCE, these directories are created directly in Java code. For LCE, container-executor is called to create them. See the usage of container-executor above. Note that these directories are created on all disks (our nodes usually have 12 disks, so they are created 12 times), but only one is actually used.

- Write the token file to the $local.dir/usercache/$user/appcache/$appid directory. There is a bug here: both DCE and LCE write the token file to the first local-dir, so there may be contention that causes later container startups to fail. See YARN-2566 and YARN-2623.

- For DCE, a ContainerLocalizer object is created directly and its runLocalization method is called. This method gets the URIs of the files to be distributed from the ResourceLocalizationService and downloads them locally. For LCE, a separate JVM process is started that communicates with the ResourceLocalizationService through the LocalizationProtocol RPC protocol. The function is the same.
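
The last step, asking the service which files to fetch and downloading them, can be sketched as a simple loop. This is only a sketch under stated assumptions: LocalizationService, its heartbeat method and LocalizerLoop are hypothetical stand-ins, not the real LocalizationProtocol API, and the download is reduced to recording the URI.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Queue;

// Hypothetical stand-in for the service side of the localization protocol.
interface LocalizationService {
    // Report the URIs finished since the last call and receive the next
    // batch to fetch; an empty list means there is nothing left to do.
    List<String> heartbeat(List<String> completed);
}

final class LocalizerLoop {
    // Keep asking the service for work, fetch every URI in the batch, and
    // report the finished URIs with the next heartbeat.
    static List<String> run(LocalizationService service) {
        List<String> all = new ArrayList<>();
        List<String> completed = new ArrayList<>();
        while (true) {
            List<String> pending = service.heartbeat(completed);
            if (pending.isEmpty()) {
                return all;
            }
            completed = new ArrayList<>();
            for (String uri : pending) {
                // A real localizer would copy the file to a local directory here.
                completed.add(uri);
                all.add(uri);
            }
        }
    }

    public static void main(String[] args) {
        // Made-up batches standing in for what the service would hand out.
        Queue<List<String>> batches = new ArrayDeque<>();
        batches.add(Arrays.asList("hdfs:///libs/job.jar", "hdfs:///libs/dep.jar"));
        batches.add(Arrays.asList("hdfs:///conf/job.xml"));
        List<String> fetched = run(done ->
            batches.isEmpty() ? Collections.<String>emptyList() : batches.poll());
        System.out.println(fetched);
    }
}
```

Whether the loop runs inside the NodeManager JVM (DCE) or in a separate JVM that talks to the service over RPC (LCE), the control flow is the same; only the transport behind the service interface differs.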

To be added...

Reference links:

https://www.jianshu.com/p/e79b6a10dc85

http://jxy.me/2015/05/15/yarn-container-executor/