Recently, while using a DBN (deep belief network) for data prediction, I found that there was no such program online, so I decided to share one in this blog.

Before running the code below, you need to install the deep learning toolbox (DeepLearnToolbox, which provides `dbnsetup`, `dbntrain` and `nntrain`). Other blogs explain how to add a toolbox to MATLAB, so I won't repeat it here.

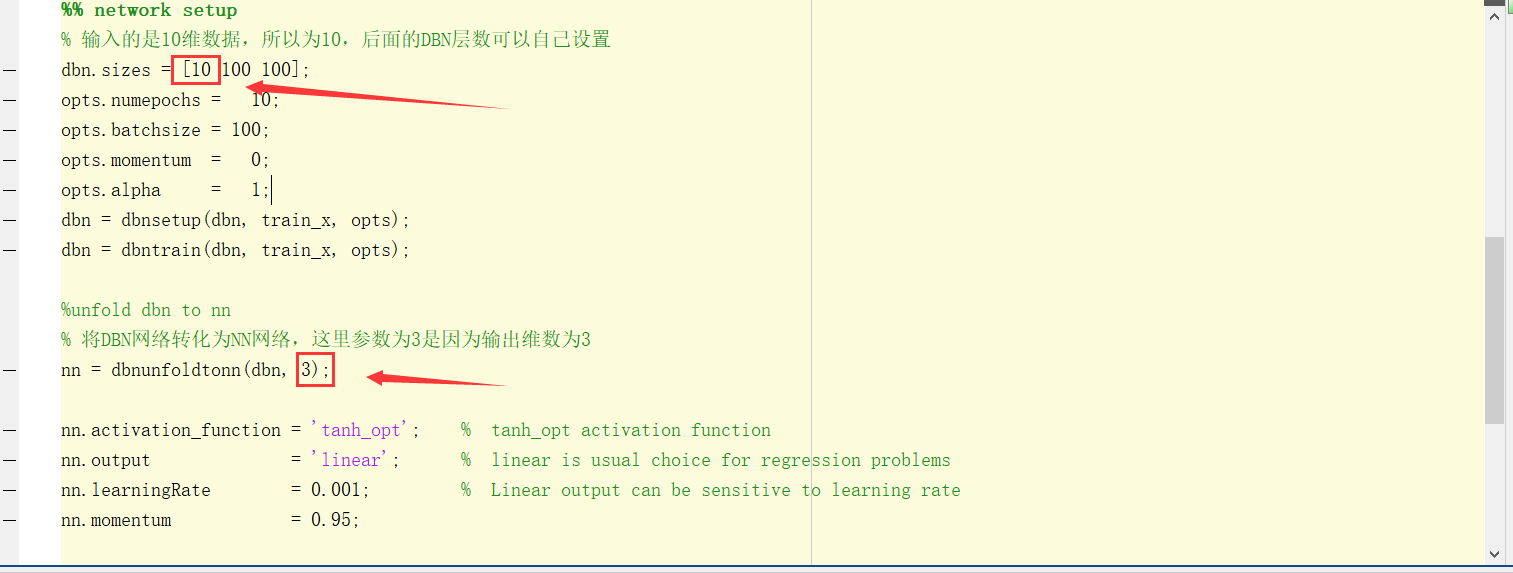

```matlab
clc; clear;

%% Generate data
% Some invented nonlinear relationships to get 3 noisy output targets
% 20000 records with 10 features
all_x = randn(20000, 10);
all_y = randn(20000, 3) * 0.01;
all_y(:,1) = all_y(:,1) + sum( all_x(:,1:5) .* all_x(:,3:7), 2 );
all_y(:,2) = all_y(:,2) + sum( all_x(:,5:9) .* all_x(:,4:8) .* all_x(:,2:6), 2 );
all_y(:,3) = all_y(:,3) + log( sum( all_x(:,4:8) .* all_x(:,4:8), 2 ) ) * 3.0;

% Input data: 20000x10, output data: 20000x3.
% The first 19,000 samples are used for training, the last 1,000 for testing.
train_x = all_x(1:19000,:);
train_y = all_y(1:19000,:);
test_x  = all_x(19001:20000,:);
test_y  = all_y(19001:20000,:);

% The constructed data is already normalized, but this is usually best practice.
% normalize() must resolve to the toolbox's util function, not a built-in of the same name.
[train_x, mu, sigma] = zscore(train_x);
test_x = normalize(test_x, mu, sigma);

%% Network setup
% The input is 10-dimensional, so the first entry is 10; the hidden layer sizes are up to you.
% Caution: the stock DeepLearnToolbox dbnsetup prepends size(train_x, 2) to dbn.sizes itself,
% in which case [10 100 100] gives hidden layers of 10, 100 and 100 units. Check your copy.
dbn.sizes = [10 100 100];
opts.numepochs = 10;
opts.batchsize = 100;
opts.momentum  = 0;
opts.alpha     = 1;
dbn = dbnsetup(dbn, train_x, opts);
dbn = dbntrain(dbn, train_x, opts);

%% Unfold DBN to NN
% Convert the DBN into an NN; the argument is 3 because the output dimension is 3
nn = dbnunfoldtonn(dbn, 3);
nn.activation_function = 'tanh_opt';  % tanh_opt activation function
nn.output       = 'linear';           % linear is the usual choice for regression problems
nn.learningRate = 0.001;              % linear output can be sensitive to the learning rate
nn.momentum     = 0.95;
opts.numepochs  = 20;                 % number of full sweeps through the data
opts.batchsize  = 100;                % take a mean gradient step over this many samples
[nn, L] = nntrain(nn, train_x, train_y, opts);

%% Predict and evaluate
predictions = nnoutput(nn, test_x);         % predicted regression values
[er, bad]   = nntest(nn, test_x, test_y);   % error calculation
assert(er < 1.5, 'Too big error');
```
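The normalization step in the listing scales the test set with the *training* set's mean and standard deviation, so nothing from the test data leaks into preprocessing. Because `normalize` can clash with a MATLAB function of the same name, here is a dependency-free sketch of the same step. It assumes the toolbox's `normalize(x, mu, sigma)` simply computes `(x - mu) ./ sigma`, and it uses plain `mean`/`std` in place of `zscore`; the stand-in data is made up.

```matlab
% Stand-in data with a non-zero mean and non-unit spread (hypothetical values).
train_x = randn(1000, 10) * 3 + 5;
test_x  = randn(200, 10) * 3 + 5;

% Statistics come from the training set only (std with N-1 weighting, like zscore).
mu    = mean(train_x, 1);
sigma = std(train_x, 0, 1);
sigma(sigma == 0) = 1;          % guard against constant columns

% Apply the same shift and scale to both sets.
train_x = bsxfun(@rdivide, bsxfun(@minus, train_x, mu), sigma);
test_x  = bsxfun(@rdivide, bsxfun(@minus, test_x,  mu), sigma);
```

After this, each training column has mean 0 and standard deviation 1, while the test columns are only approximately standardized, which is expected.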

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

Many people have commented on this post: some ran into bugs, and others found the predictions not accurate enough. I originally wrote this post rather casually, and I'm very glad it has helped so many people. When I started working on DBNs, I found no MATLAB code for prediction (regression) online; most examples were for classification. The toolbox used here is therefore my modification of the code from the official website. Now let's go through the code step by step.

Note: I am using MATLAB R2016b.

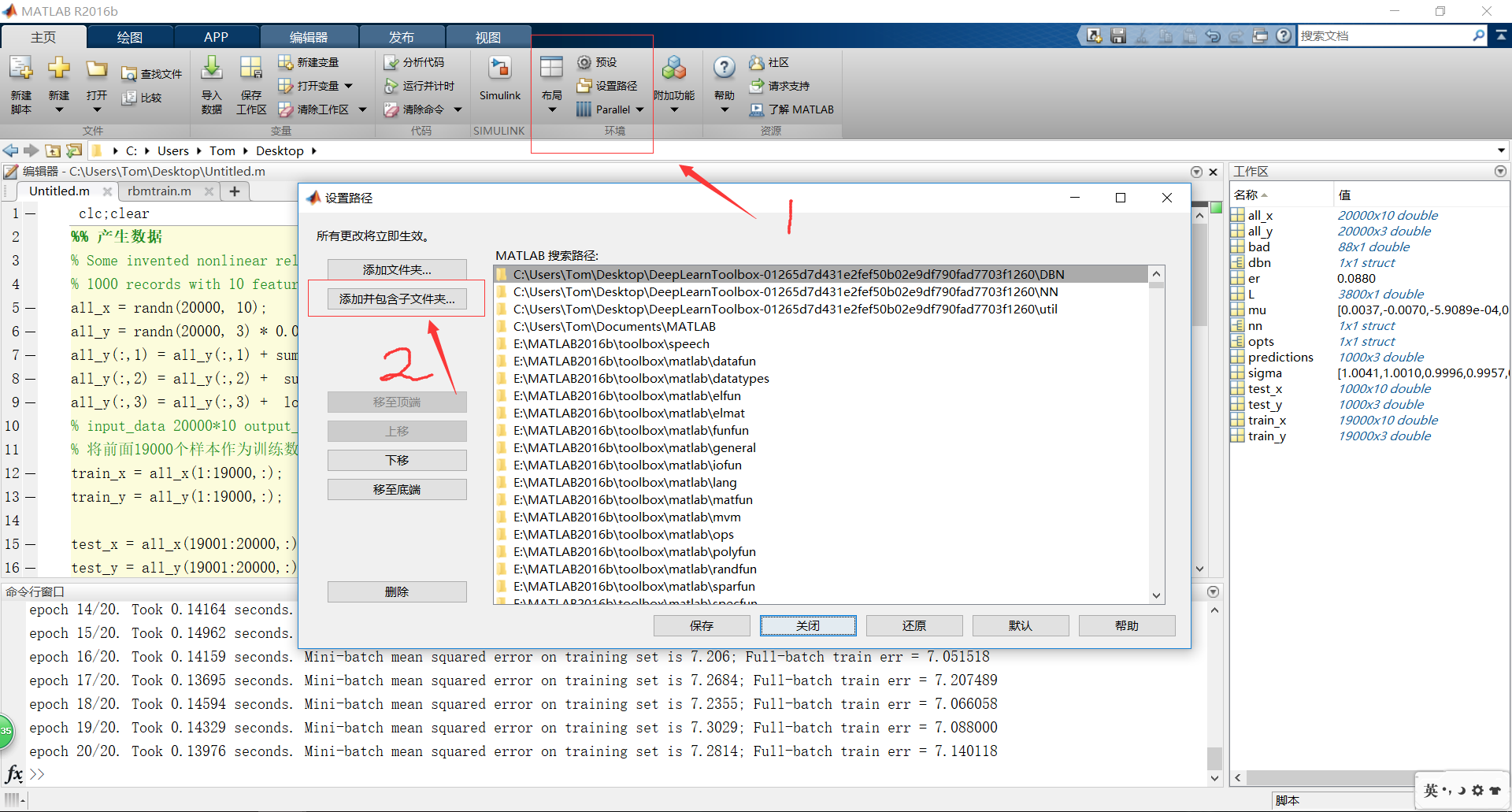

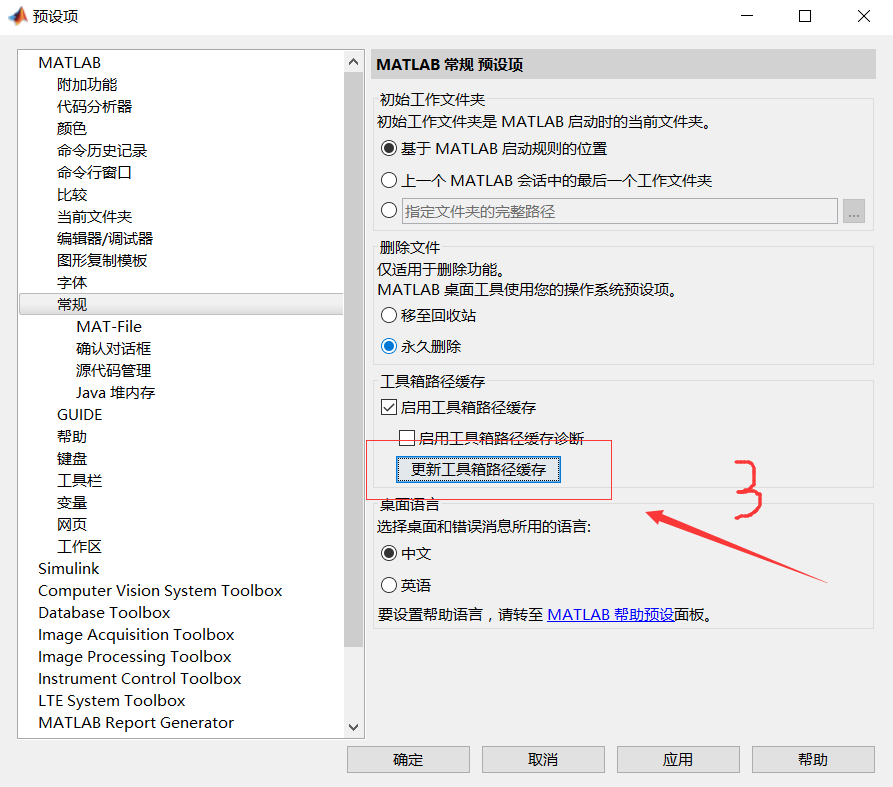

First, download the toolbox, extract it, and add it to the MATLAB path. Some commenters reported that running the code failed; I just tried it myself, and it did indeed fail. The cause is that some function names in the toolbox are the same as MATLAB's own functions, so MATLAB calls the other function instead. The fix is to add the toolbox manually and move it to the top of the search path so that it takes priority when a function is called.
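Instead of the Set Path dialog, the same thing can be done from a script. This is a minimal sketch: the extraction path `toolboxRoot` and the subfolder names `DBN`, `NN` and `util` are assumptions, so adjust them to your own copy of the toolbox.

```matlab
% Hypothetical path: point this at the folder where you extracted the toolbox.
toolboxRoot = fullfile(pwd, 'DeepLearnToolbox');

% Assumed names of the three subfolders; adjust to match your copy.
subdirs = {'DBN', 'NN', 'util'};
for k = 1:numel(subdirs)
    folder = fullfile(toolboxRoot, subdirs{k});
    if exist(folder, 'dir')
        % '-begin' places the folder at the top of the search path, so its
        % functions take priority over same-named functions further down.
        addpath(folder, '-begin');
    else
        warning('Folder not found: %s', folder);
    end
end
% savepath;  % optional: keep the change for future MATLAB sessions
```

Adding each subfolder explicitly matters because `addpath` on the top-level folder alone does not add its subfolders.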

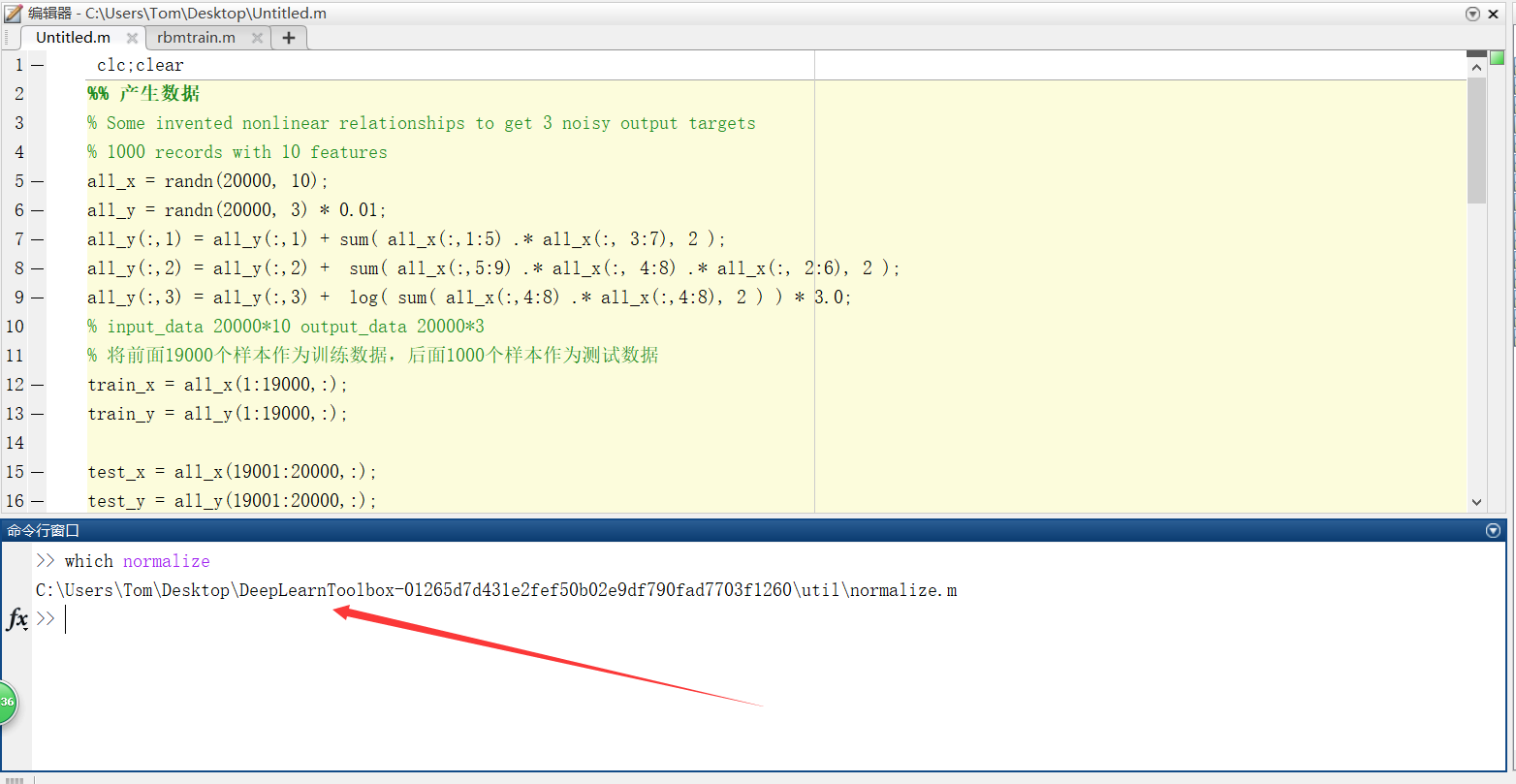

After doing this, type `which` followed by any toolbox function name in the Command Window, and the full path of the file MATLAB will call is displayed. If it points into the toolbox folder, the toolbox has been added correctly. Note that you must add all three folders of the toolbox; I tried adding only the top-level folder, and it did not seem to work.
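To check several functions at once, a small loop over `which(name, '-all')` lists every definition on the path; the first entry is the one MATLAB actually calls. The list of names below is taken from the example code; including `normalize` is an assumption about which name is most likely to clash.

```matlab
% Function names used by the example; normalize is the likeliest clash.
names = {'dbnsetup', 'dbntrain', 'dbnunfoldtonn', 'nntrain', 'nntest', 'normalize'};
for k = 1:numel(names)
    hits = which(names{k}, '-all');   % cell array; the first entry wins
    if isempty(hits)
        fprintf('%-14s NOT FOUND on the path\n', names{k});
    else
        fprintf('%-14s -> %s\n', names{k}, hits{1});
        if numel(hits) > 1
            fprintf('%-14s    (shadows %d other definition(s))\n', '', numel(hits) - 1);
        end
    end
end
```

If `normalize` resolves to anything outside the toolbox's `util` folder, move the toolbox higher on the path.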

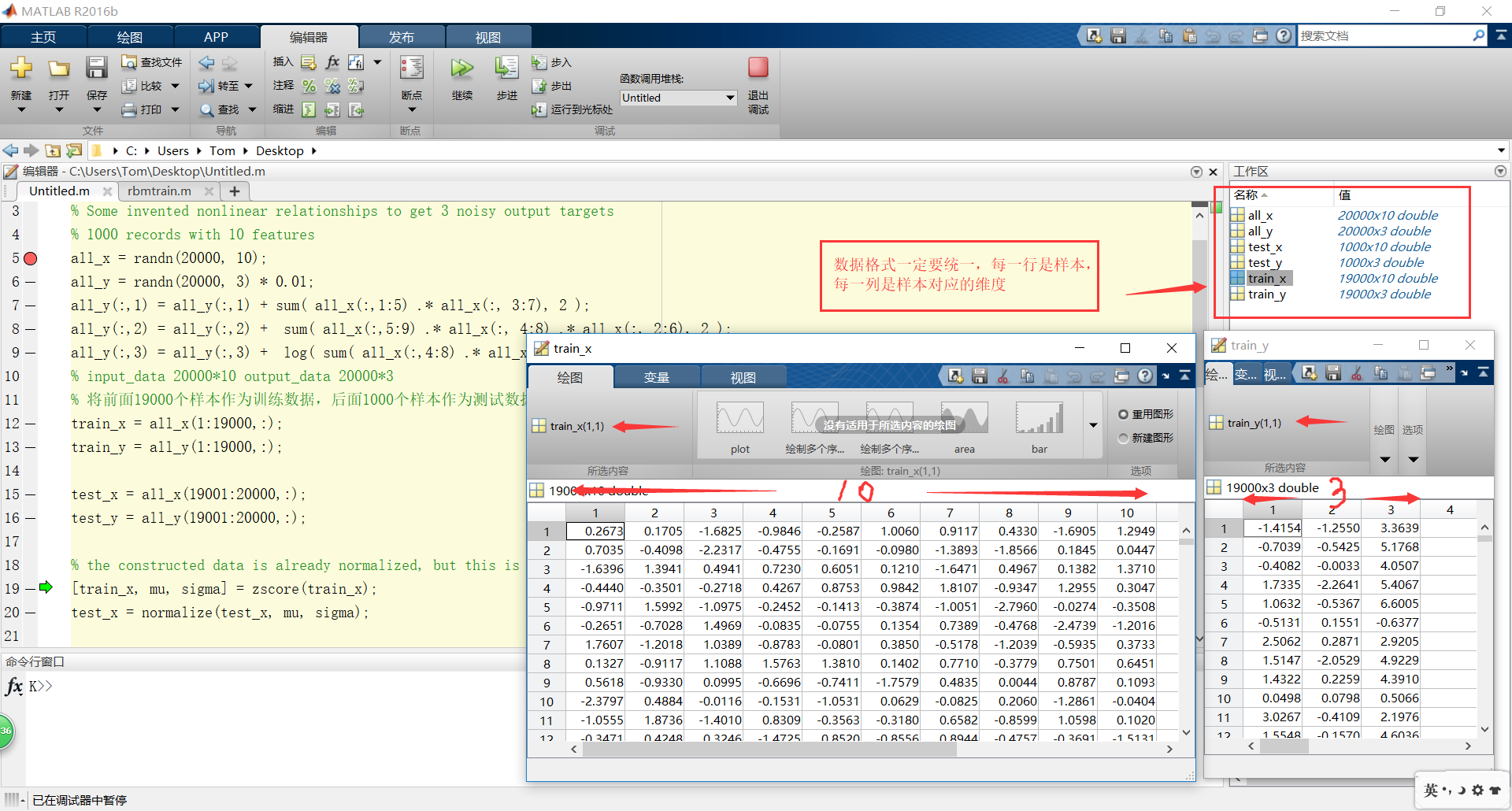

For convenience, we generate some random data. `train_x` is the input of the training set, `train_y` is the output of the training set, `test_x` is the input of the test set, and `test_y` is the output of the test set. The input dimension is 10 and the output dimension is 3.

Note that everyone's dataset has its own input and output dimensions, so these values must be changed to match your actual data.
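One way to avoid hard-coding 10 and 3 is to read the dimensions off the data itself. In this sketch the matrices `X` and `Y` are hypothetical stand-ins for your own data (rows are samples), and the 95/5 split simply mirrors the post's 19,000/1,000 split.

```matlab
% Hypothetical stand-in for your own data: rows are samples.
X = rand(500, 7);    % 7 input features
Y = rand(500, 2);    % 2 output targets

% Same 95/5 train/test ratio as the post (19000 of 20000).
nTrain  = round(0.95 * size(X, 1));
train_x = X(1:nTrain, :);      train_y = Y(1:nTrain, :);
test_x  = X(nTrain+1:end, :);  test_y  = Y(nTrain+1:end, :);

inDim  = size(train_x, 2);   % replaces the hard-coded input dimension 10
outDim = size(train_y, 2);   % replaces the 3 passed to dbnunfoldtonn
fprintf('inputs: %d, outputs: %d, train: %d, test: %d\n', ...
        inDim, outDim, size(train_x, 1), size(test_x, 1));
```

`outDim` would then go into `dbnunfoldtonn(dbn, outDim)`, and `inDim` wherever your copy of the toolbox expects the input size.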

In the end, the accuracy reaches 91%. Tuning the parameters further should push it higher. Watch out for overfitting, local minima, saddle points and similar problems, since the network is trained by iterative optimization.
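The post does not spell out how the 91% figure is computed. For regression, per-output RMSE and the coefficient of determination R² are common yardsticks when tuning. This sketch assumes `predictions` and `test_y` are N-by-3 matrices as in the example; the small matrices below are made-up stand-ins.

```matlab
% Made-up stand-ins; in the example these are predictions and test_y (1000x3).
test_y      = [1 2 3; 2 4 6; 3 6 9; 4 8 12];
predictions = [1.1 2 2.9; 1.9 4.2 6; 3 5.8 9.1; 4.1 8 12];

err   = predictions - test_y;
rmse  = sqrt(mean(err .^ 2, 1));                          % 1x3, one RMSE per output
ssRes = sum(err .^ 2, 1);                                 % residual sum of squares
ssTot = sum(bsxfun(@minus, test_y, mean(test_y, 1)) .^ 2, 1);
r2    = 1 - ssRes ./ ssTot;                               % 1x3, R^2 per output
fprintf('output %d: RMSE = %.4f, R^2 = %.4f\n', [1:3; rmse; r2]);
```

An R² close to 1 means the network explains most of the variance of that output; comparing these numbers before and after a parameter change is more informative than a single aggregate score.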