Note: The Java source code analysis in this article is based on Java 8 unless otherwise specified.

Note: This article covers ForkJoinPool, the thread pool class built around divide and conquer.

Introduction

With multicore processors now in widespread use, concurrent programming has become a skill every programmer must master, and concurrency topics come up often in interviews.

Today, let's look at an interview question:

How can we make full use of a multicore CPU to calculate the sum of all integers in a large array?

Analysis

- Single-threaded addition?

The first thing that comes to mind is single-threaded addition with a plain for loop.

- Addition with a thread pool?

To optimize further, we naturally think of using a thread pool to sum the array in segments and then add up the results of the segments.

- Anything else?

Yes, today's protagonist, ForkJoinPool. But how do you use it? Chances are you have never used it before ^^

Three implementations

OK, the analysis is done. Let's go straight to the three implementations without further ado.

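import java.util.ArrayList;

import java.util.List;

import java.util.concurrent.ExecutionException;

import java.util.concurrent.ExecutorService;

import java.util.concurrent.Executors;

import java.util.concurrent.ForkJoinPool;

import java.util.concurrent.ForkJoinTask;

import java.util.concurrent.Future;

import java.util.concurrent.RecursiveTask;

import java.util.concurrent.ThreadLocalRandom;

/**

* Calculate the sum of 100 million integers

*/

public class ForkJoinPoolTest01 {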

public static void main(String[] args) throws ExecutionException, InterruptedException {

// Construct data

int length = 100000000;

long[] arr = new long[length];

for (int i = 0; i < length; i++) {

arr[i] = ThreadLocalRandom.current().nextInt(Integer.MAX_VALUE);

}

// Single Thread

singleThreadSum(arr);

// ThreadPoolExecutor Thread Pool

multiThreadSum(arr);

// ForkJoinPool Thread Pool

forkJoinSum(arr);

}

private static void singleThreadSum(long[] arr) {

long start = System.currentTimeMillis();

long sum = 0;

for (int i = 0; i < arr.length; i++) {

// Simulate a time-consuming computation

sum += (arr[i]/3*3/3*3/3*3/3*3/3*3);

}

System.out.println("sum: " + sum);

System.out.println("single thread elapse: " + (System.currentTimeMillis() - start));

}

private static void multiThreadSum(long[] arr) throws ExecutionException, InterruptedException {

long start = System.currentTimeMillis();

int count = 8;

ExecutorService threadPool = Executors.newFixedThreadPool(count);

List<Future<Long>> list = new ArrayList<>();

for (int i = 0; i < count; i++) {

int num = i;

// Submit Tasks in Segments

Future<Long> future = threadPool.submit(() -> {

long sum = 0;

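// The last segment runs to the end of the array, so a remainder is not dropped

int end = (num == count - 1) ? arr.length : arr.length / count * (num + 1);

for (int j = arr.length / count * num; j < end; j++) {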

try {

// Time-consuming simulation

sum += (arr[j]/3*3/3*3/3*3/3*3/3*3);

} catch (Exception e) {

e.printStackTrace();

}

}

return sum;

});

list.add(future);

}

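// No more tasks will be submitted; without shutdown() the pool threads keep the JVM alive

threadPool.shutdown();

// Add up the results of each segment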

long sum = 0;

for (Future<Long> future : list) {

sum += future.get();

}

System.out.println("sum: " + sum);

System.out.println("multi thread elapse: " + (System.currentTimeMillis() - start));

}

private static void forkJoinSum(long[] arr) throws ExecutionException, InterruptedException {

long start = System.currentTimeMillis();

ForkJoinPool forkJoinPool = ForkJoinPool.commonPool();

// Submit Tasks

ForkJoinTask<Long> forkJoinTask = forkJoinPool.submit(new SumTask(arr, 0, arr.length));

// Get results

Long sum = forkJoinTask.get();

// The common pool is shared by the whole JVM and ignores shutdown(), so no shutdown call is made here

System.out.println("sum: " + sum);

System.out.println("fork join elapse: " + (System.currentTimeMillis() - start));

}

private static class SumTask extends RecursiveTask<Long> {

private long[] arr;

private int from;

private int to;

public SumTask(long[] arr, int from, int to) {

this.arr = arr;

this.from = from;

this.to = to;

}

@Override

protected Long compute() {

// Sum directly when the range holds at most 1000 elements; tune this threshold as needed

if (to - from <= 1000) {

long sum = 0;

for (int i = from; i < to; i++) {

// Time-consuming simulation

sum += (arr[i]/3*3/3*3/3*3/3*3/3*3);

}

return sum;

}

// Split into two subtasks

int middle = (from + to) / 2;

SumTask left = new SumTask(arr, from, middle);

SumTask right = new SumTask(arr, middle, to);

// Fork the left subtask so that it is queued for execution

left.fork();

// Compute the right subtask directly on the current thread, saving the overhead of another fork

Long rightResult = right.compute();

// Wait until the calculation on the left is complete

Long leftResult = left.join();

// Return results

return leftResult + rightResult;

}

}

}

A little secret: for simply adding up 100 million integers, the single-threaded loop is actually the fastest, taking about 100 ms on my computer, and using a thread pool is slower.

So, to show what ForkJoinPool can do, I apply /3*3/3*3/3*3/3*3/3*3 to each number to simulate a time-consuming computation.

Here are the results:

sum: 107352457433800662

single thread elapse: 789

sum: 107352457433800662

multi thread elapse: 228

sum: 107352457433800662

fork join elapse: 189

As you can see, in this run ForkJoinPool (189 ms) is faster than the ordinary thread pool (228 ms), and both are well ahead of the single thread (789 ms).

Question: Can an ordinary thread pool do what ForkJoinPool does, that is, split a large task into medium tasks, split medium tasks into small tasks, and finally combine the results?

Try it yourself.
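One possible way to attempt the question above is sketched below. It is only an illustration, not the article's answer: the class name PlainPoolSplitSketch, the THRESHOLD value, and the choice of Executors.newCachedThreadPool() are assumptions made for this sketch. Each level submits the left half to an ordinary pool and blocks in Future.get() while waiting for it.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class PlainPoolSplitSketch {

    // Hypothetical cutoff below which a range is summed directly.
    static final int THRESHOLD = 1_000;

    // Recursively splits [from, to) and submits the left half to an ordinary pool.
    static long sum(ExecutorService pool, long[] arr, int from, int to) {
        if (to - from <= THRESHOLD) {
            long s = 0;
            for (int i = from; i < to; i++) {
                s += arr[i];
            }
            return s;
        }
        int middle = from + (to - from) / 2;
        Callable<Long> leftHalf = () -> sum(pool, arr, from, middle);
        Future<Long> left = pool.submit(leftHalf);
        long right = sum(pool, arr, middle, to);
        try {
            // get() parks the calling thread; unlike join(), it cannot run other tasks meanwhile.
            return left.get() + right;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        }
    }

    // An unbounded cached pool is used so that blocked threads cannot starve queued subtasks.
    static long sumWithCachedPool(long[] arr) {
        ExecutorService pool = Executors.newCachedThreadPool();
        try {
            return sum(pool, arr, 0, arr.length);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        long[] arr = new long[10_000];
        for (int i = 0; i < arr.length; i++) {
            arr[i] = i;
        }
        System.out.println(sumWithCachedPool(arr)); // prints 49995000
    }
}
```

This works, but every waiting level holds a thread that does nothing. With a fixed-size pool the same pattern can deadlock once all workers are blocked in get() while the subtasks they wait for are still sitting in the queue.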

OK, now let's begin the analysis of ForkJoinPool proper.

Divide and Conquer

- Basic idea

Break a large problem into smaller subproblems, solve each of them, and merge the solutions of the subproblems to obtain the solution of the original problem.

- Steps

(1) Split the original problem;

(2) Solve the subproblems;

(3) Merge the solutions of the subproblems into the solution of the original problem.

In divide and conquer, the subproblems are generally independent of each other, so they are usually solved by calling the algorithm recursively.
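The three steps above can be sketched with merge sort. This is a minimal single-threaded illustration; the class name MergeSortSketch and its method names are made up for this example.

```java
import java.util.Arrays;

class MergeSortSketch {

    // Returns a sorted copy of the input using the three divide-and-conquer steps.
    static int[] sort(int[] a) {
        if (a.length <= 1) {
            return a.clone(); // small enough to be its own solution
        }
        // (1) Split the original problem into two halves.
        int mid = a.length / 2;
        int[] left = Arrays.copyOfRange(a, 0, mid);
        int[] right = Arrays.copyOfRange(a, mid, a.length);
        // (2) Solve each subproblem recursively; the halves are independent of each other.
        int[] sortedLeft = sort(left);
        int[] sortedRight = sort(right);
        // (3) Merge the sub-solutions into the solution of the original problem.
        return merge(sortedLeft, sortedRight);
    }

    // Merges two already sorted arrays into one sorted array.
    static int[] merge(int[] x, int[] y) {
        int[] out = new int[x.length + y.length];
        int i = 0, j = 0, k = 0;
        while (i < x.length && j < y.length) {
            out[k++] = (x[i] <= y[j]) ? x[i++] : y[j++];
        }
        while (i < x.length) {
            out[k++] = x[i++];
        }
        while (j < y.length) {
            out[k++] = y[j++];
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(sort(new int[] {5, 2, 9, 1, 5, 6})));
        // prints [1, 2, 5, 5, 6, 9]
    }
}
```

Because the two recursive calls in step (2) do not depend on each other, they are exactly the kind of work that could be handed to different threads.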

- Typical application scenarios

(1) Binary search

(2) Multiplication of large integers

(3) Strassen matrix multiplication

(4) Chessboard cover problem

(5) Merge sort

(6) Quicksort

(7) Linear-time selection

(8) Tower of Hanoi

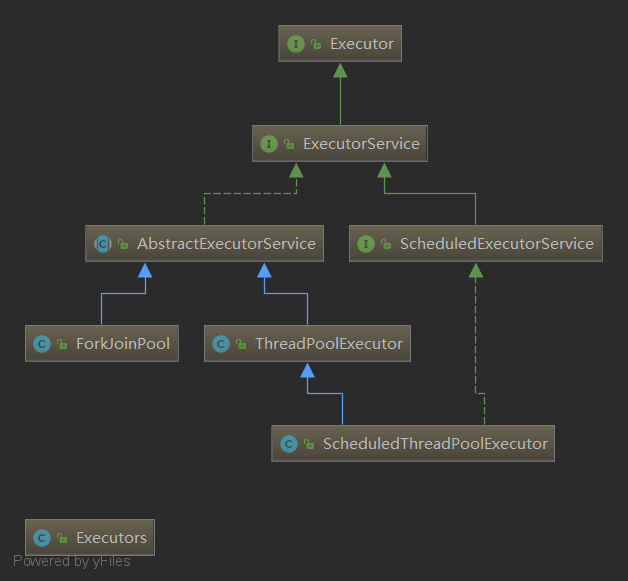

ForkJoinPool Inheritance Hierarchy

ForkJoinPool is a thread pool class added in Java 7. Its inheritance hierarchy is as follows:

Both ForkJoinPool and ThreadPoolExecutor extend the AbstractExecutorService abstract class, so ForkJoinPool is used in almost the same way as ThreadPoolExecutor, except that its tasks are ForkJoinTask instances.

Another important design principle is at work here, the open-closed principle: open for extension, closed for modification.

This shows that the interfaces of the thread pool framework were designed well from the start: a new thread pool class does not disturb existing code and can still reuse the existing features.
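To make the shared hierarchy concrete, the sketch below drives a ForkJoinPool purely through the ExecutorService interface, the same way one would drive a ThreadPoolExecutor. The class name ExecutorViewSketch, the helper addOnPool, and the pool size of 4 are assumptions for this illustration.

```java
import java.util.concurrent.AbstractExecutorService;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.Future;

class ExecutorViewSketch {

    // Submits an ordinary Callable through the generic ExecutorService interface.
    static int addOnPool(ExecutorService pool, int a, int b) {
        Future<Integer> future = pool.submit(() -> a + b);
        try {
            return future.get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // A dedicated pool with 4 workers; the size is an arbitrary choice for this sketch.
        ExecutorService pool = new ForkJoinPool(4);
        try {
            System.out.println(pool instanceof AbstractExecutorService); // prints true
            System.out.println(addOnPool(pool, 1, 2));                   // prints 3
        } finally {
            pool.shutdown();
        }
    }
}
```

Code written against ExecutorService does not need to know which pool class it receives; only code that wants fork() and join() has to deal with ForkJoinTask directly.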

ForkJoinTask

Two main methods

- fork()

The fork() method is similar to Thread.start(), but it does not actually start a thread; it pushes the task onto a work queue.

- join()

The join() method is similar to Thread.join(), but instead of simply blocking, it lets the worker thread run other tasks. When join() is called in a worker thread, the thread processes other tasks until it notices that the target subtask has completed.
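The behaviour of fork() and join() described above can be observed with a small experiment. This is a sketch under assumptions: the class and task names are invented, and the pool is deliberately limited to a single worker so that no other thread can take the forked child.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

class ForkJoinQueueSketch {

    // Child task: reports which thread actually executed it.
    static class Child extends RecursiveTask<Thread> {
        @Override
        protected Thread compute() {
            return Thread.currentThread();
        }
    }

    // Parent task: forks one child and checks whether the child ran on the parent's own thread.
    static class Parent extends RecursiveTask<Boolean> {
        @Override
        protected Boolean compute() {
            Child child = new Child();
            child.fork();                      // queued on this worker's own queue, no thread is started
            Thread childThread = child.join(); // the worker takes the queued child and runs it itself
            return childThread == Thread.currentThread();
        }
    }

    // Runs the experiment on a pool with exactly one worker thread.
    static boolean childRanOnParentThread() {
        ForkJoinPool pool = new ForkJoinPool(1);
        try {
            return pool.invoke(new Parent());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("child ran on the parent's thread: " + childRanOnParentThread());
    }
}
```

If join() simply blocked the way Thread.join() does, the only worker would wait forever for a child that nobody else could run. The task finishes because the joining worker executes the child itself.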

Three Subclasses

- RecursiveAction

A task with no return value.

- RecursiveTask

A task with a return value.

- CountedCompleter

A task with no return value that can trigger a callback when it completes.
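Since the earlier example only uses RecursiveTask, here is a sketch of the other two subclasses. Both double every element of an array in place. The class names, the THRESHOLD value, and the AtomicBoolean used to observe the callback are assumptions for this illustration; the CountedCompleter part follows the pending-count pattern shown in that class's Javadoc.

```java
import java.util.concurrent.CountedCompleter;
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveAction;
import java.util.concurrent.atomic.AtomicBoolean;

class SubclassSketch {

    static final int THRESHOLD = 4; // hypothetical, tiny so that a short demo array really splits

    // RecursiveAction: no result, the work is a side effect on the array.
    static class DoubleAction extends RecursiveAction {
        final long[] arr; final int from; final int to;
        DoubleAction(long[] arr, int from, int to) { this.arr = arr; this.from = from; this.to = to; }

        @Override
        protected void compute() {
            if (to - from <= THRESHOLD) {
                for (int i = from; i < to; i++) arr[i] *= 2;
                return;
            }
            int middle = (from + to) >>> 1;
            // invokeAll forks and joins both halves for us.
            invokeAll(new DoubleAction(arr, from, middle), new DoubleAction(arr, middle, to));
        }
    }

    // CountedCompleter: no join(); completion propagates upwards and fires onCompletion.
    static class DoubleCompleter extends CountedCompleter<Void> {
        final long[] arr; final int from; final int to; final AtomicBoolean done;
        DoubleCompleter(CountedCompleter<?> parent, long[] arr, int from, int to, AtomicBoolean done) {
            super(parent);
            this.arr = arr; this.from = from; this.to = to; this.done = done;
        }

        @Override
        public void compute() {
            if (to - from > THRESHOLD) {
                int middle = (from + to) >>> 1;
                setPendingCount(2); // must be set before forking the two children
                new DoubleCompleter(this, arr, middle, to, done).fork();
                new DoubleCompleter(this, arr, from, middle, done).fork();
            } else {
                for (int i = from; i < to; i++) arr[i] *= 2;
            }
            tryComplete(); // decrements the pending count, or completes when it is already zero
        }

        @Override
        public void onCompletion(CountedCompleter<?> caller) {
            if (getCompleter() == null) done.set(true); // record the callback for the root task only
        }
    }

    static void doubleWithAction(long[] arr) {
        ForkJoinPool pool = new ForkJoinPool(2);
        try { pool.invoke(new DoubleAction(arr, 0, arr.length)); } finally { pool.shutdown(); }
    }

    // Returns true if the root task's completion callback fired.
    static boolean doubleWithCompleter(long[] arr) {
        AtomicBoolean done = new AtomicBoolean(false);
        ForkJoinPool pool = new ForkJoinPool(2);
        try { pool.invoke(new DoubleCompleter(null, arr, 0, arr.length, done)); } finally { pool.shutdown(); }
        return done.get();
    }

    public static void main(String[] args) {
        long[] a = {1, 2, 3, 4, 5, 6, 7, 8, 9, 10};
        doubleWithAction(a);
        System.out.println(java.util.Arrays.toString(a));
        System.out.println(doubleWithCompleter(a));
    }
}
```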

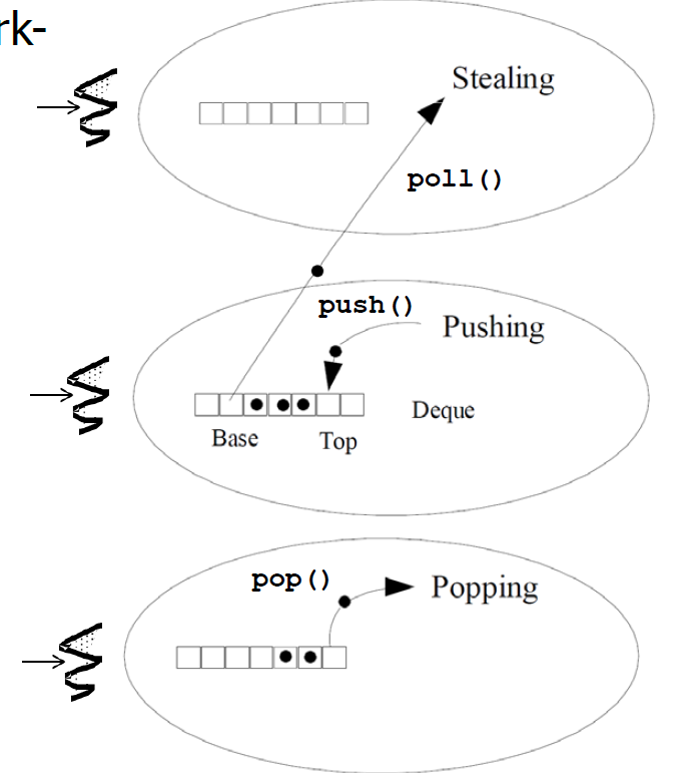

Internal Principles of ForkJoinPool

Internally, ForkJoinPool uses the work-stealing algorithm.

(1) Each worker thread has its own work queue, a WorkQueue;

(2) The queue is a double-ended queue (deque) owned by its thread;

(3) Subtasks forked from a ForkJoinTask are pushed onto the head of the queue of the worker thread running that task, and the worker thread processes the tasks in its own queue in LIFO order;

(4) To maximize CPU utilization, idle threads "steal" tasks from the queues of other threads;

(5) Tasks are stolen from the tail of the work queue to reduce contention;

(6) Deque operations: push()/pop() are called only by the owning worker thread, while poll() is called by other threads when stealing a task;

(7) When only one task is left, the owner and a thief can still contend for it; this contention is resolved with CAS;
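The queue discipline in the points above can be illustrated with a toy model. This is not ForkJoinPool's real WorkQueue, which is array-based and lock-free; the class ToyWorkQueue below is invented for this sketch and uses synchronized methods purely to keep it short.

```java
import java.util.ArrayDeque;
import java.util.Deque;

class WorkQueueModelSketch {

    // Toy model only: same push/pop/poll roles as described above, but guarded by a lock.
    static final class ToyWorkQueue<T> {
        private final Deque<T> tasks = new ArrayDeque<>();

        // Owner side: add at the head.
        synchronized void push(T task) {
            tasks.addFirst(task);
        }

        // Owner side: take from the head, so the newest task runs first (LIFO).
        synchronized T pop() {
            return tasks.pollFirst();
        }

        // Thief side: take from the tail, so the oldest task is stolen first.
        synchronized T poll() {
            return tasks.pollLast();
        }
    }

    public static void main(String[] args) {
        ToyWorkQueue<String> queue = new ToyWorkQueue<>();
        queue.push("A"); // forked first
        queue.push("B");
        queue.push("C"); // forked last
        System.out.println(queue.pop());  // prints C (owner takes the newest task)
        System.out.println(queue.poll()); // prints A (thief takes the oldest task)
        System.out.println(queue.pop());  // prints B (last remaining task)
    }
}
```

In a divide-and-conquer workload the oldest task in a queue tends to be the largest piece of unsplit work, so stealing from the tail hands a thief a sizeable chunk while the owner keeps working at the other end.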

ForkJoinPool Best Practices

(1) Compute-intensive tasks are the best fit;

(2) When a worker thread has to block, use ManagedBlocker;

(3) Do not call ForkJoinPool.invoke()/invokeAll() inside a RecursiveTask;

Summary

(1) ForkJoinPool is particularly well suited to implementing divide-and-conquer algorithms;

(2) ForkJoinPool and ThreadPoolExecutor complement each other; neither replaces the other, and each fits different scenarios;

(3) ForkJoinTask has two core methods, fork() and join(), and three important subclasses, RecursiveAction, RecursiveTask and CountedCompleter;

(4) The internal implementation of ForkJoinPool is based on the "work-stealing" algorithm;

(5) Each thread has its own work queue, which is a double-ended queue; the owner takes tasks from the head, and other threads steal tasks from the tail;

(6) ForkJoinPool is best suited to compute-intensive tasks, but blocking tasks can also be handled with ManagedBlocker;

(7) Inside a RecursiveTask you can save one fork() call by computing one of the subtasks directly on the current thread;

Easter Egg

How does ManagedBlocker work?

A: Using a ManagedBlocker explicitly tells the ForkJoinPool framework that the current thread is about to block, so that ForkJoinPool can start another thread to run tasks if needed and keep CPU utilization high.
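As a warm-up, here is a smaller sketch modeled on the QueueTaker example in the ForkJoinPool.ManagedBlocker Javadoc: a blocker that waits for an item from a BlockingQueue. The wrapper class QueueTakerSketch and the helper takeManaged are names assumed for this illustration.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.Callable;
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.ForkJoinTask;
import java.util.concurrent.LinkedBlockingQueue;

class QueueTakerSketch {

    static final class QueueTaker<E> implements ForkJoinPool.ManagedBlocker {
        private final BlockingQueue<E> queue;
        private volatile E item;

        QueueTaker(BlockingQueue<E> queue) {
            this.queue = queue;
        }

        // Called when blocking is really needed; returns true once no more blocking is required.
        @Override
        public boolean block() throws InterruptedException {
            if (item == null) {
                item = queue.take(); // may block until an item arrives
            }
            return true;
        }

        // Cheap non-blocking check; lets the pool skip blocking when an item is already there.
        @Override
        public boolean isReleasable() {
            return item != null || (item = queue.poll()) != null;
        }

        E getItem() {
            return item;
        }
    }

    // Takes one item from the queue, announcing the possible block to the pool.
    static <E> E takeManaged(BlockingQueue<E> queue) {
        QueueTaker<E> taker = new QueueTaker<>(queue);
        try {
            ForkJoinPool.managedBlock(taker);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return taker.getItem();
    }

    public static void main(String[] args) throws InterruptedException {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        ForkJoinPool pool = new ForkJoinPool(2);
        try {
            Callable<String> job = () -> takeManaged(queue);
            ForkJoinTask<String> pending = pool.submit(job); // the worker waits inside managedBlock
            queue.put("hello");
            System.out.println(pending.join()); // prints hello
        } finally {
            pool.shutdown();
        }
    }
}
```

The two methods split the work: isReleasable() answers "can I proceed without waiting?", and block() does the actual waiting only when the answer is no.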

Study the example below and work through it yourself.

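import java.math.BigInteger;

import java.util.Map;

import java.util.concurrent.CancellationException;

import java.util.concurrent.ConcurrentHashMap;

import java.util.concurrent.ForkJoinPool;

import java.util.concurrent.RecursiveTask;

/**

* Fibonacci sequence

* Each number is the sum of the two numbers before it

* 1,1,2,3,5,8,13,21

*/

public class Fibonacci {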

public static void main(String[] args) {

long time = System.currentTimeMillis();

Fibonacci fib = new Fibonacci();

int result = fib.f(1_000).bitCount();

time = System.currentTimeMillis() - time;

System.out.println("result = " + result);

System.out.println("test1_000() time = " + time);

}

public BigInteger f(int n) {

Map<Integer, BigInteger> cache = new ConcurrentHashMap<>();

cache.put(0, BigInteger.ZERO);

cache.put(1, BigInteger.ONE);

return f(n, cache);

}

private final BigInteger RESERVED = BigInteger.valueOf(-1000);

public BigInteger f(int n, Map<Integer, BigInteger> cache) {

BigInteger result = cache.putIfAbsent(n, RESERVED);

if (result == null) {

int half = (n + 1) / 2;

RecursiveTask<BigInteger> f0_task = new RecursiveTask<BigInteger>() {

@Override

protected BigInteger compute() {

return f(half - 1, cache);

}

};

f0_task.fork();

BigInteger f1 = f(half, cache);

BigInteger f0 = f0_task.join();

long time = n > 10_000 ? System.currentTimeMillis() : 0;

try {

if (n % 2 == 1) {

result = f0.multiply(f0).add(f1.multiply(f1));

} else {

result = f0.shiftLeft(1).add(f1).multiply(f1);

}

synchronized (RESERVED) {

cache.put(n, result);

RESERVED.notifyAll();

}

} finally {

time = n > 10_000 ? System.currentTimeMillis() - time : 0;

if (time > 50)

System.out.printf("f(%d) took %d%n", n, time);

}

} else if (result == RESERVED) {

try {

ReservedFibonacciBlocker blocker = new ReservedFibonacciBlocker(n, cache);

ForkJoinPool.managedBlock(blocker);

result = blocker.result;

} catch (InterruptedException e) {

throw new CancellationException("interrupted");

}

}

return result;

// return f(n - 1).add(f(n - 2));

}

private class ReservedFibonacciBlocker implements ForkJoinPool.ManagedBlocker {

private BigInteger result;

private final int n;

private final Map<Integer, BigInteger> cache;

public ReservedFibonacciBlocker(int n, Map<Integer, BigInteger> cache) {

this.n = n;

this.cache = cache;

}

@Override

public boolean block() throws InterruptedException {

synchronized (RESERVED) {

while (!isReleasable()) {

RESERVED.wait();

}

}

return true;

}

@Override

public boolean isReleasable() {

return (result = cache.get(n)) != RESERVED;

}

}

}