1, Background

DataX is Alibaba's open-source offline synchronization tool for heterogeneous data sources. It aims to provide stable and efficient data synchronization between many kinds of sources, including relational databases (MySQL, Oracle, etc.), HDFS, Hive, ODPS, HBase and FTP. As business requirements grow, however, DataX's built-in plugins no longer cover every data source, and new plugins have to be developed on top of it. This article walks through the steps of developing a DataX plugin in detail, using GBase 8s and GBase 8a as the example, in the hope that it will be useful.

2, Testing DataX locally with Java

2.1 Download the DataX source code from GitHub

Repository: https://github.com/alibaba/DataX

2.2 Import the DataX code into IntelliJ IDEA

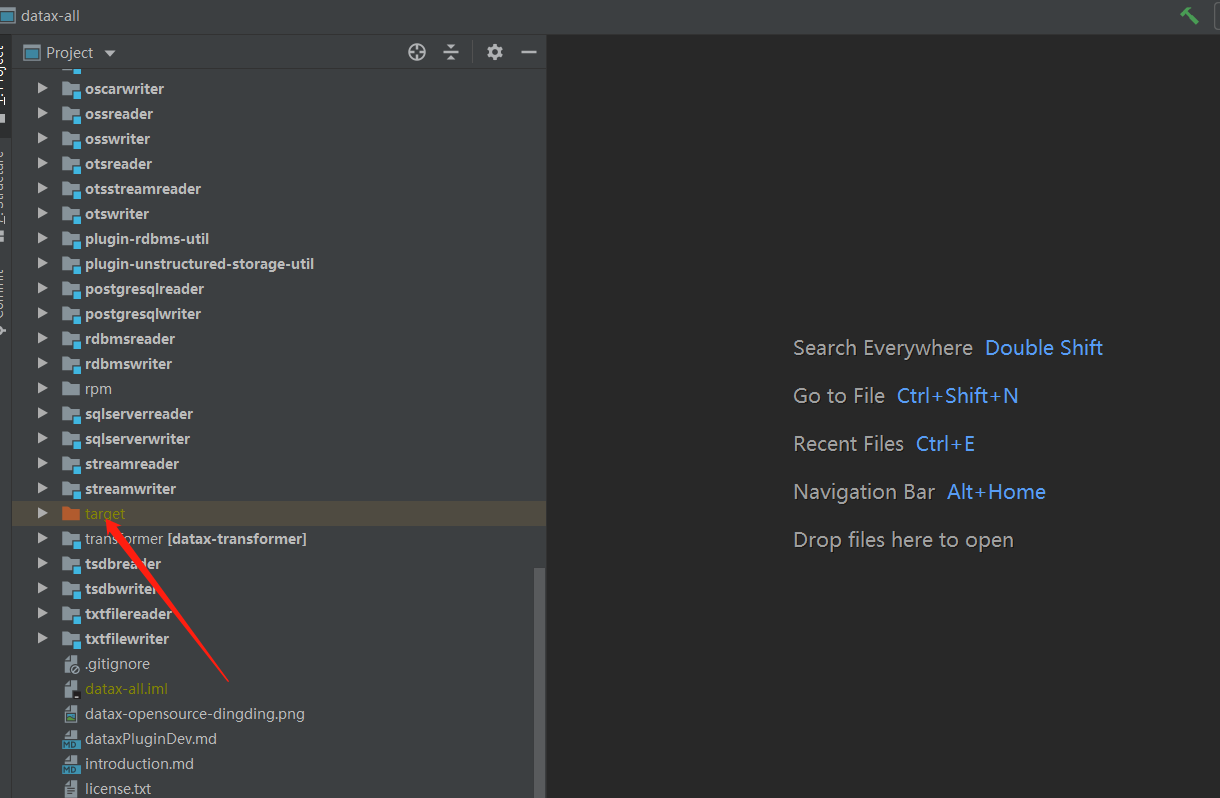

1. Here, unzip the datax code downloaded from Git locally and import it into idea, as shown in the following figure:

2. Java-based local testing

Compile the DataX source code with your local Maven installation. The packaging command is:

mvn -U clean package assembly:assembly -Dmaven.test.skip=true

Wait for packaging to finish. It generates the target directory:

3. Run the local test through the Engine class in the core module:

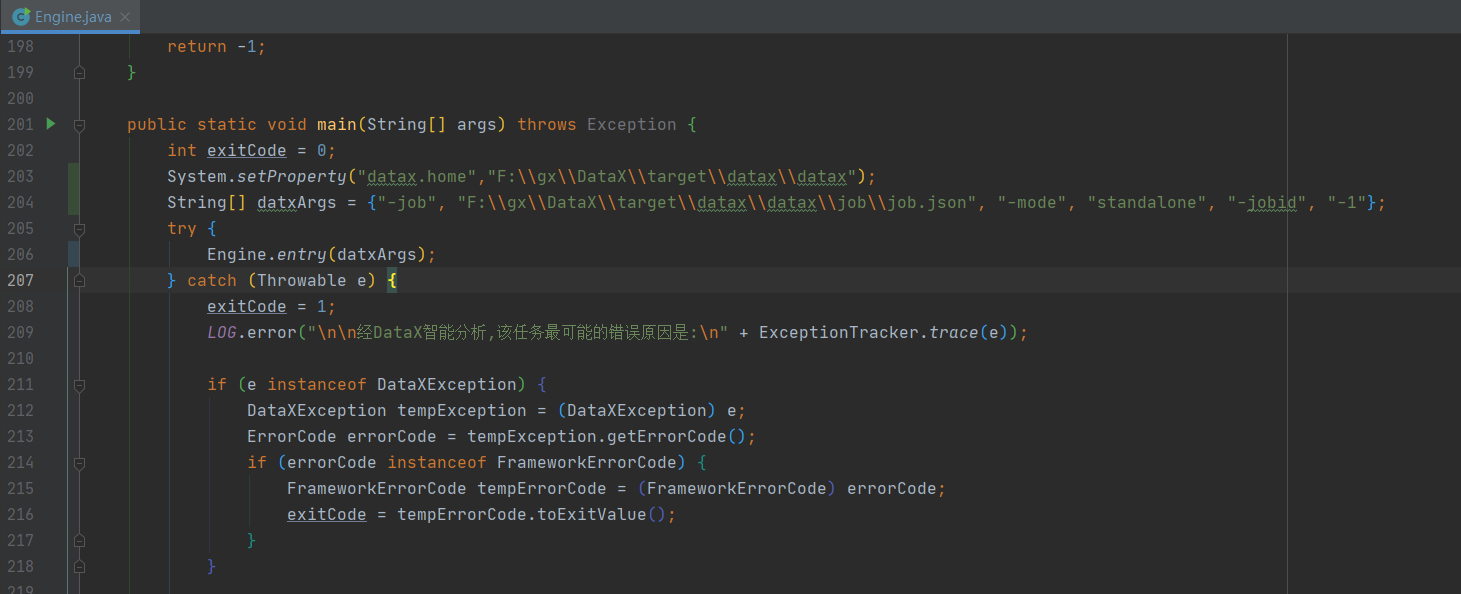

4. Modify Engine.java, since it is the entry point of DataX.

First, set the datax.home system property, that is, the path of the DataX directory:

System.setProperty("datax.home","F:\\gx\\DataX\\target\\datax\\datax");

Next, set the job file path and the launch arguments:

String[] datxArgs = {"-job", "F:\\gx\\DataX\\target\\datax\\datax\\job\\job.json", "-mode", "standalone", "-jobid", "-1"};

Both paths point into the datax directory that the packaging step generated under target. For local testing, edit job.json as needed.

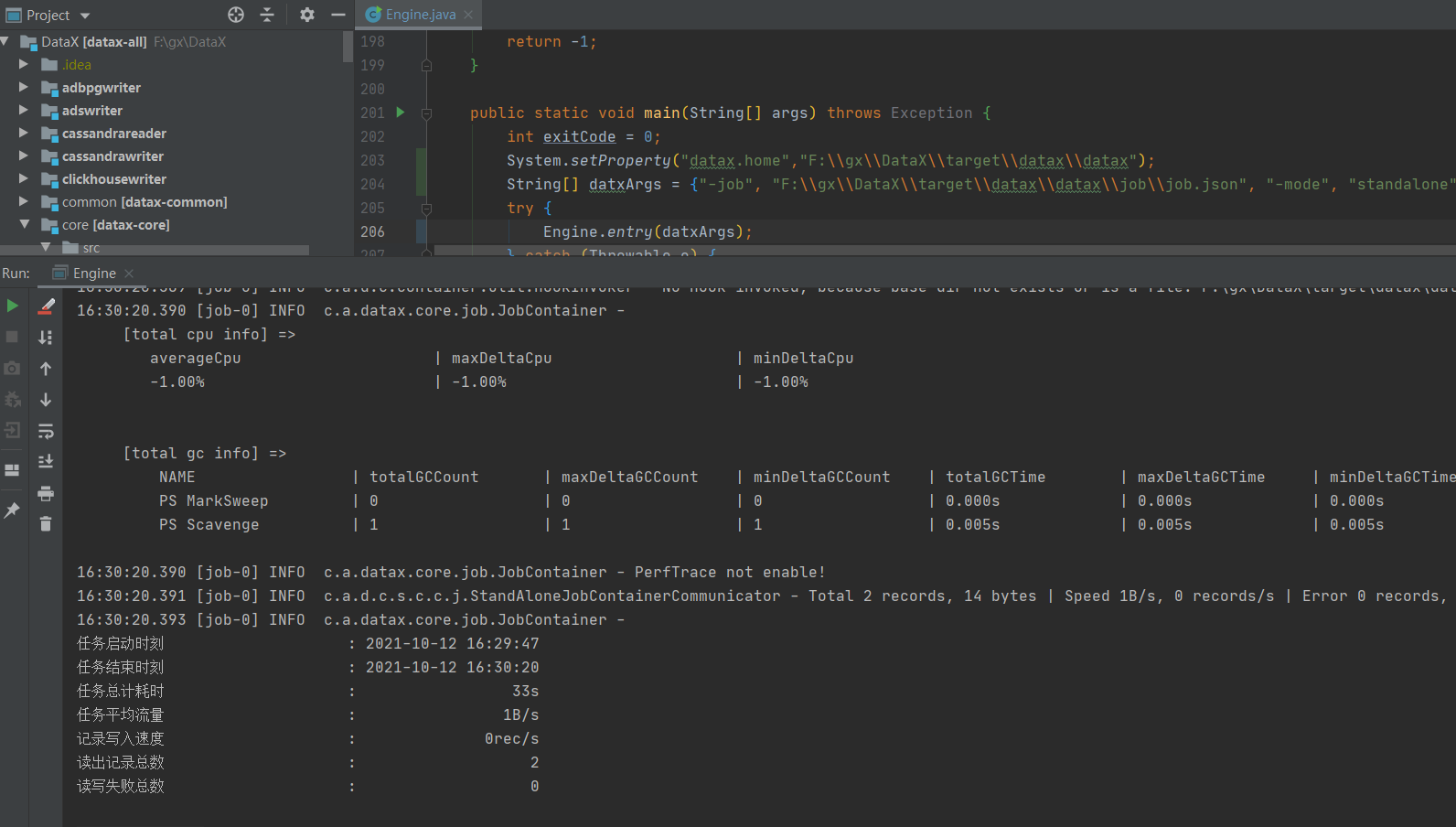

To test, run the main method directly.
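The two paths set in Engine.java have to point into the same packaged directory. The sketch below derives both values from a single datax.home path and checks that path before the engine starts. It assumes the packaged layout contains conf/core.json and plugin/, which is where DataX reads its core configuration and plugins from. LocalDataxArgs, checkHome and buildArgs are hypothetical names. The Engine.entry call in the comment refers to the method that Engine.main delegates to in the upstream source, so confirm it in your own checkout.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper (not part of DataX): derives the Engine arguments from a
// single datax.home directory instead of hard-coding two separate paths.
class LocalDataxArgs {

    // Fails early if the directory does not look like a packaged DataX.
    static void checkHome(Path home) {
        Path coreJson = home.resolve("conf").resolve("core.json");
        Path pluginDir = home.resolve("plugin");
        if (!Files.isRegularFile(coreJson) || !Files.isDirectory(pluginDir)) {
            throw new IllegalStateException(
                    "Not a packaged DataX directory (no conf/core.json or plugin/): " + home);
        }
    }

    // Same arguments as datxArgs, with the job file taken from <home>/job.
    static String[] buildArgs(Path home, String jobFileName) {
        String jobPath = home.resolve("job").resolve(jobFileName).toString();
        return new String[] {"-job", jobPath, "-mode", "standalone", "-jobid", "-1"};
    }

    public static void main(String[] args) {
        Path home = Paths.get(args.length > 0 ? args[0] : "target/datax/datax");
        // Inside Engine.main this would become:
        //   checkHome(home);
        //   System.setProperty("datax.home", home.toString());
        //   Engine.entry(buildArgs(home, "job.json"));
        System.out.println(String.join(" ", buildArgs(home, "job.json")));
    }
}
```

With this helper, switching between machines means changing one path instead of two, and a wrong path fails with a clear message instead of an error deep inside the engine.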

3, Installing Nanda General's GBase 8a and GBase 8s databases with Docker

3.1 Installing GBase 8a with Docker

1. Prepare the environment

Make sure Docker is installed on the machine.

2. Pull the GBase 8a image on the server where Docker is installed:

$ docker pull shihd/gbase8a:1.0

3. Create and start the container (replace <container_name> with a name of your choice):

$ docker run --name <container_name> -itd -p 5258:5258 shihd/gbase8a:1.0

GBase 8a database information:

DB: gbase

User: root

Password: root

Port: 5258
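With the container running, a Java client on the Docker host can reach GBase 8a on port 5258 using the credentials above. The sketch below rests on a few assumptions. The GBase 8a JDBC driver jar must be on the classpath; its driver class is com.gbase.jdbc.Driver, the same class registered in DataBaseType later in this article. The driver is assumed to accept URLs of the form jdbc:gbase://host:port/database, so check that form against your driver's documentation. The class name Gbase8aCheck is hypothetical.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

// Hypothetical connectivity check for the GBase 8a container above.
// Assumptions: the GBase 8a JDBC driver jar is on the classpath and accepts
// URLs of the form jdbc:gbase://host:port/database.
class Gbase8aCheck {

    static String jdbcUrl(String host, int port, String database) {
        return "jdbc:gbase://" + host + ":" + port + "/" + database;
    }

    public static void main(String[] args) {
        // Container defaults listed above: DB gbase, user root, password root, port 5258.
        String url = jdbcUrl("127.0.0.1", 5258, "gbase");
        try {
            // Explicit registration, in case the driver jar does not self-register.
            Class.forName("com.gbase.jdbc.Driver");
        } catch (ClassNotFoundException e) {
            System.err.println("com.gbase.jdbc.Driver is not on the classpath");
            return;
        }
        try (Connection conn = DriverManager.getConnection(url, "root", "root");
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery("SELECT 1")) {
            if (rs.next()) {
                System.out.println("Connected to " + url + ", SELECT 1 returned " + rs.getInt(1));
            }
        } catch (SQLException e) {
            System.err.println("Could not connect to " + url + ": " + e.getMessage());
        }
    }
}
```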

3.2 Installing GBase 8s with Docker

1. Find the available GBase 8s image versions:

[root@localhost ~]# docker search gbase8s

2. Pull the GBase 8s image:

[root@localhost ~]# docker pull liaosnet/gbase8s:3.3.0_2_amd64

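3. Run the container (replace <container_name> with a name of your choice; host port 19088 is mapped to port 9088 inside the container):

docker run --name <container_name> -p 19088:9088 -itd liaosnet/gbase8s:3.3.0_2_amd64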
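Because of the -p 19088:9088 mapping, clients on the host connect to port 19088, not 9088. GBase 8s uses the driver class com.gbasedbt.jdbc.Driver, which is registered in DataBaseType later in this article. The sketch below assumes the driver accepts the Informix-style URL form jdbc:gbasedbt-sqli://host:port/database:GBASEDBTSERVER=server. The database and server names in it (testdb and gbase01) are placeholders, not values taken from the image, so read the real names from your container. The class Gbase8sUrl is hypothetical.

```java
// Hypothetical helper for the GBase 8s container above.
// Assumption: the GBase 8s driver (com.gbasedbt.jdbc.Driver) accepts the
// Informix-style URL jdbc:gbasedbt-sqli://host:port/database:GBASEDBTSERVER=server
class Gbase8sUrl {

    // Host side of "-p 19088:9088"; the instance listens on 9088 inside the container.
    static final int HOST_PORT = 19088;

    static String jdbcUrl(String host, int port, String database, String serverName) {
        return "jdbc:gbasedbt-sqli://" + host + ":" + port + "/" + database
                + ":GBASEDBTSERVER=" + serverName;
    }

    public static void main(String[] args) {
        // "testdb" and "gbase01" are placeholders: use the database and
        // instance (server) names configured in your container.
        System.out.println(jdbcUrl("127.0.0.1", HOST_PORT, "testdb", "gbase01"));
    }
}
```

A string of this shape is what goes into the jdbcUrl array of the reader's connection block.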

4. Verify the installation

Use the docker ps command to check that the container is running, then enter it: docker exec -it <container_name> /bin/bash

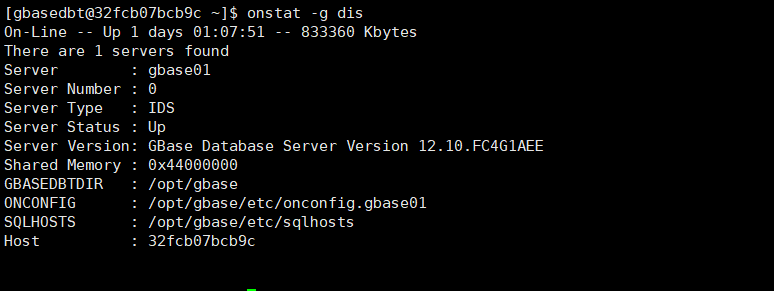
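Besides docker ps, you can confirm from the host that the published ports (5258 for GBase 8a, 19088 for GBase 8s) accept TCP connections. The helper below is a hypothetical sketch that uses only java.net. A successful connect only shows that something is listening on the port; it does not prove that the database will accept a login.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical reachability check for the ports published by docker run
// (5258 for GBase 8a, 19088 for GBase 8s in this article).
class PortCheck {

    // Returns true if a TCP connection to host:port succeeds within timeoutMillis.
    static boolean isOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        int[] ports = {5258, 19088};
        for (int port : ports) {
            System.out.println("127.0.0.1:" + port + " open = " + isOpen("127.0.0.1", port, 2000));
        }
    }
}
```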

Inside the container, check the database status:

View the GBase 8s instance service in:

4, GBase 8s to GBase 8a

4.1 Developing the GBase 8s reader plugin (the writer is developed the same way)

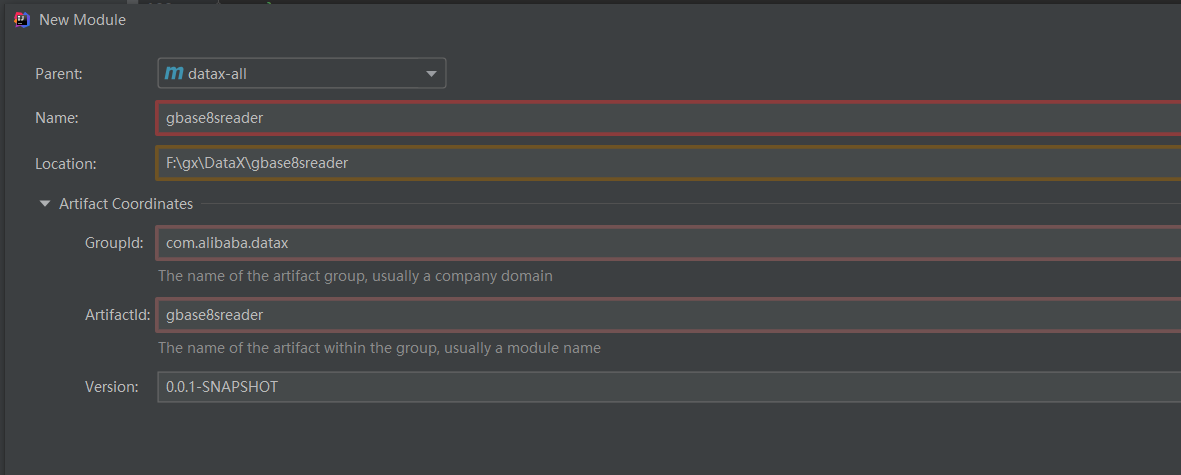

1. Create a new gbase8sreader module

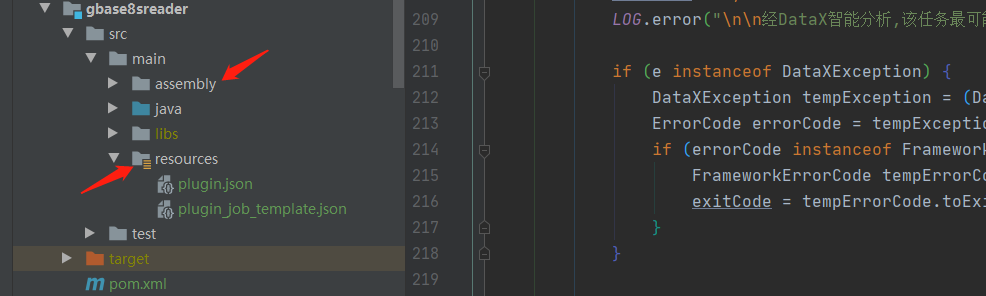

2. Copy the assembly directory (package.xml) of mysqlreader, together with the plugin.json and plugin_job_template.json files under its resources directory.

Put the copied files into the corresponding locations in the gbase8sreader module.

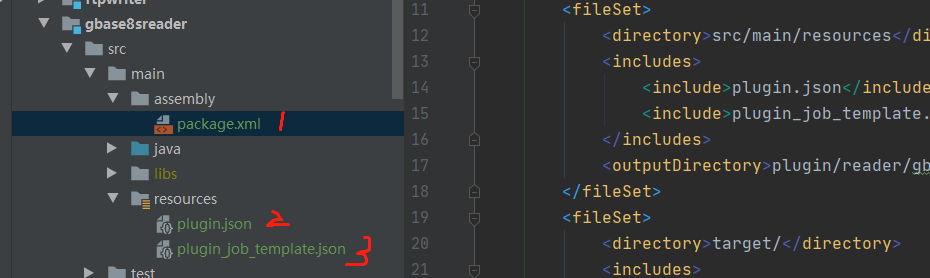

3. Modify the copied files.

package.xml

<assembly xmlns="http://maven.apache.org/plugins/maven-assembly-plugin/assembly/1.1.0"
          xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
          xsi:schemaLocation="http://maven.apache.org/plugins/maven-assembly-plugin/assembly/1.1.0 http://maven.apache.org/xsd/assembly-1.1.0.xsd">
    <id></id>
    <formats>
        <format>dir</format>
    </formats>
    <includeBaseDirectory>false</includeBaseDirectory>
    <fileSets>
        <fileSet>
            <directory>src/main/resources</directory>
            <includes>
                <include>plugin.json</include>
                <include>plugin_job_template.json</include>
            </includes>
            <outputDirectory>plugin/reader/gbase8sreader</outputDirectory>
        </fileSet>
        <fileSet>
            <directory>target/</directory>
            <includes>
                <include>gbase8sreader-0.0.1-SNAPSHOT.jar</include>
            </includes>
            <outputDirectory>plugin/reader/gbase8sreader</outputDirectory>
        </fileSet>
    </fileSets>
    <dependencySets>
        <dependencySet>
            <useProjectArtifact>false</useProjectArtifact>
            <outputDirectory>plugin/reader/gbase8sreader/libs</outputDirectory>
            <scope>runtime</scope>
        </dependencySet>
    </dependencySets>
</assembly>
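This assembly descriptor places three things under plugin/reader/gbase8sreader: the two JSON files from src/main/resources, the plugin jar from target/, and the runtime dependencies under libs/. The hypothetical helper below lists those paths, so you can compare a build output against them. The 0.0.1-SNAPSHOT version comes from the jar name in package.xml.

```java
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: the paths package.xml produces for one plugin,
// relative to the packaged datax directory.
class PluginLayout {

    static List<Path> expectedPaths(String type, String name, String version) {
        Path dir = Paths.get("plugin", type, name); // e.g. plugin/reader/gbase8sreader
        return Arrays.asList(
                dir.resolve("plugin.json"),
                dir.resolve("plugin_job_template.json"),
                dir.resolve(name + "-" + version + ".jar"),
                dir.resolve("libs"));                 // runtime dependencies
    }

    public static void main(String[] args) {
        for (Path p : expectedPaths("reader", "gbase8sreader", "0.0.1-SNAPSHOT")) {
            System.out.println(p);
        }
    }
}
```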

plugin.json

{
    "name": "gbase8sreader",
    "class": "com.cetc.datax.plugin.reader.gbase8sreader.Gbase8sReader",
    "description": "useScene: test. mechanism: use datax framework to transport data from GBase 8s. warn: The more you know about the data, the less problems you encounter.",
    "developer": "cetc"
}

Note: "class" must be the fully qualified name of the reader class we write in the next step.
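DataX loads the plugin through this class name, so the value must match the reader's package declaration and class name exactly. In the upstream LoadUtil, the framework then loads the nested classes by appending $Job and $Task to this name. That is why the reader class defines inner classes with exactly those names. The hypothetical helper below maps the value to the source file Maven compiles and to those nested binary names, which makes a typo easy to spot.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: maps the "class" value of plugin.json to the source file
// Maven compiles and to the nested Job/Task classes the framework loads.
class PluginClassName {

    static Path sourceFile(String moduleDir, String fqcn) {
        // com.cetc.x.Gbase8sReader -> <module>/src/main/java/com/cetc/x/Gbase8sReader.java
        String relative = fqcn.replace('.', '/') + ".java";
        return Paths.get(moduleDir, "src", "main", "java").resolve(relative);
    }

    static String[] nestedClasses(String fqcn) {
        // Binary names of the nested Job and Task classes.
        return new String[] {fqcn + "$Job", fqcn + "$Task"};
    }

    public static void main(String[] args) {
        String fqcn = "com.cetc.datax.plugin.reader.gbase8sreader.Gbase8sReader";
        System.out.println(sourceFile("gbase8sreader", fqcn));
        System.out.println(String.join(", ", nestedClasses(fqcn)));
    }
}
```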

plugin_job_template.json

{
    "name": "gbase8sreader",
    "parameter": {
        "username": "",
        "password": "",
        "column": [],
        "connection": [
            {
                "jdbcUrl": [],
                "table": []
            }
        ],
        "where": ""
    }
}
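plugin_job_template.json only lists the parameter names. In a real job file, the reader block carries concrete values. The sketch below renders one such block from Java so that the nesting is explicit: jdbcUrl and table are arrays inside each connection entry. The renderer is a hypothetical helper with minimal escaping, not a DataX API. The credentials, columns, table and URL in main are placeholders.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical renderer for the "reader" block of a job.json,
// following the fields of plugin_job_template.json.
class ReaderBlock {

    // Minimal JSON string escaping for backslashes and double quotes.
    static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    static String array(List<String> values) {
        StringBuilder sb = new StringBuilder("[");
        for (int i = 0; i < values.size(); i++) {
            if (i > 0) sb.append(", ");
            sb.append(quote(values.get(i)));
        }
        return sb.append("]").toString();
    }

    static String render(String user, String password, List<String> columns,
                         String jdbcUrl, String table, String where) {
        return "{\"name\": \"gbase8sreader\", \"parameter\": {"
                + "\"username\": " + quote(user) + ", "
                + "\"password\": " + quote(password) + ", "
                + "\"column\": " + array(columns) + ", "
                + "\"connection\": [{\"jdbcUrl\": " + array(Arrays.asList(jdbcUrl))
                + ", \"table\": " + array(Arrays.asList(table)) + "}], "
                + "\"where\": " + quote(where) + "}}";
    }

    public static void main(String[] args) {
        System.out.println(render("user", "secret", Arrays.asList("id", "name"),
                "jdbc:gbasedbt-sqli://127.0.0.1:19088/testdb:GBASEDBTSERVER=gbase01",
                "t_demo", ""));
    }
}
```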

4. Create the plugin code package

The Gbase8sReader code is as follows:

package com.cetc.datax.plugin.reader.gbase8sreader;

import com.alibaba.datax.common.plugin.RecordSender;
import com.alibaba.datax.common.spi.Reader;
import com.alibaba.datax.common.util.Configuration;
import com.alibaba.datax.plugin.rdbms.reader.CommonRdbmsReader;
import com.alibaba.datax.plugin.rdbms.reader.Constant;
import com.alibaba.datax.plugin.rdbms.util.DataBaseType;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.util.List;

/**
 * @author : shujuelin
 * @date : 16:08 2021/10/11
 */
public class Gbase8sReader extends Reader {

    // Requires the Gbase8s constant to be added to the DataBaseType enum (see the note below).
    private static final DataBaseType DATABASE_TYPE = DataBaseType.Gbase8s;

    public static class Job extends Reader.Job {
        private static final Logger LOG = LoggerFactory.getLogger(Job.class);

        private Configuration originalConfig = null;
        private CommonRdbmsReader.Job commonRdbmsReaderJob;

        @Override
        public void init() {
            this.originalConfig = super.getPluginJobConf();

            Integer userConfigedFetchSize = this.originalConfig.getInt(Constant.FETCH_SIZE);
            if (userConfigedFetchSize != null) {
                LOG.warn("gbase8sreader does not need a fetchSize setting; it will be ignored. "
                        + "To stop seeing this warning, remove fetchSize from the configuration.");
            }
            // Carried over from mysqlreader, where Integer.MIN_VALUE enables streaming reads;
            // Task.startRead below does not read this value.
            this.originalConfig.set(Constant.FETCH_SIZE, Integer.MIN_VALUE);

            // Delegate the generic RDBMS logic (validation, splitting) to CommonRdbmsReader.
            this.commonRdbmsReaderJob = new CommonRdbmsReader.Job(DATABASE_TYPE);
            this.commonRdbmsReaderJob.init(this.originalConfig);
        }

        @Override
        public void preCheck() {
            init();
            this.commonRdbmsReaderJob.preCheck(this.originalConfig, DATABASE_TYPE);
        }

        @Override
        public List<Configuration> split(int adviceNumber) {
            return this.commonRdbmsReaderJob.split(this.originalConfig, adviceNumber);
        }

        @Override
        public void post() {
            this.commonRdbmsReaderJob.post(this.originalConfig);
        }

        @Override
        public void destroy() {
            this.commonRdbmsReaderJob.destroy(this.originalConfig);
        }
    }

    public static class Task extends Reader.Task {
        private Configuration readerSliceConfig;
        private CommonRdbmsReader.Task commonRdbmsReaderTask;

        @Override
        public void init() {
            this.readerSliceConfig = super.getPluginJobConf();
            this.commonRdbmsReaderTask = new CommonRdbmsReader.Task(
                    DATABASE_TYPE, super.getTaskGroupId(), super.getTaskId());
            this.commonRdbmsReaderTask.init(this.readerSliceConfig);
        }

        @Override
        public void startRead(RecordSender recordSender) {
            // Fixed fetch size instead of the configured value:
            // int fetchSize = this.readerSliceConfig.getInt(Constant.FETCH_SIZE);
            int fetchSize = 1000;
            this.commonRdbmsReaderTask.startRead(this.readerSliceConfig, recordSender,
                    super.getTaskPluginCollector(), fetchSize);
        }

        @Override
        public void post() {
            this.commonRdbmsReaderTask.post(this.readerSliceConfig);
        }

        @Override
        public void destroy() {
            this.commonRdbmsReaderTask.destroy(this.readerSliceConfig);
        }
    }
}
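The reader hard-codes fetchSize = 1000. The Integer.MIN_VALUE line in Job.init is there only because it was copied from mysqlreader: MySQL Connector/J treats Integer.MIN_VALUE as a request for a streaming result set. The JDBC contract of Statement.setFetchSize, however, only allows values >= 0, so other drivers may reject it. If you would rather let users configure fetchSize, a rule like the hypothetical one below keeps the value valid for any driver. To use it, call it in startRead with the configured value, drop the Integer.MIN_VALUE line and the warning from Job.init, and pick a default that suits your data.

```java
// Hypothetical fetch-size rule for a non-MySQL reader.
// JDBC's Statement.setFetchSize accepts only values >= 0, so MySQL's
// Integer.MIN_VALUE streaming trick is mapped back to a normal batch size.
class FetchSizeRule {

    static final int DEFAULT_FETCH_SIZE = 1000; // the value hard-coded in Gbase8sReader.Task

    static int resolve(Integer configured) {
        if (configured == null || configured <= 0) {
            // Missing, zero or negative (e.g. Integer.MIN_VALUE) -> fixed default.
            return DEFAULT_FETCH_SIZE;
        }
        return configured;
    }

    public static void main(String[] args) {
        System.out.println(resolve(null));              // 1000
        System.out.println(resolve(Integer.MIN_VALUE)); // 1000
        System.out.println(resolve(5000));              // 5000
    }
}
```

Mapping zero to the default is a deliberate choice here. Zero is legal in JDBC and means "let the driver decide", so keep it if you trust your driver's default.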

Note:

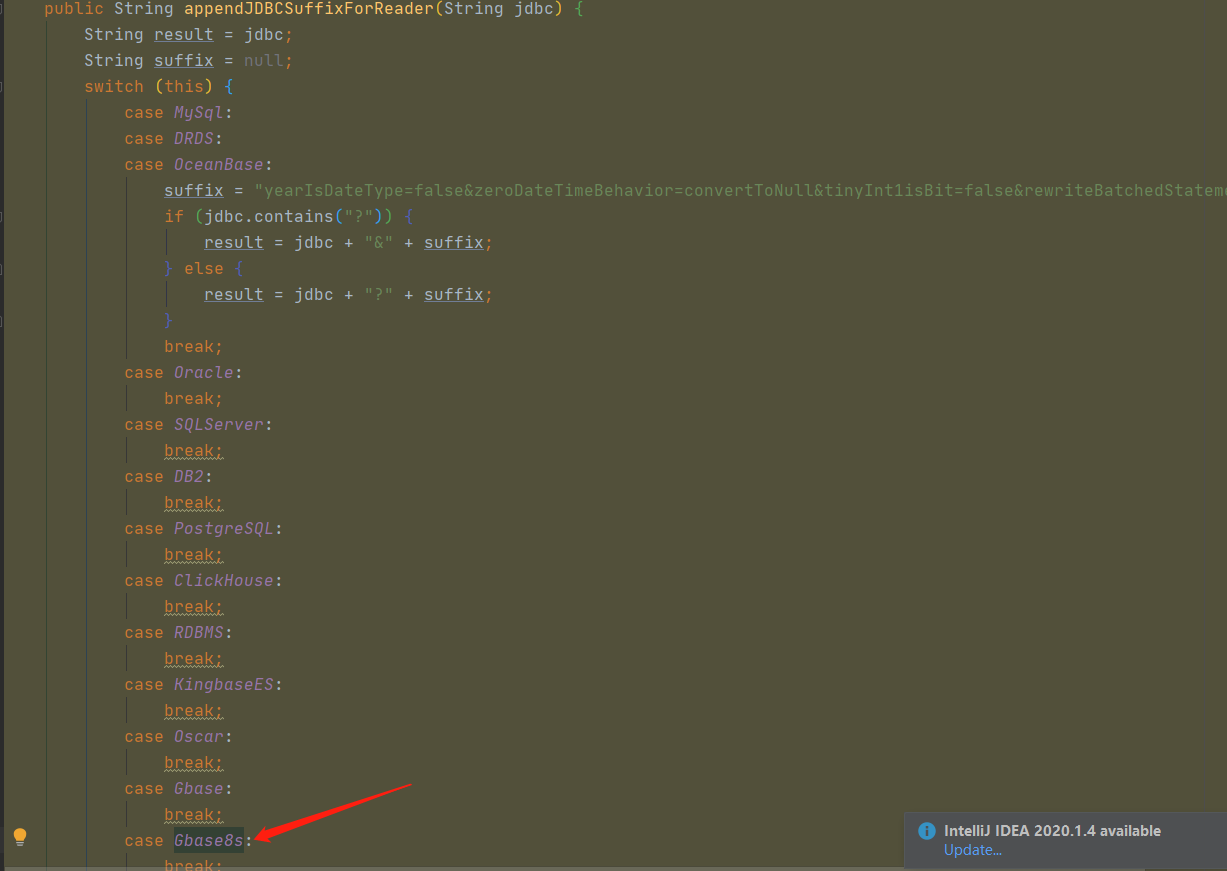

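The line private static final DataBaseType DATABASE_TYPE = DataBaseType.Gbase8s; only compiles if that constant exists in the DataBaseType enum (com.alibaba.datax.plugin.rdbms.util.DataBaseType).

Modify the enum and add your own data source types: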

Gbase("gbase","com.gbase.jdbc.Driver"),

Gbase8s("gbase8s","com.gbasedbt.jdbc.Driver");

For a reader data source, also modify the appendJDBCSuffixForReader method in the DataBaseType class:

Similarly, when writing a writer plugin, update appendJDBCSuffixForWriter in the same way.
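These methods need changing because, in the upstream DataX source, appendJDBCSuffixForReader switches over the enum constants and throws an "unsupported database type" exception for any constant without a case. The standalone enum below mirrors that shape in miniature. DemoDataBaseType and its NewSource constant are hypothetical: the real enum has many more constants, appends longer MySQL options, and throws a DataXException. In the sketch, the Gbase cases pass the URL through unchanged, and NewSource stands for a constant that was added without a case.

```java
// Simplified, hypothetical mirror of DataX's DataBaseType enum, showing why
// a new constant also needs a case in appendJDBCSuffixForReader.
enum DemoDataBaseType {
    MySql("mysql", "com.mysql.jdbc.Driver"),
    Gbase("gbase", "com.gbase.jdbc.Driver"),
    Gbase8s("gbase8s", "com.gbasedbt.jdbc.Driver"),
    NewSource("newsource", "com.example.NewSourceDriver"); // added without a case

    private final String typeName;
    private final String driverClassName;

    DemoDataBaseType(String typeName, String driverClassName) {
        this.typeName = typeName;
        this.driverClassName = driverClassName;
    }

    String getTypeName() { return typeName; }

    String getDriverClassName() { return driverClassName; }

    String appendJDBCSuffixForReader(String jdbc) {
        switch (this) {
            case MySql:
                // MySQL gets driver options appended (shortened here to one option).
                return jdbc + (jdbc.contains("?") ? "&" : "?") + "zeroDateTimeBehavior=convertToNull";
            case Gbase:
            case Gbase8s:
                // The new GBase data sources: no suffix, URL passed through unchanged.
                return jdbc;
            default:
                // In DataX this is an "unsupported database type" DataXException.
                throw new IllegalArgumentException("unsupported database type: " + this);
        }
    }

    public static void main(String[] args) {
        System.out.println(Gbase8s.appendJDBCSuffixForReader("jdbc:gbasedbt-sqli://127.0.0.1:19088/testdb:GBASEDBTSERVER=gbase01"));
        System.out.println(MySql.appendJDBCSuffixForReader("jdbc:mysql://127.0.0.1:3306/test"));
        try {
            NewSource.appendJDBCSuffixForReader("jdbc:newsource://host/db");
        } catch (IllegalArgumentException e) {
            System.out.println("NewSource: " + e.getMessage());
        }
    }
}
```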

5. Packaging

In the outermost pom.xml, comment out the other modules, keeping only the common modules and your own plugin modules.

Then add the following fileSet to the outermost package.xml:

<fileSet>
    <directory>gbase8sreader/target/datax/</directory>
    <includes>
        <include>**/*.*</include>
    </includes>
    <outputDirectory>datax</outputDirectory>
</fileSet>
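After you run the packaging command again, this fileSet copies the module's target/datax output into the final datax directory. One way to confirm that the new plugin landed there is to list the directories under plugin/reader and plugin/writer. The helper below is a hypothetical sketch that uses only java.nio.file. Its default path assumes you run it from the repository root.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.stream.Stream;

// Hypothetical check: lists plugin directories under <datax home>/plugin/<type>.
class InstalledPlugins {

    static List<String> list(Path dataxHome, String type) throws IOException {
        Path dir = dataxHome.resolve("plugin").resolve(type);
        List<String> names = new ArrayList<>();
        if (!Files.isDirectory(dir)) {
            return names; // nothing packaged for this type
        }
        try (Stream<Path> entries = Files.list(dir)) {
            entries.filter(Files::isDirectory)
                   .forEach(p -> names.add(p.getFileName().toString()));
        }
        Collections.sort(names);
        return names;
    }

    public static void main(String[] args) throws IOException {
        Path home = Paths.get(args.length > 0 ? args[0] : "target/datax/datax");
        System.out.println("readers: " + list(home, "reader"));
        System.out.println("writers: " + list(home, "writer"));
    }
}
```

If gbase8sreader is missing from the output, check the module entry in the outermost pom.xml and the fileSet above.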

5, GBase visualization tools

The client connection tools used in this article are:

5.1 Connection configuration for GBase 8a

5.2 Connection configuration for GBase 8s