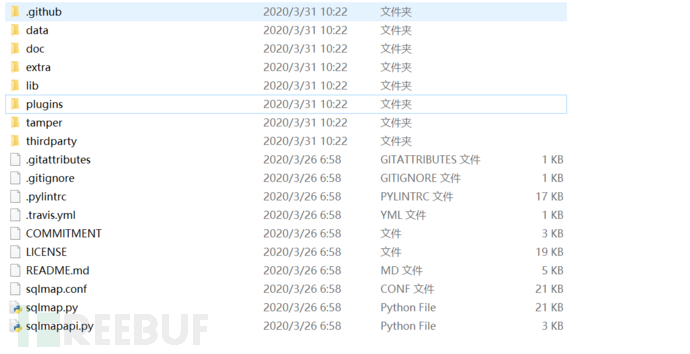

Directory structure

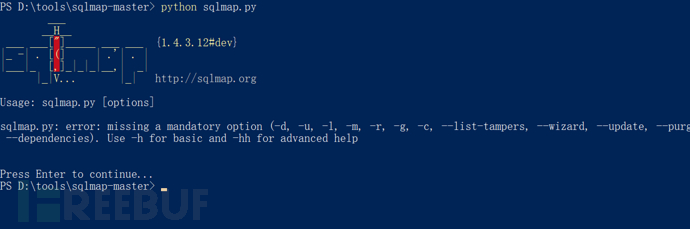

Let's walk through the directory structure. This is sqlmap 1.4.3.12, the latest version at the time I downloaded it.

1. The data directory contains the templates used to build the graphical interface, the shell backdoors (the code inside is encrypted), the UDF privilege-escalation functions, the injection detection payloads for the various databases, and so on.

2. The doc directory holds the documentation, translated for different countries and regions.

3. The extra directory holds additional features, such as running cmd, shellcode, an ICMP reverse shell (yes, ICMP can carry a reverse shell: when the usual TCP and UDP reverse shells fail during a penetration test, the ICMP or DNS protocol can be used instead), beep, and so on.

4. The lib/ directory contains the libraries that make up sqlmap itself, such as the request parameters for the five injection types and the privilege-escalation operations (this is the directory that deserves our attention).

5. The plugins/ directory holds the per-database information and the generic database handling.

6. The tamper directory contains the various bypass scripts (these are very handy).

7. The thirdparty directory holds the third-party plug-ins used by sqlmap.

sqlmap.conf is sqlmap's configuration file, holding the default values of the various parameters (out of the box no parameters are set; defaults can be set here for batch or automated scanning).

sqlmap.py is the main program file of sqlmap.

sqlmapapi.py is sqlmap's API file, which lets sqlmap be integrated into other platforms.

swagger.yaml is the API documentation.

Entry file

In sqlmap.py, let's take a look at the five most important functions.

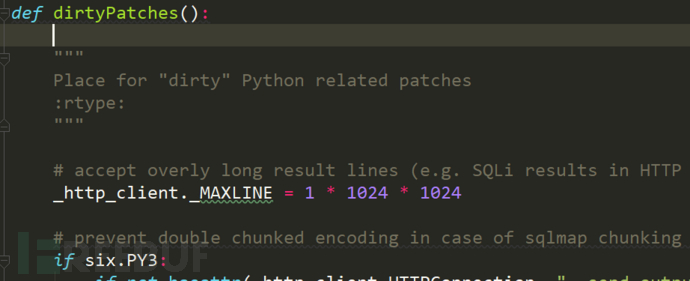

dirtyPatches()

Fixes for a number of known problems are collected in one patch function that runs before anything else. dirtyPatches() first sets the maximum line length of httplib (httplib._MAXLINE), then, on Windows, imports the third-party IP address conversion module (win_inet_pton), and finally substitutes utf8 for an encoding that would otherwise cause errors in interactive use. These operations have little effect on what sqlmap actually does. They are routine settings that keep the user experience and the system environment sane, and do not need much attention.
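To make the idea concrete, here is a minimal sketch of the first and last of these patches. It targets Python 3 (http.client rather than httplib). The function name dirty_patches_sketch, the limit value, and the choice of cp65001 as the encoding being aliased are assumptions made for illustration, not a copy of sqlmap's code.

```python
import codecs
import http.client


def dirty_patches_sketch(max_line=1024 * 1024):
    """Apply process-wide tweaks before any real work starts."""
    # Accept very long HTTP header lines instead of failing on them.
    http.client._MAXLINE = max_line

    # Assumption: treat the Windows console code page name as plain UTF-8,
    # so that encoding lookups for it do not fail.
    def _utf8_fallback(name):
        return codecs.lookup("utf-8") if name == "cp65001" else None

    codecs.register(_utf8_fallback)


dirty_patches_sketch()
```

Because these are global side effects, they only make sense when applied once, at the very start of the program.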

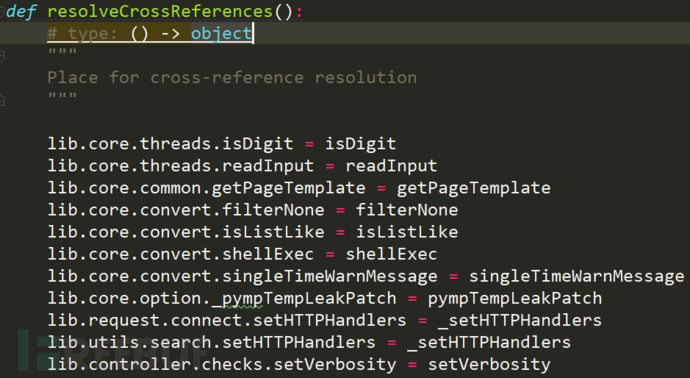

resolveCrossReferences()

To get rid of circular (cross) references between modules, some functions used by submodules are assigned to those submodules here, at runtime.
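The pattern can be sketched as follows. The two SimpleNamespace objects stand in for modules that would otherwise have to import each other; every name here (threads, common, read_input) is hypothetical and chosen only for the example.

```python
from types import SimpleNamespace

# Stand-ins for two modules that would otherwise import each other.
threads = SimpleNamespace(read_input=None)  # low-level module, needs read_input
common = SimpleNamespace()                  # high-level module, defines it


def read_input(message, default=None):
    """Pretend prompt: always answer with the default."""
    return default


common.read_input = read_input


def resolve_cross_references():
    # Hand the implementation to the module that could not import it itself.
    threads.read_input = common.read_input


resolve_cross_references()
```

After the call, code in the low-level module can use read_input without ever importing the high-level one.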

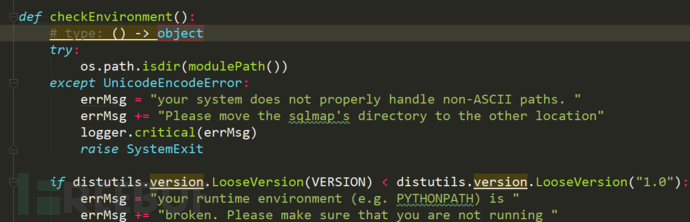

checkEnvironment()

This function checks the running environment: it checks the module path, checks the Python version, and imports the global variables.
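A rough sketch of the first two checks is shown below. The function name check_environment_sketch, the minimum version, and the wording of the messages are assumptions for illustration only.

```python
import os
import sys


def check_environment_sketch(module_path, minimum=(2, 6)):
    """Return a list of problems; an empty list means the environment is fine."""
    problems = []

    # The program must be able to find its own directory.
    if not os.path.isdir(module_path):
        problems.append("module path %r is not a directory" % module_path)

    # Refuse to run on an interpreter that is too old.
    if sys.version_info[:2] < minimum:
        problems.append("Python %d.%d or newer is required" % minimum)

    return problems
```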

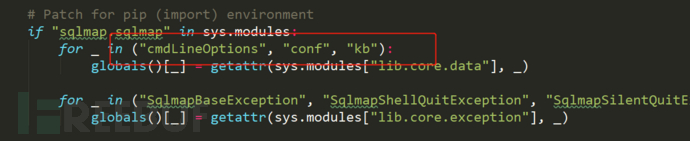

These three global variables (cmdLineOptions, conf and kb) run through the entire execution of sqlmap, conf and kb in particular.

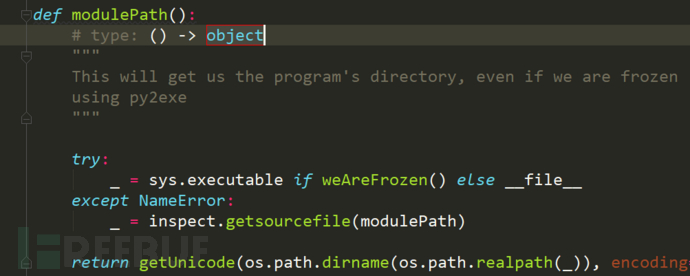

setPaths(modulePath())

Sets up the paths sqlmap uses, starting from the module path returned by modulePath().

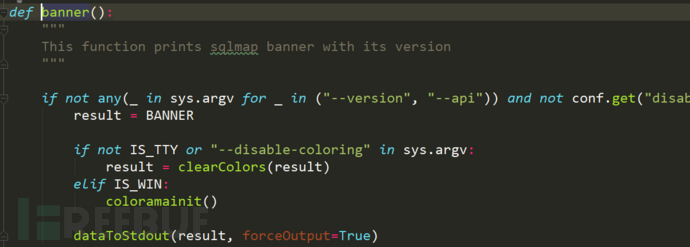

banner()

This function prints the banner.

Global variables

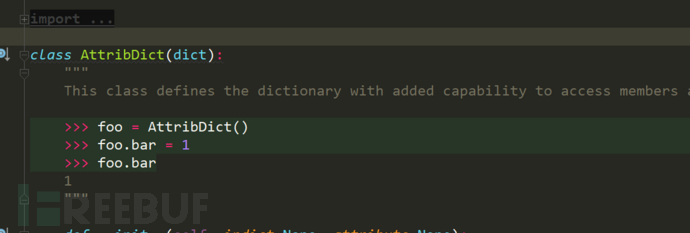

cmdLineOptions

It is an AttribDict. What is an AttribDict? The class overrides several methods of its superclass, modifying the native dict into the attribute dictionary the project needs.

Usage of a plain dictionary: dict1["key"]

Usage of the custom dictionary: dict1.key
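A minimal sketch of such a class is shown below. It is named AttribDictSketch to make clear that it is not sqlmap's actual AttribDict, which does more than this; only the two overrides needed for attribute-style access are included.

```python
class AttribDictSketch(dict):
    """A dict whose keys can also be read and written as attributes."""

    def __getattr__(self, name):
        # Only called when normal attribute lookup fails.
        try:
            return self[name]
        except KeyError:
            raise AttributeError("unable to access item '%s'" % name)

    def __setattr__(self, name, value):
        # Attribute assignment becomes item assignment.
        self[name] = value


conf = AttribDictSketch()
conf.url = "http://example.com/?id=1"
```

Both conf.url and conf["url"] now refer to the same entry, which is why the rest of the code can write conf.url, conf.data and so on.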

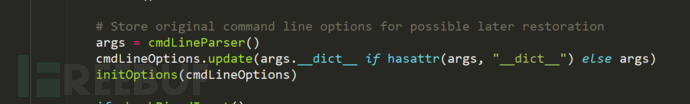

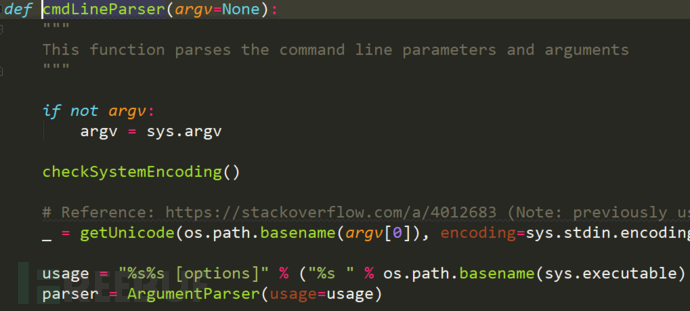

Next, step into cmdLineParser()

Here the command-line options we entered are parsed and split, converted into dict key-value pairs, and stored in cmdLineOptions.

conf, kb
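The flow from command line to dictionary can be sketched with the standard argparse module. The three options below are a tiny subset chosen for the example, and parse_command_line and cmd_line_options are hypothetical names, not sqlmap's own.

```python
import argparse


def parse_command_line(argv):
    """Parse a small subset of options into an argparse namespace."""
    parser = argparse.ArgumentParser(prog="sketch")
    parser.add_argument("-u", "--url", dest="url")
    parser.add_argument("--batch", dest="batch", action="store_true")
    parser.add_argument("--level", dest="level", type=int, default=1)
    return parser.parse_args(argv)


# Store the parsed options as plain key/value pairs.
cmd_line_options = {}
args = parse_command_line(["-u", "http://example.com/?id=1", "--batch"])
cmd_line_options.update(vars(args))
```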

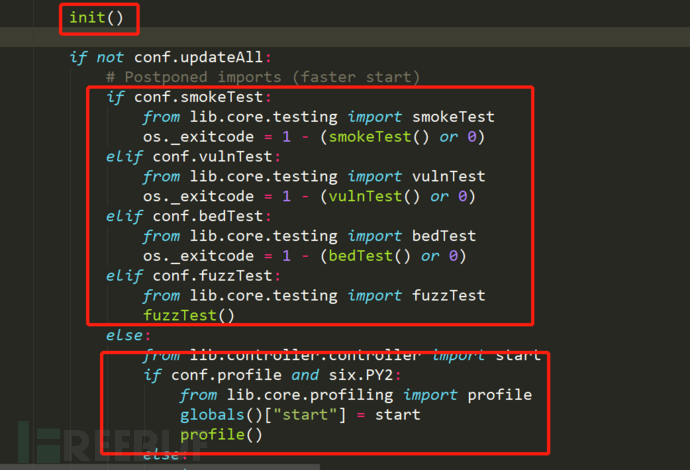

1. init() mainly sets the initial values of all the variables. It does this by calling a series of functions, each of which completes one part of the basic setup. We don't need to step into each of them in turn; we can look them up when we need them.

2. The second part is the various self-tests, including the smoke test, the fuzz test, and so on.

The URL parameters that have been tested are saved to kb.testedParams.

3. Once the tests are done, we enter the main workflow.

controller.py file

```python
from lib.controller.controller import start

if conf.profile and six.PY2:
    from lib.core.profiling import profile
    globals()["start"] = start
    profile()
else:
    try:
        if conf.crawlDepth and conf.bulkFile:
            targets = getFileItems(conf.bulkFile)

            for i in xrange(len(targets)):
                try:
                    kb.targets.clear()
                    target = targets[i]

                    if not re.search(r"(?i)\Ahttp[s]*://", target):
                        target = "http://%s" % target

                    infoMsg = "starting crawler for target URL '%s' (%d/%d)" % (target, i + 1, len(targets))
                    logger.info(infoMsg)

                    crawl(target)
                except Exception as ex:
                    if not isinstance(ex, SqlmapUserQuitException):
                        errMsg = "problem occurred while crawling '%s' ('%s')" % (target, getSafeExString(ex))
                        logger.error(errMsg)
                    else:
                        raise
                else:
                    if kb.targets:
                        start()
        else:
            start()
    # the except clause that closes this try is not part of the excerpt
```
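The only transformation applied to each bulk-file entry before crawling is the scheme check. Isolated into a small function (normalize_target is a hypothetical name), it behaves like this:

```python
import re


def normalize_target(target):
    """Prefix a bare host with http:// unless it already has an http(s) scheme."""
    if not re.search(r"(?i)\Ahttp[s]*://", target):
        target = "http://%s" % target
    return target
```

The (?i) flag makes the match case-insensitive and \A anchors it to the start of the string, so an uppercase HTTPS:// entry is left untouched while a bare host gets the http:// prefix.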

The following code, from the start() function, is the core of the detection flow

```python
for targetUrl, targetMethod, targetData, targetCookie, targetHeaders in kb.targets:
    try:
        if conf.checkInternet:
            infoMsg = "checking for Internet connection"
            logger.info(infoMsg)

            if not checkInternet():
                warnMsg = "[%s] [WARNING] no connection detected" % time.strftime("%X")
                dataToStdout(warnMsg)

                valid = False
                for _ in xrange(conf.retries):
                    if checkInternet():
                        valid = True
                        break
                    else:
                        dataToStdout('.')
                        time.sleep(5)

                if not valid:
                    errMsg = "please check your Internet connection and rerun"
                    raise SqlmapConnectionException(errMsg)
                else:
                    dataToStdout("\n")
```
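The connectivity check above is a plain retry loop. A standalone sketch of the same idea follows; wait_for_connection and its parameters are hypothetical names, and the check itself is passed in as a callable so the loop can be exercised without a network.

```python
import time


def wait_for_connection(check, retries=3, delay=5.0, sleep=time.sleep):
    """Return True as soon as check() succeeds: one try plus up to `retries` more."""
    if check():
        return True

    for _ in range(retries):
        if check():
            return True
        # Wait before the next attempt.
        sleep(delay)

    return False
```

Passing sleep as a parameter is a design choice for the sketch only: it lets a caller or a test replace the real delay with a no-op.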

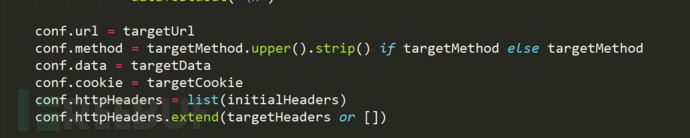

```python
        conf.url = targetUrl
        conf.method = targetMethod.upper().strip() if targetMethod else targetMethod
        conf.data = targetData
        conf.cookie = targetCookie
        conf.httpHeaders = list(initialHeaders)
        conf.httpHeaders.extend(targetHeaders or [])

        if conf.randomAgent or conf.mobile:
            for header, value in initialHeaders:
                if header.upper() == HTTP_HEADER.USER_AGENT.upper():
                    conf.httpHeaders.append((header, value))
                    break

        conf.httpHeaders = [conf.httpHeaders[i] for i in xrange(len(conf.httpHeaders)) if conf.httpHeaders[i][0].upper() not in (__[0].upper() for __ in conf.httpHeaders[i + 1:])]

        initTargetEnv()
        parseTargetUrl()
```
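The long list comprehension that rebuilds conf.httpHeaders is easier to read in isolation. It keeps only the last occurrence of each header name, compared case-insensitively. The sketch below wraps the same expression in a function (dedupe_headers is a hypothetical name) and runs it on sample data.

```python
def dedupe_headers(headers):
    """Keep only the last occurrence of each header name (case-insensitive)."""
    return [
        headers[i]
        for i in range(len(headers))
        if headers[i][0].upper() not in (h[0].upper() for h in headers[i + 1:])
    ]


headers = [
    ("User-Agent", "sqlmap"),
    ("Accept", "*/*"),
    ("user-agent", "Mozilla/5.0"),
]
deduped = dedupe_headers(headers)
```

Here the first User-Agent entry is dropped because a later entry has the same name, which is how a header appended afterwards takes precedence over an earlier one.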

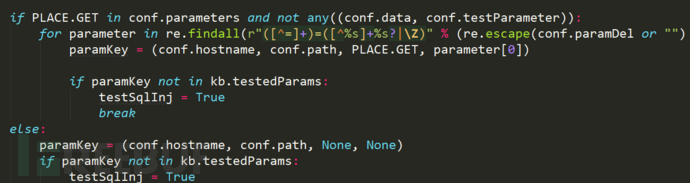

```python
        testSqlInj = False

        if PLACE.GET in conf.parameters and not any((conf.data, conf.testParameter)):
            for parameter in re.findall(r"([^=]+)=([^%s]+%s?|\Z)" % (re.escape(conf.paramDel or "") or DEFAULT_GET_POST_DELIMITER, re.escape(conf.paramDel or "") or DEFAULT_GET_POST_DELIMITER), conf.parameters[PLACE.GET]):
                paramKey = (conf.hostname, conf.path, PLACE.GET, parameter[0])

                if paramKey not in kb.testedParams:
                    testSqlInj = True
                    break
        else:
            paramKey = (conf.hostname, conf.path, None, None)

            if paramKey not in kb.testedParams:
                testSqlInj = True
```
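To see what the regular expression above extracts, the sketch below applies the same pattern to a query string and builds the same kind of (hostname, path, place, name) key. The function name untested_parameters is hypothetical, and the values "&" for the default delimiter and "GET" for the place are assumptions.

```python
import re

DEFAULT_DELIMITER = "&"  # assumed value of DEFAULT_GET_POST_DELIMITER


def untested_parameters(hostname, path, query, tested, delimiter=DEFAULT_DELIMITER):
    """Return the (host, path, place, name) keys from `query` not yet in `tested`."""
    d = re.escape(delimiter)
    pattern = r"([^=]+)=([^%s]+%s?|\Z)" % (d, d)

    # Each match is a (name, value) pair; only the name goes into the key.
    keys = [(hostname, path, "GET", name) for name, _ in re.findall(pattern, query)]
    return [key for key in keys if key not in tested]


tested = {("example.com", "/item.php", "GET", "id")}
remaining = untested_parameters("example.com", "/item.php", "id=1&name=foo", tested)
```

If the returned list is non-empty, at least one parameter of this target has not been tested yet, which corresponds to testSqlInj being set to True.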

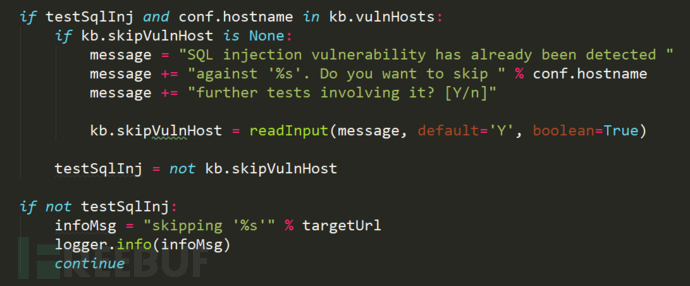

```python
        if testSqlInj and conf.hostname in kb.vulnHosts:
            if kb.skipVulnHost is None:
                message = "SQL injection vulnerability has already been detected "
                message += "against '%s'. Do you want to skip " % conf.hostname
                message += "further tests involving it? [Y/n]"

                kb.skipVulnHost = readInput(message, default='Y', boolean=True)

            testSqlInj = not kb.skipVulnHost

        if not testSqlInj:
            infoMsg = "skipping '%s'" % targetUrl
            logger.info(infoMsg)
            continue
```

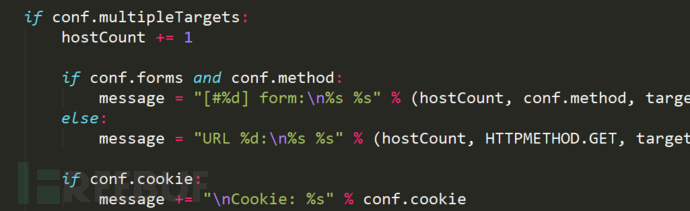

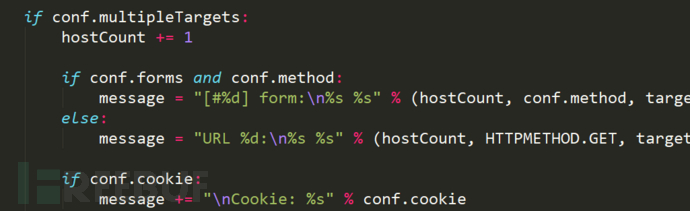

Reading the loop body above from top to bottom:

1. Initialize the target currently being tested: url, method, data, cookie, headers and related fields.

2. Take the parameters to be tested from the conf dictionary.

3. Check whether this target has already been tested.

4. The if conf.multipleTargets: branch that follows is only for the multiple-target case.

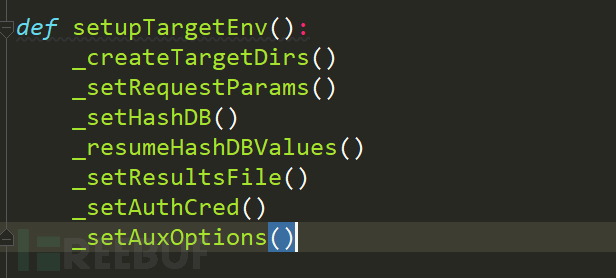

Next, step into the setupTargetEnv() function.

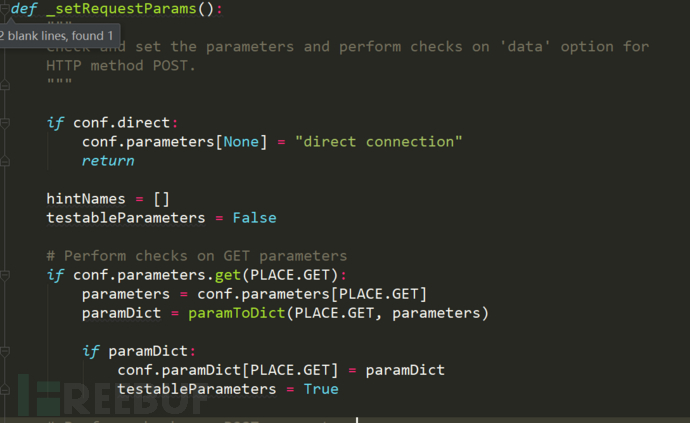

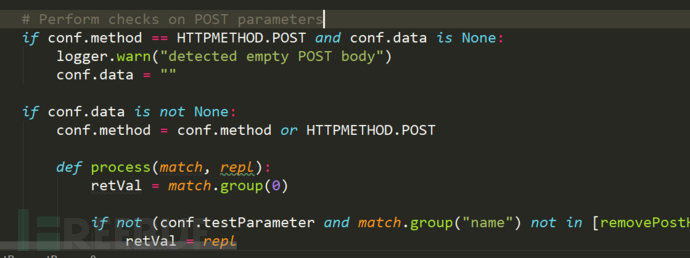

Here, let's look at how the request is processed. The main job is to parse the data sent by GET or POST into dictionary form and save it to conf.paramDict.

Back in the body of the loop over targets in start(): once setupTargetEnv() has run, every point of this target that can be tested for injection has been set up, and all of them are stored in the conf.paramDict dictionary.
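As an illustration of what ends up in such a dictionary, the sketch below splits a URL's query string and a POST body into one dict per place. It uses the standard library's urllib.parse rather than sqlmap's own parsing, so details such as percent-decoding may differ; build_param_dict and the "GET"/"POST" keys are names assumed for the example.

```python
from urllib.parse import parse_qsl, urlsplit


def build_param_dict(url, data=None):
    """Collect parameters per place: {"GET": {...}, "POST": {...}}."""
    param_dict = {}

    query = urlsplit(url).query
    if query:
        param_dict["GET"] = dict(parse_qsl(query, keep_blank_values=True))

    if data:
        param_dict["POST"] = dict(parse_qsl(data, keep_blank_values=True))

    return param_dict


params = build_param_dict("http://example.com/item.php?id=1&cat=books", "user=admin&pass=")
```

Each name in the inner dictionaries is one candidate injection point for the tests that follow.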

The session file, if there is one, is then read and its data is saved into the kb variable.

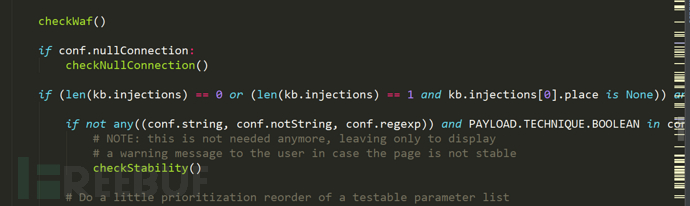

The next step is the WAF check, which tests whether a WAF is present (oddly, the sqlmap directory I downloaded this time has no waf directory).

After that come the null connection check, the page stability check, and the sorting of the parameters and the test list.

nullConnection: according to the official manual, this is a way of learning the page size without fetching the page content. It is very effective for boolean-based blind injection.

If --null-connection is enabled, page similarity is computed simply from the length of the page.
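A length-only comparison can be as simple as the ratio of the shorter length to the longer one. The sketch below is one plausible way to do it, not necessarily the formula sqlmap uses; length_ratio is a hypothetical name, and the lengths are assumed to come from something like a Content-Length header.

```python
def length_ratio(original_length, candidate_length):
    """Similarity in [0, 1] computed from page lengths alone."""
    if original_length == candidate_length:
        return 1.0

    longest = max(original_length, candidate_length)
    shortest = min(original_length, candidate_length)
    return shortest / longest
```

A true and a false condition in a boolean-based blind test usually produce pages of different sizes, so the lengths alone are often enough to tell them apart without downloading the bodies.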

The page similarity algorithm plays an important role in sqlmap's detection, and the Gaussian distribution plays an equally important role in its anomaly detection.
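Both ideas can be sketched with the standard library. The first function scores two pages with difflib.SequenceMatcher; the second flags a value as anomalous when it lies more than a chosen number of standard deviations above the mean of earlier samples. The function names and the default threshold of three standard deviations are assumptions for illustration, not sqlmap's actual settings.

```python
import difflib
import statistics


def page_ratio(original, candidate):
    """Similarity of two page bodies, from 0.0 (different) to 1.0 (identical)."""
    return difflib.SequenceMatcher(None, original, candidate).ratio()


def is_anomalous(samples, value, sigmas=3.0):
    """True if `value` is more than `sigmas` standard deviations above the mean."""
    mean = statistics.mean(samples)
    deviation = statistics.stdev(samples)  # needs at least two samples
    return value > mean + sigmas * deviation


response_times = [1.0, 1.1, 0.9, 1.0, 1.0]
```

With normal response times clustered around one second, a response that takes six seconds falls far outside the expected spread, while one that takes 1.05 seconds does not.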