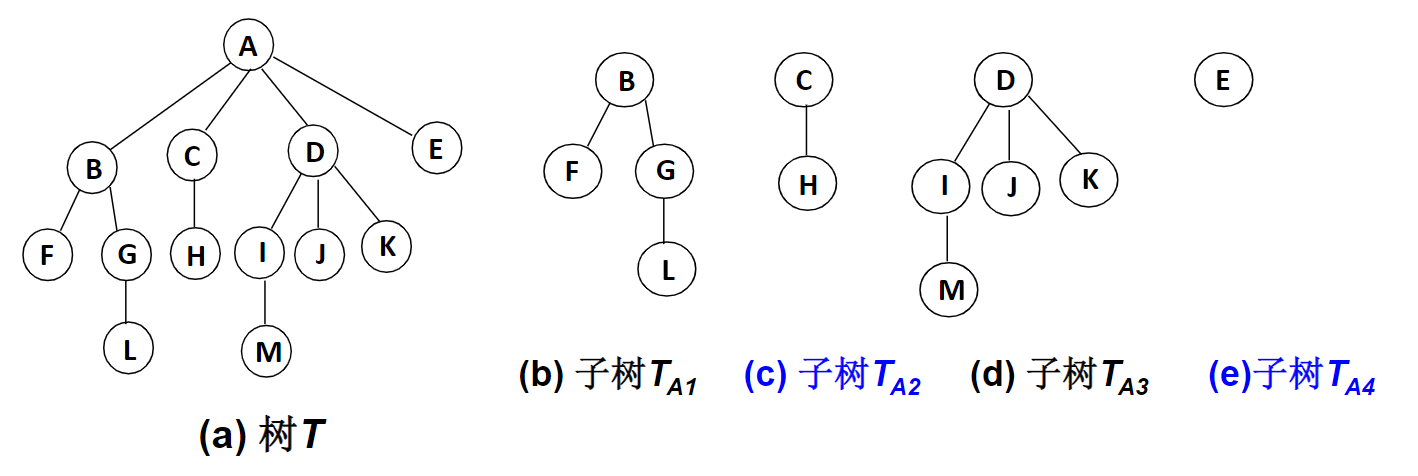

1, Definition of tree

A tree is a finite set of n (n ≥ 0) nodes. When n = 0, it is called an empty tree.

Every non-empty tree has the following properties:

1. There is one special node, called the root, denoted r.

2. If n > 1, the remaining nodes can be partitioned into m (m > 0) disjoint finite sets T1, T2, ..., Tm, each of which is itself a tree, called a subtree of the root.

A tree can also be defined this way: a tree consists of a root node and several subtrees. Formally, a tree is a set together with a relation defined on that set. The elements of the set are called the nodes of the tree, and the relation is called the parent-child relation. The parent-child relation establishes a hierarchy among the nodes. In this hierarchy one node has a special status: it is called the root node of the tree, or simply the root.

2, Some basic terms of trees:

Child node: the root of a subtree of a node is called a child of that node;

Degree of a node: the number of children the node has;

Leaf node or terminal node: a node of degree 0;

Non-terminal node or branch node: a node whose degree is not 0;

Parent node: if a node has children, it is called the parent of those children;

Sibling nodes: nodes with the same parent are called siblings;

Degree of a tree: the largest degree of any node in the tree;

Level of a node: counted from the root, which is level 1; the root's children are on level 2, and so on;

Height or depth of a tree: the maximum level of any node in the tree;

Cousin nodes: nodes whose parents are on the same level are cousins of each other;

Ancestors of a node: all nodes on the branch from the root down to that node;

Descendants of a node: all nodes in the subtree rooted at that node;

Forest: a collection of disjoint trees.

3, Representations of a tree:

1. Parent representation

2. Child representation

3. Child-sibling representation

1. Parent representation

The parent representation stores all nodes in a contiguous block of memory (an array), and each node records the array index of its parent. This works because in a tree every node except the root has exactly one parent.

Advantage: the parent of a node is easy to find.

Disadvantage: the children of a node are not easy to find, because every node has to be scanned.

```c
#define MAX_TREE_SIZE 100 /* maximum number of nodes in the tree */

typedef struct PTNode {
    int data;   /* data stored in the node */
    int parent; /* array index of this node's parent (e.g. -1 for the root) */
} PTNode;

typedef struct {
    PTNode nodes[MAX_TREE_SIZE]; /* all nodes of the tree */
    int r, n;                    /* index of the root and number of nodes */
} PTree;
```

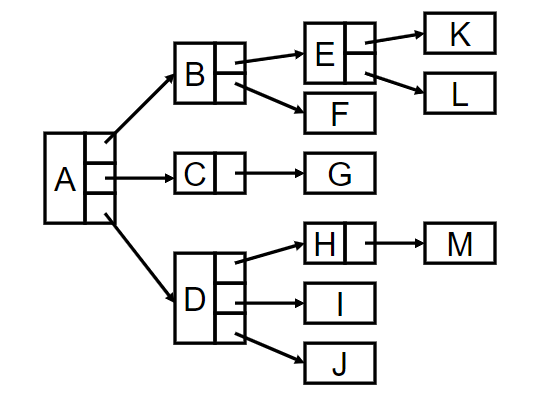
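To make the advantage and disadvantage concrete, here is a minimal sketch on top of the structure above. The helper names `parent_of` and `children_of` are hypothetical, and storing -1 as the root's parent is an assumed convention. Finding a parent is a single array read, while finding the children requires scanning all n nodes.

```c
#include <assert.h>

#define MAX_TREE_SIZE 100

typedef struct PTNode {
    int data;
    int parent; /* -1 marks the root (assumed convention) */
} PTNode;

typedef struct {
    PTNode nodes[MAX_TREE_SIZE];
    int r, n;
} PTree;

/* Parent lookup is O(1): just read the stored index. */
int parent_of(const PTree *t, int i) {
    assert(i >= 0 && i < t->n);
    return t->nodes[i].parent;
}

/* Child lookup is O(n): every node must be checked.
   Writes the indices of node i's children into out[] and returns how many. */
int children_of(const PTree *t, int i, int out[]) {
    int count = 0;
    for (int k = 0; k < t->n; k++)
        if (t->nodes[k].parent == i)
            out[count++] = k;
    return count;
}
```

For a tree A(B(D), C) stored as `{'A',-1}, {'B',0}, {'C',0}, {'D',1}`, `children_of` on node 0 returns the two indices 1 and 2.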

2. Child representation

The children of each node are arranged into a linear list stored as a singly linked list, so a tree with n nodes has n singly linked lists. The head pointers of these n lists are themselves stored in a linear table (an array). This is the child representation.

Advantages and disadvantages: the child representation is the opposite of the parent representation. It is suitable for finding the children of a node but not its parent, because each singly linked list only leads from a parent to its children; nothing points back from a child to its parent, so finding a parent means scanning every list.

```c
#define MAX_TREE_SIZE 100

typedef struct CTNode {
    int child;           /* not the data itself, but the array index where the child is stored */
    struct CTNode *next; /* next child of the same parent */
} *ChildPtr;

typedef struct {
    int data;            /* data stored in the node */
    ChildPtr firstchild; /* head pointer of this node's child list */
} CT;

typedef struct {
    CT nodes[MAX_TREE_SIZE]; /* array of nodes */
    int n, r;                /* number of nodes and index of the root */
} CTree;
```

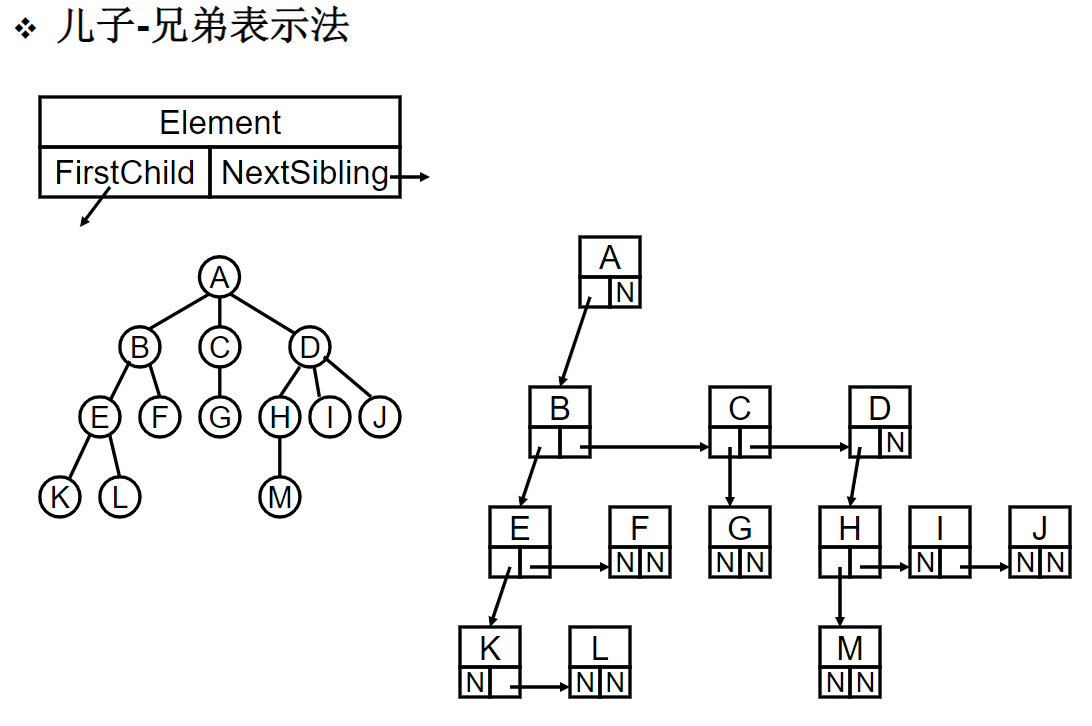

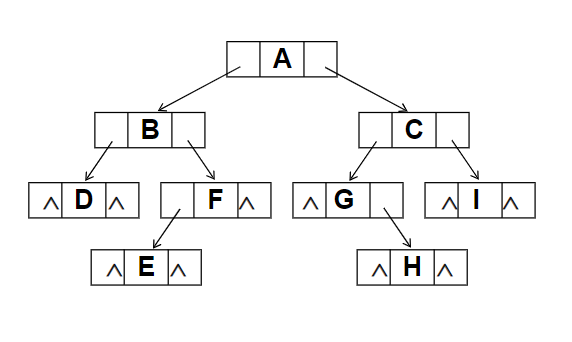
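A minimal sketch of the trade-off described above, reusing the same structures. The helpers `add_child`, `degree` and `parent_of` are hypothetical names introduced for illustration: counting a node's children walks one short list, while finding a parent has to search every list.

```c
#include <assert.h>
#include <stdlib.h>

#define MAX_TREE_SIZE 100

typedef struct CTNode {
    int child;
    struct CTNode *next;
} *ChildPtr;

typedef struct {
    int data;
    ChildPtr firstchild;
} CT;

typedef struct {
    CT nodes[MAX_TREE_SIZE];
    int n, r;
} CTree;

/* Prepend child index c to the child list of parent p. */
void add_child(CTree *t, int p, int c) {
    ChildPtr node = malloc(sizeof *node);
    assert(node != NULL);
    node->child = c;
    node->next = t->nodes[p].firstchild;
    t->nodes[p].firstchild = node;
}

/* Cheap: walk a single child list. */
int degree(const CTree *t, int p) {
    int d = 0;
    for (ChildPtr q = t->nodes[p].firstchild; q; q = q->next)
        d++;
    return d;
}

/* Expensive: scan the child lists of all nodes. */
int parent_of(const CTree *t, int c) {
    for (int p = 0; p < t->n; p++)
        for (ChildPtr q = t->nodes[p].firstchild; q; q = q->next)
            if (q->child == c)
                return p;
    return -1; /* c is the root (or not in the tree) */
}
```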

3. Child-sibling representation

A general tree is stored in a linked structure in which each node has two pointer fields: firstchild points to the node's first child, and nextsibling points to the node's next sibling.

```c
typedef struct CSNode {
    int data;
    struct CSNode *firstchild;  /* first child of this node */
    struct CSNode *nextsibling; /* next sibling of this node */
} CSNode, *CSTree;
```

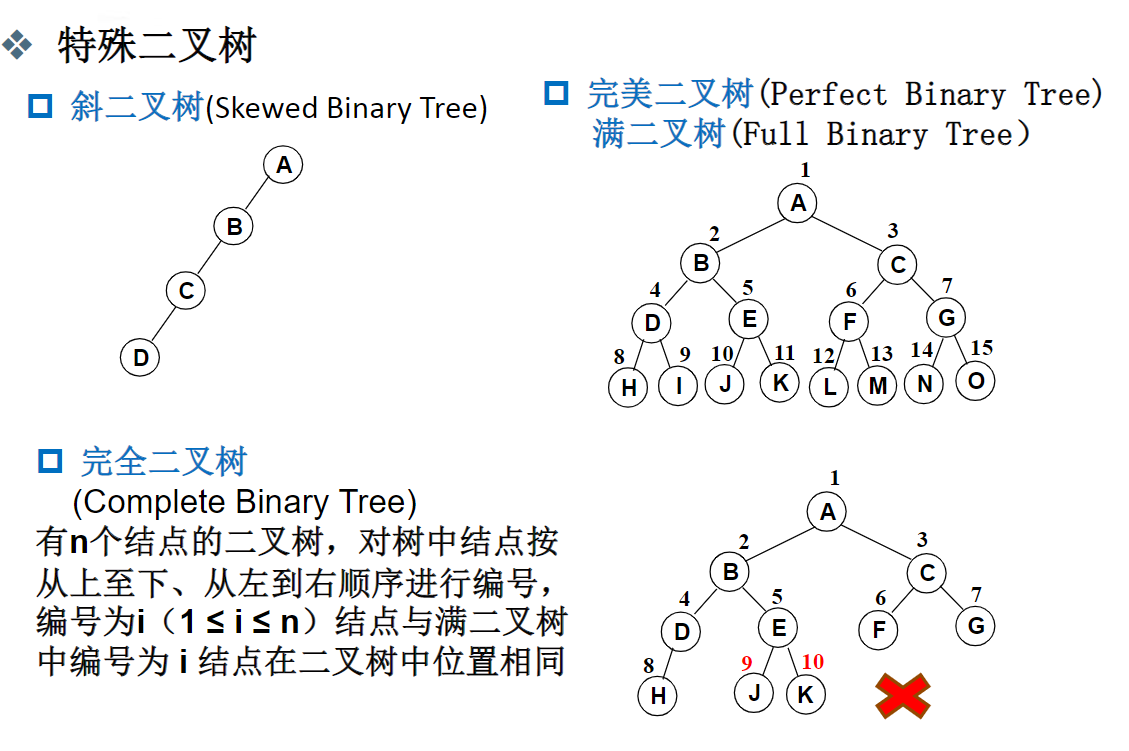
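Because each node has exactly two pointers, a general tree in this form can be processed with binary-tree style recursion. A minimal sketch with hypothetical helper names: following `firstchild` moves one level down, following `nextsibling` stays on the same level.

```c
#include <assert.h>
#include <stddef.h>

typedef struct CSNode {
    int data;
    struct CSNode *firstchild, *nextsibling;
} CSNode, *CSTree;

/* Every node is reached through exactly one of the two pointers. */
int count_nodes(CSTree t) {
    if (t == NULL) return 0;
    return 1 + count_nodes(t->firstchild) + count_nodes(t->nextsibling);
}

/* Height of the general tree, root at level 1. */
int tree_height(CSTree t) {
    if (t == NULL) return 0;
    int down = 1 + tree_height(t->firstchild);  /* one level deeper */
    int across = tree_height(t->nextsibling);   /* same level */
    return down > across ? down : across;
}

/* Degree of a node: the length of its child chain. */
int node_degree(const CSNode *t) {
    int d = 0;
    for (CSTree c = t->firstchild; c != NULL; c = c->nextsibling)
        d++;
    return d;
}
```

For a root A with children B, C, D, where B has one child E, this reports 5 nodes, height 3, and degree 3 for A.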

4, Binary tree

A binary tree is an important kind of tree structure. The data structures abstracted from many practical problems take the form of binary trees, and even a general tree can easily be converted into a binary tree (for example through the child-sibling representation, where firstchild plays the role of the left pointer and nextsibling the role of the right pointer). Because the storage structures and algorithms of binary trees are relatively simple, binary trees are especially important. Their defining feature is that each node has at most two subtrees.

1. Definition of a binary tree

A binary tree is an ordered tree in which no node has degree greater than 2. It is the simplest and most important kind of tree.

Recursively, a binary tree is either an empty tree, or a non-empty tree consisting of a root node and two disjoint binary trees, called its left subtree and its right subtree.

Full binary tree: a binary tree in which every node has degree 0 or 2 and all nodes of degree 0 (the leaves) are on the same level [4].

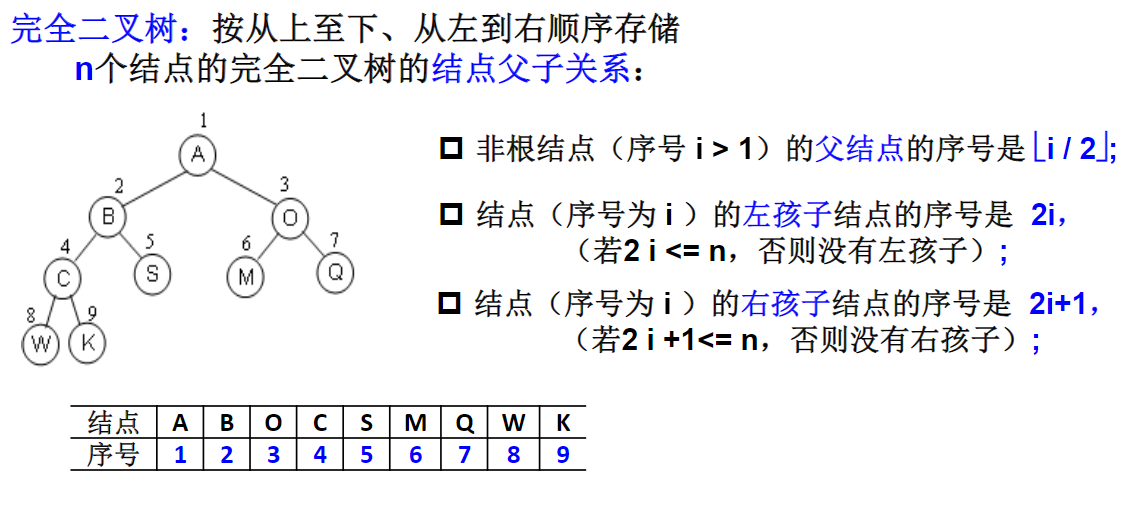

Complete binary tree: a binary tree of depth k with n nodes is called complete if and only if each of its nodes corresponds to the node with the same number (1 to n) in the full binary tree of depth k, numbering level by level from top to bottom and left to right.

In a complete binary tree, leaves can appear only on the two lowest levels, and for any node, the maximum level reached by the descendants of its left branch is equal to, or one greater than, the maximum level reached by the descendants of its right branch.

2. Properties of binary tree

Property 1: level i (i ≥ 1) of a binary tree has at most 2^(i-1) nodes.

Property 2: a binary tree of depth h has at most 2^h - 1 nodes.

Property 3: in any binary tree with n0 leaf nodes and n2 nodes of degree 2, n0 = n2 + 1.

Property 4: the depth of a complete binary tree with n nodes is ⌊log2 n⌋ + 1, where ⌊x⌋ denotes the largest integer not greater than x.

Property 5: if the nodes of a complete binary tree with n nodes are numbered 1 to n level by level (top to bottom, left to right), then for the node numbered i (1 ≤ i ≤ n):

When i = 1, the node is the root and has no parent.

When i > 1, its parent is numbered ⌊i/2⌋.

If 2i ≤ n, its left child is numbered 2i; otherwise it has no left child.

If 2i + 1 ≤ n, its right child is numbered 2i + 1; otherwise it has no right child.

3. Storage method of binary tree

3.1 sequential storage
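Sequential storage relies directly on Properties 4 and 5: a complete binary tree is stored in an array `tree[1..n]` (slot 0 unused), and parent/child links are computed from the indices instead of being stored. A minimal sketch with hypothetical helper names; a non-complete tree can be stored the same way only by leaving empty slots, which wastes space.

```c
#include <assert.h>

/* Property 4: depth = floor(log2 n) + 1, i.e. how many times n can be halved. */
int complete_tree_depth(int n) {
    assert(n >= 1);
    int depth = 0;
    while (n > 0) {
        n /= 2;
        depth++;
    }
    return depth;
}

/* Property 5 (nodes numbered 1..n); each returns 0 if that node does not exist. */
int parent_index(int i)       { return i > 1 ? i / 2 : 0; }
int left_index(int i, int n)  { return 2 * i <= n ? 2 * i : 0; }
int right_index(int i, int n) { return 2 * i + 1 <= n ? 2 * i + 1 : 0; }

/* Preorder traversal of tree[1..n] using only index arithmetic.
   Call with i = 1 and pos = 0; writes values into out[] and
   returns the number of values written. */
int preorder_array(const char tree[], int n, int i, char out[], int pos) {
    if (i == 0) return pos;   /* empty subtree */
    out[pos++] = tree[i];     /* visit the root of this subtree */
    pos = preorder_array(tree, n, left_index(i, n), out, pos);
    pos = preorder_array(tree, n, right_index(i, n), out, pos);
    return pos;
}
```

For the complete tree stored as `{_, 'A', 'B', 'C', 'D', 'E', 'F'}`, the preorder is A B D E C F.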

3.2 chain storage

```c
typedef struct TNode *Position;
typedef Position BinTree; /* binary tree type */

struct TNode {     /* tree node definition */
    int Data;      /* node data */
    BinTree Left;  /* points to the left subtree */
    BinTree Right; /* points to the right subtree */
};
```

4. Four traversals of a binary tree

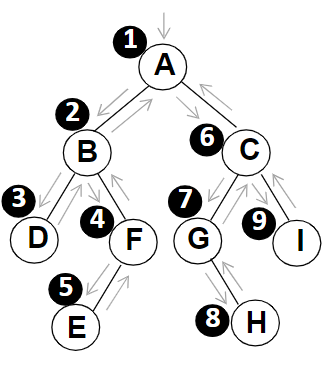

4.1 preorder traversal

1. Visit the root node

2. Traverse the left subtree in preorder

3. Traverse the right subtree in preorder

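```c
void PreorderTraversal(BinTree BT)
{
    if (BT) {
        printf("%d ", BT->Data);      /* 1. visit the root */
        PreorderTraversal(BT->Left);  /* 2. left subtree in preorder */
        PreorderTraversal(BT->Right); /* 3. right subtree in preorder */
    }
}
```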

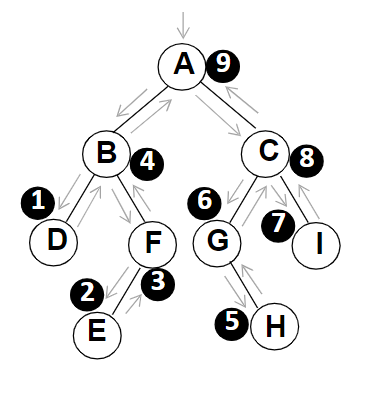

Illustration:

1. Visit the root node A first.

2. Following the preorder rule recursively, visit the left subtree next: its root B, then B's left child D.

So far: A B D

3. D has no subtrees, so return to B and traverse B's right subtree: F, then F's left child E.

Giving: F E

4. With the left subtree of A finished, traverse the right subtree of A by the same rule, in the order:

C G H I

5. The complete preorder sequence is therefore A / B D F E / C G H I.

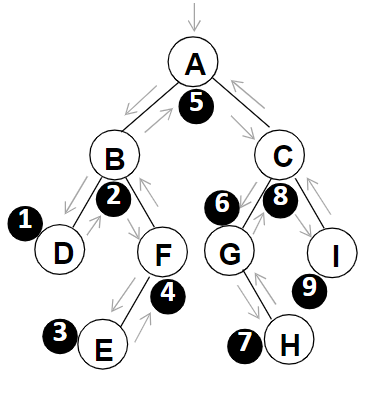
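The walkthrough can be checked in code. The figure itself is not reproduced here, so the tree below is an assumption reconstructed from the three sequences in this section: A has children B and C; B has left child D and right child F; F has left child E; C has left child G and right child I; G has only a right child H. The sketch stores characters instead of `int` data and appends to a buffer instead of printing; `node` and `example_tree` are hypothetical helpers.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct TNode *BinTree;
struct TNode {
    char Data;
    BinTree Left, Right;
};

/* Allocate a node with the given children. */
BinTree node(char data, BinTree left, BinTree right) {
    BinTree t = malloc(sizeof *t);
    assert(t != NULL);
    t->Data = data;
    t->Left = left;
    t->Right = right;
    return t;
}

/* The example tree as reconstructed from the traversal sequences. */
BinTree example_tree(void) {
    BinTree f = node('F', node('E', NULL, NULL), NULL);
    BinTree b = node('B', node('D', NULL, NULL), f);
    BinTree g = node('G', NULL, node('H', NULL, NULL));
    BinTree c = node('C', g, node('I', NULL, NULL));
    return node('A', b, c);
}

/* Same recursion as PreorderTraversal, recording into out[]. */
void preorder(BinTree bt, char out[], int *len) {
    if (bt) {
        out[(*len)++] = bt->Data;       /* 1. root */
        preorder(bt->Left, out, len);   /* 2. left subtree */
        preorder(bt->Right, out, len);  /* 3. right subtree */
    }
}
```

Running `preorder` on `example_tree()` records A B D F E C G H I, matching step 5.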

4.2 inorder (middle-order) traversal

1. Traverse the left subtree in inorder

2. Visit the root node

3. Traverse the right subtree in inorder

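```c
void InorderTraversal(BinTree BT)
{
    if (BT) {
        InorderTraversal(BT->Left);  /* 1. left subtree in inorder */
        printf("%d ", BT->Data);     /* 2. visit the root */
        InorderTraversal(BT->Right); /* 3. right subtree in inorder */
    }
}
```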

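The route is the same as in preorder traversal; only the moment at which node data is read differs:

DBEF/A/GHCI

Note: G has no left subtree, so its second encounter comes immediately after its first; G is therefore read before the traversal moves on to its right child H, which is why G comes before H.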
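The same inorder sequence can be produced without recursion by keeping the path in an explicit stack. This is a common alternative, not the notes' own code; the fixed stack size `MAX_STACK` is an assumed bound on tree height, and `node` is a hypothetical helper. Pushing a node corresponds to its first encounter and popping it to its second, which is exactly when inorder reads the data.

```c
#include <assert.h>
#include <stdlib.h>

#define MAX_STACK 64 /* assumed upper bound on the tree height */

typedef struct TNode *BinTree;
struct TNode {
    char Data;
    BinTree Left, Right;
};

BinTree node(char data, BinTree left, BinTree right) {
    BinTree t = malloc(sizeof *t);
    assert(t != NULL);
    t->Data = data;
    t->Left = left;
    t->Right = right;
    return t;
}

/* Iterative inorder traversal; writes the data into out[], returns the count. */
int inorder_iterative(BinTree bt, char out[]) {
    BinTree stack[MAX_STACK];
    int top = 0, len = 0;
    BinTree t = bt;
    while (t || top > 0) {
        while (t) {                 /* go left as far as possible, remembering the path */
            assert(top < MAX_STACK);
            stack[top++] = t;
            t = t->Left;
        }
        t = stack[--top];           /* left subtree finished: read the node */
        out[len++] = t->Data;
        t = t->Right;               /* then traverse its right subtree */
    }
    return len;
}
```

On the example tree this produces D B E F A G H C I.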

4.3 postorder traversal

1. Traverse the left subtree in postorder

2. Traverse the right subtree in postorder

3. Visit the root node

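```c
void PostorderTraversal(BinTree BT)
{
    if (BT) {
        PostorderTraversal(BT->Left);  /* 1. left subtree in postorder */
        PostorderTraversal(BT->Right); /* 2. right subtree in postorder */
        printf("%d ", BT->Data);       /* 3. visit the root */
    }
}
```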

The route is the same as above; only the moment at which node data is read differs:

DEFB/HGIC/A

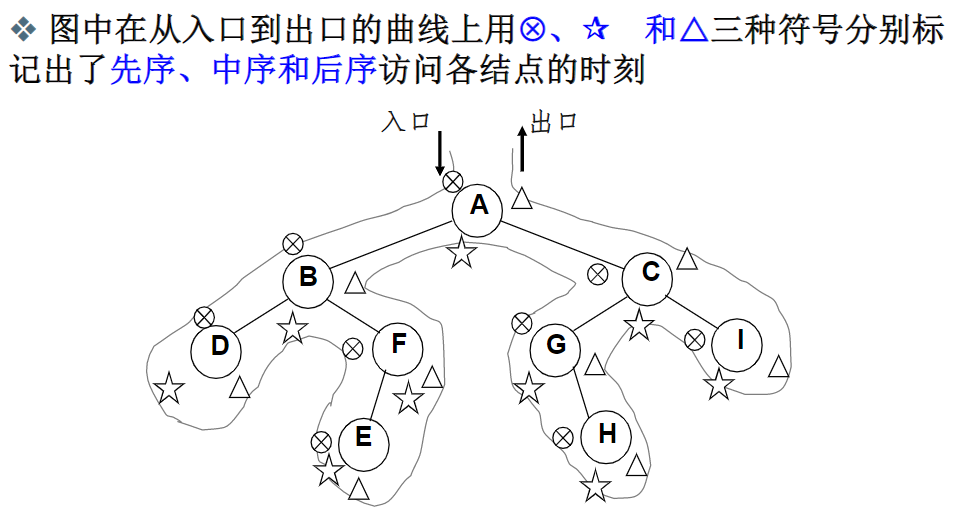

4.4 summary of preorder, inorder and postorder traversal

Preorder, inorder and postorder traversal follow exactly the same route through the tree; they differ only in when the data at each node is read.

Along this route every node is encountered three times: first when the route arrives at the node, before its left subtree is traversed;

second, when the route returns from the left subtree;

third, when the route returns from the right subtree.

**Summary:** preorder traversal reads the data at the first encounter;

inorder traversal reads the data at the second encounter;

postorder traversal reads the data at the third encounter.

Note: for a leaf node, all three encounters happen at the same visit, because both subtrees are empty. For a node with only a right child, the first and second encounters coincide (the empty left subtree returns at once); for a node with only a left child, the second and third encounters coincide.

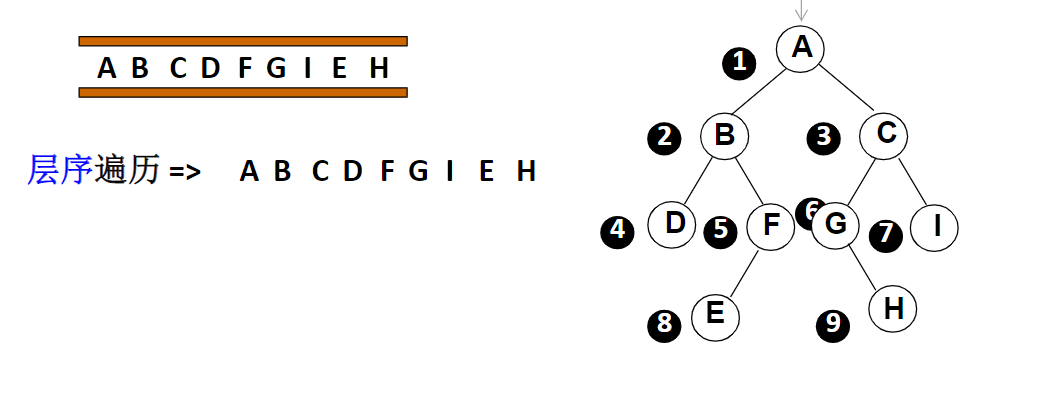
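The "three encounters" view can be made literal: one recursive walk follows the shared route and records each node at all three moments, producing the three orders at once. A minimal sketch; the `Orders` buffer type and the `node` helper are hypothetical, and the tree is the same reconstructed example (A, B, C, D, E, F, G, H, I) as in the preorder walkthrough.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct TNode *BinTree;
struct TNode {
    char Data;
    BinTree Left, Right;
};

BinTree node(char data, BinTree left, BinTree right) {
    BinTree t = malloc(sizeof *t);
    assert(t != NULL);
    t->Data = data;
    t->Left = left;
    t->Right = right;
    return t;
}

typedef struct {
    char pre[32], in[32], post[32];
    int npre, nin, npost;
} Orders;

/* One walk along the route; each node is recorded at its three encounters. */
void walk(BinTree t, Orders *o) {
    if (!t) return;
    o->pre[o->npre++] = t->Data;    /* 1st encounter: arriving at the node */
    walk(t->Left, o);
    o->in[o->nin++] = t->Data;      /* 2nd encounter: back from the left subtree */
    walk(t->Right, o);
    o->post[o->npost++] = t->Data;  /* 3rd encounter: back from the right subtree */
}
```

On the example tree the three buffers hold ABDFECGHI, DBEFAGHCI and DEFBHGICA, the sequences listed in 4.1 to 4.3.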

4.5 level-order traversal

1. Enqueue the root node.

2. Dequeue the node at the front of the queue and visit it.

3. If its left child is not empty, enqueue the left child; if its right child is not empty, enqueue the right child.

4. Repeat steps 2 and 3 until the queue is empty.

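```c
/* Queue, CreatQueue, AddQ, DeleteQ and IsEmpty are a queue ADT of BinTree
   elements that is assumed to be defined elsewhere. */
void LevelorderTraversal(BinTree BT)
{
    Queue Q;
    BinTree T;

    if (!BT) return;            /* empty tree: return directly */

    Q = CreatQueue();           /* create an empty queue Q */
    AddQ(Q, BT);                /* 1. enqueue the root */
    while (!IsEmpty(Q)) {
        T = DeleteQ(Q);         /* 2. dequeue the front node ... */
        printf("%d ", T->Data); /*    ... and visit it */
        if (T->Left)  AddQ(Q, T->Left);  /* 3. enqueue its children */
        if (T->Right) AddQ(Q, T->Right);
    }
}
```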

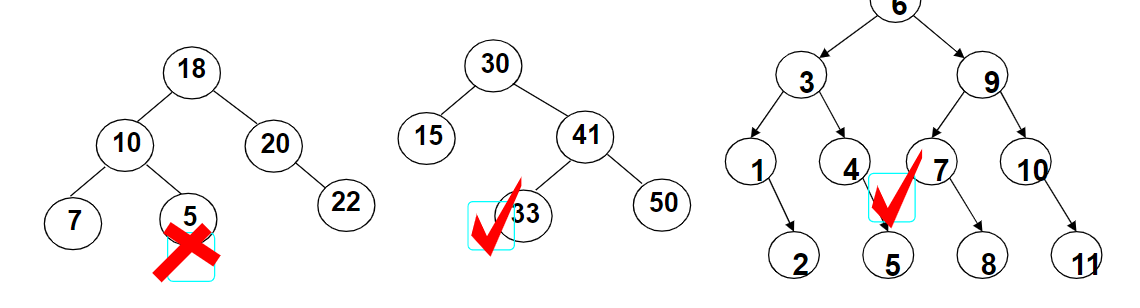

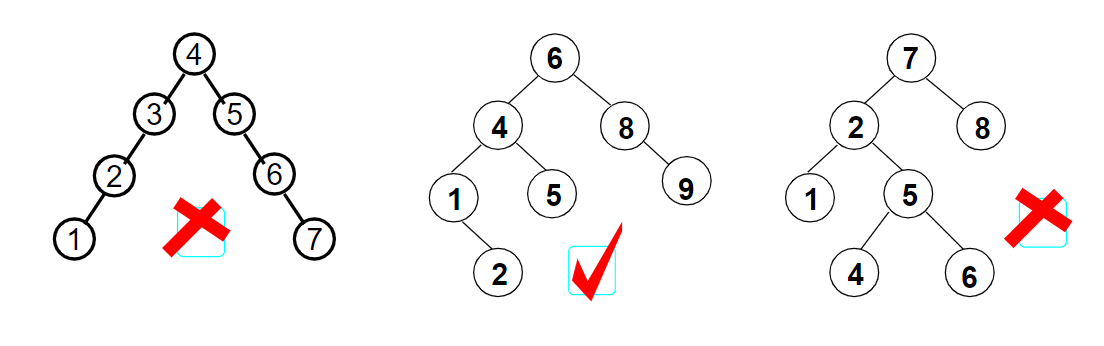
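Since the queue ADT is not shown, here is a self-contained sketch that replaces it with a plain array. `MAX_QUEUE` is an assumed capacity: this simple queue never reuses slots, so it must hold every node of the tree. `node` is a hypothetical helper, and the tree is the same reconstructed example as in the preorder walkthrough.

```c
#include <assert.h>
#include <stdlib.h>

#define MAX_QUEUE 64 /* assumed capacity: at least the number of nodes */

typedef struct TNode *BinTree;
struct TNode {
    char Data;
    BinTree Left, Right;
};

BinTree node(char data, BinTree left, BinTree right) {
    BinTree t = malloc(sizeof *t);
    assert(t != NULL);
    t->Data = data;
    t->Left = left;
    t->Right = right;
    return t;
}

/* head = next element to dequeue, tail = next free slot. */
int level_order(BinTree bt, char out[]) {
    BinTree queue[MAX_QUEUE];
    int head = 0, tail = 0, len = 0;
    if (!bt) return 0;
    queue[tail++] = bt;                       /* 1. enqueue the root */
    while (head < tail) {                     /* until the queue is empty */
        BinTree t = queue[head++];            /* 2. dequeue and visit */
        out[len++] = t->Data;
        if (t->Left) {                        /* 3. enqueue the children */
            assert(tail < MAX_QUEUE);
            queue[tail++] = t->Left;
        }
        if (t->Right) {
            assert(tail < MAX_QUEUE);
            queue[tail++] = t->Right;
        }
    }
    return len;
}
```

On the example tree the visiting order is A B C D F G I E H: one level at a time, left to right.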

5, Binary search tree

A Binary Search Tree (also called a binary sort tree) is either an empty tree or a binary tree with the following properties: if its left subtree is not empty, the values of all nodes in the left subtree are less than the value of its root; if its right subtree is not empty, the values of all nodes in the right subtree are greater than the value of its root; and its left and right subtrees are themselves binary search trees. As a classic data structure, the binary search tree combines the fast insertion and deletion of a linked list with the fast search of a sorted array: each operation follows a single path from the root downward, so it takes O(h) time for a tree of height h, which is O(log n) when the tree is balanced but degrades to O(n) when the tree degenerates into a chain. It is therefore widely used; for example, this kind of data structure is commonly used in file systems and database systems for efficient sorting and retrieval.

5.1 properties of binary search tree

1. If the left subtree of any node is not empty, the value of all nodes on the left subtree is not greater than the value of its root node.

2. If the right subtree of any node is not empty, the values of all nodes on the right subtree are not less than the values of its root node.

3. The left and right subtrees of any node are also binary search trees.

5.2 The main operations of a binary search tree are:

1. Find

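```c
BinTree Find(int X, BinTree BST)
{
    while (BST) {
        if (X > BST->Data)
            BST = BST->Right; /* continue in the right subtree */
        else if (X < BST->Data)
            BST = BST->Left;  /* continue in the left subtree */
        else
            return BST;       /* found */
    }
    return NULL;              /* not found */
}
```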
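The Delete routine below calls `FindMin`, which these notes do not define. A minimal sketch of it (and its mirror `FindMax`), assuming the same node layout: by the BST property, the minimum is the leftmost node and the maximum is the rightmost node.

```c
#include <assert.h>
#include <stddef.h>

typedef struct TNode *Position;
typedef Position BinTree;
struct TNode {
    int Data;
    BinTree Left, Right;
};

/* Smallest key: keep following Left. Returns NULL for an empty tree. */
Position FindMin(BinTree BST) {
    if (BST)
        while (BST->Left)
            BST = BST->Left;
    return BST;
}

/* Largest key: keep following Right. Returns NULL for an empty tree. */
Position FindMax(BinTree BST) {
    if (BST)
        while (BST->Right)
            BST = BST->Right;
    return BST;
}
```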

2. Insert

```c
BinTree Insert(BinTree BST, int X)
{
    if (!BST) { /* empty (sub)tree: create a one-node BST and return it */
        BST = (BinTree)malloc(sizeof(struct TNode));
        BST->Data = X;
        BST->Left = BST->Right = NULL;
    }
    else { /* find where to insert X */
        if (X < BST->Data)
            BST->Left = Insert(BST->Left, X);   /* insert recursively into the left subtree */
        else if (X > BST->Data)
            BST->Right = Insert(BST->Right, X); /* insert recursively into the right subtree */
        /* else X already exists: do nothing */
    }
    return BST;
}
```
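A quick way to see the BST property in action: insert keys in any order, then traverse inorder; the keys come out in ascending order, and duplicates are ignored as in `Insert` above. A minimal self-contained sketch; `inorder_keys` is a hypothetical helper.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct TNode *BinTree;
struct TNode {
    int Data;
    BinTree Left, Right;
};

BinTree Insert(BinTree BST, int X) {
    if (!BST) {
        BST = malloc(sizeof *BST);
        assert(BST != NULL);
        BST->Data = X;
        BST->Left = BST->Right = NULL;
    } else if (X < BST->Data) {
        BST->Left = Insert(BST->Left, X);
    } else if (X > BST->Data) {
        BST->Right = Insert(BST->Right, X);
    }   /* equal: already present, nothing to do */
    return BST;
}

/* Inorder traversal of a BST visits the keys in ascending order. */
int inorder_keys(BinTree t, int out[], int len) {
    if (!t) return len;
    len = inorder_keys(t->Left, out, len);
    out[len++] = t->Data;
    return inorder_keys(t->Right, out, len);
}
```

Inserting 50, 30, 70, 20, 40, 60, 80, 40 gives seven nodes rooted at 50, listed inorder as 20 30 40 50 60 70 80.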

3. Delete

(1) Leaf node: delete it directly; the rest of the tree is unaffected.

(2) Node with only a left or only a right subtree: after deleting the node, move its single subtree up into the deleted node's position (the child takes over the parent's place).

(3) Node with both left and right subtrees: find the inorder predecessor or inorder successor s of the node p to be deleted, replace p's data with that of s, and then delete node s.

```c
/* FindMin(BST) is assumed to return the node holding the smallest key in BST. */
BinTree Delete(BinTree BST, int X)
{
    Position Tmp;

    if (!BST)
        printf("The element to be deleted was not found\n");
    else {
        if (X < BST->Data)
            BST->Left = Delete(BST->Left, X);   /* delete recursively from the left subtree */
        else if (X > BST->Data)
            BST->Right = Delete(BST->Right, X); /* delete recursively from the right subtree */
        else { /* BST is the node to delete */
            if (BST->Left && BST->Right) { /* case (3): two children */
                /* fill this node with the smallest key of the right subtree */
                Tmp = FindMin(BST->Right);
                BST->Data = Tmp->Data;
                /* then delete that smallest key from the right subtree */
                BST->Right = Delete(BST->Right, BST->Data);
            }
            else { /* cases (1) and (2): one child or no child */
                Tmp = BST;
                if (!BST->Left)       /* only a right child, or no child */
                    BST = BST->Right;
                else                  /* only a left child */
                    BST = BST->Left;
                free(Tmp);
            }
        }
    }
    return BST;
}
```

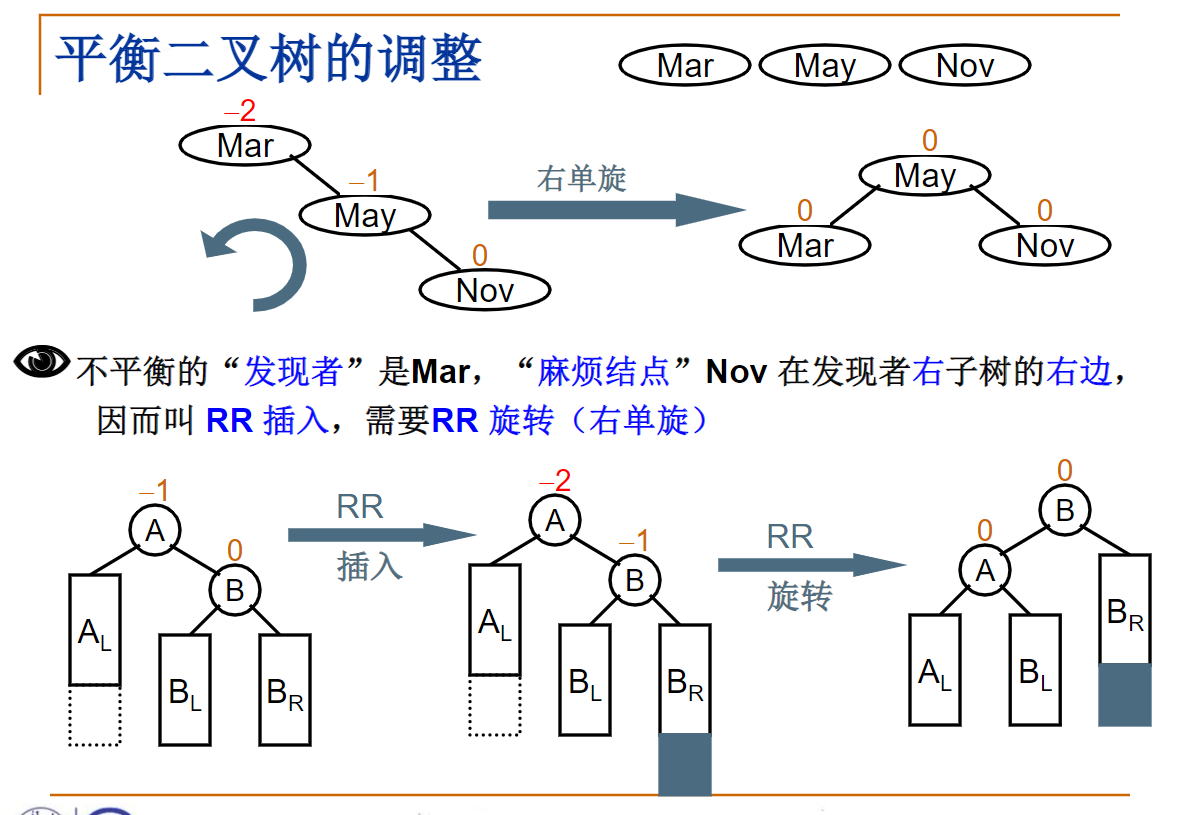

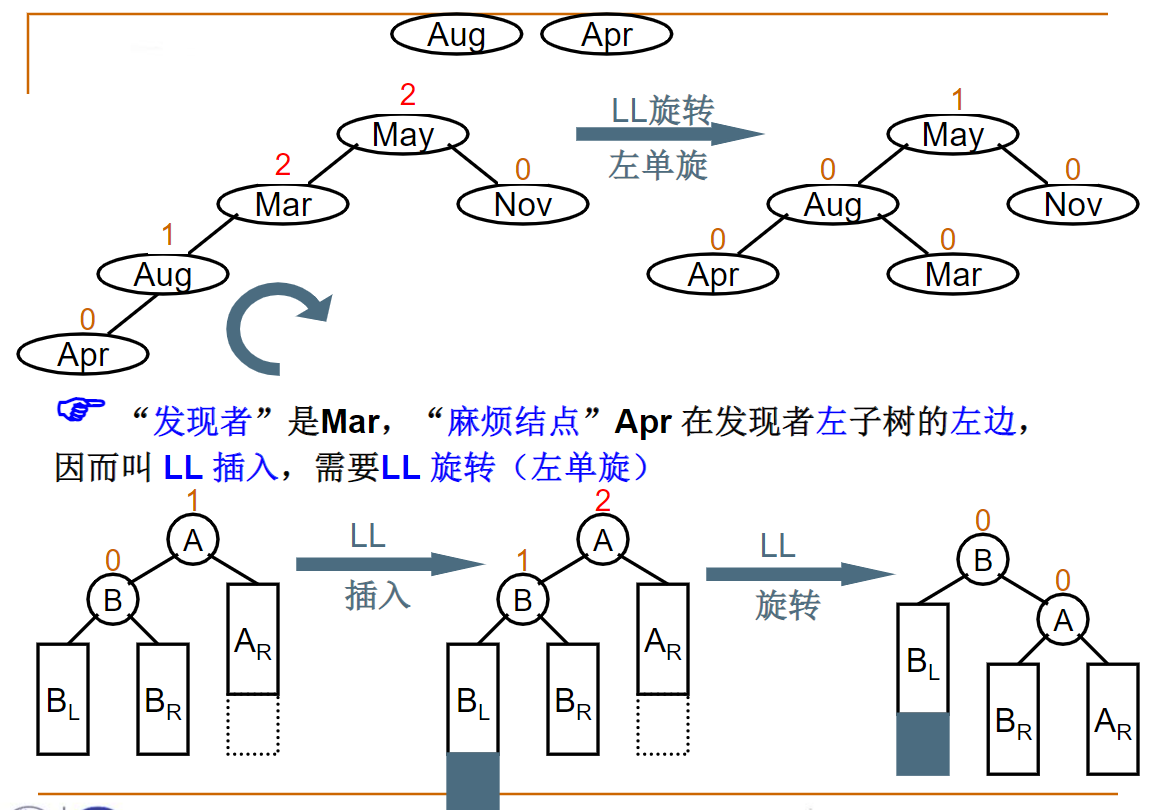

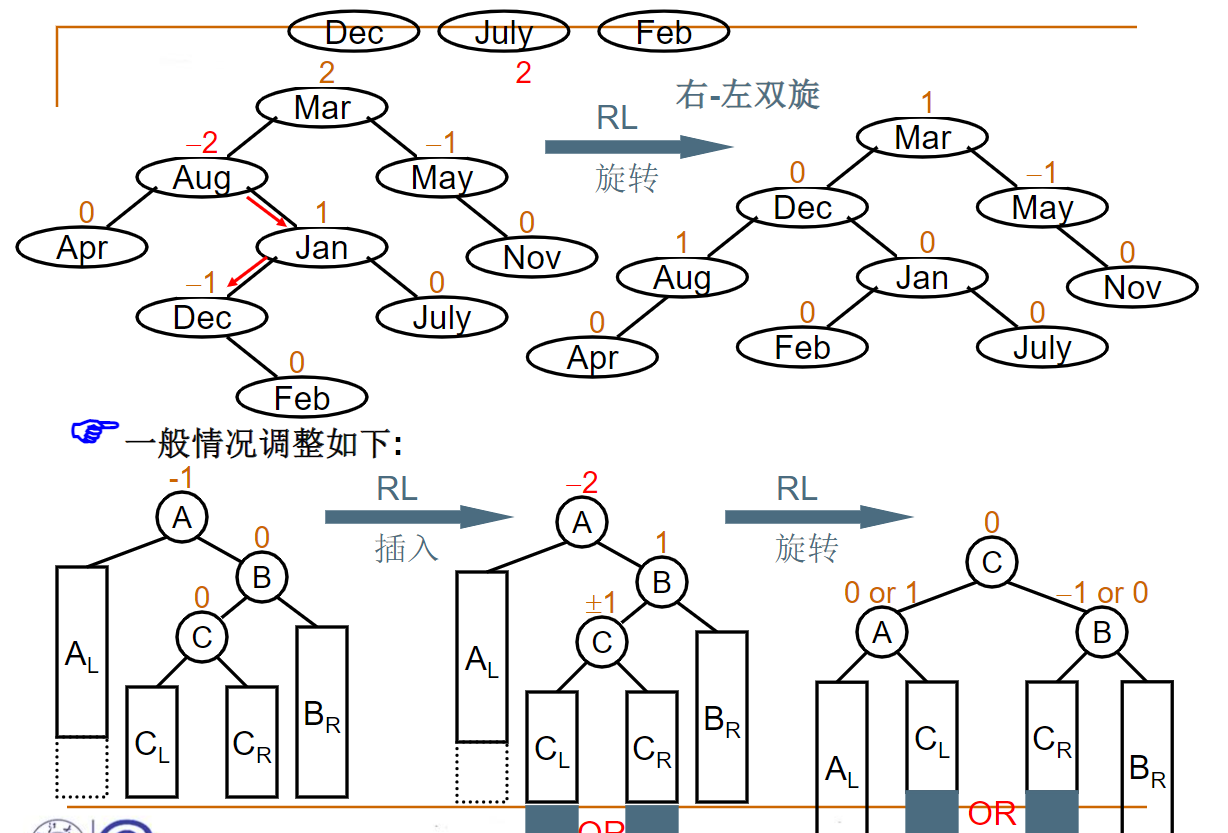
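A compact, self-contained sketch that exercises all three deletion cases and checks the result with an inorder traversal. It follows the same logic as `Delete` above but, as a simplifying assumption, silently ignores a missing key instead of printing a message; `inorder_keys` is a hypothetical helper.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct TNode *BinTree;
struct TNode {
    int Data;
    BinTree Left, Right;
};

BinTree Insert(BinTree t, int x) {
    if (!t) {
        t = malloc(sizeof *t);
        assert(t != NULL);
        t->Data = x;
        t->Left = t->Right = NULL;
    } else if (x < t->Data) {
        t->Left = Insert(t->Left, x);
    } else if (x > t->Data) {
        t->Right = Insert(t->Right, x);
    }
    return t;
}

BinTree FindMin(BinTree t) {
    while (t && t->Left) t = t->Left;
    return t;
}

BinTree Delete(BinTree t, int x) {
    if (!t) return NULL;                       /* key not found */
    if (x < t->Data)      t->Left = Delete(t->Left, x);
    else if (x > t->Data) t->Right = Delete(t->Right, x);
    else if (t->Left && t->Right) {            /* case (3): two children */
        t->Data = FindMin(t->Right)->Data;     /* copy the inorder successor */
        t->Right = Delete(t->Right, t->Data);  /* then remove the successor */
    } else {                                   /* cases (1) and (2) */
        BinTree child = t->Left ? t->Left : t->Right;
        free(t);
        return child;                          /* the child takes the node's place */
    }
    return t;
}

int inorder_keys(BinTree t, int out[], int len) {
    if (!t) return len;
    len = inorder_keys(t->Left, out, len);
    out[len++] = t->Data;
    return inorder_keys(t->Right, out, len);
}
```

Starting from the keys 50, 30, 70, 20, 40, 60, 80: deleting 20 is case (1); deleting 70 is case (3) (its successor 80 takes its place); deleting the root 50 is case (3) again (successor 60 becomes the root).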

6, Balanced binary tree

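A balanced binary tree is one in which, for every node, the heights of the left and right subtrees differ by at most 1.

Balance factor: BF(T) = hL - hR, where hL and hR are the heights of T's left and right subtrees; in a balanced binary tree, |BF(T)| ≤ 1 for every node T.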

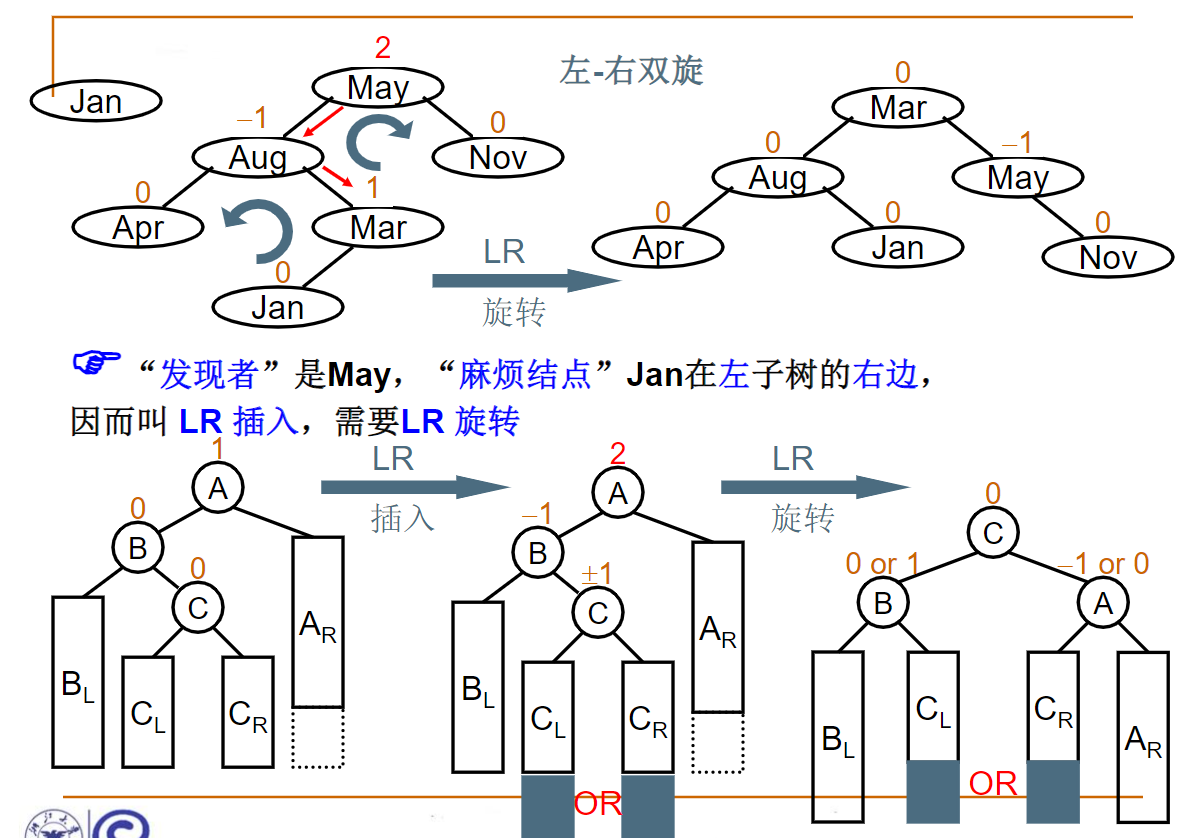
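A minimal sketch of the definition, with hypothetical helper names: `balance_factor` computes BF(T) = hL - hR, and `is_balanced` checks |BF| ≤ 1 at every node, since checking only the root is not enough. Heights are recomputed recursively here for clarity (quadratic time); an AVL tree instead stores the height in each node, as the code in 6.1 does.

```c
#include <assert.h>
#include <stddef.h>

typedef struct TNode *BinTree;
struct TNode {
    int Data;
    BinTree Left, Right;
};

/* Height of a subtree; an empty tree has height 0 in this sketch. */
int height(BinTree t) {
    if (!t) return 0;
    int hl = height(t->Left), hr = height(t->Right);
    return (hl > hr ? hl : hr) + 1;
}

/* BF(T) = hL - hR */
int balance_factor(BinTree t) {
    assert(t != NULL);
    return height(t->Left) - height(t->Right);
}

/* Balanced: |BF| <= 1 at every node, not just at the root. */
int is_balanced(BinTree t) {
    if (!t) return 1;
    int bf = balance_factor(t);
    return bf >= -1 && bf <= 1 && is_balanced(t->Left) && is_balanced(t->Right);
}
```

A three-node tree 2(1, 3) has BF 0 at the root; the chain 1 → 2 → 3 (each key the right child of the previous) has BF -2 at the root and is not balanced.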

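6.1 rebalancing a balanced binary tree

When an insertion makes some node A unbalanced (|BF(A)| = 2), the four cases are named after the path from A toward the newly inserted node:

RR: the new node is in the right subtree of A's right child; a right single rotation (SingleRightRotation) fixes it.

LL: the new node is in the left subtree of A's left child; a left single rotation (SingleLeftRotation) fixes it.

RL: the new node is in the left subtree of A's right child; a right-left double rotation (DoubleRightLeftRotation) fixes it.

LR: the new node is in the right subtree of A's left child; a left-right double rotation (DoubleLeftRightRotation) fixes it.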
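The `Insert` code below detects the case by comparing keys. A common alternative, sketched here under the assumption that heights are recomputed on the fly, reads the case from balance factors: if BF(A) = 2, the child on the heavy side decides between LL and LR; if BF(A) = -2, between RR and RL. `imbalance_case` is a hypothetical helper, not part of the notes' code.

```c
#include <assert.h>
#include <stddef.h>

typedef struct AVLNode *AVLTree;
struct AVLNode {
    int Data;
    AVLTree Left, Right;
};

static int height(AVLTree t) {
    if (!t) return 0;
    int hl = height(t->Left), hr = height(t->Right);
    return (hl > hr ? hl : hr) + 1;
}

static int bf(AVLTree t) { return height(t->Left) - height(t->Right); }

/* Names the rotation needed at an unbalanced node A (|BF(A)| == 2). */
const char *imbalance_case(AVLTree A) {
    int b = bf(A);
    assert(b == 2 || b == -2);
    if (b == 2)                                 /* left side too tall */
        return bf(A->Left) >= 0 ? "LL" : "LR";
    return bf(A->Right) <= 0 ? "RR" : "RL";     /* right side too tall */
}
```

For example, inserting 1, 2, 3 in that order gives an RR case at 1, while inserting 3, 1, 2 gives an LR case at 3.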

```c
#include <stdlib.h> /* malloc */

typedef int ElementType;  /* assumed key type */

typedef struct AVLNode *Position;
typedef Position AVLTree; /* AVL tree type */
struct AVLNode {
    ElementType Data; /* node data */
    AVLTree Left;     /* points to the left subtree */
    AVLTree Right;    /* points to the right subtree */
    int Height;       /* height of the tree rooted here */
};

int Max(int a, int b)
{
    return a > b ? a : b;
}

int GetHeight(AVLTree T) /* an empty tree has height 0 */
{
    return T ? T->Height : 0;
}

AVLTree SingleLeftRotation(AVLTree A)
{ /* LL case: A must have a left child B.
     Rotate A and B, update their heights, and return the new root B. */
    AVLTree B = A->Left;
    A->Left = B->Right;
    B->Right = A;
    A->Height = Max(GetHeight(A->Left), GetHeight(A->Right)) + 1;
    B->Height = Max(GetHeight(B->Left), A->Height) + 1;
    return B;
}

AVLTree SingleRightRotation(AVLTree A)
{ /* RR case (mirror image): A must have a right child B; returns the new root B. */
    AVLTree B = A->Right;
    A->Right = B->Left;
    B->Left = A;
    A->Height = Max(GetHeight(A->Left), GetHeight(A->Right)) + 1;
    B->Height = Max(GetHeight(B->Right), A->Height) + 1;
    return B;
}

AVLTree DoubleLeftRightRotation(AVLTree A)
{ /* LR case: A must have a left child B, and B must have a right child C.
     Two single rotations make C the new root. */
    A->Left = SingleRightRotation(A->Left); /* rotate B and C; C is returned */
    return SingleLeftRotation(A);           /* rotate A and C; C is returned */
}

AVLTree DoubleRightLeftRotation(AVLTree A)
{ /* RL case (mirror image): A must have a right child B, and B a left child C. */
    A->Right = SingleLeftRotation(A->Right);
    return SingleRightRotation(A);
}

AVLTree Insert(AVLTree T, ElementType X)
{ /* insert X into AVL tree T and return the rebalanced tree */
    if (!T) { /* inserting into an empty tree: create a one-node tree */
        T = (AVLTree)malloc(sizeof(struct AVLNode));
        T->Data = X;
        T->Height = 1; /* a single node has height 1 */
        T->Left = T->Right = NULL;
    }
    else if (X < T->Data) {
        T->Left = Insert(T->Left, X);   /* insert into the left subtree */
        if (GetHeight(T->Left) - GetHeight(T->Right) == 2) { /* unbalanced */
            if (X < T->Left->Data)
                T = SingleLeftRotation(T);      /* LL: left single rotation */
            else
                T = DoubleLeftRightRotation(T); /* LR: left-right double rotation */
        }
    }
    else if (X > T->Data) {
        T->Right = Insert(T->Right, X); /* insert into the right subtree */
        if (GetHeight(T->Left) - GetHeight(T->Right) == -2) { /* unbalanced */
            if (X > T->Right->Data)
                T = SingleRightRotation(T);     /* RR: right single rotation */
            else
                T = DoubleRightLeftRotation(T); /* RL: right-left double rotation */
        }
    }
    /* else X == T->Data: already present, nothing to insert */

    /* don't forget to update the tree height */
    T->Height = Max(GetHeight(T->Left), GetHeight(T->Right)) + 1;
    return T;
}
```

7, Huffman tree

Given N weights as the weights of N leaf nodes, build a binary tree. If the weighted path length of the tree is the minimum possible, the tree is called an optimal binary tree, also known as a Huffman tree. A Huffman tree has the shortest weighted path length, and nodes with larger weights are closer to the root.

1. Path and path length

In a tree, the sequence of branches leading downward from a node to one of its children or descendants is called a path. The number of branches on a path is called the path length. If the root is at level 1, the path length from the root to a node on level L is L-1.

2. Node weight and weighted path length

If a node in the tree is assigned a value with a certain meaning, this value is called the weight of the node. The weighted path length of a node is the product of the path length from the root node to the node and the weight of the node.

3. Weighted path length of tree

The weighted path length of the tree is specified as the sum of the weighted path lengths of all leaf nodes, which is recorded as WPL.
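A small worked example, with weights chosen purely for illustration. With $l_k$ the path length from the root to leaf $k$ and $w_k$ its weight,

$$
\mathrm{WPL} = \sum_{k=1}^{n} w_k\, l_k
$$

For four leaves with weights 7, 5, 2, 4: if all four sit at path length 2, then $\mathrm{WPL} = 2(7+5+2+4) = 36$; if instead 7 sits at path length 1, 5 at path length 2, and 2 and 4 at path length 3, then $\mathrm{WPL} = 7\cdot 1 + 5\cdot 2 + 2\cdot 3 + 4\cdot 3 = 35$. The second shape is what the construction in 7.1 produces for these weights (merge 2+4=6, then 5+6=11, then 7+11=18), so 35 is the minimum.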

7.1 construction of Huffman tree

Assuming n weights, the constructed Huffman tree has n leaf nodes. If n weights are set as w1, w2,..., wn respectively, the construction rules of Huffman tree are as follows:

(1) Take w1, w2,..., wn as a forest with n trees (each tree has only one node);

(2) From the forest, select the two trees whose roots have the smallest weights and merge them as the left and right subtrees of a new tree; the root weight of the new tree is the sum of the root weights of its two subtrees;

(3) Delete the two selected trees from the forest and add the new tree to the forest;

(4) Repeat steps (2) and (3) until there is only one tree left in the forest, which is the obtained Huffman tree.
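Steps (1) to (4) can be followed directly with an array of root weights, without building explicit nodes. Each merge creates an internal node whose weight counts once for every level its leaves sit below it, so the WPL equals the sum of all merged weights. A minimal sketch that uses a plain O(n^2) scan instead of a heap; `huffman_wpl` is a hypothetical name, and it overwrites the input array.

```c
#include <assert.h>

/* Returns the WPL of a Huffman tree for weights w[0..n-1] (n >= 1).
   The array is used as the forest of root weights and is modified. */
int huffman_wpl(int w[], int n) {
    assert(n >= 1);
    int wpl = 0;
    while (n > 1) {                         /* (4) until one tree remains */
        /* (2) find the two smallest root weights: a (smallest) and b */
        int a = 0, b = 1;
        if (w[b] < w[a]) { a = 1; b = 0; }
        for (int i = 2; i < n; i++) {
            if (w[i] < w[a]) { b = a; a = i; }
            else if (w[i] < w[b]) { b = i; }
        }
        int merged = w[a] + w[b];
        wpl += merged;
        /* (3) replace the two trees by the merged one: store it at a,
           move the last root into slot b, and shrink the forest */
        w[a] = merged;
        w[b] = w[n - 1];
        n--;
    }
    return wpl;
}
```

For weights 1, 2, 3, 4, 5 the merges are 3, 6, 9 and 15, so the WPL is 33; for 7, 5, 2, 4 it is 35.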

7.2 Heap

(used to implement the Huffman tree)

A heap is a special data structure in computer science. It is usually an array that can be viewed as a tree, and it always satisfies the following properties:

The value of every node is always not greater than (or always not less than) the value of its parent node;

A heap is always a complete binary tree.

A heap whose root holds the largest value is called a maximum heap (max heap, large root heap), and one whose root holds the smallest value is called a minimum heap (min heap, small root heap). Common heaps include the binary heap and the Fibonacci heap.

Although a heap is stored in a one-dimensional array, it is a nonlinear structure: viewed as a complete binary tree, each node has at most two direct successors.

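Formally, a sequence of n elements {k1, k2, ..., kn} is called a heap if and only if one of the following holds for every i = 1, 2, ..., ⌊n/2⌋ (the comparison with k_{2i+1} applies only when 2i+1 ≤ n):

$$
\begin{cases} k_i \ge k_{2i} \\ k_i \ge k_{2i+1} \end{cases}
\quad\text{(max heap)}
\qquad\text{or}\qquad
\begin{cases} k_i \le k_{2i} \\ k_i \le k_{2i+1} \end{cases}
\quad\text{(min heap)}
$$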

If the one-dimensional array holding this sequence is viewed as a complete binary tree, the heap condition says that the value of every non-terminal node is not greater than (or not less than) the values of its left and right children. Therefore, if the sequence {k1, k2, ..., kn} is a heap, the top element (the root of the complete binary tree) must be the minimum (or maximum) of the n elements.
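The max-heap condition can be checked directly from the index relation, using the same 1-based layout as the code below (slot 0 unused). A minimal sketch; `is_max_heap` is a hypothetical name.

```c
#include <assert.h>

/* Checks k[i] >= k[2i] and k[i] >= k[2i+1] for every non-terminal node
   i = 1 .. n/2 of the array k[1..n]. */
int is_max_heap(const int k[], int n) {
    for (int i = 1; i <= n / 2; i++) {
        if (k[i] < k[2 * i]) return 0;                          /* left child larger */
        if (2 * i + 1 <= n && k[i] < k[2 * i + 1]) return 0;    /* right child larger */
    }
    return 1;
}
```

`{_, 90, 70, 80, 30, 60, 10}` passes; changing the fourth element from 30 to 75 breaks the condition at node 2 (70 < 75).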

The following code implements a maximum heap; a minimum heap is analogous:

```c
#include <stdio.h>
#include <stdlib.h>
#include <stdbool.h>

typedef int ElementType;    /* assumed element type */

typedef struct HNode *Heap; /* heap type definition */
struct HNode {
    ElementType *Data; /* array storing the elements (Data[0] is a sentinel) */
    int Size;          /* number of elements currently in the heap */
    int Capacity;      /* maximum capacity of the heap */
};
typedef Heap MaxHeap; /* maximum heap */
typedef Heap MinHeap; /* minimum heap */

#define MAXDATA 1000 /* must be larger than every element that can appear in the heap; adjust to the data */

MaxHeap CreateHeap(int MaxSize)
{ /* create an empty maximum heap with capacity MaxSize */
    MaxHeap H = (MaxHeap)malloc(sizeof(struct HNode));
    H->Data = (ElementType *)malloc((MaxSize + 1) * sizeof(ElementType));
    H->Size = 0;
    H->Capacity = MaxSize;
    H->Data[0] = MAXDATA; /* sentinel: larger than every possible element */
    return H;
}
```

```c
bool IsFull(MaxHeap H)
{
    return (H->Size == H->Capacity);
}

bool Insert(MaxHeap H, ElementType X)
{ /* insert X into max heap H; H->Data[0] is the sentinel */
    int i;

    if (IsFull(H)) {
        printf("Maximum heap full\n");
        return false;
    }
    i = ++H->Size; /* i is the position of the new last element */
    /* percolate up: move smaller parents down; the sentinel stops the loop at the root */
    for (; H->Data[i / 2] < X; i /= 2)
        H->Data[i] = H->Data[i / 2];
    H->Data[i] = X; /* place X */
    return true;
}
```

```c
#define ERROR -1 /* error marker: should be a value that cannot appear in the heap; adjust to the data */

bool IsEmpty(MaxHeap H)
{
    return (H->Size == 0);
}

ElementType DeleteMax(MaxHeap H)
{ /* remove and return the largest element of max heap H */
    int Parent, Child;
    ElementType MaxItem, X;

    if (IsEmpty(H)) {
        printf("The maximum heap is empty\n");
        return ERROR;
    }

    MaxItem = H->Data[1]; /* the maximum is stored at the root */
    /* take the last element and percolate it down from the root */
    X = H->Data[H->Size--]; /* note: the heap size shrinks by one */
    for (Parent = 1; Parent * 2 <= H->Size; Parent = Child) {
        Child = Parent * 2;
        if ((Child != H->Size) && (H->Data[Child] < H->Data[Child + 1]))
            Child++; /* Child now indexes the larger of the two children */
        if (X >= H->Data[Child]) break; /* found the right position */
        else /* percolate X down */
            H->Data[Parent] = H->Data[Child];
    }
    H->Data[Parent] = X;

    return MaxItem;
}
```

```c
/*----------- Build a maximum heap -----------*/
void PercDown(MaxHeap H, int p)
{ /* percolate down: turn the subtree rooted at H->Data[p] into a max heap */
    int Parent, Child;
    ElementType X;

    X = H->Data[p]; /* value stored at the subtree root */
    for (Parent = p; Parent * 2 <= H->Size; Parent = Child) {
        Child = Parent * 2;
        if ((Child != H->Size) && (H->Data[Child] < H->Data[Child + 1]))
            Child++; /* Child now indexes the larger of the two children */
        if (X >= H->Data[Child]) break; /* found the right position */
        else /* percolate X down */
            H->Data[Parent] = H->Data[Child];
    }
    H->Data[Parent] = X;
}

void BuildHeap(MaxHeap H)
{ /* rearrange H->Data[] so that it satisfies the max-heap order;
     assumes all H->Size elements are already stored in H->Data[1..Size] */
    int i;

    /* start from the parent of the last node and work back to the root (node 1) */
    for (i = H->Size / 2; i > 0; i--)
        PercDown(H, i);
}
```
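To see BuildHeap and DeleteMax working together, here is a minimal self-contained sketch on a plain 1-based `int` array instead of the `HNode` struct (a simplification; `perc_down`, `build_max_heap` and `delete_max` are hypothetical names). Building the heap and then deleting the maximum repeatedly yields the elements in descending order.

```c
#include <assert.h>

/* Same percolate-down as PercDown, on a[1..n]. */
void perc_down(int a[], int n, int p) {
    int x = a[p], parent, child;
    for (parent = p; parent * 2 <= n; parent = child) {
        child = parent * 2;
        if (child != n && a[child] < a[child + 1])
            child++;                  /* larger child */
        if (x >= a[child]) break;     /* x fits here */
        a[parent] = a[child];         /* move the larger child up */
    }
    a[parent] = x;
}

/* BuildHeap: percolate down from the last parent back to the root. */
void build_max_heap(int a[], int n) {
    for (int i = n / 2; i > 0; i--)
        perc_down(a, n, i);
}

/* DeleteMax: return a[1], move the last element to the root, percolate it down. */
int delete_max(int a[], int *n) {
    assert(*n >= 1);
    int max = a[1];
    a[1] = a[(*n)--];
    if (*n > 0)
        perc_down(a, *n, 1);
    return max;
}
```

Starting from `{_, 30, 10, 80, 60, 90, 70}`, `build_max_heap` puts 90 at the root, and six calls to `delete_max` return 90, 80, 70, 60, 30, 10.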

7.3 implementation of Huffman tree

The usual way to implement Huffman coding is to build a binary tree of nodes. The nodes can be stored in an array whose size depends on the number of symbols n. Nodes are either terminal nodes (leaf nodes) or non-terminal nodes (internal nodes).

Initially all nodes are terminal nodes, and each has three fields:

1. Symbol

2. Weight (probability or frequency)

3. Link to its parent node

Non-terminal (internal) nodes instead contain:

1. Weight (probability or frequency)

2. Links to its two child nodes

3. Link to its parent node

By convention, '0' means following the left child and '1' means following the right child. The finished binary tree has n terminal nodes and n-1 non-terminal nodes, and the resulting codes have optimal lengths.

During construction each node carries a weight (probability or frequency). The two nodes with the smallest weights are combined into a new node whose weight is the sum of their weights, and this step repeats until only one node is left.

There are many ways to implement a Huffman tree. A simple one uses a priority queue in which lower weights get higher priority. The algorithm is as follows:

1. Add the n terminal nodes to the priority queue; node i has priority Pi, 1 ≤ i ≤ n.

2. While the queue contains more than one node:

(1) remove the two nodes with the smallest Pi from the queue, i.e. remove the minimum twice in a row;

(2) create a new node as the parent of the two nodes removed in (1); its weight is the sum of their weights;

(3) add the node created in (2) to the priority queue.

3. The last node left in the priority queue is the root of the tree.

The time complexity of this algorithm is O(n log n): with n terminal nodes the tree has 2n-1 nodes in total, there are n-1 merge rounds, and with a priority queue each round costs O(log n).

```c
#include <stdlib.h> /* malloc */

typedef struct TreeNode *HuffmanTree;
struct TreeNode {
    int Weight;
    HuffmanTree Left, Right;
};

/* Assumes a min heap whose elements are HuffmanTree pointers ordered by Weight,
   with BuildMinHeap, DeleteMin and Insert as the min-heap counterparts of the
   max-heap routines above. */
HuffmanTree Huffman(MinHeap H)
{ /* assumes H->Size single-node trees (with their weights) are already in H */
    int i, N;
    HuffmanTree T;

    BuildMinHeap(H);  /* order the elements into a min heap by Weight */
    N = H->Size;      /* save the count: H->Size shrinks by one per merge */
    for (i = 1; i < N; i++) { /* merge N-1 times */
        T = malloc(sizeof(struct TreeNode)); /* create a new node */
        T->Left = DeleteMin(H);  /* smallest tree becomes the left child */
        T->Right = DeleteMin(H); /* next smallest becomes the right child */
        T->Weight = T->Left->Weight + T->Right->Weight; /* new weight */
        Insert(H, T);            /* put the merged tree back into the heap */
    }
    T = DeleteMin(H);
    return T;
}
```
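A self-contained sketch of the whole procedure, assuming a small hand-written min heap of `HuffmanTree` pointers keyed by `Weight` (the helper names `perc_down`, `delete_min`, `insert` and `wpl`, and this `Huffman(w, n)` signature, are hypothetical). The merge loop runs exactly n-1 times, using a count fixed before the loop starts.

```c
#include <assert.h>
#include <stdlib.h>

typedef struct TreeNode *HuffmanTree;
struct TreeNode {
    int Weight;
    HuffmanTree Left, Right;
};

typedef struct {           /* min heap of trees, Data[1..Size] */
    HuffmanTree *Data;
    int Size;
} MinHeap;

static void perc_down(MinHeap *H, int p) {
    HuffmanTree x = H->Data[p];
    int parent, child;
    for (parent = p; parent * 2 <= H->Size; parent = child) {
        child = parent * 2;
        if (child != H->Size && H->Data[child + 1]->Weight < H->Data[child]->Weight)
            child++;                                  /* smaller child */
        if (x->Weight <= H->Data[child]->Weight) break;
        H->Data[parent] = H->Data[child];
    }
    H->Data[parent] = x;
}

static HuffmanTree delete_min(MinHeap *H) {
    HuffmanTree min = H->Data[1];
    H->Data[1] = H->Data[H->Size--];
    if (H->Size > 0) perc_down(H, 1);
    return min;
}

static void insert(MinHeap *H, HuffmanTree t) {
    int i = ++H->Size;
    for (; i > 1 && H->Data[i / 2]->Weight > t->Weight; i /= 2)
        H->Data[i] = H->Data[i / 2];                  /* percolate up */
    H->Data[i] = t;
}

/* Builds a Huffman tree from the n >= 1 weights in w[]. */
HuffmanTree Huffman(const int w[], int n) {
    assert(n >= 1);
    MinHeap H = { malloc((n + 1) * sizeof(HuffmanTree)), n };
    assert(H.Data != NULL);
    for (int i = 1; i <= n; i++) {                    /* one single-node tree per weight */
        HuffmanTree leaf = malloc(sizeof *leaf);
        assert(leaf != NULL);
        leaf->Weight = w[i - 1];
        leaf->Left = leaf->Right = NULL;
        H.Data[i] = leaf;
    }
    for (int i = n / 2; i > 0; i--)                   /* build the min heap */
        perc_down(&H, i);
    for (int i = 1; i < n; i++) {                     /* exactly n - 1 merges */
        HuffmanTree t = malloc(sizeof *t);
        assert(t != NULL);
        t->Left = delete_min(&H);
        t->Right = delete_min(&H);
        t->Weight = t->Left->Weight + t->Right->Weight;
        insert(&H, t);
    }
    HuffmanTree root = delete_min(&H);
    free(H.Data);
    return root;
}

/* WPL: sum over leaves of weight * path length (call with depth 0). */
int wpl(HuffmanTree t, int depth) {
    if (!t->Left && !t->Right) return t->Weight * depth;
    return wpl(t->Left, depth + 1) + wpl(t->Right, depth + 1);
}
```

For weights 1, 2, 3, 4, 5 the root weight is 15 and the WPL is 33. Ties between equal weights may change the tree's shape, but not its WPL.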

It has been almost a month since I started learning data structures, and I have to admit it is still a bit difficult for me, especially the part on graphs. All I can say is: keep going! There is only one month left before the 2022 postgraduate entrance exam; candidates for 2023 still have time, so keep going!

These study notes on trees run to 15,000 words, so please give them a like. Thank you. Most of the pictures come from the Zhejiang University data structures MOOC, which is the course I studied on my own.

Finally, here is my daily dose of motivation:

What is failure? Nothing, just one step closer to success. What is success? It is what remains after you have walked every road that leads to failure: the one road left is the road to success.

Alas, others can choose again if they fail, but I may be left with nothing if I fail even once. Never mind. Keep going!

Finally, please give this post a like!