Data set comes from kaggle classic competition data set

I. purpose

According to the information in the data set, we use python machine learning to predict the survival of Titanic passengers.

2, Dataset

My dataset has three formats: test, train and genderclassmodel, all in csv format

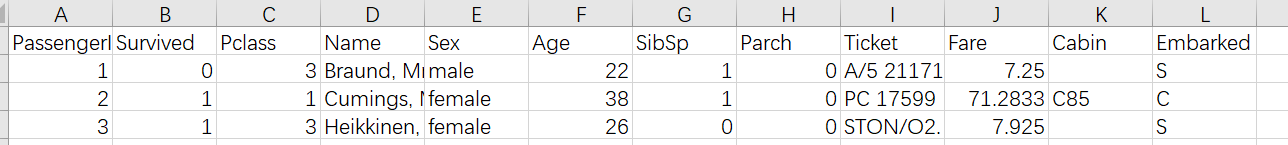

Fields in test and train data sets:

From left to right, the number of passengers, whether they are alive or not, bin, name, gender, age, number of relatives of the same generation on board, number of passengers with parents or children, ticket number, travel expenses, cabin number, port of embarkation (C = Cherbourg, Q = Queenstown, S = Southampton)

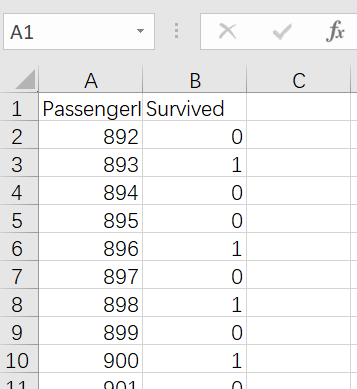

The genderclassmodel is the actual survival result of the test set:

3, Training ideas

Through the observation and experience of the data set, in the data set, such as passenger name, ticket number and number, these attributes have nothing to do with the result obviously and are useless information, which can be removed first. There are many null values in the data. Missing values can also be processed first. For the sample of cabin number, it is too small to delete this advanced feature.

We used KNN and random forest for training.

4, Data cleaning and feature processing`

Introduce training set and test set, delete columns of name, bed ticket number and cabin number

import numpy as np import pandas as pd #1 \ data import df_train=pd.read_csv('data/titanic/train.csv') df_train=df_train.drop(['Name','Ticket','Cabin'],axis=1) df_test=pd.read_csv('data/titanic/test.csv') df_test=df_test.drop(['Name','Ticket','Cabin'],axis=1)

When handling the missing values, age and fare have more missing values. I use the average value to fill in, but there are two missing values at the port of embarkation, so they are deleted directly.

#2. Missing value handling df_train['Age']=df_train['Age'].fillna(df_train['Age'].mean()) df_train['Fare']=df_train['Fare'].fillna(df_train['Fare'].mean()) df_train=df_train.dropna() df_test['Age']=df_test['Age'].fillna(df_test['Age'].mean()) df_test['Fare']=df_test['Fare'].fillna(df_test['Fare'].mean())

Normalization and standardization of eigenvalues

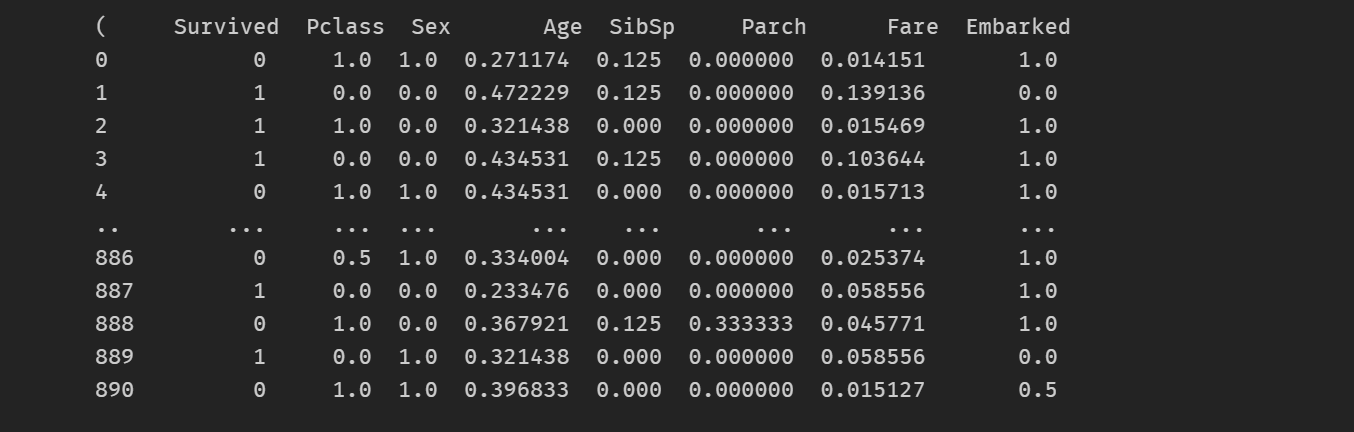

Since the gender and port of embarkation are string types, we need to convert them into values, replacing them with 0, 1, 2. I use normalization to convert other values into numbers between 0-1.

#3. Feature processing from sklearn.preprocessing import MinMaxScaler,StandardScaler from sklearn.preprocessing import LabelEncoder,OneHotEncoder from sklearn.preprocessing import LabelEncoder,OneHotEncoder scaler_list=[Age,Fare,Pclass,SibSp,Parch] column_list=['Age','Fare','Pclass','SibSp','Parch'] for i in range(len(scaler_list)): if not scaler_list[i]: df_train[column_list[i]]=MinMaxScaler().fit_transform(df_train[column_list[i]].values.reshape(-1,1)).reshape(1,-1)[0] df_test[column_list[i]]=MinMaxScaler().fit_transform(df_test[column_list[i]].values.reshape(-1,1)).reshape(1,-1)[0] else: df_train[column_list[i]]=StandardScaler().fit_transform(df_train[column_list[i]].values.reshape(-1,1)).reshape(1,-1)[0] df_test[column_list[i]]=StandardScaler().fit_transform(df_test[column_list[i]].values.reshape(-1,1)).reshape(1,-1)[0] scaler_list=[Sex,Embarked] column_list=['Sex','Embarked'] for i in range(len(scaler_list)): if scaler_list[i]: df_train[column_list[i]]=LabelEncoder().fit_transform(df_train[column_list[i]]) df_train[column_list[i]]=MinMaxScaler().fit_transform(df_train[column_list[i]].values.reshape(-1,1)).reshape(1,-1)[0] df_test[column_list[i]]=LabelEncoder().fit_transform(df_test[column_list[i]]) df_test[column_list[i]]=MinMaxScaler().fit_transform(df_test[column_list[i]].values.reshape(-1,1)).reshape(1,-1)[0] return df_train,test def main(): print(titanic_processing()) if __name__=='__main__': main()

Display result

After the features are processed, you can start modeling

Here, I choose three models for training, KNN, SVM and random forest. This model is the judgment of survival, so we choose classification model for prediction.

What's worth mentioning here is that in the data I get, the training set contains the annotation Survived. First, we need to remove it. The first step is to separate the feature part and the annotation part of the training set and the test set.

y_train=df_train['Survived'].values x_train=df_train.drop('Survived',axis=1).values y_class=pd.read_csv('data/titanic/genderclassmodel.csv') y_test=y_class['Survived'].values x_test=df_test.values

Then we start modeling. We need to select three models and compare the scores of each model, so I created a list of models.

from sklearn.ensemble import RandomForestClassifier,AdaBoostClassifier from sklearn.svm import SVC from sklearn.neighbors import NearestNeighbors,KNeighborsClassifier from sklearn.metrics import accuracy_score,recall_score,f1_score models=[] models.append(('KNN',KNeighborsClassifier(n_neighbors=3))) models.append(('SVM',SVC(C=100))) models.append(('Ramdomforest',RandomForestClassifier()))

Then model fitting and prediction are carried out.

for clf_name,clf in models: clf.fit(x_train,y_train) xy_list=[(x_train,y_train),(x_test,y_test)] for i in range(len(xy_list)): x_part=xy_list[i][0] y_part=xy_list[i][1] y_pred=clf.predict(x_part) print(i) print(clf_name,'ACC:',accuracy_score(y_part,y_pred)) print(clf_name,'REC:',recall_score(y_part,y_pred)) print(clf_name,'f1:',f1_score(y_part,y_pred))

The complete code is as follows:

import numpy as np import pandas as pd #1 \ data import def titanic_processing(Age=False,Fare=False,Pclass=False,SibSp=False,Parch=False,Sex=True,Embarked=True): df_train=pd.read_csv('data/titanic/train.csv') df_train=df_train.drop(['Name','Ticket','Cabin','PassengerId'],axis=1) df_test=pd.read_csv('data/titanic/test.csv') df_test=df_test.drop(['Name','Ticket','Cabin','PassengerId'],axis=1) #2. Missing value handling df_train['Age']=df_train['Age'].fillna(df_train['Age'].mean()) df_train['Fare']=df_train['Fare'].fillna(df_train['Fare'].mean()) df_train=df_train.dropna() df_test['Age']=df_test['Age'].fillna(df_test['Age'].mean()) df_test['Fare']=df_test['Fare'].fillna(df_test['Fare'].mean()) #3. Feature processing from sklearn.preprocessing import MinMaxScaler,StandardScaler from sklearn.preprocessing import LabelEncoder,OneHotEncoder from sklearn.preprocessing import LabelEncoder,OneHotEncoder scaler_list=[Age,Fare,Pclass,SibSp,Parch] column_list=['Age','Fare','Pclass','SibSp','Parch'] for i in range(len(scaler_list)): if not scaler_list[i]: df_train[column_list[i]]=MinMaxScaler().fit_transform(df_train[column_list[i]].values.reshape(-1,1)).reshape(1,-1)[0] df_test[column_list[i]]=MinMaxScaler().fit_transform(df_test[column_list[i]].values.reshape(-1,1)).reshape(1,-1)[0] else: df_train[column_list[i]]=StandardScaler().fit_transform(df_train[column_list[i]].values.reshape(-1,1)).reshape(1,-1)[0] df_test[column_list[i]]=StandardScaler().fit_transform(df_test[column_list[i]].values.reshape(-1,1)).reshape(1,-1)[0] scaler_list=[Sex,Embarked] column_list=['Sex','Embarked'] for i in range(len(scaler_list)): if scaler_list[i]: df_train[column_list[i]]=LabelEncoder().fit_transform(df_train[column_list[i]]) df_train[column_list[i]]=MinMaxScaler().fit_transform(df_train[column_list[i]].values.reshape(-1,1)).reshape(1,-1)[0] df_test[column_list[i]]=LabelEncoder().fit_transform(df_test[column_list[i]]) df_test[column_list[i]]=MinMaxScaler().fit_transform(df_test[column_list[i]].values.reshape(-1,1)).reshape(1,-1)[0] return df_train,df_test def titanic_model(df_train,df_test): y_train=df_train['Survived'].values x_train=df_train.drop('Survived',axis=1).values y_class=pd.read_csv('data/titanic/genderclassmodel.csv') y_test=y_class['Survived'].values x_test=df_test.values from sklearn.ensemble import RandomForestClassifier,AdaBoostClassifier from sklearn.svm import SVC from sklearn.neighbors import NearestNeighbors,KNeighborsClassifier from sklearn.metrics import accuracy_score,recall_score,f1_score models=[] models.append(('KNN',KNeighborsClassifier(n_neighbors=3))) models.append(('SVM',SVC(C=100))) models.append(('Ramdomforest',RandomForestClassifier())) for clf_name,clf in models: clf.fit(x_train,y_train) xy_list=[(x_train,y_train),(x_test,y_test)] for i in range(len(xy_list)): x_part=xy_list[i][0] y_part=xy_list[i][1] y_pred=clf.predict(x_part) print(i) print(clf_name,'ACC:',accuracy_score(y_part,y_pred)) print(clf_name,'REC:',recall_score(y_part,y_pred)) print(clf_name,'f1:',f1_score(y_part,y_pred)) def main(): df_train,df_test=titanic_processing() titanic_model(df_train,df_test) if __name__=='__main__': main()

Let's look at the evaluation results:

0 KNN ACC: 0.875140607424072 KNN REC: 0.8 KNN f1: 0.8305343511450382 1 KNN ACC: 0.8181818181818182 KNN REC: 0.8156028368794326 KNN f1: 0.7516339869281047 0 SVM ACC: 0.84251968503937 SVM REC: 0.6764705882352942 SVM f1: 0.7666666666666666 1 SVM ACC: 0.8779904306220095 SVM REC: 0.8014184397163121 SVM f1: 0.8158844765342961 0 Ramdomforest ACC: 0.9820022497187851 Ramdomforest REC: 0.9647058823529412 Ramdomforest f1: 0.9761904761904762 1 Ramdomforest ACC: 0.7990430622009569 Ramdomforest REC: 0.6950354609929078 Ramdomforest f1: 0.7

0 for training set, 1 for test set.

In general, the results of KNN are better than those of the other two. The fitting effect of random forest training set is very good, but the test set is not satisfactory.

Summary:

We are familiar with the basic process of data mining and machine learning through the actual combat of the survival prediction project of Titanic. Of course, machine learning is a very deep topic, and we need to continue to study hard to understand the algorithm principle of various models, so as to select the appropriate model for analysis in the specific project.