Background knowledge

In deep learning, data preprocessing takes up a large part of the training process, so knowing how to load data from different sources is particularly important. Following the data loading guide in the official TensorFlow 2.0 documentation, this post shows how to load our own images together with their labels.

Test environment

- Operating system: Windows 10

- Deep learning framework: TensorFlow 2.0 or later

- IDE: PyCharm

Introduction to the custom data format

Because my interest is in computer vision, I use image classification as the example throughout.

The demo data can be downloaded here: Demo data download

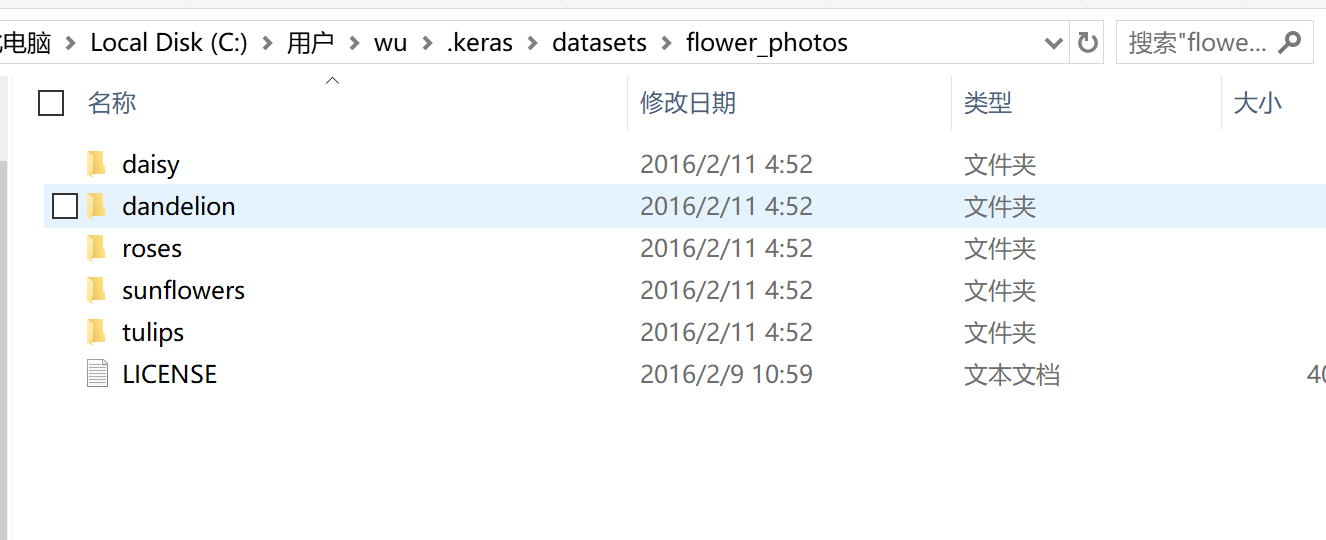

Let's take a quick look at how the data is stored. The directory structure is as follows:

The name of each image's parent directory is its class label, and each folder contains the images belonging to that class. Once the data is prepared, we can move on to loading it.
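As a minimal sketch of this convention, the snippet below builds a tiny throwaway directory tree in a temporary folder and recovers each file's label from the name of its parent folder, the same rule the loading code below relies on. The class names `cats` and `dogs` and the file names are hypothetical placeholders, not part of the demo data.

```python
import pathlib
import tempfile

# Build a toy layout: <root>/<class_name>/<image files>
# (class and file names are hypothetical placeholders)
root = pathlib.Path(tempfile.mkdtemp())
for class_name, files in {"cats": ["a.jpg", "b.jpg"], "dogs": ["c.jpg"]}.items():
    class_dir = root / class_name
    class_dir.mkdir()
    for file_name in files:
        (class_dir / file_name).touch()  # empty placeholder files

# The label of each image is simply the name of its parent directory
labels = {path.name: path.parent.name for path in sorted(root.glob("*/*"))}
print(labels)  # {'a.jpg': 'cats', 'b.jpg': 'cats', 'c.jpg': 'dogs'}
```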

Custom data loading

The TensorFlow 2.0 data loading functions used here are:

```python
import tensorflow as tf

AUTOTUNE = tf.data.experimental.AUTOTUNE
# `tensors`, `function`, `buffer_size` and `BATCH_SIZE` are placeholders here;
# the steps below fill in concrete values.

# Build a dataset whose elements are slices of the input tensors along the
# first dimension; iterating over it yields one slice at a time
ds = tf.data.Dataset.from_tensor_slices(tensors)
# Apply a function to every element (similar to Python's built-in map)
ds = ds.map(function)
# Shuffle using a buffer of buffer_size elements (filling the buffer takes a
# moment at the start), then repeat the dataset indefinitely.
# Deprecated in TF 2.x in favor of ds.shuffle(buffer_size).repeat()
ds = ds.apply(tf.data.experimental.shuffle_and_repeat(buffer_size=buffer_size))
# Combine consecutive elements into batches of BATCH_SIZE
ds = ds.batch(BATCH_SIZE)
# Prepare upcoming batches in the background while the current one is consumed;
# AUTOTUNE lets tf.data choose the buffer size
ds = ds.prefetch(buffer_size=AUTOTUNE)
```
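To make the role of each call concrete, here is a small runnable sketch on toy integer data; the values, the squaring function and the batch size of 4 are made up for illustration. It uses `ds.shuffle(...).repeat()`, the replacement named in the deprecation notice for `shuffle_and_repeat`, so it also runs on newer TensorFlow releases.

```python
import tensorflow as tf

AUTOTUNE = tf.data.experimental.AUTOTUNE

# Toy inputs: 6 "samples" (plain integers) and their labels (parity of the sample)
features = tf.range(6)
labels = tf.constant([0, 1, 0, 1, 0, 1])

# One element per row: (feature, label)
ds = tf.data.Dataset.from_tensor_slices((features, labels))
# map: apply a function to every element (here: square the feature, keep the label)
ds = ds.map(lambda x, y: (x * x, y))
# Shuffle with a buffer covering all 6 elements, then repeat forever
ds = ds.shuffle(buffer_size=6).repeat()
# Group into batches of 4 and prefetch in the background
ds = ds.batch(4).prefetch(buffer_size=AUTOTUNE)

x_batch, y_batch = next(iter(ds))
print(x_batch.shape, y_batch.shape)  # (4,) (4,)
```

Because `repeat()` comes before `batch()`, the stream never runs out, so every batch is full; shuffling moves whole `(feature, label)` pairs, so each label still matches its feature.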

With these functions introduced, the loading procedure is:

- Collect the path and label of every image and pass them to `ds = tf.data.Dataset.from_tensor_slices(...)`

- Use `ds.map` to add image reading and preprocessing (decoding, resizing, normalization, etc.)

- Shuffle the resulting data and set the batch size

- Fetch the data

Preset parameters

```python
import tensorflow as tf

# Let tf.data tune buffer sizes and parallelism at runtime
AUTOTUNE = tf.data.experimental.AUTOTUNE
```

Get the image paths and labels, and build the dataset object ds

```python
import pathlib
import random

# data_root is the directory where you downloaded and extracted the images.
# A raw string keeps the backslashes in the Windows path from being read as escapes.
data_root = r"C:\Users\wu\.keras\datasets\flower_photos"
# Wrap the string in a pathlib.Path object
data_root = pathlib.Path(data_root)

# Path objects for all images (files inside each class folder)
all_image_paths = list(data_root.glob("*/*"))
# Convert them to strings
all_image_paths = [str(path) for path in all_image_paths]
# Shuffle all paths
random.shuffle(all_image_paths)
# Number of images
image_count = len(all_image_paths)

# Class names = names of the class sub-directories, sorted alphabetically
label_names = sorted(item.name for item in data_root.glob("*/") if item.is_dir())
# Map each class name to an integer index
label_to_index = dict((name, index) for index, name in enumerate(label_names))
# Integer label of every image, taken from its parent folder name
all_image_labels = [label_to_index[pathlib.Path(path).parent.name] for path in all_image_paths]

# Build the dataset object ds from (path, label) pairs
ds = tf.data.Dataset.from_tensor_slices((all_image_paths, all_image_labels))
```
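To see what `from_tensor_slices` produces from the two Python lists, the sketch below uses two hypothetical paths and labels in place of `all_image_paths` and `all_image_labels`. Each element of the resulting dataset is one `(path, label)` pair of scalar tensors, with the path stored as a `tf.string`.

```python
import tensorflow as tf

# Hypothetical stand-ins for all_image_paths / all_image_labels
paths = ["flower_photos/daisy/1.jpg", "flower_photos/roses/2.jpg"]
labels = [0, 1]

ds = tf.data.Dataset.from_tensor_slices((paths, labels))

# Each element is one (path, label) pair; paths come back as bytes
pairs = [(path.numpy().decode(), int(label)) for path, label in ds]
print(pairs)
# [('flower_photos/daisy/1.jpg', 0), ('flower_photos/roses/2.jpg', 1)]
```

Both lists must have the same length, because slicing happens along their first dimension.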

Image reading and preprocessing

```python
# Decode and preprocess a raw JPEG byte string
def preprocess_image(image):
    image = tf.image.decode_jpeg(image, channels=3)
    image = tf.image.resize(image, [192, 192])
    image /= 255.0  # normalize pixel values to [0, 1]
    return image

# Read the file, then preprocess it
def load_and_preprocess_image(path):
    image = tf.io.read_file(path)
    return preprocess_image(image)

# Read + preprocess the image and pass its label through unchanged
def load_and_preprocess_from_path_label(path, label):
    return load_and_preprocess_image(path), label

# Apply reading/preprocessing to every (path, label) element of ds
image_label_ds = ds.map(load_and_preprocess_from_path_label)
```
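A quick way to sanity-check `preprocess_image` without the real dataset is to feed it JPEG bytes generated in memory, standing in for the output of `tf.io.read_file`. In the sketch below, the random pixels and the 100×80 input size are arbitrary choices; it checks that the output is a 192×192×3 float tensor with values in [0, 1].

```python
import tensorflow as tf

# Same preprocessing as above: decode -> resize to 192x192 -> scale to [0, 1]
def preprocess_image(image):
    image = tf.image.decode_jpeg(image, channels=3)
    image = tf.image.resize(image, [192, 192])
    image /= 255.0
    return image

# Synthetic 100x80 RGB image encoded as JPEG bytes (arbitrary test input)
raw = tf.random.uniform([100, 80, 3], maxval=256, dtype=tf.int32)
jpeg_bytes = tf.io.encode_jpeg(tf.cast(raw, tf.uint8))

image = preprocess_image(jpeg_bytes)
print(image.shape)  # (192, 192, 3)
```

`tf.image.resize` returns `float32`, which is why the in-place division by 255.0 works without an explicit cast.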

Shuffle the data and set the batch size

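```python
BATCH_SIZE = 32  # example value; adjust to your memory budget

# Shuffle with a buffer covering the whole dataset, then repeat indefinitely.
# Deprecated in TF 2.x; equivalent: image_label_ds.shuffle(image_count).repeat()
ds = image_label_ds.apply(tf.data.experimental.shuffle_and_repeat(buffer_size=image_count))
# Group elements into batches
ds = ds.batch(BATCH_SIZE)
# Let tf.data prepare upcoming batches in the background
ds = ds.prefetch(buffer_size=AUTOTUNE)
```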
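To see why `buffer_size=image_count` matters, here is a pure-Python simulation of a buffered shuffle: fill a buffer of `buffer_size` elements, emit a random element from it, and refill with the next input element. It is an illustration of the idea, not TensorFlow's actual implementation, and the function name `buffered_shuffle` is hypothetical.

```python
import random

def buffered_shuffle(items, buffer_size, seed=0):
    """Yield items in buffered-shuffle order (illustrative simulation)."""
    rng = random.Random(seed)
    buffer = []
    for item in items:
        buffer.append(item)
        if len(buffer) == buffer_size:
            # Emit a random element from the full buffer; the next input refills it
            yield buffer.pop(rng.randrange(len(buffer)))
    # Input exhausted: drain the remaining buffer in random order
    while buffer:
        yield buffer.pop(rng.randrange(len(buffer)))

data = list(range(10))
small = list(buffered_shuffle(data, buffer_size=2))
full = list(buffered_shuffle(data, buffer_size=len(data)))
print(small)
print(full)
```

With a buffer of 2, the element at output position j can be at most input element j + 1, so the order stays close to the original; a buffer of 1 leaves it unchanged. A buffer as large as the dataset can produce any permutation, at the cost of holding every element (here, every path/label pair) in memory.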

Get the data

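```python
# Rescale images from [0, 1] to [-1, 1]; labels pass through unchanged
def change_range(image, label):
    return 2 * image - 1, label

keras_ds = ds.map(change_range)
# Take one batch of images and labels from the dataset
image_batch, label_batch = next(iter(keras_ds))
```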
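Since `preprocess_image` already scaled pixels to [0, 1], `change_range` applies the linear map x ↦ 2x − 1, which sends 0 to −1, 0.5 to 0 and 1 to 1. A quick check on plain floats (the label value 3 is arbitrary):

```python
def change_range(image, label):
    # Linear map from [0, 1] to [-1, 1]; the label passes through unchanged
    return 2 * image - 1, label

for pixel in (0.0, 0.5, 1.0):
    print(change_range(pixel, label=3))
# (-1.0, 3)
# (0.0, 3)
# (1.0, 3)
```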

At this point, the image data can be read and fed into a neural network.
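As a minimal sketch of that last step, the snippet below passes a dataset shaped like `keras_ds` to `model.fit`. It uses synthetic random images and a deliberately tiny hypothetical model rather than the flower data. Because the pipeline repeats indefinitely, `fit` needs `steps_per_epoch` to know where an epoch ends; for the real data, something like `image_count // BATCH_SIZE` would correspond to roughly one pass over the images.

```python
import tensorflow as tf

# Synthetic stand-in for keras_ds: 8 random 192x192 RGB images in [-1, 1], 2 classes
images = tf.random.uniform([8, 192, 192, 3], minval=-1.0, maxval=1.0)
labels = tf.constant([0, 1] * 4)
keras_ds = tf.data.Dataset.from_tensor_slices((images, labels)).shuffle(8).repeat().batch(4)

# Deliberately tiny model, only to show that the dataset plugs into fit()
model = tf.keras.Sequential([
    tf.keras.Input(shape=(192, 192, 3)),
    tf.keras.layers.Conv2D(4, 3, strides=4, activation="relu"),
    tf.keras.layers.GlobalAveragePooling2D(),
    tf.keras.layers.Dense(2),
])
model.compile(
    optimizer="adam",
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=["accuracy"],
)

# The dataset repeats forever, so steps_per_epoch defines the epoch length
history = model.fit(keras_ds, epochs=1, steps_per_epoch=2, verbose=0)
print(sorted(history.history))
```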