The LRU eviction policy in Redis looks only at when a key was last accessed. A one-off traversal of the keyspace makes many keys that will not be accessed again look recent, so they stay in memory while data that is about to be needed gets evicted.

For this reason, LFU was introduced in Redis 4.0.0. LFU builds on LRU: in addition to time, it also takes the number of accesses into account.

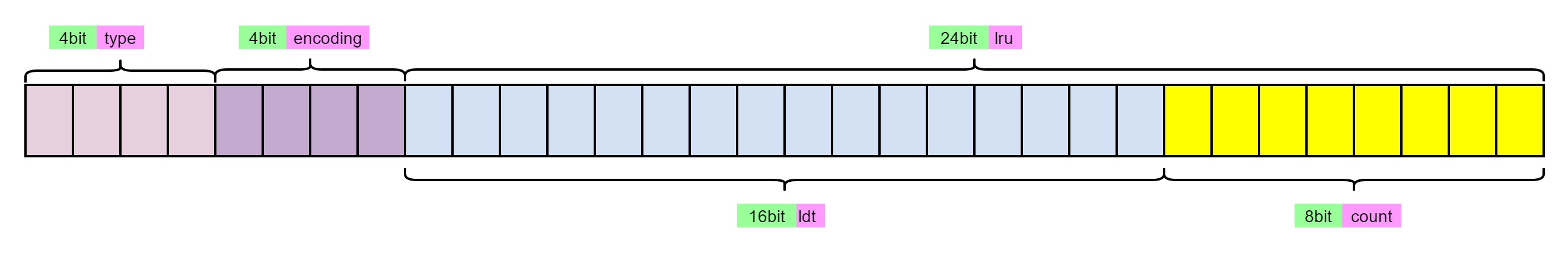

typedef struct redisObject {

unsigned type:4;

unsigned encoding:4;

unsigned lru:LRU_BITS; /* LRU time (relative to global lru_clock) or

* LFU data (least significant 8 bits frequency

* and most significant 16 bits decrement time). */

int refcount;

void *ptr;

} robj;

The original 24-bit lru field is split into two parts, ldt and count. ldt (the most significant 16 bits) stores the last decrement time in minutes, while count (the least significant 8 bits) stores the access frequency counter.

Because count is only 8 bits wide, its maximum value is 255, so it cannot be incremented by one on every access. Instead, it is incremented probabilistically.
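As a minimal sketch of this layout, the helpers below pack and unpack the two parts with the same shifts and masks that the Redis code applies inline. The names lfu_pack, lfu_ldt and lfu_count are hypothetical and do not exist in Redis.

```c
#include <stdint.h>

/* Hypothetical helpers: Redis performs these shifts and masks inline. */

/* Pack a 16-bit minute timestamp and an 8-bit counter into 24 bits. */
static uint32_t lfu_pack(uint32_t ldt, uint32_t count) {
    return ((ldt & 65535u) << 8) | (count & 255u);
}

/* Most significant 16 bits: last decrement time in minutes. */
static uint32_t lfu_ldt(uint32_t lru) {
    return (lru >> 8) & 65535u;
}

/* Least significant 8 bits: logarithmic access counter. */
static uint32_t lfu_count(uint32_t lru) {
    return lru & 255u;
}
```

For example, lfu_pack(0x1234, 5) yields 0x123405, and the two accessors recover 0x1234 and 5 from it.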

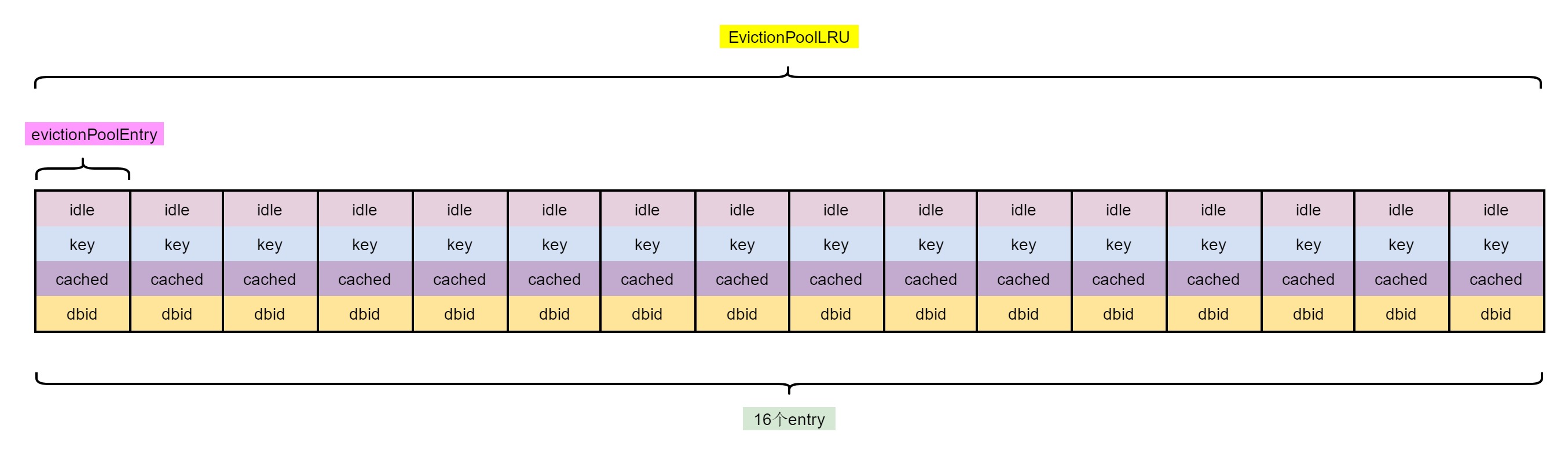

1. Definition of the eviction pool entry

#define EVPOOL_SIZE 16

#define EVPOOL_CACHED_SDS_SIZE 255

struct evictionPoolEntry {

unsigned long long idle; /* Object idle time (inverse frequency for LFU) */

sds key; /* Key name. */

sds cached; /* Cached SDS object for key name. */

int dbid; /* Key DB number. */

};

/* Global eviction pool pointer */

static struct evictionPoolEntry *EvictionPoolLRU;

2. Allocating the eviction pool

/* Create a new eviction pool. */

void evictionPoolAlloc(void) {

struct evictionPoolEntry *ep;

int j;

ep = zmalloc(sizeof(*ep)*EVPOOL_SIZE);

for (j = 0; j < EVPOOL_SIZE; j++) {

ep[j].idle = 0;

ep[j].key = NULL;

ep[j].cached = sdsnewlen(NULL,EVPOOL_CACHED_SDS_SIZE);

ep[j].dbid = 0;

}

EvictionPoolLRU = ep;

}

There is only one global eviction pool. It holds eviction candidates from every db, so the dbid field is added to record which db each key belongs to.

If space were allocated dynamically every time a key is added to the eviction pool, it could easily cause memory fragmentation and hurt performance. The cached field therefore preallocates a buffer that can be reused directly, which avoids frequent allocation. The preallocated buffer holds 255 characters, so a key longer than 255 still requires a dynamic allocation.
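The reuse-or-allocate decision can be sketched with plain C strings instead of sds. This is a simplified illustration, not the Redis code: struct pool_slot, slot_set_key and slot_clear are hypothetical names, and the buffer is embedded in the struct rather than allocated with sdsnewlen.

```c
#include <stdlib.h>
#include <string.h>

#define CACHED_SIZE 255 /* mirrors EVPOOL_CACHED_SDS_SIZE */

/* Hypothetical, simplified stand-in for evictionPoolEntry. */
struct pool_slot {
    char *key;                    /* points at cached or at a heap copy */
    char cached[CACHED_SIZE + 1]; /* preallocated buffer, reused across inserts */
};

/* Store a key name, allocating only when it does not fit in the buffer.
 * Returns 0 on success and -1 if the allocation fails. */
static int slot_set_key(struct pool_slot *s, const char *name) {
    size_t len = strlen(name);
    if (len > CACHED_SIZE) {
        char *copy = malloc(len + 1);
        if (copy == NULL) return -1;
        memcpy(copy, name, len + 1);
        s->key = copy;
    } else {
        memcpy(s->cached, name, len + 1);
        s->key = s->cached;
    }
    return 0;
}

/* Release the key; only heap copies need to be freed. */
static void slot_clear(struct pool_slot *s) {
    if (s->key != s->cached) free(s->key);
    s->key = NULL;
}
```

Comparing key against cached is how the real code also decides whether sdsfree is needed when an entry is removed.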

3. Object creation

To prevent a newly created object from being evicted immediately, count is initialized to 5 (LFU_INIT_VAL).

#define LFU_INIT_VAL 5

robj *createObject(int type, void *ptr) {

robj *o = zmalloc(sizeof(*o));

o->type = type;

o->encoding = OBJ_ENCODING_RAW;

o->ptr = ptr;

o->refcount = 1;

/* Set the LRU to the current lruclock (minutes resolution), or

* alternatively the LFU counter. */

if (server.maxmemory_policy & MAXMEMORY_FLAG_LFU) {

o->lru = (LFUGetTimeInMinutes()<<8) | LFU_INIT_VAL;

} else {

o->lru = LRU_CLOCK();

}

return o;

}

unsigned long LFUGetTimeInMinutes(void) {

return (server.unixtime/60) & 65535;

}
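Because only the low 16 bits of the minute clock are kept, the stored value wraps around every 65536 minutes, which is about 45 days. Any code that measures elapsed minutes from such a stamp has to allow for that wrap. The sketch below shows one way to do it with modular arithmetic; minutes16 and minutes_elapsed are hypothetical helpers that take the time as a parameter, and they are not the functions Redis itself uses.

```c
/* Hypothetical helper: reduce a Unix timestamp to a 16-bit minute counter,
 * the same arithmetic as LFUGetTimeInMinutes but with the time passed in. */
static unsigned long minutes16(unsigned long unixtime) {
    return (unixtime / 60) & 65535;
}

/* Hypothetical helper: minutes elapsed from stamp `ldt` to stamp `now`,
 * assuming the 16-bit clock wrapped at most once in between. */
static unsigned long minutes_elapsed(unsigned long now, unsigned long ldt) {
    return (now + 65536 - ldt) & 65535;
}
```

With this arithmetic, a stamp of 65530 followed by a current value of 3 counts as 9 elapsed minutes rather than a negative number.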

4. Update the count when the object is accessed

Because count is only 8 bits wide and tops out at 255, it cannot be incremented on every access, so the following algorithm decides when to increment it:

- A random number R between 0 and 1 is extracted.

- A probability P is calculated as 1/(old_value*lfu_log_factor+1).

- The counter is incremented only if R < P.

/* Logarithmically increment a counter. The greater is the current counter value

* the less likely is that it gets really incremented. Saturate it at 255. */

uint8_t LFULogIncr(uint8_t counter) {

if (counter == 255) return 255;

double r = (double)rand()/RAND_MAX;

double baseval = counter - LFU_INIT_VAL;

if (baseval < 0) baseval = 0;

double p = 1.0/(baseval*server.lfu_log_factor+1);

if (r < p) counter++;

return counter;

}
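Note that LFULogIncr subtracts LFU_INIT_VAL from the counter before computing the probability, so counters at or below the initial value are always incremented. To make that concrete, the sketch below isolates the probability calculation as a pure function. The name lfu_incr_probability is hypothetical, and the factor is passed as a parameter instead of being read from server.lfu_log_factor.

```c
#define LFU_INIT_VAL 5

/* Hypothetical helper: probability that a single access increments a
 * counter currently at `counter`, using the arithmetic of LFULogIncr. */
static double lfu_incr_probability(unsigned counter, int lfu_log_factor) {
    if (counter >= 255) return 0.0; /* saturated, never incremented */
    double baseval = (double)counter - LFU_INIT_VAL;
    if (baseval < 0) baseval = 0;
    return 1.0 / (baseval * lfu_log_factor + 1);
}
```

With a factor of 10, a counter at 15 is incremented on roughly 1 access in 101, while a counter at 5 or below is incremented on every access.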

The official configuration file gives the following test results for different values of factor:

| factor | 100 hits | 1000 hits | 100K hits | 1M hits | 10M hits |
|--------|----------|-----------|-----------|---------|----------|
| 0      | 104      | 255       | 255       | 255     | 255      |
| 1      | 18       | 49        | 255       | 255     | 255      |
| 10     | 10       | 18        | 142       | 255     | 255      |
| 100    | 8        | 11        | 49        | 143     | 255      |

The count is updated on every access, except while an RDB save or AOF rewrite child process is running, because touching the object would trigger copy-on-write.

Lookups made with the LOOKUP_NOTOUCH flag do not update it either (for example, the commands type, ttl, pttl, swapdb).

robj *lookupKey(redisDb *db, robj *key, int flags) {

dictEntry *de = dictFind(db->dict,key->ptr);

if (de) {

robj *val = dictGetVal(de);

/* Update the access time for the ageing algorithm.

* Don't do it if we have a saving child, as this will trigger

* a copy on write madness. */

if (server.rdb_child_pid == -1 &&

server.aof_child_pid == -1 &&

!(flags & LOOKUP_NOTOUCH))

{

if (server.maxmemory_policy & MAXMEMORY_FLAG_LFU) {

unsigned long ldt = val->lru >> 8;

unsigned long counter = LFULogIncr(val->lru & 255);

val->lru = (ldt << 8) | counter;

} else {

val->lru = LRU_CLOCK();

}

}

return val;

} else {

return NULL;

}

}

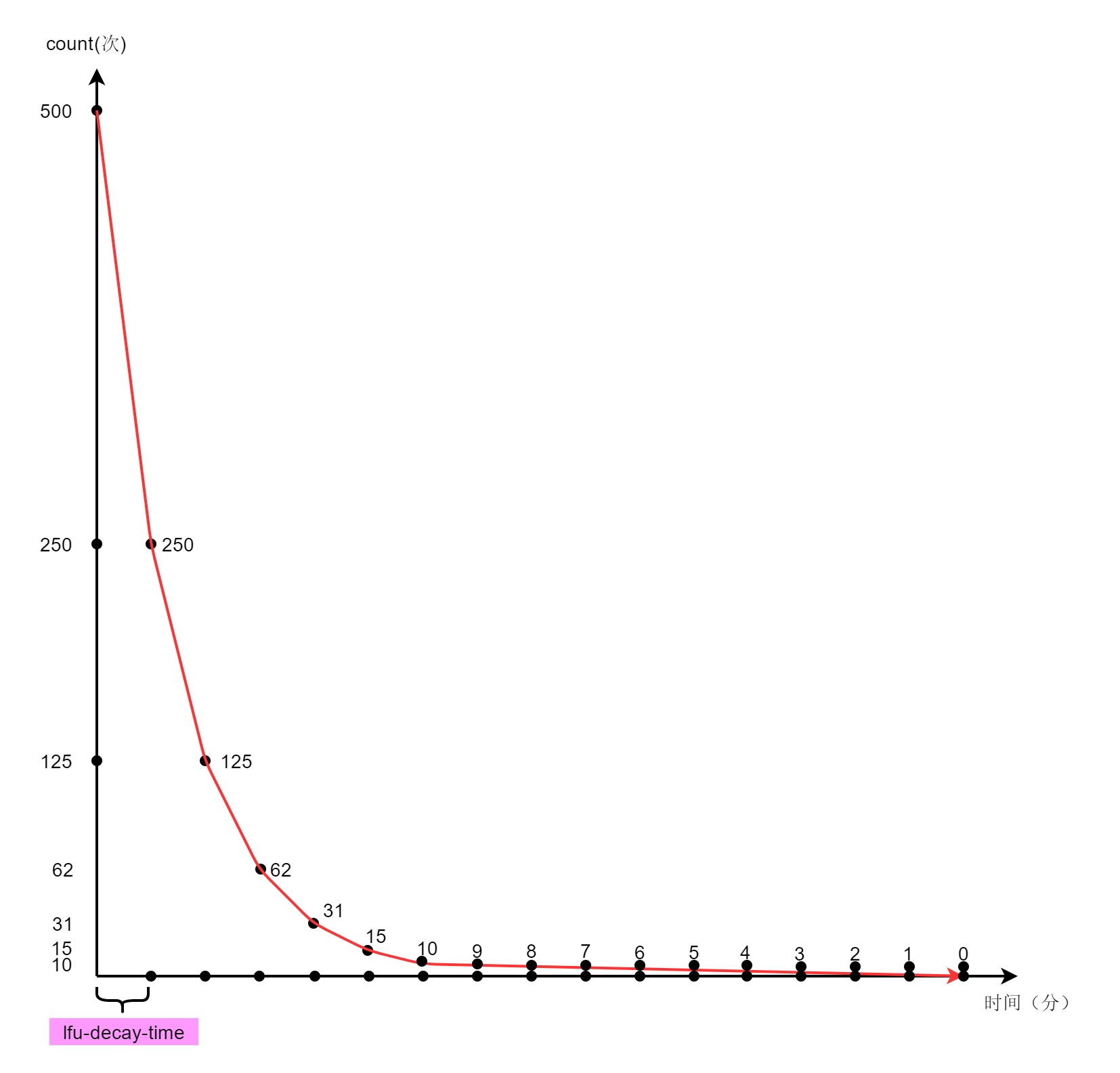

5. Count decay

#define LFU_DECR_INTERVAL 1

unsigned long LFUDecrAndReturn(robj *o) {

unsigned long ldt = o->lru >> 8;

unsigned long counter = o->lru & 255;

if (LFUTimeElapsed(ldt) >= server.lfu_decay_time && counter) {

if (counter > LFU_INIT_VAL*2) {

counter /= 2;

if (counter < LFU_INIT_VAL*2) counter = LFU_INIT_VAL*2;

} else {

counter--;

}

o->lru = (LFUGetTimeInMinutes()<<8) | counter;

}

return counter;

}

When at least server.lfu_decay_time minutes (configurable through lfu-decay-time, default 1 minute) have elapsed since the time stored in ldt, the count decays:

- If the count is greater than 10, it is halved, but never below 10

- If the count is less than or equal to 10, it is decremented by 1

For example, a key whose count is at the maximum of 255 decays over successive periods as 255, 127, 63, 31, 15, 10, 9, 8 and so on down to 0.
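The decay rule in LFUDecrAndReturn can be isolated into a pure function to see how a counter shrinks over time. This is a sketch that ignores the elapsed-time check and the ldt update; lfu_decay_step and lfu_steps_to_zero are hypothetical names.

```c
#define LFU_INIT_VAL 5

/* Hypothetical helper: apply one decay period to a counter,
 * following the branches of LFUDecrAndReturn. */
static unsigned long lfu_decay_step(unsigned long counter) {
    if (counter == 0) return 0;
    if (counter > LFU_INIT_VAL * 2) {
        counter /= 2; /* halve large counters ... */
        if (counter < LFU_INIT_VAL * 2) counter = LFU_INIT_VAL * 2; /* ... floor at 10 */
    } else {
        counter--;    /* linear decay at 10 and below */
    }
    return counter;
}

/* Hypothetical helper: number of decay periods until the counter is 0. */
static int lfu_steps_to_zero(unsigned long counter) {
    int steps = 0;
    while (counter > 0) {
        counter = lfu_decay_step(counter);
        steps++;
    }
    return steps;
}
```

Starting from 255, five halvings bring the counter to 10 and ten decrements bring it to 0, so lfu_steps_to_zero(255) is 15.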

6. Select candidate keys from all dbs

Originally, each db had its own eviction pool. Now there is a single global eviction pool, and the candidate keys from every db are written into it.

int freeMemoryIfNeeded(void) {

...

if (server.maxmemory_policy & (MAXMEMORY_FLAG_LRU|MAXMEMORY_FLAG_LFU) ||

server.maxmemory_policy == MAXMEMORY_VOLATILE_TTL)

{

struct evictionPoolEntry *pool = EvictionPoolLRU;

while(bestkey == NULL) {

unsigned long total_keys = 0, keys;

/* We don't want to make local-db choices when expiring keys,

* so to start populate the eviction pool sampling keys from

* every DB. */

for (i = 0; i < server.dbnum; i++) {

db = server.db+i;

dict = (server.maxmemory_policy & MAXMEMORY_FLAG_ALLKEYS) ?

db->dict : db->expires;

if ((keys = dictSize(dict)) != 0) {

evictionPoolPopulate(i, dict, db->dict, pool);

total_keys += keys;

}

}

if (!total_keys) break; /* No keys to evict. */

/* Go backward from best to worst element to evict. */

for (k = EVPOOL_SIZE-1; k >= 0; k--) {

if (pool[k].key == NULL) continue;

bestdbid = pool[k].dbid;

if (server.maxmemory_policy & MAXMEMORY_FLAG_ALLKEYS) {

de = dictFind(server.db[pool[k].dbid].dict,

pool[k].key);

} else {

de = dictFind(server.db[pool[k].dbid].expires,

pool[k].key);

}

/* Remove the entry from the pool. */

if (pool[k].key != pool[k].cached)

sdsfree(pool[k].key);

pool[k].key = NULL;

pool[k].idle = 0;

/* If the key exists, is our pick. Otherwise it is

* a ghost and we need to try the next element. */

if (de) {

bestkey = dictGetKey(de);

break;

} else {

/* Ghost... Iterate again. */

}

}

}

}

...

}

Candidates in the pool are sorted by idle. For LFU, idle is 255 - count, so the smaller the count, the larger the idle.

For volatile-ttl, idle is ULLONG_MAX - ttl, so the smaller the ttl, the larger the idle. In every case the rule is the same: the larger the idle, the earlier the key is evicted.
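Both conversions turn "smaller is worse" into "larger score is evicted first", which lets the pool use a single ordering for every policy. A minimal sketch, with the hypothetical names lfu_score and ttl_score:

```c
#include <limits.h>

/* Hypothetical helper: invert an 8-bit LFU counter so that rarely
 * used keys get the highest score. */
static unsigned long long lfu_score(unsigned char counter) {
    return 255ULL - counter;
}

/* Hypothetical helper: invert an expire time so that keys expiring
 * sooner get the highest score. `when` is assumed to be non-negative. */
static unsigned long long ttl_score(long long when) {
    return ULLONG_MAX - (unsigned long long)when;
}
```

A key with count 5 scores 250 and a key with count 255 scores 0, so the first one is the better eviction candidate.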

void evictionPoolPopulate(int dbid, dict *sampledict, dict *keydict, struct evictionPoolEntry *pool) {

int j, k, count;

dictEntry *samples[server.maxmemory_samples];

count = dictGetSomeKeys(sampledict,samples,server.maxmemory_samples);

for (j = 0; j < count; j++) {

unsigned long long idle;

sds key;

robj *o;

dictEntry *de;

de = samples[j];

key = dictGetKey(de);

/* If the dictionary we are sampling from is not the main

* dictionary (but the expires one) we need to lookup the key

* again in the key dictionary to obtain the value object. */

if (server.maxmemory_policy != MAXMEMORY_VOLATILE_TTL) {

if (sampledict != keydict) de = dictFind(keydict, key);

o = dictGetVal(de);

}

/* Calculate the idle time according to the policy. This is called

* idle just because the code initially handled LRU, but is in fact

* just a score where an higher score means better candidate. */

if (server.maxmemory_policy & MAXMEMORY_FLAG_LRU) {

idle = estimateObjectIdleTime(o);

} else if (server.maxmemory_policy & MAXMEMORY_FLAG_LFU) {

/* When we use an LRU policy, we sort the keys by idle time

* so that we expire keys starting from greater idle time.

* However when the policy is an LFU one, we have a frequency

* estimation, and we want to evict keys with lower frequency

* first. So inside the pool we put objects using the inverted

* frequency subtracting the actual frequency to the maximum

* frequency of 255. */

idle = 255-LFUDecrAndReturn(o);

} else if (server.maxmemory_policy == MAXMEMORY_VOLATILE_TTL) {

/* In this case the sooner the expire the better. */

idle = ULLONG_MAX - (long)dictGetVal(de);

} else {

serverPanic("Unknown eviction policy in evictionPoolPopulate()");

}

/* Insert the element inside the pool.

* First, find the first empty bucket or the first populated

* bucket that has an idle time smaller than our idle time. */

k = 0;

while (k < EVPOOL_SIZE &&

pool[k].key &&

pool[k].idle < idle) k++;

if (k == 0 && pool[EVPOOL_SIZE-1].key != NULL) {

/* Can't insert if the element is < the worst element we have

* and there are no empty buckets. */

continue;

} else if (k < EVPOOL_SIZE && pool[k].key == NULL) {

/* Inserting into empty position. No setup needed before insert. */

} else {

/* Inserting in the middle. Now k points to the first element

* greater than the element to insert. */

if (pool[EVPOOL_SIZE-1].key == NULL) {

/* Free space on the right? Insert at k shifting

* all the elements from k to end to the right. */

/* Save SDS before overwriting. */

sds cached = pool[EVPOOL_SIZE-1].cached;

memmove(pool+k+1,pool+k,

sizeof(pool[0])*(EVPOOL_SIZE-k-1));

pool[k].cached = cached;

} else {

/* No free space on right? Insert at k-1 */

k--;

/* Shift all elements on the left of k (included) to the

* left, so we discard the element with smaller idle time. */

sds cached = pool[0].cached; /* Save SDS before overwriting. */

if (pool[0].key != pool[0].cached) sdsfree(pool[0].key);

memmove(pool,pool+1,sizeof(pool[0])*k);

pool[k].cached = cached;

}

}

/* Try to reuse the cached SDS string allocated in the pool entry,

* because allocating and deallocating this object is costly

* (according to the profiler, not my fantasy. Remember:

* premature optimizbla bla bla bla. */

int klen = sdslen(key);

if (klen > EVPOOL_CACHED_SDS_SIZE) {

pool[k].key = sdsdup(key);

} else {

memcpy(pool[k].cached,key,klen+1);

sdssetlen(pool[k].cached,klen);

pool[k].key = pool[k].cached;

}

pool[k].idle = idle;

pool[k].dbid = dbid;

}

}
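The insertion logic is easier to follow without the key handling. The sketch below keeps only the sorted-insert part, using a pool of 4 slots and a used flag in place of the key pointer. The names struct slot, POOL_SIZE and pool_insert are hypothetical, and the branches are meant to follow the same three cases as evictionPoolPopulate.

```c
#include <string.h>

#define POOL_SIZE 4 /* small on purpose; Redis uses EVPOOL_SIZE 16 */

/* Hypothetical, simplified pool entry: `used` replaces the key pointer. */
struct slot {
    int used;
    unsigned long long idle;
};

/* Insert a score into a pool kept sorted by ascending idle.
 * Returns the index used, or -1 if the score is too low for a full pool. */
static int pool_insert(struct slot *pool, unsigned long long idle) {
    int k = 0;
    while (k < POOL_SIZE && pool[k].used && pool[k].idle < idle) k++;

    if (k == 0 && pool[POOL_SIZE - 1].used) {
        return -1; /* worse than every entry and no empty slot */
    } else if (k < POOL_SIZE && !pool[k].used) {
        /* empty slot: nothing to shift */
    } else if (!pool[POOL_SIZE - 1].used) {
        /* free space on the right: shift entries from k one step right */
        memmove(pool + k + 1, pool + k, sizeof(pool[0]) * (POOL_SIZE - k - 1));
    } else {
        /* pool is full: drop the entry with the smallest idle */
        k--;
        memmove(pool, pool + 1, sizeof(pool[0]) * k);
    }
    pool[k].used = 1;
    pool[k].idle = idle;
    return k;
}
```

Because the best candidate always ends up at the highest index, the eviction loop can simply scan the pool from the end toward the start.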

7. Select the key to evict from the eviction pool

...

/* Go backward from best to worst element to evict. */

for (k = EVPOOL_SIZE-1; k >= 0; k--) {

if (pool[k].key == NULL) continue;

bestdbid = pool[k].dbid;

if (server.maxmemory_policy & MAXMEMORY_FLAG_ALLKEYS) {

de = dictFind(server.db[pool[k].dbid].dict,

pool[k].key);

} else {

de = dictFind(server.db[pool[k].dbid].expires,

pool[k].key);

}

/* Remove the entry from the pool. */

if (pool[k].key != pool[k].cached)

sdsfree(pool[k].key);

pool[k].key = NULL;

pool[k].idle = 0;

/* If the key exists, is our pick. Otherwise it is

* a ghost and we need to try the next element. */

if (de) {

bestkey = dictGetKey(de);

break;

} else {

/* Ghost... Iterate again. */

}

}

...

8. Evict the key

Asynchronous deletion is introduced (lazyfree-lazy-eviction, set to no by default). The asynchronous path checks how many elements the value being deleted contains: the deletion is handed to a background task only when that number is greater than 64; otherwise it is still performed synchronously.

When asynchronous deletion is enabled, after every 16 evicted keys Redis checks whether memory usage has already dropped to the maxmemory limit or below.

...

/* Finally remove the selected key. */

if (bestkey) {

db = server.db+bestdbid;

robj *keyobj = createStringObject(bestkey,sdslen(bestkey));

propagateExpire(db,keyobj,server.lazyfree_lazy_eviction);

/* We compute the amount of memory freed by db*Delete() alone.

* It is possible that actually the memory needed to propagate

* the DEL in AOF and replication link is greater than the one

* we are freeing removing the key, but we can't account for

* that otherwise we would never exit the loop.

*

* AOF and Output buffer memory will be freed eventually so

* we only care about memory used by the key space. */

delta = (long long) zmalloc_used_memory();

latencyStartMonitor(eviction_latency);

if (server.lazyfree_lazy_eviction)

dbAsyncDelete(db,keyobj);

else

dbSyncDelete(db,keyobj);

latencyEndMonitor(eviction_latency);

latencyAddSampleIfNeeded("eviction-del",eviction_latency);

latencyRemoveNestedEvent(latency,eviction_latency);

delta -= (long long) zmalloc_used_memory();

mem_freed += delta;

server.stat_evictedkeys++;

notifyKeyspaceEvent(NOTIFY_EVICTED, "evicted",

keyobj, db->id);

decrRefCount(keyobj);

keys_freed++;

/* When the memory to free starts to be big enough, we may

* start spending so much time here that is impossible to

* deliver data to the slaves fast enough, so we force the

* transmission here inside the loop. */

if (slaves) flushSlavesOutputBuffers();

/* Normally our stop condition is the ability to release

* a fixed, pre-computed amount of memory. However when we

* are deleting objects in another thread, it's better to

* check, from time to time, if we already reached our target

* memory, since the "mem_freed" amount is computed only

* across the dbAsyncDelete() call, while the thread can

* release the memory all the time. */

if (server.lazyfree_lazy_eviction && !(keys_freed % 16)) {

overhead = freeMemoryGetNotCountedMemory();

mem_used = zmalloc_used_memory();

mem_used = (mem_used > overhead) ? mem_used-overhead : 0;

if (mem_used <= server.maxmemory) {

mem_freed = mem_tofree;

}

}

}

...
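The element-count check described at the start of this step happens inside dbAsyncDelete and is not visible in the excerpt above. As a rough sketch of the decision only, under the assumption that the threshold is the 64 mentioned in the text, it could look like the following. ASYNC_FREE_THRESHOLD and should_free_in_background are hypothetical names, not Redis identifiers.

```c
#include <stddef.h>

#define ASYNC_FREE_THRESHOLD 64 /* value taken from the text; name is hypothetical */

/* Hypothetical helper: decide whether a value should be released by a
 * background task. `elements` is the number of elements the value holds. */
static int should_free_in_background(int lazy_eviction_enabled, size_t elements) {
    return lazy_eviction_enabled && elements > ASYNC_FREE_THRESHOLD;
}
```

Small values are cheap to free inline, so handing them to another thread would cost more than it saves.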

9. Optimization of the random policies

Previously, every eviction pass walked the dbs in the same order, db0, db1, db2 ... dbN, always starting from db0, which could leave the data across dbs unbalanced.

The new random policy introduces a static variable next_db: each pass resumes from the db where the previous pass stopped, and wraps around to the beginning after the last db.

/* volatile-random and allkeys-random policy */

...

static int next_db = 0;

...

else if (server.maxmemory_policy == MAXMEMORY_ALLKEYS_RANDOM ||

server.maxmemory_policy == MAXMEMORY_VOLATILE_RANDOM)

{

/* When evicting a random key, we try to evict a key for

* each DB, so we use the static 'next_db' variable to

* incrementally visit all DBs. */

for (i = 0; i < server.dbnum; i++) {

j = (++next_db) % server.dbnum;

db = server.db+j;

dict = (server.maxmemory_policy == MAXMEMORY_ALLKEYS_RANDOM) ?

db->dict : db->expires;

if (dictSize(dict) != 0) {

de = dictGetRandomKey(dict);

bestkey = dictGetKey(de);

bestdbid = j;

break;

}

}

}
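The round-robin behaviour of next_db can be tried in isolation. In the sketch below the db sizes are passed in as an array and the cursor as a pointer, so the function has no global state; pick_next_db is a hypothetical name.

```c
/* Hypothetical helper: advance the cursor and return the index of the
 * next non-empty db, or -1 if every db is empty. Uses the same
 * (++next_db) % dbnum stepping as the Redis loop. */
static int pick_next_db(int *next_db, const unsigned long *db_sizes, int dbnum) {
    for (int i = 0; i < dbnum; i++) {
        int j = (++(*next_db)) % dbnum;
        if (db_sizes[j] != 0) return j;
    }
    return -1;
}
```

With four dbs of sizes 3, 0, 2, 0 and a cursor starting at 0, successive calls return 2, 0, 2, so evictions alternate between the two non-empty dbs instead of always starting from db0.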

10. Optimization of the eviction pool

- The evictionPoolEntry pool is moved out of the db structure and becomes a global pointer

Originally, each db had its own eviction pool. There are 16 dbs by default and most of them are unused, so this wasted space.

- Originally, each db was evicted from separately; now m keys are sampled from every db, so one key is evicted out of m * db_num candidates

Originally, m samples were taken from a single db and one of them was evicted. Now m samples are taken from every db on each pass, so the key that has gone unaccessed the longest across all dbs can be evicted.

11. Other changes

- The policy constants, originally plain enumeration values, are now defined as bit flags

/* Redis maxmemory strategies. Instead of using just incremental number

* for this defines, we use a set of flags so that testing for certain

* properties common to multiple policies is faster. */

#define MAXMEMORY_FLAG_LRU (1<<0)

#define MAXMEMORY_FLAG_LFU (1<<1)

#define MAXMEMORY_FLAG_ALLKEYS (1<<2)

#define MAXMEMORY_FLAG_NO_SHARED_INTEGERS \

(MAXMEMORY_FLAG_LRU|MAXMEMORY_FLAG_LFU)

#define MAXMEMORY_VOLATILE_LRU ((0<<8)|MAXMEMORY_FLAG_LRU)

#define MAXMEMORY_VOLATILE_LFU ((1<<8)|MAXMEMORY_FLAG_LFU)

#define MAXMEMORY_VOLATILE_TTL (2<<8)

#define MAXMEMORY_VOLATILE_RANDOM (3<<8)

#define MAXMEMORY_ALLKEYS_LRU ((4<<8)|MAXMEMORY_FLAG_LRU|MAXMEMORY_FLAG_ALLKEYS)

#define MAXMEMORY_ALLKEYS_LFU ((5<<8)|MAXMEMORY_FLAG_LFU|MAXMEMORY_FLAG_ALLKEYS)

#define MAXMEMORY_ALLKEYS_RANDOM ((6<<8)|MAXMEMORY_FLAG_ALLKEYS)

#define MAXMEMORY_NO_EVICTION (7<<8)

#define CONFIG_DEFAULT_MAXMEMORY_POLICY MAXMEMORY_NO_EVICTION
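With this encoding, a property shared by several policies can be tested with a single bitwise AND instead of a chain of comparisons. The sketch below repeats the relevant defines so that it compiles on its own; policy_uses_lfu and policy_samples_all_keys are hypothetical helper names.

```c
#define MAXMEMORY_FLAG_LRU (1<<0)
#define MAXMEMORY_FLAG_LFU (1<<1)
#define MAXMEMORY_FLAG_ALLKEYS (1<<2)

#define MAXMEMORY_VOLATILE_LFU ((1<<8)|MAXMEMORY_FLAG_LFU)
#define MAXMEMORY_VOLATILE_TTL (2<<8)
#define MAXMEMORY_ALLKEYS_LFU ((5<<8)|MAXMEMORY_FLAG_LFU|MAXMEMORY_FLAG_ALLKEYS)
#define MAXMEMORY_ALLKEYS_RANDOM ((6<<8)|MAXMEMORY_FLAG_ALLKEYS)

/* Hypothetical helper: true for both volatile-lfu and allkeys-lfu. */
static int policy_uses_lfu(int policy) {
    return (policy & MAXMEMORY_FLAG_LFU) != 0;
}

/* Hypothetical helper: true when candidates come from the whole
 * keyspace rather than only from keys with an expire set. */
static int policy_samples_all_keys(int policy) {
    return (policy & MAXMEMORY_FLAG_ALLKEYS) != 0;
}
```

The upper bits keep each policy value distinct, while the low three bits carry the shared properties.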