Preface

Data loading mechanism is similar to data extraction mechanism, which can be divided into full load and incremental load. Full load refers to the operation of loading data after deleting all the data in the target data table. Incremental loading, on the other hand, means that the target table only loads data that changes in the source table, which contains data that is added, modified, and deleted.

1. Full Loading

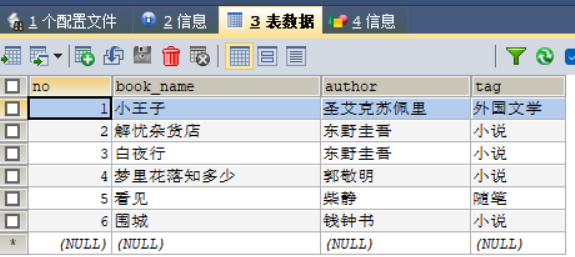

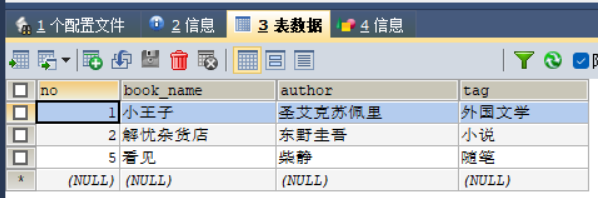

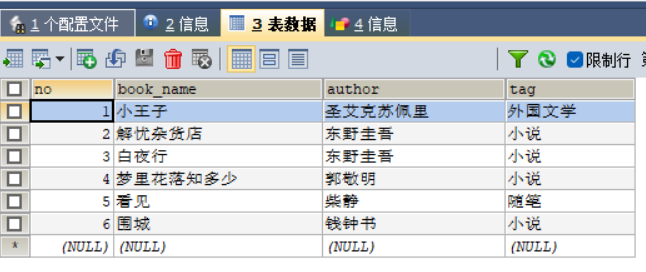

Suppose you have two tables, one for full_source and data table full_target, where the data table full_source is the source data table, data table full_target is the target data table. Datasheet full_source and full_ The contents of the target are shown in the figure.

CREATE TABLE `full_source` (

`no` INT (10),

`book_name` VARCHAR (60),

`author` VARCHAR (30),

`tag` VARCHAR (60)

);

INSERT INTO `full_source` (`no`, `book_name`, `author`, `tag`) VALUES('1','Princeling','St. Exuperi','foreign literature');

INSERT INTO `full_source` (`no`, `book_name`, `author`, `tag`) VALUES('2','Anxiety grocery store','Guywood Orientalis','Novel');

INSERT INTO `full_source` (`no`, `book_name`, `author`, `tag`) VALUES('3','White Night Line','Guywood Orientalis','Novel');

INSERT INTO `full_source` (`no`, `book_name`, `author`, `tag`) VALUES('4','How much do you know about flowers in your dreams','Guo Jingming','Novel');

INSERT INTO `full_source` (`no`, `book_name`, `author`, `tag`) VALUES('5','seeing','Chaijing','Informal essay');

INSERT INTO `full_source` (`no`, `book_name`, `author`, `tag`) VALUES('6','A Town Besieged','Qian Zhongshu','Novel');

create table `full_target` (

`no` int (10),

`book_name` varchar (60),

`author` varchar (30),

`tag` varchar (60)

);

insert into `full_target` (`no`, `book_name`, `author`, `tag`) values('1','Princeling','St. Exuperi','foreign literature');

insert into `full_target` (`no`, `book_name`, `author`, `tag`) values('2','Anxiety grocery store','Guywood Orientalis','Novel');

insert into `full_target` (`no`, `book_name`, `author`, `tag`) values('5','seeing','Chaijing','Informal essay');

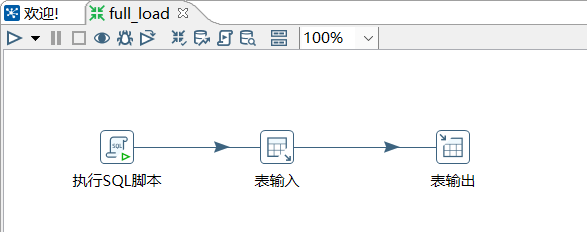

1. Open the kettle tool to create a transformation

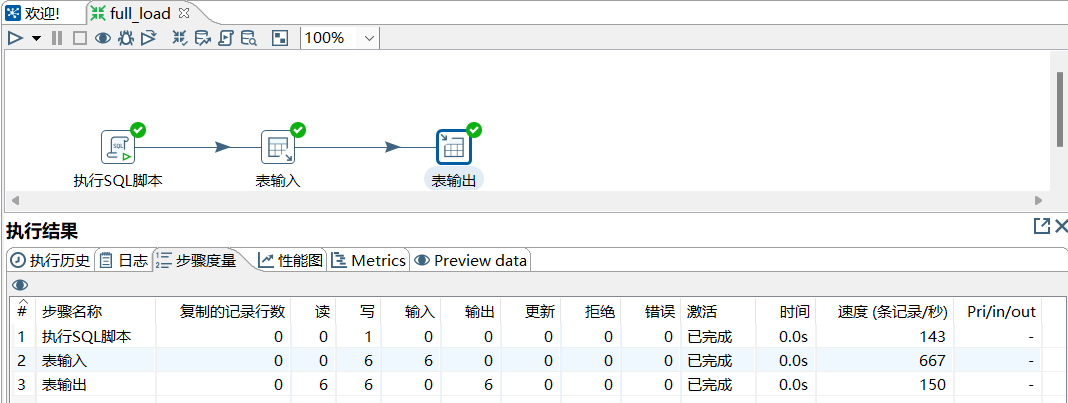

Using the Kettle tool, create a transform full_load, and add the Execute SQL Script Control, Table Input Control, Table Output Control, and Hop Jump Connector as shown in the figure.

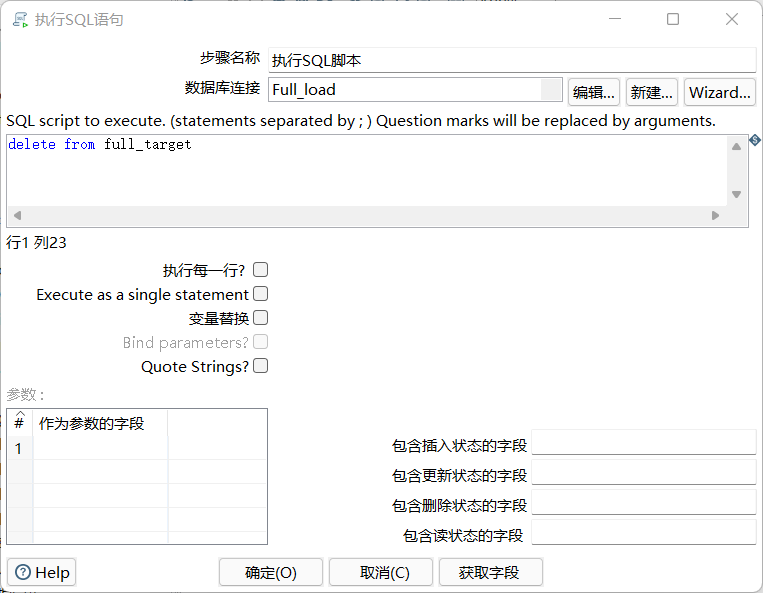

2. Configure Execute SQL Script Control

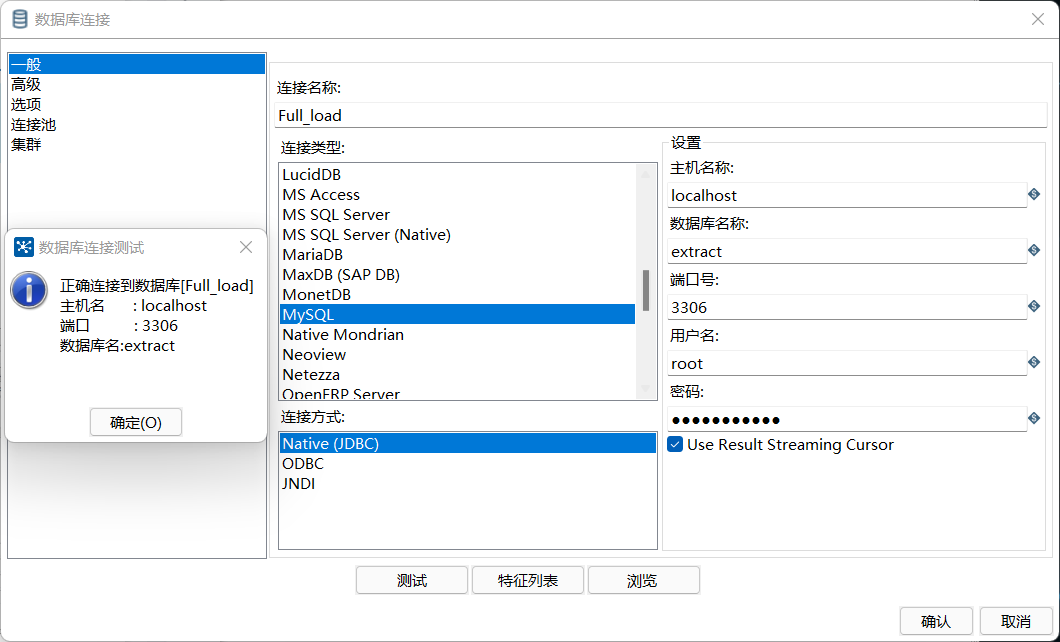

Double-click the Execute SQL Script control to enter the Execute SQL Statement interface as shown in the figure. Click the New button, configure the database connection, and click the Confirm button when the configuration is complete.

Configuration of MySQL database connection; Write delete table full_in the SQL box The SQL statement for the data in the target, as shown in the figure.

delete from full_target

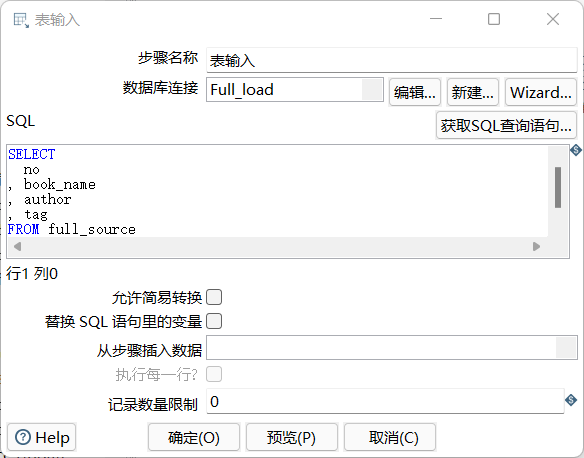

3. Configuration table input control

Double-click the Table Input control to enter the Table Input configuration interface and write the query full_in the SQL box The SQL statement for the source data table (click Get SQL Query Statement), as shown in the figure.

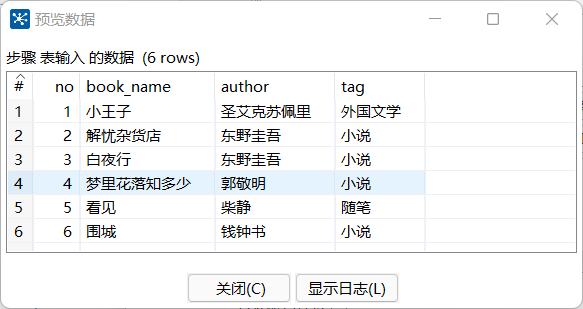

Click the Preview button to see full_ Whether the data from the source data table was successfully extracted from the MySQL database into the table input stream, as shown in the figure.

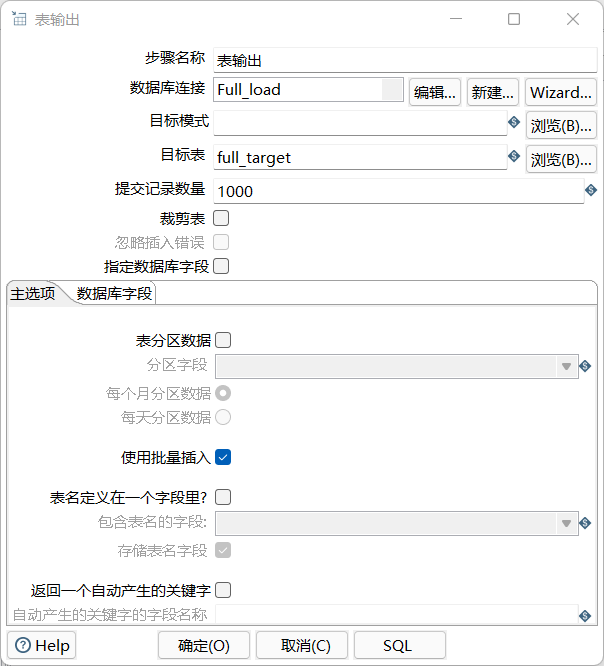

4. Configuration table output control

Double-click the Table Output control to enter the Table Output configuration interface. Click the Browse button on the target table to select the output target table, which is the data table full_target, you don't need to create a new database connection here, you just need to select the database connection you created, as shown in the diagram.

5. Run the conversion full_load

Click the button at the top of the conversion workspace to run the created conversion full_load, which implements full_ Full load of data from source into data table full_ In the target, as shown in the figure.

View the data table full_through the SQLyog tool Whether the target has been successfully loaded into the data, check the results as shown in the figure. (Note: This is not a new creation, but a series of operations)

Incremental Loading

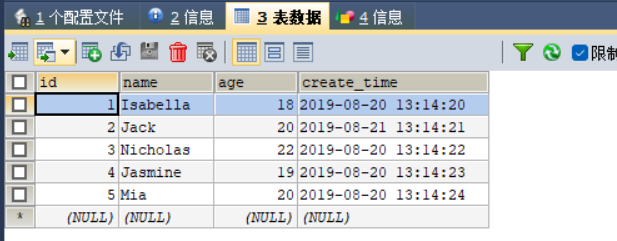

Suppose you have two tables, incremental_source and incremental_target, where incremental_source is the source data table, incremental_target is the target data table. Datasheet incremental_source and incremental_target has the same table structure and data as shown in the figure.

CREATE TABLE `incremental_source` (

`id` INT (20),

`name` VARCHAR (60),

`age` INT (20),

`create_time` DATETIME

);

INSERT INTO `incremental_source` (`id`, `name`, `age`, `create_time`) VALUES('1','Isabella','18','2019-08-20 13:14:20');

INSERT INTO `incremental_source` (`id`, `name`, `age`, `create_time`) VALUES('2','Jack','20','2019-08-21 13:14:21');

INSERT INTO `incremental_source` (`id`, `name`, `age`, `create_time`) VALUES('3','Nicholas','22','2019-08-20 13:14:22');

INSERT INTO `incremental_source` (`id`, `name`, `age`, `create_time`) VALUES('4','Jasmine','19','2019-08-20 13:14:23');

INSERT INTO `incremental_source` (`id`, `name`, `age`, `create_time`) VALUES('5','Mia','20','2019-08-20 13:14:24');

CREATE TABLE `incremental_target` (

`id` INT (20),

`name` VARCHAR (60),

`age` INT (20),

`create_time` DATETIME

);

INSERT INTO `incremental_target` (`id`, `name`, `age`, `create_time`) VALUES('1','Isabella','18','2019-08-20 13:14:20');

INSERT INTO `incremental_target` (`id`, `name`, `age`, `create_time`) VALUES('2','Jack','20','2019-08-21 13:14:21');

INSERT INTO `incremental_target` (`id`, `name`, `age`, `create_time`) VALUES('3','Nicholas','22','2019-08-20 13:14:22');

INSERT INTO `incremental_target` (`id`, `name`, `age`, `create_time`) VALUES('4','Jasmine','19','2019-08-20 13:14:23');

INSERT INTO `incremental_target` (`id`, `name`, `age`, `create_time`) VALUES('5','Mia','20','2019-08-20 13:14:24');

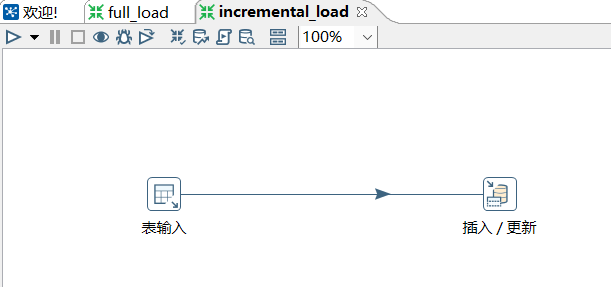

1. Open the kettle tool to create a transformation

Using the Kettle tool, create a transformation incremental_load, and add table input controls, insert/update controls, and Hop jump connectors as shown in the figure.

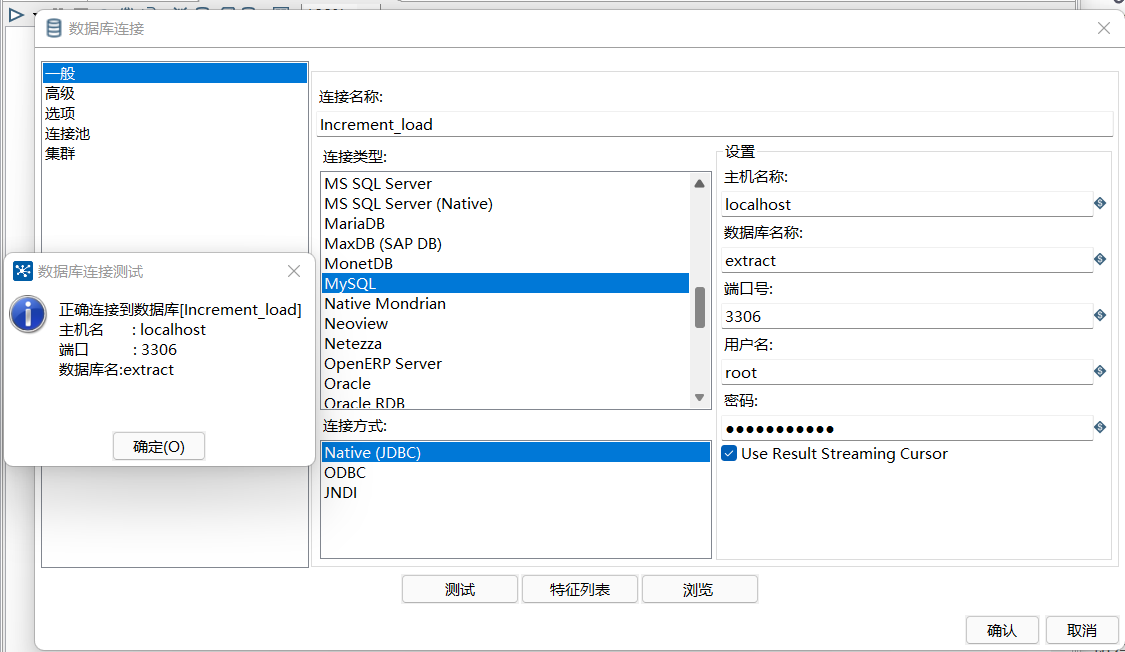

2. Configuration table input control

Double-click the Table Input control to enter the Table Input configuration interface, click the New button, configure the database connection, and click the Confirm button when the configuration is complete. Configuration of MySQL database connection, as shown in the figure.

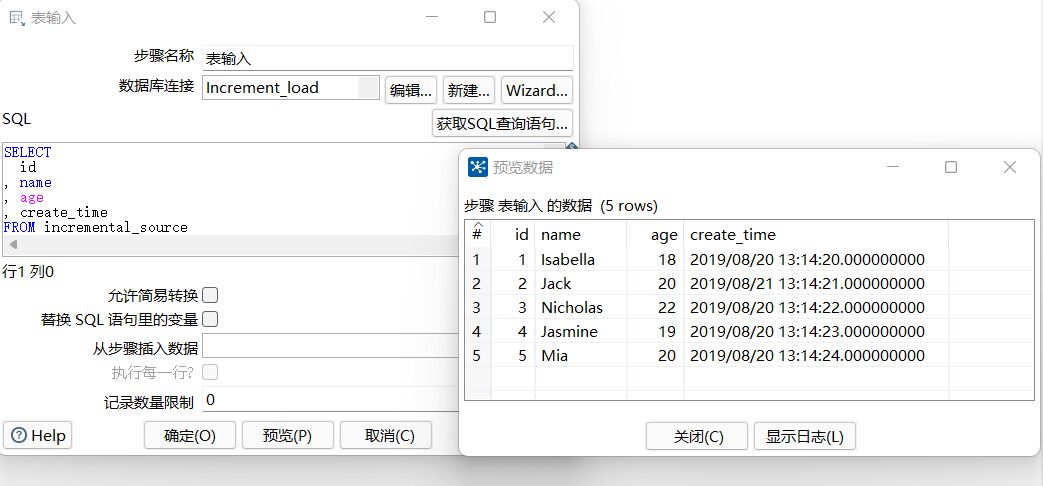

Write the query data table incremental_in the SQL box Sorce's SQL statement, and then click the Preview button to see the data table incremental_ Whether the data from source was successfully extracted from the MySQL database into the table input stream, as shown in the figure.

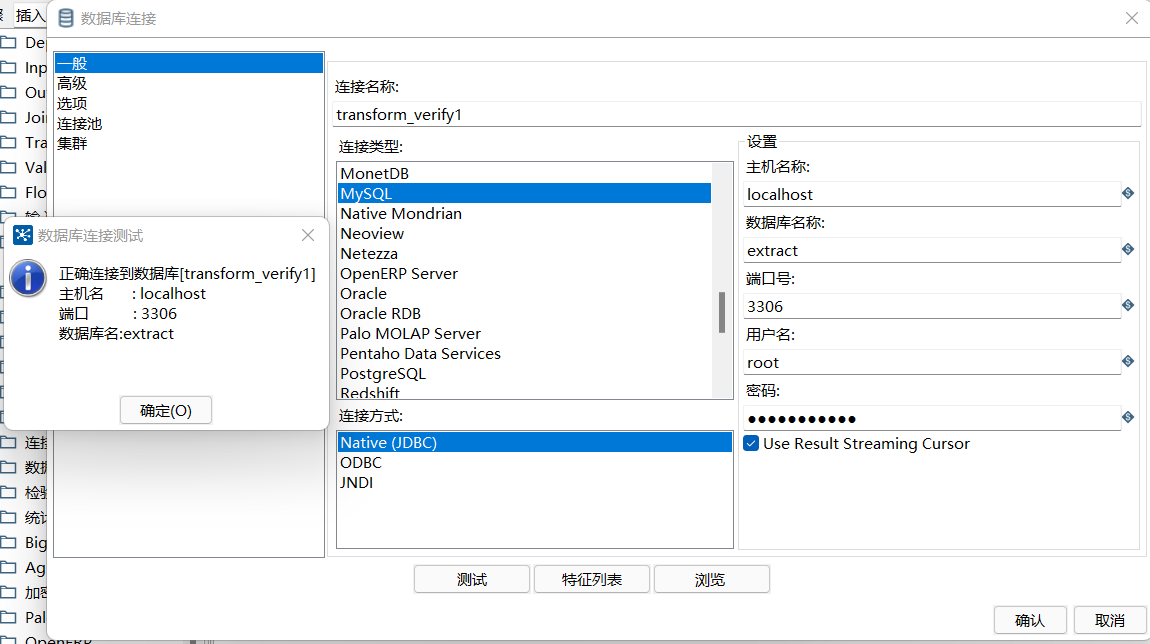

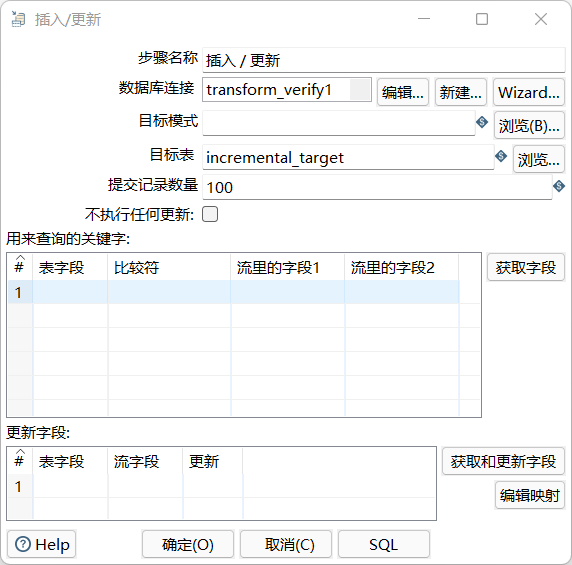

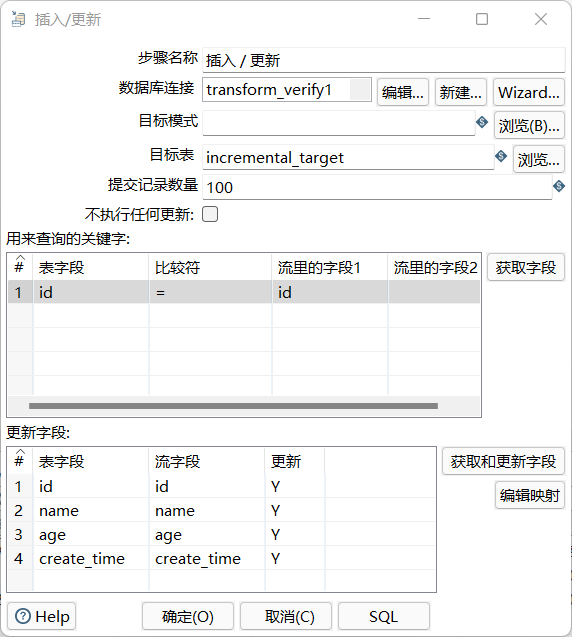

3. Configure insert/update controls

Double-click the Insert/Update control to enter the Insert/Update configuration interface, click the New button, configure the database connection, and click the Confirm button when the configuration is complete. Configuration of MySQL database connection, as shown in the figure.

Click the Browse button on the target table, pop up the Database Browser window, and select the target table incremental_target, as shown in the diagram.

Click the Get Fields button to specify the keywords needed to query the data by comparing the data table incremental_ Whether the field id of the target is consistent with the field id in the input stream is a key condition, updating other field data in the data table; Click the Get and Update Fields button to specify the fields that need to be updated, as shown in the diagram.

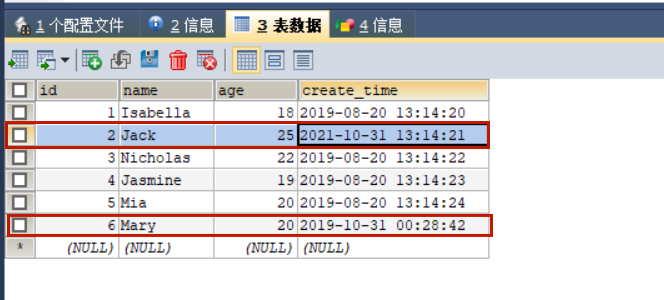

4. Modify the data table incremental_ Data in source

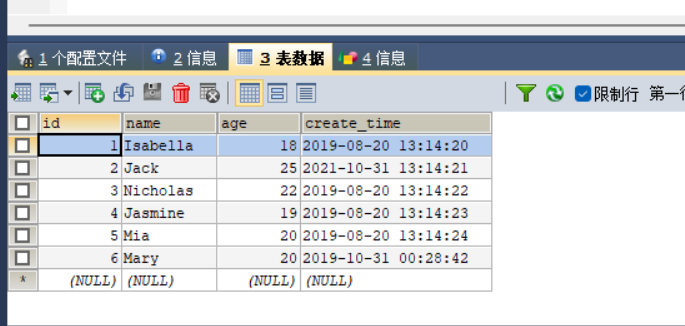

Incremental_in data table A new data with id 6, name Mary and age 23 was added to source. Incremental_on data table The data with id 2 in source is modified to change the age of the data to 25. Modified data table incremental_ The data content in the source is shown in the figure.

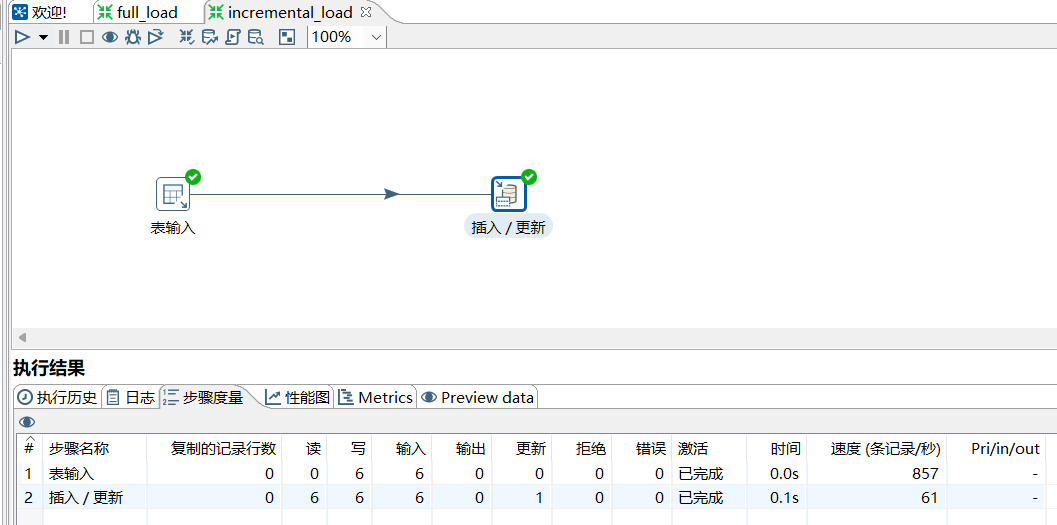

5. Run Conversion increment_load

Click the Run button at the top of the transformation workspace to run the created transformation incremental_load, which implements incremental_ Incrementally loading data from source into data table incremental_ In the target, as shown in the figure.

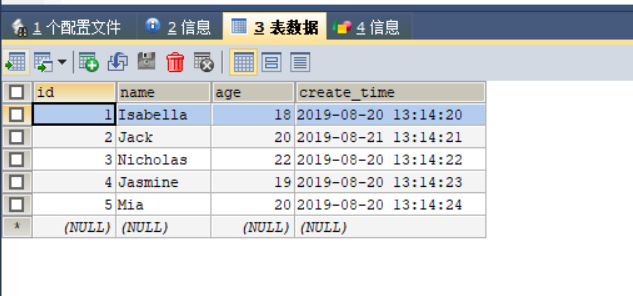

6. View data table incremental_ Data in target

View the data table incremental_through the SQLyog tool Whether the target has been successfully loaded into the data, check the results as shown in the figure. (It's not just created, it's done through a series of operations)

summary

That's what happened