Data preprocessing

Problem analysis

The task is to predict whether a loan user will become overdue. The data has 89 fields, of which "status" is the result label (0 is not overdue, 1 is overdue); the remaining 88 fields can be treated as feature variables. This makes it a binary classification problem. Before classification, the data must be analyzed and preprocessed. Inspecting the data shows that preprocessing needs to cover irrelevant feature deletion, data type conversion, missing value processing and feature optimization.
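
The kind of inspection described above can be sketched as follows. This is only an illustration on a small made-up frame, not the real data.csv: the column names are taken from this article, but every value in `toy` is invented.

```python
import pandas as pd

# Toy stand-in for data.csv; all values are invented for illustration.
toy = pd.DataFrame({
    "status": [0, 1, 0, 0],
    "reg_preference_for_trad": ["First-tier cities", "Other cities", None, "Abroad"],
    "latest_query_time": ["2018-04-25", "2018-05-03", None, "2018-05-05"],
    "loans_long_time": [90.0, None, 120.0, 60.0],
})

# Share of each label value (0 = not overdue, 1 = overdue).
label_share = toy["status"].value_counts(normalize=True)

# Columns that are not numeric and therefore need type conversion.
non_numeric_columns = [
    c for c in toy.columns if not pd.api.types.is_numeric_dtype(toy[c])
]

# Number of missing values per column.
missing_counts = toy.isnull().sum()
```

On the real data, the same three checks would point to the columns handled in the following sections.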

Irrelevant feature deletion

Of the 88 variables, first delete those that contribute nothing to predicting whether a loan is overdue, together with those made unusable by too many missing values: custid, trade_no, bank_card_no, source, id_name and student_feature. The code is as follows:

import pandas as pd

OriginalData=pd.read_csv('data.csv',encoding = 'gbk')

# drop(..., inplace=True) modifies OriginalData and returns None, so the result is not assigned
OriginalData.drop(['student_feature','custid','trade_no','bank_card_no','source','id_name'],axis=1,inplace=True)
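
The article picks the columns to delete by hand. As a rough sketch of how the "too many missing values" part could be checked programmatically, the helper below lists columns whose missing ratio exceeds a threshold. The function name `high_missing_columns` and the 0.5 threshold are assumptions made for illustration, not values from the original analysis, and the toy values are invented.

```python
import pandas as pd

def high_missing_columns(df: pd.DataFrame, threshold: float = 0.5) -> list:
    """Return the names of columns whose share of missing values exceeds threshold."""
    ratio = df.isnull().mean()
    return ratio[ratio > threshold].index.tolist()

# Toy frame; values are invented for illustration.
toy = pd.DataFrame({
    "custid": [101, 102, 103, 104],
    "student_feature": [None, 1.0, None, None],
    "status": [0, 1, 0, 0],
})

# Mostly-empty columns plus an identifier column chosen by hand.
to_drop = high_missing_columns(toy) + ["custid"]

# Without inplace=True, drop returns a new frame and leaves toy unchanged.
cleaned = toy.drop(columns=to_drop)
```

Returning a new frame instead of using inplace=True keeps the original data available for comparison.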

Data Type Conversion

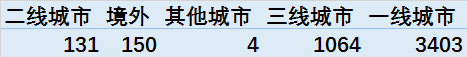

When classifying and forecasting, the input feature variables can only be data, but the actual feature data may exist in the form of strings, as follows:

For such data, it can be converted to a specific value, code as follows:

# .ix has been removed from pandas; .loc performs the same label-based assignment
OriginalData.loc[OriginalData['reg_preference_for_trad']=='First-tier cities','reg_preference_for_trad']=0

OriginalData.loc[OriginalData['reg_preference_for_trad']=='Second-tier cities','reg_preference_for_trad']=1

OriginalData.loc[OriginalData['reg_preference_for_trad']=='The third-class cities','reg_preference_for_trad']=2

OriginalData.loc[OriginalData['reg_preference_for_trad']=='Other cities','reg_preference_for_trad']=3

OriginalData.loc[OriginalData['reg_preference_for_trad']=='Abroad','reg_preference_for_trad']=4
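
The same conversion can also be written as a single dictionary lookup with `Series.map`. The sketch below is an alternative, not the article's method: the dictionary name `CITY_CODES` is an assumption, the category strings are the ones used above, and the toy values are invented. One difference to be aware of is that `map` turns any value missing from the dictionary into NaN, whereas the row-by-row assignment leaves such values unchanged.

```python
import pandas as pd

# Category-to-code table, using the same codes as the assignments above.
CITY_CODES = {
    "First-tier cities": 0,
    "Second-tier cities": 1,
    "The third-class cities": 2,
    "Other cities": 3,
    "Abroad": 4,
}

# Toy column; values are invented for illustration.
toy = pd.DataFrame({
    "reg_preference_for_trad": ["First-tier cities", "Abroad", None, "Other cities"],
})

# Values not found in CITY_CODES (including missing ones) become NaN.
toy["reg_preference_for_trad"] = toy["reg_preference_for_trad"].map(CITY_CODES)
```

The resulting column is numeric, so its remaining NaN values can be handled together with the other missing values later.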

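In addition, date variables such as latest_query_time and loans_latest_time need to be processed. Each date is converted into the number of seconds between it and a reference date of 2019-1-1. Missing dates are first filled with the reference date, so they produce a difference of 0, and the rows with a difference of 0 are then removed. The code is as follows: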

#Time Data Conversion

import time

# Missing dates are filled with the reference date so that they produce a difference of 0
OriginalData['latest_query_time'] = OriginalData['latest_query_time'].fillna('2019-1-1')

reference_time = time.mktime(time.strptime("2019-1-1", "%Y-%m-%d"))

OriginalData['latest_query_timestamp'] = OriginalData['latest_query_time'].apply(lambda x:reference_time-time.mktime(time.strptime(x,'%Y-%m-%d')))

# Remove the rows whose date was missing; .copy() avoids SettingWithCopyWarning on later assignments
TimeProcessData=OriginalData[~(OriginalData['latest_query_timestamp'].isin([0]))].copy()

TimeProcessData.drop(['latest_query_time'],axis=1,inplace=True)

# Repeat the same steps for loans_latest_time
TimeProcessData['loans_latest_time'] = TimeProcessData['loans_latest_time'].fillna('2019-1-1')

TimeProcessData['loans_latest_timestamp'] = TimeProcessData['loans_latest_time'].apply(lambda x:reference_time-time.mktime(time.strptime(x,'%Y-%m-%d')))

TimeProcessData=TimeProcessData[~(TimeProcessData['loans_latest_timestamp'].isin([0]))].copy()

TimeProcessData.drop(['loans_latest_time'],axis=1,inplace=True)
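
The same idea can be sketched in vectorized form with `pd.to_datetime`. This is an alternative under stated assumptions, not the article's code: the helper name `seconds_before_reference` is hypothetical, dates are assumed to be in year-month-day format, and the toy values are invented. Missing dates stay NaN and are dropped directly, so no sentinel date is needed. Because the dates are timezone-naive, the result can differ from the `time.mktime` version by an hour where a daylight saving change falls in the interval.

```python
import pandas as pd

REFERENCE_DATE = pd.Timestamp("2019-01-01")

def seconds_before_reference(dates: pd.Series) -> pd.Series:
    """Seconds from each date to REFERENCE_DATE; unparseable or missing dates give NaN."""
    parsed = pd.to_datetime(dates, format="%Y-%m-%d", errors="coerce")
    return (REFERENCE_DATE - parsed).dt.total_seconds()

# Toy column; values are invented for illustration.
toy = pd.DataFrame({"latest_query_time": ["2018-12-31", None, "2018-12-22"]})

toy["latest_query_timestamp"] = seconds_before_reference(toy["latest_query_time"])

# Drop rows without a usable date, then drop the original string column.
toy = toy.dropna(subset=["latest_query_timestamp"]).drop(columns=["latest_query_time"])
```

The same helper could be applied to loans_latest_time, which removes the need to repeat the conversion code.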

Missing Value Processing

Finally, the missing values are processed. Inspection shows that a small number of rows have many empty values, so these rows are deleted. The remaining missing values are filled with the column mean. The code is as follows:

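#Missing Value Processing

# Mark rows with a missing loans_long_time using the sentinel 999, then delete them
# (a row whose real value is 999 would be deleted as well)
TimeProcessData['loans_long_time']=TimeProcessData['loans_long_time'].fillna(999)

FillProcessData=TimeProcessData[~(TimeProcessData['loans_long_time'].isin([999]))].copy()

# Fill every remaining column that has missing values with its mean
for column in list(FillProcessData.columns[FillProcessData.isnull().sum() > 0]):
    mean_val = FillProcessData[column].mean()
    FillProcessData[column] = FillProcessData[column].fillna(mean_val)

FillProcessData.to_csv('DFGdata.csv')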
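
The two steps above (delete the rows that lack a key field, then mean-fill what is left) can be sketched as one small function. This is an illustration under assumptions: the function name `fill_numeric_with_mean` and the column `loans_score` are hypothetical, the toy values are invented, and `dropna` is used in place of the 999 sentinel. Only numeric columns are filled, since a mean is not defined for strings.

```python
import pandas as pd

def fill_numeric_with_mean(df: pd.DataFrame, required: str) -> pd.DataFrame:
    """Drop rows where `required` is missing, then mean-fill the numeric columns."""
    out = df.dropna(subset=[required]).copy()
    numeric_cols = out.select_dtypes(include="number").columns
    # fillna with a Series of column means fills each column with its own mean.
    out[numeric_cols] = out[numeric_cols].fillna(out[numeric_cols].mean())
    return out

# Toy frame; values are invented, loans_score is a hypothetical column.
toy = pd.DataFrame({
    "loans_long_time": [90.0, None, 120.0],
    "loans_score": [500.0, 600.0, None],
    "status": [0, 1, 0],
})

filled = fill_numeric_with_mean(toy, required="loans_long_time")
```

Note that the mean is computed after the rows are deleted, which matches the order of the steps in the code above.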