catalogue

Comparison between urlopen and Request

Use Request for flexible configuration

In Python 2, there are urllib and urllib libraries to send requests. In Python 3, urllib 2 library no longer exists. It is unified as urllib library. It is Python's built-in HTTP request library, which mainly has four modules

Request: it is the most basic HTTP request module. It can be used to simulate sending a request, just like entering a network program in the browser and then entering a carriage. You can simulate the process by passing RL and additional numbers to the library method

Error: exception handling module. If there are request errors, you can catch these exceptions and retry or to ensure that the program will not terminate unexpectedly

Parse: a tool module that provides many URL processing methods, such as splitting, parsing, and merging

Robotparser: it is mainly used to identify the robots.txt file of the website, and then judge which websites can be crawled and which websites can be crawled. In fact, it is less used

1. Send request

def urlopen(url, data=None, timeout=socket._GLOBAL_DEFAULT_TIMEOUT,

*, cafile=None, capath=None, cadefault=False, context=None):The urlib request module provides the most basic method for constructing HTTP requests. It can be used to simulate a request initiation process of the browser. At the same time, it also handles authorization authentication, redirection, browser Cookies and other contents.

import urllib.request

response_data = urllib.request.urlopen("https://www.baidu.com")

print(type(response_data)) # <class ' http.client.HTTPResponse ' >

print(response_data.read().decode("utf-8")) #Here is the entire source code web page

print(response_data.status) #Get status value

print(response_data.getheaders()) #Get header of type list

print(response_data.getheader("Server")) #Get the data information in the header

print(dir(response_data)) #View all objects and properties in class

data parameter

The data parameter is optional. If you want to add this parameter, and if it is the content of the byte stream encoding format, that is, the byte type, you need to convert it through the bytes () method

import urllib.request

import urllib.parse

data = bytes(urllib.parse.urlencode({"word":"hello"}),encoding="utf-8")

response_data = urllib.request.urlopen("http://httpbin.org/post",data=data)

print(response_data.read())

They passed a parameter word with the value hello, which needs to be transcoded into bytes (byte stream). The byte stream adopts the bytes () method. The first parameter of this method needs to be str (string) type and urencode () in urllib.parse module Method to convert the parameter dictionary into a string; the second parameter specifies the encoding format, which is specified here as utf8

timeout parameter

The timeout parameter is used to set the timeout, in seconds, which means that if the request exceeds the set time, an exception will be thrown if there is no response

import urllib.request

import urllib.parse

response_data = urllib.request.urlopen("http://httpbin.org/get",timeout=0.1)

print(response_data.read())

The result will throw: urllib.error.URLError: <urlopen error timed out>After throwing an exception, you can use the: Tyr exception statement to implement it

import urllib.request

import socket

import urllib.error

import urllib.parse

try:

response_data = urllib.request.urlopen("http://httpbin.org/get",timeout=0.1)

print(response_data.read())

except urllib.error.URLError as e:

if isinstance(e.reason,socket.timeout):

print("TIME OUT")socket.timeout type (meaning timeout exception)

Other parameters

In addition to the data parameter and timeout parameter, there is also a context parameter, which must be of type ssl.SSLContext to specify SSL settings

In addition, cafile and capath parameters specify the CA certificate and its path respectively, which will be used when requesting HTTPS connection

The cadefault parameter has been deprecated and its default value is False

Comparison between urlopen and Request

The urlopen () method can initiate the most basic request, but these simple parameters are not enough to build a complete request

If you need to add Headers and other information to the Request, you can use a more powerful Request class to build it

urlopen: it simply sends a request and cannot handle the request header

Request: the sent request header information can be changed, so it is widely used

Use Request for flexible configuration

urllib.request.Requset(url, data=None, headers={},

origin_req_host=None, unverifiable=False,

method=None):URL: the URL used for the request This is a required parameter, and other parameters are optional

Data: if data is to be transmitted, it must be of bytes (byte stream) type. If it is a dictionary, it can be encoded with urlencode () in urllib.parse module first

Headers: is a dictionary, which is the request header. We can construct the request directly through the headers parameter when constructing the request, or add the request header by calling the add_header() method of the request instance. The most common usage is to modify the user agent to disguise the browser. The default user agent is Python urllib, we can modify it to disguise the browser. For example, to disguise Firefox, you can set it to: Mozilla/s.o (X11; U; Linux i686) Gecko/20071127 Firefox/2.0.0.11

origin_req_host: refers to the host name or IP address of the requestor

Unverifiable: whether the request is unverifiable or not. The default is False, which means that the user does not have sufficient permission to choose to receive the result of the request. For example, we request images in HTML documents, but we do not have the permission to automatically capture images. In this case, the value of unverifiable is True

Method: A string indicating the method used in the request, such as GET \POST\ PUT, etc

import urllib.request,parse

url = "http://httpbin.org/post"

headers = {

"User-Agent" : "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.190 Safari/537.36",

"Host": "httpbin.org"

}

dict = {

"name" : "zhangsan"

}

data = bytes(urllib.parse.urlencode(dict),encoding="utf-8")

requse_data = urllib.request.Request(url=url,data=data,headers=headers,method="POST")

respnse_data = urllib.request.urlopen(requse_data)

print(respnse_data.read().decode("utf-8"))In addition, headers can also be added with the add_header() method:

req = request.Request(url=url, data=data, method= ' POST ')

req .add_header('User-Agent','Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.190 Safari/537.36') Advanced Usage

Handling Cookies, proxy settings, etc The BaseHandler class in the urllib.request module, which is the parent class of all other handlers, provides the most basic methods, such as default_open(), protocol_request()

Hitpdefaultarrorhandler: used to handle response errors. All errors will throw HTTP Error type exceptions. HTTPRedirectHandler: used to handle redirection

HTTPCookieProcessor : Used to process Cookies

ProxyHandler: used to set the proxy. The default proxy is empty

HTTPPasswordMgr: used to manage passwords. It maintains a table of user names and passwords

HTTP basic authhandler: used to manage authentication. If authentication is required when a link is opened, it can be used to solve the authentication problem

Another important class is OpenerDirector, which we can call Opener. We have used urlopen() method before. In fact, it is an Opener provided by urllib;

Opener can use the open() method and return the same type as urlopen(). So, what does it have to do with Handler? In short, it uses Handler to build opener

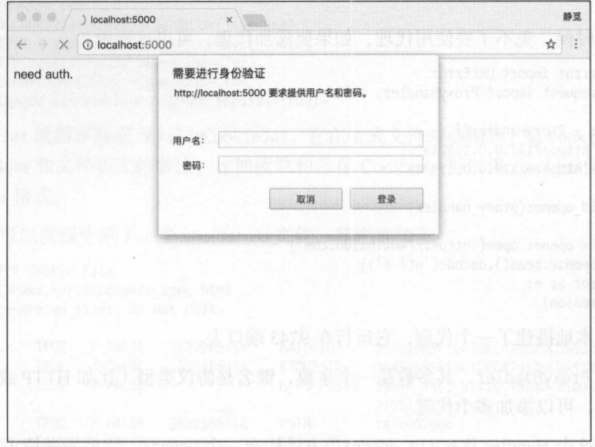

Password verification

Some websites will pop up a prompt box when opening, which directly prompts you to enter your user name and password. You can view the page only after successful verification

from urllib.request import HTTPPasswordMgrWithDefaultRealm,HTTPBasicAuthHandler,build_opener

from urllib.error import URLError

user_name = "username"

password = "password"

url = 'http://localhost:5000/'

p = HTTPPasswordMgrWithDefaultRealm()

p.add_password(None,url,user_name,password)

auth_handler = HTTPBasicAuthHandler(p)

opener = build_opener(auth_handler)

try:

result =opener.open(url)

html = result.read().decode("utf-8")

print(html)

except URLError as e:

print(e.reason)

1. Here, first instantiate the HTTPBasicAuthHandler object, whose parameter is the HTTPPasswordMgrWithDefaultRealm object. He uses add_password() to add the user name and password, so as to establish a Handler to handle authentication

2. Use this Handler and use the build_opener() method to build an Opener, which has been verified successfully when sending the request

3. Using Opener's open() method to open the link, you can complete the verification. The result obtained here is the verified page source code content

Use of agents

When you are a crawler, you have to use an agent. If you want to add an agent, you can do it here

from urllib.error import URLError

from urllib.request import ProxyHandler,build_opener

proxy_handler = ProxyHandler({

"http":"http://127.0.0.1:9743",

"https":"https://127.0.0.1:9743"

})

opener = build_opener(proxy_handler)

try:

response = opener.open("https://www.baidu.com")

print(response.read().decode("utf-8"))

except URLError as e:

print(e.reason)1. Here we set up a local agent, which runs on port 9743.

2. Using ProxyHandler, the parameter is a dictionary. When the key name is the protocol type (such as HTTP or HTTPS), the key value is the proxy link, and multiple proxies can be added

3. Use Handler and build here_ The Opener () method constructs an Opener, and then sends a request

Cookie processing

We also need relevant handler s for Cookies processing

import http.cookiejar,urllib.request

cookie = http.cookiejar.CookieJar()

handler = urllib.request.HTTPCookieProcessor(cookie)

opener = urllib.request.build_opener(handler)

response = opener.open("http://www.baidu.com")

for item in cookie:

print(item.name + "=" + item.value)

We show that a CookieJar object is declared. Next, we need to use HTTP cookieprocessor to build a Handler, and finally use build_ The Opener () method constructs Opener and executes the open() function

#Operation results: BAIDUID=30172F7439FC0FEED244E455C6D8204E:FG=1 BIDUPSID=30172F7439FC0FEE227F1F995DE95884 H_PS_PSSID=35292_34442_35104_35240_35048_35096_34584_34504_35167_35318_26350_35145_35301 PSTM=1638079765 BDSVRTM=0 BD_HOME=1

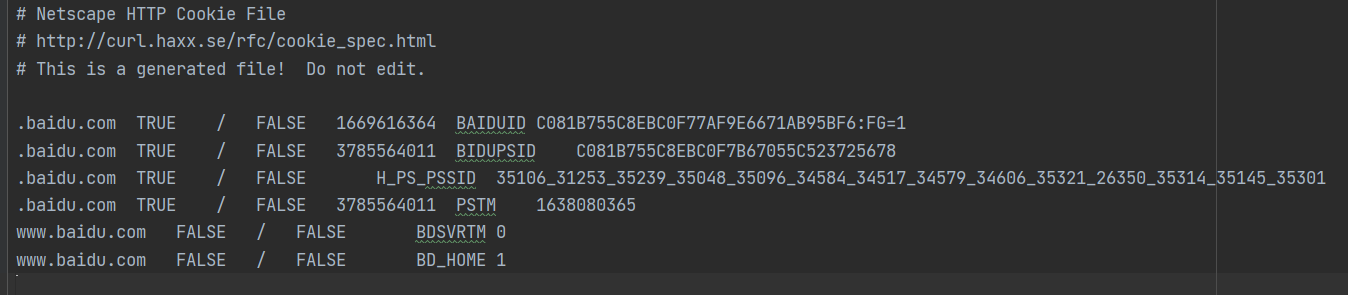

Cookies are actually saved as text

import http.cookiejar,urllib.request

filename = "cookies.txt"

cookie = http.cookiejar.MozillaCookieJar(filename)

handler = urllib.request.HTTPCookieProcessor(cookie)

opener = urllib.request.build_opener(handler)

response = opener.open("http://www.baidu.com")

cookie.save(ignore_discard=True,ignore_expires=True)

Cookie jar needs to be replaced by Mozilla cookie jar, which will be used when generating files. The subclass of cookie jar can be used to display Cookies and file related events, such as reading and saving Cookies, and can save Cookies into the cookie format of Mozilla browser. The execution found that a cookie.txt file was generated, the contents of which are as follows

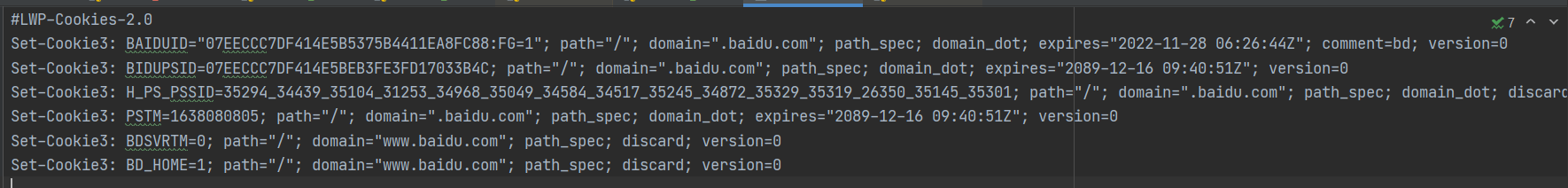

LWPCookieJar can also read and save Cookies, but the saved format is different from Mozilla cookiejar. It will save Cookies in libwww Perl (LWP) format.

filename = "cookies.txt"

cookie = http.cookiejar.LWPCookieJar(filename)

handler = urllib.request.HTTPCookieProcessor(cookie)

opener = urllib.request.build_opener(handler)

response = opener.open("http://www.baidu.com")

cookie.save(ignore_discard=True,ignore_expires=True)

Cookie format file content

Read Cookies file

import http.cookiejar,urllib.request

cookie = http.cookiejar.LWPCookieJar()

cookie.load("cookies.txt",ignore_expires=True,ignore_discard=True)

handler = urllib.request.HTTPCookieProcessor(cookie)

opener = urllib.request.build_opener(handler)

response = opener.open("http://www.baidu.com")

print(response.read().decode("utf-8"))

Here, the load() method is called to read the local cookie file and obtain the contents of the Cookies. However, on the premise, we first generate a file in LWPCookies format and save it as a file, and then use the same method to build the Handler and Opener after reading the Cookies