Operation requirements:

- Take out all the news of a news list page and wrap it into a function.

- Get the total number of news articles and calculate the total number of pages.

- Get all news details for all news list pages.

- Find a topic that you are interested in, do data crawling and word segmentation analysis. Can't be the same as other students.

The first three requirement codes are as follows:

import requests from bs4 import BeautifulSoup from datetime import datetime import re # Setting local is to process the time containing Chinese format (% Y% m% d) with an error: # UnicodeEncodeError: 'locale' codec can't encode character '\u5e74' # import locale # locale.setlocale(locale.LC_CTYPE, 'chinese') def crawlOnePageSchoolNews(page_url): res = requests.get(page_url) res.encoding = 'UTF-8' soup = BeautifulSoup(res.text, 'html.parser') news = soup.select('.news-list > li') for n in news: # print(n) print('**' * 5 + 'List page information' + '**' * 10) print('News link:' + n.a.attrs['href']) print('Headline:' + n.select('.news-list-title')[0].text) print('News Description:' + n.a.select('.news-list-description')[0].text) print('News time:' + n.a.select('.news-list-info > span')[0].text) print('Source:' + n.a.select('.news-list-info > span')[1].text) getNewDetail(n.a.attrs['href']) def getNewDetail(href): print('**' * 5 + 'Details page information' + '**' * 10) res1 = requests.get(href) res1.encoding = 'UTF-8' soup1 = BeautifulSoup(res1.text, 'html.parser') if soup1.select('.show-info'): # Prevent the previous web page from not showing_info news_info = soup1.select('.show-info')[0].text else:return info_list = ['source', 'Release time', 'click', 'author', 'To examine', 'Photography'] # Fields to be resolved news_info_set = set(news_info.split('\xa0')) - {' ', ''} # The & nbsp; in the web page will be resolved to \ xa0 after it is obtained, so you can use \ xa0 as the separator # Cycle print article information for n_i in news_info_set: for info_flag in info_list: if n_i.find(info_flag) != -1: # Because the colon of time uses the English character, it needs to be judged if info_flag == 'Release time': # Convert the publishing time string to datetime format for later storage in the database release_time = datetime.strptime(n_i[n_i.index(':') + 1:], '%Y-%m-%d %H:%M:%S ') print(info_flag + ':', release_time) elif info_flag == 'click': # The number of clicks is written using js after accessing php through the article id, so it is handled separately here getClickCount(href) else: print(info_flag + ':' + n_i[n_i.index(': ') + 1:]) news_content = soup1.select('#content')[0].text print(news_content) # Article content print('--' * 40) def getClickCount(news_url): # http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80 # The above link gives the URL of the number of visits for the article page click_num_url = 'http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80' # Get article id from regular expression click_num_url = click_num_url.format(re.search('_(.*)/(.*).html', news_url).group(2)) res2 = requests.get(click_num_url) res2.encoding = 'UTF-8' # $('#todaydowns').html('5');$('#weekdowns').html('106');$('#monthdowns').html('129');$('#hits').html('399'); # Above is the content of response # Using regular expression to get the number of hits # Res2. Text [res2. Text. Rindex ("(') + 2: res2. Text. Rindex ("'))], not in a regular way print('click:' + re.search("\$\('#hits'\).html\('(\d*)'\)", res2.text).group(1)) crawlOnePageSchoolNews('http://news.gzcc.cn/html/xiaoyuanxinwen/') pageURL = 'http://news.gzcc.cn/html/xiaoyuanxinwen/{}.html' res = requests.get('http://news.gzcc.cn/html/xiaoyuanxinwen/') res.encoding = 'UTF-8' soup = BeautifulSoup(res.text, 'html.parser') newsSum = int(re.search('(\d*)strip', soup.select('a.a1')[0].text).group(1)) if newsSum % 10: pageSum = int(newsSum/10) + 1 else: pageSum = int(newsSum/10) for i in range(2, pageSum+1): crawlOnePageSchoolNews(pageURL.format(i))

Screenshot of results:

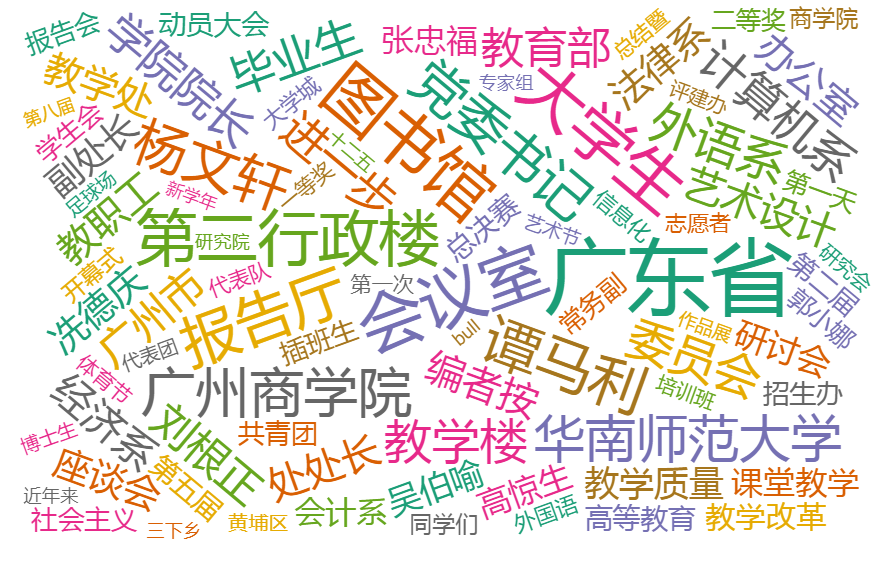

In the fourth requirement, I crawled through all the news descriptions on campus, analyzed what the school had done in recent years, where it had done it, what it emphasized, and counted the word cloud.

The main codes are as follows:

import requests from bs4 import BeautifulSoup import re import jieba editors = [] descriptions = '' def crawlOnePageSchoolNews(page_url): global descriptions res0 = requests.get(page_url) res0.encoding = 'UTF-8' soup0 = BeautifulSoup(res0.text, 'html.parser') news = soup0.select('.news-list > li') for n in news: print('News Description:' + n.a.select('.news-list-description')[0].text) print('Source:' + n.a.select('.news-list-info > span')[1].text) descriptions = descriptions + ' ' + n.a.select('.news-list-description')[0].text editors.append(n.a.select('.news-list-info > span')[1].text.split(' ')[0]) crawlOnePageSchoolNews('http://news.gzcc.cn/html/xiaoyuanxinwen/') pageURL = 'http://news.gzcc.cn/html/xiaoyuanxinwen/{}.html' res = requests.get('http://news.gzcc.cn/html/xiaoyuanxinwen/') res.encoding = 'UTF-8' soup = BeautifulSoup(res.text, 'html.parser') newsSum = int(re.search('(\d*)strip', soup.select('a.a1')[0].text).group(1)) if newsSum % 10: pageSum = int(newsSum / 10) + 1 else: pageSum = int(newsSum / 10) for i in range(2, pageSum+1): crawlOnePageSchoolNews(pageURL.format(i)) with open('punctuation.txt', 'r', encoding='UTF-8') as punctuationFile: for punctuation in punctuationFile.readlines(): descriptions = descriptions.replace(punctuation[0], ' ') with open('meaningless.txt', 'r', encoding='UTF-8') as meaninglessFile: mLessSet = set(meaninglessFile.read().split('\n')) mLessSet.add(' ') # Load reserved words with open('reservedWord.txt', 'r', encoding='UTF-8') as reservedWordFile: reservedWordSet = set(reservedWordFile.read().split('\n')) for reservedWord in reservedWordSet: jieba.add_word(reservedWord) keywordList = list(jieba.cut(descriptions)) keywordSet = set(keywordList) - mLessSet # Remove meaningless words from word set keywordDict = {} # Count word frequency dictionary for word in keywordSet: keywordDict[word] = keywordList.count(word) # Sort word frequency keywordListSorted = list(keywordDict.items()) keywordListSorted.sort(key=lambda e: e[1], reverse=True) # Write all word frequencies to txt for word cloud analysis for topWordTup in keywordListSorted: print(topWordTup) with open('word.txt', 'a+', encoding='UTF-8') as wordFile: for i in range(0, topWordTup[1]): wordFile.write(topWordTup[0]+'\n')

Pass the result after the above processing https://wordsift.org/ The generated word cloud is as follows:

Some reserved words are not handled well, so some meaningless words are ignored selectively

The file above has been uploaded Here