Rate Limiting Algorithms

- Counter-based rate limiting

  - Fixed window

  - Sliding window

- Bucket-based rate limiting

  - Token bucket

  - Leaky bucket

Counter

Counter-based rate limiting can be divided into:

- Fixed window

- Sliding window

Fixed window

A fixed window counter is simple and clear: it limits the number of requests per unit of time. For example, with a limit of 10 QPS, counting starts when the first request arrives. Before each increment the counter is checked, and once it has reached 10, further requests are rejected. The counting period is 1 second, and the counter is cleared when that second is over.

The following implements a distributed counter-based rate limiter with Redis. The Lua script, which has been used in production, is as follows:

local key = KEYS[1]

local limit = tonumber(ARGV[1])

local refreshInterval = tonumber(ARGV[2])

local currentLimit = tonumber(redis.call('get', key) or '0')

if currentLimit + 1 > limit then

return -1;

else

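local newCount = redis.call('INCRBY', key, 1)

-- Start the window only when the counter is created; calling EXPIRE on every request would keep extending the window.

if newCount == 1 then

redis.call('EXPIRE', key, refreshInterval)

end

return limit - currentLimit - 1

end

One obvious disadvantage is that the fixed window counter cannot handle spike traffic. For example, with a limit of 10 QPS, if 10 requests arrive within the first 1 ms, every request in the remaining 999 ms is rejected.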
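To make the behavior concrete, here is a minimal single-process sketch of a fixed window counter in Java. It is not the Redis script above: the class name `FixedWindowLimiter` and the injectable millisecond clock are assumptions made for illustration, and the window is assumed to start when the limiter is created.

```java
import java.util.function.LongSupplier;

/** Minimal in-memory fixed window counter (illustrative sketch only). */
class FixedWindowLimiter {
    private final int limit;           // maximum requests per window
    private final long windowMillis;   // window length in milliseconds
    private final LongSupplier clock;  // hypothetical injectable clock, in milliseconds
    private long windowStart;
    private int count;

    FixedWindowLimiter(int limit, long windowMillis, LongSupplier clock) {
        this.limit = limit;
        this.windowMillis = windowMillis;
        this.clock = clock;
        this.windowStart = clock.getAsLong();
    }

    synchronized boolean tryAcquire() {
        long now = clock.getAsLong();
        if (now - windowStart >= windowMillis) {
            // The window has elapsed: start a new one and clear the counter.
            windowStart = now;
            count = 0;
        }
        if (count >= limit) {
            return false; // limit reached, reject until the window ends
        }
        count++;
        return true;
    }
}
```

With a limit of 10 per 1000 ms, a burst of 15 calls at the start of the window admits only the first 10, and nothing else is admitted until the window rolls over, which is exactly the spike problem described above.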

Sliding window

To address the problem of fixed windows, the sliding window approach divides the window into smaller sub-windows and counts requests per sub-window. For example, a one-minute window is cut into 60 one-second sub-windows, and the range of sub-windows being counted slides forward as time passes.

Although the sliding window algorithm ensures that the number of requests in any window does not exceed the limit, it still cannot prevent requests from being overly concentrated at a finer time granularity.

To deal with this, there are several improved versions of the time window algorithm, such as:

Multi-level rate limiting: several rules can be set for the same interface. In addition to no more than 100 requests per second, we can also allow no more than 20 requests per 100 ms (the code below sets the sub-window limit to twice the average). With both rules enforced at the same time, traffic is smoother.

A simple implementation is as follows:

import java.util.LinkedList;

public class SlidingWindowRateLimiter {

// Small window list

LinkedList<Room> linkedList = null;

long stepInterval = 0;

long subWindowCount = 10;

long stepLimitCount = 0;

int countLimit = 0;

int count = 0;

public SlidingWindowRateLimiter(int countLimit, int interval){

// Time spacing of each small window

this.stepInterval = interval * 1000/ subWindowCount;

// Total Request Limitation

this.countLimit = countLimit;

// The number of requests per small window is set to twice the average.

this.stepLimitCount = countLimit / subWindowCount * 2;

// Time window start time

long start = System.currentTimeMillis();

// Initialization of contiguous linked lists of small windows

initWindow(start);

}

Room getAndRefreshWindows(long requestTime){

Room firstRoom = linkedList.getFirst();

Room lastRoom = linkedList.getLast();

// The request time falls inside the current main window (start inclusive, end exclusive)

if(firstRoom.getStartTime() <= requestTime && requestTime < lastRoom.getEndTime()){

long distanceFromFirst = requestTime - firstRoom.getStartTime();

int num = (int) (distanceFromFirst/stepInterval);

return linkedList.get(num);

}else{

long distanceFromLast = requestTime - lastRoom.getEndTime();

int num = (int)(distanceFromLast/stepInterval);

// The request is a full main window or more past the end: rebuild all sub-windows

if(num >= subWindowCount){

initWindow(requestTime);

return linkedList.getFirst();

}else{

moveWindow(num+1);

return linkedList.getLast();

}

}

}

public boolean acquire(){

synchronized (mutex()) {

Room room = getAndRefreshWindows(System.currentTimeMillis());

int subCount = room.getCount();

if(subCount + 1 <= stepLimitCount && count + 1 <= countLimit){

room.increase();

count ++;

return true;

}

return false;

}

}

/**

* Initialization window

* @param start

*/

private void initWindow(long start){

linkedList = new LinkedList<Room>();

for (int i = 0; i < subWindowCount; i++) {

linkedList.add(new Room(start, start += stepInterval));

}

// Total Number Zero

count = 0;

}

/**

* move windows

* @param stepNum

*/

private void moveWindow(int stepNum){

for (int i = 0; i < stepNum; i++) {

Room removeRoom = linkedList.removeFirst();

count = count - removeRoom.count;

}

Room lastRoom = linkedList.getLast();

long start = lastRoom.endTime;

for (int i = 0; i < stepNum; i++) {

linkedList.add(new Room(start, start += stepInterval));

}

}

public static void main(String[] args) throws InterruptedException {

SlidingWindowRateLimiter slidingWindowRateLimiter = new SlidingWindowRateLimiter(20, 5);

for (int i = 0; i < 26; i++) {

System.out.println(slidingWindowRateLimiter.acquire());

Thread.sleep(300);

}

}

class Room{

Room(long startTime, long endTime) {

this.startTime = startTime;

this.endTime = endTime;

this.count = 0;

}

private long startTime;

private long endTime;

private int count;

long getStartTime() {

return startTime;

}

long getEndTime() {

return endTime;

}

int getCount() {

return count;

}

int increase(){

this.count++;

return this.count;

}

}

private volatile Object mutexDoNotUseDirectly;

private Object mutex() {

Object mutex = mutexDoNotUseDirectly;

if (mutex == null) {

synchronized (this) {

mutex = mutexDoNotUseDirectly;

if (mutex == null) {

mutexDoNotUseDirectly = mutex = new Object();

}

}

}

return mutex;

}

}

The implementation above relies on a linked list: the window slides by removing sub-windows from the head and appending new ones at the tail. The slide is performed when a request arrives, so no separate thread is needed to move the window. As described above, requests are limited in two dimensions: the total number of requests in the whole window, and the number of requests in each sub-window.

Bucket

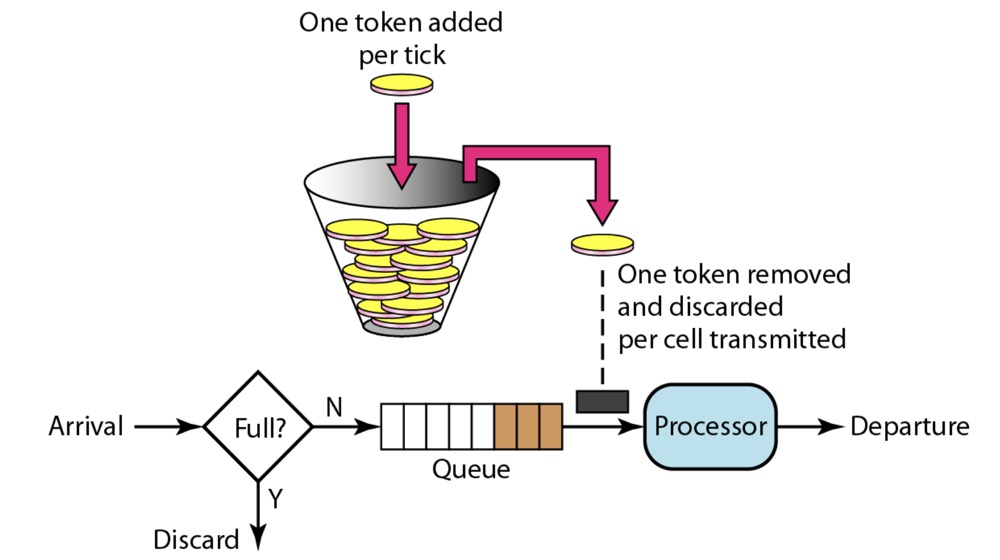

Token Bucket

The principle is as follows:

The following rate limiters are currently implemented with token buckets:

- Spring Cloud Gateway RateLimiter

- Guava RateLimiter

- Bucket4j

Spring Cloud Gateway RateLimiter

Spring Cloud developed its own gateway after the release of Zuul 2 was repeatedly delayed, and a rate limiter is implemented inside it.

Implementation Principle of Spring Cloud Gateway RedisRateLimiter

Because microservice architectures inevitably involve multi-instance deployment, the default implementation uses Redis as the storage backend, so we can look directly at how the Lua script is implemented.

local tokens_key = KEYS[1]

local timestamp_key = KEYS[2]

--redis.log(redis.LOG_WARNING, "tokens_key " .. tokens_key)

-- Token generation rate

local rate = tonumber(ARGV[1])

-- Bucket capacity

local capacity = tonumber(ARGV[2])

-- Time of this request

local now = tonumber(ARGV[3])

-- Number of tokens requested, currently fixed at 1

local requested = tonumber(ARGV[4])

-- Time needed to fill the bucket

local fill_time = capacity/rate

-- Key expiration time

local ttl = math.floor(fill_time*2)

--redis.log(redis.LOG_WARNING, "rate " .. ARGV[1])

--redis.log(redis.LOG_WARNING, "capacity " .. ARGV[2])

--redis.log(redis.LOG_WARNING, "now " .. ARGV[3])

--redis.log(redis.LOG_WARNING, "requested " .. ARGV[4])

--redis.log(redis.LOG_WARNING, "filltime " .. fill_time)

--redis.log(redis.LOG_WARNING, "ttl " .. ttl)

-- The tokens left by the previous request are stored in Redis

local last_tokens = tonumber(redis.call("get", tokens_key))

-- If nothing is stored yet, treat the bucket as full

if last_tokens == nil then

last_tokens = capacity

end

--redis.log(redis.LOG_WARNING, "last_tokens " .. last_tokens)

-- Time of the previous request

local last_refreshed = tonumber(redis.call("get", timestamp_key))

if last_refreshed == nil then

last_refreshed = 0

end

--redis.log(redis.LOG_WARNING, "last_refreshed " .. last_refreshed)

-- Time elapsed since the previous request

local delta = math.max(0, now-last_refreshed)

-- Tokens available now: the tokens left last time plus those generated during the elapsed time, capped at the bucket capacity

local filled_tokens = math.min(capacity, last_tokens+(delta*rate))

-- Compare the available tokens with the requested tokens

local allowed = filled_tokens >= requested

local new_tokens = filled_tokens

local allowed_num = 0

if allowed then

-- Consume the tokens

new_tokens = filled_tokens - requested

allowed_num = 1

end

--redis.log(redis.LOG_WARNING, "delta " .. delta)

--redis.log(redis.LOG_WARNING, "filled_tokens " .. filled_tokens)

--redis.log(redis.LOG_WARNING, "allowed_num " .. allowed_num)

--redis.log(redis.LOG_WARNING, "new_tokens " .. new_tokens)

-- Store the remaining tokens and the time of this request

redis.call("setex", tokens_key, ttl, new_tokens)

redis.call("setex", timestamp_key, ttl, now)

return { allowed_num, new_tokens }

Summary of Spring Cloud Gateway RedisRateLimiter

1. When a token is requested, the bucket is first refilled with the tokens generated in the interval between the previous request and now, and then the token is handed out.

2. If there are not enough tokens, the request does not wait; it is rejected immediately.

3. Once the stored tokens are used up, requests are in effect limited by the minimum interval between them. For example, at 100 QPS one token is generated every 10 ms, so if two requests arrive less than 10 ms apart, the later one is rejected. How closely this holds depends on the resolution of the now timestamp passed to the script.
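The refill-on-request logic summarized above can be sketched in plain Java. This is an in-memory approximation for a single process, not the gateway's actual code: the class name `SimpleTokenBucket` is hypothetical, and the timestamp is assumed to be passed in by the caller in seconds, mirroring `ARGV[3]` in the script.

```java
/** In-memory sketch of the refill-on-request token bucket (no Redis, single process). */
class SimpleTokenBucket {
    private final double rate;      // tokens generated per second
    private final double capacity;  // bucket size
    private double tokens;          // tokens left after the previous request
    private long lastRefreshed;     // time of the previous request, in seconds

    SimpleTokenBucket(double rate, double capacity) {
        this.rate = rate;
        this.capacity = capacity;
        this.tokens = capacity;     // like the script, start with a full bucket
        this.lastRefreshed = 0L;
    }

    /** nowSeconds plays the role of ARGV[3]; requested plays the role of ARGV[4]. */
    synchronized boolean tryAcquire(long nowSeconds, int requested) {
        long delta = Math.max(0L, nowSeconds - lastRefreshed);
        // Refill lazily: tokens left last time plus those generated since, capped at capacity.
        double filled = Math.min(capacity, tokens + delta * rate);
        boolean allowed = filled >= requested;
        tokens = allowed ? filled - requested : filled;
        lastRefreshed = nowSeconds;
        return allowed; // no waiting: the caller is rejected when tokens are insufficient
    }
}
```

Note that no background thread refills the bucket; the token count is recomputed only when a request arrives, which is the same design choice the script makes.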

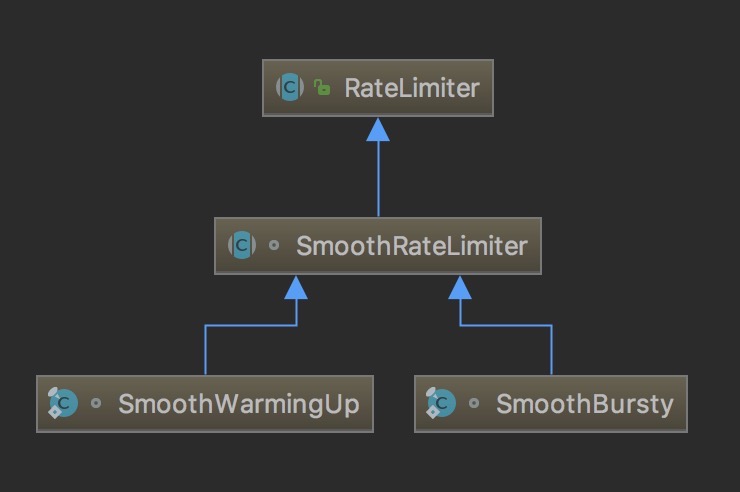

Guava RateLimiter

A token bucket current limiter implemented by Guava.

Guava RateLimiter Class Relations

Using Guava RateLimiter

RateLimiter is a tool that distributes permits at a configured rate. Each call to acquire() blocks until a permit is available, and a permit can be used only once. RateLimiter is thread-safe and limits the total request rate of all threads, but it does not guarantee fairness.

Rate limiters are often used to restrict the rate at which a physical or logical resource is accessed. This is in contrast to java.util.concurrent.Semaphore, which restricts the number of concurrent accesses instead of the rate (the two are closely related: see Little's law).

A RateLimiter is defined primarily by the rate at which permits are issued. Absent additional configuration, permits are distributed at a fixed rate, defined in terms of permits per second. Permits are distributed smoothly, with the delay between individual permits adjusted to maintain the configured rate. (SmoothBursty)

A RateLimiter can also be configured with a warm-up period, during which the permits issued each second increase steadily until the stable rate is reached. (SmoothWarmingUp)

One example: We need to execute a task list, but we can't submit more than two tasks per second.

final RateLimiter rateLimiter = RateLimiter.create(2.0); // rate is "2 permits per second"

void submitTasks(List<Runnable> tasks, Executor executor) {

for (Runnable task : tasks) {

rateLimiter.acquire(); // may wait

executor.execute(task);

}

}

Another example: we produce a stream of data and want to cap it at 5 KB per second. This can be done by requiring one permit per byte and specifying a rate of 5000 permits per second.

final RateLimiter rateLimiter = RateLimiter.create(5000.0); // rate = 5000 permits per second

void submitPacket(byte[] packet) {

rateLimiter.acquire(packet.length);

networkService.send(packet);

}

It is important to note that the number of permits requested never affects the throttling of the request itself, only that of the next request. If an expensive task arrives at an idle RateLimiter, it is granted immediately, but the next request is throttled longer and so pays for the cost of the expensive task.

How and why Guava RateLimiter is designed

The primary feature of a RateLimiter is its "stable rate", the maximum rate it should allow under normal conditions. This is enforced by throttling incoming requests as needed, that is, by computing the appropriate throttling time for a request and making the calling thread wait that long.

The simplest way to maintain a rate of QPS is to keep the timestamp of the last granted request and to ensure that 1/QPS seconds have elapsed since then. For example, for a rate of QPS = 5 (5 tokens per second), if we ensure that a request is not granted earlier than 200 ms after the previous one, we achieve the intended rate. If a request arrives only 100 ms after the last granted request, it has to wait another 100 ms. At this rate, serving 15 fresh permits (that is, a call to acquire(15)) naturally takes 3 seconds.
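The naive "remember only the last granted request" approach can be sketched as follows. This is one possible reading of the paragraph above, not Guava code: the class `LastGrantLimiter` is hypothetical, time is passed in explicitly in milliseconds, and a request for n permits is assumed to require n intervals to have elapsed since the last grant.

```java
/** Sketch of a limiter that remembers only the time of the last granted request. */
class LastGrantLimiter {
    private final long intervalMillis;  // 1/QPS, in milliseconds
    private long lastGrantMillis;       // time at which the last request was granted
    private boolean hasGranted = false;

    LastGrantLimiter(int qps) {
        this.intervalMillis = 1000L / qps;
    }

    /**
     * Reserves `permits` for a request arriving at nowMillis and returns
     * how many milliseconds the caller has to wait before proceeding.
     */
    synchronized long reserve(long nowMillis, int permits) {
        long wait = 0L;
        if (hasGranted) {
            long earliest = lastGrantMillis + permits * intervalMillis;
            wait = Math.max(0L, earliest - nowMillis);
        }
        hasGranted = true;
        lastGrantMillis = nowMillis + wait;
        return wait;
    }
}
```

At QPS = 5, a request arriving 100 ms after the previous grant waits 100 ms, and an acquire(15) issued right after a grant waits 3000 ms, matching the numbers in the text. The sketch keeps no record of idle time, which is the weakness the following paragraphs address.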

It is important to realize that such a RateLimiter has a very shallow memory of the past: it remembers only the last request. What if the RateLimiter was unused for a long time and then a request arrived and was granted immediately? This RateLimiter would immediately forget the period of underutilization that has just passed. Depending on the real-world consequences of not using the expected rate, this can result in either underutilization or overflow. Past underutilization can mean that excess resources are available, so the RateLimiter should speed up for a while to take advantage of them. This matters when the rate is applied to networking (bandwidth limiting), where past underutilization typically translates into "almost empty buffers" that can be filled immediately.

On the other hand, past underutilization can also mean that "the server has become less ready to handle future requests": its caches go stale, and requests become more likely to trigger expensive operations (a more extreme case is a server that has just started and is mostly busy getting itself up to speed).

To deal with such scenarios, an extra dimension is added: past underutilization is modeled by the storedPermits field. The field is 0 when there is no underutilization, and it grows up to maxStoredPermits as underutilization accumulates.

Therefore, the permits requested by a call to acquire(permits) are served from two sources:

- 1. Stored permits (if available)

- 2. Fresh permits (for any remaining permits)

Here is an example of how this works. Suppose the RateLimiter generates one token per second; for every second that passes without the RateLimiter being used, storedPermits increases by 1. Say the RateLimiter is unused for 10 seconds (that is, a request was expected at time X, but it actually arrives at X + 10 seconds; this relates to the point made in the previous paragraphs), so storedPermits becomes 10 (assuming maxStoredPermits >= 10). At that moment an acquire(3) request arrives. It is served from storedPermits, which drops to 7 (how this translates into throttling time is discussed later). Immediately afterwards an acquire(10) request arrives. It is served partly from the remaining 7 stored permits and partly from 3 fresh permits produced by the RateLimiter.

We already know how long it takes to serve 3 fresh permits: at "one token per second" it takes 3 seconds. But what does it mean to serve 7 stored permits? As explained above, there is no single answer. If the main concern is underutilization, stored permits should be handed out faster than fresh ones, because underutilization means idle resources. If the main concern is overflow, stored permits can be handed out more slowly than fresh ones. So we need a function that converts storedPermits into throttling time.

The storedPermitsToWaitTime(double storedPermits, double permitsToTake) method plays this role. The underlying model is a continuous function that maps storedPermits (from 0 to maxStoredPermits) to the 1/rate (the interval) that is in effect at that number of stored permits. Stored permits are earned with idle time, so storedPermits essentially measures unused time. Since rate = permits/time, we have 1/rate = time/permits, and multiplying 1/rate by permits gives time. Integrating this function over the requested number of permits therefore gives the minimum interval before subsequent requests.

An example of storedPermitsToWaitTime: if storedPermits == 10 and we need 3 permits, we take them from storedPermits, which drops to 7. The throttling time is computed by storedPermitsToWaitTime(storedPermits = 10, permitsToTake = 3), which evaluates the integral of the function from 7 to 10.

Using integrals guarantees that a single acquire(3) has the same effect as {acquire(1), acquire(1), acquire(1)} or {acquire(2), acquire(1)}, because whatever the function is, its integral over [7, 10] equals the sum of its integrals over [7, 8], [8, 9] and [9, 10]. This guarantees that requests of different weights (different numbers of permits) are handled correctly regardless of the function, so the function can be adjusted freely (the only requirement, obviously, is that its integral can be computed). Note that if the function is a horizontal line at exactly 1/QPS, it has no effect: stored permits cost exactly as much as fresh ones. This trick is used later. If the function lies below that horizontal line, that is, f(x) < 1/rate, the area (and therefore the time) is reduced, so the same number of permits is served with less throttling than at the normal rate and the RateLimiter is faster after a period of idleness. Conversely, if the function lies above the horizontal line, the area is increased and stored permits cost more than fresh ones, so the RateLimiter is slower after a period of idleness.
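The additivity argument can be checked numerically. The sketch below assumes a linear throttling function f(x) = base + slope * x purely for illustration (Guava's actual functions differ); `ThrottleIntegral` and its parameters are hypothetical names.

```java
/** Worked example: the integral over [7, 10] equals the sum over [7, 8], [8, 9], [9, 10]. */
class ThrottleIntegral {
    /**
     * Area under the linear function f(x) = base + slope * x between
     * lo and hi stored permits, i.e. the throttling time for taking
     * (hi - lo) permits when hi permits are stored.
     */
    static double waitTime(double base, double slope, double lo, double hi) {
        return base * (hi - lo) + slope * (hi * hi - lo * lo) / 2.0;
    }
}
```

For example, with base = 1.0 and slope = 0.5, `waitTime(1.0, 0.5, 7, 10)` is 15.75, and the three unit-wide pieces 4.75, 5.25 and 5.75 add up to the same value, so one acquire(3) costs the same as three acquire(1) calls.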

Last but not least, consider a RateLimiter with a rate of 1 permit per second that is currently unused, and an expensive acquire(100) request arrives. It would make no sense to wait 100 seconds and only then start the actual task. Why wait without doing anything? A better approach is to grant the request right away (as if it were an acquire(1) request) and postpone subsequent requests as needed.

In this version, the task is allowed to start immediately and future requests are postponed by 100 seconds, so work gets done in the meantime instead of idling.

This leads to an important conclusion: the RateLimiter does not need to remember the time of the last request, only the time at which the next request is expected to be granted. Because this value is always maintained, we can tell immediately (see tryAcquire(timeout)) whether a given timeout is long enough to reach the time at which the next request can be granted. This notion also defines what "an idle RateLimiter" means: when the expected time of the next request is already in the past, the difference (now - past) is the time the RateLimiter was unused, and that time is converted into storedPermits. (storedPermits is increased by the number of permits that would have been produced during the idle time.) So, if rate = 1 permit/s and each request arrives exactly one second after the previous one, storedPermits never increases; it only increases when a request arrives later than one second after the previous one.
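The "remember the next free time" idea and the storedPermits bookkeeping can be sketched together. This is a simplified illustration in the spirit of the bursty variant (stored permits cost no wait), not Guava's code: the class `NextFreeTicketLimiter`, the explicit `nowMillis` argument and the millisecond units are assumptions.

```java
/** Sketch: idle time becomes stored permits; the cost of a request is paid by the next one. */
class NextFreeTicketLimiter {
    private final double intervalMillis;    // time needed to produce one fresh permit
    private final double maxStoredPermits;  // cap on permits saved up while idle
    private double storedPermits = 0.0;
    private long nextFreeMillis;            // time at which the next request can be granted

    NextFreeTicketLimiter(double permitsPerSecond, double maxStoredPermits, long startMillis) {
        this.intervalMillis = 1000.0 / permitsPerSecond;
        this.maxStoredPermits = maxStoredPermits;
        this.nextFreeMillis = startMillis;
    }

    /** Reserves permits at nowMillis and returns how long this caller has to wait. */
    synchronized long reserve(int permits, long nowMillis) {
        if (nowMillis > nextFreeMillis) {
            // The limiter was idle: convert the idle time into stored permits.
            double idlePermits = (nowMillis - nextFreeMillis) / intervalMillis;
            storedPermits = Math.min(maxStoredPermits, storedPermits + idlePermits);
            nextFreeMillis = nowMillis;
        }
        long grantedAt = nextFreeMillis;
        double fromStore = Math.min(permits, storedPermits);
        double fresh = permits - fromStore;
        storedPermits -= fromStore;
        // The time needed for the fresh permits is charged to the next caller.
        nextFreeMillis += (long) (fresh * intervalMillis);
        return Math.max(grantedAt - nowMillis, 0L);
    }

    double getStoredPermits() {
        return storedPermits;
    }
}
```

Replaying the earlier example at 1 permit per second: after 10 idle seconds, acquire(3) is served from storage and leaves 7 stored permits; the following acquire(10) is also granted immediately, using 7 stored and 3 fresh permits; the request after that is the one that waits 3 seconds.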

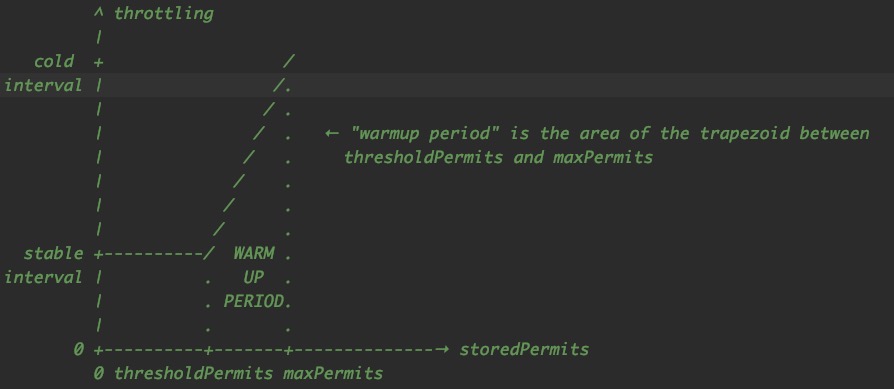

SmoothWarmingUp implementation principle: as the name implies, the goal here is a RateLimiter with a warm-up capability. The author also drew a figure of the throttling function in the source comments.

Before going into the implementation details, keep a few basic principles in mind:

- The state of the RateLimiter (storedPermits) is a vertical line in the figure, that is, a position on the X axis; different values of storedPermits correspond to different throttling times on the Y axis.

- While the RateLimiter is idle, storedPermits moves to the right, up to maxPermits.

- If there are stored permits, they are used first, so while the RateLimiter is in use, storedPermits moves to the left, down to 0.

- While idle, we move to the right at a constant rate. The rate is chosen as maxPermits / warmupPeriod, which ensures that going from 0 to maxPermits takes exactly the warm-up period. (This is controlled by the coolDownIntervalMicros method.)

- While in use, as explained in the class comment above, spending K stored permits takes as long as the integral of the function between X permits and X - K permits.

In short, the throttling time needed to move K permits to the left equals the area under the function over a width of K. Assuming the RateLimiter's storedPermits is saturated, going from maxPermits to thresholdPermits takes warmupPeriod, and going from thresholdPermits to 0 takes warmupPeriod/2 (this preserves the behavior of the previous implementation, in which coldFactor was hard-coded as 3).

The formulas for calculating thresholdPermits and maxPermits are as follows:

The throttling time spent going from thresholdPermits to 0 is equal to the integral of the function from 0 to thresholdPermits, that is, thresholdPermits * stableInterval. It is also equal to warmupPeriod/2.

thresholdPermits = 0.5 * warmupPeriod / stableInterval

The time spent going from maxPermits to thresholdPermits is the integral of the function from thresholdPermits to maxPermits, which is the area of the trapezoidal part. It is equal to 0.5 * (stableInterval + coldInterval) * (maxPermits - thresholdPermits), which in turn equals warmupPeriod.

maxPermits = thresholdPermits + 2*warmupPeriod/(stableInterval+coldInterval)
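A quick worked example helps check the two formulas. The numbers are assumptions chosen for easy arithmetic: a rate of 2 permits per second (stableInterval = 0.5 s), coldFactor = 3 (coldInterval = 1.5 s) and warmupPeriod = 4 s. The helper class `WarmupMath` is hypothetical.

```java
/** Worked arithmetic for thresholdPermits and maxPermits (all times in seconds). */
class WarmupMath {
    static double thresholdPermits(double warmupPeriod, double stableInterval) {
        return 0.5 * warmupPeriod / stableInterval;
    }

    static double maxPermits(double warmupPeriod, double stableInterval, double coldInterval) {
        return thresholdPermits(warmupPeriod, stableInterval)
                + 2.0 * warmupPeriod / (stableInterval + coldInterval);
    }
}
```

With these inputs, thresholdPermits = 0.5 * 4 / 0.5 = 4 and maxPermits = 4 + 2 * 4 / (0.5 + 1.5) = 8. The rectangle from 0 to thresholdPermits has area 4 * 0.5 = 2 = warmupPeriod/2, and the trapezoid from thresholdPermits to maxPermits has area 0.5 * (0.5 + 1.5) * (8 - 4) = 4 = warmupPeriod, as required.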

Guava RateLimiter source code

The source code walkthrough starts with SmoothBursty, beginning with the creation of a RateLimiter:

public static RateLimiter create(double permitsPerSecond) {

return create(permitsPerSecond, SleepingStopwatch.createFromSystemTimer());

}

@VisibleForTesting

static RateLimiter create(double permitsPerSecond, SleepingStopwatch stopwatch) {

// maxBurstSeconds is the bucket size expressed in seconds: the bucket can hold as many tokens as are generated in that many seconds.

RateLimiter rateLimiter = new SmoothBursty(stopwatch, 1.0 /* maxBurstSeconds */);

rateLimiter.setRate(permitsPerSecond);

return rateLimiter;

}

As we can see, callers cannot customize the bucket size of SmoothBursty, so whatever rate we create it with, the bucket size is always rate * 1. Some people therefore resort to reflection to change the bucket size: https://github.com/vipshop/vjtools/commit/9eacb861960df0c41b2323ce14da037a9fdc0629

setRate method:

public final void setRate(double permitsPerSecond) {

checkArgument(

permitsPerSecond > 0.0 && !Double.isNaN(permitsPerSecond), "rate must be positive");

// Global synchronization is required

synchronized (mutex()) {

doSetRate(permitsPerSecond, stopwatch.readMicros());

}

}

private volatile Object mutexDoNotUseDirectly;

// Lazily creates the lock object with double-checked locking, so the same object is always used

private Object mutex() {

Object mutex = mutexDoNotUseDirectly;

if (mutex == null) {

synchronized (this) {

mutex = mutexDoNotUseDirectly;

if (mutex == null) {

mutexDoNotUseDirectly = mutex = new Object();

}

}

}

return mutex;

}

The volatile field mutexDoNotUseDirectly and double-checked locking ensure that the lock object is created only once, so every call to mutex() returns the same object to lock on. The same lock is needed later when acquiring tokens.

doSetRate is implemented in the subclass SmoothRateLimiter:

// Currently stored tokens

double storedPermits;

// Maximum tokens that can be stored

double maxPermits;

// Fixed interval between two acquisitions of tokens

double stableIntervalMicros;

// The time at which the next request can be granted. It is pushed back after each request, and a large request pushes it back further than a small one.

// This is how pre-consumption works: the cost of the previous request is paid by the next one

private long nextFreeTicketMicros = 0L;

final void doSetRate(double permitsPerSecond, long nowMicros) {

resync(nowMicros);

// Calculate the interval between requests

double stableIntervalMicros = SECONDS.toMicros(1L) / permitsPerSecond;

this.stableIntervalMicros = stableIntervalMicros;

doSetRate(permitsPerSecond, stableIntervalMicros);

}

// Update storedPermits and nextFreeTicketMicros

void resync(long nowMicros) {

// If the current time is later than nextFreeTicketMicros, the limiter has been idle and this request does not have to wait; otherwise no tokens are added.

if (nowMicros > nextFreeTicketMicros) {

// Tokens generated during the idle time

double newPermits = (nowMicros - nextFreeTicketMicros) / coolDownIntervalMicros();

// Stored tokens cannot exceed maxPermits

storedPermits = min(maxPermits, storedPermits + newPermits);

// Modify nextFreeTicketMicros to the current time

nextFreeTicketMicros = nowMicros;

}

}

// Subclass implementation

abstract void doSetRate(double permitsPerSecond, double stableIntervalMicros);

// Returns the number of microseconds to wait for one new permit during cool-down. Implemented by subclasses

abstract double coolDownIntervalMicros();

Implementation of SmoothBursty:

static final class SmoothBursty extends SmoothRateLimiter {

/** The work (permits) of how many seconds can be saved up if this RateLimiter is unused? */

final double maxBurstSeconds;

SmoothBursty(SleepingStopwatch stopwatch, double maxBurstSeconds) {

super(stopwatch);

this.maxBurstSeconds = maxBurstSeconds;

}

@Override

void doSetRate(double permitsPerSecond, double stableIntervalMicros) {

double oldMaxPermits = this.maxPermits;

// Compute the bucket capacity

maxPermits = maxBurstSeconds * permitsPerSecond;

if (oldMaxPermits == Double.POSITIVE_INFINITY) {

// if we don't special-case this, we would get storedPermits == NaN, below

storedPermits = maxPermits;

} else {

// storedPermits starts at 0; when the rate is reset later, it is scaled by the ratio of the new maxPermits to the old maxPermits

storedPermits =

(oldMaxPermits == 0.0)

? 0.0 // initial state

: storedPermits * maxPermits / oldMaxPermits;

}

}

@Override

long storedPermitsToWaitTime(double storedPermits, double permitsToTake) {

return 0L;

}

// SmoothBursty has no cool-down period, so this always returns stableIntervalMicros.

@Override

double coolDownIntervalMicros() {

return stableIntervalMicros;

}

}

Next, look at how tokens are acquired:

public double acquire() {

return acquire(1);

}

public double acquire(int permits) {

long microsToWait = reserve(permits);

// Sleep for microsToWait to enforce the rate

stopwatch.sleepMicrosUninterruptibly(microsToWait);

return 1.0 * microsToWait / SECONDS.toMicros(1L);

}

final long reserve(int permits) {

checkPermits(permits);

synchronized (mutex()) {

return reserveAndGetWaitLength(permits, stopwatch.readMicros());

}

}

// Get the time to wait

final long reserveAndGetWaitLength(int permits, long nowMicros) {

long momentAvailable = reserveEarliestAvailable(permits, nowMicros);

return max(momentAvailable - nowMicros, 0);

}

// Implemented by the subclass SmoothRateLimiter

abstract long reserveEarliestAvailable(int permits, long nowMicros);

The reserveEarliestAvailable method updates the time at which the next request can be granted:

final long reserveEarliestAvailable(int requiredPermits, long nowMicros) {

// Each acquisition triggers resync

resync(nowMicros);

// The return value is the time at which this request can be granted

long returnValue = nextFreeTicketMicros;

// Take the smaller of the requested permits and the stored permits: the number of permits consumed from storage

double storedPermitsToSpend = min(requiredPermits, this.storedPermits);

// The remaining permits have to be freshly generated

double freshPermits = requiredPermits - storedPermitsToSpend;

// Wait time = time to consume the stored permits + time to generate the fresh permits

long waitMicros =

storedPermitsToWaitTime(this.storedPermits, storedPermitsToSpend)

+ (long) (freshPermits * stableIntervalMicros);

// Push nextFreeTicketMicros back by the wait time, so the wait caused by this request is paid by the next one. A large request is therefore granted immediately, but subsequent requests wait longer. The core idea is that every request fixes the time at which the next request can be granted.

this.nextFreeTicketMicros = LongMath.saturatedAdd(nextFreeTicketMicros, waitMicros);

// Update the stored permits

this.storedPermits -= storedPermitsToSpend;

return returnValue;

}

// Computes the throttling time for consuming stored permits; subclasses use this method to control how fast stored permits are handed out. SmoothBursty always returns 0, meaning that consuming stored permits requires no extra wait. The warm-up implementation behaves differently.

abstract long storedPermitsToWaitTime(double storedPermits, double permitsToTake);

Let's look at the method of requesting a token with a timeout:

public boolean tryAcquire(long timeout, TimeUnit unit) {

return tryAcquire(1, timeout, unit);

}

public boolean tryAcquire(int permits) {

return tryAcquire(permits, 0, MICROSECONDS);

}

public boolean tryAcquire() {

return tryAcquire(1, 0, MICROSECONDS);

}

public boolean tryAcquire(int permits, long timeout, TimeUnit unit) {

long timeoutMicros = max(unit.toMicros(timeout), 0);

checkPermits(permits);

long microsToWait;

synchronized (mutex()) {

long nowMicros = stopwatch.readMicros();

// Check whether the timeout is long enough to reach the time at which the next permit can be granted; if not, fail immediately.

if (!canAcquire(nowMicros, timeoutMicros)) {

return false;

} else {

// Get the time to wait

microsToWait = reserveAndGetWaitLength(permits, nowMicros);

}

}

// Wait until the permit is available

stopwatch.sleepMicrosUninterruptibly(microsToWait);

return true;

}

private boolean canAcquire(long nowMicros, long timeoutMicros) {

return queryEarliestAvailable(nowMicros) - timeoutMicros <= nowMicros;

}

With the above, we have a basic picture of a common way to implement a rate limiter. A custom strategy can be plugged in through doSetRate, storedPermitsToWaitTime and coolDownIntervalMicros.

SmoothBursty's strategy is:

- The bucket size is fixed through maxBurstSeconds: maxPermits = maxBurstSeconds * permitsPerSecond.

- Consuming stored tokens adds no waiting time.

- Tokens accumulate at the same rate as they are consumed at the stable rate.

Next comes the slightly more complex SmoothWarmingUp; the author even sketched a diagram in the source comments to explain it.

static final class SmoothWarmingUp extends SmoothRateLimiter {

// Warm-up period in microseconds

private final long warmupPeriodMicros;

/**

* The slope of the line from the stable interval (when permits == 0), to the cold interval

* (when permits == maxPermits)

*/

private double slope;

private double thresholdPermits;

// Cold factor, fixed at 3: when the bucket is full, consuming a permit takes coldFactor times the stable interval.

private double coldFactor;

SmoothWarmingUp(

SleepingStopwatch stopwatch, long warmupPeriod, TimeUnit timeUnit, double coldFactor) {

super(stopwatch);

this.warmupPeriodMicros = timeUnit.toMicros(warmupPeriod);

this.coldFactor = coldFactor;

}

@Override

void doSetRate(double permitsPerSecond, double stableIntervalMicros) {

double oldMaxPermits = maxPermits;

// By using the coldFactor, we can calculate the highest point of coldInterval, which is three times that of stableIntervalMicros. That is to say, the trapezoidal highest point in the graph is fixed.

double coldIntervalMicros = stableIntervalMicros * coldFactor;

// thresholdPermits is derived from warmupPeriodMicros = 2 * thresholdPermits * stableIntervalMicros. Because the top of the trapezoid is fixed, setting warmupPeriod controls thresholdPermits and, through it, maxPermits.

thresholdPermits = 0.5 * warmupPeriodMicros / stableIntervalMicros;

// maxPermits follows from the trapezoid area: warmupPeriodMicros = 0.5 * (stableIntervalMicros + coldIntervalMicros) * (maxPermits - thresholdPermits).

maxPermits =

thresholdPermits + 2.0 * warmupPeriodMicros / (stableIntervalMicros + coldIntervalMicros);

// Slope of the warm-up line

slope = (coldIntervalMicros - stableIntervalMicros) / (maxPermits - thresholdPermits);

if (oldMaxPermits == Double.POSITIVE_INFINITY) {

// if we don't special-case this, we would get storedPermits == NaN, below

storedPermits = 0.0;

} else {

storedPermits =

(oldMaxPermits == 0.0)

? maxPermits // The initial value here is maxPermits.

: storedPermits * maxPermits / oldMaxPermits;

}

}

@Override

long storedPermitsToWaitTime(double storedPermits, double permitsToTake) {

// Stored permits above thresholdPermits

double availablePermitsAboveThreshold = storedPermits - thresholdPermits;

long micros = 0;

// measuring the integral on the right part of the function (the climbing line)

if (availablePermitsAboveThreshold > 0.0) {

double permitsAboveThresholdToTake = min(availablePermitsAboveThreshold, permitsToTake);

// Area of the trapezoid: (top base + bottom base) * height / 2. The two bases are the intervals at both ends of the span, and the height is the number of permits taken.

double length =

permitsToTime(availablePermitsAboveThreshold)

+ permitsToTime(availablePermitsAboveThreshold - permitsAboveThresholdToTake);

micros = (long) (permitsAboveThresholdToTake * length / 2.0);

permitsToTake -= permitsAboveThresholdToTake;

}

// measuring the integral on the left part of the function (the horizontal line)

micros += (long) (stableIntervalMicros * permitsToTake);

return micros;

}

private double permitsToTime(double permits) {

return stableIntervalMicros + permits * slope;

}

// Makes refilling from 0 to maxPermits take exactly the warm-up period; see how the resync method uses coolDownIntervalMicros.

@Override

double coolDownIntervalMicros() {

return warmupPeriodMicros / maxPermits;

}

}

Summary of Guava RateLimiter

1. Guava's RateLimiter is built on the token bucket algorithm and provides two models: a plain one (SmoothBursty) and a warm-up one (SmoothWarmingUp). The cost of storing and consuming tokens is computed as the integral of a function.

2. The first request is never blocked, no matter how many tokens it asks for.

3. Waiting is implemented by putting the calling thread to sleep. When no timeout is given, the call waits until tokens are available.

4. Tokens are replenished lazily when a request arrives, so no separate thread is needed to generate them continuously.

5. Internal parameters such as maxBurstSeconds and coldFactor are not open to external configuration.
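To make the "function integral" in point 1 concrete, the sketch below copies the formulas from doSetRate and storedPermitsToWaitTime into a standalone helper so they can be evaluated with real numbers. The class WarmupMath and its constructor are hypothetical and exist only for this illustration.

```java
/** Hypothetical helper that reproduces the SmoothWarmingUp formulas for inspection. */
final class WarmupMath {
    final double stableIntervalMicros;
    final double thresholdPermits;
    final double maxPermits;
    final double slope;

    WarmupMath(double permitsPerSecond, long warmupPeriodMicros, double coldFactor) {
        stableIntervalMicros = 1_000_000.0 / permitsPerSecond;
        double coldIntervalMicros = stableIntervalMicros * coldFactor;
        thresholdPermits = 0.5 * warmupPeriodMicros / stableIntervalMicros;
        maxPermits = thresholdPermits
            + 2.0 * warmupPeriodMicros / (stableIntervalMicros + coldIntervalMicros);
        slope = (coldIntervalMicros - stableIntervalMicros) / (maxPermits - thresholdPermits);
    }

    /** Time cost, in microseconds, of taking permitsToTake out of storedPermits. */
    long storedPermitsToWaitTime(double storedPermits, double permitsToTake) {
        double above = storedPermits - thresholdPermits;
        long micros = 0;
        if (above > 0.0) {
            // Trapezoid under the climbing line.
            double take = Math.min(above, permitsToTake);
            double length = permitsToTime(above) + permitsToTime(above - take);
            micros = (long) (take * length / 2.0);
            permitsToTake -= take;
        }
        // Rectangle under the horizontal line.
        micros += (long) (stableIntervalMicros * permitsToTake);
        return micros;
    }

    private double permitsToTime(double permits) {
        return stableIntervalMicros + permits * slope;
    }
}
```

For 10 permits per second, a 3 second warm-up and a cold factor of 3, this gives thresholdPermits = 15 and maxPermits = 30. Taking the 15 permits above the threshold out of a full bucket costs about 3 seconds, which is the warm-up period, while permits below the threshold cost the stable 100 ms each.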

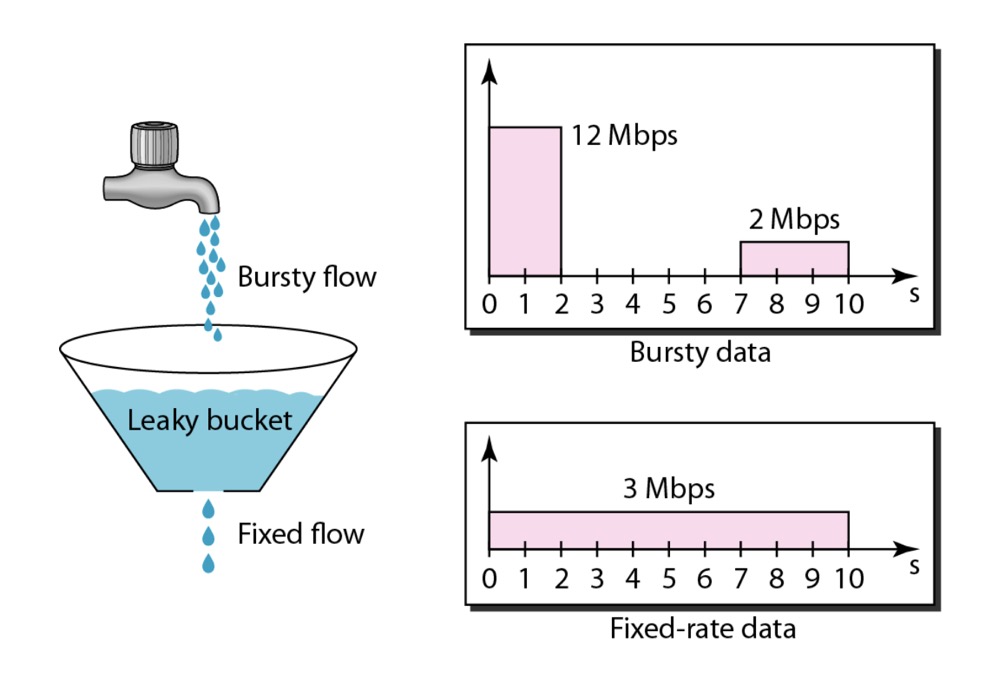

Leaky bucket

The principle is as follows:

We have a bucket of fixed capacity that drains at a constant rate. When requests flow in faster than they flow out, the bucket buffers them. Once the buffered amount exceeds the bucket's capacity, the bucket overflows and new requests are rejected.

The idea is essentially a blocking queue that is consumed at a constant rate.

A schematic implementation (for illustration only):

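import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

public class LeakyBucketLimiter {
    // The bucket: a bounded queue whose capacity is the bucket size
    private final BlockingQueue<String> leakyBucket;
    // Leak rate, in requests per second
    private final long rate;

    public LeakyBucketLimiter(int capacity, long rate) {
        if (rate <= 0) {
            throw new IllegalArgumentException("rate must be positive");
        }
        this.rate = rate;
        this.leakyBucket = new ArrayBlockingQueue<String>(capacity);
        // Start the consumer that drains the bucket at a constant rate
        Thread consumer = new Thread(new Consumer(), "leaky-bucket-consumer");
        consumer.setDaemon(true);
        consumer.start();
    }

    // Returns false when the bucket is full, meaning the request is rejected
    public boolean offer() {
        return leakyBucket.offer("");
    }

    class Consumer implements Runnable {
        public void run() {
            try {
                while (true) {
                    // At least 1 ms, so that rates above 1000/s do not turn into a busy loop
                    Thread.sleep(Math.max(1, 1000 / rate));
                    leakyBucket.take();
                }
            } catch (InterruptedException e) {
                // Restore the interrupt flag and let the consumer exit
                Thread.currentThread().interrupt();
            }
        }
    }
}

The leaky bucket algorithm guarantees a constant outflow rate. The token bucket, by contrast, saves up tokens while idle and therefore lets a burst of requests through at a rate above the steady one.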
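The constant rate can also be obtained without a consumer thread. The following is a minimal sketch under assumptions of my own: every accepted request is given a release time at a fixed spacing, and a request is rejected when too many are already waiting. The class PacedLeakyBucket, the method tryEnqueue and the explicit nowMicros parameter are hypothetical names for this example.

```java
/** Sketch of a leaky bucket that schedules release times instead of running a consumer thread. */
final class PacedLeakyBucket {
    private final int capacity;         // how many requests may be held in the bucket
    private final long intervalMicros;  // fixed spacing between two releases
    private long nextReleaseMicros;     // release time of the next accepted request

    PacedLeakyBucket(int capacity, long ratePerSecond) {
        if (ratePerSecond <= 0 || ratePerSecond > 1_000_000) {
            throw new IllegalArgumentException("ratePerSecond must be in 1..1000000");
        }
        this.capacity = capacity;
        this.intervalMicros = 1_000_000 / ratePerSecond;
    }

    /**
     * Returns how long the request has to wait before it may proceed,
     * or -1 if the bucket is full and the request is rejected.
     */
    synchronized long tryEnqueue(long nowMicros) {
        long release = Math.max(nowMicros, nextReleaseMicros);
        // Number of requests ahead of this one that have not drained yet.
        long waiting = (release - nowMicros) / intervalMicros;
        if (waiting >= capacity) {
            return -1;
        }
        nextReleaseMicros = release + intervalMicros;
        return release - nowMicros;
    }
}
```

Unlike the token bucket, idle time earns no credit here: after a long pause the first request passes at once, but the second one still waits a full interval.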

Final thoughts

- Current limiting inevitably costs some performance. How can that be avoided?

One idea is to monitor the state of the system (CPU, memory, I/O and so on) and switch the limiter on or off based on that information.
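A minimal sketch of that idea follows. Everything in it is an assumption made for illustration: the class LoadAwareGate, the single load signal and the threshold are hypothetical, and a real system would probably combine several signals.

```java
import java.util.function.BooleanSupplier;
import java.util.function.DoubleSupplier;

/** Sketch: consult the limiter only while a load signal is above a threshold. */
final class LoadAwareGate {
    private final DoubleSupplier loadSignal; // hypothetical source, e.g. CPU load in the range 0..1
    private final double threshold;          // load at which limiting is switched on
    private final BooleanSupplier limiter;   // the real limiter's non-blocking check

    LoadAwareGate(DoubleSupplier loadSignal, double threshold, BooleanSupplier limiter) {
        this.loadSignal = loadSignal;
        this.threshold = threshold;
        this.limiter = limiter;
    }

    /** Returns true if the request may proceed. */
    boolean tryPass() {
        if (loadSignal.getAsDouble() < threshold) {
            return true; // system is healthy: skip the limiter and its overhead
        }
        return limiter.getAsBoolean();
    }
}
```

One possible signal is java.lang.management.OperatingSystemMXBean.getSystemLoadAverage(). It returns a negative value when the load average is not available, which this sketch would treat as "healthy".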

- Does the actual scenario call for single-machine or distributed current limiting?

Distributed current limiting needs a shared storage carrier such as Redis or ZooKeeper. The failure of that carrier also has to be considered: it must not affect normal business functions.
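One way to keep a storage failure from reaching the business path is to fall back to a local decision. The sketch below assumes that the remote check signals failure by throwing a RuntimeException. FailOpenLimiter and both suppliers are hypothetical stand-ins, not part of any Redis or ZooKeeper client.

```java
import java.util.function.BooleanSupplier;

/** Sketch: use the shared store when it works, a local limiter when it does not. */
final class FailOpenLimiter {
    private final BooleanSupplier remoteCheck;   // hypothetical store-backed check; may throw
    private final BooleanSupplier localFallback; // hypothetical single-machine limiter

    FailOpenLimiter(BooleanSupplier remoteCheck, BooleanSupplier localFallback) {
        this.remoteCheck = remoteCheck;
        this.localFallback = localFallback;
    }

    /** Returns true if the request may proceed. */
    boolean tryAcquire() {
        try {
            return remoteCheck.getAsBoolean();
        } catch (RuntimeException e) {
            // The store is unavailable: decide locally instead of failing the request.
            return localFallback.getAsBoolean();
        }
    }
}
```

Passing `() -> true` as the fallback lets all traffic through during an outage, while a single-machine limiter as the fallback keeps some protection in place.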

- Further study

This article does not cover everything about current limiting. It only discusses a few conventional algorithms, which are the tip of the iceberg, and there is much more to explore.

The open source projects Sentinel and Bucket4j are also worth studying as practical current limiting schemes.
