Reading guide

As an open source distributed storage system, Ceph scales easily to petabytes of capacity and beyond while keeping good performance. Ceph provides three storage interfaces: object storage, block storage, and a file system. If you don't want to spend time installing Ceph packages, you can deploy a Ceph cluster with the ceph/daemon Docker image. One advantage of deploying Ceph in containers is that upgrades become much less of a concern. This article walks you through quickly deploying a Ceph cluster on a single node.

The Linux and related software versions used in this tutorial are as follows:

CentOS Linux release 7.8.2003

Docker 20.10.10

Ceph Nautilus (latest-nautilus image), 14.2.22

Machine initialization

Before deploying Ceph, we need to prepare the machine. This mainly involves the firewall, the host name, and a few other settings.

1. Turn off the firewall

systemctl stop firewalld && systemctl disable firewalld

2. Disable SELinux (the Linux security subsystem)

sed -i 's/enforcing/disabled/' /etc/selinux/config
setenforce 0

PS: In a real production deployment, it is better to open access through an IP whitelist than to turn off the firewall outright.
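As a rough sketch of the whitelist approach, the helper below only prints firewalld rich rules instead of applying them. The function name, the trusted subnet, and the port list are assumptions for illustration: 3300 and 6789 are the monitor ports and 6800-7300 is the default range used by the other Ceph daemons. The rgw and dashboard ports chosen later in this tutorial (20003 and 18080) would need rules of their own.

```shell
#!/bin/sh
# Hypothetical helper: print (not apply) firewalld rich rules that let a
# trusted subnet reach the Ceph ports, instead of disabling the firewall.
print_ceph_whitelist() {
    subnet=$1
    for port in 3300 6789 6800-7300; do
        printf '%s\n' "firewall-cmd --permanent --add-rich-rule='rule family=\"ipv4\" source address=\"$subnet\" port port=\"$port\" protocol=\"tcp\" accept'"
    done
    printf '%s\n' "firewall-cmd --reload"
}

# Review the printed commands before running them as root.
print_ceph_whitelist 192.168.128.0/20
```

Printing the rules first makes it easy to review them, and firewalld has to stay enabled for them to take effect.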

3. Set the host name of the virtual machine to ceph

hostnamectl set-hostname ceph

Then run the following on the node to add the host name to /etc/hosts:

cat >> /etc/hosts <<EOF
192.168.129.16 ceph
EOF

4. Set time synchronization

The chronyd service keeps the clocks of different machines synchronized. If time synchronization is not enabled, the monitors may report "clock skew detected on mon".

timedatectl set-timezone Asia/Shanghai
date
yum -y install chrony
systemctl enable chronyd
systemctl start chronyd.service
sed -i -e '/^server/s/^/#/' -e '1a server ntp.aliyun.com iburst' /etc/chrony.conf
systemctl restart chronyd.service
sleep 10
timedatectl
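To confirm that the clock is actually synchronized before starting the monitor, a small check along these lines may help. It is only a sketch: the function name is made up, and it relies on chronyc tracking printing a "Leap status : Normal" line once a time source has been selected.

```shell
#!/bin/sh
# Hypothetical helper: succeed only when chronyd reports a synchronized clock.
# "chronyc tracking" prints "Leap status     : Normal" once a source is selected.
clock_is_synced() {
    chronyc tracking 2>/dev/null | grep -q 'Leap status *: *Normal'
}

# Example: report the result before deploying the monitor.
if clock_is_synced; then
    echo "clock synchronized"
else
    echo "clock NOT synchronized; check 'chronyc sources -v'"
fi
```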

5. Other configuration: alias the ceph command inside the container to a local command for convenience. Other Ceph-related commands can be added in the same way:

echo 'alias ceph="docker exec mon ceph"' >> /etc/profile
source /etc/profile

6. Create the Ceph directories. Create them on the host and map them into the containers so that the Ceph configuration files can be viewed and managed directly. Create the four folders on node ceph as root:

mkdir -p /usr/local/ceph/{admin,etc,lib,logs}

This command creates the four directories in one go. Note that the names inside the braces are separated by commas and must not contain spaces. Of these:

- The admin folder is used to store startup scripts.

- ceph.conf and other configuration files are stored in the etc folder.

- The key files of each component are stored in the lib folder.

- The logs folder stores ceph's log files.

7. Grant the user inside the containers access to the folders

# The user id inside the container is 167, so ownership is handed to it here
chown -R 167:167 /usr/local/ceph/
chmod 777 -R /usr/local/ceph

After the above initialization is completed, let's start the specific deployment.

Deploy ceph cluster

Install the basic components of the Ceph cluster: mon, osd, mgr, rgw, and mds.

1. Create OSD disk

Create an OSD disk. The OSD service is the object storage daemon, responsible for storing objects on the local file system. It needs a dedicated disk for storage.

lsblk
fdisk /dev/vdb

Create three partitions: /dev/vdb1, /dev/vdb2 and /dev/vdb3. For the procedure, refer to: https://jingyan.baidu.com/article/cbf0e500a9731e2eab289371.html

Format and mount the partitions:

mkfs.xfs -f /dev/vdb1;mkfs.xfs -f /dev/vdb2;mkfs.xfs -f /dev/vdb3
mkdir -p /dev/osd1 ;mount /dev/vdb1 /dev/osd1
mkdir -p /dev/osd2 ;mount /dev/vdb2 /dev/osd2
mkdir -p /dev/osd3 ;mount /dev/vdb3 /dev/osd3

Result after creation:

[root@ceph ~]# lsblk
NAME            MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
vda             252:0    0   50G  0 disk
├─vda1          252:1    0    2M  0 part
├─vda2          252:2    0  256M  0 part /boot
└─vda3          252:3    0 49.8G  0 part
  └─centos-root 253:0    0 49.8G  0 lvm  /
vdb             252:16   0  300G  0 disk
├─vdb1          252:17   0  100G  0 part /dev/osd1
├─vdb2          252:18   0  100G  0 part /dev/osd2
└─vdb3          252:19   0  100G  0 part /dev/osd3

2. Install docker and obtain ceph image

Install dependent packages

yum install -y yum-utils device-mapper-persistent-data lvm2

#Add yum source for docker package

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Install the latest version of Docker CE directly

yum install docker-ce -y

systemctl restart docker && systemctl enable docker

#Configure docker to use Alibaba cloud acceleration

mkdir -p /etc/docker/
touch /etc/docker/daemon.json
> /etc/docker/daemon.json
cat <<EOF >> /etc/docker/daemon.json
{
"registry-mirrors":["https://q2hy3fzi.mirror.aliyuncs.com"]
}
EOF
systemctl daemon-reload && systemctl restart docker
docker info

Pull ceph image

Here we use ceph/daemon, the most popular Ceph image on Docker Hub, and pull the Nautilus version of Ceph (tag latest-nautilus).

docker pull ceph/daemon:latest-nautilus

3. Write a startup script to start deployment. (all scripts are placed in the admin folder)

(1) Create and start the mon component

vi /usr/local/ceph/admin/start_mon.sh

#!/bin/bash
docker run -d --net=host \
--name=mon \
-v /etc/localtime:/etc/localtime \
-v /usr/local/ceph/etc:/etc/ceph \
-v /usr/local/ceph/lib:/var/lib/ceph \
-v /usr/local/ceph/logs:/var/log/ceph \
-e MON_IP=192.168.129.16 \
-e CEPH_PUBLIC_NETWORK=192.168.128.0/20 \
ceph/daemon:latest-nautilus mon

This script starts the monitor. The monitor maintains the global state of the whole Ceph cluster. A cluster must have at least one monitor, preferably an odd number of them, so that the remaining monitors can still agree among themselves when one goes down. Description of the startup script:

- The --name parameter specifies the container name, which is set to mon here.

- -v xxx:xxx maps a host directory into the container; the etc, lib and logs directories are mapped this way.

- MON_IP is the IP address of the Docker host (query it with ifconfig and take the inet address of eth0). With three servers, MON_IP would need all three IPs; this tutorial uses a single node, so one IP is enough. If the IPs span network segments, CEPH_PUBLIC_NETWORK must list all of the segments.

- CEPH_PUBLIC_NETWORK lists all network segments on which the Docker hosts run.

- The image tag must name the Nautilus version (latest-nautilus); otherwise the latest version of Ceph is used by default. The trailing mon argument selects the daemon to start and matches the container name defined above.
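A mismatch between MON_IP and CEPH_PUBLIC_NETWORK is an easy mistake to make, so it can be worth checking that the address really falls inside the network before starting the container. The following is a minimal sketch in plain shell arithmetic. The function names are hypothetical, and it assumes IPv4 addresses written without leading zeros.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a single integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address on "." into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed when address $1 lies inside the CIDR network $2 (e.g. 10.0.0.0/8).
ip_in_cidr() {
    ip=$1
    net=${2%/*}
    bits=${2#*/}
    mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
    [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# The values used in start_mon.sh:
if ip_in_cidr 192.168.129.16 192.168.128.0/20; then
    echo "MON_IP is inside CEPH_PUBLIC_NETWORK"
else
    echo "MON_IP is outside CEPH_PUBLIC_NETWORK"
fi
```

A /20 prefix covers 192.168.128.0 through 192.168.143.255, which is why 192.168.129.16 is accepted here.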

Start MON and execute on node ceph

bash /usr/local/ceph/admin/start_mon.sh

After startup, check the result with docker ps -a|grep mon. Once the monitor has started successfully and generated its configuration data, append the following to the main Ceph configuration file:

cat >>/usr/local/ceph/etc/ceph.conf <<EOF
# Tolerate more clock errors
mon clock drift allowed = 2
mon clock drift warn backoff = 30
# Allow deletion of pool
mon_allow_pool_delete = true
mon_warn_on_pool_no_redundancy = false
osd_pool_default_size = 1
osd_pool_default_min_size = 1
osd crush chooseleaf type = 0
[mgr]
# Open WEB dashboard
mgr modules = dashboard
[client.rgw.ceph1]
# Set the web access port of rgw gateway
rgw_frontends = "civetweb port=20003"
EOF

After startup, check the cluster status with ceph -s. At this point the status should be HEALTH_OK.

If it shows health: HEALTH_WARN with the message "mon is allowing insecure global_id reclaim",

you can execute the following command:

ceph config set mon auth_allow_insecure_global_id_reclaim false
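Since the monitor needs a moment to come up, a small polling loop can replace repeated manual ceph -s calls. This is only a sketch: the function name and the default retry values are assumptions, and it uses ceph health, which prints the one-line health status.

```shell
#!/bin/sh
# Hypothetical helper: poll "ceph health" in the mon container until the
# cluster reports HEALTH_OK or the attempts run out.
#   $1 = number of attempts (default 30), $2 = seconds between attempts (default 2)
wait_for_health_ok() {
    attempts=${1:-30}
    delay=${2:-2}
    status=
    while [ "$attempts" -gt 0 ]; do
        status=$(docker exec mon ceph health 2>/dev/null)
        case $status in
            HEALTH_OK*) echo "$status"; return 0 ;;
        esac
        attempts=$((attempts - 1))
        sleep "$delay"
    done
    echo "cluster not healthy: ${status:-no response}" >&2
    return 1
}

# Usage (on the node running the mon container):
#   wait_for_health_ok 30 2
```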

(2) Create and start osd components

vi /usr/local/ceph/admin/start_osd.sh

#!/bin/bash
docker run -d \
--name=osd1 \
--net=host \
--restart=always \
--privileged=true \
--pid=host \
-v /etc/localtime:/etc/localtime \
-v /usr/local/ceph/etc:/etc/ceph \
-v /usr/local/ceph/lib:/var/lib/ceph \
-v /usr/local/ceph/logs:/var/log/ceph \
-v /dev/osd1:/var/lib/ceph/osd \
ceph/daemon:latest-nautilus osd_directory

docker run -d \
--name=osd2 \
--net=host \
--restart=always \
--privileged=true \
--pid=host \
-v /etc/localtime:/etc/localtime \
-v /usr/local/ceph/etc:/etc/ceph \
-v /usr/local/ceph/lib:/var/lib/ceph \
-v /usr/local/ceph/logs:/var/log/ceph \
-v /dev/osd2:/var/lib/ceph/osd \
ceph/daemon:latest-nautilus osd_directory

docker run -d \
--name=osd3 \
--net=host \
--restart=always \
--privileged=true \
--pid=host \
-v /etc/localtime:/etc/localtime \
-v /usr/local/ceph/etc:/etc/ceph \
-v /usr/local/ceph/lib:/var/lib/ceph \
-v /usr/local/ceph/logs:/var/log/ceph \
-v /dev/osd3:/var/lib/ceph/osd \
ceph/daemon:latest-nautilus osd_directory

This script starts the OSD components. An OSD (Object Storage Device) is the RADOS component that stores the data. Script description:

- --name specifies the name of the OSD container.

- --net=host makes the container use the network of the host we configured earlier.

- --restart=always lets Docker restart the OSD container automatically when it goes down.

- --privileged=true runs the OSD container in privileged mode. Here we use the osd_directory mode of the image.

PS: the number of OSDs should be maintained at an odd number
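The three docker run commands in start_osd.sh differ only in the OSD index, so the script could also be written as one function called in a loop. The following sketch assumes the same paths and options as above; the function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: start one directory-backed OSD container for index $1,
# using the same options as start_osd.sh and the /dev/osdN mount point.
start_osd() {
    index=$1
    docker run -d \
        --name="osd${index}" \
        --net=host \
        --restart=always \
        --privileged=true \
        --pid=host \
        -v /etc/localtime:/etc/localtime \
        -v /usr/local/ceph/etc:/etc/ceph \
        -v /usr/local/ceph/lib:/var/lib/ceph \
        -v /usr/local/ceph/logs:/var/log/ceph \
        -v "/dev/osd${index}:/var/lib/ceph/osd" \
        ceph/daemon:latest-nautilus osd_directory
}

# Usage: start the three OSDs in one loop.
#   for i in 1 2 3; do start_osd "$i"; done
```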

Start the OSDs on node ceph. Before executing the start_osd.sh script, first generate the OSD key information on the mon node; otherwise starting the OSDs directly fails with an error. The command is as follows:

docker exec -it mon ceph auth get client.bootstrap-osd -o /var/lib/ceph/bootstrap-osd/ceph.keyring

Then execute the following command on the node:

bash /usr/local/ceph/admin/start_osd.sh

After all OSDs are started, wait a moment and execute ceph -s to check the status. You should see three more OSDs

(3) Create and start mgr components

vi /usr/local/ceph/admin/start_mgr.sh

#!/bin/bash
docker run -d --net=host \
--name=mgr \
-v /etc/localtime:/etc/localtime \
-v /usr/local/ceph/etc:/etc/ceph \
-v /usr/local/ceph/lib:/var/lib/ceph \
-v /usr/local/ceph/logs:/var/log/ceph \
ceph/daemon:latest-nautilus mgr

This script starts the mgr component. The mgr takes over and extends some of the monitor's functions and provides a graphical management interface, so that we can manage the Ceph storage system more easily. Its startup script is relatively simple and is not explained again here.

Start MGR and directly execute the following commands on node ceph:

bash /usr/local/ceph/admin/start_mgr.sh

After the mgr is started, wait a moment and execute ceph -s to check the status. You should see one more mgr

(4) Create and start rgw components

vi /usr/local/ceph/admin/start_rgw.sh

#!/bin/bash
docker run \
-d --net=host \
--name=rgw \
-v /etc/localtime:/etc/localtime \
-v /usr/local/ceph/etc:/etc/ceph \
-v /usr/local/ceph/lib:/var/lib/ceph \
-v /usr/local/ceph/logs:/var/log/ceph \
ceph/daemon:latest-nautilus rgw

The script is mainly used to start the rgw component. As an object storage gateway system, rgw (Rados GateWay) plays the role of RADOS cluster client to provide data storage for object storage applications, and plays the role of HTTP server to receive and analyze data transmitted from the Internet.

To start rgw, we first need to generate the key information of rgw at the mon node. The command is as follows:

docker exec mon ceph auth get client.bootstrap-rgw -o /var/lib/ceph/bootstrap-rgw/ceph.keyring

Then execute the following command on node ceph:

bash /usr/local/ceph/admin/start_rgw.sh

After rgw starts, ceph -s may show a Degraded state. In that case, manually set size and min_size of the rgw pools to 1:

ceph osd pool set .rgw.root min_size 1
ceph osd pool set .rgw.root size 1
ceph osd pool set default.rgw.control min_size 1
ceph osd pool set default.rgw.control size 1
ceph osd pool set default.rgw.meta min_size 1
ceph osd pool set default.rgw.meta size 1
ceph osd pool set default.rgw.log min_size 1
ceph osd pool set default.rgw.log size 1
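The same two settings are applied to every rgw pool, so they can be wrapped in a loop. The sketch below assumes the mon container name used in this tutorial. The function name is made up, and the pool names must be passed explicitly.

```shell
#!/bin/sh
# Hypothetical helper: set min_size and size to 1 on every pool passed in.
# min_size is lowered first, matching the order of the commands above.
set_single_replica() {
    for pool in "$@"; do
        docker exec mon ceph osd pool set "$pool" min_size 1 || return 1
        docker exec mon ceph osd pool set "$pool" size 1 || return 1
    done
}

# Usage:
#   set_single_replica .rgw.root default.rgw.control default.rgw.meta default.rgw.log
```

A single replica is only reasonable on a test node like this one, because losing the one OSD that holds a placement group loses its data.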

If ceph -s reports the message "pools have no replicas configured", it can be ignored for now, because this version raises a warning whenever a pool's size is set to 1.

A pool size of 1 triggers HEALTH_WARN: Ceph issues a health warning if a RADOS pool's size is set to 1 or if the pool is configured with no redundancy, and stops issuing it once the pool size is set to the minimum recommended value. Refer to: https://documentation.suse.com/ses/6/html/ses-all/bk02apa.html

The following command suppresses the warning (if it still appears, ignore it for now):

ceph config set global mon_warn_on_pool_no_redundancy false

(5) Create and start mds components

vi /usr/local/ceph/admin/start_mds.sh

#!/bin/bash
# CEPHFS_CREATE=0 (the digit zero): the file system is not created automatically; it is created by hand in step 4
docker run -d --net=host \
--name=mds \
--privileged=true \
-v /etc/localtime:/etc/localtime \
-v /usr/local/ceph/etc:/etc/ceph \
-v /usr/local/ceph/lib:/var/lib/ceph \
-v /usr/local/ceph/logs:/var/log/ceph \
-e CEPHFS_CREATE=0 \
-e CEPHFS_METADATA_POOL_PG=64 \
-e CEPHFS_DATA_POOL_PG=64 \
ceph/daemon:latest-nautilus mds

Start MDS

bash /usr/local/ceph/admin/start_mds.sh

At this point, all components are started.

4. Create FS file system

This can be executed on the ceph node.

Create the data pool and the metadata pool:

ceph osd pool create cephfs_data 64 64
ceph osd pool create cephfs_metadata 64 64

Note: if the mon_max_pg_per_osd limit prevents setting the PG count to 128, you can lower it to 64.
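To get a feel for why a smaller PG count helps, the number of PG replicas that land on each OSD can be estimated as the total pg_num over all pools, times the pool size, divided by the number of OSDs. The helper below is an illustrative sketch with a made-up name. The limit configured on the cluster can be read with ceph config get mon mon_max_pg_per_osd.

```shell
#!/bin/sh
# Hypothetical helper: estimate PG replicas per OSD, rounded up.
#   $1 = total pg_num summed over all pools
#   $2 = replica count (pool size)
#   $3 = number of OSDs
pgs_per_osd() {
    echo $(( ($1 * $2 + $3 - 1) / $3 ))
}

# For this tutorial's final state (256 PGs, size 1, 3 OSDs) this prints 86.
pgs_per_osd 256 1 3
```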

Create CephFS, which associates the data pool and the metadata pool created above:

ceph fs new cephfs cephfs_metadata cephfs_data

View the FS information:

ceph fs ls
name: cephfs, metadata pool: cephfs_metadata, data pools: [cephfs_data ]

or

ceph fs status cephfs

Then set size and min_size of the CephFS pools to 1:

ceph osd pool set cephfs_data min_size 1
ceph osd pool set cephfs_data size 1
ceph osd pool set cephfs_metadata min_size 1
ceph osd pool set cephfs_metadata size 1

After startup, check the status of the cluster with ceph -s:

[root@ceph ~]# ceph -s
  cluster:
    id:     7a3e7b33-2b71-45ee-bb62-3320fb0e9d51
    health: HEALTH_OK

  services:
    mon: 1 daemons, quorum ceph (age 21m)
    mgr: ceph(active, since 20m)
    mds: cephfs:1 {0=ceph=up:active}
    osd: 3 osds: 3 up (since 56m), 3 in (since 56m)
    rgw: 1 daemon active (ceph)

  task status:

  data:
    pools:   6 pools, 256 pgs
    objects: 209 objects, 3.4 KiB
    usage:   3.0 GiB used, 297 GiB / 300 GiB avail
    pgs:     256 active+clean

[root@ceph ~]#

5. Verify cephfs availability

Install ceph-fuse on another node:

yum -y install epel-release

rpm -Uhv http://download.ceph.com/rpm-jewel/el7/noarch/ceph-release-1-1.el7.noarch.rpm

yum install -y ceph-fuse

mkdir /etc/ceph

Get the admin key from the Ceph cluster:

ceph auth get-key client.admin

Configure the admin key on the client node:

vi /etc/ceph/ceph.client.admin.keyring

[client.admin]
key = xxxxxxxxxxxxxxx
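Instead of editing the keyring by hand, the file can also be generated from the key string. This is a minimal sketch with a hypothetical function name. It writes the same two-line format and restricts the file to its owner, since the keyring grants admin access to the cluster.

```shell
#!/bin/sh
# Hypothetical helper: write a client.admin keyring in the format shown above.
#   $1 = key string (the output of "ceph auth get-key client.admin")
#   $2 = target file, default /etc/ceph/ceph.client.admin.keyring
write_admin_keyring() {
    key=$1
    target=${2:-/etc/ceph/ceph.client.admin.keyring}
    # The subshell keeps the restrictive umask from leaking into the caller.
    ( umask 077 && printf '[client.admin]\n\tkey = %s\n' "$key" > "$target" )
}

# Usage on the client node, with the key copied from the cluster:
#   write_admin_keyring 'xxxxxxxxxxxxxxx'
```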

After successful installation, use the following command to mount:

ceph-fuse -m 192.168.129.16:6789 -r / /mnt/ -o nonempty

Check the mount status with df -h:

ceph-fuse 300G 3.1G 297G 2% /mnt

The mount succeeded.

6. Install the Dashboard management background of ceph

It can be executed at the ceph node.

Turn on the dashboard function

docker exec mgr ceph mgr module enable dashboard

Create certificate

docker exec mgr ceph dashboard create-self-signed-cert

Create login user and password:

docker exec mgr ceph dashboard set-login-credentials admin admin

This sets the user name to admin and the password to admin. Note that this command reported an error for me:

dashboard set-login-credentials <username> : Set the login credentials. Password read from -i <file>

So I manually created /tmp/ceph-password.txt inside the mgr container, wrote the password admin into it, and then the following commands succeeded:

docker exec -it mgr bash
vi /tmp/ceph-password.txt
admin
exit
docker exec mgr ceph dashboard ac-user-create admin -i /tmp/ceph-password.txt administrator
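The password-file workaround can also be done without opening an interactive shell in the container. The following sketch assumes the mgr container name and the /tmp/ceph-password.txt path used above; the function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: create a dashboard administrator non-interactively.
# The password is written to a file inside the mgr container and handed to
# "ceph dashboard ac-user-create" with -i, as in the commands above.
create_dashboard_admin() {
    user=$1
    password=$2
    docker exec mgr sh -c 'printf "%s" "$1" > /tmp/ceph-password.txt' sh "$password" || return 1
    docker exec mgr ceph dashboard ac-user-create "$user" -i /tmp/ceph-password.txt administrator
}

# Usage:
#   create_dashboard_admin admin admin
```

Passing the password as an argument leaves it visible in the process list and shell history, which is acceptable on a test node but worth avoiding elsewhere.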

Configure external access ports

docker exec mgr ceph config set mgr mgr/dashboard/server_port 18080

Configure external access IP

docker exec mgr ceph config set mgr mgr/dashboard/server_addr 192.168.129.16

Turn off HTTPS (it can be turned off if there is no certificate or intranet access)

docker exec mgr ceph config set mgr mgr/dashboard/ssl false

Restart the Mgr DashBoard service

docker restart mgr

View the Mgr Dashboard service information

[root@ceph admin]# docker exec mgr ceph mgr services
{
"dashboard": "http://ceph:18080/"
}

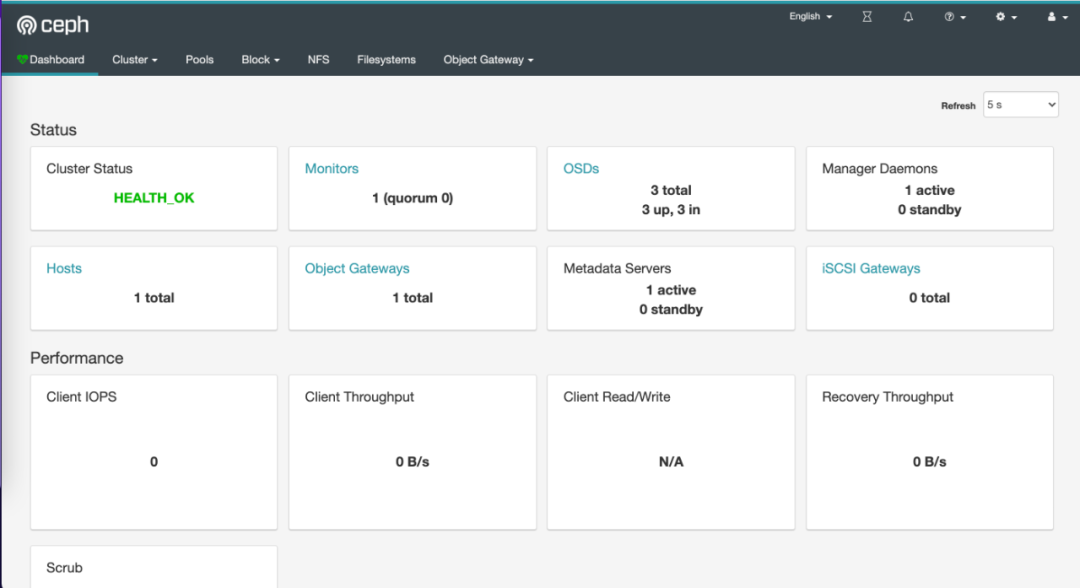

7. Access the management console interface:

Access in browser:

http://192.168.129.16:18080/#/dashboard

User name and password: admin/admin
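Before opening the browser, the dashboard endpoint can be probed from the command line. This is only a sketch with a made-up function name. It treats any 2xx or 3xx HTTP status as "reachable", because the exact status returned by the dashboard root page is not something this tutorial verifies.

```shell
#!/bin/sh
# Hypothetical helper: succeed when the URL in $1 answers with a 2xx or 3xx status.
dashboard_is_up() {
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$1")
    case $code in
        2??|3??) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage:
#   dashboard_is_up http://192.168.129.16:18080/ && echo "dashboard reachable"
```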

Reference link

https://blog.csdn.net/hxx688/article/details/103440967

https://zhuanlan.zhihu.com/p/325630308