Operating environment

CentOS 7

DRBDADM_API_VERSION=2

DRBD_KERNEL_VERSION=9.0.14

DRBDADM_VERSION_CODE=0x090301

DRBDADM_VERSION=9.3.1

Corosync Cluster Engine, version '2.4.3'

Pacemaker 1.1.18-11.el7_5.3

crm 3.0.0

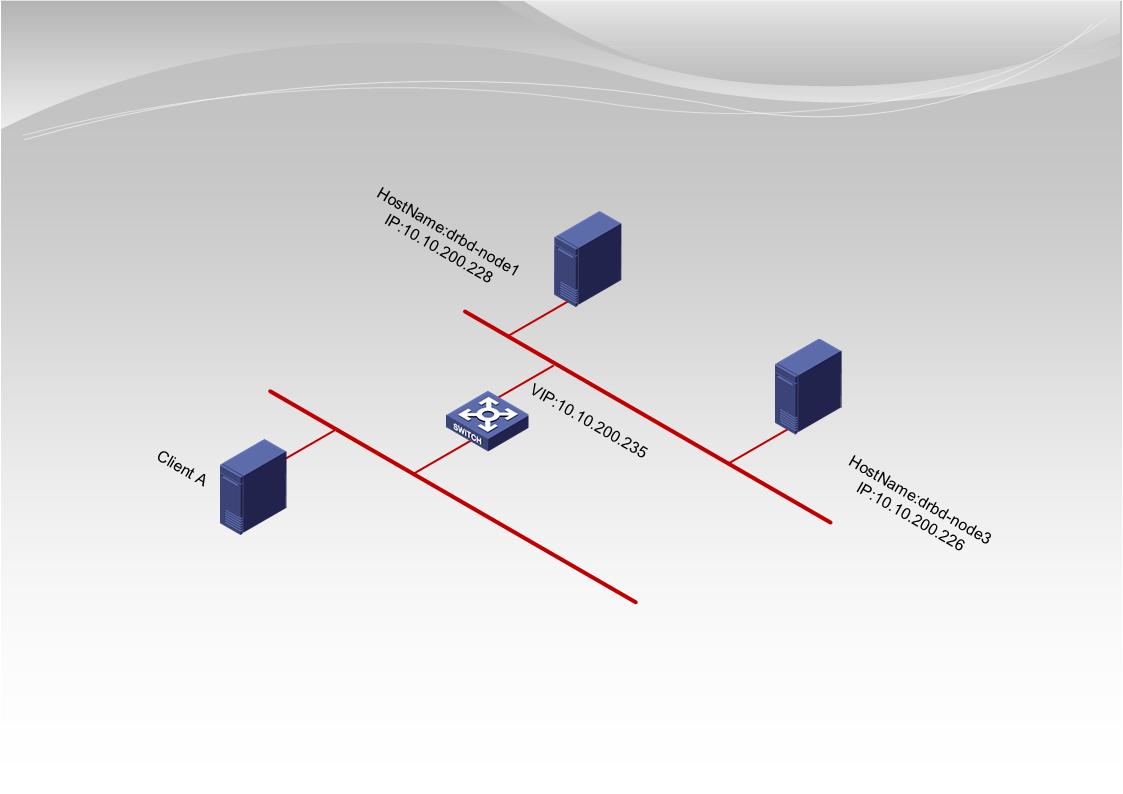

Network Topology

Installation and configuration steps

Install DRBD/Corosync/Pacemaker/Crmsh

For the installation and configuration of DRBD/Corosync/Pacemaker/crmsh, refer to "Configuring DRBD to Build Active/Standby iSCSI Cluster under Centos7". After installing the components, check the cluster status:

[root@drbd-node1 ~]# crm_mon -rf -n1
Stack: corosync
Current DC: drbd-node3 (version 1.1.18-11.el7_5.3-2b07d5c5a9) - partition with quorum
Last updated: Sat Jul 7 18:06:54 2018
Last change: Sat Jul 7 17:54:52 2018 by root via cibadmin on drbd-node1
2 nodes configured
0 resources configured
Node drbd-node1: online
Node drbd-node3: online
No inactive resources
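This check is repeated after each configuration step below. It can help to pull the resource count and the online nodes out of the crm_mon text with a script. The following is a minimal sketch, assuming bash with GNU grep and awk. `resource_count` and `online_nodes` are hypothetical helper names, and the patterns assume the crm_mon 1.1.x text format shown above.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking crm_mon text output from a script.
# They only parse text on stdin; they do not query the cluster themselves.

# Print N from the "N resources configured" line (also matches "1 resource").
resource_count() {
  grep -oE '[0-9]+ resources? configured' | awk '{ print $1 }'
}

# Print every node reported as "Node <name>: online", one per line.
online_nodes() {
  grep -oE 'Node [^ ]+: online' | awk '{ sub(/:$/, "", $2); print $2 }'
}
```

For example, `crm_mon -rf -n1 | resource_count` would print 0 at this point. The count grows as the following steps add resources.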

Next, prepare the file system: on the primary node, format the DRBD device and create the mount-point directory.

[root@drbd-node1 ~]# mkfs.xfs /dev/drbd0 -f
meta-data=/dev/drbd0             isize=512    agcount=4, agsize=32766998 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=0, sparse=0
data     =                       bsize=4096   blocks=131067991, imaxpct=25
         =                       sunit=0      swidth=0 blks
naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
log      =internal log           bsize=4096   blocks=63998, version=2
         =                       sectsz=512   sunit=0 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
[root@drbd-node1 ~]# mkdir /mnt/nfs
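The size of the new file system follows from the `data` line of the report:

blocks × bsize = 131067991 × 4096 bytes = 536854491136 bytes

That is about 499.98 GiB, roughly consistent with the 500G that df later shows for the NFS mount on the client. The sketch below does the same arithmetic from the report text. It assumes bash and awk; `xfs_data_bytes` and `bytes_to_gib` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: derive the XFS data-section size from a mkfs.xfs report.

# Read the report on stdin and print bsize * blocks (in bytes),
# both taken from the "data     = ..." line.
xfs_data_bytes() {
  awk '
    $1 == "data" {
      for (i = 2; i <= NF; i++) {
        if ($i ~ /^bsize=/)  { split($i, kv, "="); bsize = kv[2] }
        if ($i ~ /^blocks=/) { split($i, kv, "="); sub(/,$/, "", kv[2]); blocks = kv[2] }
      }
    }
    END { printf "%.0f\n", bsize * blocks }
  '
}

# Convert a byte count on stdin to GiB (1 GiB = 1073741824 bytes), 2 decimals.
bytes_to_gib() {
  awk '{ printf "%.2f\n", $1 / 1073741824 }'
}
```

Once the file system is mounted, `xfs_info /mnt/nfs | xfs_data_bytes | bytes_to_gib` should give the same figure. xfs_info prints the geometry in the same layout as mkfs.xfs.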

The other node does not need to be formatted, because DRBD replicates the block device (and therefore the file system) to it. The mount-point directory must still be created there:

[root@drbd-node3 ~]# mkdir /mnt/nfs

Configuring DRBD resources

Note: drbd_resource must be set to the resource name defined in the DRBD configuration (scsivol here).

[root@drbd-node1 ~]# crm configure
crm(live)configure# primitive p_drbd_r0 ocf:linbit:drbd \
> params drbd_resource=scsivol \
> op start interval=0s timeout=240s \
> op stop interval=0s timeout=100s \
> op monitor interval=31s timeout=20s role=Slave \
> op monitor interval=29s timeout=20s role=Master
crm(live)configure# ms ms_drbd_r0 p_drbd_r0 meta master-max=1 \
> master-node-max=1 clone-max=2 clone-node-max=1 notify=true
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# exit
bye
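The two monitor operations use different intervals on purpose: 31s for the Slave role and 29s for the Master role. Pacemaker identifies a recurring operation by its name and interval, so two monitors on the same resource must not share an interval. Below is a minimal bash sketch that flags duplicate monitor intervals in a primitive definition. The function name is hypothetical. It compares interval strings literally, so `30` and `30s` are not treated as equal.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read a crm primitive definition on stdin and report
# "op monitor" intervals that occur more than once. Intervals are compared as
# literal strings, so "30" and "30s" are NOT recognised as the same interval.
check_monitor_intervals() {
  local dups
  dups=$(grep -oE 'op monitor interval=[^ ]+' | sort | uniq -d)
  if [ -n "$dups" ]; then
    echo "duplicate monitor interval(s): $dups" >&2
    return 1
  fi
  return 0
}
```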

After this configuration, crm_mon shows that Pacemaker manages two resources: one p_drbd_r0 instance per node in the ms_drbd_r0 master/slave set. In the previous section, the same command reported 0 resources.

[root@drbd-node1 ~]# crm_mon -rf -n1
Stack: corosync
Current DC: drbd-node3 (version 1.1.18-11.el7_5.3-2b07d5c5a9) - partition with quorum
Last updated: Sat Jul 7 18:22:58 2018
Last change: Sat Jul 7 18:19:53 2018 by root via cibadmin on drbd-node1
2 nodes configured
2 resources configured
Node drbd-node1: online
    p_drbd_r0 (ocf::linbit:drbd): Master
Node drbd-node3: online
    p_drbd_r0 (ocf::linbit:drbd): Slave
No inactive resources
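With -n (group by node), the role of each p_drbd_r0 instance is listed under its node. A small awk filter over this output can tell a script which node currently holds the DRBD Master role. This is a sketch that assumes the layout shown above; `master_node` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "crm_mon -n" (group-by-node) output on stdin and
# print the node under which resource $1 is reported in the Master role.
master_node() {
  awk -v rsc="$1" '
    $1 == "Node" { node = $2; sub(/:$/, "", node) }   # "Node drbd-node1: online"
    $1 == rsc && /: Master/ { print node }            # "p_drbd_r0 (...): Master"
  '
}
```

For example, `crm_mon -rf -n1 | master_node p_drbd_r0` would print drbd-node1 for the status above.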

Configuring the file system

Note that the device, directory and fstype parameters must match the DRBD device, mount point and file system type created above.

[root@drbd-node1 ~]# crm configure
crm(live)configure# primitive p_fs_drbd0 ocf:heartbeat:Filesystem \
> params device=/dev/drbd0 directory=/mnt/nfs fstype=xfs \
> options=noatime,nodiratime \
> op start interval="0" timeout="60s" \
> op stop interval="0" timeout="60s" \
> op monitor interval="20" timeout="40s"
crm(live)configure# order o_drbd_r0-before-fs_drbd0 \
> inf: ms_drbd_r0:promote p_fs_drbd0:start
crm(live)configure# colocation c_fs_drbd0-with_drbd-r0 \
> inf: p_fs_drbd0 ms_drbd_r0:Master
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# exit
bye

crm_mon now also shows the new file system resource, running on the DRBD Master node. The number of resources managed by Pacemaker rises to 3.

[root@drbd-node1 ~]# crm_mon -rf -n1
Stack: corosync
Current DC: drbd-node3 (version 1.1.18-11.el7_5.3-2b07d5c5a9) - partition with quorum
Last updated: Sat Jul 7 18:29:24 2018
Last change: Sat Jul 7 18:28:06 2018 by root via cibadmin on drbd-node1
2 nodes configured
3 resources configured
Node drbd-node1: online
    p_drbd_r0 (ocf::linbit:drbd): Master
    p_fs_drbd0 (ocf::heartbeat:Filesystem): Started
Node drbd-node3: online
    p_drbd_r0 (ocf::linbit:drbd): Slave
No inactive resources

Configuring NFS services

Install NFS on both nodes

[root@drbd-node1 ~]# yum -y install nfs-utils rpcbind
[root@drbd-node1 ~]# systemctl enable rpcbind
Created symlink from /etc/systemd/system/multi-user.target.wants/rpcbind.service to /usr/lib/systemd/system/rpcbind.service.
[root@drbd-node1 ~]# systemctl start rpcbind

[root@drbd-node3 ~]# yum -y install nfs-utils rpcbind
[root@drbd-node3 ~]# systemctl enable rpcbind
Created symlink from /etc/systemd/system/multi-user.target.wants/rpcbind.service to /usr/lib/systemd/system/rpcbind.service.
[root@drbd-node3 ~]# systemctl start rpcbind

Configuring the NFS server resource

[root@drbd-node1 ~]# crm configure
crm(live)configure# primitive p_nfsserver ocf:heartbeat:nfsserver \
> params nfs_shared_infodir=/mnt/nfs/nfs_shared_infodir nfs_ip=10.10.200.235 \
> op start interval=0s timeout=40s \
> op stop interval=0s timeout=20s \
> op monitor interval=10s timeout=20s
crm(live)configure# order o_fs_drbd0-before-nfsserver \
> inf: p_fs_drbd0 p_nfsserver
crm(live)configure# colocation c_nfsserver-with-fs_drbd0 \
> inf: p_nfsserver p_fs_drbd0
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# exit
bye
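The two parameters have distinct roles:

- nfs_shared_infodir is where the nfsserver agent keeps NFS server state (state that would otherwise sit under /var/lib/nfs).
- nfs_ip is the floating address used to reach the service. Here it is the same 10.10.200.235 that later becomes the virtual IP.

The state has to follow the service to the other node. The directory must therefore lie on the DRBD-backed mount /mnt/nfs, and the order and colocation constraints with p_fs_drbd0 ensure that file system is mounted first. A quick check is sketched below in bash with a hypothetical `is_under` helper. It is purely lexical and does not resolve symlinks or `..`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if path $1 lies inside directory $2.
# Purely lexical: symlinks and ".." components are not resolved.
is_under() {
  local path=${1%/} parent=${2%/}
  case "$path/" in
    "$parent"/*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `is_under /mnt/nfs/nfs_shared_infodir /mnt/nfs` succeeds, whereas `is_under /var/lib/nfs /mnt/nfs` fails.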

crm_mon now also lists the NFS server resource:

[root@drbd-node1 ~]# crm_mon -rf -n1
Stack: corosync
Current DC: drbd-node3 (version 1.1.18-11.el7_5.3-2b07d5c5a9) - partition with quorum
Last updated: Sat Jul 7 18:37:51 2018
Last change: Sat Jul 7 18:37:10 2018 by root via cibadmin on drbd-node1
2 nodes configured
4 resources configured
Node drbd-node1: online
    p_drbd_r0 (ocf::linbit:drbd): Master
    p_fs_drbd0 (ocf::heartbeat:Filesystem): Started
    p_nfsserver (ocf::heartbeat:nfsserver): Started
Node drbd-node3: online
    p_drbd_r0 (ocf::linbit:drbd): Slave
No inactive resources

Configuring the NFS export (exportfs)

Create the export directory on the primary node

[root@drbd-node1 ~]# mkdir -p /mnt/nfs/exports/dir1
[root@drbd-node1 ~]# chown nfsnobody:nfsnobody /mnt/nfs/exports/dir1/

Configuring exportfs resources

[root@drbd-node1 ~]# crm configure
crm(live)configure# primitive p_exportfs_dir1 ocf:heartbeat:exportfs \
> params clientspec=10.10.200.0/24 directory=/mnt/nfs/exports/dir1 fsid=1 \
> unlock_on_stop=1 options=rw,sync \
> op start interval=0s timeout=40s \
> op stop interval=0s timeout=120s \
> op monitor interval=10s timeout=20s
crm(live)configure# order o_nfsserver-before-exportfs-dir1 \
> inf: p_nfsserver p_exportfs_dir1
crm(live)configure# colocation c_exportfs-with-nfsserver \
> inf: p_exportfs_dir1 p_nfsserver
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# exit
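Two of these parameters matter most for failover:

- clientspec=10.10.200.0/24 limits the export to clients in that subnet.
- fsid=1 gives the export a fixed file-system ID, so NFS file handles stay valid when the export moves to the other node.

To check whether a given client address is covered by clientspec, a bash sketch with hypothetical `ip_to_int` and `ip_in_cidr` helpers follows. In the tests, 10.10.201.5 is an arbitrary outside address chosen for illustration; substitute the real client address.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: test whether an IPv4 address falls inside a CIDR block,
# e.g. whether a client is covered by clientspec=10.10.200.0/24.

# Convert dotted-quad IPv4 to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Succeed if address $1 is inside network $2 (for example 10.10.200.0/24).
ip_in_cidr() {
  local ip=$1 net=${2%/*} bits=${2#*/}
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```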

Check the export list

[root@drbd-node1 ~]# showmount -e drbd-node1
Export list for drbd-node1:
/mnt/nfs/exports/dir1 10.10.200.0/24

crm_mon now also lists the exportfs resource:

[root@drbd-node1 ~]# crm_mon -rf -n1
Stack: corosync
Current DC: drbd-node3 (version 1.1.18-11.el7_5.3-2b07d5c5a9) - partition with quorum
Last updated: Sat Jul 7 22:41:23 2018
Last change: Sat Jul 7 22:40:10 2018 by root via cibadmin on drbd-node1
2 nodes configured
5 resources configured
Node drbd-node1: online
    p_drbd_r0 (ocf::linbit:drbd): Master
    p_fs_drbd0 (ocf::heartbeat:Filesystem): Started
    p_nfsserver (ocf::heartbeat:nfsserver): Started
    p_exportfs_dir1 (ocf::heartbeat:exportfs): Started
Node drbd-node3: online
    p_drbd_r0 (ocf::linbit:drbd): Slave
No inactive resources

Configuring the virtual IP

Note that the nic parameter must name the network interface that carries the virtual IP (ens3 here). An interface with that name must exist on both nodes.

[root@drbd-node1 ~]# crm configure
crm(live)configure# primitive p_virtip_dir1 ocf:heartbeat:IPaddr2 \
> params ip=10.10.200.235 cidr_netmask=24 nic=ens3 \
> op monitor interval=20s timeout=20s \
> op start interval=0s timeout=20s \
> op stop interval=0s timeout=20s
crm(live)configure# order o_exportfs_dir1-before-p_virtip_dir1 \
> inf: p_exportfs_dir1 p_virtip_dir1
crm(live)configure# colocation c_virtip_dir1-with-exportfs-dir1 \
> inf: p_virtip_dir1 p_exportfs_dir1
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# exit
bye
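Taken together, the order constraints form a single chain: promote DRBD, mount the file system, start the NFS server, export the directory, then bring up the virtual IP. Each colocation constraint keeps the dependent resource on the same node as the one it follows. Order constraints are symmetrical by default, so Pacemaker stops the resources in the reverse sequence.

The chain can be made explicit with `tsort` from coreutils. This is a sketch assuming each order constraint is written on a single line, with the `:start` action suffix stripped. `start_sequence` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read crm "order" constraints (one per line), e.g.
#   order o_fs_drbd0-before-nfsserver inf: p_fs_drbd0 p_nfsserver
# and print the start sequence they imply, using coreutils tsort.
start_sequence() {
  awk '$1 == "order" {
         a = $4; b = $5
         sub(/:start$/, "", a); sub(/:start$/, "", b)   # "start" is the default action
         print a, b                                      # tsort pair: a before b
       }' | tsort
}
```

Piping the result through `tac` gives the reverse (stop) sequence.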

At this point, the NFS client can query the exports through the virtual IP address:

[root@kvm-node ~]# showmount -e 10.10.200.235
Export list for 10.10.200.235:
/mnt/nfs/exports/dir1 10.10.200.0/24
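For the failover test, it is convenient to repeat this check from a script on the client. Below is a minimal sketch with a hypothetical `has_export` helper. It reads `showmount -e` output on stdin and succeeds only if the given directory is exported to the given client spec. It assumes a single client spec per export line.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "showmount -e" output on stdin and succeed only if
# directory $1 is exported to client spec $2 (one client spec per line assumed).
has_export() {
  awk -v path="$1" -v spec="$2" '
    $1 == path && $2 == spec { found = 1 }
    END { exit !found }
  '
}
```

For example: `showmount -e 10.10.200.235 | has_export /mnt/nfs/exports/dir1 10.10.200.0/24 && echo exported`.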

View the resources managed by Pacemaker:

[root@drbd-node1 ~]# crm_mon -rf -n1
Stack: corosync
Current DC: drbd-node3 (version 1.1.18-11.el7_5.3-2b07d5c5a9) - partition with quorum
Last updated: Sat Jul 7 22:51:21 2018
Last change: Sat Jul 7 22:48:45 2018 by root via cibadmin on drbd-node1
2 nodes configured
6 resources configured
Node drbd-node1: online
    p_drbd_r0 (ocf::linbit:drbd): Master
    p_fs_drbd0 (ocf::heartbeat:Filesystem): Started
    p_nfsserver (ocf::heartbeat:nfsserver): Started
    p_exportfs_dir1 (ocf::heartbeat:exportfs): Started
    p_virtip_dir1 (ocf::heartbeat:IPaddr2): Started
Node drbd-node3: online
    p_drbd_r0 (ocf::linbit:drbd): Slave
No inactive resources

The NFS cluster configuration is now complete; the following tests verify it.

Testing NFS connections

Mount the exported directory on the NFS client:

[root@kvm-node ~]# mount 10.10.200.235:/mnt/nfs/exports/dir1 /mnt/nfs/
[root@kvm-node ~]# df -h
Filesystem                           Size  Used Avail Use% Mounted on
/dev/mapper/centos-root               50G  2.5G   48G   5% /
devtmpfs                             7.8G     0  7.8G   0% /dev
tmpfs                                7.8G     0  7.8G   0% /dev/shm
tmpfs                                7.8G  8.8M  7.8G   1% /run
tmpfs                                7.8G     0  7.8G   0% /sys/fs/cgroup
/dev/sdb                             931G   37G  894G   4% /drbd1
/dev/sde                             931G  501G  431G  54% /drbd4
/dev/sdc                             931G  501G  431G  54% /drbd2
/dev/sdd                             931G  8.1G  923G   1% /drbd3
/dev/sdf                             931G  501G  431G  54% /drbd5
/dev/sda1                           1014M  191M  824M  19% /boot
/dev/mapper/centos-home              872G   35G  838G   4% /home
tmpfs                                1.6G     0  1.6G   0% /run/user/0
10.10.200.235:/mnt/nfs/exports/dir1  500G   32M  500G   1% /mnt/nfs
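The crm status output alone does not show how long clients actually stall while the resources move. One way to measure this during the failover test is as follows:

1. Have the client append a timestamp to a file on the NFS mount once per second.
2. Trigger the failover.
3. Look for the largest gap between consecutive timestamps.

With the default hard mount, client I/O blocks and retries rather than failing while the server is unreachable, so that gap approximates the client-visible interruption. A sketch with hypothetical helpers `probe_writes` and `max_gap`:

```bash
#!/usr/bin/env bash
# Hypothetical failover probe for the NFS client.

# Append the current epoch time to file $1 once per second until interrupted.
# On a hard NFS mount the write simply blocks while the server is unreachable.
probe_writes() {
  local file=$1
  while :; do
    date +%s >> "$file"
    sleep 1
  done
}

# Print the largest gap, in seconds, between consecutive timestamps in file $1.
max_gap() {
  awk 'NR > 1 && $1 - prev > gap { gap = $1 - prev } { prev = $1 } END { print gap + 0 }' "$1"
}
```

For example, start `probe_writes /mnt/nfs/probe.log &` on the client and take down the primary node. Once crm status shows the resources on the standby node, stop the probe with `kill %1` and run `max_gap /mnt/nfs/probe.log`. Without a failover, the gap stays at about 1 second.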

Failover test

Simulate a failure of the primary node drbd-node1. Before drbd-node1 goes down, the cluster status is as follows:

[root@drbd-node1 nfs]# crm status
Stack: corosync
Current DC: drbd-node3 (version 1.1.18-11.el7_5.3-2b07d5c5a9) - partition with quorum
Last updated: Sat Jul 7 22:56:58 2018
Last change: Sat Jul 7 22:48:45 2018 by root via cibadmin on drbd-node1
2 nodes configured
6 resources configured
Online: [ drbd-node1 drbd-node3 ]
Full list of resources:
 Master/Slave Set: ms_drbd_r0 [p_drbd_r0]
     Masters: [ drbd-node1 ]
     Slaves: [ drbd-node3 ]
 p_fs_drbd0 (ocf::heartbeat:Filesystem): Started drbd-node1
 p_nfsserver (ocf::heartbeat:nfsserver): Started drbd-node1
 p_exportfs_dir1 (ocf::heartbeat:exportfs): Started drbd-node1
 p_virtip_dir1 (ocf::heartbeat:IPaddr2): Started drbd-node1

After the simulated failure of the primary node drbd-node1, all resources fail over to the standby node drbd-node3:

[root@drbd-node3 ~]# crm status
Stack: corosync
Current DC: drbd-node3 (version 1.1.18-11.el7_5.3-2b07d5c5a9) - partition with quorum
Last updated: Sat Jul 7 22:57:58 2018
Last change: Sat Jul 7 22:48:45 2018 by root via cibadmin on drbd-node1
2 nodes configured
6 resources configured
Online: [ drbd-node3 ]
OFFLINE: [ drbd-node1 ]
Full list of resources:
 Master/Slave Set: ms_drbd_r0 [p_drbd_r0]
     Masters: [ drbd-node3 ]
     Stopped: [ drbd-node1 ]
 p_fs_drbd0 (ocf::heartbeat:Filesystem): Started drbd-node3
 p_nfsserver (ocf::heartbeat:nfsserver): Started drbd-node3
 p_exportfs_dir1 (ocf::heartbeat:exportfs): Started drbd-node3
 p_virtip_dir1 (ocf::heartbeat:IPaddr2): Started drbd-node3
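Every resource is colocated, directly or through the chain of constraints, with the DRBD Master, so a successful failover leaves all of them on one node. The sketch below automates that check over crm status output, using a hypothetical `all_on_one_node` helper. It assumes the `Masters: [ ... ]` and `Started <node>` line formats shown above and a single Master.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "crm status" output on stdin; print the node name and
# succeed if the DRBD Master and every "Started <node>" resource share one node.
all_on_one_node() {
  awk '
    /Masters: \[/ { node = $3 }                 # "Masters: [ drbd-node3 ]"
    / Started /   { node = $NF }                # "p_x (...): Started drbd-node3"
    node != ""    { seen[node] = 1; node = "" }
    END {
      n = 0
      for (k in seen) { n++; last = k }
      if (n == 1) { print last; exit 0 }
      exit 1
    }
  '
}
```

For the status above, `crm status | all_on_one_node` would print drbd-node3.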

On the NFS client, the export list and the NFS mount are still available:

[root@kvm-node ~]# showmount -e 10.10.200.235
Export list for 10.10.200.235:
/mnt/nfs/exports/dir1 10.10.200.0/24
[root@kvm-node ~]# df -h
Filesystem                           Size  Used Avail Use% Mounted on
/dev/mapper/centos-root               50G  2.5G   48G   5% /
devtmpfs                             7.8G     0  7.8G   0% /dev
tmpfs                                7.8G     0  7.8G   0% /dev/shm
tmpfs                                7.8G  8.8M  7.8G   1% /run
tmpfs                                7.8G     0  7.8G   0% /sys/fs/cgroup
/dev/sdb                             931G   37G  894G   4% /drbd1
/dev/sde                             931G  501G  431G  54% /drbd4
/dev/sdc                             931G  501G  431G  54% /drbd2
/dev/sdd                             931G  8.1G  923G   1% /drbd3
/dev/sdf                             931G  501G  431G  54% /drbd5
/dev/sda1                           1014M  191M  824M  19% /boot
/dev/mapper/centos-home              872G   35G  838G   4% /home
tmpfs                                1.6G     0  1.6G   0% /run/user/0
10.10.200.235:/mnt/nfs/exports/dir1  500G   32M  500G   1% /mnt/nfs