1. Environment

Operating system: Ubuntu 18.04

FFmpeg version: 4.4.2

Camera: USB camera, attached to the virtual machine from the host machine

2. Download and compile FFmpeg and x264

x264 download address: http://www.videolan.org/developers/x264.html

FFmpeg download address: https://ffmpeg.org/download.html

Yasm download address (official website): http://yasm.tortall.net/Download.html

Compiling x264 on a Linux PC requires the yasm assembler. (Newer x264 snapshots, from around 2017 onward, use nasm instead; x264 can also be configured with --disable-asm at the cost of encoding speed.)

To compile and install yasm:

./configure
make
make install

Compile the x264 library:

./configure --prefix=$PWD/_install --enable-shared --enable-static
make
make install

Compile the FFmpeg library (it depends on the x264 library; change the paths to your own x264 install directory):

./configure --enable-static --enable-shared --prefix=$PWD/_install --extra-cflags=-I/home/wbyq/pc_work/x264-snapshot-20160527-2245/_install/include --extra-ldflags=-L/home/wbyq/pc_work/x264-snapshot-20160527-2245/_install/lib --enable-ffmpeg --enable-libx264 --enable-gpl
make
make install

3. Program overview

Note: the program below is a modified version of muxing.c, the example program that ships with FFmpeg.

Overview: the program has two threads. A child thread reads camera frames in YUYV format through the standard Linux V4L2 framework and converts them to YUV420P, the pixel format the H.264 encoder is configured with here (STREAM_PIX_FMT); the main thread encodes the video and audio. The program does not yet capture real audio: for the audio track it uses the synthetic tone generated by the FFmpeg example code.

For audio capture under Linux, see: https://blog.csdn.net/xiaolong1126626497/article/details/104916277

To make the code easy to paste, the whole project is kept in a single .c file instead of being split across several files.

How the program runs: it records the camera in a loop, saving each 10-second segment to a local file. Each file is named after the current system time, and the container format is MP4.

4. Source code

#include <stdlib.h>
#include <stdio.h>
#include <string.h>
#include <math.h>
#include <time.h>
#include <libavutil/avassert.h>
#include <libavutil/channel_layout.h>
#include <libavutil/opt.h>
#include <libavutil/mathematics.h>
#include <libavutil/timestamp.h>
#include <libavformat/avformat.h>
#include <libswscale/swscale.h>
#include <libswresample/swresample.h>
#include <sys/ioctl.h>
#include <linux/videodev2.h>
#include <sys/types.h>
#include <sys/stat.h>
#include <fcntl.h>
#include <sys/mman.h>
#include <poll.h>
#include <sys/socket.h>
#include <arpa/inet.h>
#include <pthread.h>
#include <signal.h>
#include <unistd.h>

#define STREAM_DURATION 10.0 /* record 10 seconds per file; in the author's tests a file often held only about 8 seconds, attributed to buffering */
#define STREAM_FRAME_RATE 25 /* 25 frames/s */
#define STREAM_PIX_FMT AV_PIX_FMT_YUV420P /* default pix_fmt */
#define SCALE_FLAGS SWS_BICUBIC

// Fixed camera output size
#define VIDEO_WIDTH 640
#define VIDEO_HEIGHT 480

// Latest camera frame converted to YUV420P (written by the capture thread)
unsigned char YUV420P_Buffer[VIDEO_WIDTH*VIDEO_HEIGHT*3/2];
// Private copy used by the encoding thread
unsigned char YUV420P_Buffer_temp[VIDEO_WIDTH*VIDEO_HEIGHT*3/2];

/* Camera-related global variables */
unsigned char *image_buffer[4]; /* mmap'ed V4L2 capture buffers */
int video_fd;
pthread_mutex_t mutex;
pthread_cond_t cond;

// Wrapper around a single output AVStream
typedef struct OutputStream {
    AVStream *st;
    AVCodecContext *enc;
    /* pts of the next frame that will be generated */
    int64_t next_pts;
    int samples_count;
    AVFrame *frame;
    AVFrame *tmp_frame;
    float t, tincr, tincr2;
    struct SwsContext *sws_ctx;
    struct SwrContext *swr_ctx;
} OutputStream;

static int write_frame(AVFormatContext *fmt_ctx, const AVRational *time_base, AVStream *st, AVPacket *pkt)
{
    /* Rescale the packet timestamps from the codec time base to the stream time base */
    av_packet_rescale_ts(pkt, *time_base, st->time_base);
    pkt->stream_index = st->index;
    /* Write the compressed frame to the media file */
    return av_interleaved_write_frame(fmt_ctx, pkt);
}

/* Add an output stream. */
static void add_stream(OutputStream *ost, AVFormatContext *oc,
                       AVCodec **codec,
                       enum AVCodecID codec_id)
{
    AVCodecContext *c;

    /* find the encoder */
    *codec = avcodec_find_encoder(codec_id);
    if (!(*codec)) {
        fprintf(stderr, "Could not find encoder for '%s'\n",
                avcodec_get_name(codec_id));
        exit(1);
    }
    ost->st = avformat_new_stream(oc, NULL);
    if (!ost->st) {
        fprintf(stderr, "Could not allocate stream\n");
        exit(1);
    }
    ost->st->id = oc->nb_streams-1;
    c = avcodec_alloc_context3(*codec);
    if (!c) {
        fprintf(stderr, "Could not alloc an encoding context\n");
        exit(1);
    }
    ost->enc = c;

    switch ((*codec)->type) {
    case AVMEDIA_TYPE_AUDIO:
        c->sample_fmt = (*codec)->sample_fmts ? (*codec)->sample_fmts[0] : AV_SAMPLE_FMT_FLTP;
        c->bit_rate = 64000;    // bit rate: 64 kbit/s
        c->sample_rate = 44100; // audio sampling rate
        c->channel_layout = AV_CH_LAYOUT_MONO; // AV_CH_LAYOUT_MONO = mono, AV_CH_LAYOUT_STEREO = stereo
        c->channels = av_get_channel_layout_nb_channels(c->channel_layout);
        ost->st->time_base = (AVRational){ 1, c->sample_rate };
        break;

    case AVMEDIA_TYPE_VIDEO:
        c->codec_id = codec_id;
        // Bit rate: the file size grows roughly in proportion to it
        // (higher bit rate, larger file; lower bit rate, smaller file)
        c->bit_rate = 400000;   // 400 kbit/s
        /* Resolution must be a multiple of 2. */
        c->width = VIDEO_WIDTH;
        c->height = VIDEO_HEIGHT;
        /* Time base: the fundamental unit of time (in seconds) in which
         * frame timestamps are represented. For fixed-fps content the
         * time base should be 1/framerate and the timestamp increment
         * should be exactly 1. */
        ost->st->time_base = (AVRational){ 1, STREAM_FRAME_RATE };
        c->time_base = ost->st->time_base;
        c->gop_size = 12;       /* at most 12 frames between intra (key) frames */
        c->pix_fmt = STREAM_PIX_FMT;
        c->max_b_frames = 0;    // no B-frames
        if (c->codec_id == AV_CODEC_ID_MPEG1VIDEO) {
            c->mb_decision = 2;
        }
        break;

    default:
        break;
    }

    /* Some formats want stream headers to be separate. */
    if (oc->oformat->flags & AVFMT_GLOBALHEADER)
        c->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;
}

/**************************************************************/
/* audio output */
static AVFrame *alloc_audio_frame(enum AVSampleFormat sample_fmt,
                                  uint64_t channel_layout,
                                  int sample_rate, int nb_samples)
{
    AVFrame *frame = av_frame_alloc();
    if (!frame) {
        fprintf(stderr, "Error allocating an audio frame\n");
        exit(1);
    }
    frame->format = sample_fmt;
    frame->channel_layout = channel_layout;
    frame->sample_rate = sample_rate;
    frame->nb_samples = nb_samples;
    if (nb_samples) {
        if (av_frame_get_buffer(frame, 0) < 0) {
            fprintf(stderr, "Error allocating an audio buffer\n");
            exit(1);
        }
    }
    return frame;
}

static void open_audio(AVFormatContext *oc, AVCodec *codec, OutputStream *ost, AVDictionary *opt_arg)
{
    AVCodecContext *c;
    int nb_samples;
    int ret;
    AVDictionary *opt = NULL;

    c = ost->enc;
    av_dict_copy(&opt, opt_arg, 0);
    ret = avcodec_open2(c, codec, &opt);
    av_dict_free(&opt);
    if (ret < 0) {
        fprintf(stderr, "Could not open audio codec: %s\n", av_err2str(ret));
        exit(1);
    }

    /* The next three lines set up the synthetic test tone:
       it starts at 110 Hz and its frequency rises by 110 Hz every second */
    ost->t = 0;
    ost->tincr = 2 * M_PI * 110.0 / c->sample_rate;
    ost->tincr2 = 2 * M_PI * 110.0 / c->sample_rate / c->sample_rate;

    // For AAC the encoder frame size is fixed at 1024 samples
    nb_samples = c->frame_size;
    ost->frame = alloc_audio_frame(c->sample_fmt, c->channel_layout,
                                   c->sample_rate, nb_samples);
    ost->tmp_frame = alloc_audio_frame(AV_SAMPLE_FMT_S16, c->channel_layout,
                                       c->sample_rate, nb_samples);

    /* copy the stream parameters to the muxer */
    avcodec_parameters_from_context(ost->st->codecpar, c);

    /* create resampler context */
    ost->swr_ctx = swr_alloc();
    if (!ost->swr_ctx) {
        fprintf(stderr, "Could not allocate resampler context\n");
        exit(1);
    }
    /* set options */
    av_opt_set_int       (ost->swr_ctx, "in_channel_count",  c->channels,       0);
    av_opt_set_int       (ost->swr_ctx, "in_sample_rate",    c->sample_rate,    0);
    av_opt_set_sample_fmt(ost->swr_ctx, "in_sample_fmt",     AV_SAMPLE_FMT_S16, 0);
    av_opt_set_int       (ost->swr_ctx, "out_channel_count", c->channels,       0);
    av_opt_set_int       (ost->swr_ctx, "out_sample_rate",   c->sample_rate,    0);
    av_opt_set_sample_fmt(ost->swr_ctx, "out_sample_fmt",    c->sample_fmt,     0);
    /* initialize the resampling context */
    if (swr_init(ost->swr_ctx) < 0) {
        fprintf(stderr, "Failed to initialize the resampling context\n");
        exit(1);
    }
}

/*
 Prepare a synthetic audio frame.
 This can be replaced with PCM data read from the sound card.
*/
static AVFrame *get_audio_frame(OutputStream *ost)
{
    AVFrame *frame = ost->tmp_frame;
    int j, i, v;
    int16_t *q = (int16_t*)frame->data[0];

    /* Stop generating frames once STREAM_DURATION has been reached */
    if (av_compare_ts(ost->next_pts, ost->enc->time_base,
                      STREAM_DURATION, (AVRational){ 1, 1 }) >= 0)
        return NULL;

    for (j = 0; j < frame->nb_samples; j++) { // nb_samples: number of audio samples (per channel) in this frame
        v = (int)(sin(ost->t) * 1000);
        for (i = 0; i < ost->enc->channels; i++) { // channels: number of audio channels
            *q++ = v; // interleaved S16 sample
        }
        ost->t += ost->tincr;
        ost->tincr += ost->tincr2;
    }
    frame->pts = ost->next_pts;
    ost->next_pts += frame->nb_samples;
    return frame;
}

/*
 * Encode one audio frame and send it to the muxer.
 * Returns 1 when encoding is finished, 0 otherwise.
 */
static int write_audio_frame(AVFormatContext *oc, OutputStream *ost)
{
    AVCodecContext *c;
    AVPacket pkt = { 0 };
    AVFrame *frame;
    int ret;
    int got_packet = 0;
    int dst_nb_samples;

    av_init_packet(&pkt);
    c = ost->enc;
    frame = get_audio_frame(ost);
    if (frame) {
        /* Convert samples from the native format to the encoder format with the resampler.
           First compute the destination number of samples */
        dst_nb_samples = av_rescale_rnd(swr_get_delay(ost->swr_ctx, c->sample_rate) + frame->nb_samples,
                                        c->sample_rate, c->sample_rate, AV_ROUND_UP);
        av_assert0(dst_nb_samples == frame->nb_samples);

        /* The encoder may still hold a reference to the previous frame */
        if (av_frame_make_writable(ost->frame) < 0)
            exit(1);

        /* Convert to the destination format */
        ret = swr_convert(ost->swr_ctx,
                          ost->frame->data, dst_nb_samples,
                          (const uint8_t **)frame->data, frame->nb_samples);
        if (ret < 0) {
            fprintf(stderr, "Error while converting\n");
            exit(1);
        }
        frame = ost->frame;
        frame->pts = av_rescale_q(ost->samples_count, (AVRational){1, c->sample_rate}, c->time_base);
        ost->samples_count += dst_nb_samples;
    }

    /* frame == NULL flushes the encoder */
    ret = avcodec_encode_audio2(c, &pkt, frame, &got_packet);
    if (ret < 0) {
        fprintf(stderr, "Error encoding audio frame: %s\n", av_err2str(ret));
        exit(1);
    }
    if (got_packet) {
        ret = write_frame(oc, &c->time_base, ost->st, &pkt);
        if (ret < 0) {
            fprintf(stderr, "Error while writing audio frame: %s\n", av_err2str(ret));
            exit(1);
        }
    }
    return (frame || got_packet) ? 0 : 1;
}

static AVFrame *alloc_picture(enum AVPixelFormat pix_fmt, int width, int height)
{
    AVFrame *picture = av_frame_alloc();
    if (!picture) {
        fprintf(stderr, "Could not allocate video frame\n");
        exit(1);
    }
    picture->format = pix_fmt;
    picture->width = width;
    picture->height = height;
    /* allocate the buffers for the frame data */
    if (av_frame_get_buffer(picture, 32) < 0) {
        fprintf(stderr, "Could not allocate frame data.\n");
        exit(1);
    }
    return picture;
}

static void open_video(AVFormatContext *oc, AVCodec *codec, OutputStream *ost, AVDictionary *opt_arg)
{
    int ret;
    AVCodecContext *c = ost->enc;
    AVDictionary *opt = NULL;

    av_dict_copy(&opt, opt_arg, 0);
    /* open the codec */
    ret = avcodec_open2(c, codec, &opt);
    av_dict_free(&opt);
    if (ret < 0) {
        fprintf(stderr, "Could not open video codec: %s\n", av_err2str(ret));
        exit(1);
    }
    /* allocate and init a re-usable frame */
    ost->frame = alloc_picture(c->pix_fmt, c->width, c->height);
    ost->tmp_frame = NULL;
    /* Copy the stream parameters to the muxer */
    if (avcodec_parameters_from_context(ost->st->codecpar, c) < 0) {
        fprintf(stderr, "Could not copy the stream parameters\n");
        exit(1);
    }
}

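/*
 Prepare image data
 YUV422 frame size = width*height*2 bytes
 YUV420 frame size = width*height*3/2 bytes
*/
static void fill_yuv_image(AVFrame *pict, int frame_index, int width, int height)
{
    int y_size = width*height;
    int i;

    /* pthread_cond_wait() must be called with the mutex locked */
    pthread_mutex_lock(&mutex);
    /* Wait until the capture thread signals that a new frame is ready */
    pthread_cond_wait(&cond, &mutex);
    memcpy(YUV420P_Buffer_temp, YUV420P_Buffer, sizeof(YUV420P_Buffer));
    /* Unlock the mutex */
    pthread_mutex_unlock(&mutex);

    // Copy the Y, U and V planes row by row, since linesize may exceed the width
    for (i = 0; i < height; i++)
        memcpy(pict->data[0] + i*pict->linesize[0], YUV420P_Buffer_temp + i*width, width);
    for (i = 0; i < height/2; i++) {
        memcpy(pict->data[1] + i*pict->linesize[1], YUV420P_Buffer_temp + y_size + i*width/2, width/2);
        memcpy(pict->data[2] + i*pict->linesize[2], YUV420P_Buffer_temp + y_size + y_size/4 + i*width/2, width/2);
    }
}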

static AVFrame *get_video_frame(OutputStream *ost)
{
    AVCodecContext *c = ost->enc;

    /* Stop generating frames once STREAM_DURATION has been reached (ends this recording) */
    if (av_compare_ts(ost->next_pts, c->time_base, STREAM_DURATION, (AVRational){ 1, 1 }) >= 0)
        return NULL;

    /* When we pass a frame to the encoder, it may keep a reference to it
     * internally; make sure we do not overwrite it here */
    if (av_frame_make_writable(ost->frame) < 0)
        exit(1);

    // Fill the frame with the latest camera image (blocks until the capture thread delivers one)
    fill_yuv_image(ost->frame, ost->next_pts, c->width, c->height);
    // PTS (presentation timestamp) counts frames in the 1/STREAM_FRAME_RATE time base
    ost->frame->pts = ost->next_pts++;
    return ost->frame;
}

/*
 * Encode one video frame and send it to the muxer.
 * Returns 1 when encoding is finished, 0 otherwise.
 */
static int write_video_frame(AVFormatContext *oc, OutputStream *ost)
{
    int ret;
    AVCodecContext *c;
    AVFrame *frame;
    int got_packet = 0;
    AVPacket pkt = { 0 };

    c = ost->enc;
    // Get one frame (NULL once the duration is reached, which flushes the encoder)
    frame = get_video_frame(ost);
    av_init_packet(&pkt);

    /* Encode the image */
    ret = avcodec_encode_video2(c, &pkt, frame, &got_packet);
    if (ret < 0) {
        fprintf(stderr, "Error encoding video frame: %s\n", av_err2str(ret));
        exit(1);
    }
    if (got_packet) {
        ret = write_frame(oc, &c->time_base, ost->st, &pkt);
        if (ret < 0) {
            fprintf(stderr, "Error while writing video frame: %s\n", av_err2str(ret));
            exit(1);
        }
    }
    return (frame || got_packet) ? 0 : 1;
}

static void close_stream(AVFormatContext *oc, OutputStream *ost)
{
    avcodec_free_context(&ost->enc);
    av_frame_free(&ost->frame);
    av_frame_free(&ost->tmp_frame);
    sws_freeContext(ost->sws_ctx);
    swr_free(&ost->swr_ctx);
}

// Encode video and audio into one output file
int video_audio_encode(char *filename)
{
    OutputStream video_st = { 0 }, audio_st = { 0 };
    AVOutputFormat *fmt;
    AVFormatContext *oc = NULL;
    AVCodec *audio_codec = NULL, *video_codec = NULL;
    int ret;
    int have_video = 0, have_audio = 0;
    int encode_video = 0, encode_audio = 0;
    AVDictionary *opt = NULL;

    /* Allocate the output media context; the format is deduced from the file name */
    avformat_alloc_output_context2(&oc, NULL, NULL, filename);
    if (!oc) {
        fprintf(stderr, "Could not deduce output format from file name: %s\n", filename);
        return 1;
    }
    fmt = oc->oformat;

    /* Add the audio and video streams using the format's default codecs and initialize the codecs. */
    if (fmt->video_codec != AV_CODEC_ID_NONE) {
        add_stream(&video_st, oc, &video_codec, fmt->video_codec);
        have_video = 1;
        encode_video = 1;
    }
    if (fmt->audio_codec != AV_CODEC_ID_NONE) {
        add_stream(&audio_st, oc, &audio_codec, fmt->audio_codec);
        have_audio = 1;
        encode_audio = 1;
    }

    /* Now that all the parameters are set, open the audio and video codecs and allocate the necessary encode buffers. */
    if (have_video)
        open_video(oc, video_codec, &video_st, opt);
    if (have_audio)
        open_audio(oc, audio_codec, &audio_st, opt);

    av_dump_format(oc, 0, filename, 1);

    /* Open the output file, if needed */
    if (!(fmt->flags & AVFMT_NOFILE)) {
        ret = avio_open(&oc->pb, filename, AVIO_FLAG_WRITE);
        if (ret < 0) {
            fprintf(stderr, "Could not open output file '%s': %s\n", filename, av_err2str(ret));
            return 1;
        }
    }

    /* Write the stream header, if any */
    ret = avformat_write_header(oc, &opt);
    if (ret < 0) {
        fprintf(stderr, "Error occurred when writing the header: %s\n", av_err2str(ret));
        return 1;
    }

    while (encode_video || encode_audio) {
        /* Encode whichever stream has the earlier next timestamp */
        if (encode_video &&
            (!encode_audio || av_compare_ts(video_st.next_pts, video_st.enc->time_base,
                                            audio_st.next_pts, audio_st.enc->time_base) <= 0)) {
            encode_video = !write_video_frame(oc, &video_st);
        } else {
            encode_audio = !write_audio_frame(oc, &audio_st);
        }
    }

    /* The trailer must be written before the codec contexts are freed */
    av_write_trailer(oc);

    if (have_video)
        close_stream(oc, &video_st);
    if (have_audio)
        close_stream(oc, &audio_st);
    if (!(fmt->flags & AVFMT_NOFILE))
        avio_closep(&oc->pb);
    avformat_free_context(oc);
    return 0;
}

/*
 Function: initialize the camera device
*/
int VideoDeviceInit(char *DEVICE_NAME)
{
    unsigned int i;

    /* 1. Open the camera device */
    video_fd = open(DEVICE_NAME, O_RDWR);
    if (video_fd < 0) return -1;

    /* 2. Set the color format and output image size */
    struct v4l2_format video_formt;
    memset(&video_formt, 0, sizeof(struct v4l2_format));
    video_formt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE; /* video capture device */
    video_formt.fmt.pix.height = VIDEO_HEIGHT;      // 480
    video_formt.fmt.pix.width = VIDEO_WIDTH;        // 640
    video_formt.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV;
    if (ioctl(video_fd, VIDIOC_S_FMT, &video_formt)) return -2;
    printf("Current camera size: width*height=%d*%d\n", video_formt.fmt.pix.width, video_formt.fmt.pix.height);
    /* The driver may adjust the request; the rest of the program assumes exactly 640x480 YUYV */
    if (video_formt.fmt.pix.width != VIDEO_WIDTH || video_formt.fmt.pix.height != VIDEO_HEIGHT ||
        video_formt.fmt.pix.pixelformat != V4L2_PIX_FMT_YUYV) return -7;

    /* 3. Request the capture buffers */
    struct v4l2_requestbuffers video_requestbuffers;
    memset(&video_requestbuffers, 0, sizeof(struct v4l2_requestbuffers));
    video_requestbuffers.count = 4;
    video_requestbuffers.type = V4L2_BUF_TYPE_VIDEO_CAPTURE; /* video capture device */
    video_requestbuffers.memory = V4L2_MEMORY_MMAP;
    if (ioctl(video_fd, VIDIOC_REQBUFS, &video_requestbuffers)) return -3;
    printf("video_requestbuffers.count=%u\n", video_requestbuffers.count);
    /* image_buffer[] only has room for 4 buffers */
    if (video_requestbuffers.count > 4) return -3;

    /* 4. Query each buffer and map it into user space */
    struct v4l2_buffer video_buffer;
    for (i = 0; i < video_requestbuffers.count; i++) {
        memset(&video_buffer, 0, sizeof(struct v4l2_buffer));
        video_buffer.type = V4L2_BUF_TYPE_VIDEO_CAPTURE; /* video capture device */
        video_buffer.memory = V4L2_MEMORY_MMAP;
        video_buffer.index = i; /* buffer number */
        if (ioctl(video_fd, VIDIOC_QUERYBUF, &video_buffer)) return -4;
        /* Map the buffer */
        image_buffer[i] = mmap(NULL, video_buffer.length, PROT_READ|PROT_WRITE, MAP_SHARED, video_fd, video_buffer.m.offset);
        if (image_buffer[i] == MAP_FAILED) return -4;
        printf("image_buffer[%u]=%p\n", i, (void *)image_buffer[i]);
    }

    /* 5. Queue the buffers for capture */
    for (i = 0; i < video_requestbuffers.count; i++) {
        memset(&video_buffer, 0, sizeof(struct v4l2_buffer));
        video_buffer.type = V4L2_BUF_TYPE_VIDEO_CAPTURE; /* video capture device */
        video_buffer.memory = V4L2_MEMORY_MMAP;
        video_buffer.index = i; /* buffer number */
        if (ioctl(video_fd, VIDIOC_QBUF, &video_buffer)) return -5;
    }

    /* 6. Start streaming */
    enum v4l2_buf_type type = V4L2_BUF_TYPE_VIDEO_CAPTURE; /* video capture device */
    if (ioctl(video_fd, VIDIOC_STREAMON, &type)) return -6;
    return 0;
}

// YUYV is a packed YUV 4:2:2 format
int yuyv_to_yuv420p(const unsigned char *in, unsigned char *out, unsigned int width, unsigned int height)
{
    unsigned char *y = out;
    unsigned char *u = out + width*height;
    unsigned char *v = out + width*height + width*height/4;
    unsigned int i, j;
    unsigned int base_h;
    unsigned int is_u = 1;
    unsigned int y_index = 0, u_index = 0, v_index = 0;
    unsigned long yuv422_length = 2 * width * height;

    // Byte order is Y U Y V Y U Y V ...; one YUYV frame is width*height*2 bytes.
    // Y: every even byte
    for (i = 0; i < yuv422_length; i += 2) {
        *(y+y_index) = *(in+i);
        y_index++;
    }
    // U/V: taken from even rows only; the chroma of odd rows is dropped (vertical 2:1 subsampling)
    for (i = 0; i < height; i += 2) {
        base_h = i*width*2;
        for (j = base_h+1; j < base_h+width*2; j += 2) {
            if (is_u) {
                *(u+u_index) = *(in+j);
                u_index++;
                is_u = 0;
            } else {
                *(v+v_index) = *(in+j);
                v_index++;
                is_u = 1;
            }
        }
    }
    return 1;
}

/*
 Capture thread: reads frames from the camera
*/
void *pthread_read_video_data(void *arg)
{
    /* 1. Poll the camera for new frames in a loop */
    struct pollfd fds;
    fds.fd = video_fd;
    fds.events = POLLIN;
    /* 2. Descriptor for dequeued capture buffers */
    struct v4l2_buffer video_buffer;

    (void)arg;
    while (1) {
        /* (1) Wait for the camera to deliver a frame */
        if (poll(&fds, 1, -1) <= 0)
            continue;
        /* (2) Dequeue the filled buffer */
        memset(&video_buffer, 0, sizeof(video_buffer));
        video_buffer.type = V4L2_BUF_TYPE_VIDEO_CAPTURE; /* video capture device */
        video_buffer.memory = V4L2_MEMORY_MMAP;
        if (ioctl(video_fd, VIDIOC_DQBUF, &video_buffer) < 0)
            continue;
        /* (3) Process the image: YUYV to YUV420P */
        pthread_mutex_lock(&mutex);    /* lock the mutex */
        yuyv_to_yuv420p(image_buffer[video_buffer.index], YUV420P_Buffer, VIDEO_WIDTH, VIDEO_HEIGHT);
        pthread_mutex_unlock(&mutex);  /* unlock the mutex */
        pthread_cond_broadcast(&cond); /* wake up all waiting threads */
        /* (4) Put the buffer back on the queue */
        ioctl(video_fd, VIDIOC_QBUF, &video_buffer);
    }
    return NULL;
}

// Usage example: ./a.out /dev/video0
int main(int argc, char **argv)
{
    if (argc != 2) {
        printf("./app </dev/videoX>\n");
        return 0;
    }
    int err;
    pthread_t thread_id;

    /* Initialize the mutex */
    pthread_mutex_init(&mutex, NULL);
    /* Initialize the condition variable */
    pthread_cond_init(&cond, NULL);
    /* Initialize the camera */
    err = VideoDeviceInit(argv[1]);
    printf("VideoDeviceInit=%d\n", err);
    if (err != 0) return err;
    /* Create the capture thread */
    if (pthread_create(&thread_id, NULL, pthread_read_video_data, NULL) != 0) {
        fprintf(stderr, "Could not create the capture thread\n");
        return 1;
    }
    /* Detach it so its resources are released automatically when it exits */
    pthread_detach(thread_id);

    char filename[100];
    time_t t;
    struct tm *tme;
    // Record one file after another
    while (1) {
        // Get the local time; localtime() applies the system time zone (e.g. UTC+8 in China)
        t = time(NULL);
        tme = localtime(&t);
        snprintf(filename, sizeof(filename), "%d-%d-%d-%d-%d-%d.mp4", tme->tm_year+1900, tme->tm_mon+1,
                 tme->tm_mday, tme->tm_hour, tme->tm_min, tme->tm_sec);
        printf("Video name:%s\n", filename);
        // Encode one file of STREAM_DURATION seconds
        if (video_audio_encode(filename) != 0)
            break;
    }
    return 0;
}

Makefile used to build the program:

app:
	gcc ffmpeg_encode_video_audio.c -I /home/wbyq/work_pc/ffmpeg-4.2.2/_install/include -L /home/wbyq/work_pc/ffmpeg-4.2.2/_install/lib -lavcodec -lavfilter -lavutil -lswresample -lavdevice -lavformat -lpostproc -lswscale -L/home/wbyq/work_pc/x264-snapshot-20181217-2245/_install/lib -lx264 -lm -lpthread

Program running example:

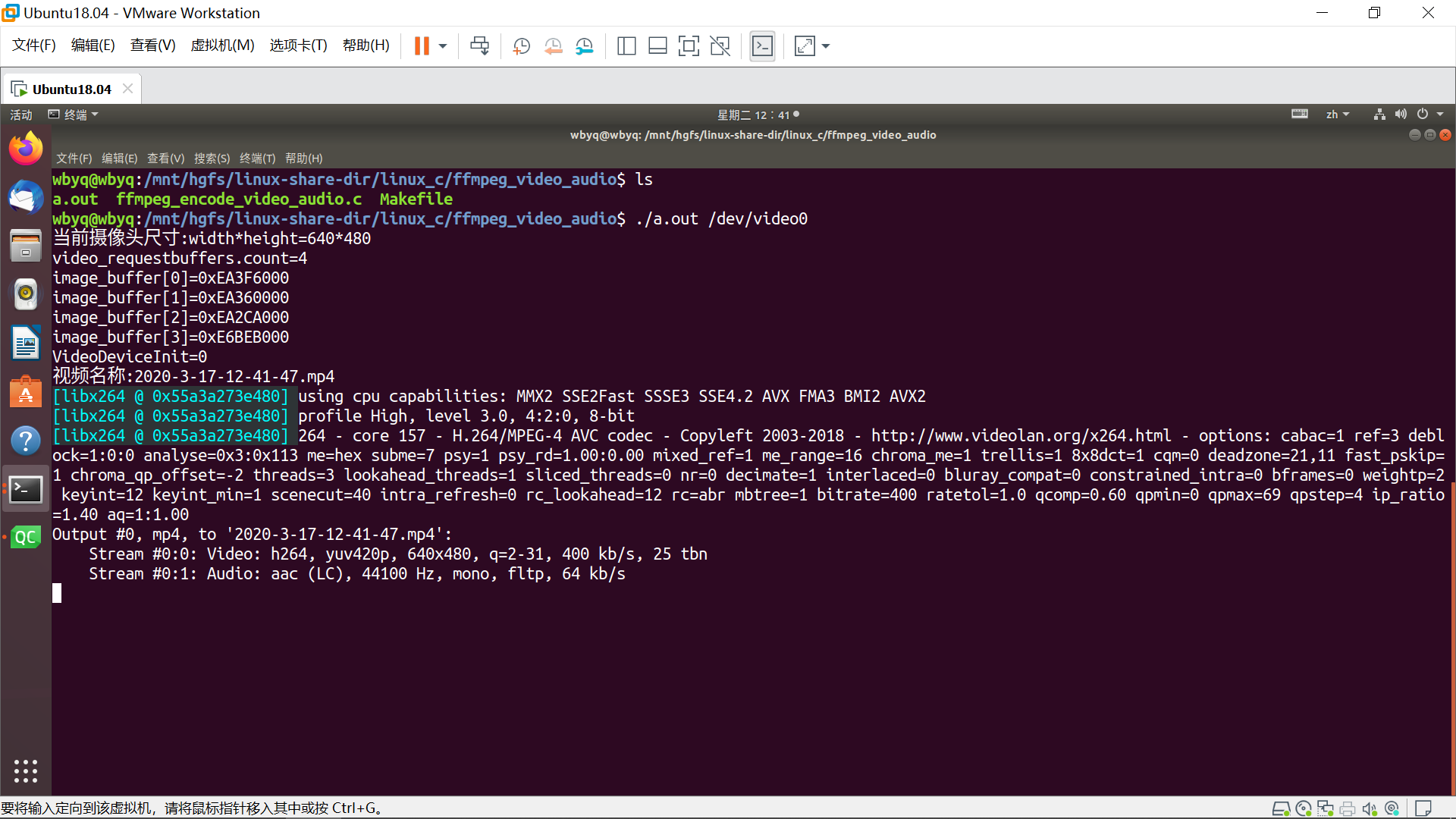

wbyq@wbyq:/mnt/hgfs/linux-share-dir/linux_c/ffmpeg_video_audio$ ./a.out /dev/video0

During recording:

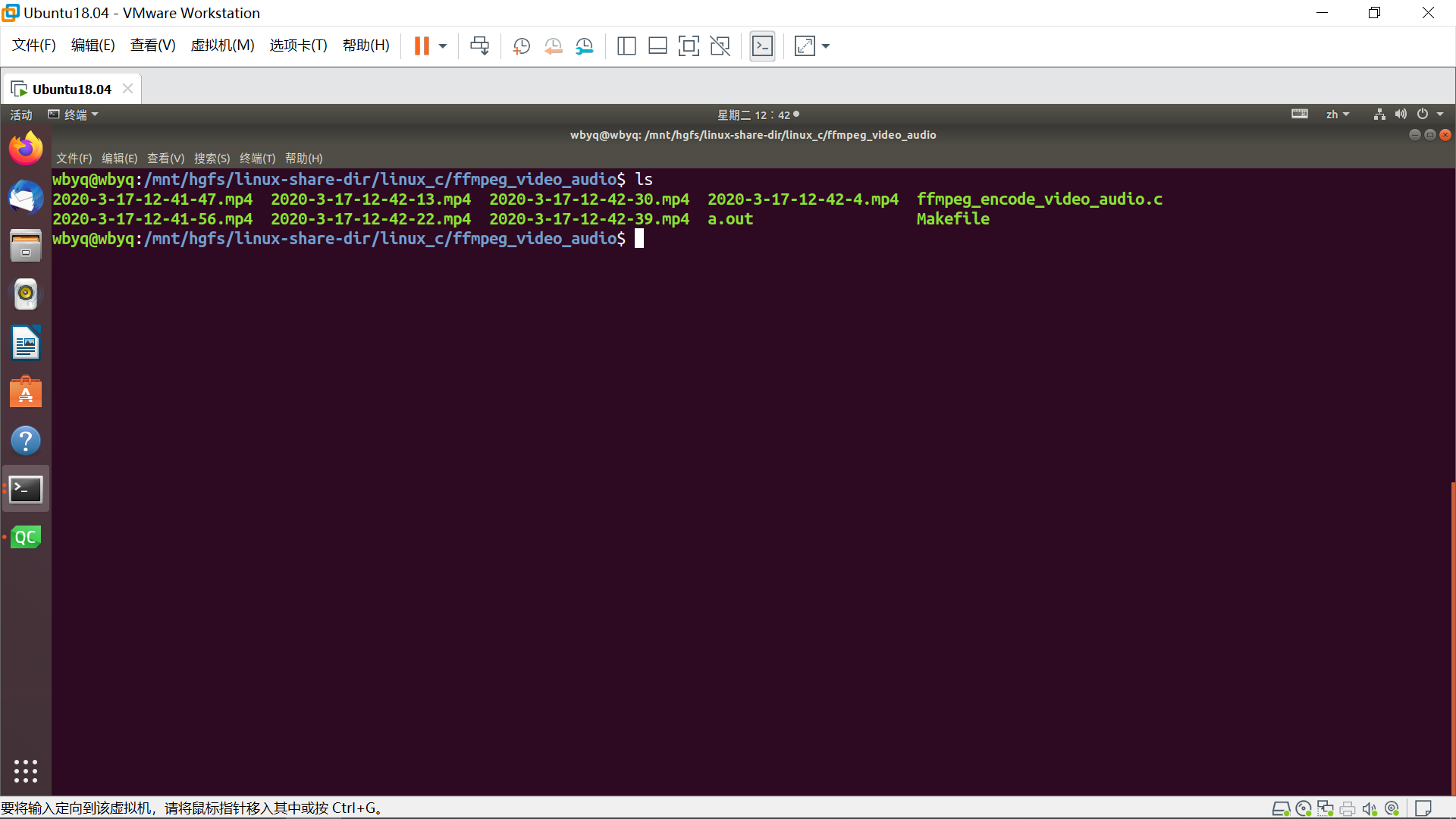

Saved video file:

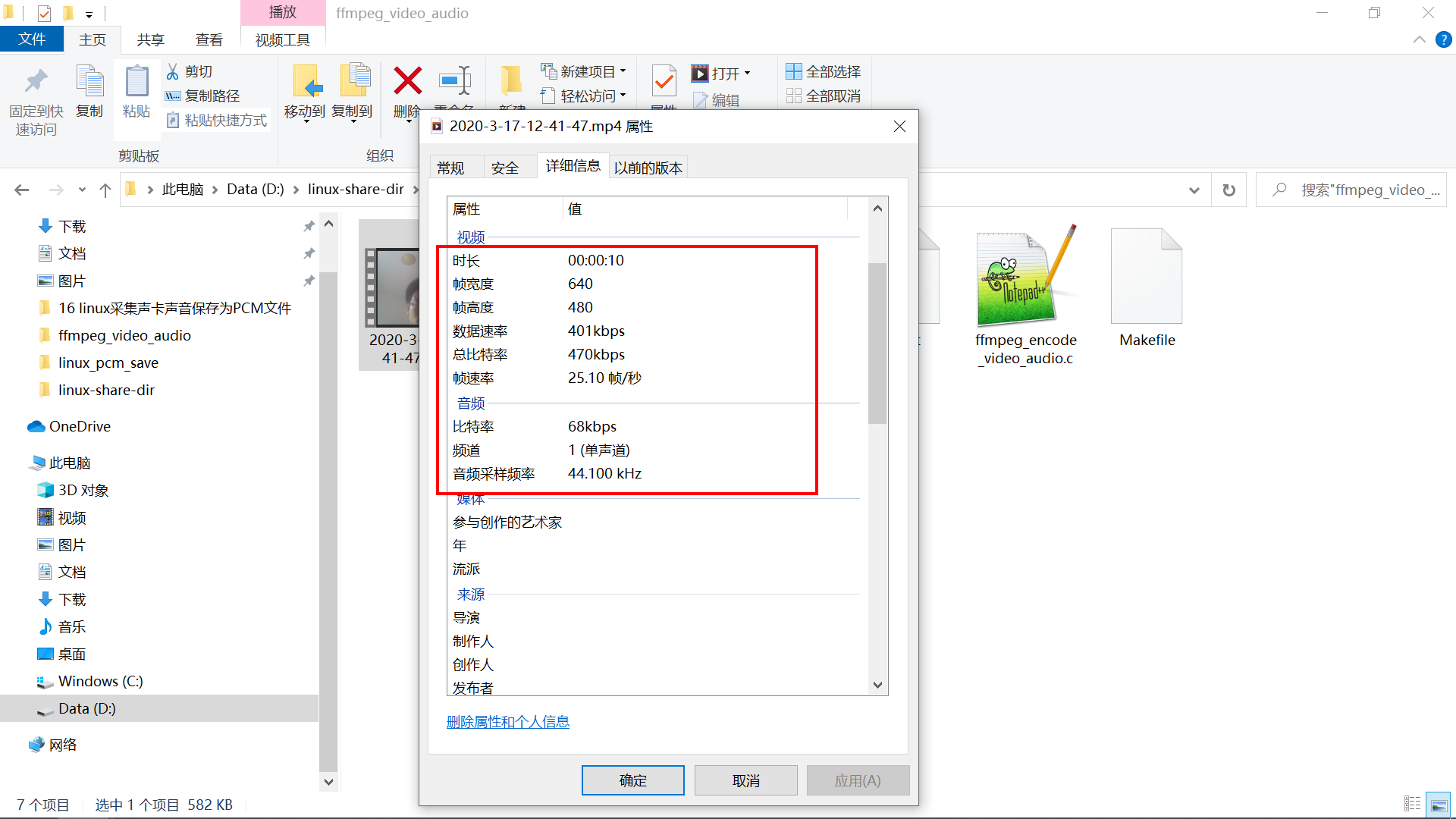

Video information: