Code completion fast-food tutorial (1): witness a miracle in 30 lines of code

Here is a code completion model I wrote in just over 30 lines of code, many of them blank lines and comments. Let's look at the results first.

Completion examples

A common case (Python, Keras)

Input:

```python
y_train = keras.utils.to_categorical(y_train, num_classes)
y_test = keras.
```

After completion:

```python
y_train = keras.utils.to_categorical(y_train, num_classes)
y_test = keras.utils.to_categorical(y_test, num_classes)
```

The model knows to change y_train inside the parentheses to y_test.

Another one that moved me to tears (TypeScript, VS Code)

Input:

```typescript
let disposable_begin_buffer = vscode.commands.registerCommand('extension.littleemacs.beginningOfBuffer',
move.beginningOfBuffer);
let disposable_end_buffer = vscode.commands.
```

The output is as follows:

```typescript
let disposable_begin_buffer = vscode.commands.registerCommand('extension.littleemacs.beginningOfBuffer',
move.beginningOfBuffer);
let disposable_end_buffer = vscode.commands.registerCommand('extension.littleemacs.endendOfBuffer',move.endendOfBuffer);
```

Note what makes this hard: the variable name uses begin, while the command ID and the move function use beginning. The model still swaps beginning for end and keeps OfBuffer unchanged.

Function completion (Java)

Input:

```java
public class Issue {
private Long id;
private String filename;
private Long lineNum;
private String issueString;
public Long getId() {
```

The output is as follows:

```java
public class Issue {
private Long id;
private String filename;
private Long lineNum;
private String issueString;
public Long getId() {
return id;
}
```

This is no big deal for IntelliJ IDEA, but for a text model that knows nothing about the Java language, it is remarkable that it picks return id out of several declared fields.

It understands loops (Java, Android)

This example comes from the Android source code. Input:

```java
final int N = a.getIndexCount();
for (int i = 0; i < N; i++) {
int attr = a.
```

The results are as follows:

```java
final int N = a.getIndexCount();
for (int i = 0; i < N; i++) {
int attr = a.getAttribute(i);
```

Nobody could tell from this context what type a is, yet the model guesses getAttribute from the variable name attr.

What if I want attr to come from a.getIndex instead? No retraining is needed; just show the model an example:

Input:

```java
int attr = a.getIndex(i);
for (int i = 0; i < N; i++) {
int attr = a.
```

The result of completion is as follows:

```java
int attr = a.getIndex(i);
for (int i = 0; i < N; i++) {
int attr = a.getIndex(i);
```
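This works without retraining because the example line is simply part of the text the model continues. Below is a minimal sketch of assembling such a prompt, assuming a hypothetical helper build_prompt (it is not part of transformers); its result is an ordinary string, just like the text variable in the script later in this article.

```python
def build_prompt(examples, prefix):
    """Put example lines in front of the unfinished prefix, one per line.

    build_prompt is a hypothetical helper for illustration: the model never
    sees "examples" as such, only the joined plain-text string.
    """
    return "\n".join(list(examples) + [prefix])


example = "int attr = a.getIndex(i);"
prefix = "for (int i = 0; i < N; i++) {\nint attr = a."
prompt = build_prompt([example], prefix)
print(prompt)
```

The resulting string is exactly the input shown above. Adding more example lines the same way gives the model a stronger hint, at the cost of a longer input.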

It even increments variable names! (Python, Keras)

Input:

```python
tower_1 = Conv2D(64, (1, 1), padding='same', activation='relu')(input_img)
tower_1 = Conv2D(
```

It's time to witness the miracle:

```python
tower_1 = Conv2D(64, (1, 1), padding='same', activation='relu')(input_img)
tower_2 = Conv2D(64
```

In other words, having seen tower_1, the model names the next layer tower_2.

Isn't that handy when writing a convolutional network with many layers?

This incrementing works for both variable names and subscripts.

Let's take another example:

```python
x_train = x_train.reshape(x_train.shape[0], 1, img_rows, img_cols)
```

Whether you complete this line for y_train or for x_test, the model also increments the [0] after shape to [1], which is wrong here, so that part has to be fixed by hand.

Multi-language support (Common Lisp)

To show that this is not specially optimized for Java and Python, here is a Lisp example:

```lisp
(progn (setq a (func1 0)) (setq b
```

The output is as follows:

```lisp
(progn (setq a (func1 0)) (setq b (func2 0)) (setq c (func3 0)) (setq d (func4 0))
```

The model not only increments func1 to func2, func3, and so on, but also continues the setq pattern with the new variables c, d, and so on.

30 lines of code create a miracle

With the help of the latest neural-network natural language processing tools, all of the above takes just over 30 lines of code:

```python
import torch
from transformers import GPT2Tokenizer, GPT2LMHeadModel

# Load the vocabulary (tokenizer)
tokenizer = GPT2Tokenizer.from_pretrained('gpt2')

# Load the pretrained weights once, outside the loop
model = GPT2LMHeadModel.from_pretrained('gpt2')
# Eval mode skips the Dropout that is only used during training
model.eval()

# Use the GPU if there is one (honestly, it is not that much faster)
device = 'cuda' if torch.cuda.is_available() else 'cpu'
model.to(device)

# Text to be completed
text = 'int idx = a.getIndex(i);\nfor (int i = 0; i < N; i++) {\nint attr = a.'
predicted_text = text

# Each step fills in only one token, so completing a line takes many steps.
# 30 is an arbitrary, off-the-top-of-my-head number.
for i in range(30):
    # Feed the previous result back in as this step's input (autoregression)
    indexed_tokens = tokenizer.encode(predicted_text)
    # Convert the token indices to a PyTorch tensor
    tokens_tensor = torch.tensor([indexed_tokens]).to(device)

    # Inference
    with torch.no_grad():
        outputs = model(tokens_tensor)
        predictions = outputs[0]

    # Pick the most likely next subword at the last position
    predicted_index = torch.argmax(predictions[0, -1, :]).item()
    # Decode back into text we can all read
    predicted_text = tokenizer.decode(indexed_tokens + [predicted_index])

# Print the result
print(predicted_text)
```
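To see the loop's structure without downloading GPT-2, here is a minimal sketch of the same greedy autoregressive procedure: feed the text so far back in, score the candidates, take the argmax, append, repeat. A toy bigram counter stands in for the neural network; the bigram model and the names train_bigram and greedy_complete are illustrative assumptions, not part of transformers.

```python
from collections import Counter, defaultdict


def train_bigram(lines):
    """Count which token follows which: a toy stand-in for GPT-2.

    Unlike GPT-2, this model only ever looks at the single previous token.
    """
    follows = defaultdict(Counter)
    for line in lines:
        tokens = line.split()  # whitespace "tokenizer", not GPT-2's subword BPE
        for prev, nxt in zip(tokens, tokens[1:]):
            follows[prev][nxt] += 1
    return follows


def greedy_complete(follows, prompt, steps):
    """Greedy autoregressive decoding: append the top-scoring token `steps` times.

    Each step reads the tokens produced so far (here only the last one) and
    keeps the highest count, playing the role of torch.argmax in the script.
    """
    out = list(prompt)
    for _ in range(steps):
        candidates = follows.get(out[-1])  # .get does not create new entries
        if not candidates:  # nothing ever followed this token: stop early
            break
        # most_common breaks ties by first occurrence in the training text
        out.append(candidates.most_common(1)[0][0])
    return out


corpus = [
    "tokenizer = GPT2Tokenizer . from_pretrained ( 'gpt2' )",
    "model = GPT2LMHeadModel . from_pretrained ( 'gpt2' )",
]
follows = train_bigram(corpus)
print(" ".join(greedy_complete(follows, ["model", "="], 10)))
# model = GPT2Tokenizer . from_pretrained ( 'gpt2' )
```

Note the toy's mistake: after model = it picks GPT2Tokenizer, because it sees only = and breaks the tie by first occurrence. GPT-2 conditions on the whole prefix, which is what lets it pick getAttribute from attr in the examples above.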

Automatic writing

In fact, the GPT-2 model used above was not designed for code completion. At its core, it is a model for generating text automatically.

For example, try completing "To be or not to be". My result was: "To be or not to be, the only thing that matters is that you're a good person."

Completing "I have a dream" gave: "I have a dream that one day I will be able to live in a world where I can be a part of something bigger than myself."

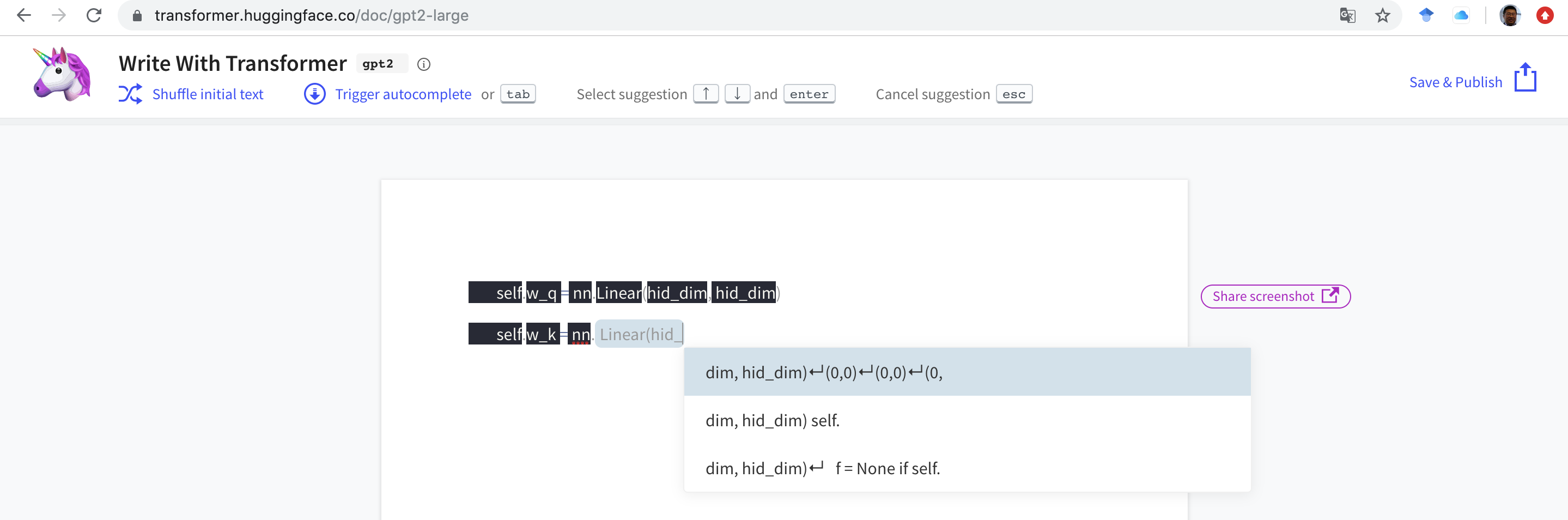

If you don't want to write code, you can directly https://transformer.huggingface.co/doc/gpt2-large Medium to direct test.

As shown in the following figure, you can write code and text:

Setting up the environment

If you want to try out the above code, just install the transformers library.

```shell
pip install transformers
```

The transformers library also needs either PyTorch or TensorFlow as a backend. The code above uses PyTorch, so install it as well:

```shell
pip3 install torch torchvision
```

On Windows the install command is slightly different: you need to specify version numbers, for example:

```shell
pip3 install torch===1.3.0 torchvision===0.4.1 -f https://download.pytorch.org/whl/torch_stable.html
```
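After installing, a short check script can confirm the setup. The sketch below is an illustrative helper (the name available_backends is hypothetical, not part of transformers). It uses the standard library's importlib.util.find_spec to see which of transformers, PyTorch, and TensorFlow can be imported. Only if PyTorch is present does it also report whether a CUDA GPU is visible, since the completion script above targets 'cuda'.

```python
import importlib.util


def available_backends():
    """Report which of the packages discussed above are importable.

    find_spec locates a top-level package without importing it and returns
    None when the package is not installed.
    """
    names = ["transformers", "torch", "tensorflow"]
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    found = available_backends()
    for name, ok in found.items():
        print(f"{name:12s} {'installed' if ok else 'missing'}")
    if not found["transformers"]:
        print("Run: pip install transformers")
    if not (found["torch"] or found["tensorflow"]):
        print("transformers needs PyTorch or TensorFlow; the script uses PyTorch")
    if found["torch"]:
        import torch  # imported only when it is actually installed
        print("CUDA available:", torch.cuda.is_available())
```

Checking with find_spec rather than importing keeps the check quick and avoids an ImportError when a package is missing.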