This article is based on Lesson 3 of the "Golden Series" of Huawei Cloud's Cloud Native King's Road training camp. The lesson is taught by Ma Ma, chief architect of Huawei Cloud container batch computing, and introduces the concepts and technical architecture of Kubernetes within the cloud native technology stack.

01 Introduction to Kubernetes

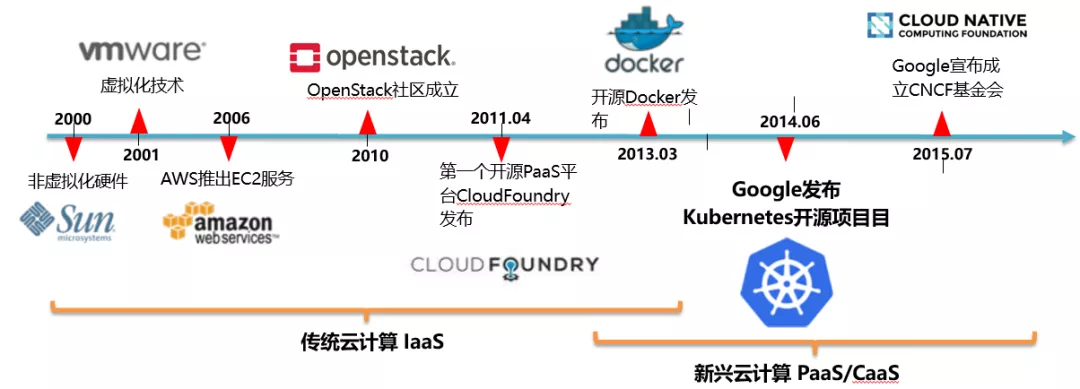

Development of cloud computing

From the user's point of view, resources in the "cloud" can be expanded without limit. They can be obtained at any time, used on demand, scaled up whenever needed, and paid for according to usage. This is often described as consuming IT infrastructure the way we consume utilities such as water and electricity.

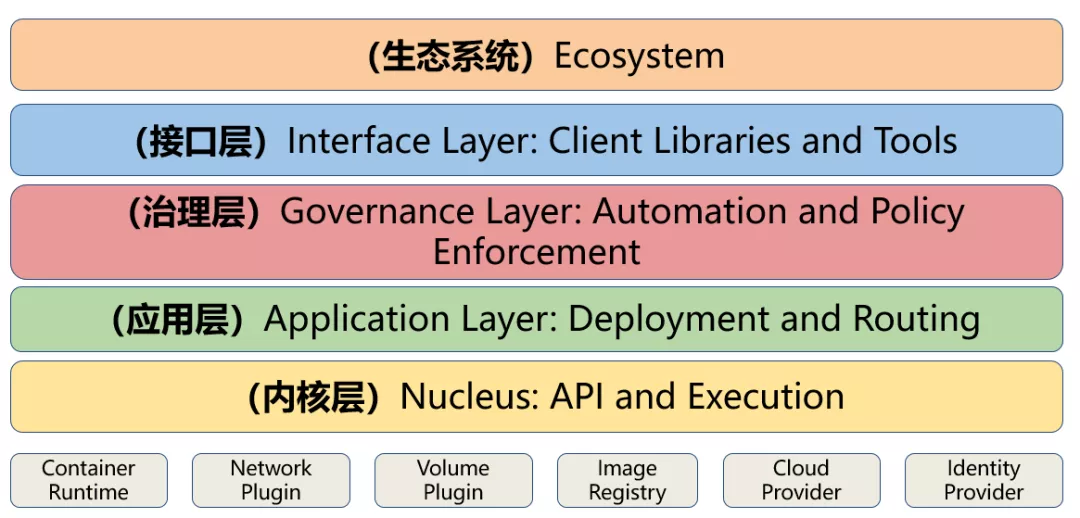

Kubernetes architecture layering

The figure shows the main layers of the Kubernetes ecosystem, as described by the Kubernetes community:

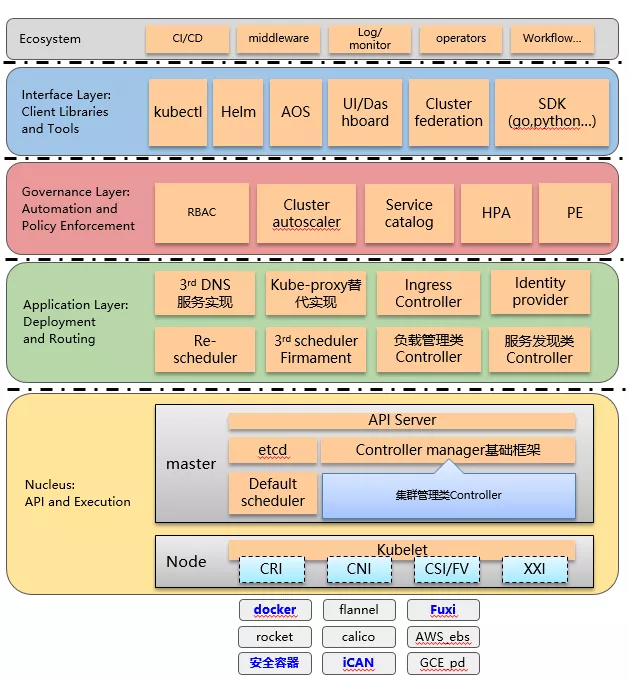

Detailed definition of each layer in K8S community architecture

From top to bottom, the layers in the figure are:

1) Ecosystem layer: outside the scope of K8S

2) Interface layer (tools, SDK libraries, UI, etc.):

- The official K8S project provides libraries, tools, UIs, and other peripheral tooling

- Third parties can provide their own implementations

3) Governance layer: policy enforcement and automated orchestration

- An optional layer for running applications: applications still run if its functions are absent

- Automation APIs: horizontal autoscaling, tenant management, cluster management, dynamic load balancing, etc.

- Policy APIs: rate limiting, resource quotas, Pod reliability policies, network policies, etc.

4) Application layer: deployment (stateless/stateful applications, batch jobs, clustered applications, etc.) and routing (service discovery, DNS resolution, etc.)

- Functions and APIs that every K8S distribution must include; K8S provides default implementations, such as the scheduler

- Controllers and the scheduler can be replaced with custom implementations, but these must pass the conformance tests

- Application management controllers: daemonset/replicaset/replication/statefulset/cronjob/service/endpoint

5) Kernel layer: the core functions of Kubernetes. Externally, it exposes APIs for building higher-level applications. Internally, it provides a pluggable application execution environment

- Implemented in the main K8S codebase; it is the kernel and minimal feature set of K8S, analogous to the Linux kernel

- Provides the default implementations of controllers and the scheduler

- Cluster management controllers: Node/gc/podgc/volume/namespace/resourcequota/serviceaccount

In general:

Kernel layer: provides the minimal set of core features and APIs; it is a required module

Above the kernel layer: various controller plug-ins are built on the kernel-layer APIs, and their implementations are replaceable

Below the kernel layer: adapters for various storage, network, container runtime, and cloud provider implementations

02 Basic concepts of Kubernetes

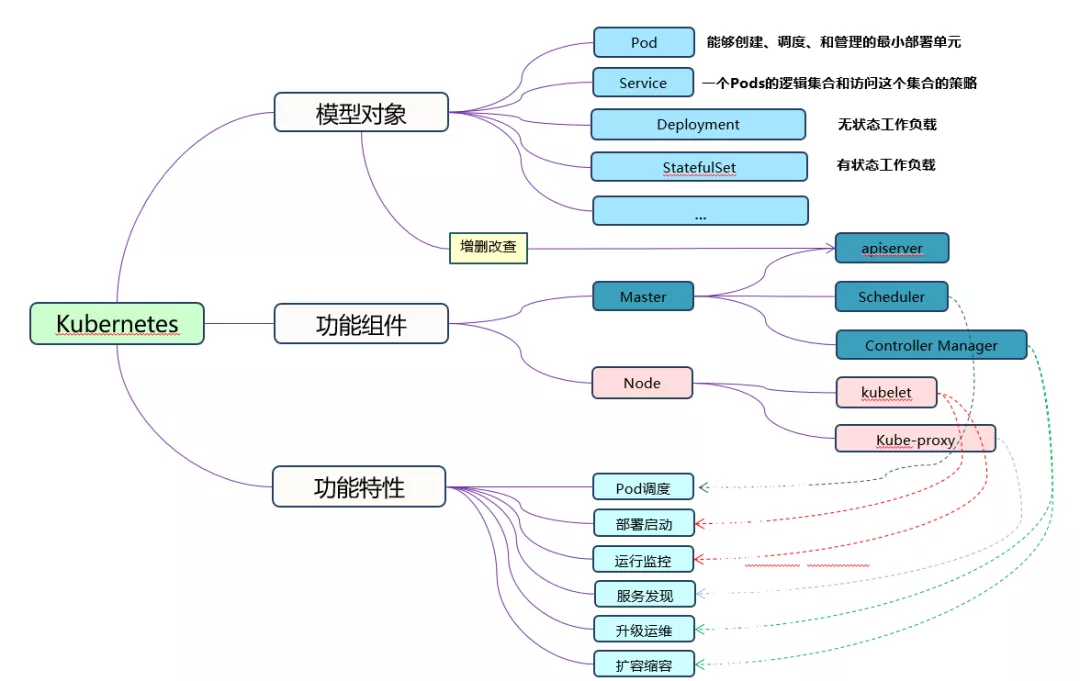

Kubernetes overview

Key Kubernetes concept: Pod

- In Kubernetes, a Pod is the smallest deployable unit that can be created, scheduled, and managed. It is a group of containers rather than a single application container.

- Containers in the same Pod share the same network namespace, IP address, and port space.

- In terms of lifecycle, Pods are ephemeral rather than long-lived. A Pod is scheduled to a node and remains on that node until it is destroyed.

Pod example:

```json
{
  "kind": "Pod",
  "apiVersion": "v1",
  "metadata": {
    "name": "redis-django",
    "labels": {
      "app": "webapp"
    }
  },
  "spec": {
    "containers": [
      {
        "name": "key-value-store",
        "image": "redis"
      },
      {
        "name": "frontend",
        "image": "django"
      }
    ]
  }
}
```

Pod details - Containers

1) Infrastructure container: the base ("pause") container

- Invisible to users, who do not need to be aware of it

- Maintains the network namespace of the entire Pod

2) InitContainers: initialization containers, generally used to wait for dependent services or to register Pod information

- Run before the business containers

- Run one at a time, in order. Each must exit successfully (exit code 0) before the next one starts, and the business containers start only after all of them have succeeded

3) Containers: business containers

- Started in parallel; they keep running after a successful start

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
  labels:
    app: myapp
spec:
  containers:
  - name: myapp-container
    image: busybox
    command: ['sh', '-c', 'echo The app is running! && sleep 3600']
  initContainers:
  - name: init-myservice
    image: busybox
    command: ['sh', '-c', 'until nslookup myservice; do echo waiting for myservice; sleep 2; done;']
  - name: init-mydb
    image: busybox
    command: ['sh', '-c', 'until nslookup mydb; do echo waiting for mydb; sleep 2; done;']
```
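The ordering rules above can be illustrated with a small Python simulation. This is a hypothetical model, not kubelet code. Each container is represented by a `run` callable that returns an exit code, and the `Container` class and the `start_pod` function are names invented for this sketch.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Container:
    """Hypothetical stand-in for a container: `run` returns its exit code."""
    name: str
    run: Callable[[], int]


def start_pod(init_containers: List[Container], containers: List[Container]) -> List[str]:
    """Simulate Pod startup and return the sequence of lifecycle events."""
    events = []
    # Init containers run strictly one after another, in the order listed.
    for c in init_containers:
        code = c.run()
        events.append(f"init {c.name} exited {code}")
        if code != 0:
            # A real kubelet retries the failed init container (unless the
            # Pod's restartPolicy is Never); this sketch simply stops here.
            events.append("business containers not started")
            return events
    # Business containers start only after every init container exited with 0.
    for c in containers:
        events.append(f"started {c.name}")
    return events


# Mirrors the manifest above: both lookups succeed, then the app container starts.
events = start_pod(
    [Container("init-myservice", lambda: 0), Container("init-mydb", lambda: 0)],
    [Container("myapp-container", lambda: 0)],
)
```

If `init-myservice` returned a non-zero code, `init-mydb` would never run. This matches the "one at a time, in order" rule.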

Basic composition of a container

1) Image:

- Image address and pull policy

- Credentials for pulling the image

2) Start command:

- command: overrides the entrypoint (ENTRYPOINT) of the container image

- args: arguments passed to the entrypoint (they override the image's CMD)

3) Computing resources:

- Request: the basis for scheduling decisions

- Limit: the maximum amount of the resource the container may use

```yaml
spec:
  imagePullSecrets:
  - name: default-secret
  containers:
  - image: kube-dns:1.0.0
    imagePullPolicy: IfNotPresent
    command:
    - /bin/sh
    - -c
    - /kube-dns 1>>/var/log/skydns.log 2>&1 --domain=cluster.local. --dns-port=10053 --config-dir=/kube-dns-config --v=2
    resources:
      limits:
        cpu: 100m
        memory: 512Mi
      requests:
        cpu: 100m
        memory: 100Mi
```
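Requests and limits are written as Kubernetes resource quantities such as `100m` (0.1 CPU) and `512Mi`. The sketch below illustrates two rules from the list above: the scheduler's fit check uses requests, not limits, and a request may not exceed its limit (the API server rejects such a spec). It is a simplified illustration. It assumes only the common suffixes (`m`, `k`/`M`/`G`/`T`, `Ki`/`Mi`/`Gi`/`Ti`) and no exponent notation. The helpers `parse_quantity`, `requests_within_limits` and `fits` are hypothetical names.

```python
from decimal import Decimal

# Suffix multipliers for the subset of quantity formats handled here.
_MULTIPLIERS = {
    "Ki": Decimal(2) ** 10, "Mi": Decimal(2) ** 20,
    "Gi": Decimal(2) ** 30, "Ti": Decimal(2) ** 40,
    "m": Decimal("0.001"), "k": Decimal(10) ** 3, "M": Decimal(10) ** 6,
    "G": Decimal(10) ** 9, "T": Decimal(10) ** 12,
}


def parse_quantity(q: str) -> Decimal:
    """Parse quantities such as "100m" (0.1 CPU) or "512Mi" (512 * 2**20 bytes)."""
    # Try two-letter suffixes first so that "Mi" is not mistaken for "M".
    for suffix in sorted(_MULTIPLIERS, key=len, reverse=True):
        if q.endswith(suffix):
            return Decimal(q[: -len(suffix)]) * _MULTIPLIERS[suffix]
    return Decimal(q)


def requests_within_limits(resources: dict) -> bool:
    """Every request that has a matching limit must not exceed it."""
    limits = resources.get("limits", {})
    return all(
        parse_quantity(value) <= parse_quantity(limits[name])
        for name, value in resources.get("requests", {}).items()
        if name in limits
    )


def fits(allocatable: dict, already_requested: dict, pod_requests: dict) -> bool:
    """Scheduler-style check: sums of *requests* (not limits or live usage)
    must stay within the node's allocatable capacity."""
    return all(
        parse_quantity(already_requested.get(name, "0")) + parse_quantity(value)
        <= parse_quantity(allocatable[name])
        for name, value in pod_requests.items()
    )


# The kube-dns resources from the manifest above.
kube_dns = {
    "limits": {"cpu": "100m", "memory": "512Mi"},
    "requests": {"cpu": "100m", "memory": "100Mi"},
}
```

With these values, kube-dns reserves only 100Mi of memory for scheduling, yet it may use up to 512Mi at run time.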

Pod details - external input

A Pod can receive external input through environment variables, configuration files, and secrets.

Environment variables: easy to use, but the container must be restarted for a change to take effect. Values can come from:

- Custom key-value pairs

- A configuration file (ConfigMap)

- A secret (Secret)

Volumes: mounted into the container as files, with controllable file permissions. Unlike environment variables, mounted files are eventually updated without restarting the container (except when mounted with subPath). Sources:

- Configuration file (ConfigMap)

- Secret

```yaml
spec:
  containers:
  - env:
    - name: APP_NAME
      value: test
    - name: USER_NAME
      valueFrom:
        secretKeyRef:
          key: username
          name: secret
    envFrom:
    - configMapRef:
        name: config
    volumeMounts:
    - mountPath: /usr/local/config
      name: cfg
    - mountPath: /usr/local/secret
      name: sct
  volumes:
  - configMap:
      defaultMode: 420   # decimal for octal 0644
      items:
      - key: age
        path: age
      name: config
    name: cfg
  - name: sct
    secret:
      defaultMode: 420   # decimal for octal 0644
      secretName: secret
```
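The following sketch shows how the environment of the container above is assembled. It assumes the precedence rules stated in the Kubernetes Container API reference: on a duplicate key, the later `envFrom` source wins, and explicit `env` entries override `envFrom`. It also relies on Secret `data` values being base64-encoded. The ConfigMap and Secret contents, and the `resolve_env` helper, are hypothetical.

```python
import base64

DEFAULT_MODE = 0o644  # file mode for mounted keys; 420 in the manifest is this value in decimal


def _decode(secret_value: str) -> str:
    """Secret `data` values are stored base64-encoded."""
    return base64.b64decode(secret_value).decode()


def resolve_env(container: dict, configmaps: dict, secrets: dict) -> dict:
    """Compute the environment a container would see (simplified sketch).

    configmaps and secrets map an object name to its data dict.
    """
    env = {}
    # envFrom: import every key; on duplicates the later source wins.
    for source in container.get("envFrom", []):
        if "configMapRef" in source:
            env.update(configmaps[source["configMapRef"]["name"]])
        elif "secretRef" in source:
            data = secrets[source["secretRef"]["name"]]
            env.update({k: _decode(v) for k, v in data.items()})
    # env: explicit entries override anything imported through envFrom.
    for item in container.get("env", []):
        if "value" in item:
            env[item["name"]] = item["value"]
        elif "secretKeyRef" in item.get("valueFrom", {}):
            ref = item["valueFrom"]["secretKeyRef"]
            env[item["name"]] = _decode(secrets[ref["name"]][ref["key"]])
    return env


# The container from the manifest above, with hypothetical ConfigMap/Secret contents.
container = {
    "env": [
        {"name": "APP_NAME", "value": "test"},
        {"name": "USER_NAME",
         "valueFrom": {"secretKeyRef": {"key": "username", "name": "secret"}}},
    ],
    "envFrom": [{"configMapRef": {"name": "config"}}],
}
configmaps = {"config": {"age": "18", "APP_NAME": "from-configmap"}}
secrets = {"secret": {"username": base64.b64encode(b"admin").decode()}}
env = resolve_env(container, configmaps, secrets)
```

`APP_NAME` ends up as `test`, not `from-configmap`, because the explicit `env` entry takes precedence.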

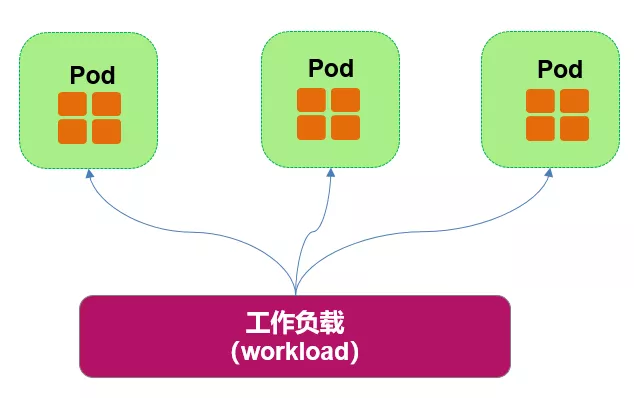

Relationship between Pod and workload

- Pods are associated with workloads through label selectors and ownerReferences

- Application operations on Pods, such as scaling and upgrades, are performed through workloads
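The two association mechanisms can be sketched together. A label selector decides which Pods a workload *could* manage. An `ownerReferences` entry with `controller: true` records which workload actually *controls* a Pod. The sketch assumes the documented selector semantics: `matchLabels` and `matchExpressions` are ANDed, and `NotIn` also matches Pods without the key. It simplifies claiming to "matching Pods that are orphans or already owned by this controller". The function names and the UIDs in the usage are hypothetical.

```python
def matches(selector: dict, labels: dict) -> bool:
    """Evaluate a label selector: every matchLabels pair and every
    matchExpressions requirement must hold (they are ANDed together)."""
    for key, value in selector.get("matchLabels", {}).items():
        if labels.get(key) != value:
            return False
    for req in selector.get("matchExpressions", []):
        key, op, values = req["key"], req["operator"], req.get("values", [])
        if op == "In" and labels.get(key) not in values:
            return False
        if op == "NotIn" and key in labels and labels[key] in values:
            return False
        if op == "Exists" and key not in labels:
            return False
        if op == "DoesNotExist" and key in labels:
            return False
    return True


def controller_uid(pod: dict):
    """UID of the owner marked controller: true, or None for an orphan Pod."""
    for ref in pod["metadata"].get("ownerReferences", []):
        if ref.get("controller"):
            return ref["uid"]
    return None


def claimable_pods(selector: dict, owner_uid: str, pods: list) -> list:
    """Pods a controller may manage: they match its selector and are either
    already controlled by it or orphans it could adopt."""
    return [p for p in pods
            if matches(selector, p["metadata"].get("labels", {}))
            and controller_uid(p) in (None, owner_uid)]


# Selector from the ReplicaSet example in this article.
rs_selector = {"matchLabels": {"tier": "frontend"},
               "matchExpressions": [{"key": "tier", "operator": "In", "values": ["frontend"]}]}
```

A Pod that carries the right labels but is controlled by a different workload is left alone. This prevents two workloads from fighting over the same Pods.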

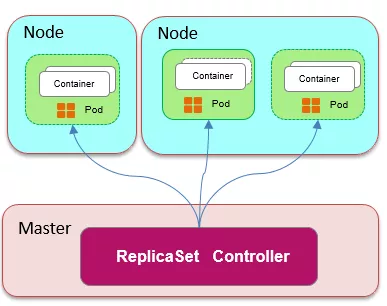

Key workload - ReplicaSet

- ReplicaSet: the replica controller

- Ensures that a specified number of Pod replicas are running. If there are more, the controller deletes some; if there are fewer, it starts new ones.

- ReplicaSets handle scaling the number of Pods out and in.

- Usually used for stateless applications
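The behavior described above is a control loop: compare the desired replica count with the Pods that actually exist, then create or delete the difference. Below is a minimal sketch with Pods reduced to name strings. The `reconcile`/`converge` helpers and the `new-N` naming scheme are hypothetical, and the real controller chooses which Pods to delete more carefully.

```python
def reconcile(desired: int, pods: list) -> tuple:
    """One pass of a ReplicaSet-style control loop.

    Returns (number_of_pods_to_create, pods_to_delete) so that the actual
    count converges to the desired count.
    """
    diff = desired - len(pods)
    if diff > 0:
        return diff, []
    if diff < 0:
        # The real controller ranks Pods (e.g. unscheduled or not-ready first);
        # this sketch simply deletes the first ones in the list.
        return 0, pods[:-diff]
    return 0, []


def converge(desired: int, pods: list) -> list:
    """Run reconcile until no action is needed; returns the final Pod names."""
    created = 0
    while True:
        create, delete = reconcile(desired, pods)
        if create == 0 and not delete:
            return pods
        pods = [p for p in pods if p not in delete]
        for _ in range(create):
            pods.append(f"new-{created}")  # hypothetical naming scheme
            created += 1
```

If a Pod crashes and disappears, the next pass sees one Pod too few and creates a replacement. The same loop therefore handles both scaling and self-healing.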

```yaml
apiVersion: apps/v1   # extensions/v1beta1 no longer serves ReplicaSets since Kubernetes 1.16
kind: ReplicaSet
metadata:
  name: frontend
spec:
  replicas: 3
  selector:
    matchLabels:
      tier: frontend
    matchExpressions:
    - {key: tier, operator: In, values: [frontend]}
  template:
    metadata:
      labels:
        app: guestbook
        tier: frontend
    spec:
      containers:
      - name: php-redis
        image: gcr.io/google_samples/gb-frontend:v3
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
        env:
        - name: GET_HOSTS_FROM
          value: dns
        ports:
        - containerPort: 80
```

Key workload - Deployment

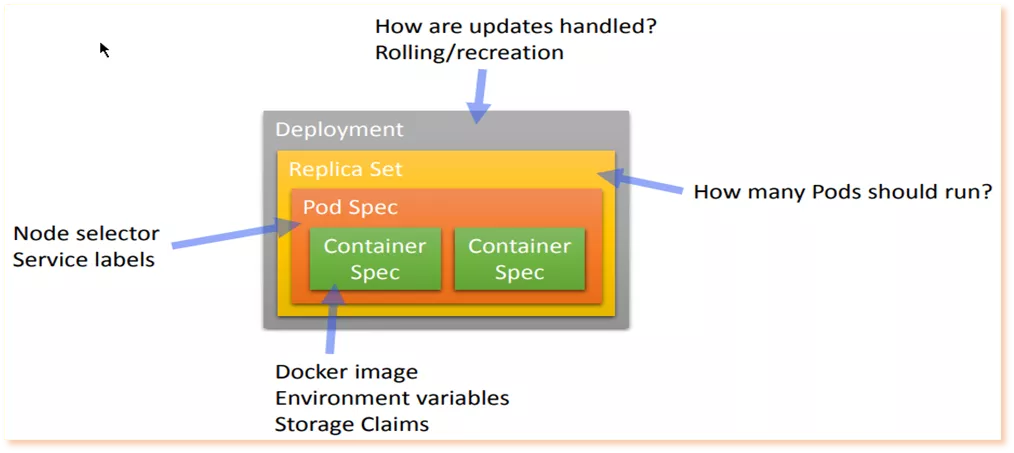

1) A Kubernetes Deployment provides declarative updates for Pods and ReplicaSets (the next-generation Replication Controller). You only need to describe the desired state (the expected running state) in the Deployment object. The Deployment controller then changes the actual state to match it.

2) A Deployment combines rollout, rolling upgrades, replica creation, pausing and resuming rollouts, and rolling back to a previous (successful/stable) revision. To some extent, Deployments make unattended releases possible and greatly reduce the coordination effort and operational risk of the release process.

3) Typical use cases of Deployment:

- Use a Deployment to launch (roll out) Pods or a ReplicaSet

- Check whether a Deployment rollout has succeeded

- Update the Deployment to recreate the corresponding Pods (for example, to use a new image)

- If the current Deployment is unstable, roll back to an earlier stable revision

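```yaml
apiVersion: apps/v1   # extensions/v1beta1 no longer serves Deployments since Kubernetes 1.16
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:           # required in apps/v1; must match the template labels
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
```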
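A rolling update is bounded by two real fields under `spec.strategy.rollingUpdate`. `maxSurge` is the number of extra Pods allowed above the desired count. `maxUnavailable` is the number of Pods that may be missing. Both default to 25%; percentages round up for `maxSurge` and down for `maxUnavailable`. The sketch below steps through the old and new Pod counts for the 3-replica nginx Deployment. It assumes new Pods become ready immediately. `rolling_update` is a hypothetical helper, not the Deployment controller's actual algorithm.

```python
import math


def resolve(value, replicas: int, round_up: bool) -> int:
    """Turn an absolute number or a percentage string such as "25%" into a Pod count."""
    if isinstance(value, str) and value.endswith("%"):
        raw = replicas * int(value[:-1]) / 100
        return math.ceil(raw) if round_up else math.floor(raw)
    return int(value)


def rolling_update(replicas: int, max_surge="25%", max_unavailable="25%"):
    """Return the (old, new) Pod counts after each step of a simulated rollout.

    Assumes every new Pod becomes ready immediately.
    """
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up the new ReplicaSet without exceeding replicas + surge Pods in total.
        new += min(replicas - new, max(0, replicas + surge - (old + new)))
        # Scale down the old ReplicaSet while keeping at least replicas - unavailable Pods.
        old -= min(old, max(0, old + new - (replicas - unavailable)))
        steps.append((old, new))
    return steps


# The nginx Deployment above: 3 replicas with the default 25% / 25% strategy.
steps = rolling_update(3)
```

For 3 replicas, the defaults resolve to a surge of 1 and zero unavailable Pods. The rollout therefore replaces one Pod at a time and never runs fewer than 3 or more than 4 Pods.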

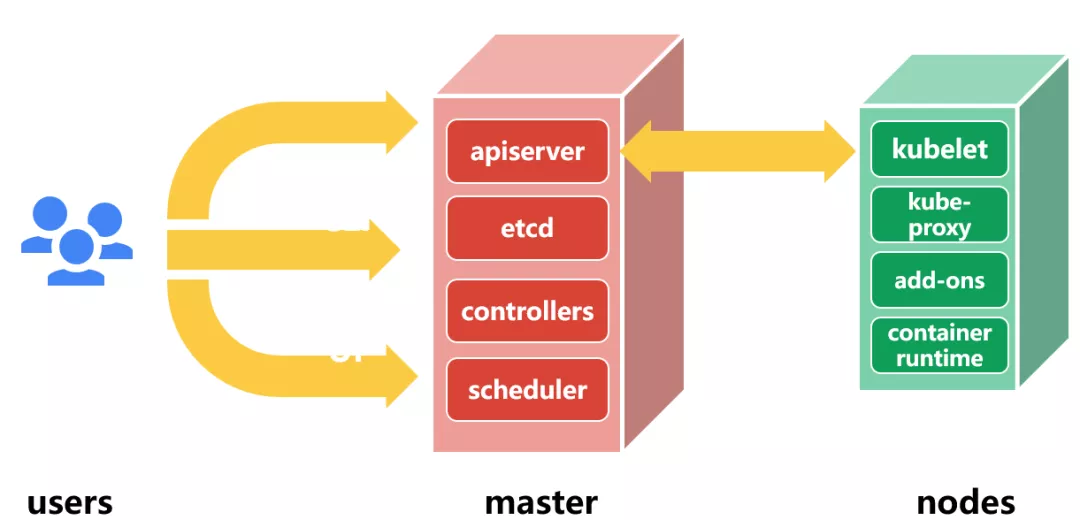

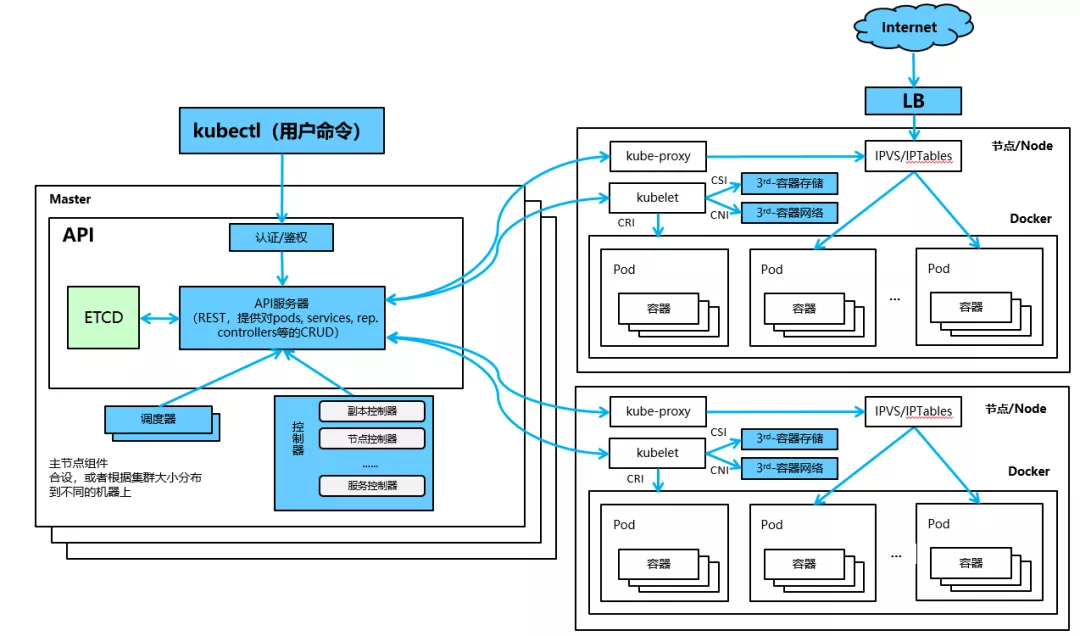

Kubernetes system components

03 Overall architecture of Kubernetes

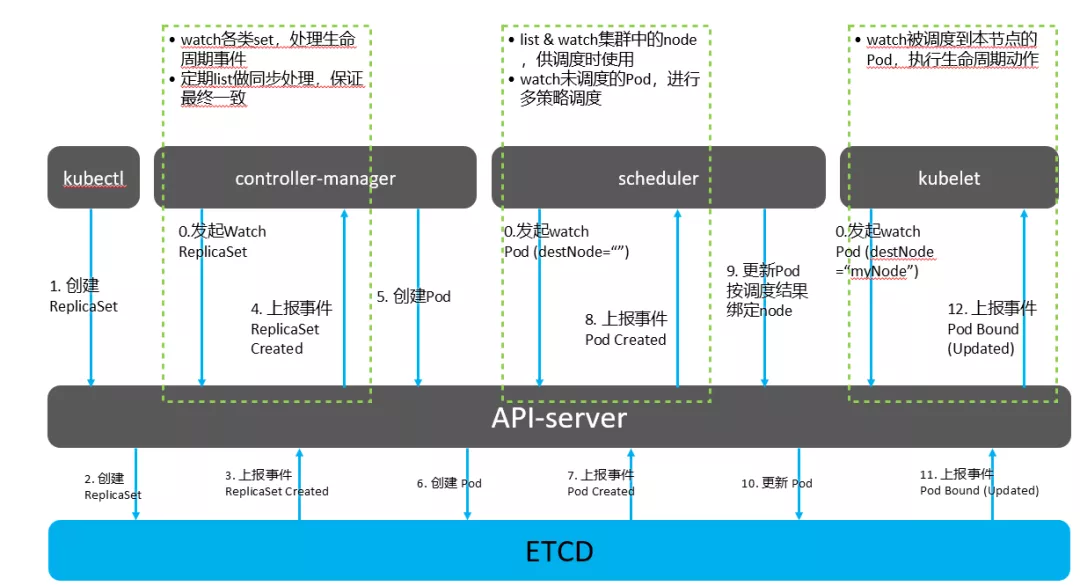

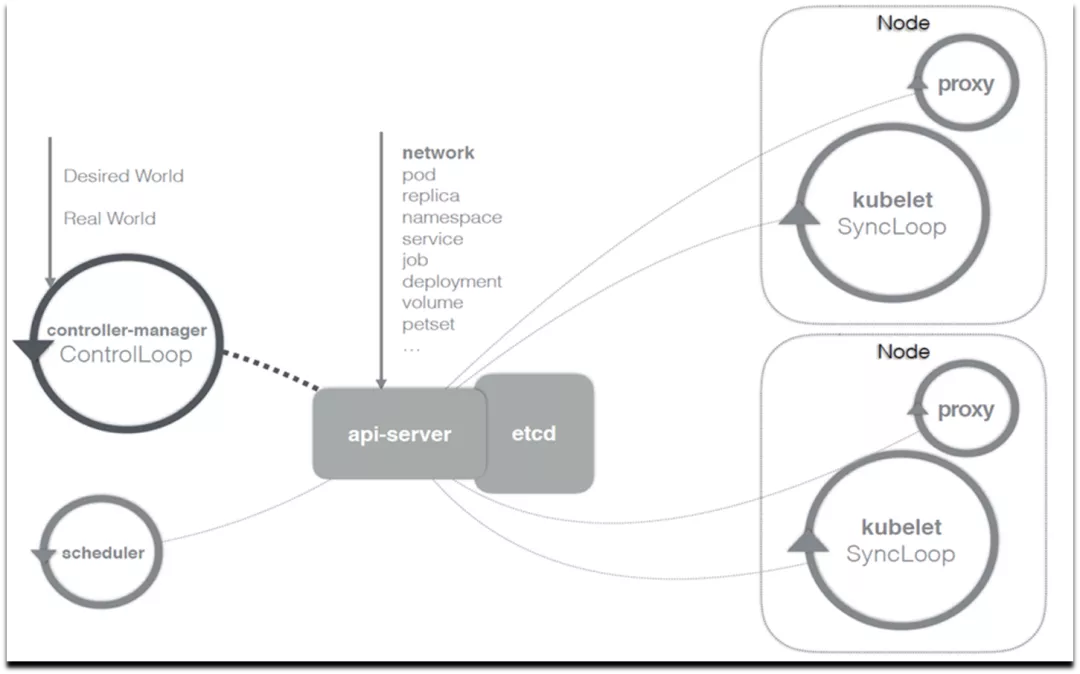

Kubernetes controller architecture based on the List-Watch mechanism

Kubernetes Controllers
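The List-Watch mechanism can be sketched without a real cluster. Everything here is hypothetical. `FakeAPIServer` stands in for kube-apiserver, and using the length of an event log as the version number is a simplification of `resourceVersion`. The `Informer` class loosely mirrors the pattern of client-go informers. It lists once to fill a local cache, then applies only the watch events that follow. It enqueues the names of changed objects for a controller to reconcile.

```python
from collections import deque


class FakeAPIServer:
    """Hypothetical in-memory stand-in for kube-apiserver (not a real client API)."""

    def __init__(self):
        self.objects = {}  # name -> object
        self.events = []   # append-only log; its length plays the role of resourceVersion

    def put(self, name, obj):
        event_type = "MODIFIED" if name in self.objects else "ADDED"
        self.objects[name] = obj
        self.events.append((event_type, name, obj))

    def delete(self, name):
        self.events.append(("DELETED", name, self.objects.pop(name)))

    def list(self):
        """Return a snapshot plus the version it corresponds to."""
        return dict(self.objects), len(self.events)

    def watch(self, since_version):
        """Return every event that happened after since_version."""
        return self.events[since_version:]


class Informer:
    """Keeps a local cache in sync via one List followed by Watch."""

    def __init__(self, server):
        self.server = server
        self.cache = {}
        self.version = 0
        self.queue = deque()  # object names the controller must reconcile

    def start(self):
        # List once: fill the cache and enqueue everything that already exists.
        self.cache, self.version = self.server.list()
        self.queue.extend(self.cache)

    def sync(self):
        # Watch: apply only the changes since the last seen version.
        for event_type, name, obj in self.server.watch(self.version):
            if event_type == "DELETED":
                self.cache.pop(name, None)
            else:
                self.cache[name] = obj
            self.queue.append(name)
            self.version += 1
```

A controller built on this would pop names from `queue`, read the current object from `cache`, and reconcile it. It would not re-list everything from the API server on every change. That is the main reason for the List-Watch design.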

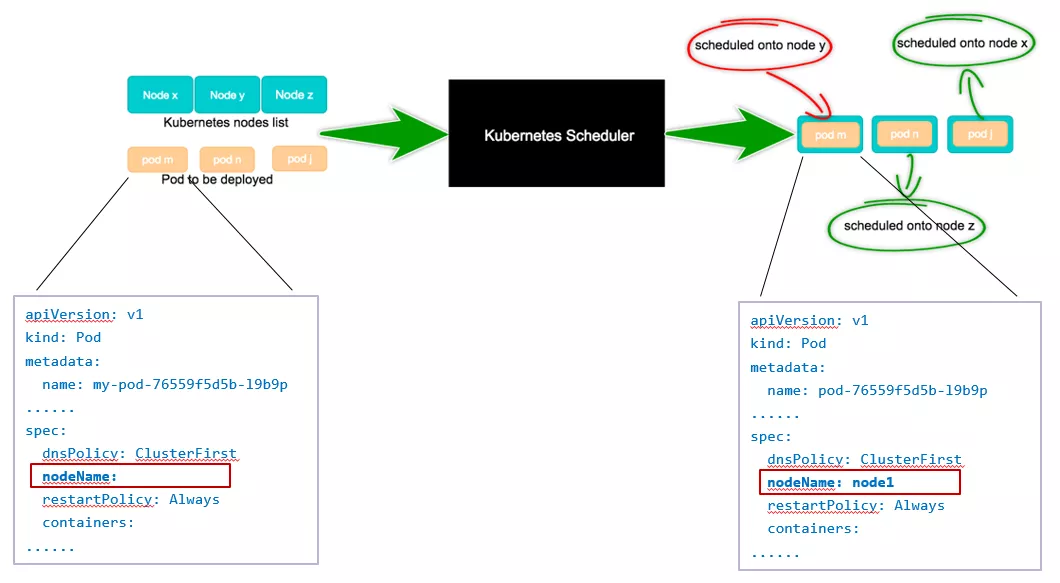

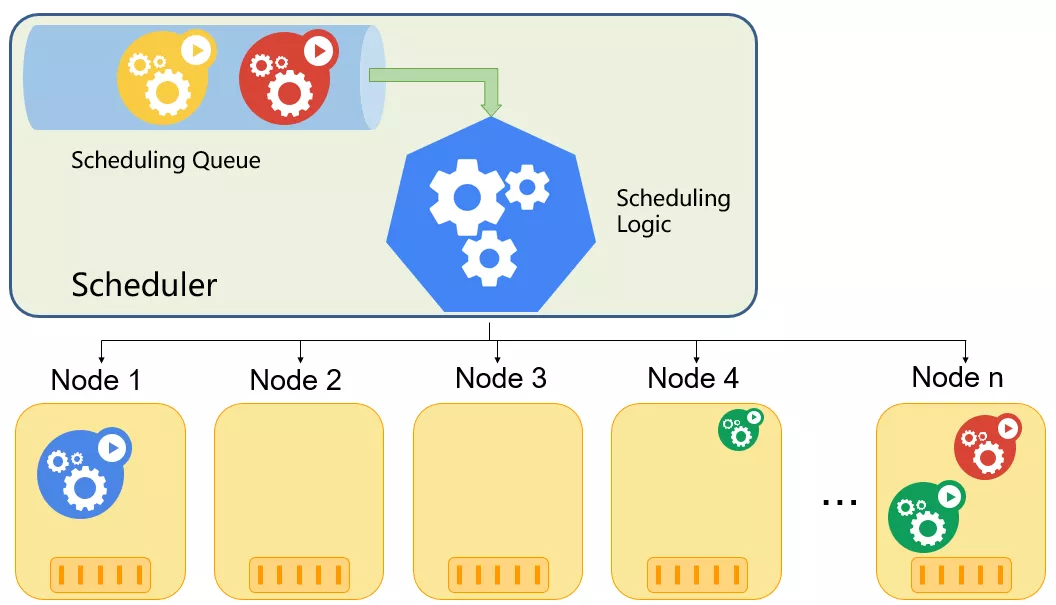

Scheduler: finds a suitable node for each Pod

Kubernetes' default scheduler:

- Queue-based scheduler

- Schedules one Pod at a time

- Globally optimal at the moment of scheduling
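The three properties above can be illustrated with a toy scheduler. It is loosely modeled on the filter-then-score approach of kube-scheduler and makes several assumptions. Pods wait in a priority queue, are taken one at a time, and are placed on the best-scoring feasible node given the cluster state at that moment. The score is a simplified "least allocated" heuristic, not the exact math of any real scheduler plugin. `Node`, `score` and `schedule_all` are hypothetical names.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    cpu: float             # allocatable CPU cores
    mem: float             # allocatable memory in MiB
    used_cpu: float = 0.0  # sum of requests of Pods already placed here
    used_mem: float = 0.0


def score(node: Node, cpu: float, mem: float) -> float:
    """Least-allocated style score: average fraction of capacity left after placement."""
    free_cpu = (node.cpu - node.used_cpu - cpu) / node.cpu
    free_mem = (node.mem - node.used_mem - mem) / node.mem
    return (free_cpu + free_mem) / 2


def schedule_all(pods, nodes):
    """pods: list of (name, priority, cpu_request, mem_request) tuples.

    Returns {pod_name: node_name, or None if no node fits}.
    """
    # Priority queue: higher priority first, then arrival order.
    queue = [(-prio, seq, name, cpu, mem) for seq, (name, prio, cpu, mem) in enumerate(pods)]
    heapq.heapify(queue)
    placement = {}
    while queue:
        _, _, name, cpu, mem = heapq.heappop(queue)  # one Pod per scheduling cycle
        # Filter: nodes whose remaining capacity covers the Pod's requests.
        feasible = [n for n in nodes
                    if n.cpu - n.used_cpu >= cpu and n.mem - n.used_mem >= mem]
        if not feasible:
            placement[name] = None  # the real scheduler would retry it later
            continue
        # Score every feasible node and bind the Pod to the best one.
        best = max(feasible, key=lambda n: score(n, cpu, mem))
        best.used_cpu += cpu
        best.used_mem += mem
        placement[name] = best.name
    return placement
```

"Globally optimal" here only means the best node for this Pod given the current state. Because Pods are placed one at a time, a later Pod cannot cause an earlier placement to be revisited.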

04 Demo introduction

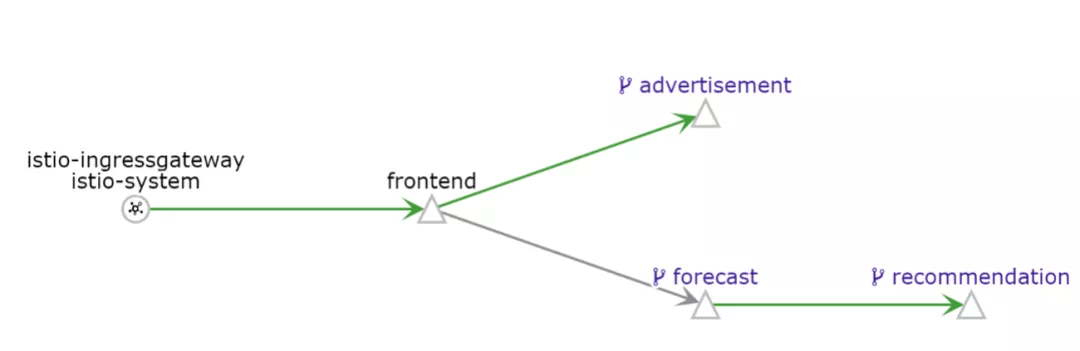

Weather Forecast is a sample application for querying a city's weather. It consists of four microservices: frontend, advertisement, forecast, and recommendation.

- frontend: the front-end service calls the advertisement and forecast services to render the application page and is developed with React.js. It has two versions: v1 has green interface buttons, and v2 has blue interface buttons.

- advertisement: the advertising service returns static advertising images and is developed in Go (Golang).

- forecast: the weather forecast service returns the weather data for the requested city and is developed with Node.js. It has two versions. v1 returns the weather information directly. v2 also requests recommendation information from the recommendation service and returns it together with the weather information.

- recommendation: the recommendation service is developed in Java and recommends clothing, sports, and other information to users based on the weather.