Versions:

CentOS 7.6

JDK 1.8

Scala 2.11

Python 2.7

Git 1.8.3.1

Apache Maven 3.6.3

CDH 6.3.2

Apache Flink 1.12.0

All of the above software must be installed in advance!
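Before starting, it can help to confirm that the command-line tools are on the PATH. The following is a minimal sketch, not part of the original procedure; the function name `check_tools` is hypothetical, and it only checks that each command exists, not its version.

```shell
#!/bin/sh
# Report which of the given commands are missing from PATH.
# Returns 0 when all are present, 1 otherwise.
check_tools() {
    missing=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}

# Command names are assumptions about how the tools are installed.
check_tools java scala python git mvn || echo "Install the missing tools before continuing."
```

Version checks (for example `java -version` or `mvn -v`) still have to be done by hand against the list above.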

1, Compile Flink

1. Download the Flink source code

    git clone https://github.com/apache/flink.git
    cd flink
    git checkout release-1.12.0

2. Add Maven mirrors

Add the following mirrors inside the mirrors tag of Maven's settings.xml file

<mirrors>

<mirror>

<id>alimaven</id>

<mirrorOf>central</mirrorOf>

<name>aliyun maven</name>

<url>http://maven.aliyun.com/nexus/content/repositories/central/</url>

</mirror>

<mirror>

<id>alimaven-public</id>

<name>aliyun maven</name>

<url>http://maven.aliyun.com/nexus/content/groups/public/</url>

<mirrorOf>central</mirrorOf>

</mirror>

<mirror>

<id>central</id>

<name>Maven Repository Switchboard</name>

<url>http://repo1.maven.org/maven2/</url>

<mirrorOf>central</mirrorOf>

</mirror>

<mirror>

<id>repo2</id>

<mirrorOf>central</mirrorOf>

<name>Human Readable Name for this Mirror.</name>

<url>http://repo2.maven.org/maven2/</url>

</mirror>

<mirror>

<id>ibiblio</id>

<mirrorOf>central</mirrorOf>

<name>Human Readable Name for this Mirror.</name>

<url>http://mirrors.ibiblio.org/pub/mirrors/maven2/</url>

</mirror>

<mirror>

<id>jboss-public-repository-group</id>

<mirrorOf>central</mirrorOf>

<name>JBoss Public Repository Group</name>

<url>http://repository.jboss.org/nexus/content/groups/public</url>

</mirror>

<mirror>

<id>google-maven-central</id>

<name>Google Maven Central</name>

<url>https://maven-central.storage.googleapis.com</url>

<mirrorOf>central</mirrorOf>

</mirror>

<mirror>

<id>maven.net.cn</id>

<name>one of the central mirrors in China</name>

<url>http://maven.net.cn/content/groups/public/</url>

<mirrorOf>central</mirrorOf>

</mirror>

</mirrors>
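Maven expects each mirror id in settings.xml to be unique, so after pasting a long mirror list it is worth scanning for repeated ids. The sketch below is a rough text-based check, not an XML parser: the function name `duplicate_ids` is hypothetical, it looks at every `<id>` element in the file (not only those under `<mirrors>`), and the `~/.m2/settings.xml` location is an assumption about where your settings file lives.

```shell
#!/bin/sh
# Print every <id> value that appears more than once in the given file.
duplicate_ids() {
    grep -o '<id>[^<]*</id>' "$1" | sed 's/<[^>]*>//g' | sort | uniq -d
}

# Assumed location of the user-level settings file; adjust to your setup.
settings="$HOME/.m2/settings.xml"
if [ -f "$settings" ]; then
    duplicate_ids "$settings"
fi
```

An empty result means no id is repeated.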

3. Run the build command

    # Enter the root directory of the Flink source code
    cd /opt/os_ws/flink
    # Run the build
    mvn clean install -DskipTests -Dfast -Drat.skip=true -Dhadoop.version=3.0.0-cdh6.3.2 -Pvendor-repos -Dinclude-hadoop -Dscala-2.11 -T2C

Note: adjust the command parameters to match your versions. For example, with CDH 6.2.0 and Scala 2.12 the command would be: mvn clean install -DskipTests -Dfast -Drat.skip=true -Dhadoop.version=3.0.0-cdh6.2.0 -Pvendor-repos -Dinclude-hadoop -Dscala-2.12 -T2C
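Since only the CDH version and the Scala version change between the two commands, the command line can be assembled from those two values. This is a small sketch with a hypothetical helper name, `flink_build_cmd`; it only prints the command, and it assumes the Hadoop base version stays at 3.0.0 as in both examples here. Note that the Hadoop property is spelled `hadoop.version`.

```shell
#!/bin/sh
# Print the Maven build command for a given CDH version and Scala version.
# Usage: flink_build_cmd <cdh-version> <scala-version>
flink_build_cmd() {
    cdh_version=$1
    scala_version=$2
    echo "mvn clean install -DskipTests -Dfast -Drat.skip=true" \
         "-Dhadoop.version=3.0.0-cdh${cdh_version}" \
         "-Pvendor-repos -Dinclude-hadoop -Dscala-${scala_version} -T2C"
}

# Prints the command for CDH 6.2.0 with Scala 2.12.
flink_build_cmd 6.2.0 2.12
```

Review the printed command before running it from the root of the Flink source tree.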

The build takes about 15 minutes to complete

The build result is the flink-1.12.0 folder in the flink/flink-dist/target/flink-1.12.0-bin directory. Next, package flink-1.12.0 into a tar package

    # Enter the build output directory
    cd /opt/os_ws/flink/flink-dist/target/flink-1.12.0-bin
    # Create the tar package
    tar -zcf flink-1.12.0-bin-scala_2.11.tgz flink-1.12.0

This gives you a Flink installation package built for CDH 6.3.2 and Scala 2.11
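A quick way to sanity-check the package is to list its top-level entries; with the tar command used in this guide there should be exactly one, `flink-1.12.0`. The helper name `tarball_top_dirs` below is hypothetical, and this is only a sketch of such a check.

```shell
#!/bin/sh
# Print the distinct top-level entries of a gzip-compressed tarball.
tarball_top_dirs() {
    tar -tzf "$1" | cut -d/ -f1 | sort -u
}

# Example (path taken from the packaging step in this guide):
# tarball_top_dirs /opt/os_ws/flink/flink-dist/target/flink-1.12.0-bin/flink-1.12.0-bin-scala_2.11.tgz
```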

2, Compile parcel

The flink-parcel tool is used to build the parcel here

1. Download flink-parcel

    cd /opt/os_ws/
    # Clone the source code
    git clone https://github.com/pkeropen/flink-parcel.git
    cd flink-parcel

2. Modify parameters

    vim flink-parcel.properties
    # Flink download address
    FLINK_URL=https://archive.apache.org/dist/flink/flink-1.12.0/flink-1.12.0-bin-scala_2.11.tgz
    # Flink version number
    FLINK_VERSION=1.12.0
    # Extended version number
    EXTENS_VERSION=BIN-SCALA_2.11
    # Operating system version, taking CentOS 7 as an example
    OS_VERSION=7
    # CDH minor version range
    CDH_MIN_FULL=5.15
    CDH_MAX_FULL=6.3.2
    # CDH major version range
    CDH_MIN=5
    CDH_MAX=6
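The build output folder used later in this guide, FLINK-1.12.0-BIN-SCALA_2.11_build, appears to combine FLINK_VERSION and EXTENS_VERSION. The sketch below derives that name from the properties file; the pattern is inferred from the folder name in this guide rather than taken from the flink-parcel scripts, and the helper names `prop` and `parcel_build_dir` are hypothetical.

```shell
#!/bin/sh
# Read a KEY=value entry from a properties file (the last match wins).
# Usage: prop <file> <key>
prop() {
    sed -n "s/^$2=//p" "$1" | tail -n 1
}

# Print the build folder name expected for the given properties file.
# The naming pattern is an inference, not a documented contract.
parcel_build_dir() {
    echo "FLINK-$(prop "$1" FLINK_VERSION)-$(prop "$1" EXTENS_VERSION)_build"
}

# Example:
# parcel_build_dir /opt/os_ws/flink-parcel/flink-parcel.properties
```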

3. Copy the installation package

Copy the Flink tar package that you built and packaged earlier to the root directory of the flink-parcel project. When the parcel is built, if no Flink package is present in the root directory, the tar package is downloaded from the address in the configuration file into the project root. If the package already exists there, the download is skipped and the existing tar package is used. Note: you must use the package you compiled yourself here, not the one downloaded from the link!

    # Copy the installation package; adjust the paths to your project directory
    cp /opt/os_ws/flink/flink-dist/target/flink-1.12.0-bin/flink-1.12.0-bin-scala_2.11.tgz /opt/os_ws/flink-parcel
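Because a missing tar package silently falls back to the download, it is easy to build the parcel from the wrong package. The following sketch mirrors the "use the local file if present, otherwise download" decision described above so it can be checked before the build. The function name `tarball_source` is hypothetical, and this is an illustration of the described behavior, not the actual logic of the flink-parcel scripts.

```shell
#!/bin/sh
# Report which package the parcel build would use for a project directory:
# "local" if the tarball is already there, "download" otherwise.
# Usage: tarball_source <project-dir> <tarball-name>
tarball_source() {
    if [ -f "$1/$2" ]; then
        echo "local"
    else
        echo "download"
    fi
}

# Paths are the ones used in this guide; adjust them to your project.
if [ "$(tarball_source /opt/os_ws/flink-parcel flink-1.12.0-bin-scala_2.11.tgz)" = "download" ]; then
    echo "Self-compiled package not found; copy it before building the parcel."
fi
```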

4. Build the parcel

    # Grant execute permission
    chmod +x ./build.sh
    # Run the build script
    ./build.sh parcel

After the build, the FLINK-1.12.0-BIN-SCALA_2.11_build folder is generated in the root directory of the flink-parcel project

5. Build the CSD

    # Build the standalone version
    ./build.sh csd_standalone
    # Build the Flink on YARN version
    ./build.sh csd_on_yarn

After the build, two jar packages are generated in the root directory of the flink-parcel project: FLINK-1.12.0.jar and FLINK_ON_YARN-1.12.0.jar

6. Upload the files

Copy the three files in the FLINK-1.12.0-BIN-SCALA_2.11_build folder generated by the parcel build to the /opt/cloudera/parcel-repo directory on the node where the CDH server runs. Copy FLINK_ON_YARN-1.12.0.jar generated by the CSD build to the /opt/cloudera/csd directory on the same node (Flink on YARN is chosen here for its resource isolation)

    # Copy the parcel files. They were built on the primary node here; if you built elsewhere, use scp instead
    cp /opt/os_ws/flink-parcel/FLINK-1.12.0-BIN-SCALA_2.11_build/* /opt/cloudera/parcel-repo
    # Copy the CSD. It was built on the primary node here; if you built elsewhere, use scp instead
    cp /opt/os_ws/flink-parcel/FLINK_ON_YARN-1.12.0.jar /opt/cloudera/csd
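Since the build folder is expected to hold three files, a quick count before copying can catch an incomplete parcel build. This is a minimal sketch with a hypothetical helper name, `count_regular_files`; it counts regular files directly inside a directory and says nothing about whether their contents are valid.

```shell
#!/bin/sh
# Print the number of regular files directly inside a directory.
count_regular_files() {
    find "$1" -maxdepth 1 -type f | wc -l | tr -d ' '
}

# Path from this guide; adjust it to your project.
build_dir=/opt/os_ws/flink-parcel/FLINK-1.12.0-BIN-SCALA_2.11_build
if [ -d "$build_dir" ] && [ "$(count_regular_files "$build_dir")" != "3" ]; then
    echo "Expected three files in $build_dir; check the parcel build output."
fi
```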

Restart the CDH server and agents

    # Restart the server (run on the server node only)
    systemctl stop cloudera-scm-server
    systemctl start cloudera-scm-server
    # Restart the agent (run on every agent node)
    systemctl stop cloudera-scm-agent
    systemctl start cloudera-scm-agent

3, CDH integration

Operation steps

1. Log in to CDH

Open the CDH login interface

Enter the user name and password and click login

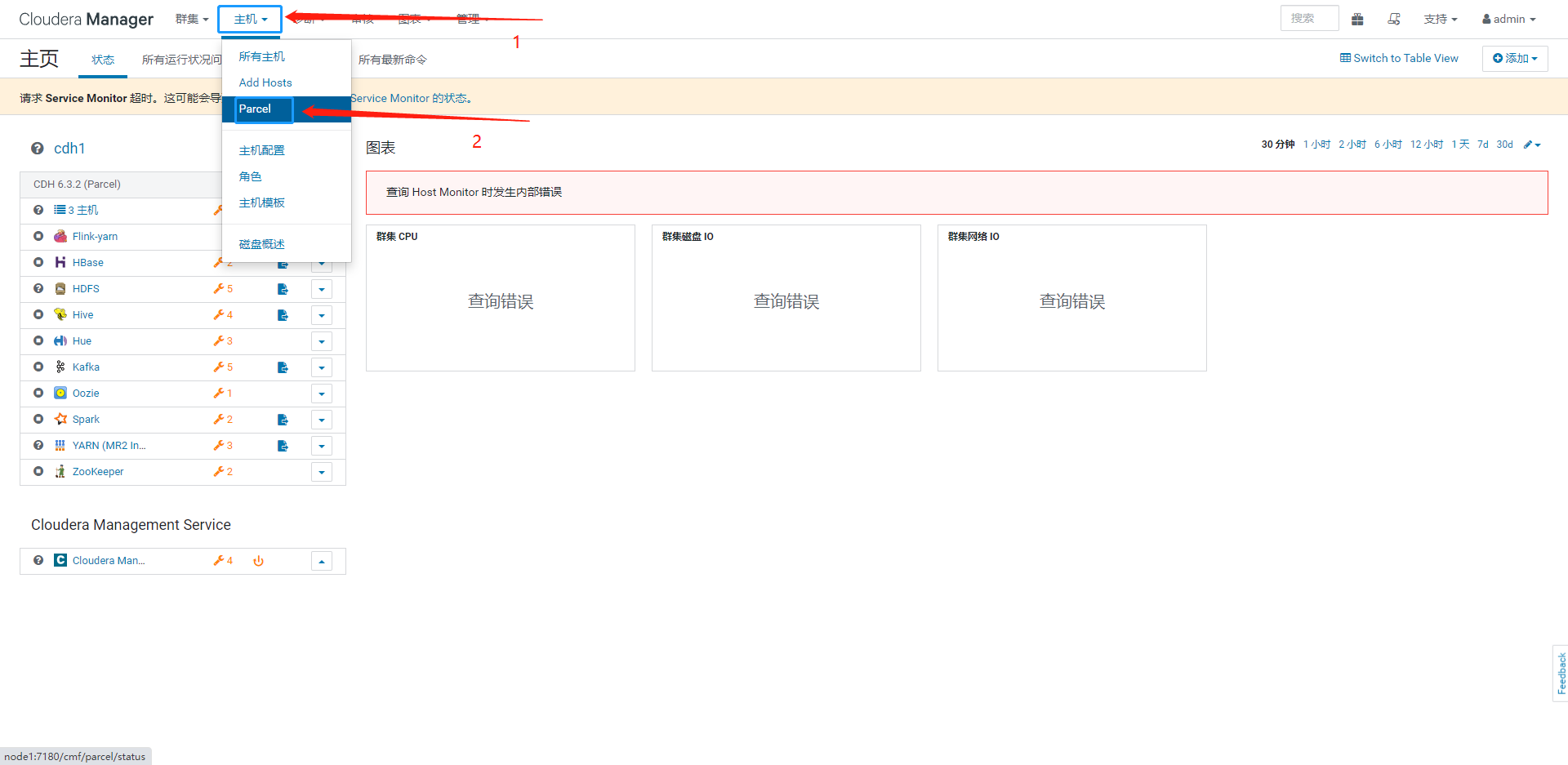

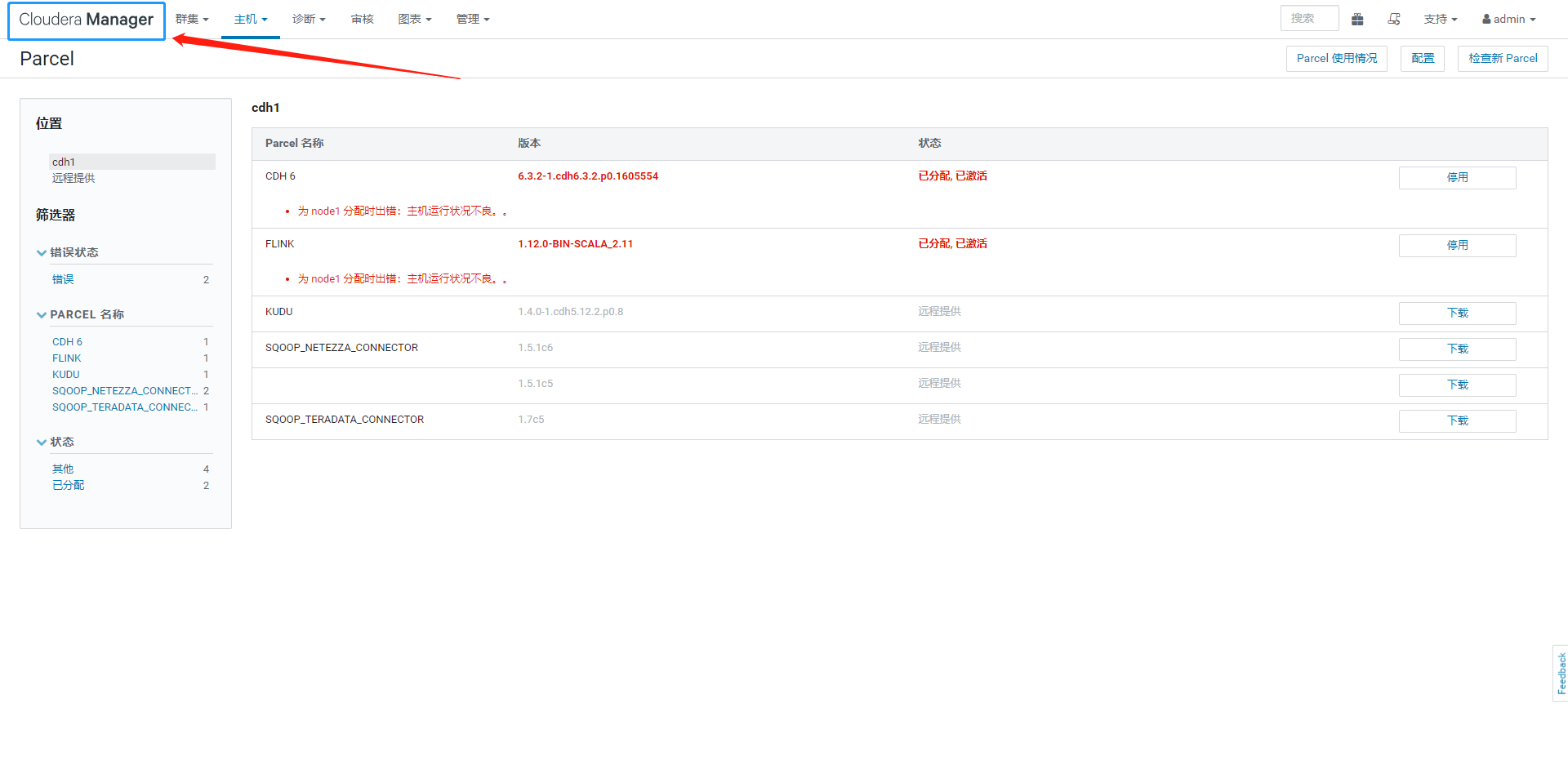

2. Enter the Parcel operation interface

Click Hosts, then Parcels

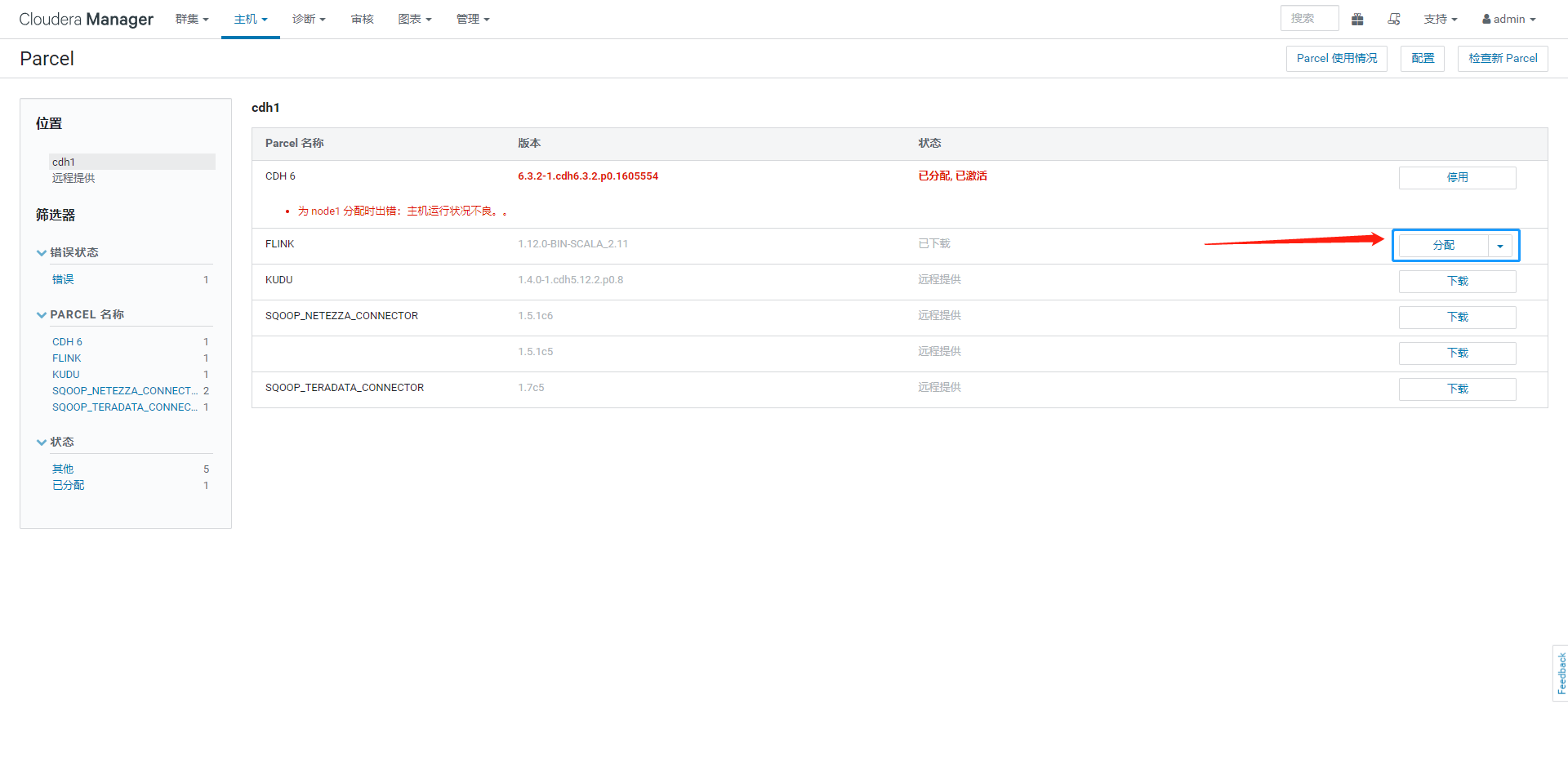

3. Distribute the parcel

Click Distribute

Wait for the distribution to finish

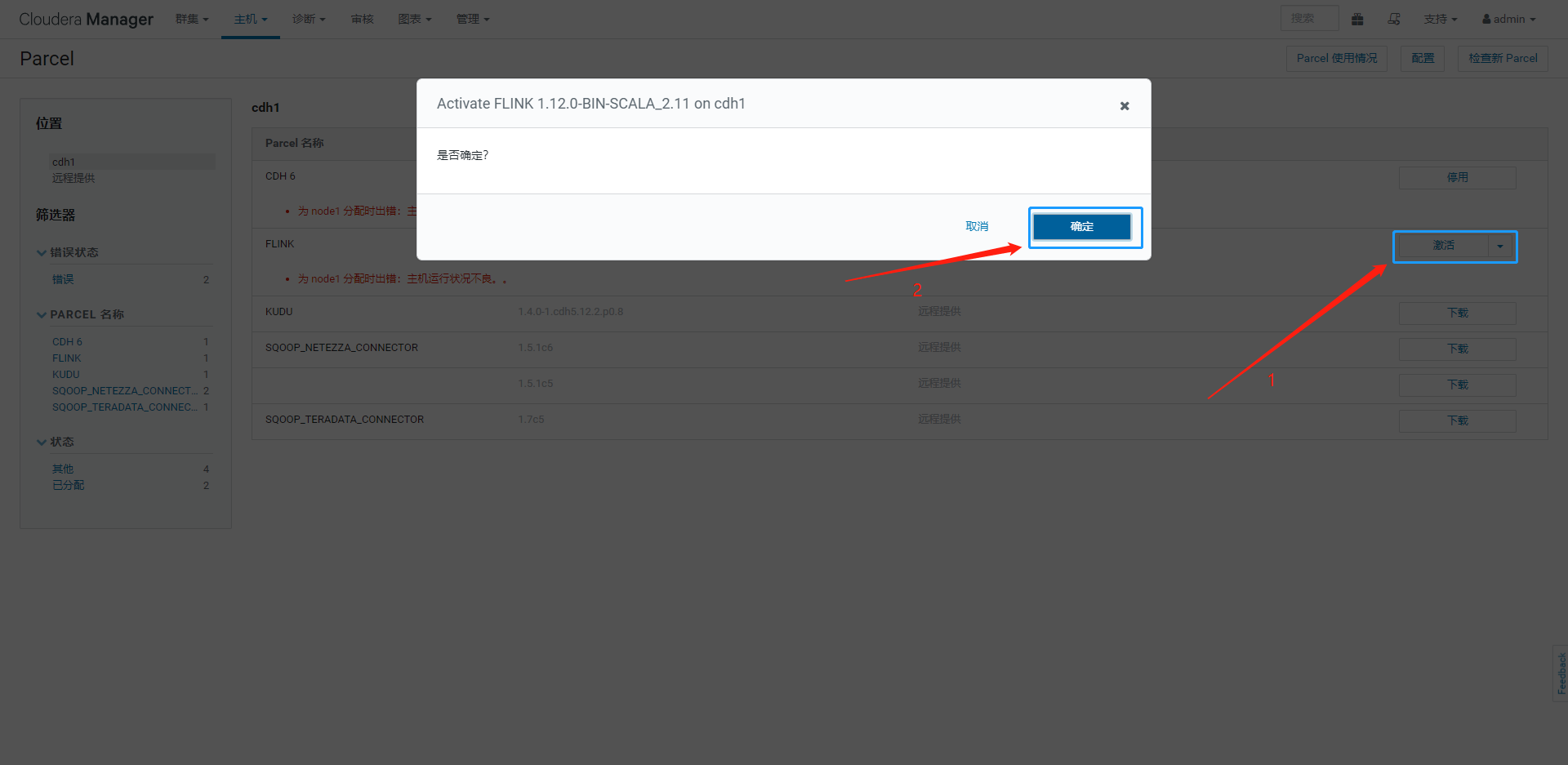

4. Activate the parcel

Click Activate, then click OK

Wait for the activation to complete

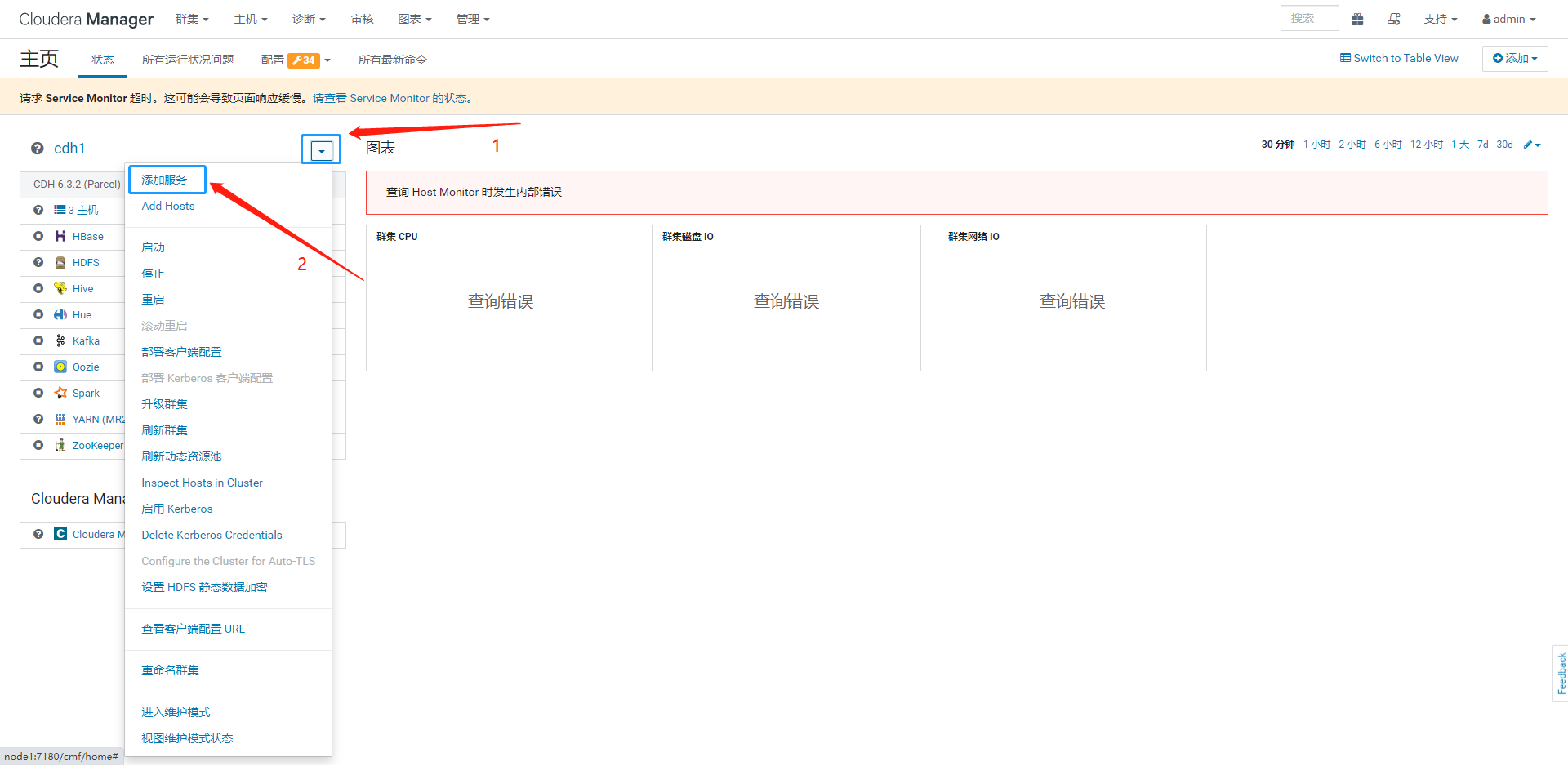

5. Return to the home page

Click Cloudera Manager

6. Add the service

Click the drop-down arrow (the inverted triangle), then click Add Service

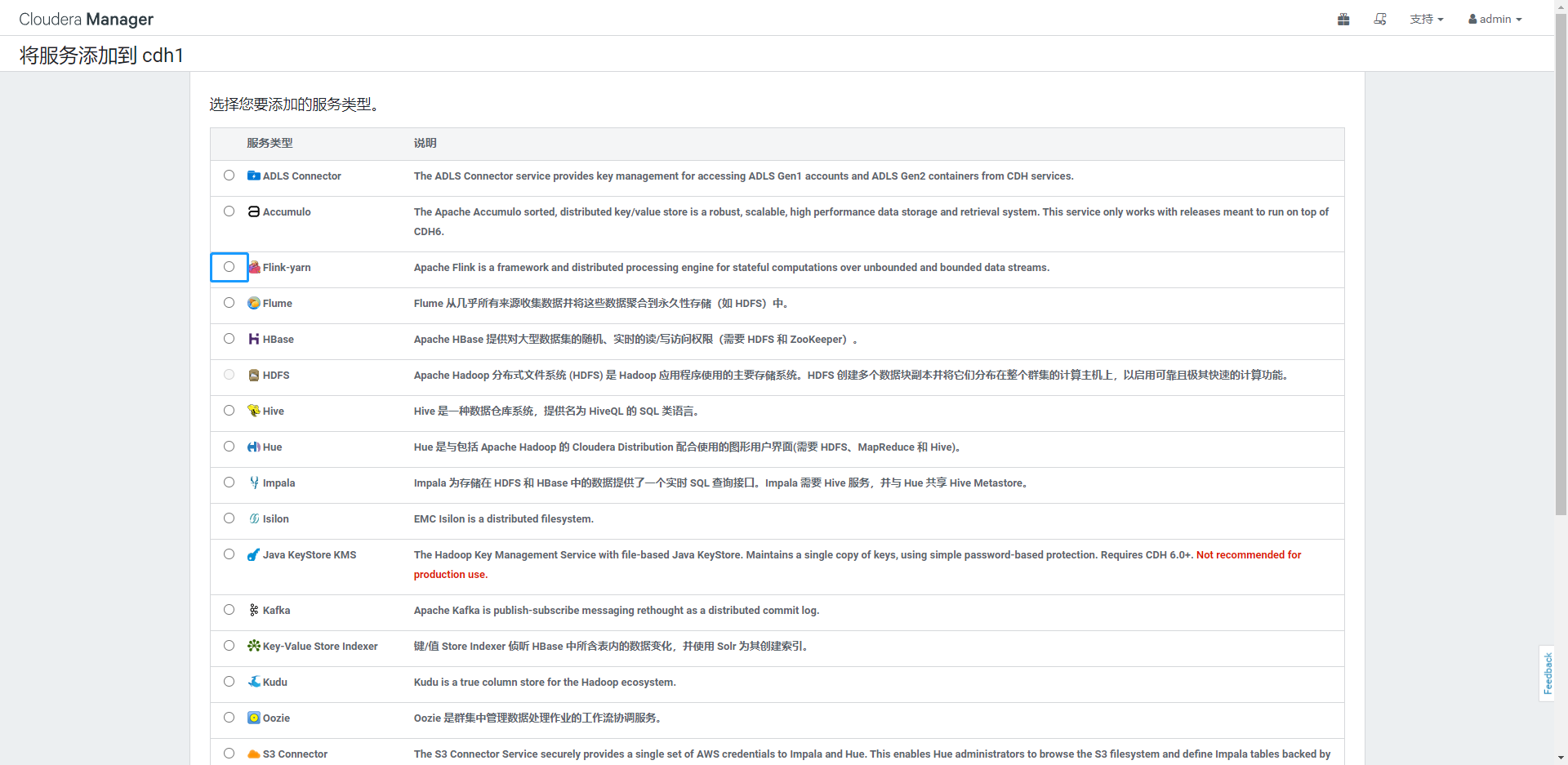

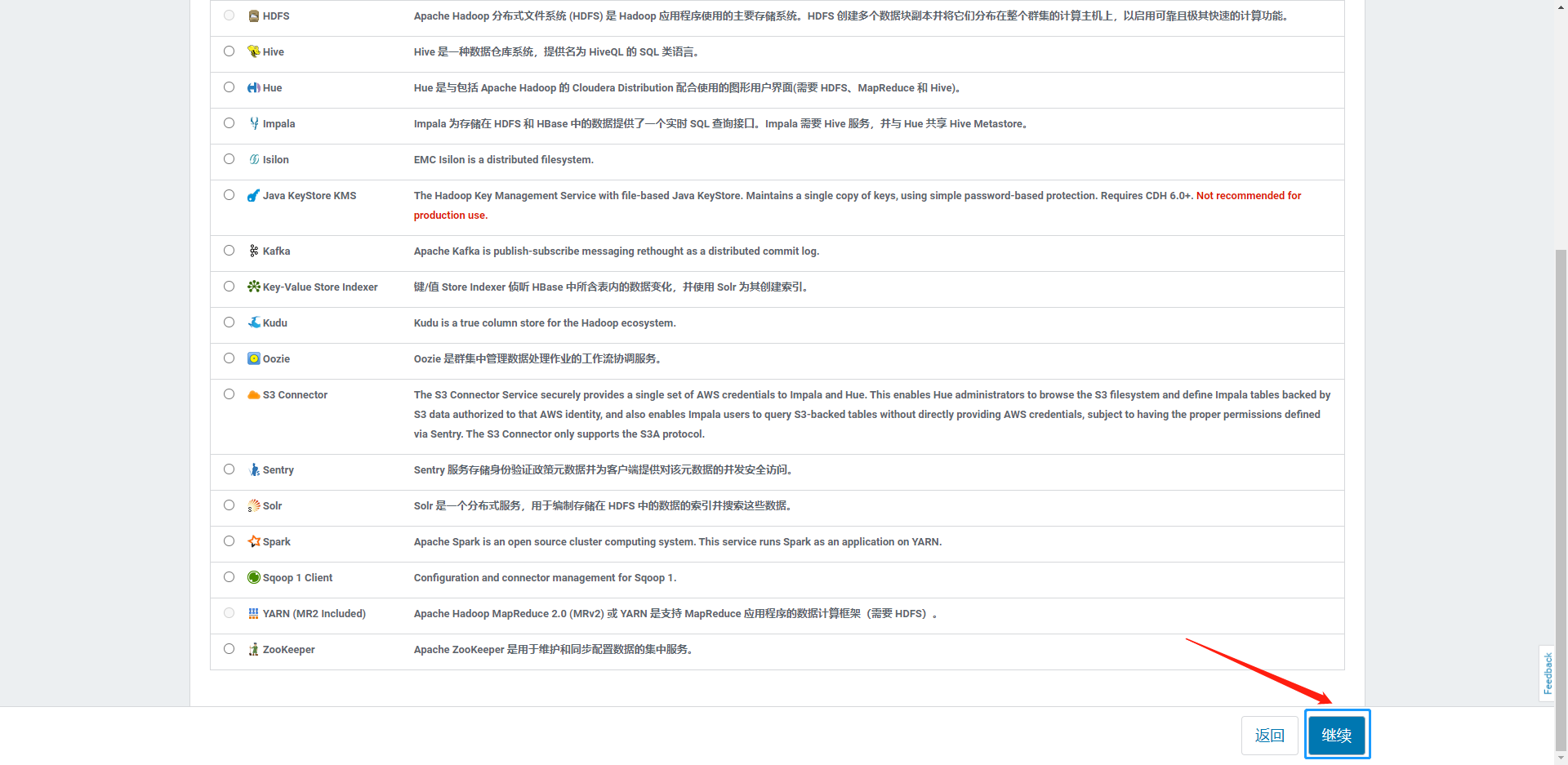

Select Flink yarn and click Continue

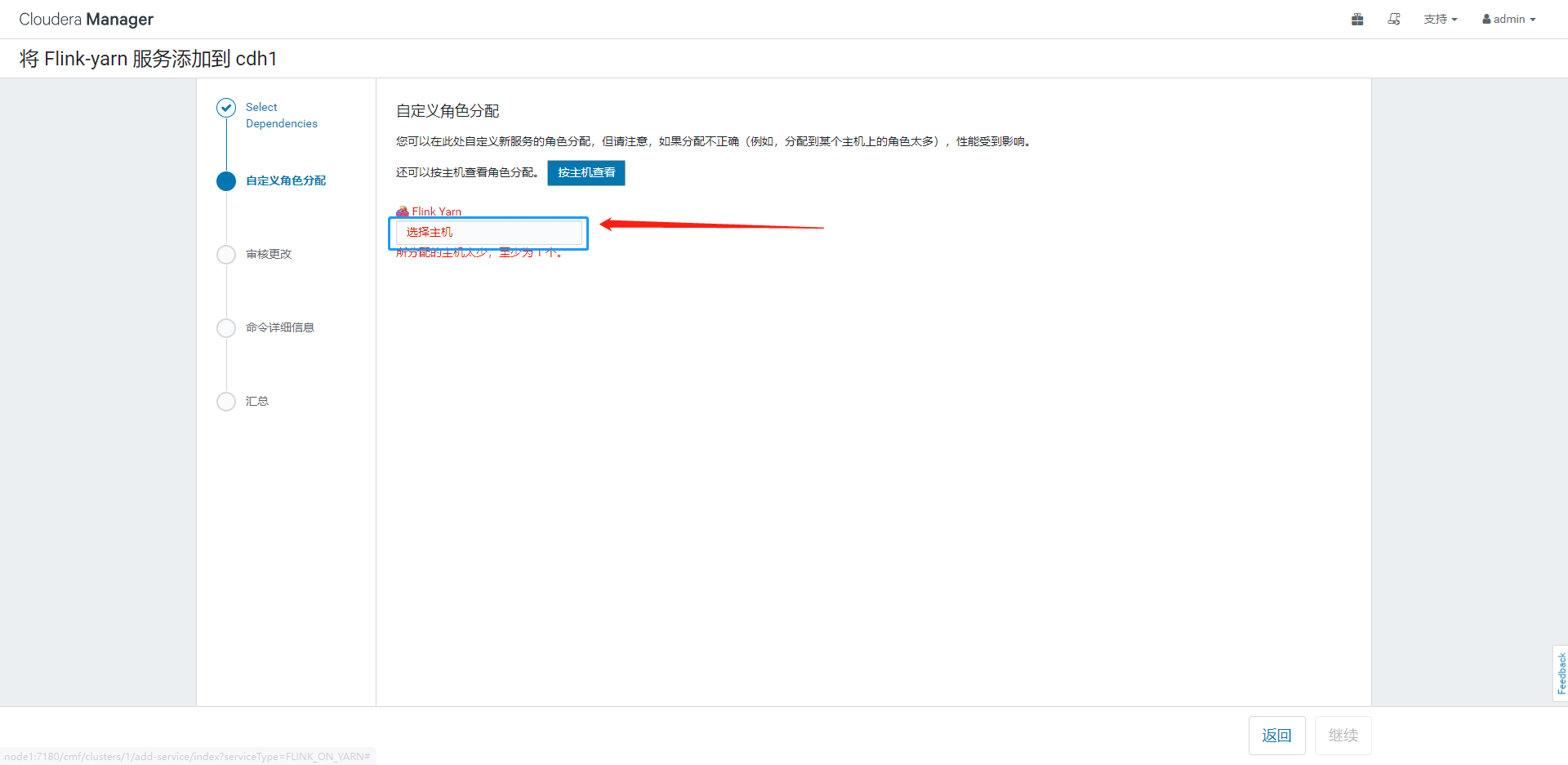

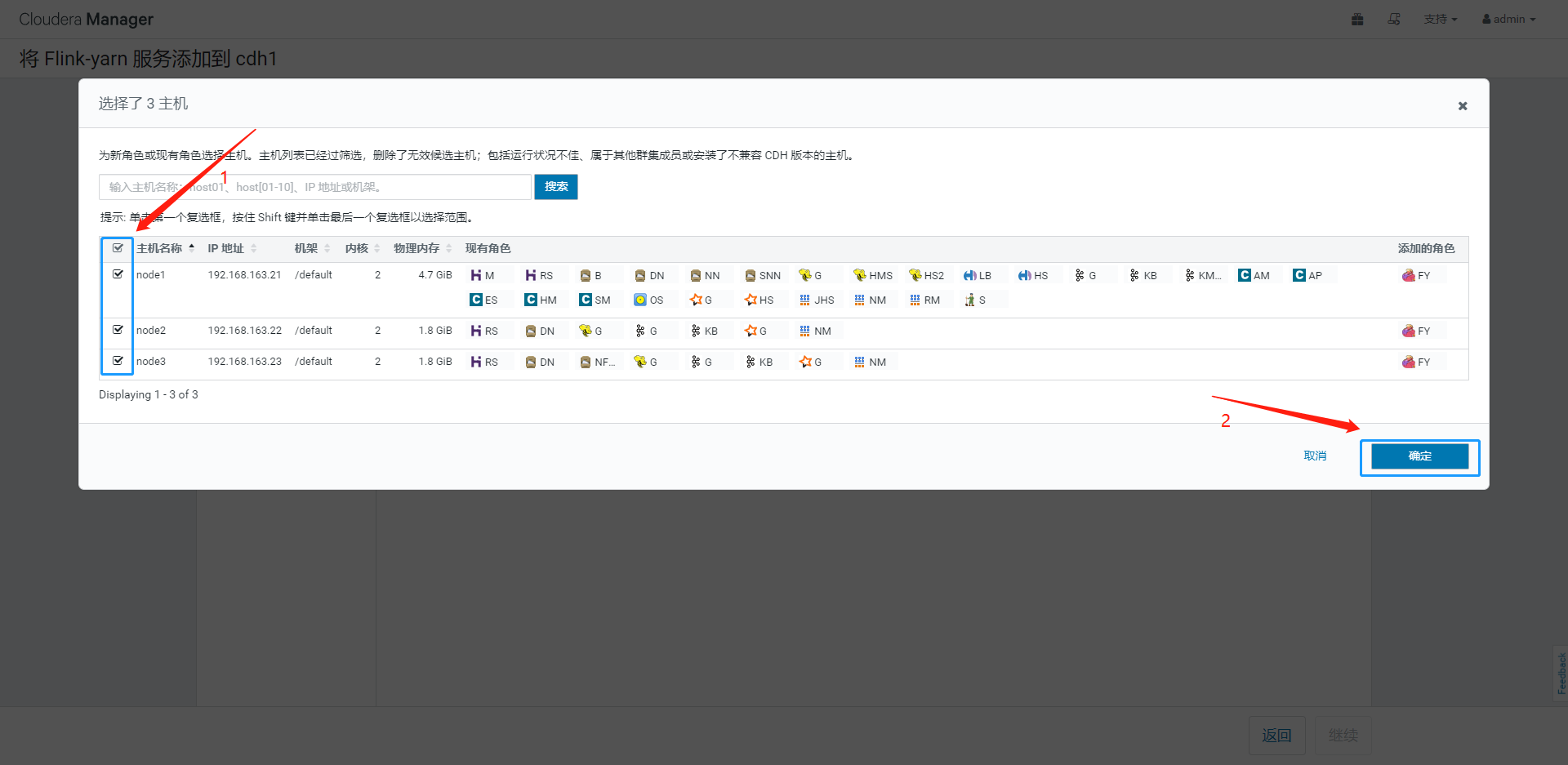

Click Select Hosts to choose the nodes on which to deploy the Flink service, according to your own environment

Select the hosts and click Continue

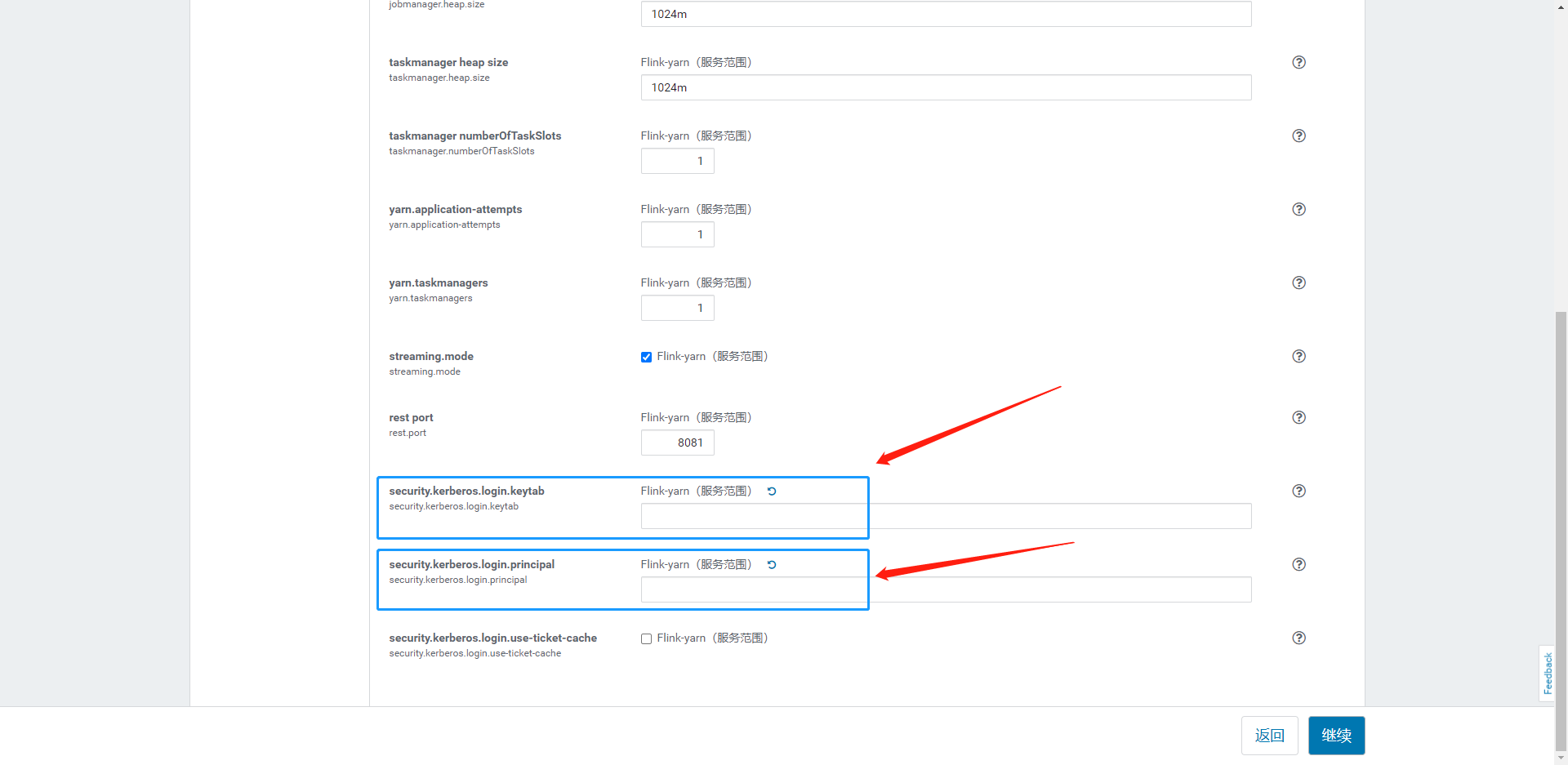

Review the changes, set the two configurations security.kerberos.login.keytab and security.kerberos.login.principal to empty strings, and click Continue

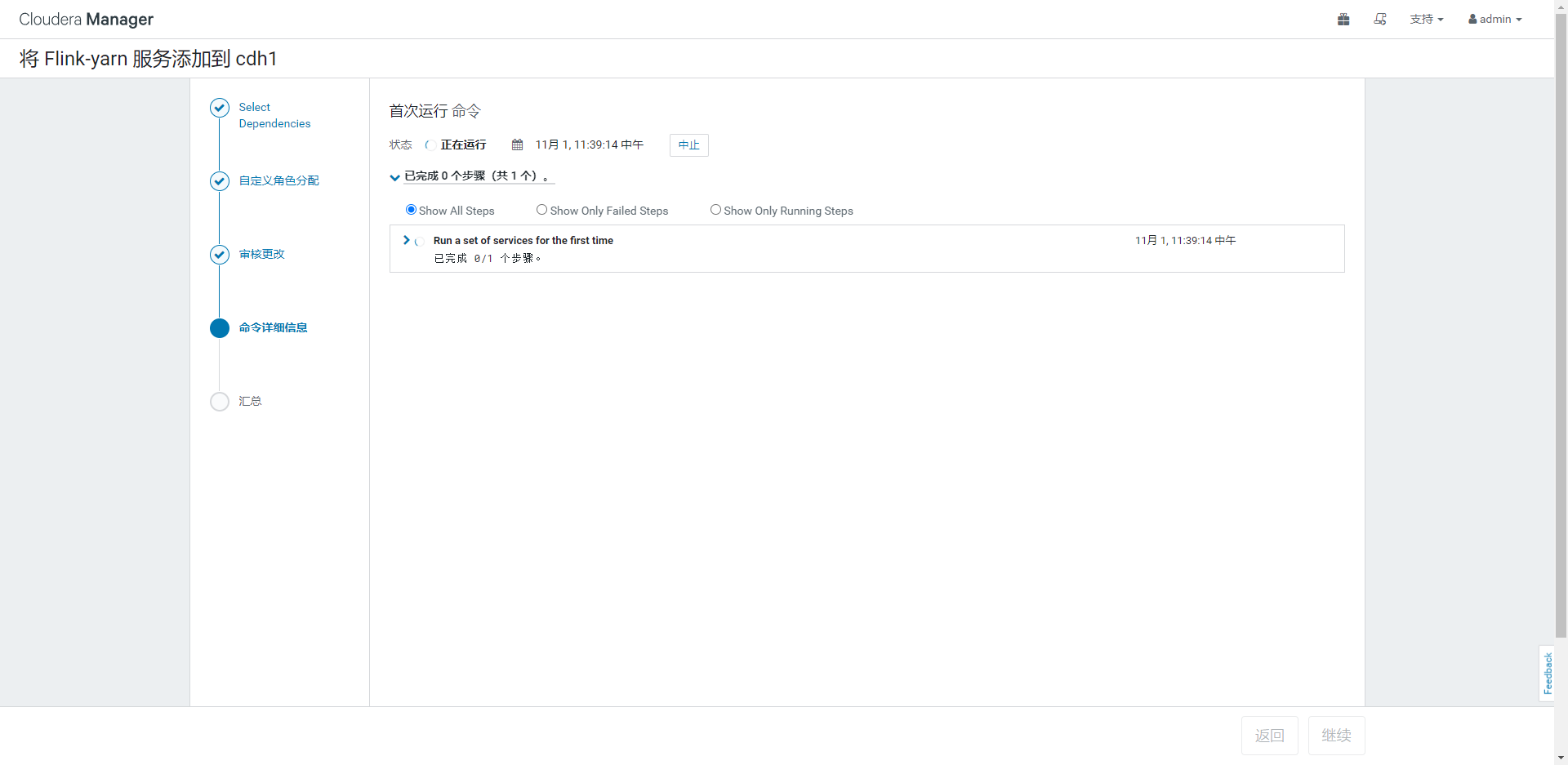

The service starts at this point, and this step fails

Error

Error Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.yarn.exceptions.YarnException

Full stderr log

    Error: A JNI error has occurred, please check your installation and try again
    Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/yarn/exceptions/YarnException
        at java.lang.Class.getDeclaredMethods0(Native Method)
        at java.lang.Class.privateGetDeclaredMethods(Class.java:2701)
        at java.lang.Class.privateGetMethodRecursive(Class.java:3048)
        at java.lang.Class.getMethod0(Class.java:3018)
        at java.lang.Class.getMethod(Class.java:1784)
        at sun.launcher.LauncherHelper.validateMainClass(LauncherHelper.java:544)
        at sun.launcher.LauncherHelper.checkAndLoadMain(LauncherHelper.java:526)
    Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.yarn.exceptions.YarnException
        at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
        at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:349)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
        ... 7 more

The cause is that the Hadoop jars are missing from the classpath

Solution

1. If the Flink version is >= 1.12.0

In Cloudera Manager, go to Flink yarn -> Configuration -> Advanced -> Flink yarn Service Environment Advanced Configuration Snippet (Safety Valve), scope Flink yarn (Service-Wide), and add the following:

    HADOOP_USER_NAME=flink
    HADOOP_CONF_DIR=/etc/hadoop/conf
    HADOOP_HOME=/opt/cloudera/parcels/CDH
    HADOOP_CLASSPATH=/opt/cloudera/parcels/CDH/jars/*
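These settings only help if the directory behind HADOOP_CLASSPATH actually contains the Hadoop jars on the host. A minimal sketch of a check to run on a Flink node before restarting the service follows; the function name `has_jars` is hypothetical, and the directory is the one from the configuration above.

```shell
#!/bin/sh
# Return 0 if the directory contains at least one .jar file, 1 otherwise.
has_jars() {
    for f in "$1"/*.jar; do
        if [ -e "$f" ]; then
            return 0
        fi
    done
    return 1
}

# Directory taken from the HADOOP_CLASSPATH value in this guide.
has_jars /opt/cloudera/parcels/CDH/jars || echo "No jars found under /opt/cloudera/parcels/CDH/jars on this host."
```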

After adding the configuration, restart the Flink yarn service; it now starts without errors.

2. If the Flink version is < 1.12.0

It is recommended to upgrade to 1.12.0 or later (just kidding). If the Flink version is lower than 1.12.0, you need to compile flink-shaded. Please refer to the blog post "CDH6.2.1 integrated Flink"
