1. What is a TensorFlow model?

We know that TensorFlow programs are built from tensors and computation graphs: every computation is converted into a node of a graph, and TensorFlow internally represents the whole process as a dataflow graph. After you train a neural network, you save the model so it can be reused. So what is a TensorFlow model? It consists mainly of the network design (the network graph) and the trained values of the network parameters. Accordingly, a saved TensorFlow model has two main files:

1.1. Meta graph

The meta graph is a protocol buffer that stores the complete TensorFlow graph, for example all variables, operations, and collections. The extension of this file is .meta.

1.2. Checkpoint file

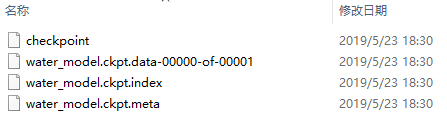

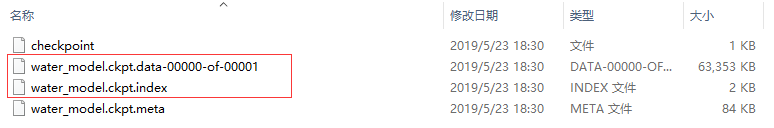

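This is a binary file that holds the values of the weights, biases, optimizer variables (for example momentum accumulators), and all other variables; its extension is .ckpt. Since version 0.11, however, TensorFlow has changed the format: a checkpoint is no longer a single .ckpt file but two files, for example water_model.ckpt.data-00000-of-00001, which holds the variable values, and water_model.ckpt.index, which maps variable names to those values. A small text file named checkpoint in the same directory records the most recent checkpoint.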

1.3. About saver

The Saver saves the parameters of the trained model so that they can be reused next time, either to continue training or for testing.

```python
saver.save(sess, 'save/water_model.ckpt')
```

After training, the Saver class writes the model to checkpoint files. In general, the Saver manages these files automatically: you can keep only the N most recent checkpoints, or save a checkpoint at every step.

- saver.save() accepts a global_step parameter that appends a number to the checkpoint file name; for example, global_step=1000 turns 'my-model' into 'my-model-1000'.

- The max_to_keep argument of tf.train.Saver() sets how many of the most recent checkpoints are kept. The default is 5, i.e. the five most recent checkpoints are kept. If it is 0 or None, no checkpoint files are deleted.

- keep_checkpoint_every_n_hours complements max_to_keep: in addition to the most recent checkpoints, one checkpoint is kept (never deleted) for every n hours of training.

```python
...
# Create a saver.
saver = tf.train.Saver(...variables...)
# Launch the graph and train, saving the model every 1,000 steps.
sess = tf.Session()
for step in range(1000000):  # xrange in Python 2
    sess.run(..training_op..)
    if step % 1000 == 0:
        # Append the step number to the checkpoint name:
        saver.save(sess, 'my-model', global_step=step)
```
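To make the naming and retention rules above concrete, here is a pure-Python simulation that needs no TensorFlow. checkpoint_name and simulate_saves are hypothetical helpers, not TensorFlow APIs; the real Saver also updates the checkpoint bookkeeping file and honors keep_checkpoint_every_n_hours, both of which this sketch ignores.

```python
from collections import deque


def checkpoint_name(prefix, global_step=None):
    # Documented naming rule: 'my-model' with global_step=1000 -> 'my-model-1000'
    return prefix if global_step is None else "{}-{}".format(prefix, global_step)


def simulate_saves(prefix, steps, max_to_keep=5):
    """Hypothetical simulation of max_to_keep: return the checkpoint names
    still on disk after saving once at each step in `steps`."""
    kept = deque()
    for step in steps:
        kept.append(checkpoint_name(prefix, step))
        # 0 or None means "never delete"
        if max_to_keep and len(kept) > max_to_keep:
            kept.popleft()  # the oldest checkpoint is removed
    return list(kept)


print(checkpoint_name("my-model", 1000))  # my-model-1000
# Ten saves (steps 0, 1000, ..., 9000) with the default max_to_keep=5:
print(simulate_saves("my-model", range(0, 10000, 1000)))
# ['my-model-5000', 'my-model-6000', 'my-model-7000', 'my-model-8000', 'my-model-9000']
```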

2. Training the Model and Introducing Sessions

2.1. Model network structure

When training the model, give its input and output tensors explicit names in the network definition so that they can be identified after the saved graph is reloaded. In the following code these are the placeholders "x" and "keep_prob" and the output "y_conv".

```python
x = tf.placeholder("float", shape=[None, 20 * 20 * 1], name='x')  # 20*20-pixel image
# ......
keep_prob = tf.placeholder("float", name='keep_prob')
# ......
y_conv = tf.nn.softmax(tf.matmul(h_fc2_drop, W_fc3) + b_fc3, name='y_conv')  # softmax layer, computes the output labels
```

2.2. Running the Model: Session

TensorFlow offers two ways of using a session:

The first mode creates the session explicitly, and you must close it yourself when finished:

```python
sess = tf.InteractiveSession()
tf.global_variables_initializer().run()
for i in range(80):
    # ......
    training.run(feed_dict={x: batch_x, y: batch_y, keep_prob: 0.5})
sess.close()
```

In the second mode, the session is used through Python's context manager, "with tf.Session() as sess", with all computations placed inside the with block. When the block exits, the session is closed and all its resources are released automatically.

Examples of this mode are given in the following sections.
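The automatic cleanup in the second mode is ordinary Python context-manager behavior: leaving a with block calls the object's __exit__ method, even when an exception is raised inside the block. The sketch below uses a hypothetical HypotheticalSession class (not a TensorFlow API) to show this; tf.Session behaves analogously by closing itself on exit.

```python
class HypotheticalSession:
    """Stand-in for tf.Session that only records whether close() ran."""

    def __init__(self):
        self.closed = False

    def close(self):
        self.closed = True

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        self.close()  # runs on normal exit and when an exception escapes
        return False  # do not swallow the exception


with HypotheticalSession() as sess:
    pass  # training or inference would go here
print(sess.closed)  # True

sess2 = HypotheticalSession()
try:
    with sess2:
        raise RuntimeError("training failed")
except RuntimeError:
    pass
print(sess2.closed)  # True: closed even though the block raised
```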

3. Two methods of loading a model

3.1. Define model network structure

3.1.1. Define the network structure

This method reuses the complete network-definition code of the original training model, including every variable it creates, so that the network structure is expressed again in code.

3.1.2. Restoring parameters

Restore the model parameters with saver.restore(), passing the same path that was used to save the model. Note in particular that this path is not a complete file name: it omits the final suffix of the checkpoint files, for example:

```python
saver.restore(sess, 'save/water_model.ckpt')  # use the model; the path matches the one used when saving
```
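If you only have the file names on disk, the prefix that saver.restore() expects can be recovered by stripping the checkpoint suffix. This is a minimal sketch assuming the .index / .data-XXXXX-of-YYYYY / .meta naming described in section 1.2; restore_prefix is a hypothetical helper, not part of TensorFlow.

```python
import re

# Assumed suffixes of the checkpoint files written by saver.save()
_CKPT_SUFFIX = re.compile(r"\.(index|meta|data-\d{5}-of-\d{5})$")


def restore_prefix(filename):
    """Strip a checkpoint-file suffix so the result can be passed to
    saver.restore(); names without such a suffix are returned unchanged."""
    return _CKPT_SUFFIX.sub("", filename)


for f in ["save/water_model.ckpt.index",
          "save/water_model.ckpt.data-00000-of-00001",
          "save/water_model.ckpt.meta"]:
    print(restore_prefix(f))  # save/water_model.ckpt (all three)
```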

```python
# Placeholders: start building the computational graph by creating nodes
# for the input images and the target output classes.
x = tf.placeholder("float", shape=[None, 20 * 20 * 1], name='x')  # 20*20-pixel image
y_ = tf.placeholder("float", shape=[None, 10], name='y_')  # 10 output digits
# ......
x_test = input_data('imgs_lib/img2_0.png')  # load a test image from the image directory
with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    print("Start testing!")
    # Use the model; the path matches the one used when saving
    saver.restore(sess, "save/water_model.ckpt")
    ret = sess.run(y_conv, feed_dict={x: x_test, keep_prob: 1.0})
    predint = sess.run(tf.argmax(ret, 1))
    y_pre = predint
```

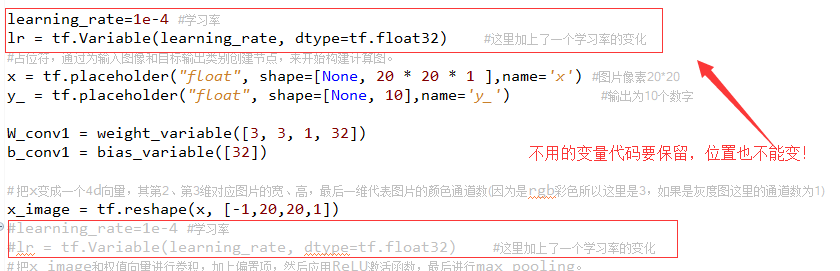

For example, when loading the model you may get an error such as: "Assign required shapes of both tensors to match. LHS shape= [3, 3, 1, 16] RHS shape= []..." It means that the network structure rebuilt for testing does not match the one saved during training, which easily happens when the model is trained and used by different people or on different machines.

In my case, the cause was that definitions of variables not used at test time had been moved. That code must not only be kept but also left in its original position. The Saver matches checkpoint values to variables by name, and a variable created without an explicit name is named automatically in creation order (Variable, Variable_1, Variable_2, ...), so moving a definition shifts those names and pairs saved values with variables of a different shape. This problem cost me several days, because each training run takes a long time.
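The naming effect can be reproduced without TensorFlow. The sketch below is a simplified, hypothetical model of TF1 automatic variable naming (auto_names and shape_mismatches are illustrative helpers, not TensorFlow APIs, and the real uniquifier handles more edge cases). It shows how moving one unnamed definition makes saved names point at tensors of a different shape.

```python
def auto_names(defs):
    """Simplified model of TF1 naming: an unnamed variable becomes 'Variable',
    then 'Variable_1', 'Variable_2', ... in creation order.
    `defs` is a list of (explicit_name_or_None, shape) tuples."""
    used = {}
    named = {}
    for explicit, shape in defs:
        base = explicit or "Variable"
        count = used.get(base, 0)
        name = base if count == 0 else "{}_{}".format(base, count)
        used[base] = count + 1
        named[name] = shape
    return named


def shape_mismatches(saved, rebuilt):
    """Names present in both graphs whose shapes differ (what the Assign error reports)."""
    return sorted(n for n in saved if n in rebuilt and saved[n] != rebuilt[n])


training_graph = [(None, (3, 3, 1, 16)), (None, (16,)), (None, (3, 3, 16, 32))]
# The test script moved the bias definition to the end:
test_graph = [(None, (3, 3, 1, 16)), (None, (3, 3, 16, 32)), (None, (16,))]

saved = auto_names(training_graph)
rebuilt = auto_names(test_graph)
print(shape_mismatches(saved, rebuilt))  # ['Variable_1', 'Variable_2']
```

Giving every variable an explicit name= removes the dependence on definition order, because the name no longer comes from a creation counter.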

3.2. Loading the Model's Network Structure

This method loads all nodes of the saved graph from file into the current default graph and returns a saver. In other words, besides the variable values, the nodes of the graph were saved as well, so the structure of the model is recovered from the files instead of being redefined in code.

3.2.1. Import and Create Network Structure Diagram

Use the tf.train.import_meta_graph() function to load the previously saved network:

```python
saver = tf.train.import_meta_graph('save/water_model.ckpt.meta')
```

Note that import_meta_graph adds the graph saved in the .meta file to the current graph. This recreates the network, but its parameters still have to be loaded into it.

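3.2.2. Loading the parameters

Call the restore() method of the saver returned by import_meta_graph() to restore the network's parameters. tf.train.latest_checkpoint() returns the prefix of the most recent checkpoint in the given directory: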

```python
saver.restore(sess, tf.train.latest_checkpoint("save/"))
```
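tf.train.latest_checkpoint() finds the prefix through the small text file named checkpoint in the directory, whose model_checkpoint_path line names the newest checkpoint. The sketch below is a simplified, hypothetical reimplementation of that lookup for illustration (latest_checkpoint_prefix is not a TensorFlow API); it assumes the usual two-line file layout and, unlike TensorFlow, does not check that the checkpoint files actually exist.

```python
import os
import re


def latest_checkpoint_prefix(checkpoint_dir, state_text):
    """Pull model_checkpoint_path out of the text of the 'checkpoint' file
    and resolve a relative path against checkpoint_dir; None if absent."""
    match = re.search(r'^model_checkpoint_path:\s*"([^"]*)"', state_text, re.MULTILINE)
    if match is None:
        return None
    path = match.group(1)
    return path if os.path.isabs(path) else os.path.join(checkpoint_dir, path)


# Assumed contents of save/checkpoint after saving once:
state = ('model_checkpoint_path: "water_model.ckpt"\n'
         'all_model_checkpoint_paths: "water_model.ckpt"\n')
print(latest_checkpoint_prefix("save/", state))  # save/water_model.ckpt (on POSIX)
```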

3.2.3. Getting the input/output tensors from the network structure

Retrieve the input and output tensors from the graph by the names assigned to them when the model was trained; see the definitions in section 2.1.

```python
graph = tf.get_default_graph()
x = graph.get_tensor_by_name('x:0')
keep_prob = graph.get_tensor_by_name('keep_prob:0')
y_conv = graph.get_tensor_by_name('y_conv:0')
```
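The ":0" suffix is there because get_tensor_by_name() takes a tensor name of the form "<op_name>:<output_index>": the name= given to a placeholder or softmax names the operation, and ":0" selects its first output. Passing only 'x' fails because that names the operation, not a tensor. The helpers below are hypothetical and only illustrate the naming convention.

```python
def tensor_name(op_name, output_index=0):
    """Build a TF1-style tensor name from an op name and output index."""
    return "{}:{}".format(op_name, output_index)


def split_tensor_name(name):
    """Split '<op_name>:<output_index>' into (op_name, index); reject bare op names."""
    op_name, sep, index = name.rpartition(":")
    if not sep or not op_name or not index.isdigit():
        raise ValueError("expected '<op_name>:<output_index>', got %r" % name)
    return op_name, int(index)


print(tensor_name("y_conv"))             # y_conv:0
print(split_tensor_name("keep_prob:0"))  # ('keep_prob', 0)
```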

3.2.4. Sample code

The complete code below uses the second method: it does not need the network-definition code from training, only the saved model files.

```python
'''
Created on May 23, 2019

@author: xiaoyw
'''
import tensorflow as tf
import cv2
import numpy as np
import datetime
import os


# Read an image file (20*20 pixels) and return a 1x400 float array scaled to [0, 1]
def input_data(file_name):
    img = cv2.imread(file_name, cv2.IMREAD_GRAYSCALE)
    x_data = []
    x_data.append(img.flatten())
    x_data = np.array(x_data)
    x_data = x_data.astype("float")
    x_data = np.multiply(x_data, 1.0 / 255.0)
    return x_data


def modele_test():
    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        saver = tf.train.import_meta_graph('save/water_model.ckpt.meta')
        print("Model initialization!")
        saver.restore(sess, tf.train.latest_checkpoint("save/"))
        graph = tf.get_default_graph()
        x = graph.get_tensor_by_name('x:0')
        keep_prob = graph.get_tensor_by_name('keep_prob:0')
        y_conv = graph.get_tensor_by_name('y_conv:0')
        file_list = readImgFileName('imgs_lib')  # test images are in imgs_lib
        for file_row in file_list:
            x_test = input_data(file_row[1])
            feed_dict = {x: x_test, keep_prob: 1.0}
            ret = sess.run(y_conv, feed_dict)
            # argmax on the NumPy result; calling tf.argmax here would add
            # a new op to the graph on every loop iteration
            predint = np.argmax(ret, 1)  # predicted class index
            preddigit = predint[0]  # predicted digit
            digit = file_row[0]  # true digit
            y_pre = ret[0]  # predicted class probabilities
            print('Input label: ' + str(digit) + ', Forecast results: ' + str(preddigit))
            if int(digit) != int(preddigit):
                print('Reference probability:' + str(y_pre))
                print('Image file name:' + file_row[1])


# Read the list of source files and split each name into [label, path]
def readImgFileName(path):
    file_names = next(os.walk(path))[2]  # files directly inside path
    file_list = []
    for file_name in file_names:
        # splitext removes the extension; str.strip('.png') would also strip
        # any leading/trailing '.', 'p', 'n', 'g' characters
        stem, ext = os.path.splitext(file_name)
        if ext != '.png':
            continue
        kk = stem.split('_')
        tmp = []
        tmp.append(kk[len(kk) - 1])  # label = text after the last '_'
        tmp.append(path + '/' + stem + '.png')
        file_list.append(tmp)
    return file_list


if __name__ == '__main__':
    nowTime = datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S')  # current time
    print('start :{}'.format(nowTime))
    modele_test()
```
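A detail worth checking in readImgFileName() is how the ".png" extension is removed. str.strip('.png') removes any of the characters ".", "p", "n", "g" from both ends rather than the suffix, so a file such as pic_3.png would map to the wrong path. The sketch below isolates the per-file logic with os.path.splitext; label_and_path is a hypothetical helper that assumes the "<name>_<label>.png" naming used in imgs_lib.

```python
import os


def label_and_path(path, file_name):
    """Return [label, full_path] for a file named '<anything>_<label>.png'."""
    stem, _ext = os.path.splitext(file_name)
    label = stem.split('_')[-1]  # the true digit follows the last '_'
    return [label, path + '/' + stem + '.png']


print('pic_3.png'.strip('.png'))                # ic_3  (characters stripped, not a suffix)
print(label_and_path('imgs_lib', 'pic_3.png'))  # ['3', 'imgs_lib/pic_3.png']
```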

Note that values passed through feed_dict must match the type declared for the placeholder (here "float", i.e. float32) and its shape (for x, [None, 400]).
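As an illustration of what x expects, here is a pure-Python stand-in for input_data() that turns a 20x20 grayscale image into a batch of one row of 400 floats in [0, 1]. to_feed_batch is hypothetical, and the nested-list image replaces the cv2/NumPy array used in the real code; it rejects images of the wrong size instead of letting the mismatch surface inside sess.run().

```python
def to_feed_batch(image_rows, side=20):
    """Flatten a side x side grayscale image (ints 0-255) into a batch of one
    row of side*side floats in [0, 1], matching placeholder x:
    dtype "float", shape [None, side*side]."""
    if len(image_rows) != side or any(len(row) != side for row in image_rows):
        raise ValueError("expected a %dx%d image" % (side, side))
    flat = [float(p) / 255.0 for row in image_rows for p in row]
    return [flat]  # shape [1, side*side]


img = [[255] * 20 for _ in range(20)]
batch = to_feed_batch(img)
print(len(batch), len(batch[0]), batch[0][0])  # 1 400 1.0
```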
