The implementation of a simple crawler mainly includes two aspects: obtaining the HTML text of the specified web page; parsing the HTML text obtained in the previous step to obtain the required information, for the picture downloader, it is to parse the path of each picture on the network from the HTML text to further download.

These two aspects are described as follows:

1, Get HTML text

1.WebClient

If you know the URL address and want to download or upload data, the WebClient class is the most convenient. WebClient can use HTTP protocol to get files and upload data, but it can't be used in FTP protocol.

For crawlers, WebClient can download data in the following ways:

DownloadString: downloads a web page from a resource and returns text.

DownloadData: downloads data from a resource and returns an array of bytes.

DownloadFile: download data from resource to local file.

OpenRead: returns data as a Stream from a resource.

Use the DownloadString method to Download HTML text as follows:

string url = "https://www.baidu.com/";//Specify the URL of the page WebClient client = new WebClient(); client.Encoding = Encoding.UTF8; string pagehtml = client.DownloadString(url); Console.WriteLine(pagehtml);

It should be noted that the setting of character Encoding is consistent with the actual web page programming, and UTF8 is used for most web pages.

If you need to add message header information, you can use client.Headers.Add() method to add

For example:

client.Headers.Add("user-agent",""Mozilla/5.0 (Windows NT 10.0; Win64; x64));

2.WebRequest

System.Net There are several classes that can be used to write software that talks to a Web server. These classes are derived from WebRequest and WebRespomse.

FileWebRequest and FileWebResponse classes are used to handle addresses representing local files, which also start with file: / /.

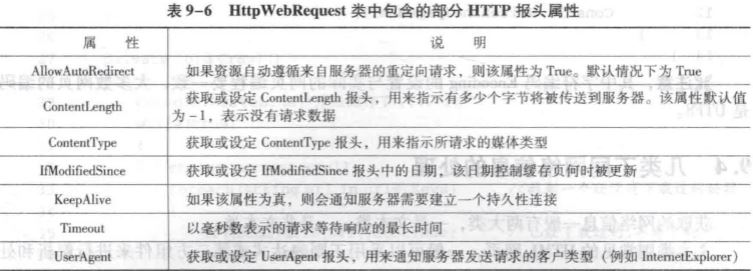

HttpWebRequest and HttpWebResponse classes can use HTTP to interact with the server to implement our crawler. Create an HttpWebRequest object to send request information to the Web server using HTTP. This class contains many properties equivalent to the HTTP header field sent to the server, through which the header information can be set.

The specific implementation of obtaining HTML text through HttpWebRequest is as follows:

string url = "https://www.baidu.com/"; HttpWebRequest webRequest = WebRequest.CreateHttp(url); webRequest.Method = "GET";//Set to GET request webRequest.UserAgent = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "; HttpWebResponse webResponse = (HttpWebResponse)webRequest.GetResponse();//Get a response //Stream file read StreamReader streamReader = new StreamReader(webResponse.GetResponseStream(), Encoding.UTF8); string pagehtml = streamReader.ReadToEnd(); Console.WriteLine(pagehtml); streamReader.Close();

2, Parsing HTML text

1. Regular expression

For the obtained HTML text, the hyperlink (such as the link address of the picture) can be obtained by parsing the regular expression matching string, and then it can be further downloaded.

System.Text.RegularExpressions The. Regex class provides a pattern matching function based on text strings.

There are two common construction methods of Regex:

Regex();

Regex(string);

The Regex object created by the constructor with string parameter can be precompiled, which makes later pattern matching faster.

To get the information of text matching, you can use the Match() and matches () methods. Match() returns a match object, which includes the result of the matching process, the matching location and other information. Matches() returns a MatchCollection object, which is the collection object of match. You can get the matching information through foreach statement and GetEnumerator() method.

Here is a complete example of how to parse HTML text and download pictures through regular expressions:

//Download pictures to save on local computer string path = @"D:\picture\picture\"; string url = "https://www.jianshu.com/p/e783d92ad201"; WebClient client = new WebClient(); client.Encoding = Encoding.UTF8; string pagehtml = client.DownloadString(url);//HTML text obtained Console.WriteLine(pagehtml); if (string.IsNullOrEmpty(pagehtml)) { Console.WriteLine("-----Error -————————"); Console.ReadKey(); } //Regular Expression Matching Regex regex = new Regex("<img.*?src=['|\"](?<Collect>(.*?(?:\\.(?:png|jpg|gif))))['|\"]"); MatchCollection matchresults = regex.Matches(pagehtml); int name = 0;//Picture file name try { foreach (Match match1 in matchresults) { string src = match1.Groups["Collect"].Value; src = "http:" + src;//Construct the full URL of the picture name++; client.DownloadFile(src, path + name + ".jpg"); Console.WriteLine("\n Crawling -——————" + "|" + src); } } catch (Exception ex) { Console.WriteLine("-------------" + ex); } Console.ReadKey();

2. Third party components (take Html Agility Pack as an example)

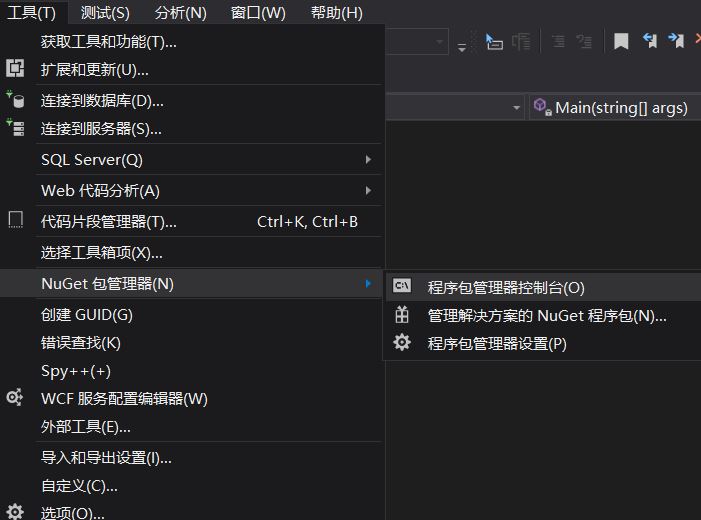

First install the Html Agility Pack component in Visual Studio.

Click "tools" - > "NuGet package manager" - > "package manager console", and then enter the following fame and fortune in the console to install:

Install-Package HtmlAgilityPack -Version 1.8.1

And then in the program head

using HtmlAgilityPack;

To use the Html Agility Pack component to parse HTML text, you need to instantiate an HtmlDocument object first. To use HtmlDocument, you need to:

using System.Collections;

Using Html Agility Pack component to filter web page content is mainly through XPath. The specific operation is through two methods: SelectNodes and SelectSingleNode.

To view the usage of Html Agility Pack component, you can visit its official website: https://html-agility-pack.net/

For an introduction to XPath, see this introduction: Learn the reptile tool XPath, and read this one

Its main principle is to get the image link address of img tag in HTML document through XPath. Consider each HTML tag as a Node, so if we want to locate the content of a tag, we need to know the related attributes of the tag. By the way, the HtmlNode class implements the IXPathNavigable interface, which shows that it can locate data through XPath. If you know the XmlDocument class that parses XML format data, especially those who have used the SelectNodes() and SelectSingleNode() methods, you will be familiar with the HtmlNode class. In fact, Html Agility Pack internally parses HTML into XML document format, so it supports some common query methods in XML.

For details, see the use of Xpath or the link reference blog provided in this article.

Here is a complete code for downloading pictures using the Html Agility Pack component:

string url = "https://3w.huanqiu.com/a/3458fa/3yhJLSo5asM?agt=8"; string path = @"D:\picture\picture\"; //Download pictures to save on local computer WebClient client = new WebClient(); client.Encoding = Encoding.UTF8; string pagehtml = client.DownloadString(url);//HTML text obtained Console.WriteLine(pagehtml); if (string.IsNullOrEmpty(pagehtml)) { Console.WriteLine("-----Error -————————"); Console.ReadKey(); } HtmlAgilityPack.HtmlDocument doc = new HtmlAgilityPack.HtmlDocument(); doc.LoadHtml(pagehtml); //Filter img tags with Xpath HtmlNodeCollection nodes = doc.DocumentNode.SelectNodes("//img"); int name = 0; if (nodes!=null) { foreach(HtmlNode htmlNode in nodes) { HtmlAttribute htmlAttribute = htmlNode.Attributes["src"];//Select src attribute in img node name++; string src =htmlAttribute.Value; client.DownloadFile(src, path + name + ".jpg"); Console.WriteLine("\n Crawling -——————" + "|" + src); } } Console.ReadKey();

Reference blog:

How to quickly implement and analyze Html Agility Pack of C-crawler

C ා HTML agility pack crawls static pages

C. reptile learning (2)