C++ common component: thread pool implementation

Introduction to the thread pool model

When a program has to handle a large number of concurrent tasks, the traditional approach of processing each request on its own thread is expensive: creating and destroying many threads consumes a lot of system resources and increases the overhead of thread context switching. Thread pool technology solves these problems. A thread pool creates a certain number of threads in advance. When a task request arrives, one of these pre-created threads is assigned to process it. When the task is finished, the thread is not destroyed but reused: it waits for the next task. By avoiding the repeated creation and destruction of threads, the pool saves system resources. One advantage of this approach is that on multi-core processors the threads can be scheduled on multiple CPUs, which improves the efficiency of parallel processing. Another advantage is that each worker thread blocks independently, so a slow task does not block the main thread, and the process can keep responding to other requests.
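As a minimal sketch of the reuse idea (not the pool implemented later in this article), a fixed number of threads can be created once and then keep claiming tasks until none are left. The helper `RunWithFixedWorkers`, the `ReuseStats` struct, and the use of an atomic counter as a stand-in for a task queue are illustrative assumptions. However many tasks run, the number of distinct threads that execute them cannot exceed the number created up front.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <mutex>
#include <set>
#include <thread>
#include <vector>

// Hypothetical result type for this illustration.
struct ReuseStats {
    int tasksRun;                 // how many tasks were executed
    std::size_t distinctThreads;  // how many different threads executed them
};

// Hypothetical helper: `workerCount` threads are created once, then each one
// repeatedly claims the next task index until all `taskCount` tasks are done.
// No thread is created or destroyed per task.
ReuseStats RunWithFixedWorkers(int workerCount, int taskCount) {
    std::atomic<int> next{0};           // index of the next unclaimed task
    std::atomic<int> done{0};           // number of tasks executed
    std::mutex idMutex;                 // guards usedIds
    std::set<std::thread::id> usedIds;  // threads that ran at least one task

    std::vector<std::thread> workers;
    for (int w = 0; w < workerCount; ++w) {
        workers.emplace_back([&] {
            // fetch_add hands out every index exactly once across all workers
            for (int i = next.fetch_add(1); i < taskCount; i = next.fetch_add(1)) {
                done.fetch_add(1);  // the "work" of task i
                std::lock_guard<std::mutex> lock(idMutex);
                usedIds.insert(std::this_thread::get_id());
            }
        });
    }
    for (auto& t : workers) t.join();  // wait for every worker before reading results
    return ReuseStats{done.load(), usedIds.size()};
}
```

Calling `RunWithFixedWorkers(4, 1000)` runs all 1000 tasks on at most 4 threads. A real pool replaces the counter with a task queue, but the reuse principle is the same.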

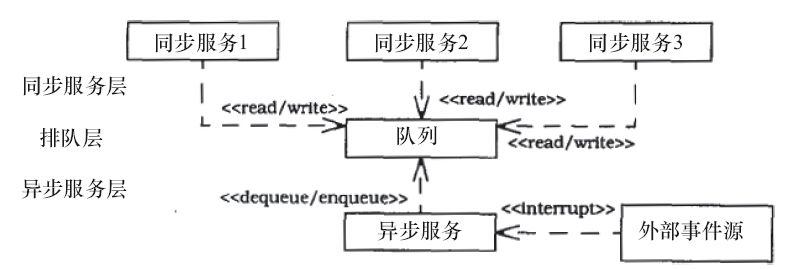

Thread pools are commonly divided into two kinds: the semi-synchronous/semi-asynchronous (half-sync/half-async) thread pool and the leader/follower thread pool. This chapter mainly introduces the semi-synchronous/semi-asynchronous thread pool, which is simpler to implement, more widely used and more convenient. It is divided into three layers, as shown in the figure.

The first layer is the synchronous service layer, which handles task requests from the upper layer. These requests may arrive concurrently. They are not processed immediately; instead, they are put into the synchronous queuing layer to wait for processing. The second layer is the synchronous queuing layer: every task request from the upper layer is added to it and waits there until it is processed. The third layer is the asynchronous service layer, in which multiple threads take tasks from the synchronous queuing layer and process them in parallel.
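The three layers map onto standard C++11 primitives roughly as follows. This is a deliberately small sketch, not the article's implementation: the class name `MiniPool`, the unbounded `std::queue`, and the choice to finish queued tasks before shutting down are assumptions made for illustration. Callers of `Submit` play the synchronous service layer, the mutex-protected queue is the queuing layer, and the worker threads are the asynchronous service layer.

```cpp
#include <cassert>
#include <condition_variable>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical three-layer sketch: unbounded queue, drains remaining tasks on shutdown.
class MiniPool {
public:
    explicit MiniPool(int workers) {
        // Asynchronous service layer: threads are created once, up front.
        for (int i = 0; i < workers; ++i)
            m_workers.emplace_back([this] { WorkerLoop(); });
    }

    ~MiniPool() {
        {
            std::lock_guard<std::mutex> lock(m_mutex);
            m_stopping = true;
        }
        m_cv.notify_all();  // wake every idle worker so it can see m_stopping
        for (auto& t : m_workers) t.join();
    }

    // Synchronous service layer: the caller only enqueues and returns at once.
    void Submit(std::function<void()> task) {
        {
            std::lock_guard<std::mutex> lock(m_mutex);
            m_tasks.push(std::move(task));
        }
        m_cv.notify_one();  // wake one waiting worker
    }

private:
    void WorkerLoop() {
        for (;;) {
            std::function<void()> task;
            {
                std::unique_lock<std::mutex> lock(m_mutex);
                m_cv.wait(lock, [this] { return m_stopping || !m_tasks.empty(); });
                if (m_tasks.empty()) return;  // stopping and nothing left to run
                task = std::move(m_tasks.front());
                m_tasks.pop();
            }
            task();  // run outside the lock so other workers can dequeue meanwhile
        }
    }

    std::mutex m_mutex;                         // guards m_tasks and m_stopping
    std::condition_variable m_cv;               // signalled on a new task or shutdown
    std::queue<std::function<void()>> m_tasks;  // queuing layer
    bool m_stopping = false;
    std::vector<std::thread> m_workers;
};
```

Because `Submit` only pushes and notifies, the submitting thread never waits for a task to finish; all execution happens on the worker threads.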

This three-layer structure can handle concurrent requests from the upper layer to the greatest extent. The upper layer only has to drop a task into the synchronous queue. It does not need to know which thread will handle the task or when, so the main thread is not blocked and can keep issuing new requests. How the tasks are actually processed is left entirely to the threads of the asynchronous service layer, which run in parallel. These threads are created at the beginning, and no new threads are created when a large number of tasks arrive. This avoids the system overhead of frequently creating and destroying threads, and using multiple cores greatly improves processing efficiency.

Key techniques in the thread pool implementation

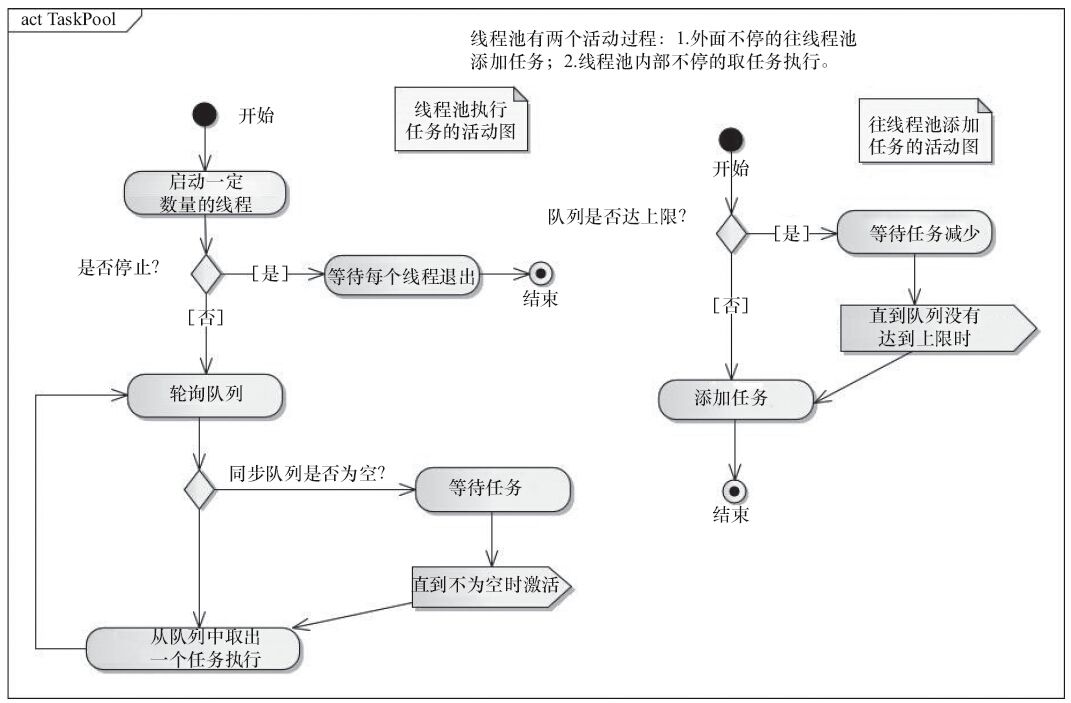

The previous section introduced the basic concept and structure of the thread pool, which consists of three layers: the synchronous service layer, the queuing layer and the asynchronous service layer. The queuing layer is the core, because the upper layer adds tasks to it while the asynchronous service layer takes tasks out of it at the same time, so access to it must be synchronized. In the implementation, the queuing layer is a synchronous queue that allows multiple threads to add or remove tasks at the same time while keeping every operation thread-safe. The thread pool therefore has two activities: adding tasks to the synchronous queue and taking tasks from it, as shown in the activity diagram.
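The two activities, adding and taking, can be sketched as a small generic queue in which every access to the underlying container happens under one mutex and consumers sleep on a condition variable while the queue is empty. This is an illustrative sketch, not the `SyncQueue` used later: the names `BlockingQueue`, `Close` and `ProduceAndConsume` are assumptions, the queue is unbounded, and `Take` reports shutdown through its `bool` return value.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <mutex>
#include <thread>
#include <utility>

// Hypothetical minimal synchronous queue: any number of threads may Put or Take
// concurrently; every access to the deque happens under one mutex.
template <typename T>
class BlockingQueue {
public:
    void Put(T value) {
        {
            std::lock_guard<std::mutex> lock(m_mutex);
            m_items.push_back(std::move(value));
        }
        m_notEmpty.notify_one();  // one new item: wake one consumer
    }

    // Blocks until an item is available; returns false once closed and empty.
    bool Take(T& out) {
        std::unique_lock<std::mutex> lock(m_mutex);
        m_notEmpty.wait(lock, [this] { return m_closed || !m_items.empty(); });
        if (m_items.empty()) return false;
        out = std::move(m_items.front());
        m_items.pop_front();
        return true;
    }

    void Close() {
        {
            std::lock_guard<std::mutex> lock(m_mutex);
            m_closed = true;
        }
        m_notEmpty.notify_all();  // release every consumer blocked in Take
    }

private:
    std::mutex m_mutex;
    std::condition_variable m_notEmpty;
    std::deque<T> m_items;
    bool m_closed = false;
};

// Usage sketch: two producers put 1..n, two consumers sum what they take.
long long ProduceAndConsume(int n) {
    BlockingQueue<int> q;
    long long total = 0;
    std::mutex totalMutex;
    auto consume = [&] {
        int v;
        while (q.Take(v)) {
            std::lock_guard<std::mutex> lock(totalMutex);
            total += v;
        }
    };
    std::thread c1(consume), c2(consume);
    std::thread p1([&] { for (int i = 1; i <= n / 2; ++i) q.Put(i); });
    std::thread p2([&] { for (int i = n / 2 + 1; i <= n; ++i) q.Put(i); });
    p1.join();
    p2.join();
    q.Close();  // consumers drain what is left, then Take returns false
    c1.join();
    c2.join();
    return total;
}
```

Whatever the interleaving of the four threads, every value is taken exactly once, so `ProduceAndConsume(1000)` returns 500500.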

The activity diagram shows how the thread pool works. At startup, the thread pool launches a certain number of threads. These threads belong to the asynchronous layer and process the tasks in the queuing layer in parallel. If the queuing layer is empty, these threads wait for tasks to arrive. When a task is queued, the thread pool wakes one of the waiting threads to handle it. Meanwhile, the synchronous service layer keeps adding new tasks to the synchronous queuing layer. One problem deserves attention here: the upper layer may submit many tasks, each of which is time-consuming. If the threads in the asynchronous layer cannot keep up, the number of tasks in the synchronous queuing layer keeps growing. Without an upper limit, the queue can accumulate so many tasks that memory is exhausted. The queuing layer therefore needs an upper limit: once the number of queued tasks reaches it, no more tasks from the upper layer are added until space becomes available, which throttles the producers and protects the system.
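The upper-limit control can be enforced with more than one policy. The `SyncQueue` in this article makes a producer wait while the queue is full; another common policy is to refuse the task immediately and let the caller decide what to do. The following sketch shows that reject-when-full variant; the class `BoundedQueue` and its `TryPut`/`TryTake` methods are hypothetical and not part of the article's code.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <mutex>
#include <utility>

// Hypothetical bounded queue with a "reject when full" policy: TryPut refuses
// new items instead of letting the queue grow without bound.
template <typename T>
class BoundedQueue {
public:
    explicit BoundedQueue(std::size_t maxSize) : m_maxSize(maxSize) {}

    // Returns false, leaving the queue unchanged, when the limit is reached.
    bool TryPut(T value) {
        std::lock_guard<std::mutex> lock(m_mutex);
        if (m_items.size() >= m_maxSize) return false;
        m_items.push_back(std::move(value));
        return true;
    }

    // Non-blocking take; returns false when the queue is empty.
    bool TryTake(T& out) {
        std::lock_guard<std::mutex> lock(m_mutex);
        if (m_items.empty()) return false;
        out = std::move(m_items.front());
        m_items.pop_front();
        return true;
    }

    std::size_t Size() {
        std::lock_guard<std::mutex> lock(m_mutex);
        return m_items.size();
    }

private:
    std::mutex m_mutex;      // guards m_items
    std::deque<T> m_items;
    std::size_t m_maxSize;   // upper limit of the queue
};
```

A caller that gets `false` from `TryPut` can retry later, report an error, or run the task itself. Blocking (as `SyncQueue` does) slows producers down automatically, while rejecting keeps producers responsive at the cost of pushing the overload decision back to them.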

Implementing the thread pool model in C++11

1. GitHub sample code address

2. Implementation code

- SyncQueue.hpp

```cpp
#ifndef SYNC_QUEUE_HPP
#define SYNC_QUEUE_HPP

#include <condition_variable>
#include <cstddef>
#include <list>
#include <mutex>
#include <utility>

using namespace std;

// Bounded, thread-safe FIFO queue: the synchronous service layer calls Put,
// the worker threads of the asynchronous service layer call Take.
template <typename T>
class SyncQueue {
public:
    explicit SyncQueue(std::size_t maxSize) : m_maxSize(maxSize), m_needStop(false) {}

    // Block while the queue is full (upper-limit control), then enqueue.
    void Put(const T& x) { Add(x); }

    void Put(T&& x) { Add(std::move(x)); }

    // Move every queued task into `list`; returns without touching `list` after Stop().
    void Take(std::list<T>& list) {
        std::unique_lock<std::mutex> locker(m_mutex);
        m_notEmpty.wait(locker, [this] { return m_needStop || NotEmpty(); });
        if (m_needStop)
            return;
        list = std::move(m_queue);
        m_queue.clear();         // a moved-from list is only guaranteed to be valid, so empty it explicitly
        m_notFull.notify_all();  // the queue is now empty, so every blocked producer may continue
    }

    // Take a single task; returns without touching `t` after Stop().
    void Take(T& t) {
        std::unique_lock<std::mutex> locker(m_mutex);
        m_notEmpty.wait(locker, [this] { return m_needStop || NotEmpty(); });
        if (m_needStop)
            return;
        t = std::move(m_queue.front());
        m_queue.pop_front();
        m_notFull.notify_one();  // one slot freed: wake one blocked producer
    }

    // Release every thread blocked in Put or Take; later calls return immediately.
    void Stop() {
        {
            std::lock_guard<std::mutex> locker(m_mutex);
            m_needStop = true;
        }
        m_notFull.notify_all();
        m_notEmpty.notify_all();
    }

    bool Empty() {
        std::lock_guard<std::mutex> locker(m_mutex);
        return m_queue.empty();
    }

    bool Full() {
        std::lock_guard<std::mutex> locker(m_mutex);
        return m_queue.size() >= m_maxSize;
    }

    std::size_t Size() {
        std::lock_guard<std::mutex> locker(m_mutex);
        return m_queue.size();
    }

    // Same as Size(): the size is read under the lock.
    std::size_t Count() { return Size(); }

private:
    // Both helpers must be called with m_mutex held.
    bool NotFull() const { return m_queue.size() < m_maxSize; }

    bool NotEmpty() const { return !m_queue.empty(); }

    template <typename F>
    void Add(F&& x) {
        std::unique_lock<std::mutex> locker(m_mutex);
        m_notFull.wait(locker, [this] { return m_needStop || NotFull(); });
        if (m_needStop)
            return;
        m_queue.push_back(std::forward<F>(x));
        m_notEmpty.notify_one();  // one new task: wake one waiting worker
    }

    std::list<T> m_queue;                // buffer
    std::mutex m_mutex;                  // guards m_queue and m_needStop
    std::condition_variable m_notEmpty;  // signalled after a task is added
    std::condition_variable m_notFull;   // signalled after tasks are removed
    std::size_t m_maxSize;               // maximum size of the synchronous queue
    bool m_needStop;                     // stop flag
};

#endif // SYNC_QUEUE_HPP
```
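`SyncQueue::Take` and `Add` use the predicate overload of `std::condition_variable::wait`, which is specified as equivalent to `while (!pred()) wait(lock);`. Re-checking the condition after each wakeup makes spurious wakeups harmless, and because `Stop()` changes `m_needStop` under the mutex before notifying, a waiting thread cannot miss the stop request. The sketch below writes that loop out by hand; `WaitState`, `TakeOne` and `StopReleasesWaiter` are hypothetical names, and an integer counter stands in for the queue.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <thread>

// Hypothetical shared state mirroring SyncQueue's m_needStop and NotEmpty().
struct WaitState {
    std::mutex mutex;
    std::condition_variable notEmpty;
    int items = 0;
    bool needStop = false;
};

// Returns true if an item was taken, false if released by a stop request.
bool TakeOne(WaitState& s) {
    std::unique_lock<std::mutex> lock(s.mutex);
    // Hand-written equivalent of
    //   s.notEmpty.wait(lock, [&] { return s.needStop || s.items > 0; });
    // The condition is re-checked after every wakeup, spurious or not.
    while (!(s.needStop || s.items > 0))
        s.notEmpty.wait(lock);
    if (s.needStop) return false;  // same early return as SyncQueue::Take
    --s.items;
    return true;
}

// Starts a consumer with nothing to take, then requests a stop.
bool StopReleasesWaiter() {
    WaitState s;
    bool result = true;
    std::thread consumer([&] { result = TakeOne(s); });
    {
        std::lock_guard<std::mutex> lock(s.mutex);  // change state under the mutex...
        s.needStop = true;
    }
    s.notEmpty.notify_all();                        // ...then wake every waiter
    consumer.join();
    return result;  // false: the consumer left because of the stop flag
}
```

Whether the consumer starts waiting before or after the flag is set, it returns `false`: in the first case the notification wakes it, in the second the predicate is already true and it never sleeps.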

- Thread pool: ThreadPool.hpp

```cpp
#ifndef THREAD_POOL_HPP
#define THREAD_POOL_HPP

#include <atomic>
#include <functional>
#include <list>
#include <memory>
#include <mutex>
#include <thread>
#include <utility>

#include "SyncQueue.hpp"

const int MaxTaskCount = 100;  // upper limit of the synchronous queue

template <typename R = void>
class ThreadPool {
public:
    using Task = std::function<R()>;

    explicit ThreadPool(int numThreads = std::thread::hardware_concurrency())
        : m_queue(MaxTaskCount) {
        Start(numThreads);
    }

    ~ThreadPool() {
        Stop();  // actively stop the thread pool if it has not been stopped yet
    }

    void Stop() {
        // Ensure StopThreadGroup runs only once, even if several threads call Stop
        std::call_once(m_flag, [this] { StopThreadGroup(); });
    }

    void AddTask(Task&& task) { m_queue.Put(std::move(task)); }

    void AddTask(const Task& task) { m_queue.Put(task); }

    // Bind a callable and its arguments into a Task, e.g. AddTask(add_fun2, 1).
    // The arguments are copied into the task.
    template <typename Fun, typename Arg, typename... Args>
    void AddTask(Fun&& f, Arg&& arg, Args&&... args) {
        Task task = std::bind(std::forward<Fun>(f), std::forward<Arg>(arg),
                              std::forward<Args>(args)...);
        m_queue.Put(std::move(task));
    }

    // Called by the constructor; calling it again adds further worker threads.
    void Start(int numThreads) {
        m_running = true;
        for (int i = 0; i < numThreads; ++i) {
            m_threadgroup.push_back(
                std::make_shared<std::thread>(&ThreadPool::RunInThread, this));
        }
    }

private:
    void RunInThread() {
        while (m_running) {
            // Take one task at a time so that idle workers can pick up the remaining
            // tasks in parallel (taking the whole list would make one worker run every
            // queued task serially). Take returns without a task after Stop().
            Task task;
            m_queue.Take(task);
            if (!m_running)
                return;
            if (task)
                task();
        }
    }

    void StopThreadGroup() {
        m_running = false;  // clear the flag first so that woken workers see it and exit
        m_queue.Stop();     // wake workers blocked in Take and producers blocked in Put
        for (auto& thread : m_threadgroup) {  // wait for every worker to finish
            if (thread && thread->joinable())
                thread->join();
        }
        m_threadgroup.clear();
    }

    std::list<std::shared_ptr<std::thread>> m_threadgroup;  // threads processing tasks
    SyncQueue<Task> m_queue;                                 // synchronous queue
    std::atomic_bool m_running{false};                       // run flag
    std::once_flag m_flag;                                   // makes Stop() idempotent
};

#endif // THREAD_POOL_HPP
```
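The variadic `AddTask` overload packs a callable and its arguments into a zero-argument `Task` with `std::bind`, and `std::function<R()>` type-erases whatever callable it receives. Two consequences are worth seeing in isolation: arguments are copied when the task is created, and a `std::function<void()>` accepts callables that return a value and simply discards the result. The helpers `MakeTask`, `AddOne` and `RunMixedTasks` are hypothetical, written only for this illustration.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

int AddOne(int n) { return n + 1; }

// Hypothetical helper mirroring the variadic AddTask: bind now, call later.
// std::bind stores decayed copies of the callable and of every argument.
template <typename R, typename Fun, typename... Args>
std::function<R()> MakeTask(Fun&& f, Args&&... args) {
    return std::bind(std::forward<Fun>(f), std::forward<Args>(args)...);
}

// Runs heterogeneous tasks through one std::function<void()> container,
// the way ThreadPool<> stores them in its queue; returns the accumulated total.
int RunMixedTasks() {
    int total = 0;
    std::vector<std::function<void()>> tasks;
    tasks.push_back(MakeTask<void>([&total](int n) { total += n; }, 5));
    tasks.push_back(MakeTask<void>(AddOne, 1));  // int result is discarded
    tasks.push_back([&total] { total += 10; });
    for (auto& task : tasks) task();
    return total;
}
```

If a task really needs to refer to a caller's variable, `std::ref` can be passed to `std::bind`, but the referenced object must then outlive the task, which is easy to get wrong once the task runs on another thread.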

- Test

```cpp
#include <chrono>
#include <functional>
#include <iostream>
#include <string>
#include <thread>

#include "ThreadPool.hpp"

using namespace std;

int add_fun1(int n) {
    std::cout << "commonfun:add_fun1:n=" << n << std::endl;
    return n + 1;
}

double add_fun2(int n) {
    std::cout << "commonfun:add_fun2:n=" << n << std::endl;
    return n + 1.0;
}

void TestThdPool() {
    {
        // Two worker threads. The constructor already calls Start, so an extra
        // pool.Start(2) here would add two more workers.
        ThreadPool<> pool(2);
        std::thread thd1([&pool] {
            for (int i = 0; i < 1; i++) {
                auto thdId = this_thread::get_id();
                pool.AddTask([thdId] {
                    cout << "lambda expression: synchronous-layer thread 1 ID: " << thdId << endl;
                });
            }
        });
        std::thread thd2([&pool] {
            auto thdId = this_thread::get_id();
            pool.AddTask([thdId] {
                cout << "lambda expression: synchronous-layer thread 2 ID: " << thdId << endl;
            });
            std::function<int()> f = std::bind(add_fun1, 1);
            pool.AddTask(f);  // converted to std::function<void()>; the int result is discarded
            pool.AddTask(add_fun2, 1);
        });
        this_thread::sleep_for(std::chrono::seconds(2));  // give the workers time to run the tasks
        pool.Stop();  // tasks still queued at this point are discarded
        thd1.join();
        thd2.join();
    }
    {
        ThreadPool<int> pool(1);
        std::thread thd1([&pool] {
            auto f = std::bind(add_fun1, 1);
            pool.AddTask(f);
        });
        this_thread::sleep_for(std::chrono::seconds(2));
        pool.Stop();
        thd1.join();
    }
    {
        ThreadPool<double> pool(1);
        std::thread thd1([&pool] {
            pool.AddTask(add_fun2, 1);
        });
        this_thread::sleep_for(std::chrono::seconds(2));
        pool.Stop();
        thd1.join();
    }
}

int main() {
    TestThdPool();
    return 0;
}
```
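The test relies on `sleep_for` for two seconds and hopes every task has run before `Stop()`, which discards tasks still in the queue. A more deterministic option, sketched here under the assumption that each submitted task can be wrapped to report its own completion, is a small completion counter in the spirit of C++20 `std::latch`, built from C++11 primitives. `TaskCounter` and `RunAndWait` are hypothetical names, and plain threads stand in for pool workers so the sketch is self-contained.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical completion counter: each task calls Done() when it finishes,
// and the test thread calls WaitFor(n) instead of sleeping for a fixed time.
class TaskCounter {
public:
    void Done() {
        {
            std::lock_guard<std::mutex> lock(m_mutex);
            ++m_done;
        }
        m_cv.notify_all();  // let WaitFor re-check its condition
    }

    void WaitFor(int n) {
        std::unique_lock<std::mutex> lock(m_mutex);
        m_cv.wait(lock, [this, n] { return m_done >= n; });
    }

    int Count() {
        std::lock_guard<std::mutex> lock(m_mutex);
        return m_done;
    }

private:
    std::mutex m_mutex;
    std::condition_variable m_cv;
    int m_done = 0;
};

// Usage sketch with plain threads standing in for pool workers.
int RunAndWait(int taskCount) {
    TaskCounter counter;
    std::vector<std::thread> workers;
    for (int i = 0; i < taskCount; ++i)
        workers.emplace_back([&counter] { counter.Done(); });
    counter.WaitFor(taskCount);  // returns as soon as every task has reported in
    int seen = counter.Count();
    for (auto& t : workers) t.join();
    return seen;
}
```

With the pool, each task passed to `AddTask` would call `counter.Done()` as its last statement, and the test would call `counter.WaitFor(n)` before `pool.Stop()`, so it neither waits longer than necessary nor stops the pool too early.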