Hadoop startup error

ERROR: Cannot set priority of datanode process

Cause: the cluster (NameNode) had not been formatted. Format it:

./bin/hdfs namenode -format
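Because formatting wipes existing HDFS metadata, it can help to check first whether the NameNode directory has already been formatted. The sketch below assumes the usual layout in which a formatted NameNode directory contains current/VERSION; the function name and the example path are hypothetical, and the directory should be whatever dfs.namenode.name.dir points to in your setup.

```shell
#!/bin/sh
# Returns 0 if the given NameNode storage directory looks formatted,
# i.e. it contains current/VERSION; returns non-zero otherwise.
# The directory to pass is the value of dfs.namenode.name.dir (assumption:
# you look it up in your own hdfs-site.xml).
namenode_is_formatted() {
    [ -f "$1/current/VERSION" ]
}

# Example (hypothetical path):
# if namenode_is_formatted /data/hadoop/dfs/name; then
#     echo "already formatted, skipping"
# else
#     ./bin/hdfs namenode -format
# fi
```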

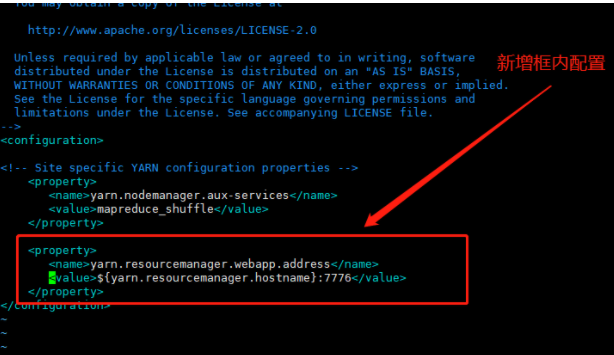

Modify the default YARN port

After changing it, restart YARN, because the change is not hot-reloaded

Database

Error 1045 (access denied)

[root@test ~]# systemctl stop mariadb ## Stop MariaDB

[root@test ~]# mysqld_safe --skip-grant-tables & ## Start the server with the grant tables skipped

[root@test ~]# mysql ## Log in directly, no password needed

MariaDB [(none)]> update mysql.user set Password=password('123') where User='root'; ## Change the root password

MariaDB [(none)]> quit

[root@test ~]# ps aux | grep mysql ## List all mysql processes

[root@test ~]# kill -9 9334 ## Kill the process (use the PIDs from the ps output)

[root@test ~]# kill -9 9489 ## Kill the process

[root@test ~]# systemctl start mariadb ## Start MariaDB again

[root@test ~]# mysql -uroot -p123 ## Log in with the new password

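Change a regular user's password after logging in

MariaDB [(none)]> update mysql.user set Password=password('123') where User='white';

MariaDB [(none)]> flush privileges; ## Reload the grant tables so the direct update takes effect

Change a regular user's permissions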

MariaDB [mysql]> grant all on *.* to white@'%'; ##User authorization

MariaDB [mysql]> show grants for white@'%'; ##View user rights

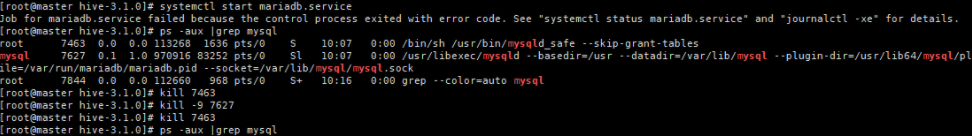

An error occurs when starting or restarting the database

Job for mariadb.service failed because the control process exited with error code. See "systemctl status mariadb.service" and "journalctl -xe" for details.

Solution

- Run ps aux | grep mysql to find the leftover process, kill it, then start the service again

kill -9 pid
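The find-and-kill step can be sketched as a small helper that pulls the PIDs out of the ps output, so they do not have to be copied by hand. The function name mysql_pids is made up for this illustration, and xargs -r is a GNU extension, so this assumes a typical Linux host.

```shell
#!/bin/sh
# Reads `ps aux` output on stdin and prints the PID (second column) of every
# line that mentions mysql, skipping the grep/awk helper processes themselves.
mysql_pids() {
    awk '/mysql/ && !/awk/ && !/grep/ { print $2 }'
}

# Example:
# ps aux | mysql_pids                    # inspect the PIDs first
# ps aux | mysql_pids | xargs -r kill    # try a plain kill (SIGTERM) first
# ps aux | mysql_pids | xargs -r kill -9 # force kill, as in the note above
```

Trying a plain kill before kill -9 gives the server a chance to shut down cleanly.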

HBase

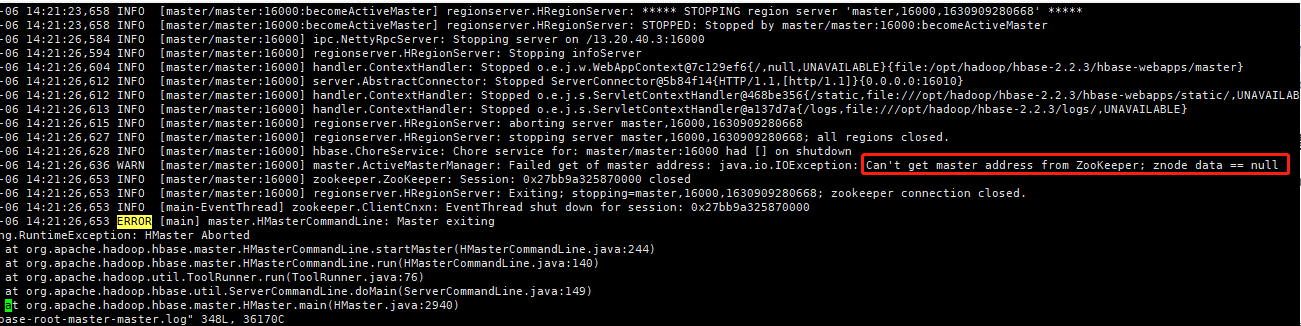

This problem may be caused by an unstable ZooKeeper, for example when the virtual machine is suspended often. The following error appears when running the list command in the HBase shell. There are several solutions; the first one was used here.

Can't get master address from ZooKeeper; znode data == null

Solution 1: restart HBase

stop-hbase.sh

start-hbase.sh

Solution 2:

(1) Cause: the user running HBase (ZooKeeper) cannot write to the ZooKeeper data files, so the znode data is empty. Fix: in hbase-site.xml, set the ZooKeeper data directory to a directory that the user running HBase is allowed to write to, for example:

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/home/user88/zk_data</value>

</property>
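Whether the chosen data directory is really writable can be checked before restarting. This is only a sketch: the function name is hypothetical, and it has to be run as the same user that starts HBase for the result to mean anything.

```shell
#!/bin/sh
# Succeeds only if the directory exists and the current user can write to it.
# Run it as the user that starts HBase (and its managed ZooKeeper).
zk_datadir_writable() {
    [ -d "$1" ] && [ -w "$1" ]
}

# Example, using the path from the property above:
# zk_datadir_writable /home/user88/zk_data || echo "fix ownership or pick another directory"
```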

(2) In hbase-site.xml:

<property>

<name>hbase.rootdir</name>

<value>hdfs://hadoop1:49002/hbase</value>

</property>

The host (IP) and port set in hbase.rootdir are very important and must point at the NameNode.

Compare them with fs.defaultFS in core-site.xml. The two should normally agree, whereas in this example the ports differ (49002 versus 9000):

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop1:9000</value>

</property>
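A quick way to compare the host and port of the two values is to strip each URI down to its host:port part. The helper names below are invented for this sketch; on a live node the configured fs.defaultFS can be read with hdfs getconf -confKey fs.defaultFS.

```shell
#!/bin/sh
# Prints the host:port part of an hdfs:// URI.
hdfs_authority() {
    printf '%s\n' "$1" | sed -E 's#^hdfs://([^/]+).*$#\1#'
}

# Succeeds when both URIs name the same host:port.
same_namenode() {
    [ "$(hdfs_authority "$1")" = "$(hdfs_authority "$2")" ]
}

# Example with the values from the two properties above; the warning is
# printed because 49002 and 9000 differ:
# same_namenode hdfs://hadoop1:49002/hbase hdfs://hadoop1:9000 \
#     || echo "hbase.rootdir and fs.defaultFS point at different addresses"
```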

Solution 3: format the NameNode, which is not repeated here. Treat this as a last resort and do not format unless nothing else works.

Turn off the virtual memory check

In yarn-site.xml:

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

ZooKeeper

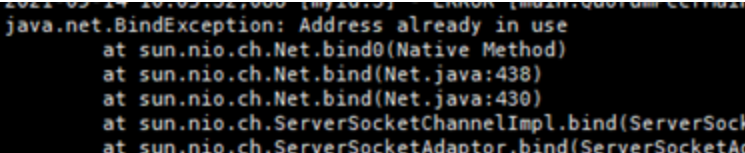

The message says that the port is already in use.

When this error is reported, check that the myid file matches the server entries in zoo.cfg and delete any extra spaces. After stopping ZooKeeper, kill any process that is still running.

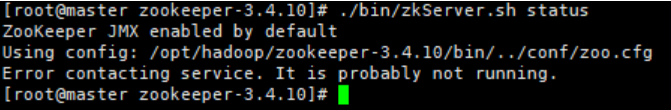

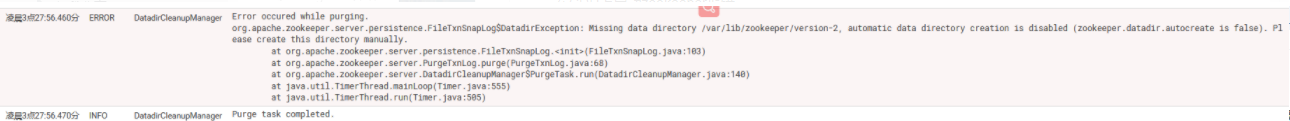
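The myid check described above can be sketched as a small script. It assumes the standard zoo.cfg convention of server.N=host:port:port lines with a myid file that holds just N; the function name is made up for this example.

```shell
#!/bin/sh
# Succeeds when the myid file holds exactly one numeric id (no spaces, no
# extra text) and zoo.cfg has a matching server.<id>= line.
# Usage: myid_matches /path/to/zoo.cfg /path/to/dataDir/myid
myid_matches() {
    id=$(cat "$2") || return 1
    # Reject empty content or anything that is not a digit, including stray spaces.
    case "$id" in
        ''|*[!0-9]*) return 1 ;;
    esac
    grep -Eq "^server\.$id=" "$1"
}

# Example (paths are placeholders for your own installation):
# myid_matches conf/zoo.cfg /path/to/dataDir/myid || echo "myid does not match zoo.cfg"
```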

Error starting ZooKeeper on CDH

Missing data directory /var/lib/zookeeper/version-2, automatic data directory creation is disabled (zookeeper.datadir.autocreate is false). Please create this directory manually

Solution: create the data directory and its version-2 subdirectory under the ZooKeeper directory, and grant full permissions on them
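Creating the missing directory might look like the sketch below. Note that it swaps in a narrower approach than the note: instead of granting full permissions, it optionally hands the directory to a given owner. The function name is hypothetical, and zookeeper:zookeeper as the service account is an assumption about the CDH installation.

```shell
#!/bin/sh
# Creates <base>/version-2 if it is missing. Ownership is only changed when
# an owner is passed, since chown normally needs root.
# Usage: make_zk_datadir /var/lib/zookeeper [owner:group]
make_zk_datadir() {
    mkdir -p "$1/version-2" || return 1
    if [ -n "${2:-}" ]; then
        chown -R "$2" "$1" || return 1
    fi
}

# Example (owner is an assumption, run as root):
# make_zk_datadir /var/lib/zookeeper zookeeper:zookeeper
```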

CDH environment

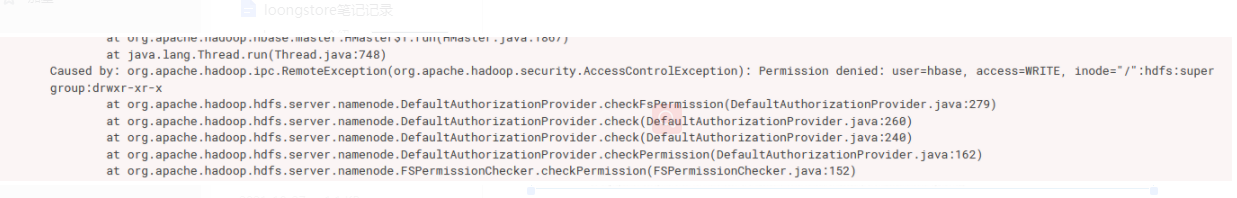

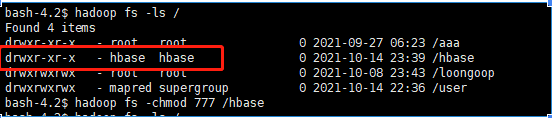

Error starting HBase on CDH

Permission denied: user=hbase, access=WRITE, inode="/":hdfs:supergroup:drwxr-xr-x

Solution

Step 1: run su hdfs to switch to the hdfs user.

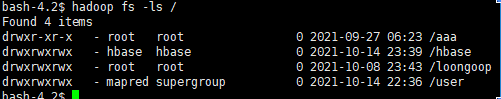

Step 2: run hadoop fs -ls / to check the permissions.

Step 3: modify the permissions

hadoop fs -chmod 777 /hbase

If this reports an error, first create the items shown below, then restart the service

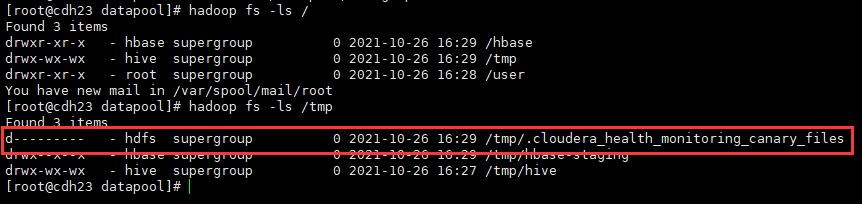

Hive Metastore canary: failed to create the hue HDFS home directory

Manually create cloudera_health_monitoring_canary_files

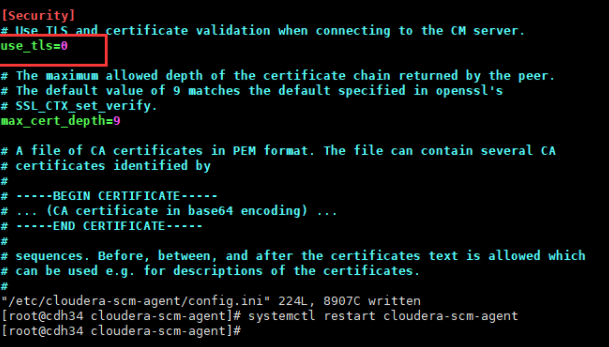

vim /etc/cloudera-scm-agent/config.ini

Change the value to 0 on all nodes, then find the matching process and kill it:

ps aux | grep super

kill -9 pid

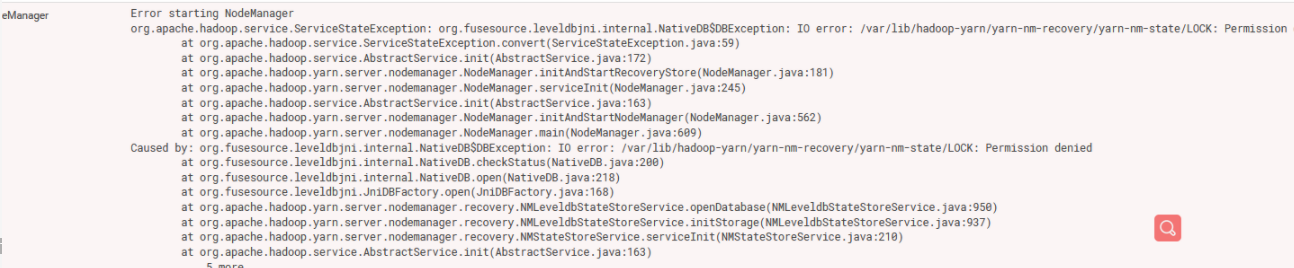

YARN startup permission error

IO error: /var/lib/hadoop-yarn/yarn-nm-recovery/yarn-nm-state/LOCK: Permission denied

Solution: grant full permissions on the /var/lib/hadoop-yarn directory

Spark

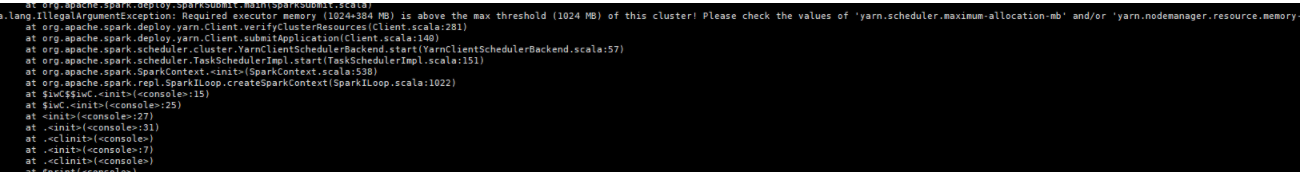

Running spark-shell --master yarn reports an error:

Required executor memory (1024+384 MB) is above the max threshold (1024 MB) of this cluster! Please check the values of 'yarn.scheduler.maximum-allocation-mb' and/or 'yarn.nodemanager.resource.memory-mb'.

Open YARN in the web UI, click Configuration, search for "container memory", and raise the maximum container memory to 3 GB and the minimum to 2 GB
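The 1024+384 in the error message is the executor memory plus Spark's memory overhead. As a rough sketch, assuming Spark's documented default of an overhead equal to 10% of executor memory with a 384 MB minimum (the function name is invented here), the container size that YARN must allow can be estimated like this:

```shell
#!/bin/sh
# Prints the container size in MB requested for one executor:
# executor memory plus overhead, where overhead = max(384, 10% of executor memory).
required_container_mb() {
    mem=$1
    overhead=$((mem / 10))
    if [ "$overhead" -lt 384 ]; then
        overhead=384
    fi
    echo $((mem + overhead))
}

# required_container_mb 1024   # prints 1408, the 1024+384 from the error message
```

Any maximum container memory of at least this value, such as the 3 GB chosen above, lets the default 1 GB executor start.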