BlockingQueue introduction

A blocking queue, as its name implies, is a queue. Threads share the queue: data is put in at one end and taken out at the other.

When the queue is empty, an operation that gets an element from the queue blocks.

When the queue is full, an operation that adds an element to the queue blocks.

A thread that tries to get an element from an empty queue blocks until another thread inserts an element.

A thread that tries to add an element to a full queue blocks until another thread removes one or more elements, or empties the queue, so that space is free again.

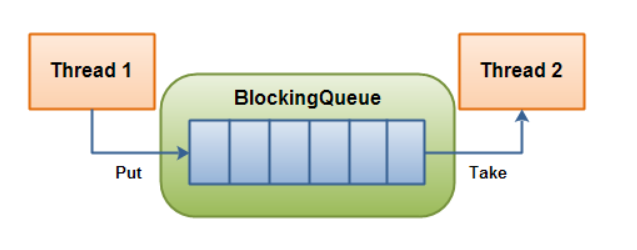

The figure above shows the role a blocking queue plays as a data structure:

Thread 1 adds elements to the blocking queue, and thread 2 removes elements from the blocking queue

There are two common queues:

First in, first out (FIFO): the element inserted first is also the first to leave the queue, just like people waiting in line. To some extent, this kind of queue reflects fairness.

Last in, first out (LIFO): the element inserted last is the first to leave the queue. This kind of queue gives priority to the most recent events (a stack).

In multithreading, blocking means that a thread is suspended under certain conditions. Once the condition is satisfied, the suspended thread is woken up automatically.

The advantage of BlockingQueue is that we don't need to care when we need to block threads and wake up threads, because all this is done by BlockingQueue.

Before the java.util.concurrent package was released, every programmer working in a multithreaded environment had to handle these details by hand while also keeping efficiency and thread safety in mind, which made programs considerably more complex.

In the java.util.concurrent package, BlockingQueue solves the problem of how to pass data between threads efficiently and safely. These efficient, thread-safe queue classes make it much easier to build high-quality multithreaded programs quickly.

In a multithreaded environment, a queue is an easy way to share data. The classic example is the producer-consumer model. Suppose we have several producer threads and several consumer threads. If the producers hand their prepared data to the consumers through a queue, the data-sharing problem between them is solved. But what happens if producers and consumers process data at different speeds for a while? Ideally, if producers generate data faster than consumers can handle it, then once the backlog reaches a certain size the producers must pause (the producer threads block) until the consumers have worked through the accumulated data, and vice versa.

When there is no data in the queue, all consumer threads are blocked (suspended) automatically until data is put into the queue.

When the queue is full, all producer threads are blocked (suspended) automatically until a slot becomes free, at which point a waiting thread is woken up automatically.

A queue is a container for storing data
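
The producer-consumer behavior described above can be sketched as follows. This is a minimal illustration, not a production pattern: the class name `ProducerConsumerDemo`, the method `run`, and the capacity of 2 are assumptions chosen for the example. The small capacity forces the producer to block in `put` whenever it gets ahead of the consumer.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical demo class; the name and the capacity of 2 are illustrative.
class ProducerConsumerDemo {

    // Runs one producer and one consumer over a small bounded queue and
    // returns the items in the order the consumer received them.
    static List<Integer> run(int itemCount) {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(2);
        List<Integer> consumed = new ArrayList<>();

        Thread producer = new Thread(() -> {
            try {
                for (int i = 0; i < itemCount; i++) {
                    queue.put(i); // blocks while the queue is full
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < itemCount; i++) {
                    consumed.add(queue.take()); // blocks while the queue is empty
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        producer.start();
        consumer.start();
        try {
            producer.join();
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return consumed;
    }

    public static void main(String[] args) {
        System.out.println(run(5)); // prints [0, 1, 2, 3, 4]
    }
}
```

Neither thread contains any explicit wait or notify logic; the queue suspends and resumes them as needed.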

BlockingQueue core methods

public interface BlockingQueue<E> extends Queue<E> {

//Inserts the given element into the queue. Returns true on success; throws IllegalStateException if no space is available. For a capacity-restricted queue, offer() is generally preferable.

boolean add(E e);

//Inserts the given element into the queue. Returns true on success and false if the queue is full. e must not be null, otherwise a NullPointerException is thrown. (This method does not block the calling thread.)

boolean offer(E e);

//Inserts the element into the queue, blocking if necessary until space becomes available.

void put(E e) throws InterruptedException;

//Inserts the given element, waiting up to the given timeout for space to become available. Returns true on success and false if the timeout elapses first.

boolean offer(E e, long timeout, TimeUnit unit)

throws InterruptedException;

//Retrieves and removes the head of the queue, blocking if necessary until an element becomes available.

E take() throws InterruptedException;

//Retrieves and removes the head of the queue, waiting up to the given timeout for an element to become available. Returns null if the timeout elapses first.

E poll(long timeout, TimeUnit unit)

throws InterruptedException;

//Returns the number of additional elements the queue can accept without blocking.

int remainingCapacity();

//Removes the specified value from the queue.

boolean remove(Object o);

//Determine whether the value is in the queue.

public boolean contains(Object o);

//Removes all available elements from the queue and adds them to the given collection. This can be more efficient than polling repeatedly, because the lock does not have to be acquired and released once per element.

int drainTo(Collection<? super E> c);

//Removes at most maxElements available elements from the queue and adds them to the given collection. As above, this avoids acquiring and releasing the lock once per element.

int drainTo(Collection<? super E> c, int maxElements);

}
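
As a rough illustration of `remainingCapacity` and the bounded form of `drainTo` from the interface above, here is a small sketch. The class `DrainToDemo` and its helper `drainBatch` are hypothetical names introduced only for this example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical demo class showing batch removal with drainTo.
class DrainToDemo {

    // Moves at most max elements from the queue into a new list, in queue order.
    static List<String> drainBatch(BlockingQueue<String> queue, int max) {
        List<String> batch = new ArrayList<>();
        queue.drainTo(batch, max);
        return batch;
    }

    public static void main(String[] args) {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(5);
        queue.offer("a");
        queue.offer("b");
        queue.offer("c");
        System.out.println(queue.remainingCapacity()); // prints 2
        System.out.println(drainBatch(queue, 2));      // prints [a, b]
        System.out.println(queue.remainingCapacity()); // prints 4
    }
}
```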

Common methods

| Operation | Throws exception | Returns special value | Blocks | Times out |
|---|---|---|---|---|
| Insert | add(e) | offer(e) | put(e) | offer(e, time, unit) |
| Remove | remove() | poll() | take() | poll(time, unit) |
| Examine | element() | peek() | Not available | Not available |

Method description:

Throw exception:

When the blocking queue is full, inserting elements into the queue will throw an IllegalStateException("Queue full") exception.

When the blocking queue is empty, NoSuchElementException will be thrown when getting elements from the queue.

Return special value:

The insertion method will return whether it is successful, true for success and false for failure.

The removal method takes an element from the queue and returns it. If the queue is empty, it returns null.

Blocks:

When the blocking queue is full and a producer thread keeps putting elements into it, the queue blocks the producer thread until space becomes available or the thread is interrupted.

When the blocking queue is empty and a consumer thread tries to take an element from it, the queue blocks the consumer thread until an element becomes available or the thread is interrupted.

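Times out:

When the blocking queue is full, the queue blocks the producer thread for at most the given time. If space does not become available within that time, the call gives up and returns false. Likewise, a timed poll on an empty queue returns null once the timeout elapses.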

Examples

Throw exception

add (e)

remove()

element()

@Test

public void test1() {

//Create a blocking queue with a capacity of 3

BlockingQueue<String> blockingQueue = new ArrayBlockingQueue<>(3);

//insert

System.out.println(blockingQueue.add("a"));

System.out.println(blockingQueue.add("b"));

System.out.println(blockingQueue.add("c"));

//inspect

System.out.println(blockingQueue.element());

System.out.println(blockingQueue);

}


Operation results:

true

true

true

a

[a, b, c]

@Test

public void test2() {

//Create a blocking queue with a capacity of 3

BlockingQueue<String> blockingQueue = new ArrayBlockingQueue<>(3);

//insert

System.out.println(blockingQueue.add("a"));

System.out.println(blockingQueue.add("b"));

System.out.println(blockingQueue.add("c"));

//inspect

System.out.println(blockingQueue.element());

System.out.println(blockingQueue);

//Insert fourth element

System.out.println(blockingQueue.add("d"));

}

Operation results:

true

true

true

a

[a, b, c]

java.lang.IllegalStateException: Queue full

@Test

public void test3() {

//Create a blocking queue with a capacity of 3

BlockingQueue<String> blockingQueue = new ArrayBlockingQueue<>(3);

//insert

System.out.println(blockingQueue.add("a"));

System.out.println(blockingQueue.add("b"));

System.out.println(blockingQueue.add("c"));

System.out.println(blockingQueue);

System.out.println(blockingQueue.remove());

System.out.println(blockingQueue.remove());

System.out.println(blockingQueue.remove());

System.out.println(blockingQueue.remove());

}

Operation results:

true

true

true

[a, b, c]

a

b

c

java.util.NoSuchElementException

Return special value

offer(e)

poll()

peek()

@Test

public void test4() {

//Create a blocking queue with a capacity of 3

BlockingQueue<String> blockingQueue = new ArrayBlockingQueue<>(3);

//insert

System.out.println(blockingQueue.offer("a"));

System.out.println(blockingQueue.offer("b"));

System.out.println(blockingQueue.offer("c"));

System.out.println(blockingQueue.offer("d"));

}

Operation results:

true

true

true

false

@Test

public void test4() {

//Create a blocking queue with a capacity of 3

BlockingQueue<String> blockingQueue = new ArrayBlockingQueue<>(3);

//insert

System.out.println(blockingQueue.offer("a"));

System.out.println(blockingQueue.offer("b"));

System.out.println(blockingQueue.offer("c"));

System.out.println(blockingQueue.offer("d"));

System.out.println(blockingQueue.poll());

System.out.println(blockingQueue.poll());

System.out.println(blockingQueue.poll());

System.out.println(blockingQueue.poll());

}

Operation results:

true

true

true

false

a

b

c

null
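
The table above also lists `peek()` in this group, which the tests do not exercise. A minimal sketch of its behavior follows; `PeekDemo` and `describe` are hypothetical names used only for illustration. Unlike `element()`, `peek()` returns null on an empty queue instead of throwing, and it never removes the head.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical demo class showing peek() on an empty and a non-empty queue.
class PeekDemo {

    // Returns "<peek on empty>,<peek after offer>,<size after peek>".
    static String describe() {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(3);
        String onEmpty = String.valueOf(queue.peek()); // "null": nothing to inspect
        queue.offer("a");
        String head = queue.peek();                    // "a": head is inspected, not removed
        return onEmpty + "," + head + "," + queue.size();
    }

    public static void main(String[] args) {
        System.out.println(describe()); // prints null,a,1
    }
}
```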

Blocking

put(e)

take()

@Test

public void test5() {

//Create a blocking queue with a capacity of 3

BlockingQueue<String> blockingQueue = new ArrayBlockingQueue<>(3);

//insert

try {

blockingQueue.put("a");

blockingQueue.put("b");

blockingQueue.put("c");

blockingQueue.put("d");

} catch (InterruptedException e) {

e.printStackTrace();

}

}

The fourth put cannot complete because the queue is full, so the thread blocks until space becomes available.

@Test

public void test5() {

//Create a blocking queue with a capacity of 3

BlockingQueue<String> blockingQueue = new ArrayBlockingQueue<>(3);

//insert

try {

blockingQueue.put("a");

blockingQueue.put("b");

blockingQueue.put("c");

blockingQueue.take();

blockingQueue.take();

blockingQueue.take();

blockingQueue.take();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

By the time of the fourth take there are no elements left in the queue, so the thread blocks until an element becomes available.
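
The two tests above block forever because no other thread ever changes the queue. The following sketch shows a blocked `put` being released by a second thread. The names `BlockingPutDemo` and `run`, the capacity of 1, and the 200 ms delay are assumptions made for the example.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical demo class: a second thread frees a slot so a blocked put() can finish.
class BlockingPutDemo {

    // Returns the queue contents after the blocked put() has completed.
    static String run() {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(1);
        try {
            queue.put("a"); // the queue is now full

            Thread taker = new Thread(() -> {
                try {
                    Thread.sleep(200); // give the main thread time to block in put("b")
                    queue.take();      // removes "a" and frees a slot
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            taker.start();

            queue.put("b"); // blocks until the taker thread removes "a"
            taker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return queue.toString();
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints [b]
    }
}
```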

Timeout

offer(e,time,unit)

poll(time,unit)

@Test

public void test6() {

//Create a blocking queue with a capacity of 3

BlockingQueue<String> blockingQueue = new ArrayBlockingQueue<>(3);

//insert

try {

System.out.println(blockingQueue.offer("a"));

System.out.println(blockingQueue.offer("b"));

System.out.println(blockingQueue.offer("c"));

System.out.println(blockingQueue.offer("d",3, TimeUnit.SECONDS));//Exit after blocking for up to 3 seconds

} catch (InterruptedException e) {

e.printStackTrace();

}

}

Operation results:

true

true

true

false

The fourth offer blocks for 3 seconds and then returns false.

@Test

public void test6() {

//Create a blocking queue with a capacity of 3

BlockingQueue<String> blockingQueue = new ArrayBlockingQueue<>(3);

//insert

try {

System.out.println(blockingQueue.offer("a"));

System.out.println(blockingQueue.offer("b"));

System.out.println(blockingQueue.offer("c"));

System.out.println(blockingQueue.offer("d", 3, TimeUnit.SECONDS));//Exit after blocking for up to 3 seconds

System.out.println(blockingQueue.poll());

System.out.println(blockingQueue.poll());

System.out.println(blockingQueue.poll());

System.out.println(blockingQueue.poll(3, TimeUnit.SECONDS));

} catch (InterruptedException e) {

e.printStackTrace();

}

}

Operation results:

true

true

true

false

a

b

c

null

The fourth insertion blocks for 3 seconds and then fails, and the fourth removal also blocks for 3 seconds and then fails, for a total of about 6 seconds.

Common BlockingQueue

ArrayBlockingQueue

A blocking queue implemented on top of an array, which orders elements first in, first out (FIFO). Internally, ArrayBlockingQueue maintains a fixed-length array that holds the queued objects. This is a commonly used blocking queue. Besides the fixed-length array, ArrayBlockingQueue also keeps two int variables that mark the positions of the head and the tail of the queue within the array.

ArrayBlockingQueue uses the same lock object when a producer inserts data and when a consumer takes data, which means the two operations cannot truly run in parallel. This is the main difference from LinkedBlockingQueue. In principle, ArrayBlockingQueue could use separate locks so that producer and consumer operations run fully in parallel. Doug Lea may not have done so because inserting into and removing from an ArrayBlockingQueue is already so lightweight that a second lock would add complexity to the code without any performance benefit. Another clear difference between the two is that ArrayBlockingQueue does not create or destroy any extra object instances when inserting or removing elements, while LinkedBlockingQueue allocates an additional Node object for each insertion. In a system that processes large volumes of data concurrently over a long period, this makes a difference to GC pressure. When creating an ArrayBlockingQueue, we can also choose whether its internal lock is fair; by default it is non-fair.

Summary: a bounded blocking queue backed by an array (uses a lock).
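
The fairness option mentioned above is selected through the two-argument constructor. A minimal sketch, with `FairQueueDemo` and `run` as hypothetical names: fairness only affects the order in which waiting threads are served, so the FIFO order of elements and the capacity limit are the same as with the default non-fair queue.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical demo class: creating an ArrayBlockingQueue with a fair lock.
class FairQueueDemo {

    // Returns "<first polled>,<second polled>,<was third offer accepted>".
    static String run() {
        // The second argument selects the fair policy for the internal lock.
        BlockingQueue<String> fair = new ArrayBlockingQueue<>(2, true);
        fair.offer("first");
        fair.offer("second");
        boolean acceptedThird = fair.offer("third"); // false: the capacity of 2 is reached
        return fair.poll() + "," + fair.poll() + "," + acceptedThird;
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints first,second,false
    }
}
```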

LinkedBlockingQueue

Like ArrayBlockingQueue, the linked-list-based blocking queue maintains an internal data buffer (here built from a linked list). When a producer puts an item into the queue, the queue stores it and the producer returns immediately. Only when the buffer reaches its maximum capacity (which can be set through the LinkedBlockingQueue constructor) is the producer blocked, until a consumer takes an item from the queue and the producer thread is woken up. The consumer side works on the same principle in reverse. LinkedBlockingQueue handles concurrent data efficiently because it uses separate locks for the producer side and the consumer side. Under high concurrency, producers and consumers can therefore operate on the queue in parallel, which improves the concurrency of the queue as a whole.

ArrayBlockingQueue and LinkedBlockingQueue are the two most commonly used blocking queues. In general, these two classes are enough to handle producer-consumer problems between threads.

Summary: an optionally bounded blocking queue backed by a linked list (the default capacity is Integer.MAX_VALUE; uses locks).
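
The effect of the constructor's capacity argument can be checked directly. In this sketch the class `LinkedCapacityDemo` and its two helper methods are hypothetical names introduced for illustration.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical demo class comparing default and explicit capacity.
class LinkedCapacityDemo {

    // With the no-argument constructor the capacity is Integer.MAX_VALUE.
    static int defaultCapacity() {
        return new LinkedBlockingQueue<String>().remainingCapacity();
    }

    // With an explicit capacity of 1, the second offer is rejected.
    static boolean boundedQueueRejectsSecondOffer() {
        BlockingQueue<String> bounded = new LinkedBlockingQueue<>(1);
        bounded.offer("a");
        return !bounded.offer("b");
    }

    public static void main(String[] args) {
        System.out.println(defaultCapacity() == Integer.MAX_VALUE); // prints true
        System.out.println(boundedQueueRejectsSecondOffer());       // prints true
    }
}
```

Because the default is effectively unbounded, passing an explicit capacity is usually the safer choice when producers can outpace consumers.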

DelayQueue

An element in a DelayQueue can be taken from the queue only after its delay has expired. DelayQueue has no size limit, so operations that insert data (producers) never block; only operations that take data (consumers) can block.

Summary: an unbounded blocking queue of delayed elements, implemented on top of a PriorityQueue (uses a lock).
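
A short sketch of how elements become available only after their delay expires. The element type `DelayedTask`, the class `DelayQueueDemo`, and the delays of 100 ms and 300 ms are assumptions made for this example; elements of a DelayQueue must implement the `Delayed` interface.

```java
import java.util.concurrent.DelayQueue;
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class for DelayQueue.
class DelayQueueDemo {

    // Hypothetical element type: becomes available delayMillis after creation.
    static final class DelayedTask implements Delayed {
        final String name;
        final long readyAtNanos;

        DelayedTask(String name, long delayMillis) {
            this.name = name;
            this.readyAtNanos = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(delayMillis);
        }

        @Override
        public long getDelay(TimeUnit unit) {
            // Remaining delay; zero or negative means the element has expired.
            return unit.convert(readyAtNanos - System.nanoTime(), TimeUnit.NANOSECONDS);
        }

        @Override
        public int compareTo(Delayed other) {
            return Long.compare(getDelay(TimeUnit.NANOSECONDS), other.getDelay(TimeUnit.NANOSECONDS));
        }
    }

    // Returns "<was early poll empty>,<first taken>,<second taken>".
    static String run() {
        DelayQueue<DelayedTask> queue = new DelayQueue<>();
        queue.put(new DelayedTask("slow", 300)); // put never blocks: the queue is unbounded
        queue.put(new DelayedTask("fast", 100));
        DelayedTask early = queue.poll();        // null: no element has expired yet
        try {
            String first = queue.take().name;    // blocks until "fast" expires
            String second = queue.take().name;   // blocks until "slow" expires
            return (early == null) + "," + first + "," + second;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return "interrupted";
        }
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints true,fast,slow
    }
}
```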

PriorityBlockingQueue

A priority-based blocking queue. Priority is determined by the Comparator passed to the constructor; by default, elements are ordered by their natural ordering. Note that PriorityBlockingQueue never blocks data producers; it only blocks data consumers when there is no data to consume.

This calls for special care. Producers must not generate data faster than consumers can process it for a sustained period, otherwise all available heap memory will eventually be exhausted.

In the OpenJDK implementation, PriorityBlockingQueue synchronizes threads with a single ReentrantLock created with the default (non-fair) policy.

Summary: an unbounded blocking queue that orders elements by priority (uses a lock).
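
To illustrate the two ways of determining priority, the sketch below compares natural ordering with a Comparator passed to the constructor. `PriorityDemo` and `run` are hypothetical names, and the initial capacity of 11 is an arbitrary choice for the example.

```java
import java.util.Comparator;
import java.util.concurrent.PriorityBlockingQueue;

// Hypothetical demo class comparing natural ordering with a custom Comparator.
class PriorityDemo {

    // Returns "<head under natural ordering>,<head under reverse ordering>".
    static String run() {
        PriorityBlockingQueue<Integer> natural = new PriorityBlockingQueue<>();
        natural.add(3);
        natural.add(1);
        natural.add(2);

        // The initial capacity of 11 only sizes the backing array; the queue stays unbounded.
        PriorityBlockingQueue<Integer> reversed =
                new PriorityBlockingQueue<>(11, Comparator.<Integer>reverseOrder());
        reversed.add(3);
        reversed.add(1);
        reversed.add(2);

        return natural.poll() + "," + reversed.poll();
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints 1,3
    }
}
```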

SynchronousQueue

An unbuffered waiting queue, similar to direct trade without a middleman. It is a bit like producers and consumers in a primitive society: producers carry their goods to the market to sell them to the final consumers, and consumers must go to the market in person to find the producer of the goods they want. If either side cannot find a suitable partner, everyone simply waits in the market. Compared with a buffered BlockingQueue, there is no intermediate dealer (no buffer). With a dealer, producers sell their goods wholesale to the dealer and do not need to care which consumers the dealer eventually sells to. Because the dealer can keep goods in stock, the dealer model generally achieves higher throughput than direct trade (goods can be sold in batches). On the other hand, the dealer adds an extra step between producer and consumer, so the response time for an individual item may get worse.

A SynchronousQueue can be created in two modes, which behave differently.

The difference between fair mode and unfair mode:

• fair mode: waiting producers and consumers are kept in a FIFO queue, so the threads that have waited longest are served first, which gives an overall fair policy;

• unfair mode (the SynchronousQueue default): waiting producers and consumers are managed in LIFO order. In this mode, if producers and consumers work at different speeds, starvation can easily occur, that is, the data of some producers or consumers may never be processed.

Summary: a blocking queue that does not store elements; each insert must wait for a matching remove by another thread, and vice versa.
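
The direct hand-off can be sketched as follows. `HandoffDemo` and `run` are hypothetical names; the queue is created in fair mode by passing `true` to the constructor.

```java
import java.util.concurrent.SynchronousQueue;

// Hypothetical demo class: a SynchronousQueue hands each element directly to a taker.
class HandoffDemo {

    // Returns "<offer without consumer>,<element received>,<queue size>".
    static String run() {
        SynchronousQueue<String> queue = new SynchronousQueue<>(true); // true selects fair mode

        // No consumer is waiting, so a non-blocking offer fails immediately.
        boolean offeredWithoutConsumer = queue.offer("x");

        Thread producer = new Thread(() -> {
            try {
                queue.put("hello"); // blocks until another thread takes the element
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();

        try {
            String received = queue.take(); // meets the producer and receives "hello"
            producer.join();
            return offeredWithoutConsumer + "," + received + "," + queue.size();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return "interrupted";
        }
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints false,hello,0
    }
}
```

The reported size is always 0 because the queue never holds an element; it only pairs a producer with a consumer.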

LinkedTransferQueue

LinkedTransferQueue is an unbounded blocking TransferQueue backed by a linked list. Compared with other blocking queues, it adds the transfer and tryTransfer methods.

LinkedTransferQueue uses a preemptive mode. When a consumer thread takes an element and the queue is not empty, it takes the data directly. If the queue is empty, it creates a node whose element is null, adds it to the queue, and waits on that node. When a producer thread then arrives and finds a node with a null element, it does not enqueue its own node; instead it fills the element directly into the waiting node and wakes up the thread waiting on it. The awakened consumer thread takes the element and returns from the method it called.

Summary: an unbounded blocking queue backed by a linked list (lock-free, based on CAS operations).
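
A brief sketch of the two extra methods. `TransferDemo` and `run` are hypothetical names. `tryTransfer(e)` succeeds only if a consumer is already waiting, while `transfer(e)` blocks until a consumer has received the element.

```java
import java.util.concurrent.LinkedTransferQueue;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical demo class for tryTransfer and transfer.
class TransferDemo {

    // Returns "<tryTransfer result>,<size after tryTransfer>,<element received>".
    static String run() {
        LinkedTransferQueue<String> queue = new LinkedTransferQueue<>();

        // No consumer is waiting: tryTransfer returns false and does not enqueue "a".
        boolean handedOff = queue.tryTransfer("a");
        int sizeAfterTry = queue.size();

        AtomicReference<String> received = new AtomicReference<>();
        Thread consumer = new Thread(() -> {
            try {
                received.set(queue.take());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();

        try {
            queue.transfer("b"); // blocks until the consumer has received "b"
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return handedOff + "," + sizeAfterTry + "," + received.get();
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints false,0,b
    }
}
```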

LinkedBlockingDeque

LinkedBlockingDeque is a double-ended blocking queue backed by a linked list, that is, elements can be inserted and removed at both ends.

For certain operations, if the state of the queue does not allow an insertion or a removal, the thread may block until the state changes and the operation becomes possible. There are generally two cases of blocking:

• when inserting an element: if the queue is full, the thread blocks until a slot becomes free and then inserts the element. The operation can be given a timeout, in which case it returns false when the timeout elapses to indicate failure. Without a timeout it blocks indefinitely, and throws InterruptedException if the thread is interrupted while waiting

• when reading an element: if the queue is empty, the thread blocks until the queue is not empty and then returns an element. A timeout can be set here as well

Conclusion: an optionally bounded (default capacity Integer.MAX_VALUE) double-ended blocking queue backed by a linked list and guarded by a single lock. Because elements can enter and leave at both ends, it can reduce contention between threads in some multithreaded workloads.
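
Insertion and removal at both ends can be sketched like this. `DequeDemo` and `run` are hypothetical names, and the capacity of 2 is chosen only to show the bounded case.

```java
import java.util.concurrent.LinkedBlockingDeque;

// Hypothetical demo class: inserting and removing at both ends of a bounded deque.
class DequeDemo {

    // Returns "<removed from tail>,<removed from head>,<was third offer accepted>".
    static String run() {
        LinkedBlockingDeque<String> deque = new LinkedBlockingDeque<>(2);
        deque.offerFirst("b");
        deque.offerFirst("a");                        // the deque now holds a, b
        boolean acceptedThird = deque.offerLast("c"); // false: the capacity of 2 is reached
        String fromTail = deque.pollLast();           // "b"
        String fromHead = deque.pollFirst();          // "a"
        return fromTail + "," + fromHead + "," + acceptedThird;
    }

    public static void main(String[] args) {
        System.out.println(run()); // prints b,a,false
    }
}
```

The blocking variants `putFirst`, `putLast`, `takeFirst`, and `takeLast` follow the same pattern but wait instead of returning false or null.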

Summary

- In multithreading, blocking means that a thread is suspended under certain conditions. Once the condition is satisfied, the suspended thread is woken up automatically.

- Why BlockingQueue? Before the java.util.concurrent package was released, every programmer working in a multithreaded environment had to handle these details by hand while also keeping efficiency and thread safety in mind, which made programs considerably more complex. With BlockingQueue, we no longer need to care about when to block a thread and when to wake it up, because BlockingQueue takes care of all of that.
