- Brief introduction

RAID-0 (striping): The main drawback is that if any single disk fails, all data on the array is lost and unreadable.

RAID-1 (mirroring): The biggest advantage is that a full copy of the data is kept. However, since half of the disk capacity holds that copy, the usable capacity is only half of the total disk capacity.

RAID 1+0, RAID 0+1: These combine the performance gain of RAID-0 with the data redundancy of RAID-1. They also inherit the disadvantage of RAID-1: the usable capacity is half of the total.

RAID-5: The usable capacity equals that of the total number of disks minus one. By default, RAID-5 tolerates the failure of only one disk.
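The capacity rules above can be written as a small calculation. This is only a sketch: the function name `usable_capacity` is made up for illustration, all member disks are assumed to be the same size with no spares, and the RAID-6 row (two disks' worth of parity) is the standard rule rather than something stated in the text.

```shell
# usable_capacity LEVEL DISKS SIZE   (hypothetical helper, not part of mdadm)
# Prints the usable capacity of an array in the same unit as SIZE,
# assuming DISKS equally sized member disks and no spares.
usable_capacity() {
  local level=$1 disks=$2 size=$3
  case "$level" in
    0)  echo $(( disks * size )) ;;        # striping: every disk holds data
    1)  echo "$size" ;;                    # mirror: all disks hold the same data
    10) echo $(( disks * size / 2 )) ;;    # striped mirrors, assuming two copies
    5)  echo $(( (disks - 1) * size )) ;;  # one disk's worth of parity
    6)  echo $(( (disks - 2) * size )) ;;  # two disks' worth of parity
    *)  echo "unsupported level: $level" >&2; return 1 ;;
  esac
}

# Hypothetical example: four 20 GB disks at several levels.
for lv in 0 10 5 6; do
  printf 'RAID-%s: %s GB\n' "$lv" "$(usable_capacity "$lv" 4 20)"
done
```

For a two-disk RAID-1, the size of one disk is exactly half of the total, which matches the description above.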

RAID-6: Tolerates the failure of two disks at the same time.

- Advantages of Disk Array

When a disk in the array fails, you unplug the damaged disk and replace it with a new one. Once the new disk is in place and the array starts normally, the array automatically rebuilds the data of the failed disk onto the new disk, and the data on your disk array is restored.

- mdadm: software disk array settings

Parameters:

--create: Create a new RAID array;

--auto=yes: Create the software disk array device that follows, i.e. /dev/md0, /dev/md1, etc.

--chunk=Nk: Chunk size of the array, which can also be thought of as the stripe size; usually 64K or 512K.

--raid-devices=N: Number of disks (or partitions) used as active members of the array

--spare-devices=N: Number of disks used as spare devices

--level=[015]: Set the RAID level of the array. Many levels are supported, but 0, 1, and 5 are usually enough.

--detail: Show detailed information about the disk array device that follows

--add: Add the devices that follow to this md array

--remove: Remove the devices that follow from this md array

--fail: Mark the device that follows as faulty
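To see how these options fit together, the sketch below assembles a create command from its parts and only prints it, so it is safe to run on any machine. The helper name `build_create_cmd` is hypothetical. The check reflects the assumption that the number of devices listed should equal the active members plus the spares.

```shell
# build_create_cmd MD LEVEL ACTIVE SPARE DEVICE...   (hypothetical helper)
# Prints (does not run) an mdadm --create command line. It prints nothing
# and fails unless the number of devices listed equals ACTIVE + SPARE.
build_create_cmd() {
  local md=$1 level=$2 active=$3 spare=$4
  shift 4
  if [ "$#" -ne $(( active + spare )) ]; then
    echo "expected $(( active + spare )) devices, got $#" >&2
    return 1
  fi
  echo "mdadm --create --auto=yes $md --level=$level --raid-devices=$active --spare-devices=$spare $*"
}

# Four active members plus one spare, five devices in total.
build_create_cmd /dev/md0 5 4 1 /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf
```

Printing the command first makes it easy to review the device list before anything is written to disk.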

- mdadm: Create RAID

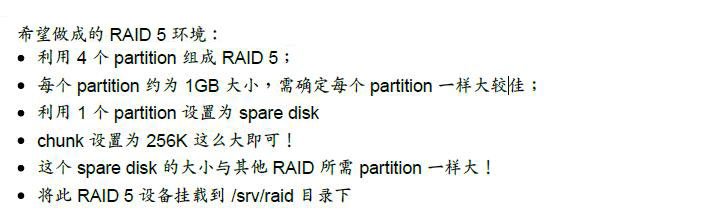

```shell
# Add five hard disks to the virtual machine (sdb, sdc, sdd, sde, sdf)
# Create the RAID with mdadm
[root@CentOS ~]# mdadm --create --auto=yes /dev/md0 --level=5 --raid-devices=4 --spare-devices=1 /dev/sd{b,c,d,e,f}
mdadm: Defaulting to version 1.2 metadata
mdadm: array /dev/md0 started.
[root@CentOS ~]# mdadm --detail /dev/md0
/dev/md0:                                     # Device file name
        Version : 1.2
  Creation Time : Sat Mar 18 10:08:48 2017
     Raid Level : raid5
     Array Size : 62863872 (59.95 GiB 64.37 GB)
  Used Dev Size : 20954624 (19.98 GiB 21.46 GB)
   Raid Devices : 4                           # Number of disks used as RAID members
  Total Devices : 5                           # Total number of devices
    Persistence : Superblock is persistent
    Update Time : Sat Mar 18 10:10:45 2017
          State : clean
 Active Devices : 4                           # Number of active devices
Working Devices : 5                           # Number of working devices
 Failed Devices : 0                           # Number of failed devices
  Spare Devices : 1                           # Number of spare disks
         Layout : left-symmetric
     Chunk Size : 512K
           Name : CentOS:0  (local to host CentOS)
           UUID : 2be487b3:4e6688cd:c5d5fbb4:991f3593   # Device identifier
         Events : 20
    Number   Major   Minor   RaidDevice State
       0       8       16        0      active sync   /dev/sdb
       1       8       32        1      active sync   /dev/sdc
       2       8       48        2      active sync   /dev/sdd
       5       8       64        3      active sync   /dev/sde
       4       8       80        -      spare   /dev/sdf
# "spare rebuilding" would mean the array is still being built (not yet complete)
# Four active sync devices, one spare

# View the RAID status of the system
[root@CentOS ~]# cat /proc/mdstat
Personalities : [raid6] [raid5] [raid4]
md0 : active raid5 sde[5] sdf[4](S) sdd[2] sdc[1] sdb[0]
      62863872 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]
unused devices: <none>
# The output above shows that md0 is a raid5 array made up of five disks (each with its index)
# sdf[4](S): S stands for spare; [UUUU] means four devices are working normally

# Format and mount the RAID
[root@CentOS ~]# mkfs -t ext4 /dev/md0
[root@CentOS ~]# mkdir /mnt/raid
[root@CentOS ~]# mount /dev/md0 /mnt/raid
[root@CentOS ~]# df
Filesystem     1K-blocks   Used Available Use% Mounted on
/dev/md0        61876732 184136  58549404   1% /mnt/raid
```

- Simulating RAID failure and recovery

```shell
# Copy something into /mnt/raid, assuming the RAID is already in use
[root@CentOS ~]# cp -a /etc/ /var/log /mnt/raid/
[root@CentOS ~]# df /mnt/raid/; du -sm /mnt/raid/*
Filesystem     1K-blocks   Used Available Use% Mounted on
/dev/md0        61876732 236008  58497532   1% /mnt/raid
42      /mnt/raid/etc
9       /mnt/raid/log
1       /mnt/raid/lost+found

# Simulate a failure of /dev/sdd by marking it faulty:
[root@CentOS ~]# mdadm --manage /dev/md0 --fail /dev/sdd
mdadm: set /dev/sdd faulty in /dev/md0
[root@CentOS ~]# mdadm --detail /dev/md0
/dev/md0:
 Active Devices : 3
Working Devices : 4
 Failed Devices : 1                           # One failed device
  Spare Devices : 1
         Layout : left-symmetric
     Chunk Size : 512K
 Rebuild Status : 10% complete
           Name : CentOS:0  (local to host CentOS)
           UUID : 2be487b3:4e6688cd:c5d5fbb4:991f3593
         Events : 23
    Number   Major   Minor   RaidDevice State
       0       8       16        0      active sync   /dev/sdb
       1       8       32        1      active sync   /dev/sdc
       4       8       80        2      spare rebuilding   /dev/sdf
       5       8       64        3      active sync   /dev/sde
       2       8       48        -      faulty   /dev/sdd

# /proc/mdstat shows that recovery is in progress
[root@CentOS ~]# cat /proc/mdstat
Personalities : [raid6] [raid5] [raid4]
md0 : active raid5 sde[5] sdf[4] sdd[2](F) sdc[1] sdb[0]
      62863872 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/3] [UU_U]
      [===============>.....]  recovery = 75.1% (15752192/20954624) finish=0.5min speed=152286K/sec
unused devices: <none>
# [UU_U]: one disk has failed

# After the RAID 5 rebuild completes:
[root@CentOS ~]# mdadm --detail /dev/md0
    Number   Major   Minor   RaidDevice State
       0       8       16        0      active sync   /dev/sdb
       1       8       32        1      active sync   /dev/sdc
       4       8       80        2      active sync   /dev/sdf
       5       8       64        3      active sync   /dev/sde
       2       8       48        -      faulty   /dev/sdd
[root@CentOS ~]# cat /proc/mdstat
Personalities : [raid6] [raid5] [raid4]
md0 : active raid5 sde[5] sdf[4] sdd[2](F) sdc[1] sdb[0]
      62863872 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]
unused devices: <none>
# It's back to normal!
```
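The `[UU_U]` field in the /proc/mdstat output can also be checked by a script rather than by eye. The following is a minimal sketch, not a replacement for mdadm's own monitoring. The function name `md_degraded` is made up here, and it assumes the status field always looks like the samples in this article: `U` for a working member, `_` for a missing one.

```shell
# md_degraded   (hypothetical helper)
# Reads /proc/mdstat-style text on stdin and prints the name and status field
# of every md array whose status contains "_" (a failed or missing member).
md_degraded() {
  awk '
    /^md[0-9]+ :/ { name = $1 }              # remember the current array name
    match($0, /\[[U_]+\]/) {                 # find a field such as [UU_U]
      status = substr($0, RSTART, RLENGTH)
      if (index(status, "_") > 0) print name, status
    }
  '
}

# Sample text copied from the degraded state of the array.
sample='Personalities : [raid6] [raid5] [raid4]
md0 : active raid5 sde[5] sdf[4] sdd[2](F) sdc[1] sdb[0]
      62863872 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/3] [UU_U]
      [===============>.....]  recovery = 75.1% (15752192/20954624) finish=0.5min speed=152286K/sec
unused devices: <none>'

printf '%s\n' "$sample" | md_degraded   # prints: md0 [UU_U]
```

On a real system the input would be `md_degraded < /proc/mdstat`; a healthy array such as `[UUUU]` produces no output.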
```shell
# Add a new disk of the same size to the virtual machine
# Add the new disk and remove the failed one
[root@CentOS ~]# mdadm --manage /dev/md0 --add /dev/sdg --remove /dev/sdd
[root@CentOS ~]# mdadm --detail /dev/md0
    Number   Major   Minor   RaidDevice State
       0       8       16        0      active sync   /dev/sdb
       1       8       32        1      active sync   /dev/sdc
       4       8       80        2      active sync   /dev/sdf
       5       8       64        3      active sync   /dev/sde
       6       8       96        -      spare   /dev/sdg
```

- Turn off RAID

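```shell
[root@CentOS ~]# umount /dev/md0
# Stop the array under the name shown in /proc/mdstat (/dev/md0 here; an array
# that is not listed in mdadm.conf may appear as /dev/md127 after a reboot)
[root@CentOS ~]# mdadm --stop /dev/md0
```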