Binder is the inter-process communication (IPC) mechanism used by Android. Android is a complex operating system that runs a large number of applications and services, so communication between them is particularly important. For an application, using Binder is very simple: the developer only writes AIDL files, and the IPC code is generated at build time. This also means application developers do not need to understand Binder's underlying transport mechanism. System developers, however, need to be familiar with how Binder works, because many of the problems they analyze and solve involve Binder. This article covers the first step in using Binder: opening and initializing the Binder device.

Open the Binder device

The Binder driver registers itself as the character device "/dev/binder" when it is initialized, and implements the poll, ioctl, mmap and other file operations. The application layer controls the driver through ioctl, and IPC data is transferred with the ioctl command "BINDER_WRITE_READ".

In Android, most Binder users initialize the Binder device through ProcessState.

frameworks/native/libs/binder/ProcessState.cpp

    #define BINDER_VM_SIZE ((1*1024*1024) - (4096 *2))
    ......
    ProcessState::ProcessState()
        : mDriverFD(open_driver()) // Open the binder device
        , mVMStart(MAP_FAILED)
        , mManagesContexts(false)
        , mBinderContextCheckFunc(NULL)
        , mBinderContextUserData(NULL)
        , mThreadPoolStarted(false)
        , mThreadPoolSeq(1)
    {
        if (mDriverFD >= 0) {
            // XXX Ideally, there should be a specific define for whether we
            // have mmap (or whether we could possibly have the kernel module
            // available).
    #if !defined(HAVE_WIN32_IPC)
            // mmap the binder, providing a chunk of virtual address space to receive transactions.
            // Map the binder address space
            mVMStart = mmap(0, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);
            if (mVMStart == MAP_FAILED) {
                // *sigh*
                ALOGE("Using /dev/binder failed: unable to mmap transaction memory.\n");
                close(mDriverFD);
                mDriverFD = -1;
            }
    #else
            mDriverFD = -1;
    #endif
        }

        LOG_ALWAYS_FATAL_IF(mDriverFD < 0, "Binder driver could not be opened. Terminating.");
    }

As you can see, the ProcessState constructor first opens the Binder device to obtain a file descriptor, and then uses mmap to map an address space of (1 MB - 8 KB) for receiving transaction data. Let's take a look at open_driver().
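Before turning to open_driver(), the size of the mapping can be checked with a quick calculation. The sketch below is only an illustration: pagesInMapping() is a hypothetical helper that is not part of libbinder, and the 4 KB page size is an assumption taken from the 4096 in the macro.

```cpp
#include <cassert>
#include <cstddef>

// Same expression as BINDER_VM_SIZE in ProcessState.cpp:
// 1 MB minus two 4 KB pages.
constexpr std::size_t kBinderVmSize = (1 * 1024 * 1024) - (4096 * 2);

// Hypothetical helper: number of whole pages in a mapping of `bytes` bytes.
std::size_t pagesInMapping(std::size_t bytes, std::size_t pageSize) {
    assert(pageSize != 0);
    return bytes / pageSize;
}
```

Under these assumptions kBinderVmSize is 1040384 bytes, and pagesInMapping(kBinderVmSize, 4096) gives 254 pages, that is, the 256 pages of 1 MB minus two.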

frameworks/native/libs/binder/ProcessState.cpp

    static int open_driver()
    {
        int fd = open("/dev/binder", O_RDWR);
        if (fd >= 0) {
            fcntl(fd, F_SETFD, FD_CLOEXEC);
            int vers = 0;
            status_t result = ioctl(fd, BINDER_VERSION, &vers);
            if (result == -1) {
                ALOGE("Binder ioctl to obtain version failed: %s", strerror(errno));
                close(fd);
                fd = -1;
            }
            if (result != 0 || vers != BINDER_CURRENT_PROTOCOL_VERSION) {
                ALOGE("Binder driver protocol does not match user space protocol!");
                close(fd);
                fd = -1;
            }
            size_t maxThreads = 15;
            result = ioctl(fd, BINDER_SET_MAX_THREADS, &maxThreads);
            if (result == -1) {
                ALOGE("Binder ioctl to set max threads failed: %s", strerror(errno));
            }
        } else {
            ALOGW("Opening '/dev/binder' failed: %s\n", strerror(errno));
        }
        return fd;
    }

open_driver() opens the device "/dev/binder" and verifies the protocol version. It then sets the maximum number of Binder threads in the process with BINDER_SET_MAX_THREADS, which the driver stores in proc->max_threads. The value set in the code is 15, but this limit only applies to the threads the driver asks the process to spawn. The Binder main thread is not counted against it (the thread counters are discussed later in this article), so the pool can actually hold 16 Binder threads. Next, let's look at the driver's open function. It is simple: it creates a Binder process structure and initializes it.

drivers/staging/android/binder.c

    static int binder_open(struct inode *nodp, struct file *filp)
    {
        struct binder_proc *proc; // Binder process structure
        ......
        proc = kzalloc(sizeof(*proc), GFP_KERNEL);
        if (proc == NULL)
            return -ENOMEM;
        get_task_struct(current);
        proc->tsk = current;
        INIT_LIST_HEAD(&proc->todo);
        init_waitqueue_head(&proc->wait);
        proc->default_priority = task_nice(current); // Default priority

        binder_lock(__func__);

        binder_stats_created(BINDER_STAT_PROC); // Record binder statistics
        hlist_add_head(&proc->proc_node, &binder_procs);
        proc->pid = current->group_leader->pid;
        INIT_LIST_HEAD(&proc->delivered_death);
        filp->private_data = proc; // The file's private data points to the binder process structure

        binder_unlock(__func__);
        ......
    }

binder_proc is used to manage a Binder process. Each process that opens the device has one binder_proc, which records all of its Binder transmission state. Let's look at the definition of binder_proc.

drivers/staging/android/binder.c

    struct binder_proc {
        struct hlist_node proc_node;         // Node linking this process into the binder_procs list
        struct rb_root threads;              // Red-black tree of binder threads
        struct rb_root nodes;                // Red-black tree of binder entity objects (nodes)
        struct rb_root refs_by_desc;         // Red-black tree of binder references, keyed by handle
        struct rb_root refs_by_node;         // Red-black tree of binder references, keyed by node
        int pid;                             // Process ID
        struct vm_area_struct *vma;          // Pointer to the process's virtual memory area
        struct mm_struct *vma_vm_mm;         // Memory descriptor of the process
        struct task_struct *tsk;             // Task structure of the process
        struct files_struct *files;          // Files structure of the process
        struct hlist_node deferred_work_node; // Node linking deferred work into the binder_deferred_list list
        int deferred_work;                   // Type of deferred work
        void *buffer;                        // Kernel-space address of the mapping
        ptrdiff_t user_buffer_offset;        // Offset between the kernel-space and user-space mappings

        struct list_head buffers;            // List of all buffers
        struct rb_root free_buffers;         // Red-black tree of free buffers
        struct rb_root allocated_buffers;    // Red-black tree of allocated buffers
        size_t free_async_space;             // Remaining space for asynchronous transfers

        struct page **pages;                 // Physical memory pages
        size_t buffer_size;                  // Size of the mapped buffer
        uint32_t buffer_free;                // Remaining available buffer size
        struct list_head todo;               // Work list of the process
        wait_queue_head_t wait;              // Wait queue
        struct binder_stats stats;           // Binder statistics
        struct list_head delivered_death;    // List of delivered death notifications
        int max_threads;                     // Maximum number of threads
        int requested_threads;               // Number of threads requested
        int requested_threads_started;       // Number of requested threads that have been started
        int ready_threads;                   // Number of threads that are ready
        long default_priority;               // Default priority
        struct dentry *debugfs_entry;        // debugfs entry pointer
    };

Memory space mapping

After binder_proc has been created and initialized, the application layer calls mmap() to map the address space.

drivers/staging/android/binder.c

    static int binder_mmap(struct file *filp, struct vm_area_struct *vma)
    {
        int ret;
        struct vm_struct *area;
        struct binder_proc *proc = filp->private_data;
        const char *failure_string;
        struct binder_buffer *buffer;

        if (proc->tsk != current)
            return -EINVAL;

        // The mapping cannot be larger than 4M
        if ((vma->vm_end - vma->vm_start) > SZ_4M)
            vma->vm_end = vma->vm_start + SZ_4M;
        ......
        // The mapping is not copied to child processes on fork, and it can never be made writable
        vma->vm_flags = (vma->vm_flags | VM_DONTCOPY) & ~VM_MAYWRITE;
        ......
        // Reserve a range of kernel virtual address space
        area = get_vm_area(vma->vm_end - vma->vm_start, VM_IOREMAP);
        ......
        proc->buffer = area->addr;
        proc->user_buffer_offset = vma->vm_start - (uintptr_t)proc->buffer;
        mutex_unlock(&binder_mmap_lock);
        ......
        // Create the array of physical page pointers
        proc->pages = kzalloc(sizeof(proc->pages[0]) * ((vma->vm_end - vma->vm_start) / PAGE_SIZE), GFP_KERNEL);
        ......
        proc->buffer_size = vma->vm_end - vma->vm_start;

        vma->vm_ops = &binder_vm_ops;
        vma->vm_private_data = proc;

        // Allocate one physical page and map it into the virtual address space
        if (binder_update_page_range(proc, 1, proc->buffer, proc->buffer + PAGE_SIZE, vma)) {
        ......
        // Create the buffers list and insert the first free buffer
        buffer = proc->buffer;
        INIT_LIST_HEAD(&proc->buffers);
        list_add(&buffer->entry, &proc->buffers);
        buffer->free = 1;
        binder_insert_free_buffer(proc, buffer);
        // The space available for asynchronous transfers is half of the mapping size
        proc->free_async_space = proc->buffer_size / 2;
        barrier();
        proc->files = get_files_struct(current);
        proc->vma = vma;
        proc->vma_vm_mm = vma->vm_mm;
        ......
    }

binder_mmap() mainly reserves the kernel virtual address space, sets up the address mapping, and initializes the memory-related fields of binder_proc. The actual allocation of physical pages is done by binder_update_page_range(). At this point only one physical page is allocated, and the resulting free buffer is inserted into the free_buffers tree. Later, buffers are allocated and reclaimed as needed during Binder transmission.
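The bookkeeping done by binder_mmap() can be modeled in user space as a rough sketch. MappingSketch, makeMapping() and toUserAddress() are hypothetical names introduced only for illustration; the offset is stored as an unsigned value here so that wraparound is well defined, whereas the driver uses a ptrdiff_t.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical mirror of the mapping-related fields of binder_proc.
struct MappingSketch {
    std::uintptr_t kernelBase;   // models proc->buffer
    std::uintptr_t userOffset;   // models proc->user_buffer_offset (modular arithmetic)
    std::size_t bufferSize;      // models proc->buffer_size
    std::size_t freeAsyncSpace;  // models proc->free_async_space
};

constexpr std::size_t kMaxMapSize = 4u * 1024 * 1024;  // SZ_4M

// Clamp the requested size to 4M, record the kernel/user offset, and
// reserve half of the mapping for asynchronous transfers.
MappingSketch makeMapping(std::uintptr_t userStart, std::size_t requested,
                          std::uintptr_t kernelBase) {
    const std::size_t size = requested > kMaxMapSize ? kMaxMapSize : requested;
    MappingSketch m{};
    m.kernelBase = kernelBase;
    m.userOffset = userStart - kernelBase;  // may wrap; unsigned wrap is well defined
    m.bufferSize = size;
    m.freeAsyncSpace = size / 2;
    return m;
}

// Translate a kernel-side buffer address into the matching user-side address.
std::uintptr_t toUserAddress(const MappingSketch& m, std::uintptr_t kernelAddr) {
    assert(kernelAddr >= m.kernelBase && kernelAddr < m.kernelBase + m.bufferSize);
    return kernelAddr + m.userOffset;
}
```

For the 1040384-byte mapping requested by ProcessState, this model leaves 520192 bytes for asynchronous transfers, and every kernel-side address maps to a user-side address by adding one fixed offset.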

drivers/staging/android/binder.c

    static int binder_update_page_range(struct binder_proc *proc, int allocate,
                                        void *start, void *end,
                                        struct vm_area_struct *vma)
    {
        void *page_addr;
        unsigned long user_page_addr;
        struct vm_struct tmp_area;
        struct page **page;
        struct mm_struct *mm;
        ......
        // vma is non-NULL only when called from binder_mmap(). In all other cases the memory-related data is obtained from proc.
        if (vma)
            mm = NULL;
        else
            // Get the memory descriptor and increase its user count so that the mm_struct is not released
            mm = get_task_mm(proc->tsk);

        if (mm) {
            down_write(&mm->mmap_sem);
            vma = proc->vma;
            if (vma && mm != proc->vma_vm_mm) {
                pr_err("%d: vma mm and task mm mismatch\n", proc->pid);
                vma = NULL;
            }
        }

        // allocate is 0 when memory is being reclaimed
        if (allocate == 0)
            goto free_range;
        ......
        // Allocate physical pages in a loop, one page at a time
        for (page_addr = start; page_addr < end; page_addr += PAGE_SIZE) {
            int ret;
            struct page **page_array_ptr;
            page = &proc->pages[(page_addr - proc->buffer) / PAGE_SIZE];

            BUG_ON(*page);
            // Allocate a physical page and save its address in proc
            *page = alloc_page(GFP_KERNEL | __GFP_HIGHMEM | __GFP_ZERO);
            ......
            tmp_area.addr = page_addr;
            tmp_area.size = PAGE_SIZE + PAGE_SIZE /* guard page? */;
            page_array_ptr = page;
            // Map the physical page into the kernel page tables
            ret = map_vm_area(&tmp_area, PAGE_KERNEL, &page_array_ptr);
            ......
            user_page_addr = (uintptr_t)page_addr + proc->user_buffer_offset;
            // Insert the physical page into the user virtual address space
            ret = vm_insert_page(vma, user_page_addr, page[0]);
            ......
        }
        if (mm) {
            up_write(&mm->mmap_sem);
            // Drop the user count of the memory descriptor
            mmput(mm);
        }
        return 0;

    free_range:
        for (page_addr = end - PAGE_SIZE; page_addr >= start; page_addr -= PAGE_SIZE) {
            page = &proc->pages[(page_addr - proc->buffer) / PAGE_SIZE];
            if (vma)
                // Unmap the physical page from the user virtual address space
                zap_page_range(vma, (uintptr_t)page_addr + proc->user_buffer_offset, PAGE_SIZE, NULL);
    err_vm_insert_page_failed:
            // Unmap the physical page from the kernel page tables
            unmap_kernel_range((unsigned long)page_addr, PAGE_SIZE);
    err_map_kernel_failed:
            // Release the physical page
            __free_page(*page);
            *page = NULL;
    err_alloc_page_failed:
            ;
        }
        ......
    }
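The page-by-page walk in binder_update_page_range() can be illustrated with a small user-space model. This is only a sketch under stated assumptions: PageTableSketch, updatePageRange() and allocatedPages() are hypothetical names, the page size is assumed to be 4 KB, offsets are relative to proc->buffer, and the model walks forward in both directions while the driver frees pages from the end of the range.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

constexpr std::size_t kPageSize = 4096;  // assumed page size

// Hypothetical model of proc->pages: true means a physical page is present.
struct PageTableSketch {
    std::vector<bool> present;
    explicit PageTableSketch(std::size_t bufferSize)
        : present(bufferSize / kPageSize, false) {}
};

// Walk [start, end) one page at a time and allocate or release each slot.
// Both offsets must be page aligned and lie inside the mapping.
void updatePageRange(PageTableSketch& t, bool allocate,
                     std::size_t start, std::size_t end) {
    assert(start % kPageSize == 0 && end % kPageSize == 0);
    assert(start <= end && end / kPageSize <= t.present.size());
    for (std::size_t off = start; off < end; off += kPageSize) {
        const std::size_t index = off / kPageSize;  // same index formula as the driver
        if (allocate) {
            assert(!t.present[index]);  // plays the role of BUG_ON(*page)
        }
        t.present[index] = allocate;
    }
}

// Count how many slots currently hold a page.
std::size_t allocatedPages(const PageTableSketch& t) {
    std::size_t n = 0;
    for (bool p : t.present) {
        if (p) ++n;
    }
    return n;
}
```

Calling updatePageRange(table, true, 0, kPageSize) on a fresh table corresponds to what binder_mmap() does: only the first page is backed by physical memory, and the remaining slots stay empty until a transaction needs them.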

Once the ProcessState constructor has run, the Binder device has been initialized and its memory has been mapped. The application layer now holds the Binder device descriptor mDriverFD and can carry out IPC transmission through ioctl operations.

The first Binder thread

As noted earlier, a Binder process can have up to 16 Binder threads, and each of them can carry out Binder transactions independently. The first Binder thread is usually created by calling ProcessState::startThreadPool() after the ProcessState object has been created.

frameworks/native/libs/binder/ProcessState.cpp

    class PoolThread : public Thread
    {
    public:
        PoolThread(bool isMain)
            : mIsMain(isMain)
        {
        }

    protected:
        virtual bool threadLoop()
        {
            // When created through startThreadPool(), mIsMain is true and this is the main thread
            IPCThreadState::self()->joinThreadPool(mIsMain);
            return false;
        }

        const bool mIsMain;
    };
    ......
    void ProcessState::startThreadPool()
    {
        AutoMutex _l(mLock);
        // The first thread must be created through startThreadPool()
        if (!mThreadPoolStarted) {
            mThreadPoolStarted = true;
            spawnPooledThread(true);
        }
    }
    ......
    void ProcessState::spawnPooledThread(bool isMain)
    {
        if (mThreadPoolStarted) {
            // Build the binder thread name: Binder_XX
            String8 name = makeBinderThreadName();
            ALOGV("Spawning new pooled thread, name=%s\n", name.string());
            sp<Thread> t = new PoolThread(isMain);
            t->run(name.string());
        }
    }

The thread actually becomes a Binder thread in IPCThreadState::joinThreadPool().

frameworks/native/libs/binder/IPCThreadState.cpp

    void IPCThreadState::joinThreadPool(bool isMain)
    {
        ......
        // The main thread sends BC_ENTER_LOOPER; threads created later send BC_REGISTER_LOOPER.
        mOut.writeInt32(isMain ? BC_ENTER_LOOPER : BC_REGISTER_LOOPER);
        ......
        // Put the thread in the foreground group so that its first transaction is not performed in the background
        set_sched_policy(mMyThreadId, SP_FOREGROUND);

        status_t result;
        do {
            // Process pending dereference requests
            processPendingDerefs();
            // now get the next command to be processed, waiting if necessary
            // Get a command and execute it
            result = getAndExecuteCommand();
            ......
            // A non-main thread exits when it is no longer needed. The main thread lives forever.
            if(result == TIMED_OUT && !isMain) {
                break;
            }
        } while (result != -ECONNREFUSED && result != -EBADF);
        ......
        // Thread exit command
        mOut.writeInt32(BC_EXIT_LOOPER);
        talkWithDriver(false);
    }
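The exit rule of this loop can be isolated in a small sketch. keepLooping() and commandsBeforeExit() are hypothetical helpers written for this article, and TIMED_OUT is assumed here to be -ETIMEDOUT. The real loop does more work per iteration than is modeled.

```cpp
#include <cassert>
#include <cerrno>
#include <cstddef>
#include <vector>

// Assumption for this sketch: TIMED_OUT is modeled as -ETIMEDOUT.
constexpr int kTimedOut = -ETIMEDOUT;

// Returns true when the joinThreadPool() loop would run another iteration.
bool keepLooping(int result, bool isMain) {
    if (result == kTimedOut && !isMain) {
        return false;  // an idle non-main thread leaves the pool
    }
    return result != -ECONNREFUSED && result != -EBADF;
}

// Replays a sequence of getAndExecuteCommand() results and returns how many
// of them are consumed before the thread leaves the loop.
std::size_t commandsBeforeExit(const std::vector<int>& results, bool isMain) {
    assert(!results.empty());  // the do/while body always runs at least once
    std::size_t consumed = 0;
    for (int r : results) {
        ++consumed;
        if (!keepLooping(r, isMain)) {
            break;
        }
    }
    return consumed;
}
```

In this model a timeout ends a non-main thread but is ignored by the main thread, while -ECONNREFUSED and -EBADF end either kind of thread.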

joinThreadPool() sends the command BC_ENTER_LOOPER to register the first Binder thread. It then enters the loop, where getAndExecuteCommand() fetches a command and executes it. Let's look at how it interacts with the driver.

frameworks/native/libs/binder/IPCThreadState.cpp

    status_t IPCThreadState::getAndExecuteCommand()
    {
        status_t result;
        int32_t cmd;

        // Interact with the Binder driver
        result = talkWithDriver();
        if (result >= NO_ERROR) {
            size_t IN = mIn.dataAvail();
            ......
            // Execute the command
            result = executeCommand(cmd);
            ......
            // While executing a command the thread may have been moved to the background group, so move it back to the foreground group.
            set_sched_policy(mMyThreadId, SP_FOREGROUND);
        }

        return result;
    }
    ......
    status_t IPCThreadState::talkWithDriver(bool doReceive)
    {
        ......
        // The data to write is taken from mOut
        bwr.write_size = outAvail;
        bwr.write_buffer = (uintptr_t)mOut.data();

        // This is what we'll read.
        // The data read is stored in mIn
        if (doReceive && needRead) {
            bwr.read_size = mIn.dataCapacity();
            bwr.read_buffer = (uintptr_t)mIn.data();
        } else {
            bwr.read_size = 0;
            bwr.read_buffer = 0;
        }
        ......
        // Read from and write to the driver using BINDER_WRITE_READ
        if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)
        ......
    }
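The way talkWithDriver() fills the request can be sketched in isolation. BwrSketch and ParcelSketch are hypothetical stand-ins for struct binder_write_read and Parcel, covering only the fields shown in the listing, and prepareRequest() is an illustrative helper rather than a libbinder function.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical stand-in for the size and buffer fields of binder_write_read.
struct BwrSketch {
    std::size_t writeSize;
    std::uintptr_t writeBuffer;
    std::size_t readSize;
    std::uintptr_t readBuffer;
};

// Hypothetical stand-in for a Parcel: fixed storage plus the bytes in use.
struct ParcelSketch {
    unsigned char storage[256];
    std::size_t dataSize;
};

// The write half always describes the outgoing data. The read half is only
// filled in when the caller wants to receive and the input buffer is drained.
BwrSketch prepareRequest(const ParcelSketch& out, ParcelSketch& in,
                         bool doReceive, bool needRead) {
    assert(out.dataSize <= sizeof(out.storage));
    BwrSketch bwr{};
    bwr.writeSize = out.dataSize;
    bwr.writeBuffer = reinterpret_cast<std::uintptr_t>(out.storage);
    if (doReceive && needRead) {
        bwr.readSize = sizeof(in.storage);  // models mIn.dataCapacity()
        bwr.readBuffer = reinterpret_cast<std::uintptr_t>(in.storage);
    } else {
        bwr.readSize = 0;
        bwr.readBuffer = 0;
    }
    return bwr;
}
```

A single request can therefore carry a write, a read, or both, which is why one ioctl command is enough for all Binder traffic.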

Reads from and writes to the Binder driver are done with the BINDER_WRITE_READ ioctl command. The data read and written is held in mIn and mOut, which are Parcel instances. Binder data is serialized into a Parcel before transmission, and the data received by the application is read out by deserialization. Let's first look at thread creation and see how BC_ENTER_LOOPER is handled in the driver.

drivers/staging/android/binder.c

    static int binder_thread_write(struct binder_proc *proc,
                                   struct binder_thread *thread,
                                   binder_uintptr_t binder_buffer, size_t size,
                                   binder_size_t *consumed)
    {
        ......
        case BC_ENTER_LOOPER:
            ......
            // Set the thread's looper state
            thread->looper |= BINDER_LOOPER_STATE_ENTERED;
            break;
        ......
    }
    ......
    static struct binder_thread *binder_get_thread(struct binder_proc *proc)
    {
        struct binder_thread *thread = NULL;
        struct rb_node *parent = NULL;
        struct rb_node **p = &proc->threads.rb_node;

        // Look for the thread in the proc threads tree
        while (*p) {
            parent = *p;
            thread = rb_entry(parent, struct binder_thread, rb_node);

            if (current->pid < thread->pid)
                p = &(*p)->rb_left;
            else if (current->pid > thread->pid)
                p = &(*p)->rb_right;
            else
                break;
        }
        // If the thread is not in the threads tree, create it and insert it into the tree
        if (*p == NULL) {
            thread = kzalloc(sizeof(*thread), GFP_KERNEL);
            if (thread == NULL)
                return NULL;
            binder_stats_created(BINDER_STAT_THREAD);
            thread->proc = proc;
            thread->pid = current->pid;
            init_waitqueue_head(&thread->wait);
            INIT_LIST_HEAD(&thread->todo);
            rb_link_node(&thread->rb_node, parent, p);
            rb_insert_color(&thread->rb_node, &proc->threads);
            thread->looper |= BINDER_LOOPER_STATE_NEED_RETURN;
            thread->return_error = BR_OK;
            thread->return_error2 = BR_OK;
        }
        return thread;
    }
    ......
    static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
    {
        ......
        // Get the binder thread of the current thread in the binder process
        thread = binder_get_thread(proc);
        ......
        switch (cmd) {
        case BINDER_WRITE_READ: {
            if (bwr.write_size > 0) {
                // Write data
                ret = binder_thread_write(proc, thread, bwr.write_buffer, bwr.write_size, &bwr.write_consumed);
            ......
            if (bwr.read_size > 0) {
                // Read data
                ret = binder_thread_read(proc, thread, bwr.read_buffer, bwr.read_size, &bwr.read_consumed, filp->f_flags & O_NONBLOCK);
            ......
            if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {
                ret = -EFAULT;
                goto err;
            }
            break;
        }
        ......
    }

To summarize how the first Binder thread is created:

- The application creates the thread Binder_1 by calling startThreadPool() after creating the ProcessState instance.

- When Binder_1 runs, it calls IPCThreadState::joinThreadPool() to interact with the driver and sends the command BC_ENTER_LOOPER.

- When the driver handles the read/write request, it creates a Binder thread structure and adds it to the proc->threads tree. At this point the red-black tree should contain a single node, whose pid is that of Binder_1.

- The driver executes the command BC_ENTER_LOOPER and sets the Binder thread's looper state to BINDER_LOOPER_STATE_ENTERED.

- The driver calls binder_thread_read() and waits for data to arrive.
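The driver-side part of the steps above (look the thread up by pid, create it on first use, then mark it as entered) can be sketched in user space. Everything here is a hypothetical model: ProcSketch, ThreadSketch, getThread() and enterLooper() are illustrative names, the flag values are assumptions chosen for the sketch, and std::map (typically implemented as a red-black tree) stands in for proc->threads.

```cpp
#include <cassert>
#include <cstdint>
#include <map>

// Flag values are illustrative assumptions for this sketch.
constexpr std::uint32_t kLooperEntered = 0x02;
constexpr std::uint32_t kLooperNeedReturn = 0x20;

struct ThreadSketch {
    int pid;
    std::uint32_t looper;
};

struct ProcSketch {
    std::map<int, ThreadSketch> threads;  // keyed by pid, like proc->threads
};

// Find the calling thread's entry, creating it on first contact with the
// driver, in the spirit of binder_get_thread().
ThreadSketch& getThread(ProcSketch& proc, int pid) {
    assert(pid > 0);
    auto it = proc.threads.find(pid);
    if (it == proc.threads.end()) {
        it = proc.threads.emplace(pid, ThreadSketch{pid, kLooperNeedReturn}).first;
    }
    return it->second;
}

// Models the visible part of BC_ENTER_LOOPER handling: set the entered flag.
void enterLooper(ProcSketch& proc, int pid) {
    ThreadSketch& t = getThread(proc, pid);
    t.looper |= kLooperEntered;
}
```

After enterLooper() has been called once for Binder_1, the map holds exactly one entry, and repeated calls from the same pid reuse that entry instead of creating a new one.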

Creation of subsequent Binder threads

After the first Binder thread in the process has been created, there are two ways to create further Binder threads.

- Calling IPCThreadState::joinThreadPool(): some native services use this approach, such as MediaServer and DrmServer.

- Calling ProcessState::spawnPooledThread(false): this is executed when a BR_SPAWN_LOOPER command is processed.

Most subsequent Binder threads are created through the BR_SPAWN_LOOPER command. This command is issued by the Binder driver: when the driver finds that the process has no Binder thread to spare, it sends BR_SPAWN_LOOPER to the application layer to have a new Binder thread created.

drivers/staging/android/binder.c

    static int binder_thread_read(struct binder_proc *proc,
                                  struct binder_thread *thread,
                                  binder_uintptr_t binder_buffer, size_t size,
                                  binder_size_t *consumed, int non_block)
    {
        ......
        // Whether to fetch work items from proc
        wait_for_proc_work = thread->transaction_stack == NULL && list_empty(&thread->todo);
        ......
        // Mark the Binder thread as waiting for work
        thread->looper |= BINDER_LOOPER_STATE_WAITING;
        // If the thread handles proc work, increment ready_threads
        if (wait_for_proc_work)
            proc->ready_threads++;

        binder_unlock(__func__);
        ......
        // The thread goes to sleep, waiting for data to arrive
        if (wait_for_proc_work) {
            ......
            ret = wait_event_freezable_exclusive(proc->wait, binder_has_proc_work(proc, thread));
        } else {
            ......
            ret = wait_event_freezable(thread->wait, binder_has_thread_work(thread));
        }

        binder_lock(__func__);
        // If the thread handles proc work, decrement ready_threads, indicating that the Binder thread is starting to process data.
        if (wait_for_proc_work)
            proc->ready_threads--;
        // Clear the Binder thread's waiting state
        thread->looper &= ~BINDER_LOOPER_STATE_WAITING;
        ......
        // If there are no spare Binder threads and the maximum number of threads has not been reached, send BR_SPAWN_LOOPER.
        if (proc->requested_threads + proc->ready_threads == 0 &&
            proc->requested_threads_started < proc->max_threads &&
            (thread->looper & (BINDER_LOOPER_STATE_REGISTERED |
             BINDER_LOOPER_STATE_ENTERED)) /* the user-space code fails to */
             /*spawn a new thread if we leave this out */) {
            proc->requested_threads++;
            binder_debug(BINDER_DEBUG_THREADS, "%d:%d BR_SPAWN_LOOPER\n", proc->pid, thread->pid);
            if (put_user(BR_SPAWN_LOOPER, (uint32_t __user *)buffer))
                return -EFAULT;
            binder_stat_br(proc, thread, BR_SPAWN_LOOPER);
        }
        return 0;
    }

When no spare Binder thread is available in the process, the driver sends the BR_SPAWN_LOOPER command, and the application layer calls spawnPooledThread(false) when it receives it. As analyzed above, the new thread runs joinThreadPool(false), which sends the BC_REGISTER_LOOPER command to the driver.

drivers/staging/android/binder.c

    static int binder_thread_write(struct binder_proc *proc,
                                   struct binder_thread *thread,
                                   binder_uintptr_t binder_buffer, size_t size,
                                   binder_size_t *consumed)
    {
        ......
        case BC_REGISTER_LOOPER:
            ......
            } else {
                // Update the thread counters in proc
                proc->requested_threads--;
                proc->requested_threads_started++;
            }
            // Set the thread's looper state
            thread->looper |= BINDER_LOOPER_STATE_REGISTERED;
            break;
        ......
    }

As you can see, there are three thread-related counters in proc.

- ready_threads: updated when a thread waits for proc work items; it indicates how many Binder threads are idle and waiting for commands to process.

- requested_threads: incremented when the driver requests the creation of a Binder thread, and decremented when the application layer has created the thread and sent BC_REGISTER_LOOPER to register it with the driver. The counter should therefore be back to zero once the creation of a Binder thread has completed.

- requested_threads_started: indicates how many requested Binder threads have been started. The counter is not updated when the Binder main thread registers through BC_ENTER_LOOPER, so the maximum number of Binder threads is (max_threads + 1).
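The interplay of these counters, and why the limit comes out at max_threads + 1, can be sketched with a small model. CountersSketch and its helper functions are hypothetical names, the looper-state part of the driver's check is left out by assuming the calling thread is already a registered or entered looper, and maxPoolSize() assumes a worst case in which every existing thread is always busy.

```cpp
#include <cassert>

// Hypothetical mirror of the thread counters in binder_proc.
struct CountersSketch {
    int maxThreads = 15;              // value set by open_driver()
    int requestedThreads = 0;         // models proc->requested_threads
    int requestedThreadsStarted = 0;  // models proc->requested_threads_started
    int readyThreads = 0;             // models proc->ready_threads
};

// Counter part of the check made at the end of binder_thread_read().
bool shouldSpawn(const CountersSketch& c) {
    return c.requestedThreads + c.readyThreads == 0 &&
           c.requestedThreadsStarted < c.maxThreads;
}

// The driver has sent BR_SPAWN_LOOPER.
void onSpawnRequested(CountersSketch& c) {
    ++c.requestedThreads;
}

// The new thread has answered with BC_REGISTER_LOOPER.
void onRegisterLooper(CountersSketch& c) {
    assert(c.requestedThreads > 0);  // sketch precondition: a request is pending
    --c.requestedThreads;
    ++c.requestedThreadsStarted;
}

// Number of Binder threads reached when no thread is ever idle.
int maxPoolSize(CountersSketch c) {
    int threads = 1;  // the main thread, which the counters do not track
    while (shouldSpawn(c)) {
        onSpawnRequested(c);
        onRegisterLooper(c);
        ++threads;
    }
    return threads;
}
```

With the default of 15, the model stops after 15 spawned threads plus the main thread, 16 in total. Threads that join the pool on their own through joinThreadPool() are outside this model.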

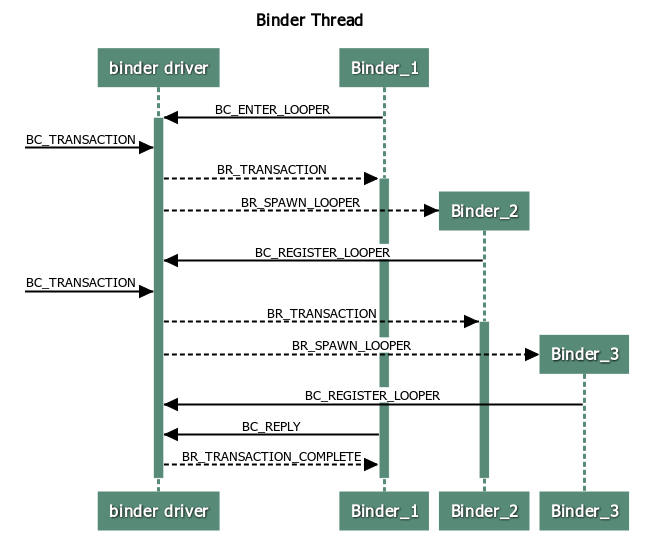

Binder thread creation process

Based on the above analysis, there are two kinds of Binder thread.

- Binder main thread: when the Binder process is initialized, a thread is created and sends BC_ENTER_LOOPER to the Binder driver to register as the main thread. A Binder process has only one main thread, and it cannot be destroyed.

- Binder auxiliary threads: when the Binder process has no spare Binder thread, the Binder driver sends BR_SPAWN_LOOPER to request one. The application layer creates a thread, which registers itself through BC_REGISTER_LOOPER. There can be several auxiliary threads, and they can be destroyed when they are no longer needed.

The figure above is a simple summary of the Binder thread creation process. Note that as soon as the Binder main thread receives a message, the driver asks for another Binder thread to be created; after that, a new thread is created whenever a message arrives and no thread is idle. This is why some native services call joinThreadPool() during initialization to add a second Binder thread, so that the service starts with two Binder threads: such services are certain to use cross-process communication.