This was my first attempt at big data analysis, so the results are a little rough, but at least it produced something. Once you have the data, you can run your own analysis on it.

Cities with relatively high salaries, based on Baidu recruitment listings

Age requirements for developers, based on market demand

Market demand for talent

Development experience required by major cities
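The charts above were built from the rows stored in the `Baidu` table, but the post does not show the aggregation step. As a minimal sketch, assuming the `salary` column holds monthly ranges such as `8000-12000` (the strings Baidu actually returns may carry units or say "negotiable"; anything without a numeric range is skipped here), the average salary per city can be computed from the range midpoints. `SalaryByCity`, `midpoint` and `averageByCity` are hypothetical names, and rows are passed in as `{ori_city, salary}` pairs instead of being read from MySQL.

```java
import java.util.List;
import java.util.Map;
import java.util.OptionalDouble;
import java.util.TreeMap;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class SalaryByCity {

    // Assumed salary format: "min-max" per month, e.g. "8000-12000" (hypothetical)
    private static final Pattern RANGE = Pattern.compile("(\\d+)\\s*-\\s*(\\d+)");

    // Midpoint of a salary range, or empty if the string has no numeric range
    public static OptionalDouble midpoint(String salary) {
        Matcher m = RANGE.matcher(salary);
        if (!m.find()) {
            return OptionalDouble.empty();
        }
        double low = Double.parseDouble(m.group(1));
        double high = Double.parseDouble(m.group(2));
        return OptionalDouble.of((low + high) / 2);
    }

    // Average midpoint salary per city; each row is {ori_city, salary}
    public static Map<String, Double> averageByCity(List<String[]> rows) {
        Map<String, double[]> acc = new TreeMap<>(); // city -> {sum, count}
        for (String[] row : rows) {
            OptionalDouble mid = midpoint(row[1]);
            if (mid.isPresent()) {
                double[] sumCount = acc.computeIfAbsent(row[0], k -> new double[2]);
                sumCount[0] += mid.getAsDouble();
                sumCount[1] += 1;
            }
        }
        Map<String, Double> result = new TreeMap<>();
        acc.forEach((city, sumCount) -> result.put(city, sumCount[0] / sumCount[1]));
        return result;
    }

    public static void main(String[] args) {
        List<String[]> rows = List.of(
                new String[] {"Beijing", "10000-20000"},
                new String[] {"Beijing", "8000-12000"},
                new String[] {"Wuhan", "6000-8000"},
                new String[] {"Wuhan", "negotiable"}); // skipped: no numeric range
        System.out.println(averageByCity(rows)); // {Beijing=12500.0, Wuhan=7000.0}
    }
}
```

Counting postings per keyword or per experience level would follow the same grouping pattern, with a counter in place of the sum.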

Data crawling source code

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.URL;
import java.net.URLConnection;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

import org.apache.commons.dbutils.QueryRunner;
import org.json.JSONArray;
import org.json.JSONException;
import org.json.JSONObject;

public class GetDate {

    public static void main(String[] args) {
        // Cities to search (sent as the "city" parameter)
        String[] srr = {"Beijing", "Shanghai", "Guangzhou", "Tianjin", "Wuhan", "Shenyang", "Harbin", "Xi'an", "Nanjing", "Chengdu",
                "Chongqing", "Shenzhen", "Hangzhou", "Qingdao", "Suzhou", "Taiyuan", "Zhengzhou", "Jinan", "Changchun", "Hefei",
                "Changsha", "Nanchang", "Wuxi", "Kunming", "Ningbo", "Fuzhou", "Shijiazhuang", "Nanning", "Xuzhou", "Yantai",
                "Tangshan", "city in Guangxi", "Changzhou", "Anshan", "Xiamen", "Fushun", "Jilin City", "Luoyang", "Datong", "Baotou",
                "Daqing", "Zibo", "Urumqi", "Foshan", "Hohhot", "Qiqihar", "Quanzhou", "Xining", "Lanzhou", "Guiyang", "Wenzhou"};

        // Job keywords to search (sent as the "query" parameter)
        String[] brr = {"java", "python", "C++", ".NET", "WEB Front end", "UI Designer", "Android", "IOS", "PHP", "C", "C#",
                "R", "Swift", "GO", "big data"};

        // Baidu recruitment API: rn=20 results per page, pn=offset of the first result
        String urlX = "http://zhaopin.baidu.com/api/quanzhiasync?sort_type=1&detailmode=close&rn=20&pn=";

        for (String query : brr) {
            System.err.println(query); // progress: current keyword
            for (String city : srr) {
                // Offsets 0, 20, ..., 740: up to 38 pages of 20 postings per city and keyword
                for (int j = 0; j <= 740; j += 20) {
                    try {
                        // Percent-encode the parameters: unencoded, "C++" is decoded as "C" plus
                        // two spaces, "C#" cuts the URL off at "#", and Chinese city names must
                        // be sent as UTF-8 bytes (e.g. 杭州 -> city=%E6%9D%AD%E5%B7%9E)
                        String url = urlX + j
                                + "&city=" + URLEncoder.encode(city, "UTF-8")
                                + "&query=" + URLEncoder.encode(query, "UTF-8");
                        String json = jsonJX(loadJSON(url));
                        JSONArray array = new JSONArray(json); // the postings on this page
                        for (int i = 0; i < array.length(); i++) {
                            insert(array.getJSONObject(i));
                        }
                    } catch (Exception e) {
                        System.err.println("error: query=" + query + ", city=" + city + ", pn=" + j + ": " + e.getMessage());
                    }
                }
            }
        }
    }

    // Database storage: save one job posting in the Baidu table
    public static void insert(JSONObject job) {
        String sql = "insert into Baidu (jobfirstclass,joblink,experience,education,employertype,ori_city,salary,title) VALUES(?,?,?,?,?,?,?,?)";
        try {
            Object[] params = {
                    job.getString("jobfirstclass"),
                    job.getString("joblink"),
                    job.getString("experience"),
                    job.getString("education"),
                    job.getString("employertype"),
                    job.getString("ori_city"),
                    job.getString("salary"),
                    job.getString("title")
            };
            QueryRunner qr = new QueryRunner(DBUtils.getDataSource());
            qr.update(sql, params);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    // Baidu JSON analysis: the postings are nested under data -> main -> data -> disp_data.
    // get(...).toString() works whether each level is a nested object or a JSON-encoded string.
    public static String jsonJX(String json) throws JSONException {
        JSONObject root = new JSONObject(json);
        String main = new JSONObject(root.get("data").toString()).get("main").toString();
        String data = new JSONObject(main).get("data").toString();
        String dispData = new JSONObject(data).get("disp_data").toString();
        System.out.println(dispData);
        return dispData;
    }

    // Get JSON data: return the response body, or an empty string if the request fails
    public static String loadJSON(String url) {
        StringBuilder json = new StringBuilder();
        try {
            URLConnection conn = new URL(url).openConnection();
            try (BufferedReader in = new BufferedReader(
                    new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
                String inputLine;
                while ((inputLine = in.readLine()) != null) {
                    json.append(inputLine);
                }
            }
        } catch (IOException e) { // also covers MalformedURLException
            System.err.println("request failed: " + url + " (" + e.getMessage() + ")");
        }
        return json.toString();
    }
}
```
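The request URL is assembled by string concatenation, so every parameter has to be percent-encoded, and the inner loop always requests offsets 0 to 740 even when a city has only a handful of postings. A minimal sketch of both ideas, assuming the API returns an empty page once the offset passes the last result (not verified against Baidu). `CrawlUrls`, `buildUrl` and `crawlAllPages` are hypothetical names; the page fetcher is passed in as a function so the paging logic can run without the network. `URLEncoder.encode(String, Charset)` needs Java 10 or later.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.function.IntFunction;

public class CrawlUrls {

    // Same base URL as GetDate; pn (the result offset) is appended first
    static final String BASE =
            "http://zhaopin.baidu.com/api/quanzhiasync?sort_type=1&detailmode=close&rn=20&pn=";

    // Build the URL for one page, percent-encoding the free-text parameters
    public static String buildUrl(int offset, String city, String query) {
        return BASE + offset
                + "&city=" + URLEncoder.encode(city, StandardCharsets.UTF_8)
                + "&query=" + URLEncoder.encode(query, StandardCharsets.UTF_8);
    }

    // Fetch pages at offsets 0, pageSize, 2*pageSize, ... up to maxOffset, stopping at
    // the first empty page (assumed to mean there are no more results)
    public static <T> List<T> crawlAllPages(IntFunction<List<T>> fetchPage, int pageSize, int maxOffset) {
        List<T> all = new ArrayList<>();
        for (int offset = 0; offset <= maxOffset; offset += pageSize) {
            List<T> page = fetchPage.apply(offset);
            if (page.isEmpty()) {
                break;
            }
            all.addAll(page);
        }
        return all;
    }

    public static void main(String[] args) {
        // ...&pn=0&city=Xi%27an&query=C%2B%2B
        System.out.println(buildUrl(0, "Xi'an", "C++"));
        // Fake fetcher standing in for loadJSON + jsonJX: 45 postings, 20 per page
        List<Integer> ids = crawlAllPages(offset -> {
            List<Integer> page = new ArrayList<>();
            for (int i = offset; i < Math.min(offset + 20, 45); i++) {
                page.add(i);
            }
            return page;
        }, 20, 740);
        System.out.println(ids.size()); // 45: pages at 0, 20 and 40, then an empty page at 60
    }
}
```

In `GetDate.main`, the `for (int j ...)` loop could then become a single `crawlAllPages` call whose fetcher runs `loadJSON` and `jsonJX` for the given offset, which saves dozens of requests per sparse city and keyword.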

Database utility class (c3p0 connection pool)

```java
import java.beans.PropertyVetoException;
import java.sql.Connection;
import java.sql.SQLException;

import javax.sql.DataSource;

import com.mchange.v2.c3p0.ComboPooledDataSource;

public class DBUtils {

    // c3p0 connection pool shared by the whole application
    private static final ComboPooledDataSource dataSource = new ComboPooledDataSource();

    static {
        // The four basic parameters: driver class, JDBC URL, user, password
        try {
            // MySQL Connector/J 5.x driver class; Connector/J 8.x uses com.mysql.cj.jdbc.Driver
            dataSource.setDriverClass("com.mysql.jdbc.Driver");
        } catch (PropertyVetoException e) {
            e.printStackTrace();
        }
        dataSource.setJdbcUrl("jdbc:mysql://localhost:3306/stone?useUnicode=true&characterEncoding=UTF-8");
        dataSource.setUser("root");
        dataSource.setPassword("admin");

        // Pool configuration
        dataSource.setAcquireIncrement(5); // connections added each time the pool grows
        dataSource.setInitialPoolSize(20); // connections created at startup
        dataSource.setMinPoolSize(2);      // minimum number of connections
        dataSource.setMaxPoolSize(50);     // maximum number of connections
    }

    /**
     * Get a database connection from the pool.
     *
     * @return a pooled connection; closing it returns it to the pool
     * @throws SQLException if no connection can be obtained
     */
    public static Connection getConnection() throws SQLException {
        return dataSource.getConnection();
    }

    /**
     * Get the c3p0 connection pool object.
     *
     * @return the shared DataSource
     */
    public static DataSource getDataSource() {
        return dataSource;
    }
}
```
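`DBUtils` hard-codes the database user and password in source. One option, sketched here as an assumption rather than part of the original project, is to read them from a properties file on the classpath. The file name `db.properties`, the keys `jdbc.url`, `jdbc.user` and `jdbc.password`, and the `DbConfig` class are all hypothetical; missing keys fall back to the values used above.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.Properties;

public class DbConfig {

    public final String url;
    public final String user;
    public final String password;

    private DbConfig(String url, String user, String password) {
        this.url = url;
        this.user = user;
        this.password = password;
    }

    // Parse settings from properties text; missing keys fall back to the DBUtils literals
    public static DbConfig load(Reader source) {
        Properties props = new Properties();
        try {
            props.load(source);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new DbConfig(
                props.getProperty("jdbc.url",
                        "jdbc:mysql://localhost:3306/stone?useUnicode=true&characterEncoding=UTF-8"),
                props.getProperty("jdbc.user", "root"),
                props.getProperty("jdbc.password", "admin"));
    }

    // Load a properties file from the classpath, e.g. "/db.properties" (hypothetical name)
    public static DbConfig fromClasspath(String resource) {
        try (InputStream in = DbConfig.class.getResourceAsStream(resource)) {
            if (in == null) {
                throw new IllegalStateException(resource + " not found on the classpath");
            }
            return load(new InputStreamReader(in, StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        DbConfig cfg = load(new StringReader("jdbc.user=crawler\njdbc.password=secret\n"));
        System.out.println(cfg.user + " @ " + cfg.url);
    }
}
```

Inside the `DBUtils` static block, `DbConfig cfg = DbConfig.fromClasspath("/db.properties");` followed by `dataSource.setJdbcUrl(cfg.url)`, `dataSource.setUser(cfg.user)` and `dataSource.setPassword(cfg.password)` would replace the string literals. Both methods throw only unchecked exceptions, so they can be called from a static initializer without extra `try` blocks.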

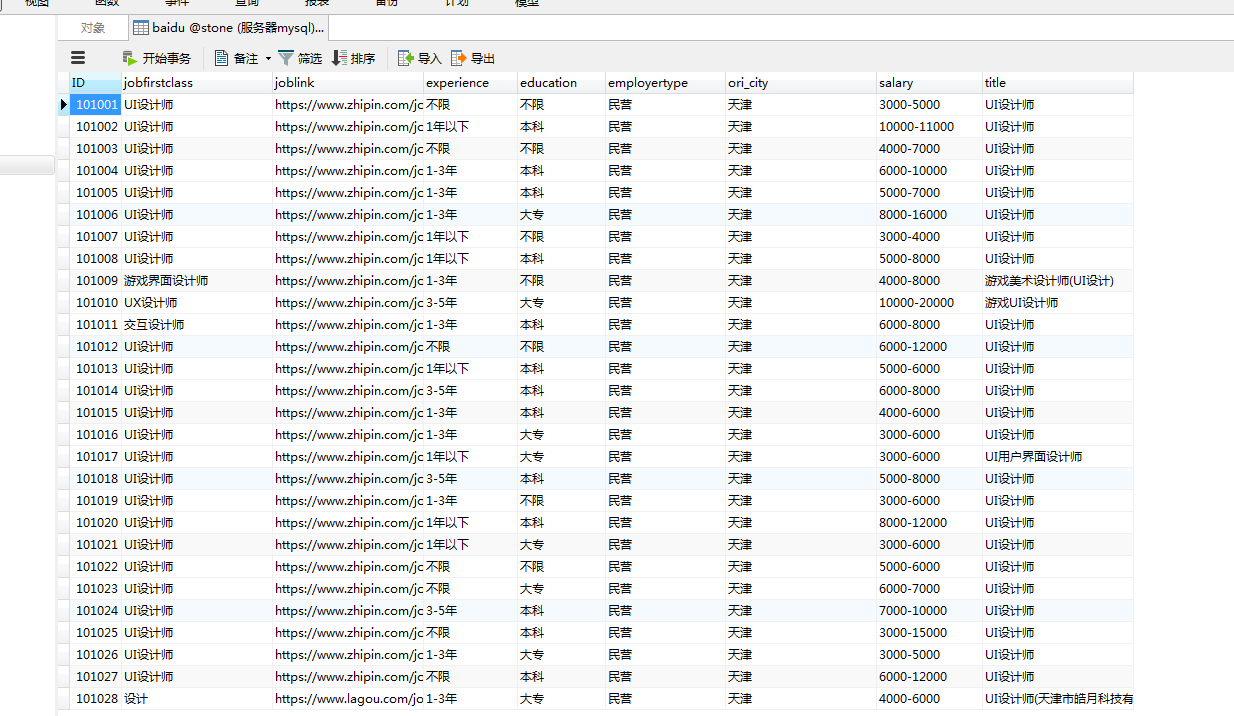

Then I'll show you the effect:

Some data could not be crawled, probably because of Baidu's anti-crawling mechanism.
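If the gaps come from rate limiting, slowing down and retrying may recover some of the missing pages. A minimal sketch of a retry helper with exponential backoff, assuming throttling is the cause (the post only guesses at it). `Retry.withBackoff` is a hypothetical helper, and the attempt count and delays are illustrative, not tuned for Baidu; it could wrap the `jsonJX(loadJSON(url))` call for one page, since that call throws when a blocked request comes back empty.

```java
import java.util.concurrent.Callable;

public class Retry {

    // Call task up to maxAttempts times, sleeping baseDelayMillis, then 2x, 4x, ...
    // between failed attempts; rethrows the last failure if every attempt fails.
    public static <T> T withBackoff(Callable<T> task, int maxAttempts, long baseDelayMillis) throws Exception {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        Exception last = null;
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            try {
                return task.call();
            } catch (Exception e) {
                last = e;
                if (attempt < maxAttempts - 1) {
                    Thread.sleep(baseDelayMillis << attempt); // exponential backoff
                }
            }
        }
        throw last;
    }

    public static void main(String[] args) throws Exception {
        int[] calls = {0};
        // Simulated request that fails twice (e.g. an empty, blocked response) then succeeds
        String result = withBackoff(() -> {
            calls[0]++;
            if (calls[0] < 3) {
                throw new IllegalStateException("empty response");
            }
            return "page";
        }, 5, 10);
        System.out.println(result + " after " + calls[0] + " attempts"); // page after 3 attempts
    }
}
```

A fixed pause between successful requests (for example a short `Thread.sleep` at the end of each page) is a complementary, gentler option, at the cost of a slower crawl.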

Copyright belongs to the author. Please keep the link to the original article when reprinting.