1. Development of deep learning

1.1 Turing test

The Turing test was proposed by Alan Mathison Turing. A tester is separated from the subjects (a person and a machine) and can ask them arbitrary questions through some device (such as a keyboard). If, over multiple tests, the machine leads the testers to misjudge it more than 30% of the time on average, the machine passes the test and is considered to have human intelligence. The Turing test comes from the paper "Computing Machinery and Intelligence", published in 1950 by Turing, a pioneer of computer science and cryptography. The 30% figure was Turing's prediction of what machines would be able to do by the year 2000. At present, we are still far from this prediction.

1.2 Hierarchical information processing

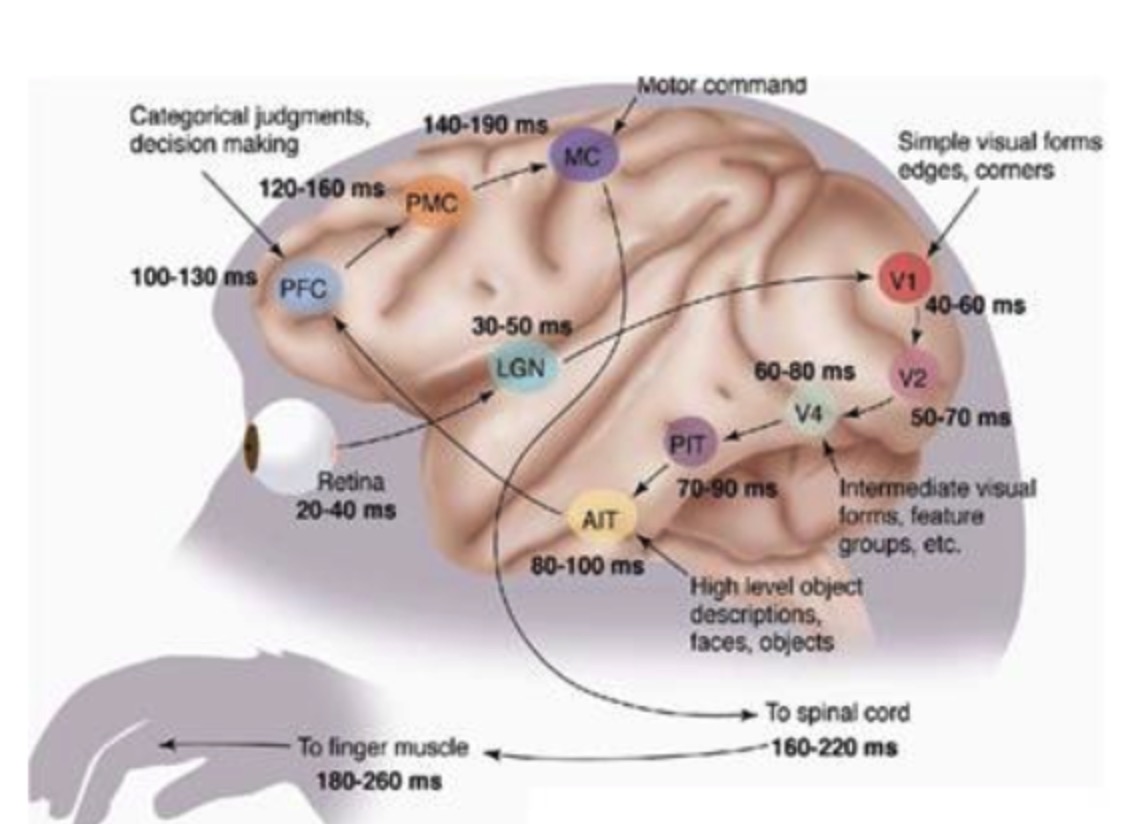

Brain cognition proceeds in three stages: (1) edge features, (2) basic shapes and local features of the target, (3) the whole target.

High-level features are combinations of low-level features. From low level to high level, the feature representation becomes increasingly abstract and conceptual; in other words, it can express more and more semantics or intent. Starting from the retina, edge features are extracted in the low-level V1 area; the V2 area then captures basic shapes or parts of the target; higher areas represent the whole target (such as a face); and at the highest level the PFC (prefrontal cortex) performs classification and judgment.

The following is a schematic diagram of how neurons work in the human brain:

1.3 Deep learning

Deep learning forms more abstract high-level features (or attribute categories) by combining low-level features.
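As a toy illustration of combining low-level features into a higher-level one, the Python sketch below runs two hand-made edge detectors over a single row of pixels and then combines their outputs into a "bar" detector. The kernels, the `bar_detector` helper and the sample row are all hypothetical; in a real deep network the filters are learned from data rather than designed by hand.

```python
def conv1d(signal, kernel):
    """Valid 1-D correlation: slide the kernel over the signal."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def relu(values):
    """Keep positive responses, zero out the rest."""
    return [max(0, v) for v in values]


def bar_detector(rising, falling, width):
    """Higher-level feature: fires where a rising edge is followed,
    `width` positions later, by a falling edge."""
    return [min(rising[i], falling[i + width])
            for i in range(len(rising) - width)]


# Hypothetical 1-D "image": a bright bar (1s) on a dark background (0s)
row = [0, 0, 1, 1, 1, 0, 0]

# Level 1: low-level edge features
rising = relu(conv1d(row, [-1, 1]))   # dark -> bright transitions
falling = relu(conv1d(row, [1, -1]))  # bright -> dark transitions

# Level 2: combine the two edge maps into a "bar of width 3" feature
bars = bar_detector(rising, falling, 3)
print(bars)  # [0, 1, 0]
```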

2. Machine learning

2.1 Definition

Machine learning refers to the ability of computers to learn from large data sets rather than from hard-coded rules.

Machine learning allows computers to learn by themselves. This approach makes use of the processing power of modern computers and can easily handle large data sets. Machine learning is a subset of artificial intelligence; more specifically, it is one technique for realizing AI: a way of training algorithms (models) so that computers learn how to make decisions. In a sense, a machine learning program adjusts itself according to the data it is exposed to.
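To make "learning from data instead of hard-coding rules" concrete, here is a minimal Python sketch: instead of writing a pricing rule by hand, it estimates the slope and intercept of a line from example data with ordinary least squares. The `fit_line` helper and the area/price numbers are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = w * x + b, estimated from the data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


# Hypothetical examples: house area (m^2) -> price; the rule is never written down
areas = [50, 60, 80, 100]
prices = [110, 130, 170, 210]

w, b = fit_line(areas, prices)
predicted = w * 90 + b  # apply the learned rule to an unseen input
print(w, b, predicted)  # 2.0 10.0 190.0
```

If the examples change, the learned `w` and `b` change with them, which is the "adjusts itself according to the data" behaviour described above.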

2.2 Types

Supervised learning: requires a data set in which each input is labeled with its expected output, for example a weather-forecasting AI.
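A minimal sketch of supervised learning, using k-nearest neighbours as a simple stand-in technique (not one prescribed by this article): every training example carries its expected output (label), and a new input is labeled by majority vote among the closest examples. The weather data and the `knn_predict` helper are hypothetical; `math.dist` requires Python 3.8 or later.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Label `query` by majority vote among the k nearest labeled examples."""
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


# Hypothetical labeled data: (temperature in C, humidity in %) -> weather label
train = [
    ((30, 20), "sunny"), ((28, 25), "sunny"), ((31, 30), "sunny"),
    ((18, 90), "rainy"), ((16, 85), "rainy"), ((20, 95), "rainy"),
]

print(knn_predict(train, (29, 22)))  # sunny
print(knn_predict(train, (17, 88)))  # rainy
```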

Unsupervised learning: the algorithm learns from information that is neither classified nor labeled and operates on it without guidance, for example Amazon's e-commerce behavior-prediction AI.
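For contrast, a minimal unsupervised sketch: k-means clustering (again a standard technique swapped in for illustration) groups unlabeled values without being told what the groups mean. The shopping-time data and the `kmeans_1d` helper are hypothetical.

```python
def kmeans_1d(values, centers, iterations=10):
    """Plain k-means on 1-D data; `centers` are the initial cluster centers."""
    centers = list(centers)
    clusters = []
    for _ in range(iterations):
        # Assign each value to its nearest center
        clusters = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Move each center to the mean of its cluster (keep it if the cluster is empty)
        centers = [sum(c) / len(c) if c else centers[i]
                   for i, c in enumerate(clusters)]
    return centers, clusters


# Hypothetical unlabeled data: minutes each visitor spent on a shopping site
minutes = [1, 2, 2, 3, 20, 22, 25]
centers, clusters = kmeans_1d(minutes, centers=[0, 10])
print(clusters)  # [[1, 2, 2, 3], [20, 22, 25]]
```

No labels are given; the algorithm discovers a "quick visit" group and a "long visit" group on its own.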

3. Neural network

3.1 A first look at neural networks

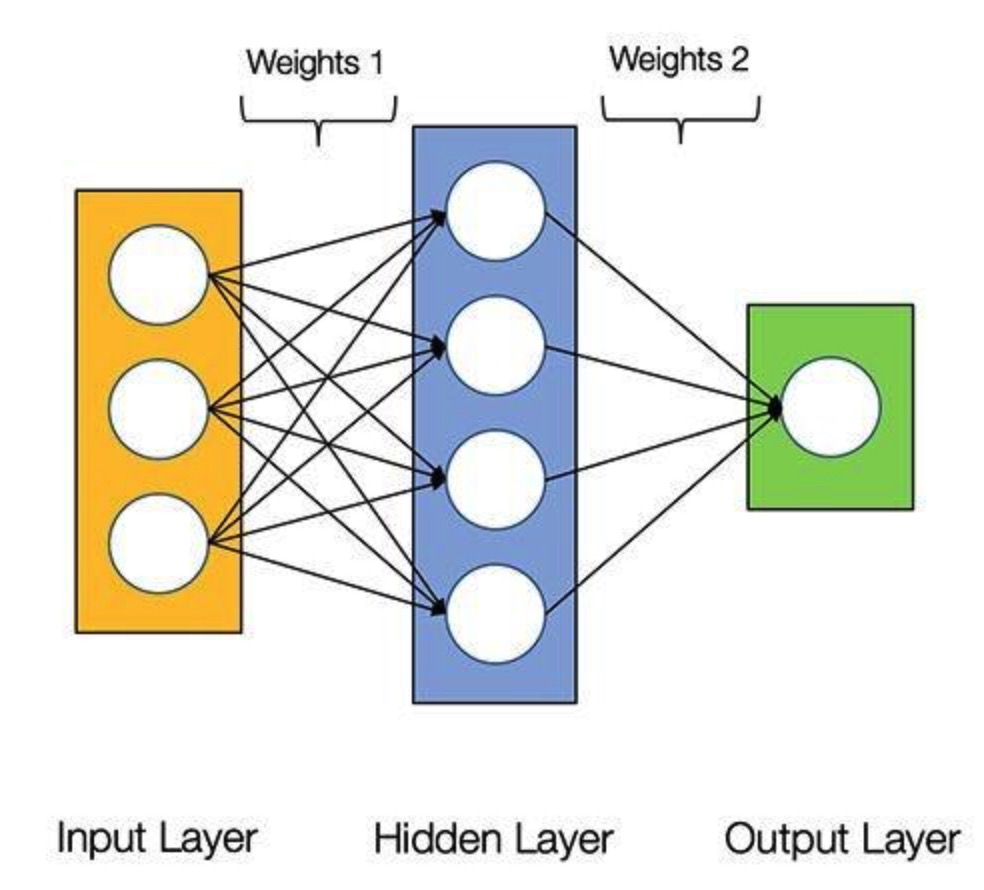

A neural network is a set of algorithms, loosely modeled on the human brain, for recognizing patterns. The term "neural network" comes from the inspiration behind the architecture of these systems: they mimic the basic structure of the brain's own biological neural networks so that computers can perform specific tasks. The "AI price evaluation" model is likewise composed of neurons (the circles), and these neurons are interconnected.

Neurons are organized into three types of layers:

- Input layer: receives the input data and passes it to the first hidden layer.
- Hidden layers: perform mathematical computations on the input data. One of the challenges in designing a neural network is choosing the number of hidden layers and the number of neurons in each layer.
- Output layer: the last layer of neurons in the artificial neural network; its main function is to produce the program's output.

Each connection between neurons has a weight, which represents the importance of the input value. What the model does is learn how much each element contributes to the price; these "contributions" are the model's weights. The larger a feature's weight (in magnitude), the more important that feature is relative to the others. Each neuron also has an activation function, which maps the neuron's input to its output. When a set of input data passes through all the layers of the network, the final result is returned through the output layer.
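The weighted-sum-plus-activation computation described above can be sketched in a few lines of Python. The layer sizes, weights, biases and the choice of ReLU for the hidden layer are illustrative assumptions, not values from the "AI price evaluation" model.

```python
def relu(z):
    """Activation function: pass positive values, clip negatives to 0."""
    return max(0.0, z)


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron computes activation(sum(w * x) + b)."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


# Hypothetical network: 2 inputs -> 2 hidden neurons (ReLU) -> 1 output neuron (identity)
x = [1.0, 2.0]
hidden = dense(x, weights=[[0.5, -1.0], [1.0, 1.0]], biases=[0.0, 0.5], activation=relu)
output = dense(hidden, weights=[[2.0, 1.0]], biases=[0.1], activation=lambda z: z)
print(hidden, output)  # hidden is [0.0, 3.5]; output is approximately [3.6]
```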

Training an AI model requires feeding it an input data set (a collection of data that can be accessed item by item, in combination, or as a whole). The model's outputs must then be compared with the expected outputs recorded in the data set.
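One of the simplest ways to compare a model's output with the data set and learn from the difference is the classic perceptron learning rule, used here as an illustrative stand-in (the PaddlePaddle example later in this article trains with a gradient-based optimizer instead). The sketch learns the logical AND function; the data, learning rate and number of epochs are assumptions.

```python
def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum plus bias is positive, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(data, epochs=20, lr=1):
    """Perceptron rule: nudge each weight by lr * (expected - actual) * input."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for x, target in data:
            error = target - predict(weights, bias, x)  # compare output with the label
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Training data for logical AND: (inputs, expected output)
and_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_data)
outputs = [predict(weights, bias, x) for x, _ in and_data]
print(outputs)  # [0, 0, 0, 1]
```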

3.2 Neural network example: handwritten digit classification with PaddlePaddle

Data set: MNIST, which contains 60,000 training images and 10,000 test images. Each sample consists of an image and a label: the image is a 28 × 28 pixel matrix, and the label is a digit from 0 to 9.
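Before an image reaches a network, its 28 × 28 pixel matrix is typically flattened into a vector of 28 × 28 = 784 numbers (the size of the data layer in Step 2) and the gray values are rescaled. The sketch below assumes scaling from 0-255 to [-1, 1], and also shows a one-hot encoding of the 0-9 label purely for illustration; the Paddle code in this article passes the label as an integer class ID instead. The helper names are hypothetical.

```python
ROWS, COLS = 28, 28


def flatten_and_normalize(image):
    """Turn a 28x28 grid of 0-255 gray values into 784 floats in [-1, 1] (assumed scaling)."""
    if len(image) != ROWS or any(len(row) != COLS for row in image):
        raise ValueError("expected a 28x28 image")
    return [pixel / 255.0 * 2.0 - 1.0 for row in image for pixel in row]


def one_hot(label, num_classes=10):
    """Encode a digit label 0-9 as a 10-element indicator vector."""
    return [1 if i == label else 0 for i in range(num_classes)]


# Hypothetical all-black image with one white pixel at row 0, column 1
image = [[0] * COLS for _ in range(ROWS)]
image[0][1] = 255
vector = flatten_and_normalize(image)
print(len(vector), vector[0], vector[1])  # 784 -1.0 1.0
print(one_hot(3))  # [0, 0, 0, 1, 0, 0, 0, 0, 0, 0]
```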

The data set is small, which makes it well suited as an introduction to image recognition. It consists of four files: the training images and their labels, and the test images and their labels, as shown in the table:

| File name | Size (gzip-compressed) | Description |
|---|---|---|
| train-images-idx3-ubyte | 9.9M | Training images, 60,000 samples |
| train-labels-idx1-ubyte | 28.9K | Training labels, 60,000 samples |
| t10k-images-idx3-ubyte | 1.6M | Test images, 10,000 samples |
| t10k-labels-idx1-ubyte | 4.5K | Test labels, 10,000 samples |
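The four files use the IDX binary format: a header of big-endian 32-bit unsigned integers (a magic number, 2051 for image files and 2049 for label files, then the item count and, for images, the row and column counts), followed by one unsigned byte per pixel or label. Paddle's `paddle.dataset.mnist` readers handle these files for you; this standalone sketch, with hypothetical helper names, only shows what is inside them.

```python
import gzip
import struct


def parse_idx_images(data):
    """Parse an uncompressed IDX3 image file: magic 2051, count, rows, cols, then uint8 pixels."""
    magic, count, rows, cols = struct.unpack(">IIII", data[:16])
    if magic != 2051:
        raise ValueError("not an IDX image file (magic %d)" % magic)
    size = rows * cols
    pixels = data[16:16 + count * size]
    images = [list(pixels[i * size:(i + 1) * size]) for i in range(count)]
    return images, rows, cols


def parse_idx_labels(data):
    """Parse an uncompressed IDX1 label file: magic 2049, count, then one uint8 label each."""
    magic, count = struct.unpack(">II", data[:8])
    if magic != 2049:
        raise ValueError("not an IDX label file (magic %d)" % magic)
    return list(data[8:8 + count])


def load_mnist(images_path, labels_path):
    """Load a gzipped image/label pair, e.g. train-images-idx3-ubyte.gz and its labels."""
    with gzip.open(images_path, "rb") as f:
        images, _, _ = parse_idx_images(f.read())
    with gzip.open(labels_path, "rb") as f:
        labels = parse_idx_labels(f.read())
    return images, labels
```

Each image comes back as a flat list of 784 integers from 0 to 255, in row-major order.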

Task: classify grayscale images of handwritten digits into 10 categories.

The official PaddlePaddle tutorial provides three classifiers: Softmax regression, a multilayer perceptron, and the convolutional neural network LeNet-5. Convolutional neural networks are widely used in image recognition, and LeNet-5 is the classifier used in the steps below.
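Both softmax regression and the output layer of LeNet-5 (`act=paddle.activation.Softmax()` in Step 2) turn 10 raw scores into 10 class probabilities with the softmax function; the predicted digit is the most probable class, and training typically minimizes a cross-entropy loss. A minimal Python sketch with hypothetical scores:

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1 (shift by the max for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, label):
    """Loss: negative log of the probability assigned to the correct class."""
    return -math.log(probs[label])


# Hypothetical scores for digit classes 0-9; class 7 has the highest score
logits = [0.1, 0.0, 0.3, 0.2, 0.0, 0.1, 0.0, 2.5, 0.4, 0.0]
probs = softmax(logits)
predicted = max(range(10), key=lambda i: probs[i])
loss = cross_entropy(probs, 7)
print(predicted)  # 7
```

The loss shrinks toward 0 as the probability of the correct class approaches 1.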

Step 1: prepare the data

After launching an environment in Baidu AI Studio, you can open the Notebook interface and run the program directly, with no need to try it out in another editor first.

Import the dependencies and create the data readers:

```python
# Import the required packages
import os
import sys

import numpy as np
# The steps below use the legacy paddle.v2 API, which requires an older
# PaddlePaddle release that still includes paddle.v2
import paddle.v2 as paddle
from PIL import Image
import matplotlib.pyplot as plt

# paddle.dataset.mnist.train() and test() already convert the images to grayscale,
# normalize them and center them for us.
train_reader = paddle.batch(
    paddle.reader.shuffle(paddle.dataset.mnist.train(), buf_size=512),
    batch_size=128)
test_reader = paddle.batch(paddle.dataset.mnist.test(), batch_size=128)
```
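In this API a reader is a function that returns a generator of samples, and `paddle.reader.shuffle` and `paddle.batch` wrap one reader in another. The standalone sketch below models that idea with hypothetical helpers `buffered_shuffle` and `make_batches`; it is a simplified illustration of the behaviour, not Paddle's implementation (for example, this version always keeps a final partial batch).

```python
import random


def buffered_shuffle(reader, buf_size, rng=random):
    """Fill a buffer of up to buf_size samples, shuffle it, emit it, and repeat."""
    def shuffled_reader():
        buf = []
        for sample in reader():
            buf.append(sample)
            if len(buf) >= buf_size:
                rng.shuffle(buf)
                yield from buf
                buf = []
        if buf:  # leftover samples at the end of the data
            rng.shuffle(buf)
            yield from buf
    return shuffled_reader


def make_batches(reader, batch_size):
    """Group consecutive samples into lists of batch_size; the last list may be shorter."""
    def batched_reader():
        batch = []
        for sample in reader():
            batch.append(sample)
            if len(batch) == batch_size:
                yield batch
                batch = []
        if batch:
            yield batch
    return batched_reader


def toy_reader():
    """Hypothetical reader yielding 10 (pixels, label) samples."""
    for i in range(10):
        yield ([0.0] * 784, i)


batched = make_batches(buffered_shuffle(toy_reader, buf_size=4, rng=random.Random(0)), batch_size=3)
train_batches = list(batched())
print([len(b) for b in train_batches])  # [3, 3, 3, 1]
```

Because only `buf_size` samples are shuffled at a time, a larger buffer (such as the 20000 used in Step 5) gives a more thorough shuffle at the cost of memory.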

Step 2: define the neural network (the convolutional neural network LeNet-5)

```python
# coding=utf-8
import paddle.v2 as paddle


# Convolutional neural network LeNet-5, used as the classifier
def convolutional_neural_network():
    # Input data: a 28 * 28 image flattened into 784 values
    img = paddle.layer.data(name="pixel", type=paddle.data_type.dense_vector(784))
    # First convolution + pooling layer
    conv_pool_1 = paddle.networks.simple_img_conv_pool(
        input=img, filter_size=5, num_filters=20, num_channel=1,
        pool_size=2, pool_stride=2, act=paddle.activation.Relu())
    # Second convolution + pooling layer
    conv_pool_2 = paddle.networks.simple_img_conv_pool(
        input=conv_pool_1, filter_size=5, num_filters=50, num_channel=20,
        pool_size=2, pool_stride=2, act=paddle.activation.Relu())
    # Fully connected output layer with softmax activation; its size must be 10 (one per digit)
    predict = paddle.layer.fc(input=conv_pool_2, size=10, act=paddle.activation.Softmax())
    return predict
```
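It helps to check the sizes this network produces. The plain-Python sketch below does the arithmetic for the two conv-pool layers and the output layer, assuming a convolution stride of 1 with no padding (our assumption about the defaults of `simple_img_conv_pool`) and one bias per filter or output neuron. Under those assumptions the image shrinks 28 -> 24 -> 12 -> 8 -> 4, so the fully connected layer sees 4 * 4 * 50 = 800 features.

```python
def conv_output_size(size, filter_size, stride=1, padding=0):
    """Output side length of a convolution."""
    return (size + 2 * padding - filter_size) // stride + 1


def pool_output_size(size, pool_size, pool_stride):
    """Output side length of a pooling layer (no padding)."""
    return (size - pool_size) // pool_stride + 1


side, channels, params = 28, 1, 0
for num_filters in (20, 50):  # the two conv-pool layers defined in Step 2
    side = conv_output_size(side, filter_size=5)               # 28 -> 24, then 12 -> 8
    params += num_filters * (5 * 5 * channels) + num_filters   # kernel weights + biases
    side = pool_output_size(side, pool_size=2, pool_stride=2)  # 24 -> 12, then 8 -> 4
    channels = num_filters

features = side * side * channels  # inputs to the fully connected layer
params += features * 10 + 10       # fc layer: weights + biases for 10 outputs
print(side, channels, features, params)  # 4 50 800 33580
```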

Step 3: initialize paddle

```python
class TestMNIST:
    def __init__(self):
        # Run on the CPU with 2 trainers
        paddle.init(use_gpu=False, trainer_count=2)
```

Step 4: get the trainer

```python
    # ***************** Create the trainer *****************
    def get_trainer(self):
        # Build the classifier
        out = convolutional_neural_network()
        # Label layer: an integer class ID in [0, 10)
        label = paddle.layer.data(name="label", type=paddle.data_type.integer_value(10))
        # Loss function
        cost = paddle.layer.classification_cost(input=out, label=label)
        # Create the trainable parameters from the network topology
        parameters = paddle.parameters.create(layers=cost)
        # Optimization method:
        #   learning_rate   step size of each update
        #   momentum        fraction of the previous update that is kept
        #   regularization  L2 regularization, to reduce overfitting
        optimizer = paddle.optimizer.Momentum(
            learning_rate=0.1 / 128.0,
            momentum=0.9,
            regularization=paddle.optimizer.L2Regularization(rate=0.0005 * 128))
        # Create the trainer:
        #   cost             loss function
        #   parameters       training parameters (newly created, or previously trained ones)
        #   update_equation  optimization method
        trainer = paddle.trainer.SGD(cost=cost, parameters=parameters, update_equation=optimizer)
        return trainer
```
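The `Momentum` optimizer configured in this step combines a velocity term with L2 regularization. A common textbook form of that update is sketched below in standalone Python; Paddle's internal formula may differ in details (for example, how the learning rate and regularization are scaled), so treat it as an illustration rather than Paddle's implementation. The `momentum_step` and `minimize` helpers and the toy objective (w - 3)^2 are hypothetical.

```python
def momentum_step(params, grads, velocity, learning_rate, momentum, l2_rate):
    """One momentum SGD step with L2 regularization (textbook form)."""
    new_params, new_velocity = [], []
    for w, g, v in zip(params, grads, velocity):
        g = g + l2_rate * w                   # L2 term: gradient of (l2_rate / 2) * w**2
        v = momentum * v - learning_rate * g  # keep a fraction of the previous step
        new_velocity.append(v)
        new_params.append(w + v)
    return new_params, new_velocity


def minimize(l2_rate, steps=500):
    """Minimize (w - 3)**2, whose gradient is 2 * (w - 3), starting from w = 0."""
    params, velocity = [0.0], [0.0]
    for _ in range(steps):
        grads = [2.0 * (params[0] - 3.0)]
        params, velocity = momentum_step(params, grads, velocity,
                                         learning_rate=0.1, momentum=0.9, l2_rate=l2_rate)
    return params[0]


print(minimize(l2_rate=0.0))  # close to 3.0
print(minimize(l2_rate=0.1))  # close to 6 / 2.1: the L2 term pulls the weight toward 0
```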

Step 5: start training

```python
    # ***************** Start training *****************
    def start_trainer(self):
        # Create the trainer
        trainer = self.get_trainer()
        # Collect (pass_id, test cost, classification error) after each pass
        lists = []

        # Training event handler
        def event_handler(event):
            if isinstance(event, paddle.event.EndIteration):
                if event.batch_id % 100 == 0:
                    print("\nPass %d, Batch %d, Cost %f, %s" % (
                        event.pass_id, event.batch_id, event.cost, event.metrics))
                else:
                    sys.stdout.write('.')
                    sys.stdout.flush()
            if isinstance(event, paddle.event.EndPass):
                # Save the trained parameters
                model_path = '../model'
                if not os.path.exists(model_path):
                    os.makedirs(model_path)
                with open(model_path + "/model.tar", 'wb') as f:
                    trainer.save_parameter_to_tar(f=f)
                # Evaluate on the test set
                result = trainer.test(reader=paddle.batch(paddle.dataset.mnist.test(), batch_size=128))
                print("\nTest with Pass %d, Cost %f, %s\n" % (event.pass_id, result.cost, result.metrics))
                lists.append((event.pass_id, result.cost,
                              result.metrics['classification_error_evaluator']))

        # Training data reader
        reader = paddle.batch(
            paddle.reader.shuffle(paddle.dataset.mnist.train(), buf_size=20000),
            batch_size=128)
        # Start training:
        #   reader         training data
        #   num_passes     number of training passes
        #   event_handler  callback that decides what to do during training
        trainer.train(reader=reader, num_passes=100, event_handler=event_handler)
```
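The event handler reports `result.metrics['classification_error_evaluator']`. Assuming this is the usual classification error rate (the fraction of samples whose most probable class is not the true label, i.e. 1 minus accuracy), it can be computed as in this standalone sketch; the softmax outputs are hypothetical and use 3 classes instead of 10 for readability.

```python
def classification_error(probabilities, labels):
    """Fraction of samples whose highest-probability class differs from the true label."""
    wrong = 0
    for probs, label in zip(probabilities, labels):
        predicted = max(range(len(probs)), key=lambda i: probs[i])
        if predicted != label:
            wrong += 1
    return wrong / len(labels)


# Hypothetical softmax outputs for 4 samples over 3 classes
probabilities = [
    [0.7, 0.2, 0.1],  # predicts 0
    [0.1, 0.8, 0.1],  # predicts 1
    [0.3, 0.3, 0.4],  # predicts 2
    [0.5, 0.4, 0.1],  # predicts 0
]
labels = [0, 1, 1, 0]
error = classification_error(probabilities, labels)
print(error)  # 0.25 (1 wrong out of 4)
```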

Main function:

```python
if __name__ == "__main__":
    testMNIST = TestMNIST()
    # Start training
    testMNIST.start_trainer()
```
