AVFoundation learning notes: audio and video editing

Reading and writing media data

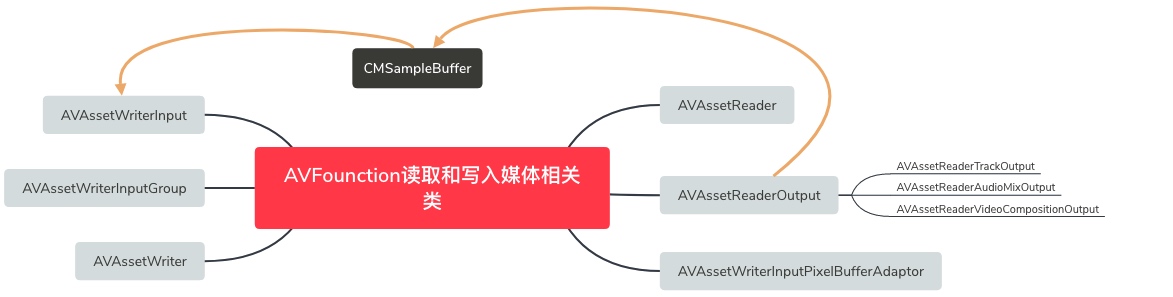

- AVAssetReader

AVAssetReader reads media samples from an AVAsset instance. It is usually configured with one or more AVAssetReaderOutput instances, and audio samples and video frames are pulled from each output with the copyNextSampleBuffer method. An AVAssetReader works with only one asset at a time.
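In Swift, the same pull pattern can be wrapped in a sequence so that a for-in loop replaces the manual while loop. This is an illustration, not part of AVFoundation. PullSampleOutput, samples(from:) and FakeFrameOutput are hypothetical names, and the fake output returns integers instead of CMSampleBuffers so the sketch runs without a media file.

```swift
// Hypothetical stand-in for AVAssetReaderOutput: the real class exposes
// copyNextSampleBuffer() -> CMSampleBuffer?, which returns nil when done.
protocol PullSampleOutput {
    associatedtype Sample
    func copyNextSample() -> Sample?
}

// Wraps any pull-style output in a Swift sequence so callers can use for-in.
// Iteration stops at the first nil returned by the output.
func samples<Output: PullSampleOutput>(from output: Output) -> AnyIterator<Output.Sample> {
    AnyIterator { output.copyNextSample() }
}

// Toy output that hands out frame numbers 0..<count, then nil.
final class FakeFrameOutput: PullSampleOutput {
    private var next = 0
    private let count: Int

    init(count: Int) { self.count = count }

    func copyNextSample() -> Int? {
        guard next < count else { return nil }
        defer { next += 1 }
        return next
    }
}

var frameNumbers: [Int] = []
for frame in samples(from: FakeFrameOutput(count: 3)) {
    frameNumbers.append(frame)
}
print(frameNumbers) // [0, 1, 2]
```

With a real reader, the closure would be AnyIterator { trackOutput.copyNextSampleBuffer() }. Swift manages the memory of the returned CMSampleBuffer, so the CFRelease call needed in Objective-C goes away.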

- AVAssetWriter

AVAssetWriter encodes media and writes it to a container file. It is configured with one or more AVAssetWriterInput objects, and you append CMSampleBuffer objects containing the media samples to those inputs to have them written to the container. AVAssetWriter automatically interleaves the media samples from its different inputs. It can be used both for real-time work (for example, writing frames as they are captured) and for offline processing.
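Interleaving means that samples from different tracks are laid out in the file roughly in time order, not one whole track after another. The sketch below only illustrates that ordering idea by merging two time-sorted sample streams. It is not how AVAssetWriter works internally: the real writer arranges media in larger chunks (see AVAssetWriterInput's preferredMediaChunkDuration), and with several inputs, each input's readyForMoreMediaData follows the writer's interleaving pattern. TimedSample and interleave are hypothetical names, and times are plain seconds.

```swift
// Conceptual model: a sample is just a track name plus a presentation time in seconds.
struct TimedSample: Equatable {
    let track: String
    let time: Double
}

// Merges two streams that are each sorted by time into one stream sorted by time.
func interleave(_ a: [TimedSample], _ b: [TimedSample]) -> [TimedSample] {
    var result: [TimedSample] = []
    result.reserveCapacity(a.count + b.count)
    var i = 0
    var j = 0
    while i < a.count && j < b.count {
        if a[i].time <= b[j].time {
            result.append(a[i])
            i += 1
        } else {
            result.append(b[j])
            j += 1
        }
    }
    // One stream is exhausted; the rest of the other is already in order.
    result.append(contentsOf: a[i...])
    result.append(contentsOf: b[j...])
    return result
}

let video = [0.0, 1.0, 2.0].map { TimedSample(track: "video", time: $0) }
let audio = [0.5, 1.5].map { TimedSample(track: "audio", time: $0) }
print(interleave(video, audio).map(\.track))
// ["video", "audio", "video", "audio", "video"]
```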

- Demo

```objc
// Method body; requires #import <AVFoundation/AVFoundation.h> and
// assetReader / assetWriter properties on self.

// Configure AVAssetReader
NSURL *url = [[NSBundle mainBundle] URLForResource:@"Test" withExtension:@"mov"];
AVAsset *asset = [AVAsset assetWithURL:url];
AVAssetTrack *track = [[asset tracksWithMediaType:AVMediaTypeVideo] firstObject];

NSError *error = nil;
self.assetReader = [[AVAssetReader alloc] initWithAsset:asset error:&error];
if (!self.assetReader) {
    NSLog(@"assetReader error = %@", error.localizedDescription);
    return;
}

// Decode the video frames to 32-bit BGRA pixel buffers
NSDictionary *readerOutputSettings = @{(id)kCVPixelBufferPixelFormatTypeKey : @(kCVPixelFormatType_32BGRA)};
AVAssetReaderTrackOutput *trackOutput = [[AVAssetReaderTrackOutput alloc] initWithTrack:track outputSettings:readerOutputSettings];
[self.assetReader addOutput:trackOutput];
[self.assetReader startReading];

// Configure AVAssetWriter
// The container is a QuickTime movie, so use a .mov extension.
// Writing fails if a file already exists at the output URL, so remove it first.
NSURL *outputURL = [[NSURL alloc] initFileURLWithPath:@"/Users/mac/Desktop/T/T/Writer.mov"];
[[NSFileManager defaultManager] removeItemAtURL:outputURL error:nil];

self.assetWriter = [[AVAssetWriter alloc] initWithURL:outputURL fileType:AVFileTypeQuickTimeMovie error:&error];
if (!self.assetWriter) {
    NSLog(@"assetWriter error = %@", error.localizedDescription);
    return;
}

// Re-encode as H.264, 1280x720, a key frame on every frame, 10.5 Mbit/s average
NSDictionary *writeOutputSettings = @{
    AVVideoCodecKey: AVVideoCodecTypeH264,
    AVVideoWidthKey: @1280,
    AVVideoHeightKey: @720,
    AVVideoCompressionPropertiesKey: @{
        AVVideoMaxKeyFrameIntervalKey: @1,
        AVVideoAverageBitRateKey: @10500000,
        AVVideoProfileLevelKey: AVVideoProfileLevelH264Main31
    }
};

AVAssetWriterInput *writerInput = [[AVAssetWriterInput alloc] initWithMediaType:AVMediaTypeVideo outputSettings:writeOutputSettings];
[self.assetWriter addInput:writerInput];
[self.assetWriter startWriting];

// Start writing to the container
[self.assetWriter startSessionAtSourceTime:kCMTimeZero];

dispatch_queue_t writerQueue = dispatch_queue_create("com.writerQueue", DISPATCH_QUEUE_SERIAL);
[writerInput requestMediaDataWhenReadyOnQueue:writerQueue usingBlock:^{
    BOOL complete = NO;
    // Append samples for as long as the input can accept more data
    while ([writerInput isReadyForMoreMediaData] && !complete) {
        CMSampleBufferRef sampleBuffer = [trackOutput copyNextSampleBuffer];
        if (sampleBuffer) {
            BOOL result = [writerInput appendSampleBuffer:sampleBuffer];
            CFRelease(sampleBuffer); // copyNextSampleBuffer returns a retained buffer
            complete = !result;      // NO means the writer stopped; check its status
        } else {
            // NULL: the reader has no more samples (or failed; see assetReader.status)
            complete = YES;
        }
    }

    if (complete) {
        if (self.assetWriter.status == AVAssetWriterStatusFailed) {
            NSLog(@"error - %@", self.assetWriter.error.localizedDescription);
            return;
        }
        // No more data for this input, so the block will not be called again
        [writerInput markAsFinished];
        [self.assetWriter finishWritingWithCompletionHandler:^{
            if (self.assetWriter.status == AVAssetWriterStatusCompleted) {
                NSLog(@"success");
            } else {
                NSLog(@"error - %@", self.assetWriter.error.localizedDescription);
            }
        }];
    }
}];
```
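The control flow of the requestMediaDataWhenReadyOnQueue:usingBlock: block can be modeled in plain Swift to show how the pieces interact. This is a simplified sketch under stated assumptions. MockReaderOutput and MockWriterInput are hypothetical stand-ins for AVAssetReaderTrackOutput and AVAssetWriterInput, samples are plain integers, and the driver loop at the bottom imitates the framework calling the block again each time the input becomes ready.

```swift
// Hypothetical stand-in for AVAssetReaderTrackOutput.
final class MockReaderOutput {
    private var remaining: [Int]
    init(samples: [Int]) { remaining = samples }

    // Like copyNextSampleBuffer: the next sample, or nil when the source is exhausted.
    func copyNextSample() -> Int? {
        if remaining.isEmpty { return nil }
        return remaining.removeFirst()
    }
}

// Hypothetical stand-in for AVAssetWriterInput that accepts a limited
// number of samples before it reports "not ready".
final class MockWriterInput {
    private(set) var written: [Int] = []
    private(set) var isFinished = false
    private let capacityPerCall: Int
    private var acceptedThisCall = 0

    init(capacityPerCall: Int) { self.capacityPerCall = capacityPerCall }

    var isReadyForMoreMediaData: Bool { !isFinished && acceptedThisCall < capacityPerCall }

    func append(_ sample: Int) -> Bool {
        written.append(sample)
        acceptedThisCall += 1
        return true
    }

    func markAsFinished() { isFinished = true }

    // Simulates the writer consuming its buffered data and becoming ready again.
    func drain() { acceptedThisCall = 0 }
}

// Same logic as the block body: append while ready; when the reader runs
// dry, mark the input finished. Returns true once this input is done.
func serviceInput(_ input: MockWriterInput, from output: MockReaderOutput) -> Bool {
    while input.isReadyForMoreMediaData {
        guard let sample = output.copyNextSample() else {
            input.markAsFinished()
            return true
        }
        if !input.append(sample) { return true } // writer failed: stop
    }
    return false // not ready; the framework will invoke the block again later
}

let output = MockReaderOutput(samples: Array(1...5))
let input = MockWriterInput(capacityPerCall: 2)
var calls = 0
var done = false
while !done {
    calls += 1
    done = serviceInput(input, from: output)
    input.drain()
}
print(input.written, input.isFinished, calls) // [1, 2, 3, 4, 5] true 3
```

The model shows why the block must not assume it runs only once. When the input stops being ready, the block simply returns and is invoked again later, and only running out of samples leads to markAsFinished.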

Media composition and editing

AVFoundation supports combining and editing media, for example combining and trimming audio with audio, audio with video, and video with video.

Classes involved:

- AVAssetTrack
- AVMutableComposition
- AVMutableCompositionTrack
- AVURLAsset
- AVAssetExportSession

Implementation steps:

1. Create the audio and video assets to be edited.
2. Create a media composition (AVMutableComposition) and add a video track and an audio track to it.
3. Get the tracks of the original audio and video assets.
4. Insert the required time ranges of those tracks into the composition's tracks.
5. Create an export session (AVAssetExportSession), configure it, and export the result to the target location.

```swift
import AVFoundation

// 1. Source assets to edit (bundled with the app)
guard let videoMov = Bundle.main.url(forResource: "Test", withExtension: "mov"),
      let audioMp3 = Bundle.main.url(forResource: "test", withExtension: "mp3") else {
    fatalError("Test.mov or test.mp3 is missing from the app bundle")
}
let videoMovAsset = AVURLAsset(url: videoMov)
let audioMp3Asset = AVURLAsset(url: audioMp3)

// 2. Composition container with an empty video track and an empty audio track
let composition = AVMutableComposition()
let videoTrack = composition.addMutableTrack(withMediaType: .video, preferredTrackID: kCMPersistentTrackID_Invalid)
let audioTrack = composition.addMutableTrack(withMediaType: .audio, preferredTrackID: kCMPersistentTrackID_Invalid)

// Both clips start at the beginning of the composition timeline
let cursorTime = CMTime.zero
// Use the first 10 s of each source, clamped so the range never runs past the source's end
let clipDuration = CMTime(value: 10, timescale: 1)

// 3 + 4. Copy the video range into the composition's video track
if let sourceVideo = videoMovAsset.tracks(withMediaType: .video).first {
    let videoTimeRange = CMTimeRange(start: .zero,
                                     duration: CMTimeMinimum(clipDuration, videoMovAsset.duration))
    do {
        try videoTrack?.insertTimeRange(videoTimeRange, of: sourceVideo, at: cursorTime)
    } catch {
        print("video error = \(error.localizedDescription)")
    }
}

// 3 + 4. Copy the audio range into the composition's audio track
if let sourceAudio = audioMp3Asset.tracks(withMediaType: .audio).first {
    let audioTimeRange = CMTimeRange(start: .zero,
                                     duration: CMTimeMinimum(clipDuration, audioMp3Asset.duration))
    do {
        try audioTrack?.insertTimeRange(audioTimeRange, of: sourceAudio, at: cursorTime)
    } catch {
        print("audio error = \(error.localizedDescription)")
    }
}

// 5. Export the composition to an MP4 file
let outputUrl = URL(fileURLWithPath: "/Users/mac/Documents/iOSProject/AVFounctionStudy/AVFounctionStudy/edit.mp4")
// The export fails if a file already exists at the output URL
try? FileManager.default.removeItem(at: outputUrl)

let session = AVAssetExportSession(asset: composition, presetName: AVAssetExportPreset640x480)
session?.outputFileType = .mp4
session?.outputURL = outputUrl
session?.exportAsynchronously {
    if session?.status == .completed {
        print("Export success")
    } else {
        print("Export failure = \(session?.error?.localizedDescription ?? "nil")")
    }
}
```
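In the demo, both clips start at cursorTime zero because they go into different tracks. To join clips one after another in the same track (video with video), you advance the cursor by each inserted duration, for example with CMTimeAdd. The sketch below models only that bookkeeping in plain Swift. TrackTimeline and Segment are hypothetical types, times are Double seconds rather than CMTime, and Other.mov is an invented second clip.

```swift
// Hypothetical model of one AVMutableCompositionTrack timeline (times in seconds).
struct Segment: Equatable {
    let source: String      // which asset the media comes from
    let sourceStart: Double // where in the source the clip begins
    let duration: Double
    let trackStart: Double  // where the clip sits on the composition timeline
}

struct TrackTimeline {
    private(set) var segments: [Segment] = []
    private(set) var cursor: Double = 0 // end of the last inserted clip

    // Appends [start, start + duration) of a source that is sourceDuration long.
    // The range is clamped so it never runs past the end of the source.
    mutating func append(source: String, sourceDuration: Double, start: Double, duration: Double) {
        let clamped = min(duration, sourceDuration - start)
        guard start >= 0, clamped > 0 else { return }
        segments.append(Segment(source: source, sourceStart: start,
                                duration: clamped, trackStart: cursor))
        cursor += clamped // the next clip starts where this one ends
    }
}

// Video + video: ask for 10 s of a 6 s clip (clamped to 6 s), then 2 s..5 s of a second clip.
var track = TrackTimeline()
track.append(source: "Test.mov", sourceDuration: 6, start: 0, duration: 10)
track.append(source: "Other.mov", sourceDuration: 8, start: 2, duration: 3)
print(track.cursor) // 9.0
```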