AVFoundation Learning Notes - Media Capture

- Basic knowledge

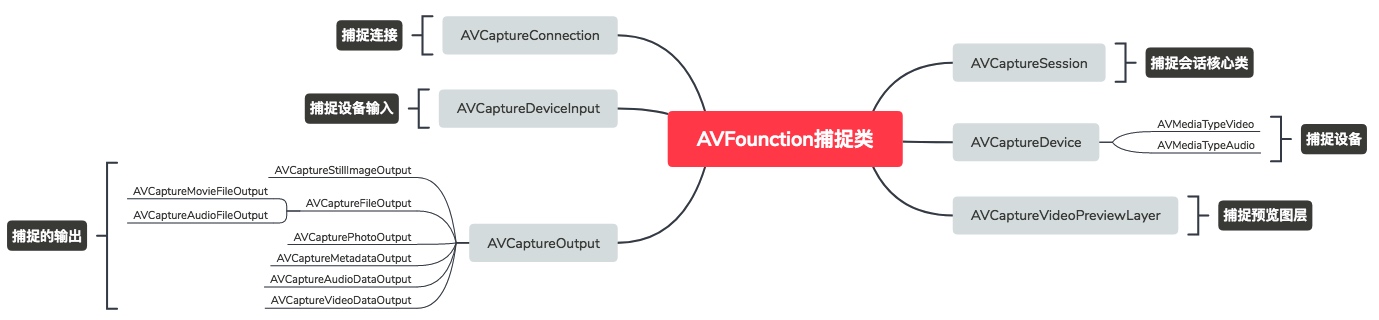

First, we introduce the AVFoundation classes related to capture, as shown in the figure.

1. AVCaptureSession: the core class that manages a capture session

2. AVCaptureDevice: a capture device, most commonly a camera or a microphone

3. AVCaptureVideoPreviewLayer: the layer that previews captured video

4. AVCaptureConnection: the connection between a capture input and a capture output

5. AVCaptureDeviceInput: the input that feeds a capture device into the session

6. AVCaptureOutput: the captured output. Subclasses include:

AVCaptureStillImageOutput

AVCaptureMovieFileOutput (video)

AVCaptureAudioFileOutput (audio)

AVCapturePhotoOutput

AVCaptureMetadataOutput

AVCaptureAudioDataOutput

AVCaptureVideoDataOutput

- The simplest capture example

Core steps:

1. Create a session

2. Add an input device

3. Add an output

4. Create a preview layer

5. Start a session

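// 1. Create the session

let session = AVCaptureSession()

// Bail out instead of force-unwrapping when no camera exists (for example, in the simulator).

// Assumes this code runs inside a method that returns Void, such as viewDidLoad().

guard let cameraDevice = AVCaptureDevice.default(for: .video) else {

print("No video capture device is available")

return

}

do {

// 2. Add the input device

let cameraDeviceInput = try AVCaptureDeviceInput(device: cameraDevice)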

if session.canAddInput(cameraDeviceInput) {

session.addInput(cameraDeviceInput)

}

// 3. Add the output

let imageOutput = AVCapturePhotoOutput()

if session.canAddOutput(imageOutput) {

session.addOutput(imageOutput)

}

// 4. Create a preview layer

let layer = AVCaptureVideoPreviewLayer(session: session)

layer.frame = view.bounds

view.layer.addSublayer(layer)

// 5. Start a session

session.startRunning()

} catch let error {

print("Failed to create capture video device error = \(error)")

}
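
The snippet above assumes the app already has camera access. A minimal sketch of checking authorization first, and of calling `startRunning()` off the main thread because that call blocks until the session has started, might look like the following. The names `CaptureAccess`, `accessDecision`, `startSessionIfAuthorized`, and the queue label are hypothetical, and the app's Info.plist is assumed to contain `NSCameraUsageDescription`.

```swift
import AVFoundation

/// What the caller should do for a given authorization status.
enum CaptureAccess {
    case start        // access already granted
    case ask          // the user has not been asked yet
    case unavailable  // denied or restricted
}

/// Pure mapping from the system status to an action, so it can be checked without a camera.
func accessDecision(for status: AVAuthorizationStatus) -> CaptureAccess {
    switch status {
    case .authorized: return .start
    case .notDetermined: return .ask
    default: return .unavailable
    }
}

/// Hypothetical serial queue reserved for session work.
let sessionQueue = DispatchQueue(label: "example.capture.session")

/// Starts `session` only if camera access is, or becomes, available.
func startSessionIfAuthorized(_ session: AVCaptureSession) {
    switch accessDecision(for: AVCaptureDevice.authorizationStatus(for: .video)) {
    case .start:
        // startRunning() blocks, so keep it off the main thread.
        sessionQueue.async { session.startRunning() }
    case .ask:
        // The completion handler runs on an arbitrary queue.
        AVCaptureDevice.requestAccess(for: .video) { granted in
            guard granted else { return }
            sessionQueue.async { session.startRunning() }
        }
    case .unavailable:
        print("Camera access is not available")
    }
}
```

With this in place, step 5 would call `startSessionIfAuthorized(session)` instead of `session.startRunning()`.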

Demo examples of common functions

The following code snippets illustrate how to use some common APIs.

- Setting up a basic session

- (BOOL)setupSession:(NSError **)error {

// Initialize session

self.captureSession = [[AVCaptureSession alloc] init];

self.captureSession.sessionPreset = AVCaptureSessionPresetHigh;

// Set up the video device

AVCaptureDevice * videoDevice = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];

AVCaptureDeviceInput * videoInput = [AVCaptureDeviceInput deviceInputWithDevice:videoDevice error:error];

if (videoInput) {

if ([self.captureSession canAddInput:videoInput]) {

[self.captureSession addInput:videoInput];

self.activeVideoInput = videoInput;

}

} else {

return NO;

}

// Set up the audio device

AVCaptureDevice * audioDevice = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeAudio];

AVCaptureDeviceInput * audioInput = [AVCaptureDeviceInput deviceInputWithDevice:audioDevice error:error];

if (audioInput) {

if ([self.captureSession canAddInput:audioInput]) {

[self.captureSession addInput:audioInput];

}

} else {

return NO;

}

// Setting image output

self.imageOutput = [[AVCaptureStillImageOutput alloc] init];

self.imageOutput.outputSettings = @{AVVideoCodecKey: AVVideoCodecJPEG};

if ([self.captureSession canAddOutput:self.imageOutput]) {

[self.captureSession addOutput:self.imageOutput];

}

// Setting movie output

self.movieOutput = [[AVCaptureMovieFileOutput alloc] init];

if ([self.captureSession canAddOutput:self.movieOutput]) {

[self.captureSession addOutput:self.movieOutput];

}

self.videoQueue = dispatch_queue_create("com.videoQueue", NULL);

return YES;

}

- Camera operation

- (AVCaptureDevice *)cameraWithPosition:(AVCaptureDevicePosition)position {

// Look through the available video devices for one at the requested position

NSArray * devices = [AVCaptureDevice devicesWithMediaType:AVMediaTypeVideo];

for (AVCaptureDevice * device in devices) {

if (device.position == position) {

return device;

}

}

return nil;

}

- (AVCaptureDevice *)activeCamera {

// Return the device behind the currently active capture input

return self.activeVideoInput.device;

}

- (AVCaptureDevice *)inactiveCamera {

AVCaptureDevice * device = nil;

// Get the camera on the opposite side from the active one

if (self.cameraCount > 1) {

if ([self activeCamera].position == AVCaptureDevicePositionBack) {

device = [self cameraWithPosition:AVCaptureDevicePositionFront];

} else {

device = [self cameraWithPosition:AVCaptureDevicePositionBack];

}

}

return device;

}

- (BOOL)canSwitchCameras {

// Check whether there is more than one camera

return self.cameraCount > 1;

}

- (NSUInteger)cameraCount {

// Get the number of cameras

return [[AVCaptureDevice devicesWithMediaType:AVMediaTypeVideo] count];

}
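
`devicesWithMediaType:` was deprecated in iOS 10. A minimal Swift sketch of the same lookups using `AVCaptureDevice.DiscoverySession` follows. Restricting the search to `.builtInWideAngleCamera` is an assumption made for brevity, and the function names are hypothetical ones that mirror the Objective-C methods above.

```swift
import AVFoundation

/// All built-in wide-angle cameras, front and back.
func availableCameras() -> [AVCaptureDevice] {
    AVCaptureDevice.DiscoverySession(
        deviceTypes: [.builtInWideAngleCamera],
        mediaType: .video,
        position: .unspecified
    ).devices
}

/// The first camera at `position`, or nil if there is none.
func camera(at position: AVCaptureDevice.Position) -> AVCaptureDevice? {
    availableCameras().first { $0.position == position }
}

/// True when there is more than one camera to switch between.
func canSwitchCameras() -> Bool {
    availableCameras().count > 1
}
```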

- Switching cameras

- (BOOL)switchCameras {

// Determine whether camera can be switched

if (![self canSwitchCameras]) {

return NO;

}

NSError * error;

// Get the inactive camera and create a new input

AVCaptureDevice * videoDevice = [self inactiveCamera];

AVCaptureDeviceInput * videoInput = [AVCaptureDeviceInput deviceInputWithDevice:videoDevice error:&error];

if (videoInput) {

// Remove old input and add new input

[self.captureSession beginConfiguration];

[self.captureSession removeInput:self.activeVideoInput];

if ([self.captureSession canAddInput:videoInput]) {

[self.captureSession addInput:videoInput];

self.activeVideoInput = videoInput;

} else {

[self.captureSession addInput:self.activeVideoInput];

}

[self.captureSession commitConfiguration];

} else {

NSLog(@"error = %@", error.localizedDescription);

return NO;

}

return YES;

}

- Camera focus

- (BOOL)cameraSupportsTapToFocus {

// Check whether the active device supports a focus point of interest

return [[self activeCamera] isFocusPointOfInterestSupported];

}

- (void)focusAtPoint:(CGPoint)point {

AVCaptureDevice * device = [self activeCamera];

// Check that both a focus point of interest and autofocus mode are supported

if (device.isFocusPointOfInterestSupported && [device isFocusModeSupported:AVCaptureFocusModeAutoFocus]) {

NSError * error;

if ([device lockForConfiguration:&error]) {

// Autofocus

device.focusPointOfInterest = point;

device.focusMode = AVCaptureFocusModeAutoFocus;

[device unlockForConfiguration];

} else {

NSLog(@"error = %@", error.localizedDescription);

}

}

}
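
`focusPointOfInterest` expects a point in the device's normalized coordinate space, from (0, 0) to (1, 1), not a point in view coordinates. A minimal sketch of handling a tap, assuming a hypothetical `previewLayer` that displays the session, converts the point with `captureDevicePointConverted(fromLayerPoint:)` before applying it. The function name `focus(_:atLayerPoint:in:)` is also hypothetical.

```swift
import AVFoundation
import CoreGraphics

/// Focuses `device` at a point given in the preview layer's coordinates.
func focus(_ device: AVCaptureDevice,
           atLayerPoint layerPoint: CGPoint,
           in previewLayer: AVCaptureVideoPreviewLayer) {
    guard device.isFocusPointOfInterestSupported,
          device.isFocusModeSupported(.autoFocus) else { return }

    // Convert from layer coordinates to the device's (0,0)-(1,1) space.
    let devicePoint = previewLayer.captureDevicePointConverted(fromLayerPoint: layerPoint)

    do {
        try device.lockForConfiguration()
        // Registered only after the lock succeeded, so the unlock is always balanced.
        defer { device.unlockForConfiguration() }
        device.focusPointOfInterest = devicePoint
        device.focusMode = .autoFocus
    } catch {
        print("Could not lock the device for configuration: \(error)")
    }
}
```

The same conversion applies to `exposurePointOfInterest`.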

- Camera exposure

// Tap to expose

- (BOOL)cameraSupportsTapToExpose {

// Check whether the active device supports an exposure point of interest

return [[self activeCamera] isExposurePointOfInterestSupported];

}

static const NSString *THCameraAdjustingExposureContext;

- (void)exposeAtPoint:(CGPoint)point {

AVCaptureDevice * device = [self activeCamera];

AVCaptureExposureMode exposureMode = AVCaptureExposureModeContinuousAutoExposure;

if (device.isExposurePointOfInterestSupported && [device isExposureModeSupported:exposureMode]) {

NSError * error;

// Lock device configuration, set exposurePointOfInterest, exposureMode values

if ([device lockForConfiguration:&error]) {

device.exposurePointOfInterest = point;

device.exposureMode = exposureMode;

// If locked exposure is supported, observe adjustingExposure so the exposure can be locked once adjustment finishes

if ([device isExposureModeSupported:AVCaptureExposureModeLocked]) {

[device addObserver:self forKeyPath:@"adjustingExposure" options:NSKeyValueObservingOptionNew context:&THCameraAdjustingExposureContext];

}

[device unlockForConfiguration];

} else {

NSLog(@"error = %@", error.localizedDescription);

}

}

}

- (void)observeValueForKeyPath:(NSString *)keyPath

ofObject:(id)object

change:(NSDictionary *)change

context:(void *)context {

if (context == &THCameraAdjustingExposureContext) {

AVCaptureDevice * device = (AVCaptureDevice *)object;

// Check whether the device has finished adjusting exposure

if (!device.isAdjustingExposure && [device isExposureModeSupported:AVCaptureExposureModeLocked]) {

// Remove the observer

[object removeObserver:self forKeyPath:@"adjustingExposure" context:&THCameraAdjustingExposureContext];

dispatch_async(dispatch_get_main_queue(), ^{

NSError * error;

if ([device lockForConfiguration:&error]) {

device.exposureMode = AVCaptureExposureModeLocked;

[device unlockForConfiguration];

} else {

NSLog(@"error = %@", error.localizedDescription);

}

});

}

} else {

[super observeValueForKeyPath:keyPath ofObject:object change:change context:context];

}

}

// Reset focus and exposure to continuous auto modes at the center of the frame

- (void)resetFocusAndExposureModes {

AVCaptureDevice * device = [self activeCamera];

AVCaptureFocusMode focusMode = AVCaptureFocusModeContinuousAutoFocus;

BOOL canResetFocus = [device isFocusPointOfInterestSupported] && [device isFocusModeSupported:focusMode];

AVCaptureExposureMode exposureMode = AVCaptureExposureModeContinuousAutoExposure;

BOOL canResetExposure = [device isExposurePointOfInterestSupported] && [device isExposureModeSupported:exposureMode];

CGPoint centerPoint = CGPointMake(0.5, 0.5);

NSError * error;

if ([device lockForConfiguration:&error]) {

if (canResetFocus) {

device.focusMode = focusMode;

device.focusPointOfInterest = centerPoint;

}

if (canResetExposure) {

device.exposureMode = exposureMode;

device.exposurePointOfInterest = centerPoint;

}

[device unlockForConfiguration];

} else {

NSLog(@"error = %@", error.localizedDescription);

}

}

- Flash and torch modes

// Adjust the flash and torch modes

- (BOOL)cameraHasFlash {

return [[self activeCamera] hasFlash];

}

- (AVCaptureFlashMode)flashMode {

return [[self activeCamera] flashMode];

}

- (void)setFlashMode:(AVCaptureFlashMode)flashMode {

AVCaptureDevice * device = [self activeCamera];

if ([device isFlashModeSupported:flashMode]) {

NSError * error;

if ([device lockForConfiguration:&error]) {

device.flashMode = flashMode;

[device unlockForConfiguration];

} else {

NSLog(@"error = %@", error.localizedDescription);

}

}

}

- (BOOL)cameraHasTorch {

return [[self activeCamera] hasTorch];

}

- (AVCaptureTorchMode)torchMode {

return [[self activeCamera] torchMode];

}

- (void)setTorchMode:(AVCaptureTorchMode)torchMode {

AVCaptureDevice * device = [self activeCamera];

if ([device isTorchModeSupported:torchMode]) {

NSError * error;

if ([device lockForConfiguration:&error]) {

device.torchMode = torchMode;

[device unlockForConfiguration];

} else {

NSLog(@"error = %@", error.localizedDescription);

}

}

}

- Take photos and write them to the photo library

- (void)captureStillImage {

AVCaptureConnection * connection = [self.imageOutput connectionWithMediaType:AVMediaTypeVideo];

// Match the capture orientation to the device orientation

if (connection.isVideoOrientationSupported) {

connection.videoOrientation = [self currentVideoOrientation];

}

[self.imageOutput captureStillImageAsynchronouslyFromConnection:connection completionHandler:^(CMSampleBufferRef _Nullable imageDataSampleBuffer, NSError * _Nullable error) {

if (imageDataSampleBuffer != NULL) {

// Get the JPEG data and build an image from it

NSData * imageData = [AVCaptureStillImageOutput jpegStillImageNSDataRepresentation:imageDataSampleBuffer];

UIImage * image = [[UIImage alloc] initWithData:imageData];

NSLog(@"image = %@", image);

[self writeImageToAssetsLibrary:image];

} else {

NSLog(@"Failed to capture still image, error = %@", error.localizedDescription);

}

}];

}
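
`AVCaptureStillImageOutput` was deprecated in iOS 10 in favor of `AVCapturePhotoOutput`, the output used in the Swift example earlier. A minimal sketch of the equivalent capture follows, assuming iOS 11 or later, an output that is already attached to a running session, and a hypothetical `PhotoCaptureHandler` class that the caller keeps alive until the callback fires.

```swift
import AVFoundation

/// Hypothetical delegate object; retain it until the capture completes.
final class PhotoCaptureHandler: NSObject, AVCapturePhotoCaptureDelegate {
    /// Called with the encoded image data, or nil on failure.
    var onData: ((Data?) -> Void)?

    /// Requests one photo with default settings.
    func capture(with output: AVCapturePhotoOutput) {
        // A settings object must not be reused across captures, so create a fresh one.
        output.capturePhoto(with: AVCapturePhotoSettings(), delegate: self)
    }

    func photoOutput(_ output: AVCapturePhotoOutput,
                     didFinishProcessingPhoto photo: AVCapturePhoto,
                     error: Error?) {
        if let error = error {
            print("Photo capture failed: \(error)")
            onData?(nil)
            return
        }
        // fileDataRepresentation() returns nil if the photo cannot be encoded.
        onData?(photo.fileDataRepresentation())
    }
}
```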

- (AVCaptureVideoOrientation)currentVideoOrientation {

AVCaptureVideoOrientation orientation;

// Map the device orientation to an AVCaptureVideoOrientation. Note that landscape left and right are swapped.

switch ([UIDevice currentDevice].orientation) {

case UIDeviceOrientationPortrait:

orientation = AVCaptureVideoOrientationPortrait;

break;

case UIDeviceOrientationLandscapeRight:

orientation = AVCaptureVideoOrientationLandscapeLeft;

break;

case UIDeviceOrientationPortraitUpsideDown:

orientation = AVCaptureVideoOrientationPortraitUpsideDown;

break;

default:

orientation = AVCaptureVideoOrientationLandscapeRight;

break;

}

return orientation;

}

// Write the picture to the photo library

- (void)writeImageToAssetsLibrary:(UIImage *)image {

ALAssetsLibrary * library = [[ALAssetsLibrary alloc] init];

[library writeImageToSavedPhotosAlbum:image.CGImage orientation:(NSInteger)image.imageOrientation completionBlock:^(NSURL *assetURL, NSError *error) {

if (!error) {

NSLog(@"Photo written successfully");

} else {

NSLog(@"writeImageToAssetsLibrary error = %@", error.localizedDescription);

}

}];

}
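
`ALAssetsLibrary` has been deprecated since iOS 9 in favor of the Photos framework. A minimal sketch of saving an image with `PHPhotoLibrary`, assuming the app's Info.plist declares `NSPhotoLibraryAddUsageDescription` and using a hypothetical function name, could look like this:

```swift
import Photos
import UIKit

/// Saves `image` to the user's photo library and reports whether it succeeded.
func saveImageToPhotoLibrary(_ image: UIImage,
                             completion: @escaping (Bool) -> Void) {
    PHPhotoLibrary.shared().performChanges({
        // Change requests must be created inside the change block.
        PHAssetChangeRequest.creationRequestForAsset(from: image)
    }, completionHandler: { success, error in
        if let error = error {
            print("Saving the image failed: \(error)")
        }
        // Called on an arbitrary queue; hop to the main queue before touching UI.
        completion(success)
    })
}
```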

- Record videos and write them to the photo library

- (BOOL)isRecording {

// Whether a recording is in progress

return self.movieOutput.isRecording;

}

- (void)startRecording {

if (![self isRecording]) {

// Get the current connection information

AVCaptureConnection * videoConnection = [self.movieOutput connectionWithMediaType:AVMediaTypeVideo];

// Match the video orientation to the device orientation

if ([videoConnection isVideoOrientationSupported]) {

videoConnection.videoOrientation = self.currentVideoOrientation;

}

// Enable video stabilization when the connection supports it

if ([videoConnection isVideoStabilizationSupported]) {

videoConnection.enablesVideoStabilizationWhenAvailable = YES;

}

// Enable smooth autofocus, which slows focus changes so they are less jarring in recorded video

AVCaptureDevice * device = [self activeCamera];

if (device.isSmoothAutoFocusSupported) {

NSError * error;

if ([device lockForConfiguration:&error]) {

device.smoothAutoFocusEnabled = YES;

[device unlockForConfiguration];

} else {

NSLog(@"error = %@", error.localizedDescription);

}

}

// Get a unique file URL for the video

self.outputURL = [self uniqueURL];

// Start recording, with self as the recording delegate

[self.movieOutput startRecordingToOutputFileURL:self.outputURL recordingDelegate:self];

}

}

- (CMTime)recordedDuration {

return self.movieOutput.recordedDuration;

}

- (NSURL *)uniqueURL {

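// Generate a file URL in a new temporary directory for the video.

// Note: temporaryDirectoryWithTemplateString: is not a Foundation method; it is assumed to come from a custom NSFileManager category.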

NSFileManager * fileManager = [NSFileManager defaultManager];

NSString * dirPath = [fileManager temporaryDirectoryWithTemplateString:@"kamera.XXXXXX"];

if (dirPath) {

NSString * filePath = [dirPath stringByAppendingPathComponent:@"kamera_movie.mov"];

return [NSURL fileURLWithPath:filePath];

}

return nil;

}
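
A minimal Swift sketch of the same idea using only Foundation is shown below. The function name `uniqueMovieURL` and the default file name are hypothetical, and a UUID stands in for the `XXXXXX` template used above.

```swift
import Foundation

/// Returns a file URL inside a freshly created, uniquely named temporary directory.
func uniqueMovieURL(fileName: String = "movie.mov") throws -> URL {
    // A UUID-named subdirectory keeps separate recordings from colliding.
    let directory = FileManager.default.temporaryDirectory
        .appendingPathComponent(UUID().uuidString, isDirectory: true)
    try FileManager.default.createDirectory(at: directory,
                                            withIntermediateDirectories: true)
    return directory.appendingPathComponent(fileName)
}
```

Throwing instead of returning nil lets the caller see why the directory could not be created.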

- (void)stopRecording {

if ([self isRecording]) {

[self.movieOutput stopRecording];

}

}

#pragma mark - AVCaptureFileOutputRecordingDelegate

- (void)captureOutput:(AVCaptureFileOutput *)captureOutput

didFinishRecordingToOutputFileAtURL:(NSURL *)outputFileURL

fromConnections:(NSArray *)connections

error:(NSError *)error {

if (error) {

[self.delegate mediaCaptureFailedWithError:error];

} else {

// Write video

[self writeVideoToAssetsLibrary:[self.outputURL copy]];

}

self.outputURL = nil;

}

- (void)writeVideoToAssetsLibrary:(NSURL *)videoURL {

ALAssetsLibrary * library = [[ALAssetsLibrary alloc] init];

// Check if the video can be written

if ([library videoAtPathIsCompatibleWithSavedPhotosAlbum:videoURL]) {

[library writeVideoAtPathToSavedPhotosAlbum:videoURL completionBlock:^(NSURL *assetURL, NSError *error) {

if (error) {

NSLog(@"error = %@", error.localizedDescription);

} else {

[self generateThumbnailForVideoAtURL:videoURL];

}

}];

}

}
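
With the Photos framework, a recorded file can be added to the library through `PHAssetChangeRequest.creationRequestForAssetFromVideo(atFileURL:)`. The following is only a sketch: the function name is hypothetical, and the app is assumed to have permission to add to the photo library.

```swift
import Foundation
import Photos

/// Saves the movie at `fileURL` to the photo library and reports whether it succeeded.
func saveVideoToPhotoLibrary(at fileURL: URL,
                             completion: @escaping (Bool) -> Void) {
    PHPhotoLibrary.shared().performChanges({
        // Change requests must be created inside the change block.
        PHAssetChangeRequest.creationRequestForAssetFromVideo(atFileURL: fileURL)
    }, completionHandler: { success, error in
        if let error = error {
            print("Saving the video failed: \(error)")
        }
        // Called on an arbitrary queue; hop to the main queue before touching UI.
        completion(success)
    })
}
```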

// Generate a thumbnail from the first frame of the video

- (void)generateThumbnailForVideoAtURL:(NSURL *)videoURL {

dispatch_async(self.videoQueue, ^{

AVAsset * asset = [AVAsset assetWithURL:videoURL];

AVAssetImageGenerator * imageGenerator = [AVAssetImageGenerator assetImageGeneratorWithAsset:asset];

imageGenerator.maximumSize = CGSizeMake(100, 0);

// Apply the track's preferred transform so the thumbnail has the correct orientation

imageGenerator.appliesPreferredTrackTransform = YES;

CGImageRef imageRef = [imageGenerator copyCGImageAtTime:kCMTimeZero actualTime:NULL error:nil];

UIImage *image = [UIImage imageWithCGImage:imageRef];

CGImageRelease(imageRef);

dispatch_async(dispatch_get_main_queue(), ^{

NSLog(@"Back on the main queue; perform further work here");

});

});

}