1, Foreword

Webmasters who have worked on SEO will already know what a sitemap is for. Here is how Baidu Search describes it:

**Sitemap:** You can periodically put your site's links into a Sitemap and submit the Sitemap to Baidu. Baidu will crawl the submitted Sitemap periodically and process the links in it, but links are indexed more slowly this way than with API push.

As Baidu's explanation shows, you cannot control when Baiduspider fetches your Sitemap, and indexing this way is slower than actively pushing links through the API.

Consider a possible, if somewhat far-fetched, scenario: you publish an original article you worked hard on, and another site copies it almost immediately. If you have not pushed the article to Baidu yet, but the copying site pushes it first (or Baiduspider happens to crawl that site's sitemap first), Baidu may conclude that the other site is the original author and send the traffic to it instead of you. Wouldn't that feel like having your win snatched away?

For SEO, then, it pays to push your links to Baidu actively instead of waiting for Baidu to crawl your Sitemap.

Active push strategies include:

- Push new articles as soon as they are published: whenever a new article goes live, push its URL to Baidu right away.

- Push the sitemap on a schedule: for example, submit the URLs in your sitemap to Baidu at a fixed time every day.
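The first strategy boils down to a single HTTP request: POST the new URL(s) as plain text, one per line, to the same push address that the script later in this article uses. Below is a minimal sketch using only Python's standard library. `build_push_request` and `push_new_article` are hypothetical helper names, and `site`/`token` stand for the values Baidu's resource platform gives you; hooking the call into Typecho's publish event would need a plugin and is not shown.

```python
import urllib.parse
import urllib.request


def build_push_request(site, token, urls):
    """Build (but do not send) a POST request for Baidu's link-push API.

    The endpoint format mirrors the API address used in main.py;
    site and token are placeholders for your own values.
    """
    # safe=":/" keeps the site URL readable, e.g. site=https://www.example.com
    query = urllib.parse.urlencode({"site": site, "token": token}, safe=":/")
    endpoint = "http://data.zz.baidu.com/urls?" + query
    body = "\n".join(urls).encode("utf-8")  # plain text, one URL per line
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "text/plain"},
        method="POST",
    )


def push_new_article(site, token, article_url):
    """Push one freshly published article and return Baidu's raw reply."""
    req = build_push_request(site, token, [article_url])
    with urllib.request.urlopen(req, timeout=30) as resp:
        return resp.read().decode("utf-8")
```

Keeping the request construction separate from the network call makes it easy to inspect what would be sent before pushing anything for real.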

This article covers the second method: pushing the sitemap to Baidu on a schedule. Below is an automation script that submits the URLs in a Typecho site's sitemap to Baidu.

2, Requirements

- Only Typecho sites are supported;

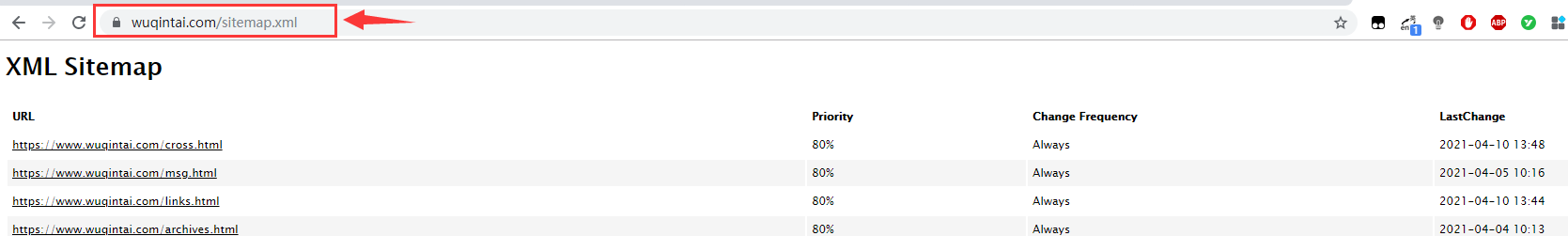

- The Sitemap plugin is installed and working;

- Python 3 and pip are installed (most Linux distributions ship with Python, but pip sometimes has to be installed separately).

To check, run:

```shell
python3 --version
pip3 --version
```

If both commands print a version number, you are ready. Otherwise, install whichever one is missing first.

3, Installation & Configuration

Step 1: install the dependencies

```shell
pip3 install requests beautifulsoup4 lxml
```

`lxml` provides the XML parser that the script hands to BeautifulSoup.
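To confirm the packages are visible to the Python that will run the script, a quick check like the following can help. It is a sketch: `missing_packages` is a hypothetical helper, and the module-to-package mapping simply lists what `main.py` imports (`lxml` is needed because the script asks BeautifulSoup for an lxml-based parser).

```python
import importlib.util

# Modules main.py needs, mapped to the pip package that provides each one
REQUIRED = {
    "requests": "requests",
    "bs4": "beautifulsoup4",
    "lxml": "lxml",
}


def missing_packages(required=REQUIRED):
    """Return the pip package names whose modules cannot be found."""
    return [pkg for module, pkg in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing, install with: pip3 install " + " ".join(missing))
    else:
        print("All dependencies are installed.")
```

`find_spec` only locates a module without importing it, so the check is cheap and has no side effects.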

Step 2: create a directory for the script and change into it

```shell
mkdir -p /usr/tmp/SitemapAutoCommitScript
cd /usr/tmp/SitemapAutoCommitScript
```

Step 3: create the script

Run `vim main.py` to create and open the file, press `i` to enter insert mode, and paste in the following code:

```python
#!/usr/bin/env python3
# coding: utf-8
######################################
# Author: wuqintai
# Time: April 13, 2021
# Website: www.wuqintai.com
######################################
import datetime
import os

import requests
from bs4 import BeautifulSoup

# Keep urls.txt and the log next to this script, whatever the working directory is
BASE_DIR = os.path.dirname(os.path.abspath(__file__))


def commit_sitemap(sitemap_url, post_api):
    """
    Submit the URLs in the website's sitemap.xml to Baidu's link-push API
    """
    execution_time = datetime.datetime.now().strftime('%Y/%m/%d %H:%M:%S')
    log_file = os.path.join(BASE_DIR, "Baidu_commit.log")
    try:
        # (1) Download and parse sitemap.xml (the "xml" parser is provided by lxml)
        response = requests.get(sitemap_url, timeout=30)
        response.raise_for_status()
        soup = BeautifulSoup(response.content, "xml")

        # (2) Collect every <loc> URL and write them to urls.txt ("w" overwrites the old list)
        urls = [tag.text.strip() for tag in soup.find_all("loc")]
        with open(os.path.join(BASE_DIR, "urls.txt"), "w") as fp:
            fp.write("\n".join(urls) + "\n")

        # (3) Submit to Baidu: plain-text body, one URL per line
        result = requests.post(post_api, data="\n".join(urls).encode("utf-8"),
                               headers={"Content-Type": "text/plain"}, timeout=30)

        # (4) Write Baidu's reply to the log
        save_commit_log(log_file, execution_time + "\t" + result.text.strip() + "\n")
    except Exception as exc:
        save_commit_log(log_file, execution_time + "\tSitemap submission failed: " + repr(exc) + "\n")


def save_commit_log(file_name, text):
    """
    Append one entry to the execution log
    """
    with open(file_name, "a") as fp:
        fp.write(text)


if __name__ == "__main__":
    # Site Sitemap.xml address
    sitemap_url = "https://www.wuqintai.com/sitemap.xml"

    # Baidu push API address (contains your site and token)
    baidu_post_api = "http://data.zz.baidu.com/urls?site=https://www.wuqintai.com&token=AeUAatgO96pSIKCj"

    commit_sitemap(sitemap_url, baidu_post_api)
```
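If you would rather not depend on BeautifulSoup and lxml, steps (1) and (2) can also be done with Python's built-in `xml.etree.ElementTree`. This is a sketch, not part of the original script: `extract_locs` is a hypothetical helper, and it matches `<loc>` elements by local name, so it works whether or not the sitemap declares the standard sitemaps.org namespace.

```python
import xml.etree.ElementTree as ET


def extract_locs(sitemap_xml):
    """Return every <loc> URL in a sitemap document, in document order.

    sitemap_xml may be str or bytes. Tags are compared by local name,
    so '{http://www.sitemaps.org/schemas/sitemap/0.9}loc' and 'loc' both match.
    """
    root = ET.fromstring(sitemap_xml)
    return [
        el.text.strip()
        for el in root.iter()
        if el.tag.split("}")[-1] == "loc" and el.text
    ]
```

You would call it as `extract_locs(requests.get(sitemap_url, timeout=30).content)`; passing bytes lets the parser honour the encoding declared in the file.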

Note:

Change these two values in the code above:

- `sitemap_url`: the address of your own site's sitemap.xml.

- `baidu_post_api`: the API push address shown in Baidu's resource platform under your site > General Collection > Resource Submission > API Submission.
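Hard-coding the token in `main.py` works, but anyone who can read the file can see it. One alternative, sketched here under the assumption that you are willing to export two environment variables (the names `SITEMAP_URL` and `BAIDU_POST_API` are made up for this example), is to read both values at startup and fail early if either is missing or looks wrong:

```python
import os


def load_settings(environ=os.environ):
    """Read the sitemap URL and Baidu push address from the environment.

    SITEMAP_URL and BAIDU_POST_API are hypothetical variable names
    chosen for this sketch; main.py as written hard-codes both values.
    """
    sitemap_url = environ.get("SITEMAP_URL", "").strip()
    post_api = environ.get("BAIDU_POST_API", "").strip()
    if not sitemap_url or not post_api:
        raise RuntimeError("Set SITEMAP_URL and BAIDU_POST_API before running main.py")
    # The push address copied from Baidu's platform carries the token as a query parameter
    if "token=" not in post_api:
        raise RuntimeError("BAIDU_POST_API should be the full push address, including token=...")
    return sitemap_url, post_api
```

In the `__main__` block you would then replace the two assignments with `sitemap_url, baidu_post_api = load_settings()`, and set the variables on the crontab line, e.g. `... && SITEMAP_URL=... BAIDU_POST_API=... ./main.py`.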

When you are done, press Esc to leave insert mode, then type `:wq` and press Enter to save and quit.

Step 4: test the script

From the script directory, run:

```shell
python3 main.py
```

To run it as `./main.py` instead (as the scheduled task does), first make it executable with `chmod +x main.py`.

If everything works, the script prints nothing, and two files appear in the script directory:

- urls.txt: the submitted URLs (taken from sitemap.xml).

- Baidu_commit.log: a log of each submission and Baidu's reply.
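Each log line is the run time, a tab, and whatever Baidu replied. Baidu's push API normally answers with a small JSON object; the field names used below (`success`, `remain`, `error`, `message`) are based on Baidu's push API documentation, so treat them as an assumption and compare against a real reply in your own log. A hedged sketch for turning a log line into a readable status (`parse_log_line` and `summarize` are hypothetical helpers):

```python
import json
from datetime import datetime


def parse_log_line(line):
    """Split one Baidu_commit.log line into (timestamp, reply).

    Returns (None, None) if the line does not start with a timestamp;
    reply is None when the text after the tab is not valid JSON.
    """
    stamp, _, rest = line.rstrip("\n").partition("\t")
    try:
        when = datetime.strptime(stamp, "%Y/%m/%d %H:%M:%S")
    except ValueError:
        return None, None
    try:
        reply = json.loads(rest)
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        reply = None
    return when, reply


def summarize(line):
    """Describe one log line in plain words."""
    when, reply = parse_log_line(line)
    if when is None:
        return "unrecognised log line"
    if isinstance(reply, dict) and "success" in reply:
        return "{}: {} URLs accepted, {} left in today's quota".format(
            when, reply["success"], reply.get("remain", "unknown"))
    if isinstance(reply, dict) and "error" in reply:
        return "{}: error {} ({})".format(when, reply["error"], reply.get("message", ""))
    # Not a recognised JSON reply, e.g. a failure message from the except branch
    return "{}: {}".format(when, line.rstrip("\n").partition("\t")[2])
```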

Step 5: schedule the script

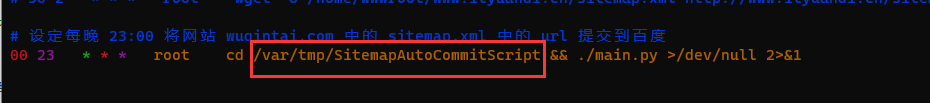

Run `vim /etc/crontab` to edit the system crontab, and add the following lines at the end of the file:

```
# Submit the URLs in the site's sitemap.xml to Baidu at 23:00 every night
0 23 * * * root cd /usr/tmp/SitemapAutoCommitScript && ./main.py >/dev/null 2>&1
```
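The five time fields in a crontab line are minute, hour, day of month, month and day of week, so `0 23 * * *` fires at 23:00 every day; the `root` field after them is the user to run as, which the system-wide `/etc/crontab` requires and per-user crontabs do not have. The small sketch below spells out that daily rule, which can help when picking a test time. `next_daily_run` is a hypothetical helper, and it uses naive datetimes, so it ignores daylight-saving changes.

```python
from datetime import datetime, timedelta


def next_daily_run(now, hour=23, minute=0):
    """Return the next time a daily entry 'minute hour * * *' fires after `now`."""
    candidate = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= now:
        # Today's slot has already passed, so the next run is tomorrow
        candidate += timedelta(days=1)
    return candidate


if __name__ == "__main__":
    print("Next run:", next_daily_run(datetime.now()))
```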

Note: if you stored the script somewhere else, change the path in this line to match.

To confirm the schedule works, temporarily set the push time a few minutes ahead, wait for it to pass, and check Baidu_commit.log for a new entry.
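Instead of reading the log by eye, a short check can tell you whether an entry exists for a given day. This sketch assumes the log format written by `main.py` (each entry starts with a `YYYY/MM/DD` date) and the directory from Step 2; `ran_on` is a hypothetical helper name.

```python
from datetime import date


def ran_on(log_text, day):
    """Return True if any log line starts with a timestamp dated `day`."""
    prefix = day.strftime("%Y/%m/%d")
    return any(line.startswith(prefix) for line in log_text.splitlines())


if __name__ == "__main__":
    # Assumed location from Step 2; adjust if you stored the script elsewhere
    path = "/usr/tmp/SitemapAutoCommitScript/Baidu_commit.log"
    try:
        with open(path) as fp:
            text = fp.read()
    except OSError:
        text = ""  # no log yet means the script has not run
    print("Ran today" if ran_on(text, date.today()) else "No entry for today yet")
```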

With that, the system will submit your site's sitemap to Baidu automatically every day!