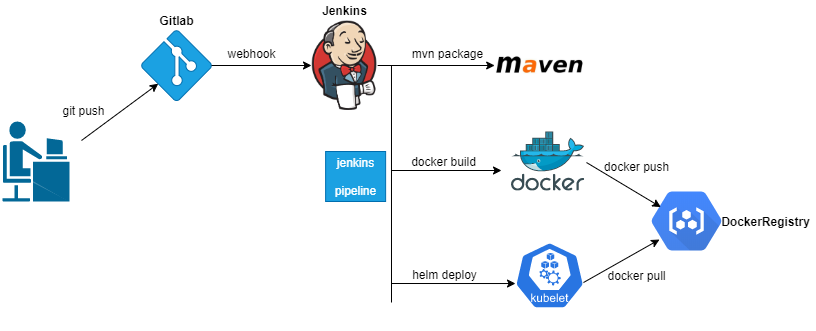

From a practical point of view, this article shows how to combine the commonly used Gitlab and Jenkins to deploy projects to K8s automatically. The examples include a server-side project based on Spring Boot and a web project based on Vue.js.

The tools and technologies involved in this article include:

- Gitlab: a common source code management system

- Jenkins, Jenkins Pipeline: a common tool for automated build and deployment. Pipeline organizes the build and deployment steps as a pipeline

- Docker, Dockerfile: the container engine. All applications ultimately run in Docker containers, and a Dockerfile is the definition file for a Docker image

- Kubernetes: Google's open-source container orchestration system

- Helm: the package manager for Kubernetes, similar to yum or apt on Linux or npm for Node. It organizes and manages applications and their dependent services in Kubernetes as packages (Charts)

Environment prerequisites:

- Gitlab is used for source code management, with one branch per environment: develop (development environment), pre-release (test environment) and master (production environment)

- A Jenkins service has been set up

- An existing Docker Registry service stores the Docker images (self-hosted with Docker Registry or Harbor, or a cloud service; this article uses Alibaba Cloud Container Registry)

- A K8s cluster has been built

Expected results:

- Applications are deployed per environment. The development, test and production environments are kept separate, deployed either in different namespaces of the same cluster or in different clusters (for example, development and test in different namespaces of a local cluster, and production in a cloud cluster)

- The configuration is as generic as possible: setting up automatic deployment for a new project only requires changing a few attributes in a few configuration files

- In the development and test environments, pushing code automatically triggers a build and deployment. In production, adding a version tag on the master branch and pushing the tag triggers the deployment

- The overall interaction process is shown in the figure below

Project configuration files

First, add a few required configuration files to the root of the project, as shown in the following figure.

These include:

- Dockerfile: used to build the Docker image (see Docker notes (XI): Dockerfile details and best practices)

- Helm configuration files. Helm is the package manager for Kubernetes. It packages the Deployment, Service, Ingress and other resources of an application for publishing and management (Helm is introduced in more detail later)

- Jenkinsfile: the Jenkins pipeline definition file, which defines the tasks performed in each stage

Dockerfile

Add a file named Dockerfile to the project root to define how the Docker image is built. Taking the Spring Boot project as an example:

```dockerfile
FROM frolvlad/alpine-java:jdk8-slim

# The profile can be changed at build time with --build-arg profile=xxx
ARG profile

ENV SPRING_PROFILES_ACTIVE=${profile}

# Port of the project
EXPOSE 8000

WORKDIR /mnt

# Switch the Alpine package mirror and set the time zone.
# /etc/localtime is copied rather than symlinked because tzdata is removed afterwards.
RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.ustc.edu.cn/g' /etc/apk/repositories \
    && apk add --no-cache tzdata \
    && cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime \
    && echo "Asia/Shanghai" > /etc/timezone \
    && apk del tzdata \
    && rm -rf /var/cache/apk/* /tmp/* /var/tmp/* $HOME/.cache

COPY ./target/your-project-name-1.0-SNAPSHOT.jar ./app.jar

ENTRYPOINT ["java", "-jar", "/mnt/app.jar"]
```

SPRING_PROFILES_ACTIVE is exposed through the build argument profile, so it can be set at build time with `--build-arg profile=xxx` to build images for different environments.

SPRING_PROFILES_ACTIVE could also be set when the container starts, with `docker run -e SPRING_PROFILES_ACTIVE=xxx`. Since Helm is used for deployment here and containers are not started directly with docker run, the profile is specified at image build time through ARG.
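The branch-to-profile mapping that the Jenkinsfile applies later can be tried out locally with a small shell sketch. It assumes the branch names used in this article (develop, pre-release, master); the image name and tag in the example call are placeholders, and the function only prints the docker build command instead of running it, so no Docker daemon is needed.

```shell
#!/usr/bin/env bash
# Sketch: map a target branch to the Spring profile passed with
# --build-arg profile=..., as the Jenkinsfile does. Prints the command only.
set -euo pipefail

# pre-release -> test, master -> prod; develop and any other branch -> dev
profile_for_branch() {
  case "$1" in
    pre-release) echo "test" ;;
    master)      echo "prod" ;;
    *)           echo "dev" ;;
  esac
}

# Print the docker build command for a branch, image name and tag
build_command() {
  local branch="$1" image="$2" tag="$3"
  echo "docker build --build-arg profile=$(profile_for_branch "$branch") -t ${image}:${tag} ."
}

# Example with placeholder values
build_command pre-release registry.cn-hangzhou.aliyuncs.com/your-namespace/demo test.88f5822
```

The example call prints `docker build --build-arg profile=test -t registry.cn-hangzhou.aliyuncs.com/your-namespace/demo:test.88f5822 .`, so the same Dockerfile yields one image per environment.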

Helm configuration files

Helm is the package manager for Kubernetes. It packages the Deployment, Service, Ingress and other resources of an application for publishing and management (a chart can be stored in a repository, like a Docker image). As shown in the figure above, the Helm configuration consists of:

```text
helm                    - chart directory name
├── templates           - directory of K8s configuration templates
│   ├── deployment.yaml - Deployment template; defines how Pods are deployed
│   ├── _helpers.tpl    - files starting with an underscore are treated by Helm as shared library files that define common sub-templates, functions, variables, etc.
│   ├── ingress.yaml    - Ingress template; defines how external traffic reaches the services provided by Pods, similar to Nginx domain name and path configuration
│   ├── NOTES.txt       - chart help text; its content is printed after helm install succeeds
│   └── service.yaml    - Service template; the service abstraction for accessing Pods, including NodePort, ClusterIP, etc.
├── values.yaml         - chart parameter file; every template file can reference the parameters defined here
├── Chart.yaml          - chart definition, such as the chart name and version number
└── charts              - directory of dependent sub-charts, which may contain several dependency charts; there are usually no dependencies, so I deleted it here
```

The chart name (similar to an installation package name) of each project is defined in Chart.yaml, for example:

```yaml
apiVersion: v2
name: your-chart-name
description: A Helm chart for Kubernetes
type: application
version: 1.0.0
appVersion: 1.16.0
```

The variables used by the template files are defined in values.yaml, for example:

```yaml
# Number of Pod replicas to deploy, i.e. how many containers run
replicaCount: 1

# Container image configuration
image:
  repository: registry.cn-hangzhou.aliyuncs.com/demo/demo
  pullPolicy: Always
  # Overrides the image tag whose default is the chart version.
  tag: "dev"

# Credentials for accessing the image registry
imagePullSecrets:
  - name: aliyun-registry-secret

# Override the generated names
nameOverride: ""
fullnameOverride: ""

# Container port and environment variables
container:
  port: 8000
  env: []

# ServiceAccount, not created by default
serviceAccount:
  # Specifies whether a service account should be created
  create: false
  # Annotations to add to the service account
  annotations: {}
  name: ""

podAnnotations: {}

podSecurityContext: {}
  # fsGroup: 2000

securityContext: {}
  # capabilities:
  #   drop:
  #   - ALL
  # readOnlyRootFilesystem: true
  # runAsNonRoot: true
  # runAsUser: 1000

# Use a NodePort Service (the default is ClusterIP)
service:
  type: NodePort
  port: 8000

# Ingress configuration for external access; the hosts section must be configured
ingress:
  enabled: true
  annotations: {}
    # kubernetes.io/ingress.class: nginx
    # kubernetes.io/tls-acme: "true"
  hosts:
    - host: demo.com
      paths: ["/demo"]
  tls: []
  #  - secretName: chart-example-tls
  #    hosts:
  #      - chart-example.local

# ... other default parameters omitted
```

The container section is added on top of the generated defaults. The container port can be set here without touching the template files (the templates are shared across projects and usually need no changes). An env setting is also added so that environment variables can be passed into the container at Helm deployment time. The Service type is changed from the default ClusterIP to NodePort. When deploying other projects of the same type, only a few items in Chart.yaml and values.yaml need to be adjusted for the project; the templates in the templates directory can be reused as-is.
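The per-project adjustments described above can also be kept in a separate override file, leaving the shared values.yaml and templates untouched. A minimal sketch, assuming the value keys shown in values.yaml above; the project name, port, domain and path are hypothetical placeholders. Helm merges maps from such a file into values.yaml but replaces lists as a whole, which is why the complete ingress.hosts entry is written out.

```shell
#!/usr/bin/env bash
# Sketch: write a per-project override file holding only the values that differ
# between projects; the shared templates/ directory is not touched.
set -euo pipefail

# Arguments: output file, image repository, container port, ingress host, ingress path
write_overrides() {
  local out="$1" repo="$2" port="$3" host="$4" path="$5"
  cat > "$out" <<EOF
image:
  repository: ${repo}
container:
  port: ${port}
service:
  port: ${port}
ingress:
  hosts:
    - host: ${host}
      paths: ["${path}"]
EOF
}

# Hypothetical project: an order service listening on 8080 under /order
values_file="$(mktemp)"
write_overrides "$values_file" \
  registry.cn-hangzhou.aliyuncs.com/your-namespace/order-service 8080 dev.your-site.com /order
cat "$values_file"
```

The generated file would then be passed at deploy time with `helm upgrade -i -f <file> <release> ./helm/`.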

During deployment, K8s pulls the image from the Docker registry, so an image registry access credential (imagePullSecrets) has to be created in K8s:

```shell
# Log in to the Docker Registry that hosts the images; this generates /root/.docker/config.json
sudo docker login --username=your-username registry.cn-hangzhou.aliyuncs.com
# Create the namespace develop (namespaces are named after the project's environment branches)
kubectl create namespace develop
# Create the secret in namespace develop
kubectl create secret generic aliyun-registry-secret --from-file=.dockerconfigjson=/root/.docker/config.json --type=kubernetes.io/dockerconfigjson --namespace=develop
```
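Because the Jenkinsfile deploys into a namespace named after the target branch, the pull secret has to exist in each of those namespaces. A sketch of that one-time setup, assuming the develop and pre-release namespaces live in the local cluster (master would be set up the same way against the production cluster's kubeconfig). By default it only prints the kubectl commands; setting DRY_RUN=0 executes them.

```shell
#!/usr/bin/env bash
# Sketch: one-time creation of the environment namespaces and the image pull
# secret in each of them. The Jenkinsfile deploys into a namespace named after
# the branch, so every such namespace needs the secret.
# DRY_RUN defaults to 1 (print only); run with DRY_RUN=0 to execute.
set -euo pipefail

SECRET_NAME="aliyun-registry-secret"            # must match imagePullSecrets in values.yaml
DOCKER_CONFIG_JSON="/root/.docker/config.json"  # written by `docker login`

# Print the command in dry-run mode, otherwise execute it
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

setup_namespace() {
  local ns="$1"
  run kubectl create namespace "$ns"
  run kubectl create secret generic "$SECRET_NAME" \
    --from-file=.dockerconfigjson="$DOCKER_CONFIG_JSON" \
    --type=kubernetes.io/dockerconfigjson \
    --namespace="$ns"
}

# Namespaces of the local (development/test) cluster
for ns in develop pre-release; do
  setup_namespace "$ns"
done
```

`kubectl create namespace` fails if the namespace already exists, so this is meant to run once per cluster rather than on every build.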

Jenkinsfile

The Jenkinsfile is the Jenkins pipeline definition file and uses Groovy syntax. For building and deploying the Spring Boot project, the Jenkinsfile is written as follows:

image_tag = "default" //Set a global variable to store the tag (version) of the Docker image

pipeline {

agent any

environment {

GIT_REPO = "${env.gitlabSourceRepoName}" //Get the name of the Git project from the Jenkins Gitlab plug-in

GIT_BRANCH = "${env.gitlabTargetBranch}" //Branch of project

GIT_TAG = sh(returnStdout: true,script: 'git describe --tags --always').trim() //commit id or tag name

DOCKER_REGISTER_CREDS = credentials('aliyun-docker-repo-creds') //docker registry voucher

KUBE_CONFIG_LOCAL = credentials('local-k8s-kube-config') //kube credentials for developing test environments

KUBE_CONFIG_PROD = "" //credentials('prod-k8s-kube-config ') / / Kube credentials for the production environment

DOCKER_REGISTRY = "registry.cn-hangzhou.aliyuncs.com" //Docker warehouse address

DOCKER_NAMESPACE = "your-namespace" //Namespace

DOCKER_IMAGE = "${DOCKER_REGISTRY}/${DOCKER_NAMESPACE}/${GIT_REPO}" //Docker image address

INGRESS_HOST_DEV = "dev.your-site.com" //Domain name of development environment

INGRESS_HOST_TEST = "test.your-site.com" //Domain name of the test environment

INGRESS_HOST_PROD = "prod.your-site.com" //Domain name of production environment

}

parameters {

string(name: 'ingress_path', defaultValue: '/your-path', description: 'Service context path')

string(name: 'replica_count', defaultValue: '1', description: 'Number of container copies')

}

stages {

stage('Code Analyze') {

agent any

steps {

echo "1. Code static check"

}

}

stage('Maven Build') {

agent {

docker {

image 'maven:3-jdk-8-alpine'

args '-v $HOME/.m2:/root/.m2'

}

}

steps {

echo "2. Code compilation and packaging"

sh 'mvn clean package -Dfile.encoding=UTF-8 -DskipTests=true'

}

}

stage('Docker Build') {

agent any

steps {

echo "3. structure Docker image"

echo "Mirror address: ${DOCKER_IMAGE}"

//Log in to Docker warehouse

sh "sudo docker login -u ${DOCKER_REGISTER_CREDS_USR} -p ${DOCKER_REGISTER_CREDS_PSW} ${DOCKER_REGISTRY}"

script {

def profile = "dev"

if (env.gitlabTargetBranch == "develop") {

image_tag = "dev." + env.GIT_TAG

} else if (env.gitlabTargetBranch == "pre-release") {

image_tag = "test." + env.GIT_TAG

profile = "test"

} else if (env.gitlabTargetBranch == "master"){

// The master branch uses Tag directly

image_tag = env.GIT_TAG

profile = "prod"

}

//Set the profile through -- build Arg to distinguish different environments for image construction

sh "docker build --build-arg profile=${profile} -t ${DOCKER_IMAGE}:${image_tag} ."

sh "sudo docker push ${DOCKER_IMAGE}:${image_tag}"

sh "docker rmi ${DOCKER_IMAGE}:${image_tag}"

}

}

}

stage('Helm Deploy') {

agent {

docker {

image 'lwolf/helm-kubectl-docker'

args '-u root:root'

}

}

steps {

echo "4. Deploy to K8s"

sh "mkdir -p /root/.kube"

script {

def kube_config = env.KUBE_CONFIG_LOCAL

def ingress_host = env.INGRESS_HOST_DEV

if (env.gitlabTargetBranch == "pre-release") {

ingress_host = env.INGRESS_HOST_TEST

} else if (env.gitlabTargetBranch == "master"){

ingress_host = env.INGRESS_HOST_PROD

kube_config = env.KUBE_CONFIG_PROD

}

sh "echo ${kube_config} | base64 -d > /root/.kube/config"

//The services are deployed to different namespace s according to different environments, and the branch name is used here

sh "helm upgrade -i --namespace=${env.gitlabTargetBranch} --set replicaCount=${params.replica_count} --set image.repository=${DOCKER_IMAGE} --set image.tag=${image_tag} --set nameOverride=${GIT_REPO} --set ingress.hosts[0].host=${ingress_host} --set ingress.hosts[0].paths={${params.ingress_path}} ${GIT_REPO} ./helm/"

}

}

}

}

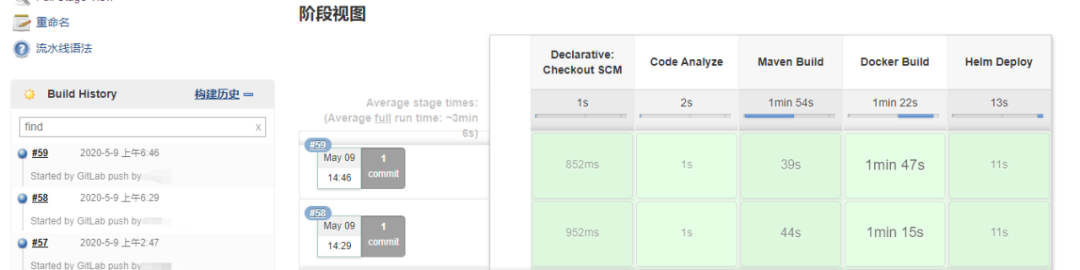

}Jenkinsfile defines the whole process of automated build and deployment:

- Code Analyze: static analysis tools such as SonarQube can be used for code inspection; this step is skipped here

- Maven Build: starts a Maven Docker container to compile and package the project, mounting the local Maven repository directory from the host so that dependencies are not downloaded on every build

- Docker Build: builds the Docker image and pushes it to the image registry. Images for different environments are distinguished by tag: the development environment uses dev.commitId, such as dev.88f5822, and the test environment uses test.commitId. For production, the webhook event can be set to Tag push events and the tag name used directly

- Helm Deploy: uses Helm to deploy a new project or upgrade an existing one. Different environments use different parameters, such as the access domain name and the kubeconfig credential of the K8s cluster
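The per-branch choices made in the Docker Build and Helm Deploy stages can be restated as a shell sketch, which makes the tag scheme easy to check. The domain names are the Jenkinsfile placeholders, the second argument stands in for the GIT_TAG value, and helm_command only prints the command the pipeline would run.

```shell
#!/usr/bin/env bash
# Sketch of the per-branch choices made in the Docker Build and Helm Deploy
# stages. Domain names are the Jenkinsfile placeholders; nothing is executed.
set -euo pipefail

# $1 = target branch, $2 = GIT_TAG (output of `git describe --tags --always`)
image_tag_for() {
  case "$1" in
    develop)     echo "dev.$2" ;;
    pre-release) echo "test.$2" ;;
    master)      echo "$2" ;;      # production uses the pushed tag as-is
    *)           echo "default" ;; # the Jenkinsfile's initial value
  esac
}

# $1 = target branch
ingress_host_for() {
  case "$1" in
    pre-release) echo "test.your-site.com" ;;
    master)      echo "prod.your-site.com" ;;
    *)           echo "dev.your-site.com" ;;
  esac
}

# Print the helm command the pipeline would run.
# Arguments: branch, GIT_TAG, repo name, image address, ingress path, replica count
helm_command() {
  local branch="$1" git_tag="$2" repo="$3" image="$4" path="$5" replicas="$6"
  echo "helm upgrade -i --namespace=${branch}" \
    "--set replicaCount=${replicas}" \
    "--set image.repository=${image}" \
    "--set image.tag=$(image_tag_for "$branch" "$git_tag")" \
    "--set nameOverride=${repo}" \
    "--set ingress.hosts[0].host=$(ingress_host_for "$branch")" \
    "--set ingress.hosts[0].paths={${path}}" \
    "${repo} ./helm/"
}

helm_command develop 88f5822 demo \
  registry.cn-hangzhou.aliyuncs.com/your-namespace/demo /your-path 1
```

Printing the command rather than running it keeps the mapping testable without a cluster; on a real agent the same values are interpolated into the `sh` step.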

Jenkins configuration

Jenkins task configuration

Create a pipeline task in Jenkins, as shown in the figure below

Configure the build trigger: set the target branch to develop and generate a token, as shown in the figure below.

Note the "GitLab webhook URL" and the token value here; they are needed in the Gitlab configuration.

Configure the pipeline: select "Pipeline script from SCM" so that the pipeline script is read from the project source code, and configure the project's Git address and the credentials for pulling the source code, as shown in the figure.

Save to complete the Jenkins configuration for the project's development environment. For the test environment, only the branch needs to be changed to pre-release.

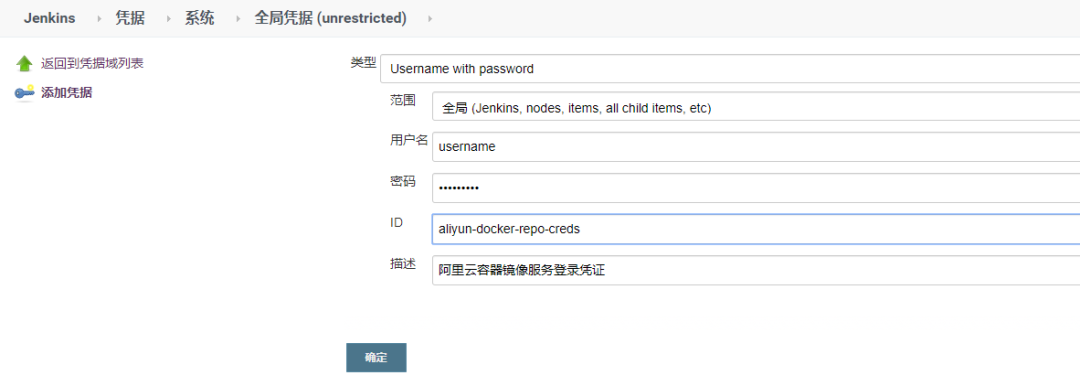

Jenkins credential configuration

The Jenkinsfile uses two access credentials, the Docker Registry credential and the kubeconfig credential of the local K8s cluster:

```groovy
DOCKER_REGISTER_CREDS = credentials('aliyun-docker-repo-creds')  // Docker Registry credentials
KUBE_CONFIG_LOCAL = credentials('local-k8s-kube-config')  // Kube credentials for the development and test environments
```

Both credentials need to be created in Jenkins.

Add the Docker Registry login credential: on the Jenkins credentials page, add a credential of type "Username with password", as shown in the figure.

Add the access credential for the K8s cluster by base64-encoding the contents of /root/.kube/config on the master node:

```shell
base64 /root/.kube/config > kube-config-base64.txt
cat kube-config-base64.txt
```

Use the encoded content to create a credential of type "Secret text" in Jenkins, as shown in the figure.

Paste the base64-encoded content into the Secret field.
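GNU base64 wraps its output at 76 characters by default, so the command above produces several lines. A sketch, assuming GNU coreutils, that encodes the kubeconfig as a single unwrapped line with `base64 -w 0` and checks that the value decodes back to the original bytes, which is what the pipeline's `base64 -d` step relies on. A throwaway file stands in for /root/.kube/config.

```shell
#!/usr/bin/env bash
# Sketch (assumes GNU coreutils): encode a kubeconfig as one unwrapped base64
# line for the Jenkins "Secret text" credential and verify the round trip.
set -euo pipefail

# -w 0 disables the default line wrapping at 76 characters
encode_kubeconfig() {
  base64 -w 0 "$1"
}

# A throwaway file stands in for /root/.kube/config
demo_config="$(mktemp)"
printf 'apiVersion: v1\nkind: Config\nclusters: []\ncontexts: []\nusers: []\ncurrent-context: ""\n' > "$demo_config"

encoded="$(encode_kubeconfig "$demo_config")"

# Decode the way the pipeline does and compare with the original bytes
decoded_file="$(mktemp)"
printf '%s' "$encoded" | base64 -d > "$decoded_file"
if cmp -s "$demo_config" "$decoded_file"; then
  echo "round trip OK"
else
  echo "round trip FAILED" >&2
fi
```

On the master node the equivalent would be `base64 -w 0 /root/.kube/config > kube-config-base64.txt`, which gives a single line to paste into the Secret field.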

Gitlab configuration

On the Settings > Integrations page of the Gitlab project, configure a webhook: fill in URL and Secret Token with the "GitLab webhook URL" and token value from the Jenkins trigger section, and select "Push events" as the trigger event, as shown in the figure.

With "Push events" selected for the development and test environments, pushing or merging code into the develop or pre-release branch triggers the corresponding Jenkins pipeline task, which builds and deploys automatically. For the production environment, select "Tag push events" so that pushing a tag on the master branch triggers the build. The figure shows the pipeline's build view.
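The production image tag comes from `git describe --tags --always`, the GIT_TAG value in the Jenkinsfile. A sketch in a throwaway repository shows what that command returns before and after a release tag is added; the identity passed with `-c` and the tag v1.0.0 are placeholders, and on a real project the tag would be pushed with `git push origin v1.0.0` to fire the Tag push events webhook.

```shell
#!/usr/bin/env bash
# Sketch: what `git describe --tags --always` (GIT_TAG in the Jenkinsfile)
# returns before and after a release tag, shown in a throwaway repository.
set -euo pipefail

repo_dir="$(mktemp -d)"
cd "$repo_dir"
git init -q
# Placeholder identity so the commit and the annotated tag work without global config
git -c user.name=demo -c user.email=demo@example.com commit -q --allow-empty -m "init"

# No tag yet: --always falls back to the abbreviated commit id
before="$(git describe --tags --always)"
echo "before tagging: ${before}"

# Release: create the version tag (a real project would then run
# `git push origin v1.0.0` to fire the "Tag push events" webhook)
git -c user.name=demo -c user.email=demo@example.com tag -a v1.0.0 -m "release v1.0.0"
after="$(git describe --tags --always)"
echo "after tagging: ${after}"
```

Once HEAD has moved past the most recent reachable tag, `git describe --tags` returns a form like `v1.0.0-3-g88f5822` instead of a bare commit id, so development and test image tags may take that shape if tags exist in those branches' history.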

Summary

This article showed how to use Gitlab + Jenkins Pipeline + Docker + Kubernetes + Helm to deploy Spring Boot projects automatically. With small modifications, the setup applies to other Spring Boot based projects (the specific changes are described in the README of the source code).

Source: https://segmentfault.com/a/1190000022637144